Notes:

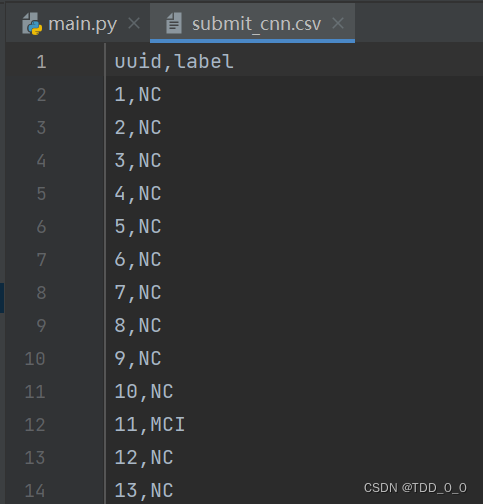

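First, here are the results ↓

A rough walkthrough of the CNN code. (Disclaimer: I'm not very familiar with Python or deep-learning code, so corrections are welcome.)

My analysis of the code:

(1) First, import the libraries and packages we need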

```python
import os
import sys
import glob
import argparse

import pandas as pd
import numpy as np
import albumentations as A
import cv2
import torch
import nibabel as nib
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.datasets as datasets
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.autograd import Variable
from torch.utils.data.dataset import Dataset
from nibabel.viewers import OrthoSlicer3D
from tqdm import tqdm
from PIL import Image
from sklearn.model_selection import train_test_split, StratifiedKFold, KFold
```

(2) Set the paths of the local data files. If you copy this code, be sure to change these paths to where the data lives on your own machine, otherwise not even the dataset will load. For how the shuffle function works, see this explanation ↓ "numpy.random.shuffle: the shuffle function" (CSDN blog of 还能坚持)
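A small sketch of how `np.random.shuffle` behaves, using made-up file names. The point worth knowing is that it shuffles the list in place and returns `None`. Also note that `torch.manual_seed(0)` in the full script does not seed NumPy, so the shuffled order (and therefore which files end up in `train_path[-10:]`) changes between runs. The `shuffled_copy` helper is a hypothetical illustration of a seeded alternative, not part of the baseline.

```python
import numpy as np

# Hypothetical file names standing in for the glob() results.
paths = [f"Train/NC/{i}.nii" for i in range(3)] + [f"Train/MCI/{i}.nii" for i in range(3)]
original = list(paths)

# np.random.shuffle reorders the list IN PLACE and returns None,
# so writing `paths = np.random.shuffle(paths)` would replace the list with None.
result = np.random.shuffle(paths)
print(result)                              # None
print(sorted(paths) == sorted(original))   # True: same items, new order


def shuffled_copy(items, seed):
    """Return a reproducibly shuffled copy; the input list is left untouched."""
    order = np.random.default_rng(seed).permutation(len(items))
    return [items[i] for i in order]


# Same seed -> same order on every run, so a split taken from it is stable.
print(shuffled_copy(original, seed=233) == shuffled_copy(original, seed=233))  # True
```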

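```python
# Change these to the dataset location on your own machine
train_path = glob.glob('E:/XUNFEIproject/脑PET图像分析和疾病预测挑战赛公开数据/Train/*/*')
test_path = glob.glob('E:/XUNFEIproject/脑PET图像分析和疾病预测挑战赛公开数据/Test/*')

# Shuffle both file lists in place
np.random.shuffle(train_path)
np.random.shuffle(test_path)
```

(3) This part clearly builds a custom dataset. Here is the expert write-up I used as a reference ↓

PyTorch Dataset and DataLoader: building a custom dataset (code template) - Zhihu (zhihu.com)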
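The core of `__getitem__` in the class that follows is plain array manipulation, so it can be tried without any PET files. This sketch runs the same steps on a synthetic volume: pick 50 slices along the last axis (with replacement, which is what `np.random.choice` does by default), take a random 120×120 crop (standing in for `A.RandomCrop(120, 120)`), and transpose HWC to CHW. The helper names and the 128×128×47 volume shape are made up for illustration; the real scans may have a different shape.

```python
import numpy as np


def sample_slices(volume, n_slices=50, crop=120, rng=None):
    """Mimic the core of XunFeiDataset.__getitem__ on a plain (H, W, D) array."""
    rng = np.random.default_rng() if rng is None else rng
    idx = rng.choice(volume.shape[-1], n_slices)          # indices may repeat
    img = volume[:, :, idx].astype(np.float32)            # (H, W, n_slices)
    top = rng.integers(0, img.shape[0] - crop + 1)        # random crop origin
    left = rng.integers(0, img.shape[1] - crop + 1)
    img = img[top:top + crop, left:left + crop, :]        # (crop, crop, n_slices)
    return img.transpose(2, 0, 1)                         # (n_slices, crop, crop)


def label_from_path(path):
    """1 for NC, 0 for MCI, same rule as int('NC' in path) in the dataset."""
    return int('NC' in path)


volume = np.random.rand(128, 128, 47)                     # synthetic stand-in volume
x = sample_slices(volume, rng=np.random.default_rng(0))
print(x.shape, x.dtype)                                   # (50, 120, 120) float32
print(label_from_path('Train/NC/1.nii'), label_from_path('Train/MCI/7.nii'))  # 1 0
```

Because the label rule is a substring test on the whole path, it would mislabel MCI files if some parent directory name happened to contain "NC"; keeping the data under a neutral path avoids that.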

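```python
# Cache of already-loaded volumes, keyed by file path
# (defined at module level in the full script; needed for this snippet to run)
DATA_CACHE = {}


class XunFeiDataset(Dataset):
    def __init__(self, img_path, transform=None):
        self.img_path = img_path      # list of .nii file paths
        self.transform = transform    # albumentations pipeline, or None

    def __getitem__(self, index):
        path = self.img_path[index]
        if path in DATA_CACHE:
            img = DATA_CACHE[path]
        else:
            img = nib.load(path)
            img = img.dataobj[:, :, :, 0]    # 4-D NIfTI -> (H, W, D) volume
            DATA_CACHE[path] = img

        # Randomly pick 50 slices along the last axis (with replacement)
        # and use them as 50 input channels
        idx = np.random.choice(range(img.shape[-1]), 50)
        img = img[:, :, idx]
        img = img.astype(np.float32)

        if self.transform is not None:
            img = self.transform(image=img)['image']

        # (H, W, C) -> (C, H, W), the layout PyTorch convolutions expect
        img = img.transpose([2, 0, 1])
        # Label: 1 if the path contains 'NC', else 0 (MCI).
        # On Windows with NumPy < 2.0 this becomes an int32 tensor (see Problem 3)
        return img, torch.from_numpy(np.array(int('NC' in path)))

    def __len__(self):
        return len(self.img_path)
```

(4) Next, choose the neural network. CNN_baseline uses resnet18; you could of course use resnet50 instead, or even define every layer yourself to build a custom network. Some recommended reading ↓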

PyTorch in practice (modifying your own ResNet architecture and training a binary classifier) - Zhihu (zhihu.com)

At the time, 水哥 said resnet18 was good enough, so I stuck with resnet18.
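The network below replaces ResNet-18's `conv1` with a 50-input-channel convolution (one channel per sampled slice) and `fc` with `Linear(512, 2)`. A quick size calculation shows why the 512-feature head fits whatever crop is used: with the standard `out = floor((in + 2p - k) / s) + 1` rule, both a 120×120 and a 128×128 input end in a 4×4 map with 512 channels, which `AdaptiveAvgPool2d(1)` reduces to 512 numbers. This pure-Python sketch only tracks the layers that downsample (`conv1`, `maxpool`, and the first stride-2 conv of `layer2`/`layer3`/`layer4`).

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a conv/pool layer: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet18_feature_size(size):
    """Spatial size of ResNet-18's final feature map for a square input."""
    size = conv_out(size, 7, 2, 3)        # conv1: 7x7, stride 2, padding 3
    size = conv_out(size, 3, 2, 1)        # maxpool: 3x3, stride 2, padding 1
    for _ in range(3):                    # layer2, layer3, layer4 (layer1 keeps size)
        size = conv_out(size, 3, 2, 1)    # first 3x3 conv of the stage, stride 2
    return size


for crop in (120, 128):
    s = resnet18_feature_size(crop)
    print(f"{crop}x{crop} input -> 512 x {s} x {s} feature map -> "
          f"AdaptiveAvgPool2d(1) -> 512 features -> Linear(512, 2)")
```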

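```python
class XunFeiNet(nn.Module):
    def __init__(self):
        super().__init__()
        # ImageNet-pretrained ResNet-18 (torchvision >= 0.13 API;
        # on older versions use models.resnet18(pretrained=True))
        model = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)
        # 50 input channels (the 50 sampled slices) instead of 3 (RGB),
        # so the first convolution is replaced and trained from scratch
        model.conv1 = torch.nn.Conv2d(50, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
        # Global average pooling: any spatial size -> 1x1 per channel
        model.avgpool = nn.AdaptiveAvgPool2d(1)
        # Two output classes: MCI (0) and NC (1)
        model.fc = nn.Linear(512, 2)
        self.resnet = model

    def forward(self, img):
        out = self.resnet(img)
        return out
```

(5) Define the training function `train`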

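It first puts the model into training mode and resets the running training loss, then loops over the training set batch by batch.

For each batch, `input` and `target` are moved to the GPU, the loss is computed with the loss function (`criterion`), and the optimizer (`optimizer`) updates the weights.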
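To make the `criterion` step and the accuracy in `validate` less of a black box, here is a NumPy sketch of what `nn.CrossEntropyLoss` computes with its default mean reduction (log-softmax, then the negative log-probability of the true class), plus the argmax accuracy. The function names are my own; note that the target is used as an array index, which is why it has to be an integer class index.

```python
import numpy as np


def cross_entropy(logits, target):
    """Mean cross-entropy of (N, C) raw scores against (N,) integer labels.

    log_softmax is computed stably by subtracting each row's maximum.
    """
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(target)), target].mean()


def accuracy(logits, target):
    """Fraction of rows whose argmax equals the label."""
    return (logits.argmax(1) == target).mean()


logits = np.array([[2.0, 0.5],    # predicts class 0 (MCI)
                   [0.1, 1.5],    # predicts class 1 (NC)
                   [0.0, 0.0]])   # tie -> argmax picks class 0
target = np.array([0, 1, 1])
print(cross_entropy(logits, target))
print(accuracy(logits, target))   # 2 of 3 correct
```

`validate` sums the correct counts batch by batch and divides by `len(val_loader.dataset)`, which gives the same number as this per-sample mean over the whole set.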

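```python
def train(train_loader, model, criterion, optimizer):
    model.train()                        # training mode (dropout, BatchNorm updates)
    train_loss = 0.0

    for i, (input, target) in enumerate(train_loader):
        input = input.cuda()             # (non_blocking=True)
        target = target.cuda()           # (non_blocking=True)

        output = model(input)
        # CrossEntropyLoss needs int64 (long) class indices
        loss = criterion(output, target.long())

        optimizer.zero_grad()            # clear gradients from the previous step
        loss.backward()                  # backpropagate
        optimizer.step()                 # update the weights

        # log_interval = 20: print every 20 batches, i.e. every
        # 160 samples with batch_size=8 (every 40 with batch_size=2)
        if i % 20 == 0:
            print(loss.item())

        train_loss += loss.item()

    # Average loss per batch over the epoch
    return train_loss / len(train_loader)
```

Similarly, define the validation function ↓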

```python
def validate(val_loader, model, criterion):
    model.eval()                         # evaluation mode
    val_acc = 0.0

    with torch.no_grad():                # no gradients needed for evaluation
        for i, (input, target) in enumerate(val_loader):
            input = input.cuda()
            target = target.cuda()

            # compute output
            output = model(input)
            loss = criterion(output, target.long())

            # Count the correctly classified samples in this batch
            val_acc += (output.argmax(1) == target).sum().item()

    # Accuracy over the whole dataset
    return val_acc / len(val_loader.dataset)
```

(6)

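Setting up the training, validation and test sets (data augmentation, etc.)

I used some material from the later tasks (cross-validation, more data augmentation, and more training epochs, 69 in total), so my code differs slightly from the original CNN baseline.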
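The important detail in K-fold cross-validation is that each fold must actually use its own `train_idx`/`val_idx`; slicing a fixed `train_path[:-10]` / `train_path[-10:]` inside the loop gives every fold the same split. The sketch below is a hand-rolled stand-in for `KFold(shuffle=True)` (same fold sizes, but not sklearn's exact order) over 24 hypothetical file names, showing that every file lands in exactly one validation fold. Since the labels are known from the paths, `StratifiedKFold` (already imported) with `skf.split(train_path, labels)` is an option for keeping the NC/MCI ratio similar across folds.

```python
import numpy as np


def kfold_indices(n, n_splits, seed):
    """Yield (train_idx, val_idx) pairs, similar in spirit to
    sklearn's KFold(n_splits, shuffle=True, random_state=seed).

    The first n % n_splits folds get one extra sample, as KFold does.
    """
    order = np.random.default_rng(seed).permutation(n)
    sizes = np.full(n_splits, n // n_splits)
    sizes[: n % n_splits] += 1
    start = 0
    for size in sizes:
        val_idx = order[start:start + size]
        train_idx = np.concatenate([order[:start], order[start + size:]])
        yield train_idx, val_idx
        start += size


paths = [f"Train/{c}/{i}.nii" for c in ("NC", "MCI") for i in range(12)]  # hypothetical
for fold_idx, (train_idx, val_idx) in enumerate(kfold_indices(len(paths), 10, seed=233)):
    # Select this fold's files by index
    train_files = [paths[i] for i in train_idx]
    val_files = [paths[i] for i in val_idx]
    print(fold_idx, len(train_files), len(val_files))
```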

```python
from sklearn.model_selection import train_test_split, StratifiedKFold, KFold

# 10-fold cross-validation over the training files
skf = KFold(n_splits=10, random_state=233, shuffle=True)

for fold_idx, (train_idx, val_idx) in enumerate(skf.split(train_path, train_path)):
    # Select this fold's training / validation files by index
    fold_train = [train_path[i] for i in train_idx]
    fold_val = [train_path[i] for i in val_idx]

    train_loader = torch.utils.data.DataLoader(
        XunFeiDataset(fold_train,
                      A.Compose([
                          A.RandomCrop(120, 120),
                          A.RandomRotate90(p=0.5),
                          A.HorizontalFlip(p=0.5),
                          A.RandomContrast(p=0.5),  # deprecated in newer albumentations
                          A.RandomBrightnessContrast(p=0.5),
                          A.ShiftScaleRotate(shift_limit=0.0625, scale_limit=0.1, rotate_limit=45, interpolation=1,
                                             border_mode=4, value=None, mask_value=None, always_apply=False, p=0.4),
                          A.GridDistortion(num_steps=10, distort_limit=0.3, border_mode=4, always_apply=False, p=0.4),
                      ])
                      ), batch_size=8, shuffle=True  # , num_workers=0, pin_memory=False
    )

    val_loader = torch.utils.data.DataLoader(
        XunFeiDataset(fold_val,
                      A.Compose([
                          A.RandomCrop(120, 120),
                      ])
                      ), batch_size=8, shuffle=False  # , num_workers=0, pin_memory=False
    )

    model = XunFeiNet()
    model = model.to('cuda')
    criterion = nn.CrossEntropyLoss().cuda()
    optimizer = torch.optim.AdamW(model.parameters(), 0.001)

    for _ in range(69):   # 69 epochs
        train_loss = train(train_loader, model, criterion, optimizer)
        val_acc = validate(val_loader, model, criterion)
        train_acc = validate(train_loader, model, criterion)
        print(train_loss, train_acc, val_acc)

    # One checkpoint per fold
    torch.save(model.state_dict(), 'E:/XUNFEIproject/resnet18_fold{0}.pt'.format(fold_idx))

# Random crop / flip / contrast at test time: every predict() pass sees a
# different augmented view (test-time augmentation). shuffle=False keeps the
# prediction rows in the same order as test_path.
test_loader = torch.utils.data.DataLoader(
    XunFeiDataset(test_path,
                  A.Compose([
                      A.RandomCrop(120, 120),
                      A.HorizontalFlip(p=0.5),
                      A.RandomContrast(p=0.5),
                  ])
                  ), batch_size=8, shuffle=False  # , num_workers=0, pin_memory=False
)
```

(7) Finally, combine the predictions and write out the results
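The final step adds up raw logits from 10 fold models × 10 test-time-augmentation passes and takes the argmax. Summing and averaging give the same argmax, so there is no need to divide; averaging softmax probabilities instead would be a different (also common) voting choice. A toy sketch with three made-up passes over two test files, where the passes disagree on the first file and the sum decides:

```python
import numpy as np


def ensemble(logit_batches):
    """Sum (N, 2) logit arrays from several passes and return class indices."""
    total = None
    for logits in logit_batches:
        total = logits.copy() if total is None else total + logits
    return total.argmax(1)


LABELS = {1: 'NC', 0: 'MCI'}   # same mapping as the submission code

# Three hypothetical passes over 2 test files
passes = [np.array([[0.9, 0.1], [0.2, 0.8]]),
          np.array([[0.3, 0.6], [0.1, 0.7]]),
          np.array([[0.8, 0.2], [0.4, 0.5]])]
classes = ensemble(passes)
print([LABELS[int(c)] for c in classes])   # ['MCI', 'NC']
```

This only works because `test_loader` uses `shuffle=False`: row *i* of every pass refers to the same file, `test_path[i]`.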

```python
def predict(test_loader, model, criterion):
    model.eval()
    test_pred = []

    with torch.no_grad():
        for i, (input, target) in enumerate(test_loader):
            input = input.cuda()
            output = model(input)
            # Keep this batch's raw logits
            test_pred.append(output.data.cpu().numpy())

    return np.vstack(test_pred)   # (number of test files, 2)


pred = None
model_dir = 'E:/XUNFEIproject'   # where the fold checkpoints were saved

for fold_idx in range(10):
    model = XunFeiNet()
    model = model.to('cuda')
    model.load_state_dict(torch.load(os.path.join(model_dir, 'resnet18_fold{0}.pt'.format(fold_idx))))
    criterion = nn.CrossEntropyLoss().cuda()

    # 10 test-time-augmentation passes per model; logits are summed
    for _ in range(10):
        if pred is None:
            pred = predict(test_loader, model, criterion)
        else:
            pred += predict(test_loader, model, criterion)

submit = pd.DataFrame(
    {
        'uuid': [int(x.split('/')[-1][:-4].split("\\")[-1]) for x in test_path],
        'label': pred.argmax(1)
    })

# Class index -> label name (1 = NC, 0 = MCI, as in the dataset)
submit['label'] = submit['label'].map({1: 'NC', 0: 'MCI'})
submit = submit.sort_values(by='uuid')
submit.to_csv('submit_cnn_Kflod5.csv', index=None)
```

OVER!

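Below I'll go over the problems I ran into when setting things up locally, and how I solved them.

Problems encountered during local setup and their solutions:

(Assuming the environment is already fully configured)

Problem 1:

cuDNN could not be found. After reading answers from many bloggers, I learned this can be caused by mismatched versions of PyTorch, torchvision, CUDA, cuDNN and Python: their versions must be compatible with one another! (That said, the warning is printed in yellow, and ignoring it didn't cause any problems either 🐶)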

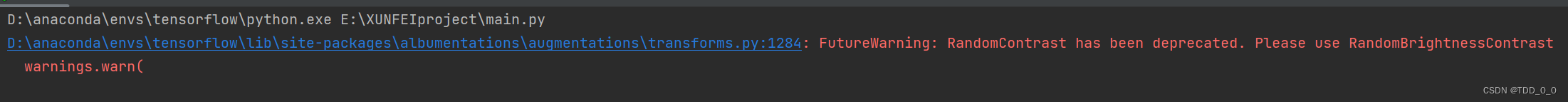
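When chasing a version mismatch like this, the first step is seeing what is actually installed. A small sketch using the standard library's `importlib.metadata`; the package list is my own choice, and the CUDA/cuDNN fields are only filled in when PyTorch is present. Compare the output with the version combinations listed on the official PyTorch installation pages.

```python
import importlib
import importlib.metadata as md

PACKAGES = ["torch", "torchvision", "numpy", "albumentations"]


def report_versions():
    """Collect installed versions; a missing package is reported as None."""
    info = {}
    for name in PACKAGES:
        try:
            info[name] = md.version(name)
        except md.PackageNotFoundError:
            info[name] = None
    if info["torch"] is not None:
        torch = importlib.import_module("torch")
        info["cuda (torch build)"] = torch.version.cuda        # None for CPU-only builds
        info["cudnn"] = (torch.backends.cudnn.version()
                         if torch.backends.cudnn.is_available() else None)
        info["gpu available"] = torch.cuda.is_available()
    return info


for key, value in report_versions().items():
    print(f"{key:20} {value}")
```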

Problem 2:

This warning means the usage may change in a future version, so it can be ignored; it doesn't affect running the code.

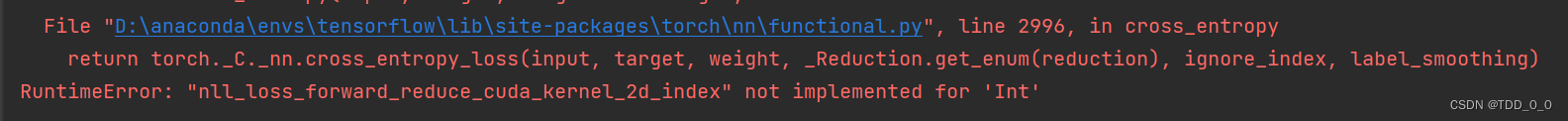

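Problem 3:

After digging through a lot of material, this article was the one to follow ↓ "nll_loss_forward_reduce_cuda_kernel_2d_index" not implemented for 'Int': the fix (CSDN blog of weixin_45218778)

That is, everywhere in the code, change ↓

```python
loss = criterion(output, target)
```

to ↓

```python
loss = criterion(output, target.long())
```

This converts the labels to int64 (`long`), the integer type `CrossEntropyLoss` expects for class indices, whereas here they arrived as 32-bit `Int`. With that, the problem is solved.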

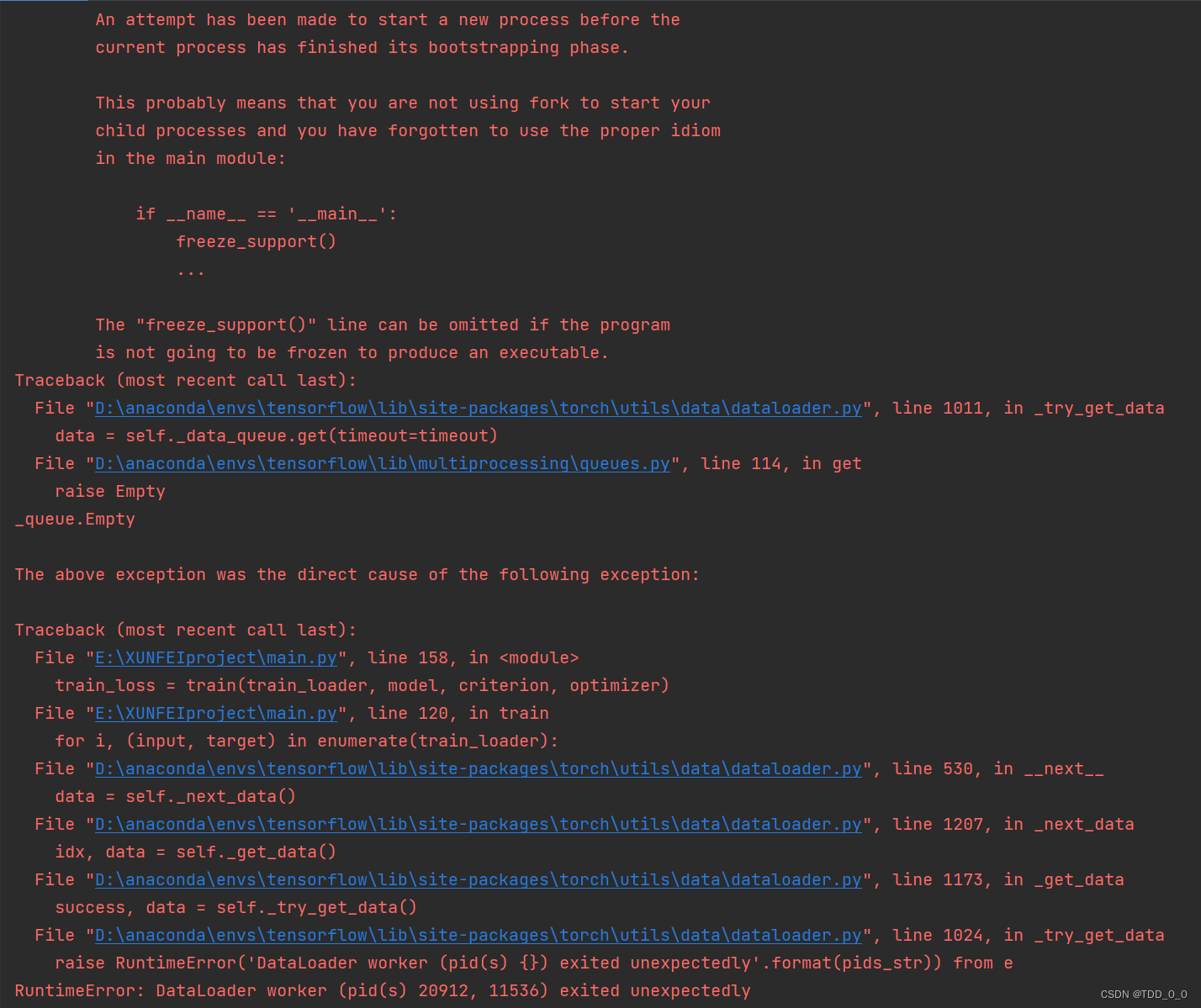
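The `Int` tensor comes from the dataset's `torch.from_numpy(np.array(int(...)))`: `np.array` of a Python int uses NumPy's default integer type, which was 32-bit on Windows before NumPy 2.0, and `torch.from_numpy` keeps that dtype. A sketch of fixing the dtype at the source instead (the `make_label` name is hypothetical); in the dataset one would return `torch.from_numpy(make_label(path))`, and `.long()` then becomes a harmless safeguard.

```python
import numpy as np


def make_label(path):
    """Label as an explicit int64 array: 1 = NC, 0 = MCI."""
    return np.array(int('NC' in path), dtype=np.int64)


label = make_label('Train/NC/3.nii')
print(label, label.dtype)   # 1 int64
```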

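Problem 4:

This one almost made me give up: I spent a whole evening without finding a fix. Eventually, after going through many more articles, I found a clue. For details, see ↓

That is, change `num_workers=2` in the DataLoader to `num_workers=0`, so that data loading runs only in the main process. (A likely reason this helps on Windows: DataLoader worker processes are started with the spawn method, which re-imports the main script, and a script without an `if __name__ == '__main__':` guard commonly fails there once `num_workers > 0`.)

In my CNN, that means changing the following code ↓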

```python
train_loader = torch.utils.data.DataLoader(
    XunFeiDataset(train_path[:-10],
                  A.Compose([
                      A.RandomRotate90(),
                      A.RandomCrop(120, 120),
                      A.HorizontalFlip(p=0.5),
                      A.RandomContrast(p=0.5),
                      A.RandomBrightnessContrast(p=0.5),
                  ])
                  ), batch_size=2, shuffle=True, num_workers=2, pin_memory=False
)

val_loader = torch.utils.data.DataLoader(
    XunFeiDataset(train_path[-10:],
                  A.Compose([
                      A.RandomCrop(120, 120),
                  ])
                  ), batch_size=2, shuffle=False, num_workers=2, pin_memory=False
)

test_loader = torch.utils.data.DataLoader(
    XunFeiDataset(test_path,
                  A.Compose([
                      A.RandomCrop(128, 128),
                      A.HorizontalFlip(p=0.5),
                      A.RandomContrast(p=0.5),
                  ])
                  ), batch_size=2, shuffle=False, num_workers=2, pin_memory=False
)
```

to ↓

```python
train_loader = torch.utils.data.DataLoader(
    XunFeiDataset(train_path[:-10],
                  A.Compose([
                      A.RandomRotate90(),
                      A.RandomCrop(120, 120),
                      A.HorizontalFlip(p=0.5),
                      A.RandomContrast(p=0.5),
                      A.RandomBrightnessContrast(p=0.5),
                  ])
                  ), batch_size=2, shuffle=True, num_workers=0, pin_memory=False  # main process only
)

val_loader = torch.utils.data.DataLoader(
    XunFeiDataset(train_path[-10:],
                  A.Compose([
                      A.RandomCrop(120, 120),
                  ])
                  ), batch_size=2, shuffle=False, num_workers=0, pin_memory=False
)

test_loader = torch.utils.data.DataLoader(
    XunFeiDataset(test_path,
                  A.Compose([
                      A.RandomCrop(128, 128),
                      A.HorizontalFlip(p=0.5),
                      A.RandomContrast(p=0.5),
                  ])
                  ), batch_size=2, shuffle=False, num_workers=0, pin_memory=False
)
```

Problem 5:

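An old problem; see my previous post for details: AI Training Camp | Task 1 notes | including a walkthrough of running the baseline locally (CSDN blog of TDD_0_0)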
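Why the uuid line breaks on Windows: `glob.glob` keeps the forward slashes from the pattern but joins the matched file name with a backslash, so splitting on `/` alone leaves `Test\12`, which `int()` rejects. This sketch uses the standard library's `ntpath` (what `os.path` is on Windows) and `posixpath` (what it is on Linux) so it behaves the same on any OS; the directory names are placeholders. `os.path.basename` is a path-aware alternative to the manual splitting.

```python
import ntpath
import posixpath

# Typical Windows glob() result for a pattern written with '/'
p = 'E:/XUNFEIproject/data/Test\\12.nii'

# Splitting only on '/' keeps the 'Test\' prefix, so int() would fail
print(p.split('/')[-1][:-4])                         # Test\12
# The fix used in this post also splits on '\'
print(int(p.split('/')[-1][:-4].split("\\")[-1]))    # 12
# Path-aware alternative: Windows basename understands both separators
print(int(ntpath.splitext(ntpath.basename(p))[0]))   # 12
# On Linux, paths only use '/', and basename works the same way
print(int(posixpath.splitext(posixpath.basename('/data/Test/12.nii'))[0]))  # 12
```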

So change the uuid line to ↓

```python
'uuid': [int(x.split('/')[-1][:-4].split("\\")[-1]) for x in test_path],
```

---

With that, all the problems are solved!!!

Run it! Out comes the .csv file!!!

Finally, here is my current CNN training code, which still has room for improvement:

```python
import os
import sys
import glob
import argparse

import pandas as pd
import numpy as np
import albumentations as A
import cv2
import torch
import nibabel as nib
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.datasets as datasets
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.autograd import Variable
from torch.utils.data.dataset import Dataset
from nibabel.viewers import OrthoSlicer3D
from tqdm import tqdm
from PIL import Image
from sklearn.model_selection import train_test_split, StratifiedKFold, KFold

# Change these to the dataset location on your own machine
train_path = glob.glob('E:/XUNFEIproject/脑PET图像分析和疾病预测挑战赛公开数据/Train/*/*')
test_path = glob.glob('E:/XUNFEIproject/脑PET图像分析和疾病预测挑战赛公开数据/Test/*')

np.random.shuffle(train_path)
np.random.shuffle(test_path)

DATA_CACHE = {}   # file path -> loaded volume

torch.manual_seed(0)
torch.backends.cudnn.deterministic = False
torch.backends.cudnn.benchmark = True


class XunFeiDataset(Dataset):
    def __init__(self, img_path, transform=None):
        self.img_path = img_path
        self.transform = transform

    def __getitem__(self, index):
        path = self.img_path[index]
        if path in DATA_CACHE:
            img = DATA_CACHE[path]
        else:
            img = nib.load(path)
            img = img.dataobj[:, :, :, 0]
            DATA_CACHE[path] = img

        # Randomly pick 50 slices as input channels
        idx = np.random.choice(range(img.shape[-1]), 50)
        img = img[:, :, idx]
        img = img.astype(np.float32)

        if self.transform is not None:
            img = self.transform(image=img)['image']

        img = img.transpose([2, 0, 1])   # (H, W, C) -> (C, H, W)
        return img, torch.from_numpy(np.array(int('NC' in path)))   # 1 = NC, 0 = MCI

    def __len__(self):
        return len(self.img_path)


class XunFeiNet(nn.Module):
    def __init__(self):
        super().__init__()
        # torchvision >= 0.13; older versions: models.resnet18(pretrained=True)
        model = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)
        model.conv1 = torch.nn.Conv2d(50, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
        model.avgpool = nn.AdaptiveAvgPool2d(1)
        model.fc = nn.Linear(512, 2)
        self.resnet = model

    def forward(self, img):
        out = self.resnet(img)
        return out


def train(train_loader, model, criterion, optimizer):
    model.train()
    train_loss = 0.0

    for i, (input, target) in enumerate(train_loader):
        input = input.cuda()    # (non_blocking=True)
        target = target.cuda()  # (non_blocking=True)

        output = model(input)
        loss = criterion(output, target.long())

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if i % 20 == 0:  # log every 20 batches = every 160 samples with batch_size=8
            print(loss.item())

        train_loss += loss.item()

    return train_loss / len(train_loader)


def validate(val_loader, model, criterion):
    model.eval()
    val_acc = 0.0

    with torch.no_grad():
        for i, (input, target) in enumerate(val_loader):
            input = input.cuda()
            target = target.cuda()

            # compute output
            output = model(input)
            loss = criterion(output, target.long())

            val_acc += (output.argmax(1) == target).sum().item()

    return val_acc / len(val_loader.dataset)


skf = KFold(n_splits=10, random_state=233, shuffle=True)

for fold_idx, (train_idx, val_idx) in enumerate(skf.split(train_path, train_path)):
    # Select this fold's training / validation files by index
    fold_train = [train_path[i] for i in train_idx]
    fold_val = [train_path[i] for i in val_idx]

    train_loader = torch.utils.data.DataLoader(
        XunFeiDataset(fold_train,
                      A.Compose([
                          A.RandomCrop(120, 120),
                          A.RandomRotate90(p=0.5),
                          A.HorizontalFlip(p=0.5),
                          A.RandomContrast(p=0.5),
                          A.RandomBrightnessContrast(p=0.5),
                          A.ShiftScaleRotate(shift_limit=0.0625, scale_limit=0.1, rotate_limit=45, interpolation=1,
                                             border_mode=4, value=None, mask_value=None, always_apply=False, p=0.4),
                          A.GridDistortion(num_steps=10, distort_limit=0.3, border_mode=4, always_apply=False, p=0.4),
                      ])
                      ), batch_size=8, shuffle=True  # , num_workers=0, pin_memory=False
    )

    val_loader = torch.utils.data.DataLoader(
        XunFeiDataset(fold_val,
                      A.Compose([
                          A.RandomCrop(120, 120),
                      ])
                      ), batch_size=8, shuffle=False  # , num_workers=0, pin_memory=False
    )

    model = XunFeiNet()
    model = model.to('cuda')
    criterion = nn.CrossEntropyLoss().cuda()
    optimizer = torch.optim.AdamW(model.parameters(), 0.001)

    for _ in range(69):
        train_loss = train(train_loader, model, criterion, optimizer)
        val_acc = validate(val_loader, model, criterion)
        train_acc = validate(train_loader, model, criterion)
        print(train_loss, train_acc, val_acc)

    torch.save(model.state_dict(), 'E:/XUNFEIproject/resnet18_fold{0}.pt'.format(fold_idx))

# Test-time augmentation; shuffle=False keeps rows aligned with test_path
test_loader = torch.utils.data.DataLoader(
    XunFeiDataset(test_path,
                  A.Compose([
                      A.RandomCrop(120, 120),
                      A.HorizontalFlip(p=0.5),
                      A.RandomContrast(p=0.5),
                  ])
                  ), batch_size=8, shuffle=False  # , num_workers=0, pin_memory=False
)


def predict(test_loader, model, criterion):
    model.eval()
    test_pred = []

    with torch.no_grad():
        for i, (input, target) in enumerate(test_loader):
            input = input.cuda()
            output = model(input)
            test_pred.append(output.data.cpu().numpy())

    return np.vstack(test_pred)


pred = None
model_dir = 'E:/XUNFEIproject'

for fold_idx in range(10):
    model = XunFeiNet()
    model = model.to('cuda')
    model.load_state_dict(torch.load(os.path.join(model_dir, 'resnet18_fold{0}.pt'.format(fold_idx))))
    criterion = nn.CrossEntropyLoss().cuda()

    for _ in range(10):   # 10 TTA passes per fold model, logits summed
        if pred is None:
            pred = predict(test_loader, model, criterion)
        else:
            pred += predict(test_loader, model, criterion)

submit = pd.DataFrame(
    {
        'uuid': [int(x.split('/')[-1][:-4].split("\\")[-1]) for x in test_path],
        'label': pred.argmax(1)
    })

submit['label'] = submit['label'].map({1: 'NC', 0: 'MCI'})
submit = submit.sort_values(by='uuid')
submit.to_csv('submit_cnn_Kflod5.csv', index=None)
```

Thanks for reading ❀
