🍨 本文为[🔗365天深度学习训练营]中的学习记录博客

一、 前期准备

1. 设置GPU

如果设备上支持GPU就使用GPU,否则使用CPU

import torch

import torchvision

import torch.nn as nn

import os,PIL,pathlib,warnings

import torchvision.transforms as transforms

from torchvision import transforms, datasets

warnings.filterwarnings("ignore")

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

device 2.导入数据

data_dir = "/content/drive/MyDrive/Colab Notebooks/data"

data_dir = pathlib.Path(data_dir)

data_path = list(data_dir.glob('*'))

data_path

classeName = [str(path).split('/')[-1] for path in data_path]

classeName = [name for name in classeName if name != ".DS_Store"]

classeName

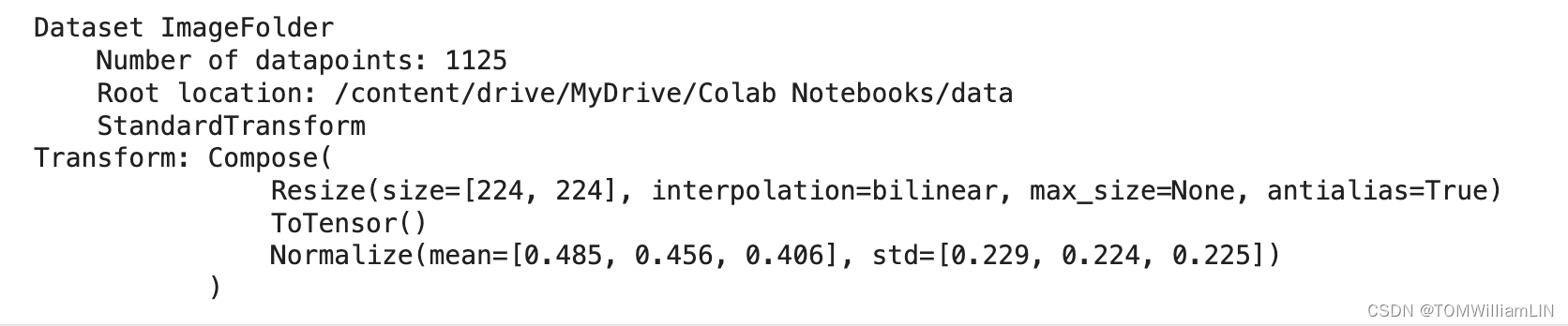

train_transforms = transforms.Compose([

transforms.Resize([224,224]),

transforms.ToTensor(),

transforms.Normalize(

mean = [0.485,0.456,0.406],

std = [0.229,0.224,0.225])

])

test_transforms = transforms.Compose([

transforms.Resize([224,224]),

transforms.ToTensor(),

transforms.Normalize(

mean = [0.485,0.456,0.406],

std = [0.229,0.224,0.225])

])

total_data = datasets.ImageFolder("/content/drive/MyDrive/Colab Notebooks/data",transform=train_transforms)

total_data

3.划分数据集

train_size = int(len(total_data)*0.8)

test_size = len(total_data) - train_size

train_dataset,test_dataset = torch.utils.data.random_split(total_data,[train_size,test_size])

train_dataset,test_dataset

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,batch_size=batch_size,shuffle=True,num_workers=1)

test_dl = torch.utils.data.DataLoader(test_dataset,batch_size=batch_size,shuffle=True,num_workers=1)

for X,y in test_dl:

print("Shape of X [N,C,H,W]: ",X.shape)

print("Shape of y: ",y.shape,y.dtype)

break

二、搭建包含C3模块的模型

1. 搭建模型

import torch.nn.functional as F

def autopad(k,p=None):

if p is None:

p = k // 2 if isinstance(k,int) else [x//2 for x in k]

return p

class Conv(nn.Module):

def __init__(self,c1,c2,k=1,s=1,p=None,g=1,act=True):

super().__init__()

self.conv = nn.Conv2d(c1,c2,k,s,autopad(k,p),groups=g,bias=False)

self.bn = nn.BatchNorm2d(c2)

self.act = nn.SiLU() if act is True else (act if isinstance(act,nn.Module) else nn.Identity())

def forward(self,x):

return self.act(self.bn(self.conv(x)))

class Bottleneck(nn.Module):

def __init__(self,c1,c2,shortcut=True,g=1,e=0.5):

super().__init__()

c_ = int(c2*e)

self.cv1 = Conv(c1,c_,1,1)

self.cv2 = Conv(c_,c2,3,1)

self.add = shortcut and c1 == c2

def forward(self,x):

out = self.cv2(self.cv1(x))

return x + out if self.add else out

class C3(nn.Module):

def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):

super().__init__()

c_ = int(c2*e)

self.cv1 = Conv(c1,c_,1,1)

self.cv2 = Conv(c1,c_,1,1)

self.cv3 = Conv(2*c_,c2,1)

self.m = nn.Sequential(*(Bottleneck(c_,c_,shortcut,g,e=1.0)for _ in range(n)))

def forward(self,x):

return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)),dim=1))

class model_K(nn.Module):

def __init__(self):

super(model_K,self).__init__()

self.Conv = Conv(3,32,3,2)

self.C3_1 = C3(32,64,3)

self.classifier = nn.Sequential(

nn.Linear(in_features=802816,out_features=100),

nn.ReLU(),

nn.Linear(in_features=100,out_features=4)

)

def forward(self,x):

x = self.Conv(x)

x = self.C3_1(x)

x = torch.flatten(x,start_dim=1)

x = self.classifier(x)

return x

device = "cuda" if torch.cuda.is_available() else "cpu"

print(f'Using {device} device')

model = model_K().to(device)

modelUsing cuda device

model_K(

(Conv): Conv(

(conv): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

(C3_1): C3(

(cv1): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

(cv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

(cv3): Conv(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

(m): Sequential(

(0): Bottleneck(

(cv1): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

(cv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

)

(1): Bottleneck(

(cv1): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

(cv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

)

(2): Bottleneck(

(cv1): Conv(

(conv): Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

(cv2): Conv(

(conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(act): SiLU()

)

)

)

)

(classifier): Sequential(

(0): Linear(in_features=802816, out_features=100, bias=True)

(1): ReLU()

(2): Linear(in_features=100, out_features=4, bias=True)

)

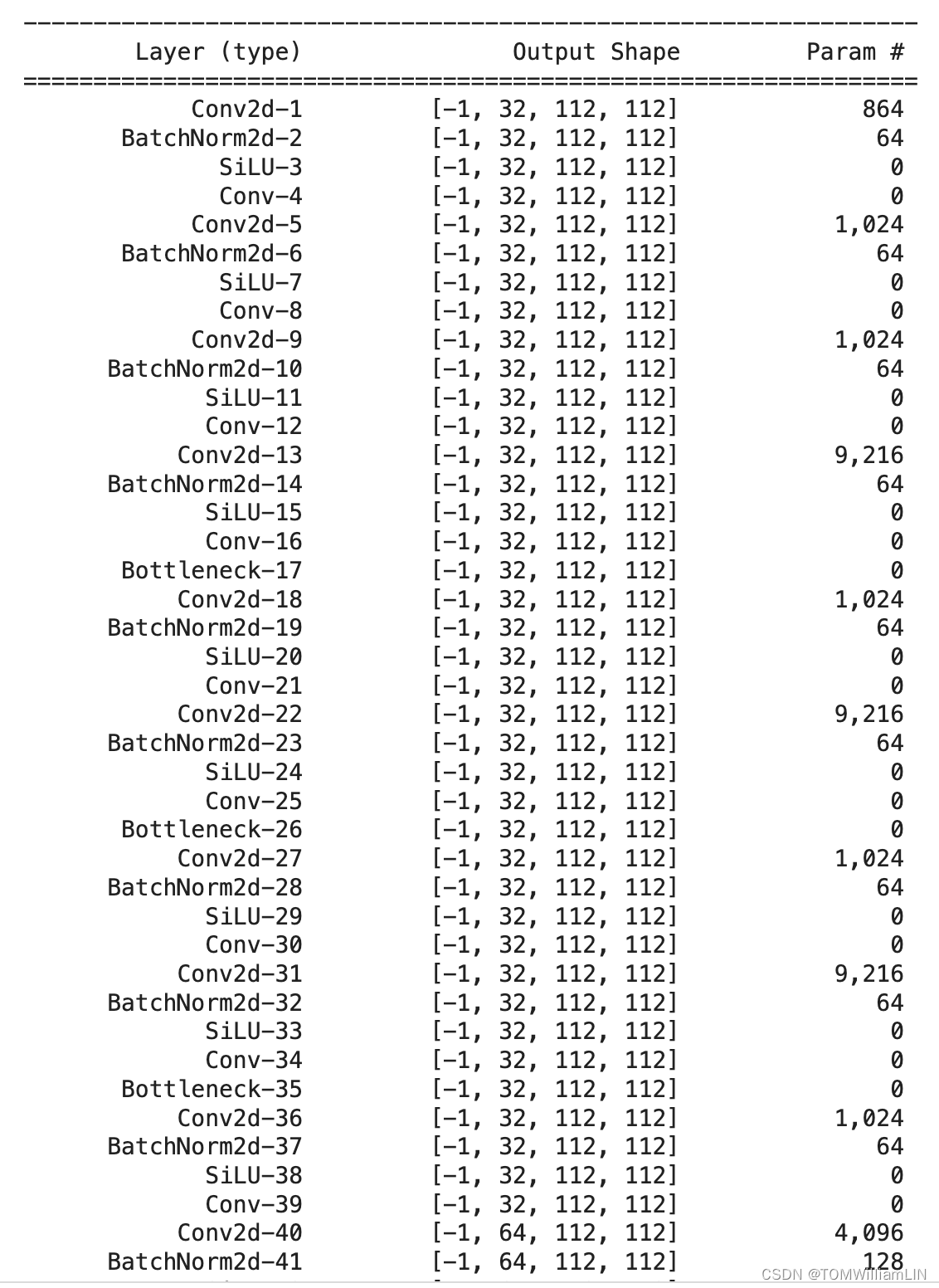

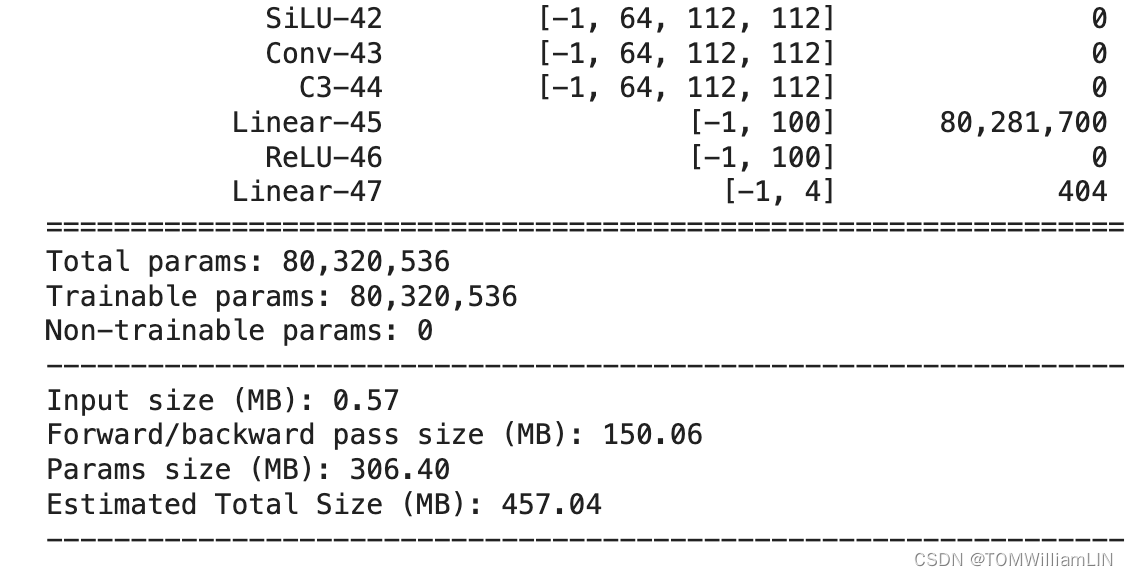

)import torchsummary as summary

summary.summary(model,(3,224,224))

三、训练模型

1.编写训练模型

def train(dataloader,model,loss_fn,optimizer):

size = len(dataloader.dataset)

num_batches = len(dataloader)

train_acc,train_loss = 0,0

for X,y in dataloader:

X, y = X.to(device), y.to(device)

pred = model(X)

loss = loss_fn(pred,y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

train_acc += (pred.argmax(1)== y).type(torch.float).sum().item()

train_loss += loss.item()

train_acc /= size

train_loss /= num_batches

return train_acc,train_loss

2.编写测试函数

def test(dataloader,model,loss_fn):

size = len(dataloader.dataset)

num_batches = len(dataloader)

test_acc,test_loss = 0,0

with torch.no_grad():

for imgs,target in dataloader:

imgs,target = imgs.to(device), target.to(device)

target_pred = model(imgs)

loss = loss_fn(target_pred,target)

test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

test_loss += loss.item()

test_acc /= size

test_loss /= num_batches

return test_acc,test_loss

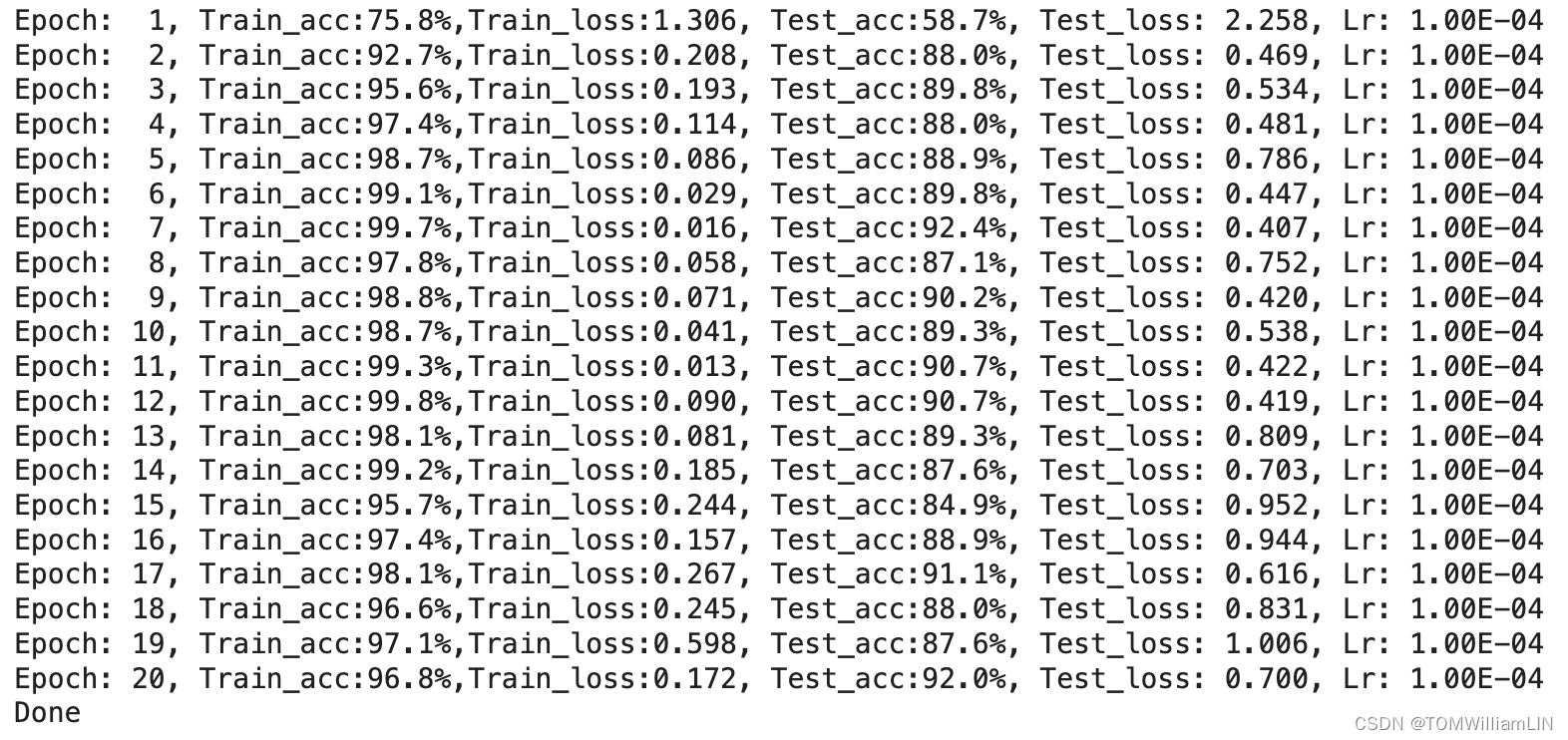

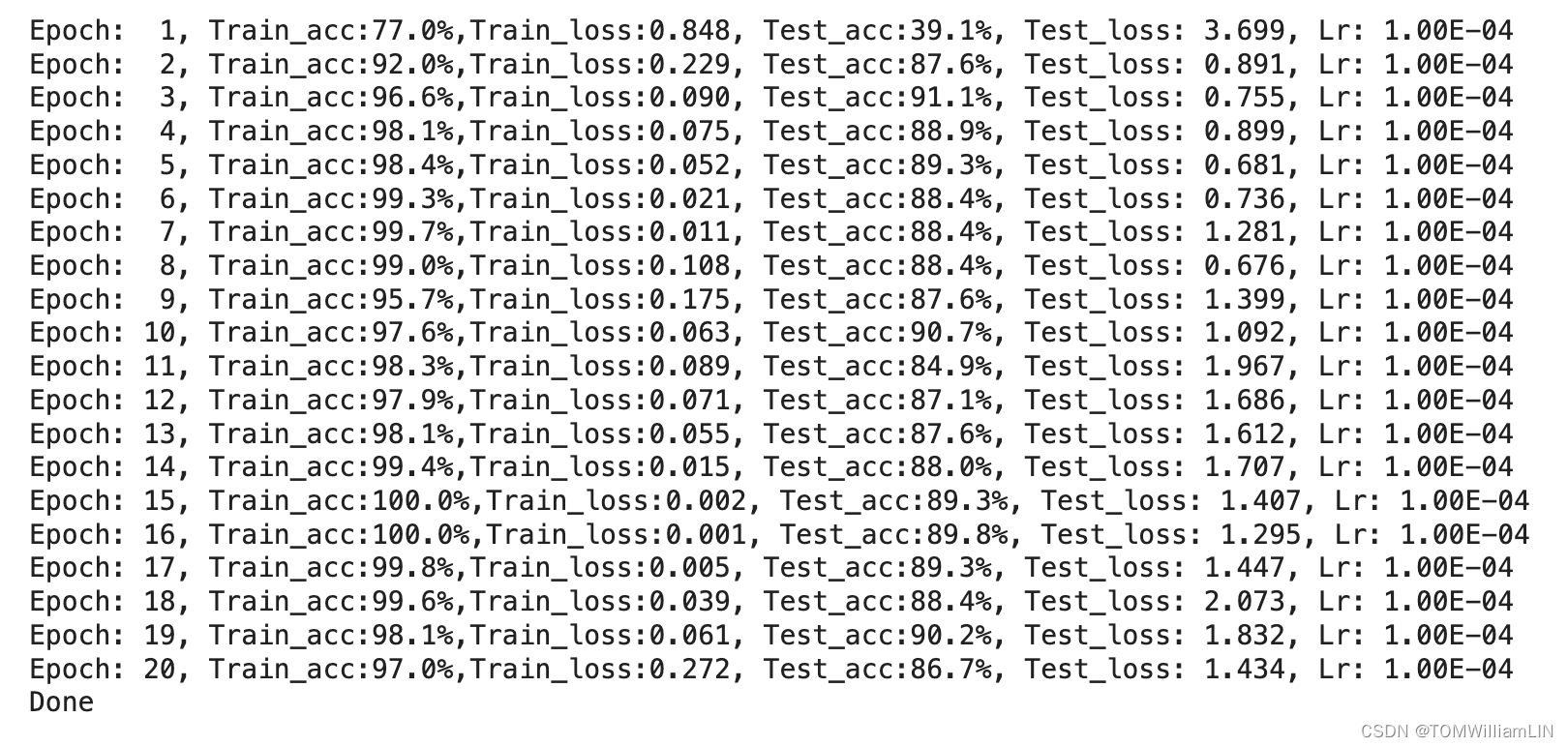

3.正式训练

import copy

optimizer = torch.optim.Adam(model.parameters(),lr=1e-4)

loss_fn = nn.CrossEntropyLoss()

epochs = 20

train_acc = []

train_loss = []

test_acc = []

test_loss = []

best_loss = 0

for epoch in range(epochs):

model.train()

epoch_train_acc,epoch_train_loss = train(train_dl,model,loss_fn,optimizer)

model.eval()

epoch_test_acc,epoch_test_loss = test(test_dl,model,loss_fn)

if epoch_test_acc > best_loss:

best_loss = epoch_test_acc

best_model = copy.deepcopy(model)

train_acc.append(epoch_train_acc)

train_loss.append(epoch_test_loss)

test_acc.append(epoch_test_acc)

test_loss.append(epoch_test_loss)

lr = optimizer.state_dict()['param_groups'][0]['lr']

template = ('Epoch: {:2d}, Train_acc:{:.1f}%,Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss: {:.3f}, Lr: {:.2E}')

print(template.format(epoch+1, epoch_train_acc*100,epoch_train_loss,epoch_test_acc*100,epoch_test_loss,lr))

PATH = './best_model.pth'

torch.save(model.state_dict(),PATH)

print("Done")

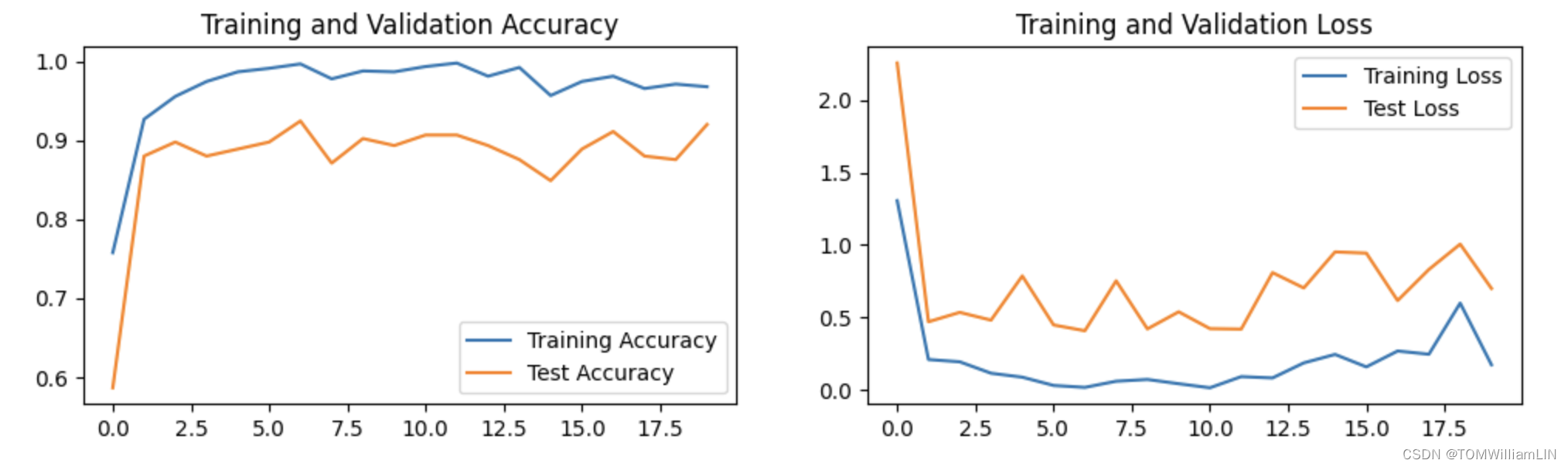

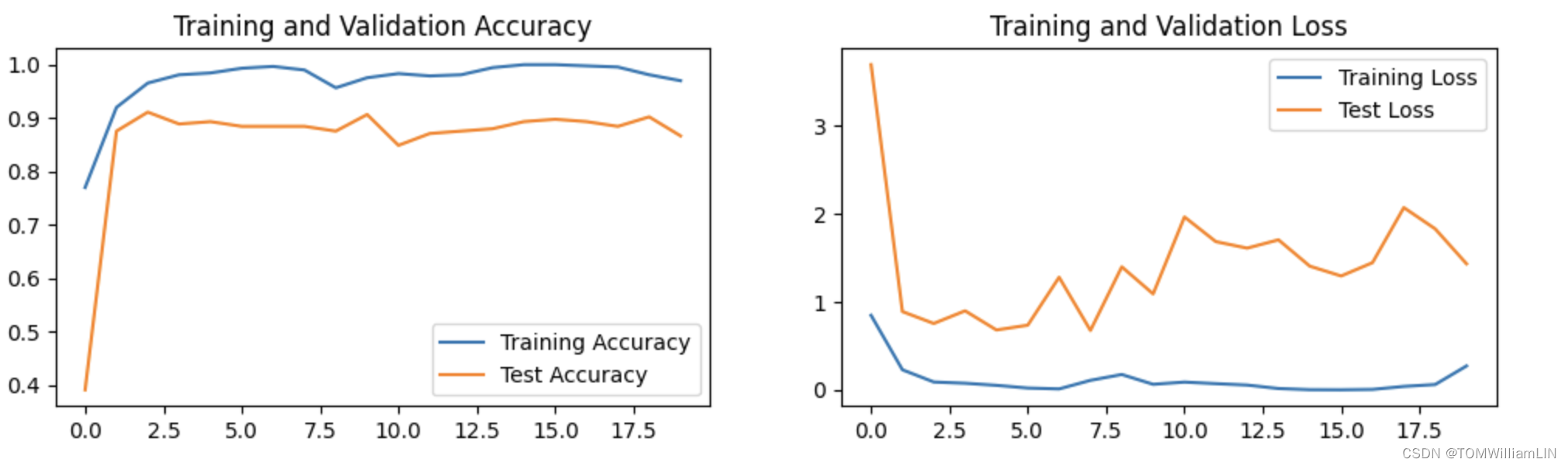

四、结果可视化

1.Loss 与 Accuracy图

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings("ignore")

epoch_range = range(epochs)

plt.figure(figsize=(12,3))

plt.subplot(121)

plt.plot(epoch_range,train_acc,label="Training Accuracy")

plt.plot(epoch_range,test_acc,label="Test Accuracy")

plt.legend(loc='lower right')

plt.title("Training and Validation Accuracy")

plt.subplot(122)

plt.plot(epoch_range,train_loss,label="Training Loss")

plt.plot(epoch_range,test_loss,label="Test Loss")

plt.legend(loc='upper right')

plt.show()

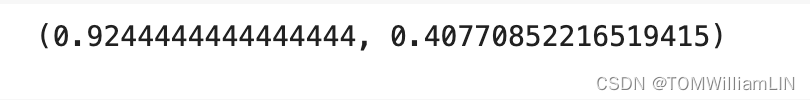

2.模型评估

best_model.eval()

epoch_test_acc, epoch_test_loss = test(test_dl,best_model,loss_fn)

epoch_test_acc,epoch_test_loss

epoch_test_acc

五、个人总结

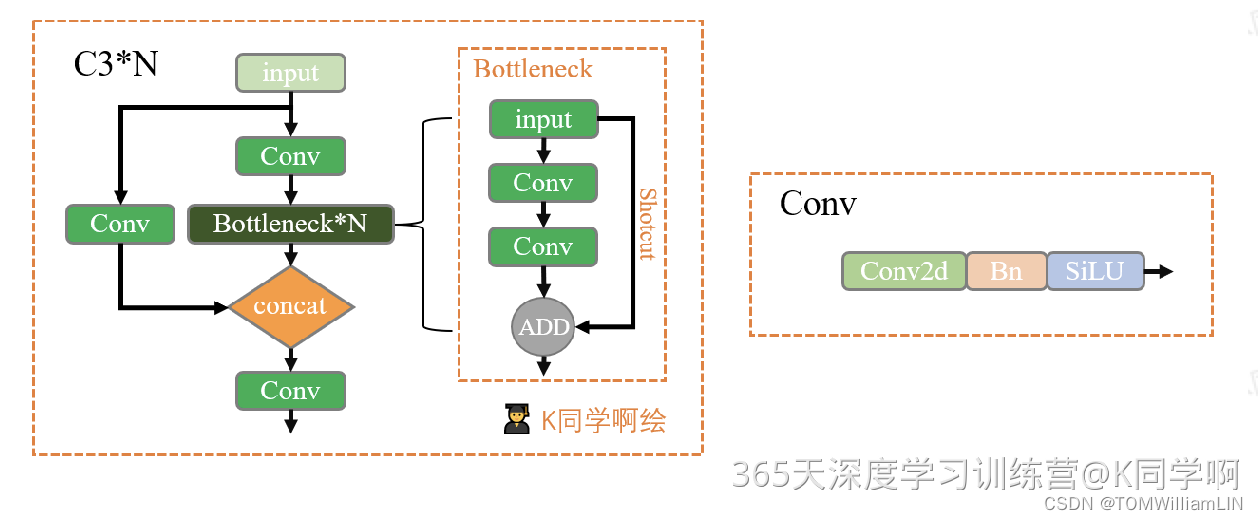

1. 了解 YOLOv5

在本次实战中,初步了解了YOLOv5 模型的整体架构,并且使用了其中C3模块来搭建模型

理解了C3模块的工作原理 C3 类是一个自定义的神经网络模块,,并且集成了多个卷积层和瓶颈模块。C3 是由三层卷积和n个瓶颈模块组成的这些瓶颈模块可以提升特征提取能力,同时利用残差连接来避免深层网络中的梯度消失问题,并且C3 模块的设计允许网络通过多个路径学习输入数据的特征,通过并行和连续的结构来提取不同的特征,并在最后通过合并层综合这些特征,从而丰富特征表示,增强模型的学习能力。

增加C3模块与Conv模块

class model_K(nn.Module):

def __init__(self):

super(model_K,self).__init__()

self.Conv = Conv(3,32,3,2)

self.C3_1 = C3(32,64,3)

self.Conv2 = Conv(64,128,3,2)

self.C3_2 = C3(128,256,3)

self.classifier = nn.Sequential(

nn.Linear(in_features=802816,out_features=100),

nn.ReLU(),

nn.Linear(in_features=100,out_features=4)

)

def forward(self,x):

x = self.Conv(x)

x = self.C3_1(x)

x = self.Conv2(x)

x = self.C3_2(x)

x = torch.flatten(x,start_dim=1)

x = self.classifier(x)

return x

在增加两个新的模块后发现模型的准确率并没有提高反而下降了,这是有可能增加了网络的复杂性,可能导致模型在训练集上过拟合,而在测试集或验证集上的泛化能力变差。

1434

1434

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?