CNN

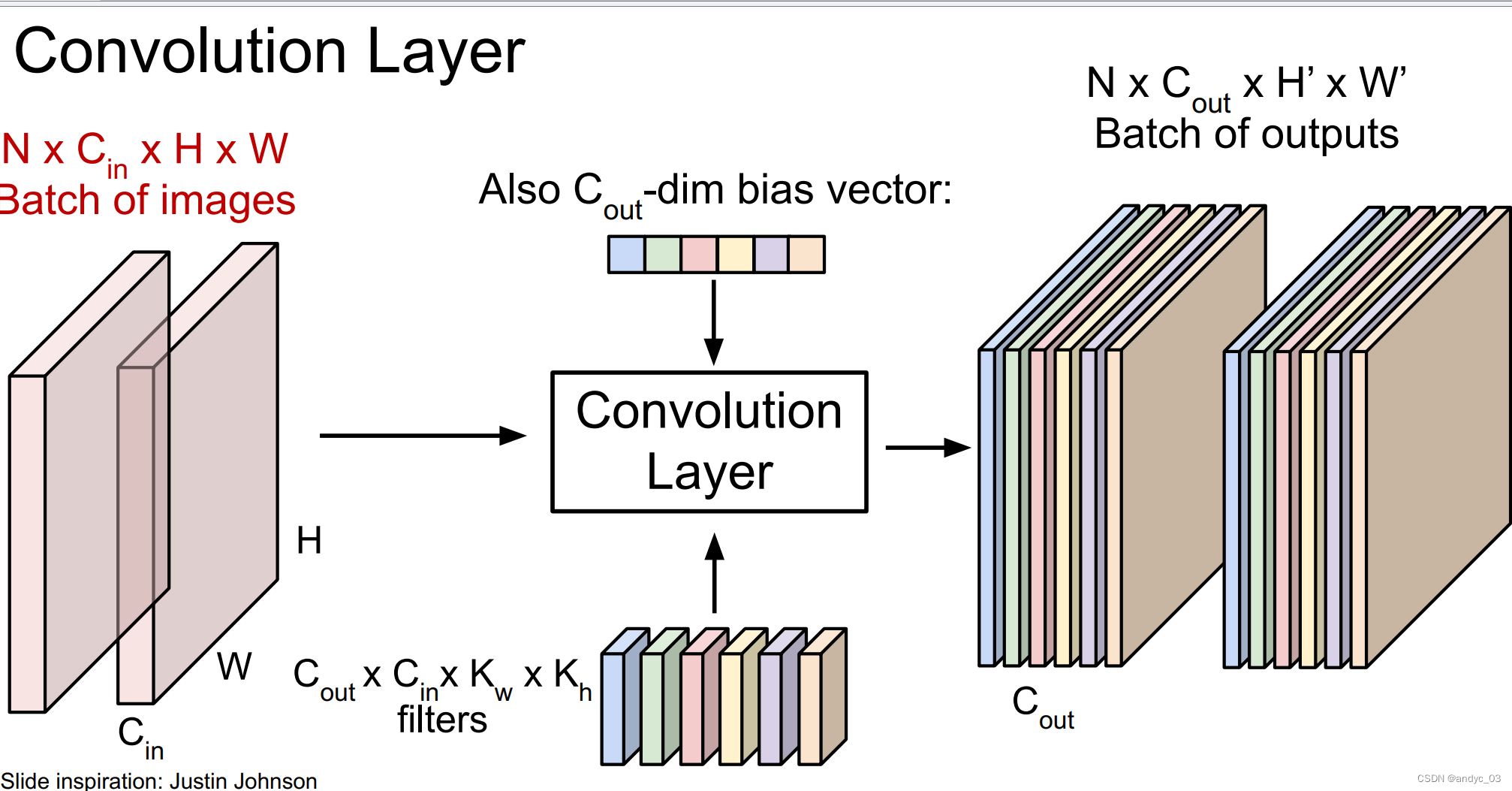

Convolutional Layer

We use a filter to slide over the image spatially (computing dot products)

Interspersed with activation function as well

What it learns?

First-layer conv filters: local image templates (Often learns oriented edges, opposing colors)

Problems:

- For large images, we need many layers to get information about the whole image

Solution: Downsample inside the network

-

Feature map shrinks with each layer

Solution: Padding : adding zeros around the input

Pooling layer

-> downsampling

Without parameters that needs to be learnt.

ex:

max pooling

Aver pooling

…

FC layer(Fully Connected)

The last layer should always be a FC layer.

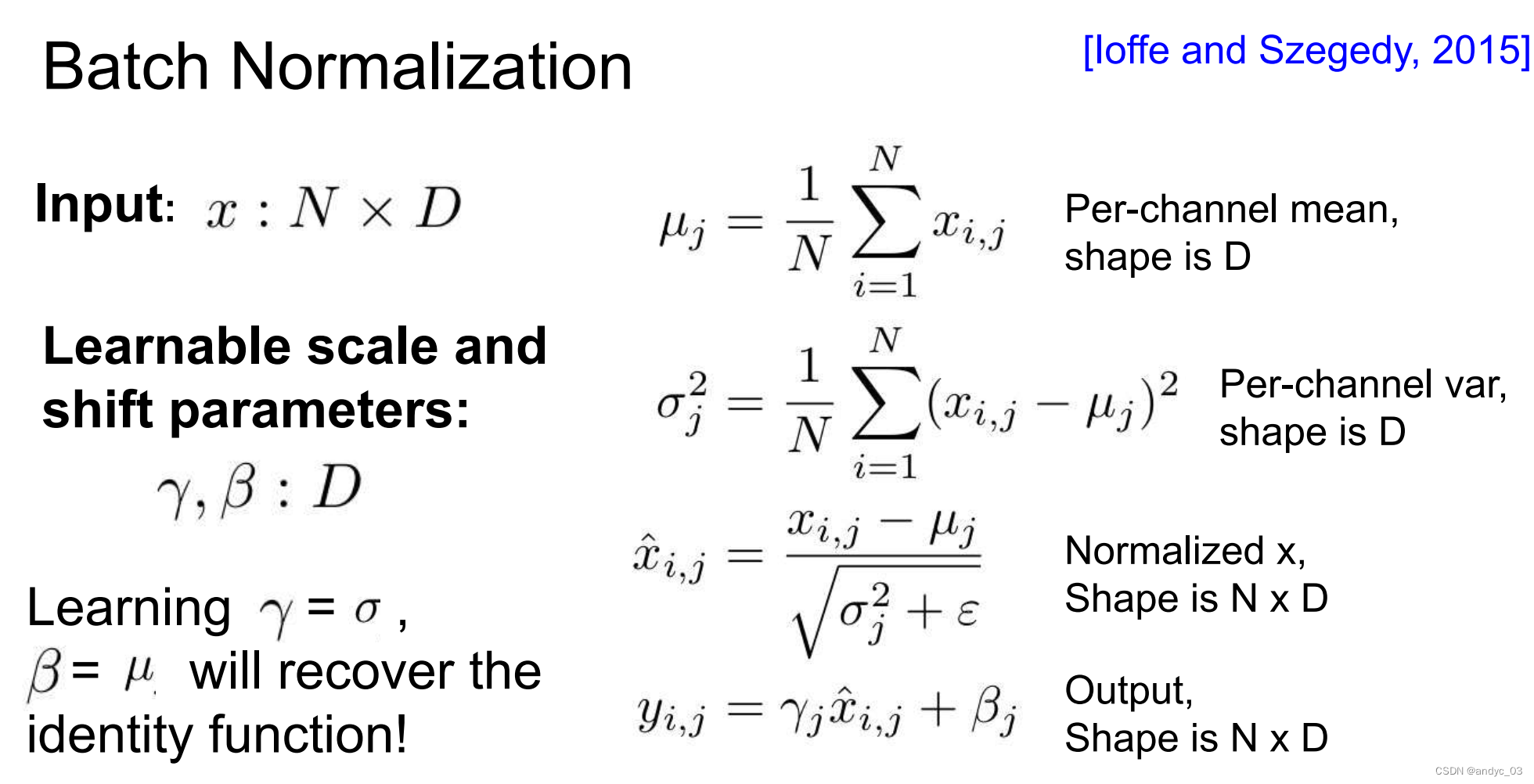

Batch normalization

we need to force inputs to be nicely scaled at each layer so that we can do the optimization more easily.

Usually inserted after FC layer / Convolutional layer, before non-linearity

Pros:

make the network easier to train

robust to initialization

Cons:

behaves differently during training and testing

Architechtures (History of ImageNet Challenge)

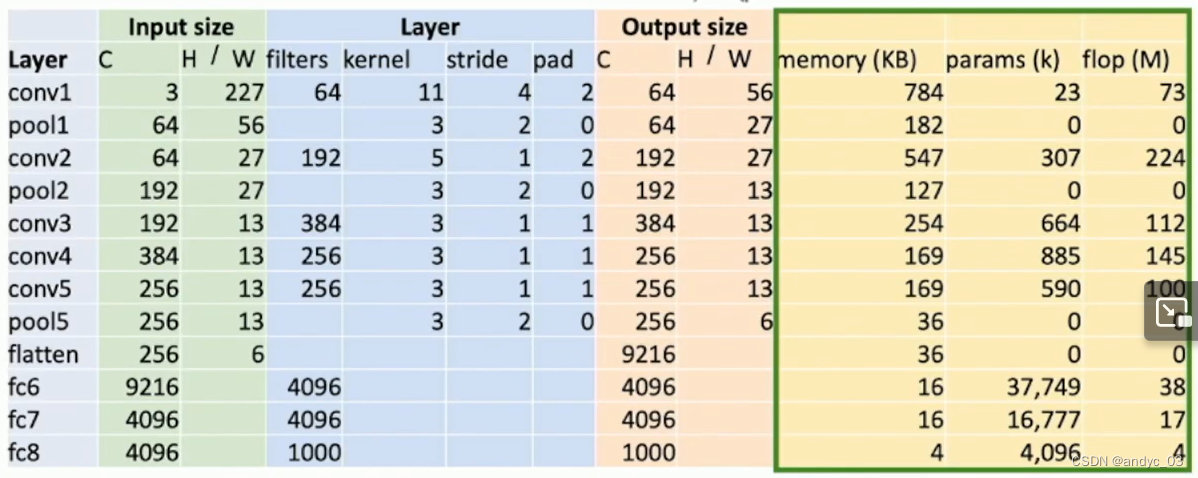

AlexNet

Input 3 * 277 * 277

Layer filters 64 kernel 11 stride 4 pad 2

We need to pay attention to the Memory, pramas, flop size

ZFNet

larger AlexNet

VGG

Rules:

- All conv 3*3 stride 1 pad 1

- max pool 2*2 stride 2

- after pool double channels

Stages:

conv-conv-pool

conv-conv-pool

conv-conv-pool

conv-conv-[conv]-pool

conv-conv-[conv]-pool

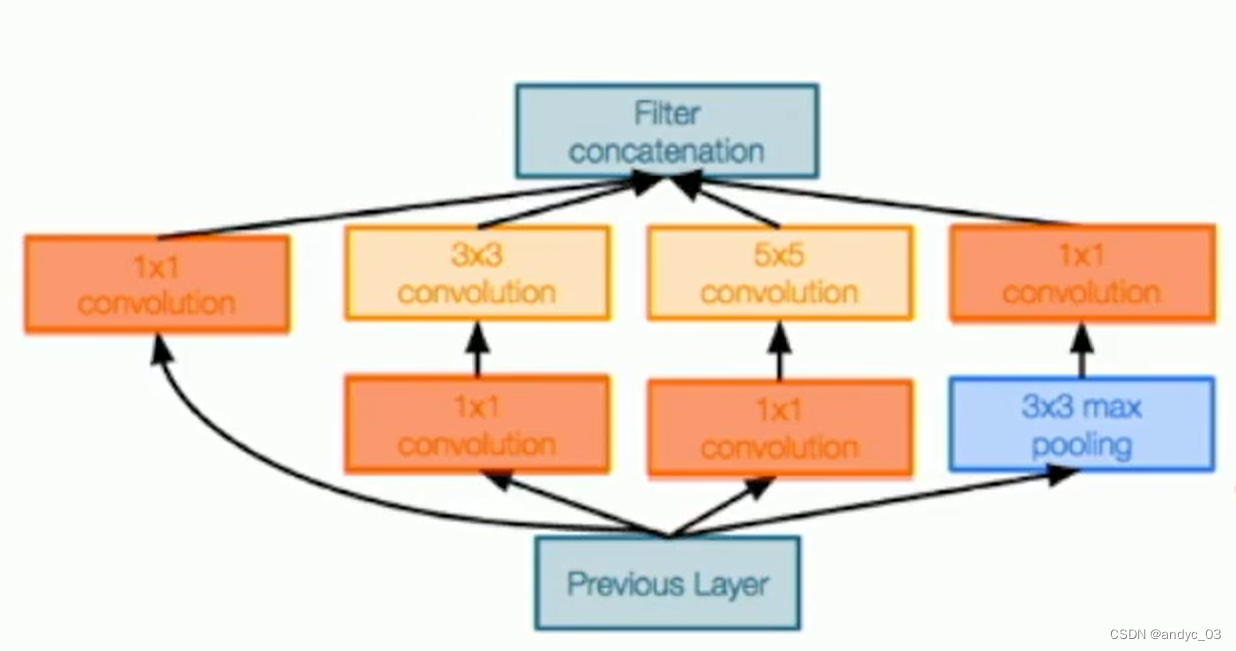

GoogLeNet

Stem network: aggressively downsamples input

Inception module:

Use such local unit with different kernal size

Use 1*1 Bottleneck to reduce channel dimensions

At the end, rather than flatting to destroy the spatial information with giant parameters

GoogLeNet use average pooling: 7 * 7 * 1024 -> 1024

There is only on FClayer at the last.

找到瓶颈位置,尽可能降低需要学习的参数数量/内存占用

Auxiliary Classifiers:

To help the deep network converge (batch normalization was not invented then): Auxiliary classification outputs to inject additional gradient at lower layers

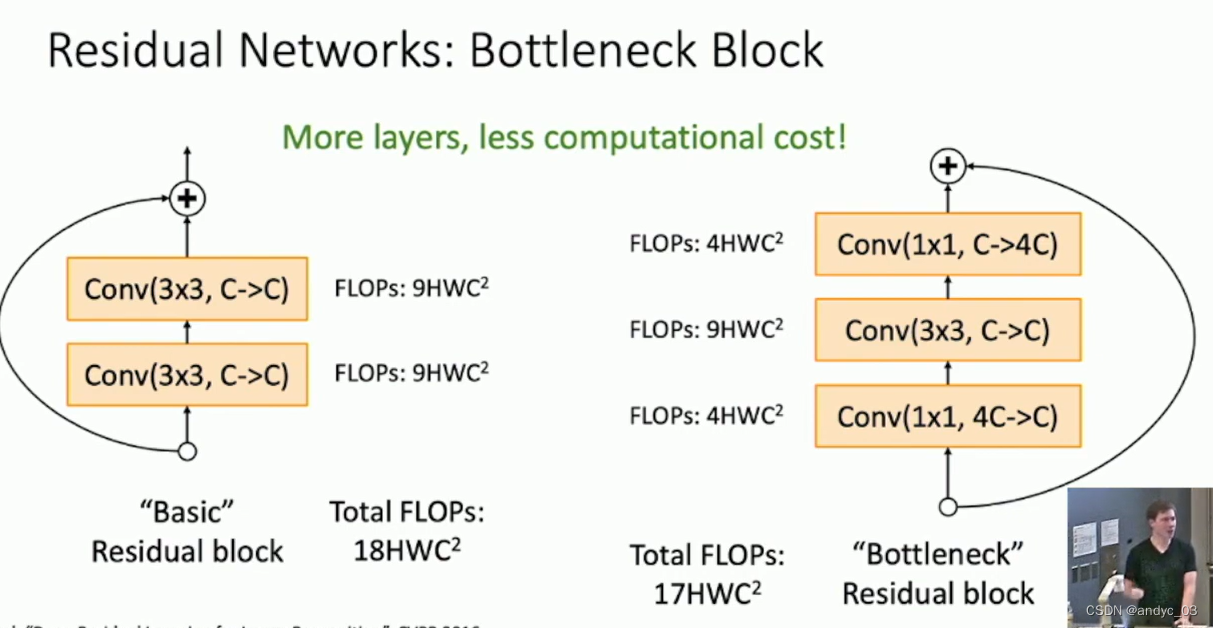

Residual Networks

We find out that, somtimes we make the net deeper but it turns out to be underfitted.

Deeper network should strictly have the capability to do whatever a shallow one can, but it’s hard to learn the parameters.

So we need the residual network!

This can help learning Identity, with all the parameters to be 0.

The still imitate VGG with its sat b

ResNeXt

Adding grops improves preforamance with same computational complexity.

MobileNets

reduce cost to make it affordable on mobile devices

Transfer learning

We can pretrain the model on a dataset.

When applying it to a new dataset, just finetune/Use linear classifier on the top layers.

Froze the main body of the net.

有一定争议,不需要预训练也能在2-3x的时间达到近似的效果

4946

4946

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?