MobileNetv1:

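MobileNets are a class of efficient models proposed for mobile and embedded vision applications. They are built on a streamlined architecture that uses depthwise separable convolutions to construct lightweight deep neural networks.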

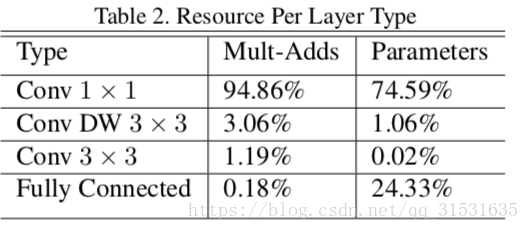

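The core building block of MobileNet is the depthwise separable convolution. The paper then describes the MobileNet network structure and two model-shrinking hyperparameters: the width multiplier and the resolution multiplier.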

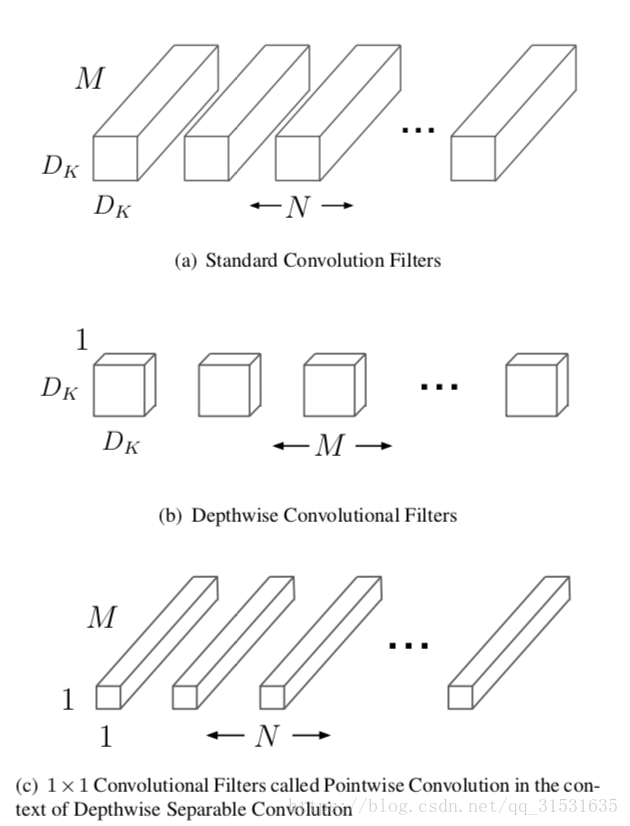

Depthwise separable convolution

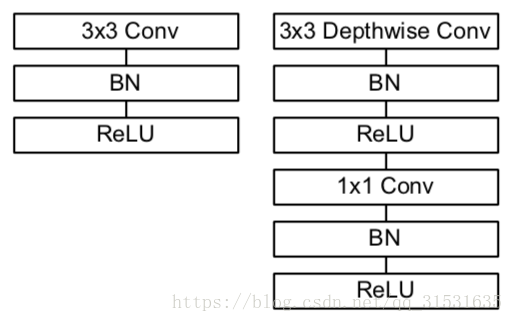

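MobileNet is a model based on depthwise separable convolutions, a form of factorized convolution that splits a standard convolution into a depthwise convolution and a 1x1 convolution called a pointwise convolution. In MobileNet, the depthwise convolution applies a single filter to each input channel, and the pointwise convolution then applies a 1x1 convolution to combine the outputs of the depthwise convolution. A standard convolution filters the inputs and combines them into a new set of outputs in a single step. The depthwise separable convolution splits this into two steps: one layer that filters each channel separately and one layer that combines the results. This factorization substantially reduces both the computation and the model size.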
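
To make the two-step factorization concrete, here is a minimal NumPy sketch (not the MobileNet implementation itself). The function names `depthwise_conv`, `pointwise_conv`, and `standard_conv`, the 'valid' padding, and the stride of 1 are assumptions made for illustration. The sketch checks that a depthwise convolution followed by a pointwise convolution matches a standard convolution whose kernel is the product of the two factors, while storing far fewer weights.

```python
import numpy as np


def depthwise_conv(x, dw_kernels):
    """x: (H, W, M); dw_kernels: (K, K, M). 'Valid' padding, stride 1."""
    h, w, m = x.shape
    k = dw_kernels.shape[0]
    out = np.zeros((h - k + 1, w - k + 1, m))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = x[i:i + k, j:j + k, :]  # (K, K, M)
            # Each channel is filtered only by its own K x K kernel.
            out[i, j, :] = np.sum(patch * dw_kernels, axis=(0, 1))
    return out


def pointwise_conv(x, pw_kernels):
    """x: (H, W, M); pw_kernels: (M, N). A 1x1 convolution mixes channels."""
    return x @ pw_kernels


def standard_conv(x, kernels):
    """x: (H, W, M); kernels: (K, K, M, N). 'Valid' padding, stride 1."""
    h, w, m = x.shape
    k, _, _, n = kernels.shape
    out = np.zeros((h - k + 1, w - k + 1, n))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = x[i:i + k, j:j + k, :]
            # Filtering and channel mixing happen in one step.
            out[i, j, :] = np.tensordot(patch, kernels, axes=([0, 1, 2], [0, 1, 2]))
    return out


rng = np.random.default_rng(0)
K, M, N = 3, 4, 5
x = rng.normal(size=(8, 8, M))
dw = rng.normal(size=(K, K, M))
pw = rng.normal(size=(M, N))

# Two steps: filter each channel, then combine channels.
sep_out = pointwise_conv(depthwise_conv(x, dw), pw)

# The equivalent (factorized) standard kernel: kernel[a, b, m, n] = dw[a, b, m] * pw[m, n].
std_out = standard_conv(x, dw[:, :, :, None] * pw[None, None, :, :])

sep_params = K * K * M + M * N
std_params = K * K * M * N
print(np.allclose(sep_out, std_out), sep_params, std_params)
```

The loops are written for readability rather than speed. The point is only that the separable form is a restricted standard convolution that needs `K*K*M + M*N` weights instead of `K*K*M*N`.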

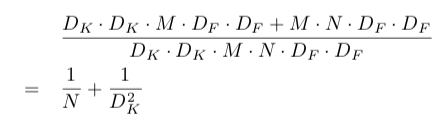

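The computational cost is then compared. Here $D_K$ is the kernel size, $D_F$ is the spatial size of the feature map, $M$ is the number of input channels, and $N$ is the number of output channels.

Standard convolution: $D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F$

Depthwise convolution: $D_K \cdot D_K \cdot M \cdot D_F \cdot D_F$

Depthwise convolution + pointwise convolution: $D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F$

Compared with the standard convolution: $\frac{D_K \cdot D_K \cdot M \cdot D_F \cdot D_F + M \cdot N \cdot D_F \cdot D_F}{D_K \cdot D_K \cdot M \cdot N \cdot D_F \cdot D_F} = \frac{1}{N} + \frac{1}{D_K^2}$. With the 3x3 depthwise separable convolutions used in MobileNet, this is 8 to 9 times less computation than a standard convolution.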
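
The reduction can be checked numerically. The sketch below assumes the cost formulas from the MobileNet paper, with `d_k` the kernel size, `m` and `n` the input and output channel counts, and `d_f` the feature map size. The function names and the example layer (3x3 kernel, 512 channels in and out, 14x14 feature map) are chosen for illustration.

```python
def standard_conv_cost(d_k, m, n, d_f):
    """Multiply-adds of a standard convolution."""
    return d_k * d_k * m * n * d_f * d_f


def depthwise_separable_cost(d_k, m, n, d_f):
    """Multiply-adds of a depthwise convolution plus a 1x1 pointwise convolution."""
    depthwise = d_k * d_k * m * d_f * d_f
    pointwise = m * n * d_f * d_f
    return depthwise + pointwise


# Illustrative layer: 3x3 kernel, 512 -> 512 channels, 14x14 feature map.
d_k, m, n, d_f = 3, 512, 512, 14
std = standard_conv_cost(d_k, m, n, d_f)
sep = depthwise_separable_cost(d_k, m, n, d_f)

ratio = sep / std                  # measured ratio
closed_form = 1 / n + 1 / d_k**2   # 1/N + 1/D_K^2
print(f"ratio={ratio:.4f}, closed form={closed_form:.4f}, speed-up={std / sep:.1f}x")
```

For this layer the speed-up comes out at roughly 8.8x. Because the `1/N` term is small for wide layers, the saving is dominated by `1/D_K^2`, which is 1/9 for a 3x3 kernel.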

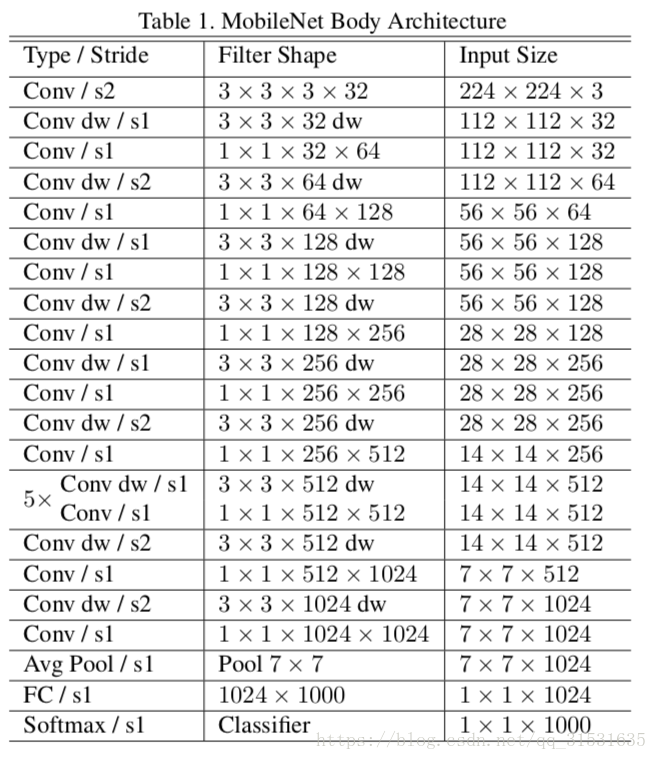

Network structure

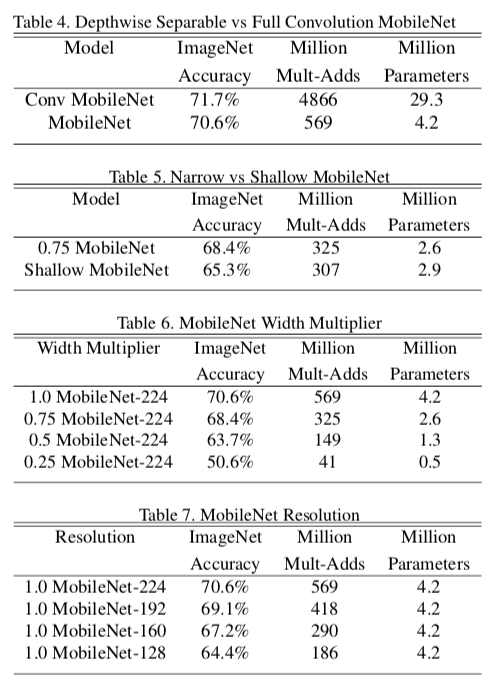

Width multiplier: thinner models

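Although the base MobileNet architecture is already small and low latency, a specific use case or application may require the model to be smaller and faster. To build these smaller and less computationally expensive models, the paper introduces a very simple parameter α called the width multiplier. The role of the width multiplier α is to thin the network uniformly at each layer. For a given layer and width multiplier α, the number of input channels M becomes αM and the number of output channels N becomes αN.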

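Cost: $D_K \cdot D_K \cdot \alpha M \cdot D_F \cdot D_F + \alpha M \cdot \alpha N \cdot D_F \cdot D_F$, which reduces the computation further, by roughly $\alpha^2$.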
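
As a rough illustration of the effect of α, the sketch below evaluates the cost of one depthwise separable layer for the four values of α that the implementation later in this post accepts. The function name `width_scaled_cost` and the example layer (3x3 kernel, 512 channels in and out, 14x14 feature map) are assumptions made for illustration.

```python
def width_scaled_cost(d_k, m, n, d_f, alpha):
    """Cost of a depthwise separable layer after thinning channels by alpha."""
    m_a, n_a = int(alpha * m), int(alpha * n)  # alpha*M input, alpha*N output channels
    return d_k * d_k * m_a * d_f * d_f + m_a * n_a * d_f * d_f


base = width_scaled_cost(3, 512, 512, 14, alpha=1.0)
relative = {}
for alpha in (1.0, 0.75, 0.5, 0.25):
    relative[alpha] = width_scaled_cost(3, 512, 512, 14, alpha) / base
    print(f"alpha={alpha}: relative cost {relative[alpha]:.3f}, alpha^2 = {alpha**2:.3f}")
```

The relative cost sits slightly above α² because the depthwise term scales only linearly with α, while the dominant pointwise term scales quadratically.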

Resolution multiplier: reduced representation

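The second hyperparameter for reducing the computational cost of the network is the resolution multiplier ρ. In practice, ρ is set implicitly by choosing the input resolution.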

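Cost: $D_K \cdot D_K \cdot \alpha M \cdot \rho D_F \cdot \rho D_F + \alpha M \cdot \alpha N \cdot \rho D_F \cdot \rho D_F$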
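
Since ρ is chosen implicitly through the input size, it can help to see how an input resolution maps to ρ and to the resulting cost. The sketch below assumes a 224x224 baseline and the input resolutions 224, 192, 160, and 128 used in the MobileNet paper. The function name `separable_cost` and the example layer (a 14x14 feature map at the baseline resolution) are illustrative assumptions.

```python
BASE_RESOLUTION = 224  # assumed baseline input size


def separable_cost(d_k, m, n, d_f, alpha=1.0, rho=1.0):
    """Cost of a depthwise separable layer with width and resolution multipliers."""
    m_a, n_a = int(alpha * m), int(alpha * n)
    d_f_r = round(rho * d_f)  # rho shrinks the feature map; round avoids float truncation
    return d_k * d_k * m_a * d_f_r * d_f_r + m_a * n_a * d_f_r * d_f_r


base = separable_cost(3, 512, 512, 14)
relative_cost = {}
for resolution in (224, 192, 160, 128):
    rho = resolution / BASE_RESOLUTION
    relative_cost[resolution] = separable_cost(3, 512, 512, 14, rho=rho) / base
    print(f"input {resolution}: rho={rho:.3f}, relative cost {relative_cost[resolution]:.3f}")
```

Both terms of the cost contain the feature map size squared, so the cost scales by ρ² regardless of the channel counts.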

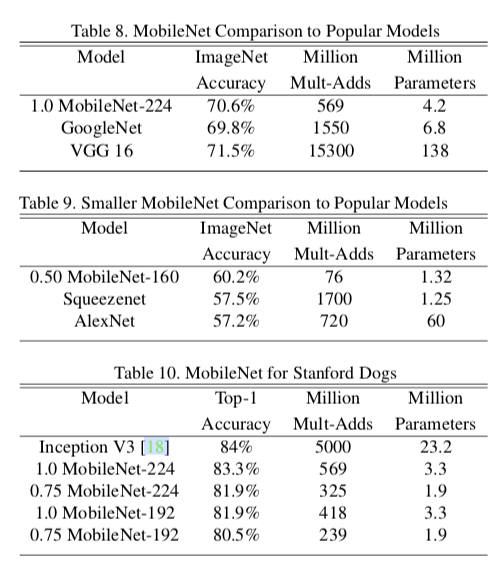

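That is the core idea of the paper: find ways to reduce computation. The rest is experimental validation, which shows that the parameter count drops substantially with only a small loss in accuracy.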

Network implementation:

# Definition of the mobilenet_v1 network (TensorFlow 1.x API)
import tensorflow as tf

# `const` is the author's project-level config module; it is not shown in this post.


def mobilenet_v1(inputs, alpha, is_training):
    # assert declares that the expression must be true;
    # an AssertionError means it was false.
    assert const.use_batch_norm

    # Width multiplier; must be one of 1.0, 0.75, 0.5, 0.25
    if alpha not in [0.25, 0.50, 0.75, 1.0]:
        raise ValueError('alpha can be one of '
                         '`0.25`, `0.50`, `0.75` or `1.0` only.')

    filter_initializer = tf.contrib.layers.xavier_initializer()

    # Convolution, BN, ReLU
    def _conv2d(inputs, filters, kernel_size, stride, scope=''):
        with tf.variable_scope(scope):
            outputs = tf.layers.conv2d(inputs, filters, kernel_size,
                                       strides=(stride, stride), padding='same',
                                       activation=None, use_bias=False,
                                       kernel_initializer=filter_initializer)
            # Batch normalization is applied before the nonlinearity.
            # When training, the ops in tf.GraphKeys.UPDATE_OPS must also be run
            # so that the moving mean and variance are updated.
            outputs = tf.layers.batch_normalization(outputs, training=is_training)
            outputs = tf.nn.relu(outputs)
        return outputs

    # Depthwise separable convolution: a standard convolution factorized into a
    # depthwise convolution and a pointwise convolution
    def _depthwise_conv2d(inputs,
                          pointwise_conv_filters,
                          depthwise_conv_kernel_size,
                          stride,
                          scope=''):
        with tf.variable_scope(scope):
            with tf.variable_scope('depthwise_conv'):
                # num_outputs=None skips the built-in pointwise step,
                # so this call performs only the depthwise convolution.
                outputs = tf.contrib.layers.separable_conv2d(
                    inputs,
                    None,
                    depthwise_conv_kernel_size,
                    depth_multiplier=1,
                    stride=(stride, stride),
                    padding='SAME',
                    activation_fn=None,
                    weights_initializer=filter_initializer,
                    biases_initializer=None)
                outputs = tf.layers.batch_normalization(outputs, training=is_training)
                outputs = tf.nn.relu(outputs)

            with tf.variable_scope('pointwise_conv'):
                # The width multiplier thins the pointwise output channels.
                pointwise_conv_filters = int(pointwise_conv_filters * alpha)
                outputs = tf.layers.conv2d(outputs,
                                           pointwise_conv_filters,
                                           (1, 1),
                                           padding='same',
                                           activation=None,
                                           use_bias=False,
                                           kernel_initializer=filter_initializer)
                outputs = tf.layers.batch_normalization(outputs, training=is_training)
                outputs = tf.nn.relu(outputs)
        return outputs

    # Global average pooling over the full spatial extent of the feature map
    def _avg_pool2d(inputs, scope=''):
        inputs_shape = inputs.get_shape().as_list()
        assert len(inputs_shape) == 4

        pool_height = inputs_shape[1]
        pool_width = inputs_shape[2]

        with tf.variable_scope(scope):
            outputs = tf.layers.average_pooling2d(inputs,
                                                  [pool_height, pool_width],
                                                  strides=(1, 1),
                                                  padding='valid')
        return outputs

    with tf.variable_scope('mobilenet_v1', 'mobilenet_v1', [inputs]):
        end_points = {}
        net = inputs

        # Note: the 32 filters of this first convolution are not scaled by alpha
        # in this implementation; only the pointwise filters are.
        net = _conv2d(net, 32, [3, 3], stride=2, scope='block0')
        end_points['block0'] = net
        net = _depthwise_conv2d(net, 64, [3, 3], stride=1, scope='block1')
        end_points['block1'] = net

        net = _depthwise_conv2d(net, 128, [3, 3], stride=2, scope='block2')
        end_points['block2'] = net
        net = _depthwise_conv2d(net, 128, [3, 3], stride=1, scope='block3')
        end_points['block3'] = net

        net = _depthwise_conv2d(net, 256, [3, 3], stride=2, scope='block4')
        end_points['block4'] = net
        net = _depthwise_conv2d(net, 256, [3, 3], stride=1, scope='block5')
        end_points['block5'] = net

        net = _depthwise_conv2d(net, 512, [3, 3], stride=2, scope='block6')
        end_points['block6'] = net
        net = _depthwise_conv2d(net, 512, [3, 3], stride=1, scope='block7')
        end_points['block7'] = net
        net = _depthwise_conv2d(net, 512, [3, 3], stride=1, scope='block8')
        end_points['block8'] = net
        net = _depthwise_conv2d(net, 512, [3, 3], stride=1, scope='block9')
        end_points['block9'] = net
        net = _depthwise_conv2d(net, 512, [3, 3], stride=1, scope='block10')
        end_points['block10'] = net
        net = _depthwise_conv2d(net, 512, [3, 3], stride=1, scope='block11')
        end_points['block11'] = net

        net = _depthwise_conv2d(net, 1024, [3, 3], stride=2, scope='block12')
        end_points['block12'] = net
        net = _depthwise_conv2d(net, 1024, [3, 3], stride=1, scope='block13')
        end_points['block13'] = net

        output = _avg_pool2d(net, scope='output')

    return output, end_points
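
To see what the network definition above produces without running TensorFlow, the following pure-Python sketch traces the feature map shape through each block. The `BLOCKS` table is copied from the filter counts and strides in the code above; the helper name `trace_shapes` and the 224x224 default input are assumptions for illustration. It assumes 'same' padding, so each stride-s layer maps a size to `ceil(size / s)`.

```python
import math

# (scope, filters, stride), copied from the network definition above.
BLOCKS = (
    [("block0", 32, 2), ("block1", 64, 1),
     ("block2", 128, 2), ("block3", 128, 1),
     ("block4", 256, 2), ("block5", 256, 1),
     ("block6", 512, 2)]
    + [(f"block{i}", 512, 1) for i in range(7, 12)]
    + [("block12", 1024, 2), ("block13", 1024, 1)]
)


def trace_shapes(input_size=224, alpha=1.0):
    """Return {scope: (height, width, channels)} for a square input."""
    shapes = {}
    size = input_size
    for scope, filters, stride in BLOCKS:
        size = math.ceil(size / stride)  # 'same' padding
        # As in the code above, block0 is not scaled by alpha.
        channels = filters if scope == "block0" else int(filters * alpha)
        shapes[scope] = (size, size, channels)
    # Global average pooling collapses the spatial dimensions to 1x1.
    shapes["output"] = (1, 1, shapes["block13"][2])
    return shapes


full = trace_shapes(224, alpha=1.0)
half = trace_shapes(224, alpha=0.5)
for scope, shape in full.items():
    print(scope, shape)
```

With a 224x224 input the five stride-2 layers reduce the feature map to 7x7 before pooling. Note that the function above returns pooled features only; it does not include a classification layer.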
