warnings.warn(msg)

Downloading: "https://download.pytorch.org/models/resnet18-f37072fd.pth" to /home/thomas/.cache/torch/hub/checkpoints/resnet18-f37072fd.pth

100.0%

0.672721, 30.660010

save

Traceback (most recent call last):

File "/home/thomas/dev_ws/install/line_follower_model/lib/line_follower_model/training", line 33, in \<module\>

sys.exit(load_entry_point('line-follower-model==0.0.0', 'console_scripts', 'training')())

File "/home/thomas/dev_ws/install/line_follower_model/lib/python3.10/site-packages/line_follower_model/training_member_function.py", line 131, in main

torch.save(model.state_dict(), BEST_MODEL_PATH)

File "/home/thomas/.local/lib/python3.10/site-packages/torch/serialization.py", line 628, in save

with _open_zipfile_writer(f) as opened_zipfile:

File "/home/thomas/.local/lib/python3.10/site-packages/torch/serialization.py", line 502, in _open_zipfile_writer

return container(name_or_buffer)

File "/home/thomas/.local/lib/python3.10/site-packages/torch/serialization.py", line 473, in \_\_init\_\_

super().\_\_init\_\_(torch._C.PyTorchFileWriter(self.name))

RuntimeError: File ./best_line_follower_model_xy.pth cannot be opened.

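The cause is missing write permission on the folder. The training script writes best\_line\_follower\_model\_xy.pth to the current directory. The ls -l output below shows that the package directories are owned by root, so the regular user thomas cannot create files in them. Here the permissions were opened up with chmod 777. Running sudo chown -R thomas:thomas on the same directories would be a narrower fix: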

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning$ ls -l

total 8

drwxr-xr-x 3 root root 4096 Mar 27 11:03 10_model_convert

drwxr-xr-x 7 root root 4096 Mar 27 14:29 line_follower_model

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning$ sudo chmod 777 *

[sudo] password for thomas:

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning$ ls

10_model_convert line_follower_model

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning$ ls -l

total 8

drwxrwxrwx 3 root root 4096 Mar 27 11:03 10_model_convert

drwxrwxrwx 7 root root 4096 Mar 27 14:29 line_follower_model
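The permission problem only surfaced when torch.save ran after the first epoch. It can therefore be worth checking the save location before training starts.

Below is a minimal sketch, assuming the checkpoint path is known up front. check_writable is a hypothetical helper, not part of the line_follower_model package:

```python
import os
from pathlib import Path


def check_writable(save_path: str) -> None:
    """Fail early if the checkpoint's parent directory cannot be written.

    Hypothetical helper: call it before the training loop so a permission
    problem shows up before any epoch runs, not at the first torch.save().
    """
    parent = Path(save_path).resolve().parent
    if not parent.is_dir():
        raise FileNotFoundError(f"directory does not exist: {parent}")
    # os.access checks the real user's permissions (e.g. a root-owned
    # drwxr-xr-x directory is not writable for an ordinary user).
    if not os.access(parent, os.W_OK):
        raise PermissionError(
            f"cannot write to {parent}; check ownership with `ls -l`"
        )
```

Calling check_writable("./best_line_follower_model_xy.pth") at the top of the training entry point would have raised PermissionError immediately in the first run above, instead of after downloading weights and training one epoch.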

Run the training again:

ros2 run line_follower_model training

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning/line_follower_model$ ros2 run line_follower_model training

/home/thomas/.local/lib/python3.10/site-packages/torchvision/models/_utils.py:208: UserWarning: The parameter 'pretrained' is deprecated since 0.13 and may be removed in the future, please use 'weights' instead.

warnings.warn(

/home/thomas/.local/lib/python3.10/site-packages/torchvision/models/_utils.py:223: UserWarning: Arguments other than a weight enum or None for 'weights' are deprecated since 0.13 and may be removed in the future. The current behavior is equivalent to passing weights=ResNet18_Weights.IMAGENET1K_V1. You can also use weights=ResNet18_Weights.DEFAULT to get the most up-to-date weights.

warnings.warn(msg)

0.722548, 6.242182

save

0.087550, 5.827808

save

0.045032, 0.380008

save

0.032235, 0.111976

save

0.027896, 0.039962

save

0.030725, 0.204738

0.025075, 0.036258

save

0.028099, 0.040965

0.016858, 0.032197

save

0.019491, 0.036230

0.018325, 0.043560

0.019858, 0.322563

0.015115, 0.070269

0.014820, 0.030373

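Training takes a while, anywhere from tens of minutes to an hour, so be patient. When it finishes, the generated file best\_line\_follower\_model\_xy.pth appears in the directory: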

thomas@thomas-J20:~/dev_ws/src/originbot_desktop/originbot_deeplearning/line_follower_model$ ls -l

total 54892

-rw-rw-r-- 1 thomas thomas 44789846 Mar 28 13:28 best_line_follower_model_xy.pth
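Each line printed during training holds two numbers, and save appears only after some of them. Judging from the output alone (the training script is not shown here), the pairs look like a training loss and a test loss per epoch. A checkpoint appears to be written whenever the test loss reaches a new minimum.

Under that assumption, the rule can be sketched as follows. checkpoint_epochs is a hypothetical name:

```python
def checkpoint_epochs(test_losses):
    """Return the 0-based epochs at which a 'save on improvement' rule fires.

    Sketch of the common pattern: remember the lowest test loss seen so far
    and save a checkpoint only when a new minimum appears.
    """
    best = float("inf")
    saved = []
    for epoch, loss in enumerate(test_losses):
        if loss < best:
            best = loss
            saved.append(epoch)  # in a real script: print("save") + torch.save(...)
    return saved


# Second-column values of the first nine epochs of the second run above.
losses = [6.242182, 5.827808, 0.380008, 0.111976, 0.039962,
          0.204738, 0.036258, 0.040965, 0.032197]
print(checkpoint_epochs(losses))  # [0, 1, 2, 3, 4, 6, 8]
```

The predicted epochs match where save was printed for those nine lines: it is skipped after 0.204738 and 0.040965 because neither beats the best loss so far.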

#### **Model Conversion**

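A floating-point model produced by PyTorch training runs inefficiently if deployed directly on the RDK X3. To run it efficiently and make use of the BPU's 5 TOPS of compute, the floating-point model has to be converted into a fixed-point (quantized) model.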

![Model conversion](https://www.originbot.org/application/image/deeplearning_line_follower/model_transform.jpg)
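To make "float to fixed point" concrete, here is a generic symmetric int8 quantization sketch. It is a textbook scheme, not necessarily Horizon's exact algorithm (the build log below reports calibrating with a KL method).

The threshold value is borrowed from the /conv1/Conv row of the Threshold column in that log. Treating it as the clipping range is an assumption. Both function names are hypothetical:

```python
def quantize_symmetric_int8(values, threshold):
    """Map floats to int8 codes with one symmetric, per-tensor threshold.

    scale = threshold / 127; q = clamp(round(x / scale), -128, 127).
    Values whose magnitude exceeds the threshold saturate.
    """
    scale = threshold / 127.0
    codes = [max(-128, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from int8 codes."""
    return [c * scale for c in codes]


# Threshold taken from the /conv1/Conv row of the build log (3.186383).
codes, scale = quantize_symmetric_int8([0.0, 1.0, -2.5, 4.0], threshold=3.186383)
print(codes)  # [0, 40, -100, 127]  (4.0 is beyond the threshold and saturates)
print(dequantize(codes, scale))
```

Calibration exists to pick thresholds like this one for every tensor. A threshold that is too small clips large activations, while one that is too large wastes int8 resolution on rare outliers.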

##### **Generating the ONNX Model**

Next, run generate\_onnx to convert the trained model into an ONNX model:

ros2 run line_follower_model generate_onnx

This produces the model best\_line\_follower\_model\_xy.onnx in the current directory:

thomas@J-35:~/dev_ws/src/originbot_desktop/originbot_deeplearning/line_follower_model$ ls -l

total 98556

-rw-rw-r-- 1 thomas thomas 44700647 Apr 2 21:02 best_line_follower_model_xy.onnx

-rw-rw-r-- 1 thomas thomas 44789846 Apr 2 19:37 best_line_follower_model_xy.pth

##### **Starting the AI Toolchain Docker Container**

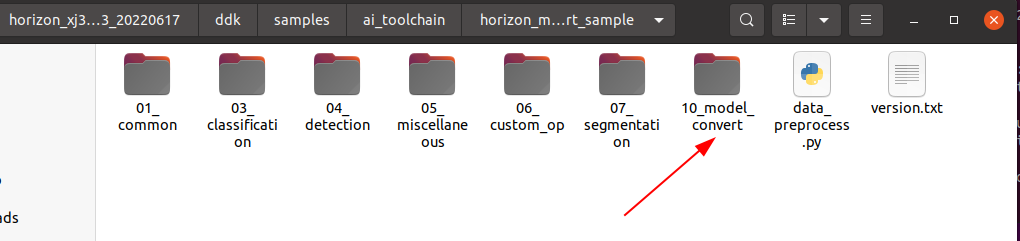

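Extract the AI toolchain Docker image and the OE (Open Explorer) package downloaded earlier. The OE package has the following directory structure: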

.

├── bsp

│ └── X3J3-Img-PL2.2-V1.1.0-20220324.tgz

├── ddk

│ ├── package

│ ├── samples

│ └── tools

├── doc

│ ├── cn

│ ├── ddk_doc

│ └── en

├── release_note-CN.txt

├── release_note-EN.txt

├── run_docker.sh

└── tools

├── 0A_CP210x_USB2UART_Driver.zip

├── 0A_PL2302-USB-to-Serial-Comm-Port.zip

├── 0A_PL2303-M_LogoDriver_Setup_v202_20200527.zip

├── 0B_hbupdate_burn_secure-key1.zip

├── 0B_hbupdate_linux_cli_v1.1.tgz

├── 0B_hbupdate_linux_gui_v1.1.tgz

├── 0B_hbupdate_mac_v1.0.5.app.tar.gz

└── 0B_hbupdate_win64_v1.1.zip

Copy the 10\_model\_convert package from the originbot\_desktop code repository into the ddk/samples/ai\_toolchain/horizon\_model\_convert\_sample/03\_classification/ directory of the OE development package.

![](https://www.originbot.org/application/image/deeplearning_line_follower/2022-09-15_17-23.png)

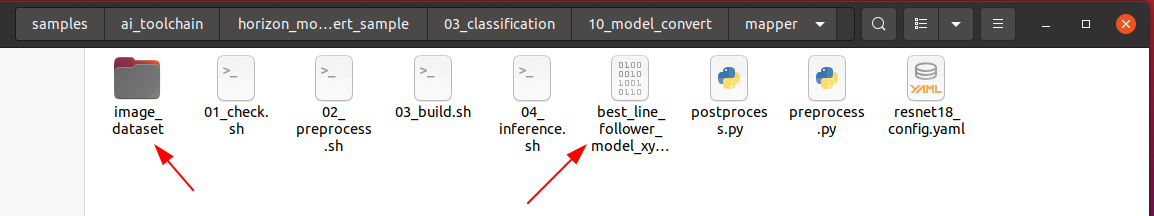

Next, copy two items into the ddk/samples/ai\_toolchain/horizon\_model\_convert\_sample/03\_classification/10\_model\_convert/mapper/ directory:

- the labeled dataset folder image\_dataset from the line\_follower\_model package;
- the generated best\_line\_follower\_model\_xy.onnx model.

Keep only about 100 images in image\_dataset; they are used for calibration:

![](https://www.originbot.org/application/image/deeplearning_line_follower/2022-09-16_17-20.png)
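The steps above only say to keep about 100 images for calibration. Instead of pruning the folder by hand, you can copy a random sample into the mapper directory.

This is a minimal sketch, assuming the labeled images sit directly inside image_dataset. The helper name make_calibration_subset is hypothetical:

```python
import random
import shutil
from pathlib import Path


def make_calibration_subset(src_dir, dst_dir, count=100, seed=0):
    """Copy a random subset of files from src_dir into dst_dir.

    Hypothetical helper: random sampling is one reasonable way to choose
    calibration images; a fixed seed makes the choice reproducible.
    Returns the number of files copied.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    images = sorted(p for p in src.iterdir() if p.is_file())
    chosen = random.Random(seed).sample(images, min(count, len(images)))
    dst.mkdir(parents=True, exist_ok=True)
    for path in chosen:
        shutil.copy2(path, dst / path.name)  # keep the original file name
    return len(chosen)
```

Point dst_dir at an empty mapper/image_dataset folder. The helper only adds files, so a folder that already holds the full dataset would still contain all of it afterwards.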

Then go back to the root directory of the OE package and start the AI toolchain Docker image:

cd /home/thomas/Me/deeplearning/horizon_xj3_open_explorer_v2.3.3_20220727/

sh run_docker.sh /data/

##### **Generating Calibration Data**

Inside the running Docker container, run the following:

cd ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper

sh 02_preprocess.sh

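The command output is shown below. The two "[: unexpected operator" messages are printed by run_docker.sh itself. They typically appear when a script written for bash is run with sh (dash on Ubuntu). Here they did not prevent the container from starting.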

thomas@J-35:~/Me/deeplearning/horizon_xj3_open_explorer_v2.3.3_20220727$ sudo sh run_docker.sh /data/

[sudo] password for thomas:

run_docker.sh: 14: [: unexpected operator

run_docker.sh: 23: [: openexplorer/ai_toolchain_centos_7_xj3: unexpected operator

docker version is v2.3.3

dataset path is /data

open_explorer folder path is /home/thomas/Me/deeplearning/horizon_xj3_open_explorer_v2.3.3_20220727

[root@1e1a1a7e24f4 open_explorer]# cd ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper

[root@1e1a1a7e24f4 mapper]# sh 02_preprocess.sh

cd $(dirname $0) || exit

python3 ../../../data_preprocess.py

--src_dir ./image_dataset

--dst_dir ./calibration_data_bgr_f32

--pic_ext .rgb

--read_mode opencv

Warning please note that the data type is now determined by the name of the folder suffix

Warning if you need to set it explicitly, please configure the value of saved_data_type in the preprocess shell script

regular preprocess

write:./calibration_data_bgr_f32/xy_008_160_31a8e30a-eca6-11ee-bb07-dfd665df7b81.rgb

write:./calibration_data_bgr_f32/xy_009_160_39c18c40-eca6-11ee-bb07-dfd665df7b81.rgb

write:./calibration_data_bgr_f32/xy_028_092_3327df66-ec9b-11ee-bb07-dfd665df7b81.rgb
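A quick sanity check on the preprocessing output, relying on two facts from the build log below. First, the model input is [1, 3, 224, 224]. Second, the calibration data type is float32, determined from the f32 suffix of the directory name.

Assuming each calibration file is a headerless float32 tensor of that shape (an assumption, not something the toolchain output states), every file should be 1 × 3 × 224 × 224 × 4 = 602112 bytes. The hypothetical helper below lists files that don't match:

```python
from pathlib import Path

# Assumption: one raw float32 tensor of shape (1, 3, 224, 224) per file,
# with no header, matching the input shape reported by hb_mapper.
EXPECTED_BYTES = 1 * 3 * 224 * 224 * 4  # 602112


def bad_calibration_files(cal_dir, expected=EXPECTED_BYTES):
    """Return the sorted names of files whose size differs from `expected`."""
    return sorted(
        p.name
        for p in Path(cal_dir).iterdir()
        if p.is_file() and p.stat().st_size != expected
    )
```

For example, bad_calibration_files("calibration_data_bgr_f32") returning an empty list means every file has the expected size. A non-empty list points at images that were not resized or converted as expected.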


##### **Compiling the Fixed-Point Model**

Next, run the following commands to generate the fixed-point model file, which will be deployed on the robot later:

cd ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper

sh 03_build.sh

The command output is as follows:

[root@1e1a1a7e24f4 mapper]# sh 03_build.sh

2024-04-02 21:46:50,078 INFO Start hb_mapper…

2024-04-02 21:46:50,079 INFO log will be stored in /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/hb_mapper_makertbin.log

2024-04-02 21:46:50,079 INFO hbdk version 3.37.2

2024-04-02 21:46:50,080 INFO horizon_nn version 0.14.0

2024-04-02 21:46:50,080 INFO hb_mapper version 1.9.9

2024-04-02 21:46:50,081 INFO Start Model Convert…

2024-04-02 21:46:50,100 INFO Using abs path /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/best_line_follower_model_xy.onnx

2024-04-02 21:46:50,102 INFO validating model_parameters…

2024-04-02 21:46:50,231 WARNING User input 'log_level' deleted,Please do not use this parameter again

2024-04-02 21:46:50,231 INFO Using abs path /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/model_output

2024-04-02 21:46:50,232 INFO validating model_parameters finished

2024-04-02 21:46:50,232 INFO validating input_parameters…

2024-04-02 21:46:50,232 INFO input num is set to 1 according to input_names

2024-04-02 21:46:50,233 INFO model name missing, using model name from model file: ['input']

2024-04-02 21:46:50,233 INFO model input shape missing, using shape from model file: [[1, 3, 224, 224]]

2024-04-02 21:46:50,233 INFO validating input_parameters finished

2024-04-02 21:46:50,233 INFO validating calibration_parameters…

2024-04-02 21:46:50,233 INFO Using abs path /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/calibration_data_bgr_f32

2024-04-02 21:46:50,234 INFO validating calibration_parameters finished

2024-04-02 21:46:50,234 INFO validating custom_op…

2024-04-02 21:46:50,234 INFO custom_op does not exist, skipped

2024-04-02 21:46:50,234 INFO validating custom_op finished

2024-04-02 21:46:50,234 INFO validating compiler_parameters…

2024-04-02 21:46:50,235 INFO validating compiler_parameters finished

2024-04-02 21:46:50,239 WARNING Please note that the calibration file data type is set to float32, determined by the name of the calibration dir name suffix

2024-04-02 21:46:50,239 WARNING if you need to set it explicitly, please configure the value of cal_data_type in the calibration_parameters group in yaml

2024-04-02 21:46:50,240 INFO *******************************************

2024-04-02 21:46:50,240 INFO First calibration picture name: xy_008_160_31a8e30a-eca6-11ee-bb07-dfd665df7b81.rgb

2024-04-02 21:46:50,240 INFO First calibration picture md5:

83281dbdee2db08577524faa7f892adf /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/calibration_data_bgr_f32/xy_008_160_31a8e30a-eca6-11ee-bb07-dfd665df7b81.rgb

2024-04-02 21:46:50,265 INFO *******************************************

2024-04-02 21:46:51,682 INFO [Tue Apr 2 21:46:51 2024] Start to Horizon NN Model Convert.

2024-04-02 21:46:51,683 INFO Parsing the input parameter:{'input': {'input_shape': [1, 3, 224, 224], 'expected_input_type': 'YUV444_128', 'original_input_type': 'RGB', 'original_input_layout': 'NCHW', 'means': array([123.675, 116.28 , 103.53 ], dtype=float32), 'scales': array([0.0171248, 0.017507 , 0.0174292], dtype=float32)}}

2024-04-02 21:46:51,684 INFO Parsing the calibration parameter

2024-04-02 21:46:51,684 INFO Parsing the hbdk parameter:{'hbdk_pass_through_params': '--fast --O3', 'input-source': {'input': 'pyramid', '_default_value': 'ddr'}}

2024-04-02 21:46:51,685 INFO HorizonNN version: 0.14.0

2024-04-02 21:46:51,685 INFO HBDK version: 3.37.2

2024-04-02 21:46:51,685 INFO [Tue Apr 2 21:46:51 2024] Start to parse the onnx model.

2024-04-02 21:46:51,770 INFO Input ONNX model infomation:

ONNX IR version: 6

Opset version: 11

Producer: pytorch2.2.2

Domain: none

Input name: input, [1, 3, 224, 224]

Output name: output, [1, 2]

2024-04-02 21:46:52,323 INFO [Tue Apr 2 21:46:52 2024] End to parse the onnx model.

2024-04-02 21:46:52,324 INFO Model input names: ['input']

2024-04-02 21:46:52,324 INFO Create a preprocessing operator for input_name input with means=[123.675 116.28 103.53 ], std=[58.39484253 57.12000948 57.37498298], original_input_layout=NCHW, color convert from 'RGB' to 'YUV_BT601_FULL_RANGE'.

2024-04-02 21:46:52,750 INFO Saving the original float model: resnet18_224x224_nv12_original_float_model.onnx.

2024-04-02 21:46:52,751 INFO [Tue Apr 2 21:46:52 2024] Start to optimize the model.

2024-04-02 21:46:53,782 INFO [Tue Apr 2 21:46:53 2024] End to optimize the model.

2024-04-02 21:46:53,953 INFO Saving the optimized model: resnet18_224x224_nv12_optimized_float_model.onnx.

2024-04-02 21:46:53,953 INFO [Tue Apr 2 21:46:53 2024] Start to calibrate the model.

2024-04-02 21:46:53,954 INFO There are 100 samples in the calibration data set.

2024-04-02 21:46:54,458 INFO Run calibration model with kl method.

2024-04-02 21:47:06,290 INFO [Tue Apr 2 21:47:06 2024] End to calibrate the model.

2024-04-02 21:47:06,291 INFO [Tue Apr 2 21:47:06 2024] Start to quantize the model.

2024-04-02 21:47:09,926 INFO input input is from pyramid. Its layout is set to NHWC

2024-04-02 21:47:10,502 INFO [Tue Apr 2 21:47:10 2024] End to quantize the model.

2024-04-02 21:47:11,101 INFO Saving the quantized model: resnet18_224x224_nv12_quantized_model.onnx.

2024-04-02 21:47:14,165 INFO [Tue Apr 2 21:47:14 2024] Start to compile the model with march bernoulli2.

2024-04-02 21:47:15,502 INFO Compile submodel: main_graph_subgraph_0

2024-04-02 21:47:16,985 INFO hbdk-cc parameters:['--fast', '--O3', '--input-layout', 'NHWC', '--output-layout', 'NHWC', '--input-source', 'pyramid']

2024-04-02 21:47:17,276 INFO INFO: "-j" or "--jobs" is not specified, launch 2 threads for optimization

2024-04-02 21:47:17,277 WARNING missing stride for pyramid input[0], use its aligned width by default.

[==================================================] 100%

2024-04-02 21:47:25,296 INFO consumed time 8.06245

2024-04-02 21:47:25,555 INFO FPS=121.27, latency = 8246.2 us (see main_graph_subgraph_0.html)

2024-04-02 21:47:25,895 INFO [Tue Apr 2 21:47:25 2024] End to compile the model with march bernoulli2.

2024-04-02 21:47:25,896 INFO The converted model node information:

| Node | ON | Subgraph | Type | Cosine Similarity | Threshold |
|---|---|---|---|---|---|
| HZ_PREPROCESS_FOR_input | BPU | id(0) | HzSQuantizedPreprocess | 0.999952 | 127.000000 |
| /conv1/Conv | BPU | id(0) | HzSQuantizedConv | 0.999723 | 3.186383 |
| /maxpool/MaxPool | BPU | id(0) | HzQuantizedMaxPool | 0.999790 | 3.562476 |
| /layer1/layer1.0/conv1/Conv | BPU | id(0) | HzSQuantizedConv | 0.999393 | 3.562476 |
| /layer1/layer1.0/conv2/Conv | BPU | id(0) | HzSQuantizedConv | 0.999360 | 2.320694 |
| /layer1/layer1.1/conv1/Conv | BPU | id(0) | HzSQuantizedConv | 0.997865 | 5.567303 |
| /layer1/layer1.1/conv2/Conv | BPU | id(0) | HzSQuantizedConv | 0.998228 | 2.442273 |
| /layer2/layer2.0/conv1/Conv | BPU | id(0) | HzSQuantizedConv | 0.995588 | 6.622376 |
| /layer2/layer2.0/conv2/Conv | BPU | id(0) | HzSQuantizedConv | 0.996943 | 3.076967 |
| /layer2/layer2.0/downsample/downsample.0/Conv | BPU | id(0) | HzSQuantizedConv | 0.997177 | 6.622376 |
| /layer2/layer2.1/conv1/Conv | BPU | id(0) | HzSQuantizedConv | 0.996080 | 3.934074 |
| /layer2/layer2.1/conv2/Conv | BPU | id(0) | HzSQuantizedConv | 0.997443 | 3.025215 |
| /layer3/layer3.0/conv1/Conv | BPU | id(0) | HzSQuantizedConv | 0.998448 | 4.853349 |
| /layer3/layer3.0/conv2/Conv | BPU | id(0) | HzSQuantizedConv | 0.998819 | 2.553357 |
| /layer3/layer3.0/downsample/downsample.0/Conv | BPU | id(0) | HzSQuantizedConv | 0.998717 | 4.853349 |
| /layer3/layer3.1/conv1/Conv | BPU | id(0) | HzSQuantizedConv | 0.998631 | 3.161120 |
| /layer3/layer3.1/conv2/Conv | BPU | id(0) | HzSQuantizedConv | 0.998802 | 2.501193 |
| /layer4/layer4.0/conv1/Conv | BPU | id(0) | HzSQuantizedConv | 0.999474 | 5.645166 |
| /layer4/layer4.0/conv2/Conv | BPU | id(0) | HzSQuantizedConv | 0.999709 | 2.401657 |
| /layer4/layer4.0/downsample/downsample.0/Conv | BPU | id(0) | HzSQuantizedConv | 0.999250 | 5.645166 |
| /layer4/layer4.1/conv1/Conv | BPU | id(0) | HzSQuantizedConv | 0.999808 | 5.394126 |
| /layer4/layer4.1/conv2/Conv | BPU | id(0) | HzSQuantizedConv | 0.999865 | 3.072157 |
| /avgpool/GlobalAveragePool | BPU | id(0) | HzSQuantizedConv | 0.999965 | 17.365398 |
| /fc/Gemm | BPU | id(0) | HzSQuantizedConv | 0.999967 | 2.144315 |
| /fc/Gemm_NHWC2NCHW_LayoutConvert_Output0_reshape | CPU | -- | Reshape | | |

2024-04-02 21:47:25,897 INFO The quantify model output:

| Node | Cosine Similarity | L1 Distance | L2 Distance | Chebyshev Distance |
|---|---|---|---|---|
| /fc/Gemm | 0.999967 | 0.007190 | 0.005211 | 0.008810 |
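The Cosine Similarity columns above compare, per node, the output of the quantized model with that of the float model. Values close to 1, such as 0.999967 for the final /fc/Gemm output, indicate that quantization changed the result very little.

This sketch shows only how the metric itself is computed; it is not hb_mapper's code. The input vectors are made-up illustrative values, not data from this log:

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Illustrative values only: a float output and a slightly perturbed "quantized" one.
float_out = [0.25, -0.40]
quant_out = [0.26, -0.39]
print(cosine_similarity(float_out, quant_out))
```

Cosine similarity ignores overall scale, which is why the log also reports absolute distances (L1, L2, Chebyshev) for the output node.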

2024-04-02 21:47:25,898 INFO [Tue Apr 2 21:47:25 2024] End to Horizon NN Model Convert.

2024-04-02 21:47:26,084 INFO start convert to *.bin file…

2024-04-02 21:47:26,183 INFO ONNX model output num : 1

2024-04-02 21:47:26,184 INFO ############# model deps info #############

2024-04-02 21:47:26,185 INFO hb_mapper version : 1.9.9

2024-04-02 21:47:26,185 INFO hbdk version : 3.37.2

2024-04-02 21:47:26,185 INFO hbdk runtime version: 3.14.14

2024-04-02 21:47:26,186 INFO horizon_nn version : 0.14.0

2024-04-02 21:47:26,186 INFO ############# model_parameters info #############

2024-04-02 21:47:26,186 INFO onnx_model : /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/best_line_follower_model_xy.onnx

2024-04-02 21:47:26,186 INFO BPU march : bernoulli2

2024-04-02 21:47:26,187 INFO layer_out_dump : False

2024-04-02 21:47:26,187 INFO log_level : DEBUG

2024-04-02 21:47:26,187 INFO working dir : /open_explorer/ddk/samples/ai_toolchain/horizon_model_convert_sample/03_classification/10_model_convert/mapper/model_output

2024-04-02 21:47:26,187 INFO output_model_file_prefix: resnet18_224x224_nv12

2024-04-02 21:47:26,188 INFO ############# input_parameters info #############
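The means, std and scales in this log are worth decoding:

- The means [123.675, 116.28, 103.53] and std [58.39…, 57.12…, 57.37…] equal the standard torchvision ImageNet statistics multiplied by 255. This is consistent with the preprocessing node working on 0-255 pixel values.
- Each scale is 1/std.

So (pixel − mean) × scale gives the same result as dividing by 255 and then applying the usual (x − mean) / std normalization. The worked arithmetic, assuming the training pipeline used those ImageNet statistics:

```python
# Standard torchvision ImageNet statistics on the 0-1 scale (assumed to be
# what the training pipeline normalized with).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)

# Rescaled to 0-255 pixel values, as in the hb_mapper log.
means = [round(m * 255, 3) for m in IMAGENET_MEAN]  # [123.675, 116.28, 103.53]
stds = [s * 255 for s in IMAGENET_STD]               # ~[58.395, 57.12, 57.375]
scales = [1.0 / s for s in stds]                     # ~[0.0171248, 0.017507, 0.0174292]


def normalize_pixel(rgb, means=means, scales=scales):
    """Per-channel (pixel - mean) * scale on 0-255 RGB values."""
    return [(p - m) * s for p, m, s in zip(rgb, means, scales)]


print(normalize_pixel((200, 100, 50)))
```

The scales round to the float32 values printed in the log (0.0171248, 0.017507, 0.0174292). If the training code used different statistics, the means and scales in the conversion config would need to change to match.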
