目录

作业 7

一开始还是要看与上一次作业框架的差别

main

main 与上一次差不多,定义模型和光源之后就开始渲染

Renderer

这里与上一次不同的是,这里在确定某一个像素的位置和从摄像机到这个像素的方向之后,重复了 spp 遍光线投射

for (int k = 0; k < spp; k++){

framebuffer[m] += scene.castRay(Ray(eye_pos, dir), 0) / spp;

}

castRay

这个应该是要看最后的伪代码

sampleLight

我看了一下,应该还是要遍历灯光的,于是我修改成

shade(p, w0)

# Contribution from the light source

for(light : lights)

Uniformly sample the light at x' (pdf_light = 1 / A)

Shoot a ray from p to x'

If the ray is not blocked in the middle

L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p|^2 / pdf_light

# Contribution from other reflectors

L_indir = 0.0

Test Russian Roulette with probability P_RR

Uniformly sample the hemisphere toward wi (pdf_hemi = 1 / 2pi)

Trace a ray r(p, wi)

If ray r hit a non-emitting object at q

L_indir = shade(q, -wi) * f_r * cos(theta) / pdf_hemi / P_RR

Return L_dir + L_indir

一开始遍历 lights 我写的是

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

Vector3f color;

for (auto light : lights) {

}

return color;

}

但是这样写会报错,看了别人的才知道

range based for loops 是 C++11 的特性,直接使用 auto 表示拷贝赋值一个临时量

但是这里的 light 是用 std::unique_ptr<Light> 表示的,unique_ptr 需要维护所有权的唯一性,所以没有实现拷贝复制

https://blog.csdn.net/lemonxiaoxiao/article/details/108603916

但是引用是可以的,使用引用可以避免所有权的转移

因此使用 auto& 就行了

https://stackoverflow.com/questions/29859796/c-auto-vs-auto

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

Vector3f color;

for (auto& light : lights) {

}

return color;

}

之后我根据伪代码本来应该写成

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

Vector3f color;

// Contribution from the light source

for (auto& light : lights) {

// Uniformly sample the light at x' (pdf_light = 1 / A)

// Shoot a ray from p to x'

// If the ray is not blocked in the middle

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

}

return color;

}

但是我看到作业里面说了使用 Scene::sampleLight() 才反应过来,原来别人已经写好了

而且是,他这里是默认把所有的灯光的面积加起来,随机取一个面积值,然后根据这个值取到某一个光源

所以相当于每一次 castRay 就随机一个面积,然后取到一个随机的光源……

一开始我看这个 sampleLight 的参数是一个 Intersection 我还以为是先知道了相交结果,然后再去采样光源

之后看 sampleLight 内部调用的 Sample 函数,这个函数里面是对 Intersection 类型的变量赋值的,我才看懂,这个形参是用来接受采样结果的

其中的 BVHAccel::getSample 是根据输入的面积来在 BVH 中找节点的,而不是根据光线与包围盒相交找节点的

也就是说,其实只要调用了 sampleLight,必然会采到一个“交点”的,这里还没有光线投射的过程

那么按道理我应该直接新建两个参数,然后输入到 sampleLight 就行了

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

Vector3f color;

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter;

float pdf;

sampleLight(inter, pdf);

// Shoot a ray from p to x'

Ray p2x()

// If the ray is not blocked in the middle

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

return color;

}

但是我在想写从着色点 p 到采样点 x’ 的时候,我就发现原来我没有着色点 p

Scene::intersect(ray)

所以还要参考上一次作业,使用 Scene::intersect(ray) 获得本函数的输入光线与场景的交点

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Material* m = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f hitColor = this->backgroundColor;

if (inter_shade_point.happened) {

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf;

sampleLight(inter_sample_point, pdf);

if (inter_sample_point.happened) {

// Shoot a ray from p to x'

Ray p2x(inter_shade_point.coords, normalize(inter_sample_point.coords - inter_shade_point.coords));

// If the ray is not blocked in the middle

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

}

}

return hitColor;

}

因为 sampleLight 那里是直接获得的一个物体表面上随机一点

所以之后还要打一条光线来判断

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Material* m = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f hitColor = Vector3f(0.0, 0.0, 0.0);

if (inter_shade_point.happened) {

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf;

sampleLight(inter_sample_point, pdf);

Vector3f x = inter_sample_point.coords;

if (inter_sample_point.happened) {

// Shoot a ray from p to x'

Vector3f dir_p2x = normalize(inter_sample_point.coords - inter_shade_point.coords);

Vector3f p_offset = inter_shade_point.coords + EPSILON;

Ray p2x(p_offset, dir_p2x);

// If the ray is not blocked in the middle

Intersection inter_p2x = Scene::intersect(p2x);

bool is_blocked = inter_p2x.happened;

if (inter_p2x.happened) {

if (inter_p2x.obj == inter_sample_point.obj) {

is_blocked = (inter_p2x.coords - inter_sample_point.coords).norm() > EPSILON;

}

}

if (!is_blocked) {

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

hitColor +=

}

}

}

return hitColor;

}

f_r

但是我不知道这个

L o ( p , w o ) = ∫ Ω + L i ( p , w i ) f r ( p , w i , w o ) cos θ d w i = ∫ A L i ( p , w i ) f r ( p , w i , w o ) cos θ cos θ ′ ∣ ∣ x ′ − x ∣ ∣ 2 d A L_o(p,w_o) = \int_{\Omega+}L_i(p,w_i)f_r(p,w_i,w_o)\cos\theta\mathrm d w_i \\ = \int_A L_i(p,w_i)f_r(p,w_i,w_o)\dfrac{\cos\theta \cos \theta'}{||x' - x||^2}\mathrm d A Lo(p,wo)=∫Ω+Li(p,wi)fr(p,wi,wo)cosθdwi=∫ALi(p,wi)fr(p,wi,wo)∣∣x′−x∣∣2cosθcosθ′dA

的公式对应到 L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light 具体该怎么做

之后看到了作业介绍 pdf 里面的伪代码

shade (p, wo)

sampleLight ( inter , pdf_light )

Get x, ws , NN , emit from inter

Shoot a ray from p to x

If the ray is not blocked in the middle

L_dir = emit * eval (wo , ws , N) * dot (ws , N) * dot (ws , NN) / |x-p |^2 / pdf_light

L_indir = 0.0

Test Russian Roulette with probability RussianRoulette

wi = sample (wo , N)

Trace a ray r(p, wi)

If ray r hit a non - emitting object at q

L_indir = shade (q, wi) * eval (wo , wi , N) * dot (wi , N) / pdf (wo , wi , N) / RussianRoulette

Return L_dir + L_indir

才知道要用什么函数

但是我有个问题是

Vector3f Material::eval(const Vector3f &wi, const Vector3f &wo, const Vector3f &N) 第一个参数是入射方向,第二个参数是出射方向,那他

If the ray is not blocked in the middle

L_dir = emit * eval (wo , ws , N) * dot (ws , N) * dot (ws , NN) / |x-p |^2 / pdf_light

这里还为什么会是写成 eval (wo , ws , N)?我觉得 ws 是 p 到 x 的方向向量,wo 是着色点的出射方向,那么应该是 eval (ws , wo , N) 才对啊?

总之我就是按照我的想法写成了

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Vector3f hitColor = Vector3f(0.0, 0.0, 0.0);

if (inter_shade_point.happened) {

Material* mat_p = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f p = inter_shade_point.coords;

Vector3f normal_p = inter_shade_point.normal;

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf;

sampleLight(inter_sample_point, pdf);

if (inter_sample_point.happened) {

// Shoot a ray from p to x'

Vector3f x = inter_sample_point.coords;

Vector3f dir_p2x = normalize(x - p);

Vector3f p_offset = p + EPSILON * dir_p2x;

Ray p2x(p_offset, dir_p2x);

// If the ray is not blocked in the middle

Intersection inter_p2x = Scene::intersect(p2x);

bool is_blocked = inter_p2x.happened;

if (inter_p2x.happened) {

if (inter_p2x.obj == inter_sample_point.obj) {

is_blocked = (inter_p2x.coords - inter_sample_point.coords).norm() > EPSILON;

}

}

if (!is_blocked) {

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

Vector3f L_x2p = inter_sample_point.emit;

Vector3f normal_x = inter_sample_point.normal;

hitColor += L_x2p * mat_p->eval(dir_p2x, ray.direction, normal_p) * dotProduct(dir_p2x, normal_p) * dotProduct(-dir_p2x, normal_x) / pow(inter_sample_point.distance, 2) / pdf;

}

}

// Contribution from other reflectors

float p_rr = get_random_float();

if (p_rr < RussianRoulette) {

Vector3f dir_i = mat_p->sample(ray.direction, normal_p);

Intersection inter_indir_point = Scene::intersect(Ray(p, dir_i));

if (inter_indir_point.happened) {

if (!inter_indir_point.obj->hasEmit()) {

hitColor += castRay(Ray(inter_indir_point.coords, -dir_i), depth + 1) * mat_p->eval(dir_i, ray.direction, normal_p) * dotProduct(dir_i, normal_p) / pdf_hemi / p_rr;

}

}

}

}

return hitColor;

}

判断光源与物体之间是否有阻挡

之后发现的我的图是全黑的

检查了才发现我的判断光源与物体之间是否有阻挡这一步搞错了,正确的应该用 p2x 来判断

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Vector3f hitColor = Vector3f(0.0, 0.0, 0.0);

if (inter_shade_point.happened) {

Material* mat_p = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f p = inter_shade_point.coords;

Vector3f normal_p = inter_shade_point.normal;

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf;

sampleLight(inter_sample_point, pdf);

Vector3f x = inter_sample_point.coords;

Vector3f dir_p2x = normalize(x - p);

Vector3f p_offset = p + EPSILON * dir_p2x;

// Shoot a ray from p to x'

Intersection inter_p2x = Scene::intersect(Ray(p_offset, dir_p2x));

// If the ray is not blocked in the middle

bool is_blocked = inter_p2x.happened;

if (inter_p2x.happened) {

if (inter_p2x.obj == inter_sample_point.obj) {

is_blocked = (inter_p2x.coords - inter_sample_point.coords).norm() > EPSILON;

}

}

if (!is_blocked) {

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

Vector3f L_x2p = inter_sample_point.emit;

Vector3f normal_x = inter_sample_point.normal;

hitColor += L_x2p * mat_p->eval(dir_p2x, ray.direction, normal_p) * dotProduct(dir_p2x, normal_p) * dotProduct(-dir_p2x, normal_x) / pow(inter_sample_point.distance, 2) / pdf;

}

// Contribution from other reflectors

float p_rr = get_random_float();

if (p_rr < RussianRoulette) {

Vector3f dir_i = mat_p->sample(ray.direction, normal_p);

Intersection inter_indir_point = Scene::intersect(Ray(p, dir_i));

if (inter_indir_point.happened) {

if (!inter_indir_point.obj->hasEmit()) {

hitColor += castRay(Ray(inter_indir_point.coords, -dir_i), depth + 1) * mat_p->eval(dir_i, ray.direction, normal_p) * dotProduct(dir_i, normal_p) / pdf_hemi / p_rr;

}

}

}

}

return hitColor;

}

之后又发现是纯黑的,于是又发现了一些错误,比如把 castRay 的参数 Ray 的方向作为出射方向的时候,应该加一个负号,还有 p 和 x 之间的距离的计算

于是修改成:

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Vector3f hitColor = Vector3f(0.0, 0.0, 0.0);

if (inter_shade_point.happened) {

Material* mat_p = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f p = inter_shade_point.coords;

Vector3f normal_p = inter_shade_point.normal;

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf;

sampleLight(inter_sample_point, pdf);

Vector3f x = inter_sample_point.coords;

Vector3f dir_p2x = normalize(x - p);

Vector3f p_offset = p + EPSILON * dir_p2x;

// Shoot a ray from p to x'

Intersection inter_p2x = Scene::intersect(Ray(p_offset, dir_p2x));

// If the ray is not blocked in the middle

bool is_blocked = inter_p2x.happened;

if (inter_p2x.happened) {

if (inter_p2x.obj == inter_sample_point.obj) {

is_blocked = (inter_p2x.coords - inter_sample_point.coords).norm() > EPSILON;

}

}

if (!is_blocked) {

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

Vector3f L_x2p = inter_sample_point.emit;

Vector3f normal_x = inter_sample_point.normal;

hitColor += L_x2p * mat_p->eval(dir_p2x, -ray.direction, normal_p) * dotProduct(dir_p2x, normal_p) * dotProduct(-dir_p2x, normal_x) / pow((x - p).norm(), 2) / pdf;

}

// Contribution from other reflectors

float p_rr = get_random_float();

if (p_rr < RussianRoulette) {

Vector3f dir_i = mat_p->sample(-ray.direction, normal_p);

Intersection inter_indir_point = Scene::intersect(Ray(p, dir_i));

if (inter_indir_point.happened) {

if (!inter_indir_point.obj->hasEmit()) {

hitColor += castRay(Ray(inter_indir_point.coords, -dir_i), depth + 1) * mat_p->eval(dir_i, -ray.direction, normal_p) * dotProduct(dir_i, normal_p) / pdf_hemi / p_rr;

}

}

}

}

return hitColor;

}

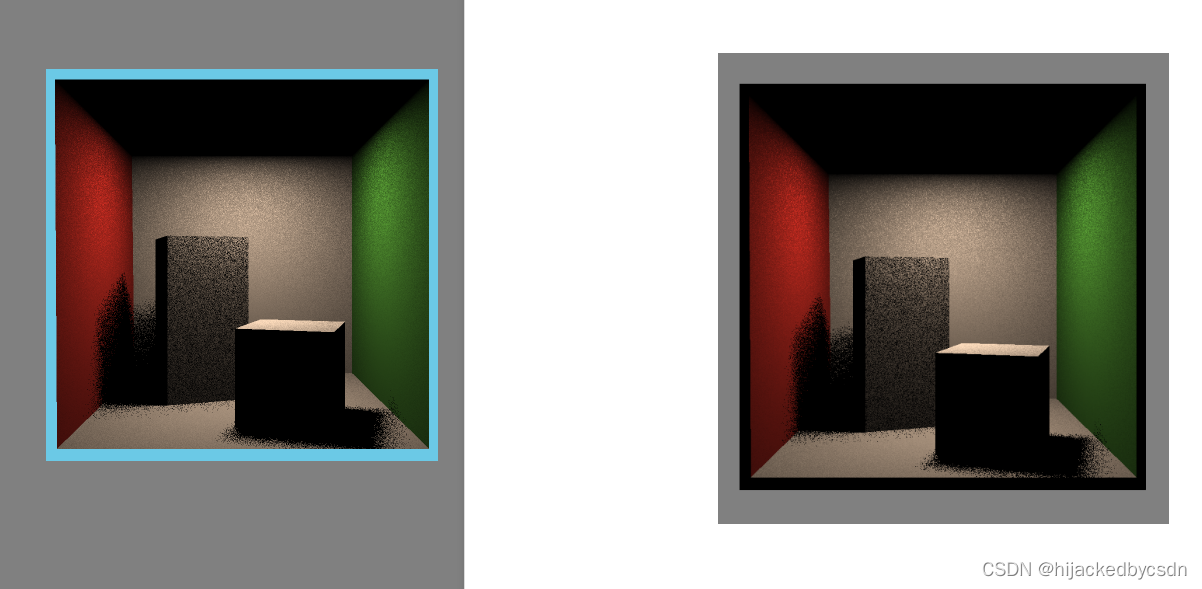

结果很怪……

参考了别人的

https://blog.csdn.net/Xuuuuuuuuuuu/article/details/129001805

我把 IntersectP 中的等于号去掉了,得到的仍然是全黑的……

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray &ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Vector3f hitColor = Vector3f(0.0, 0.0, 0.0);

if (inter_shade_point.happened) {

Material* mat_p = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f p = inter_shade_point.coords;

Vector3f normal_p = inter_shade_point.normal;

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf_x;

sampleLight(inter_sample_point, pdf_x);

Vector3f x = inter_sample_point.coords;

Vector3f dir_p2x = normalize(x - p);

// Shoot a ray from p to x'

Intersection inter_p2x = Scene::intersect(Ray(p, dir_p2x));

// If the ray is not blocked in the middle

bool is_blocked = inter_p2x.happened;

if (inter_p2x.happened) {

if (inter_p2x.obj == inter_sample_point.obj) {

is_blocked = (inter_p2x.coords - inter_sample_point.coords).norm() > EPSILON;

}

}

if (!is_blocked) {

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

Vector3f L_x2p = inter_sample_point.emit;

Vector3f normal_x = inter_sample_point.normal;

//Vector3f L_dir = L_x2p * mat_p->eval(dir_p2x, -ray.direction, normal_p) * dotProduct(dir_p2x, normal_p) * dotProduct(-dir_p2x, normal_x) / pow((x - p).norm(), 2) / pdf_x;

Vector3f L_dir = L_x2p * mat_p->eval(ray.direction, dir_p2x, normal_p) * dotProduct(dir_p2x, normal_p) * dotProduct(-dir_p2x, normal_x) / pow((x - p).norm(), 2) / pdf_x;

hitColor += L_dir;

//std::cout << L_dir << std::endl;

}

// Contribution from other reflectors

//float p_rr = get_random_float();

//if (p_rr < RussianRoulette) {

// //Vector3f dir_i = mat_p->sample(-ray.direction, normal_p);

// Vector3f dir_i = mat_p->sample(ray.direction, normal_p);

// Intersection inter_indir_point = Scene::intersect(Ray(p, dir_i));

// if (inter_indir_point.happened) {

// if (!inter_indir_point.obj->hasEmit()) {

// float pdf_shade_point = mat_p->pdf(dir_i, -ray.direction, normal_p);

// Vector3f L_indir = castRay(Ray(inter_indir_point.coords, -dir_i), depth + 1) * mat_p->eval(dir_i, -ray.direction, normal_p) * dotProduct(dir_i, normal_p) / pdf_shade_point / p_rr;

// hitColor += L_indir;

// //std::cout << L_indir << std::endl;

// }

// }

//

//}

}

return hitColor;

}

我后面看了一下……我感觉这里所有的代码都默认了,入射方向是从外面打到着色点的,然后反射方向是从着色点打到外面的

所以我在测试直接光照的时候都遵循了这个假设……

我看别人跟我的类似的

Vector3f Scene::castRay(const Ray& ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

Vector3f hitColor = this->backgroundColor;

Intersection shade_point_inter = Scene::intersect(ray);

if (shade_point_inter.happened)

{

Vector3f p = shade_point_inter.coords;

Vector3f wo = ray.direction;

Vector3f N = shade_point_inter.normal;

Vector3f L_dir(0), L_indir(0);

//sampleLight(inter,pdf_light)

Intersection light_point_inter;

float pdf_light;

sampleLight(light_point_inter, pdf_light);

//Get x,ws,NN,emit from inter

Vector3f x = light_point_inter.coords;

Vector3f ws = normalize(x - p);

Vector3f NN = light_point_inter.normal;

Vector3f emit = light_point_inter.emit;

float distance_pTox = (x - p).norm();

//Shoot a ray from p to x

Vector3f p_deviation = (dotProduct(ray.direction, N) < 0) ?

p + N * EPSILON :

p - N * EPSILON;

Ray ray_pTox(p_deviation, ws);

//If the ray is not blocked in the middleff

Intersection blocked_point_inter = Scene::intersect(ray_pTox);

if (abs(distance_pTox - blocked_point_inter.distance < 0.01))

{

L_dir = emit * shade_point_inter.m->eval(wo, ws, N) * dotProduct(ws, N) * dotProduct(-ws, NN) / (distance_pTox * distance_pTox * pdf_light);

}

hitColor = L_dir;

}

return hitColor;

}

最后唯一的差别就是在判断光源与物体之间是否有阻挡这里了

我输出了 std::cout << inter_p2x.obj << " " << inter_shade_point.obj << std::endl;,最后发现其实这两次求交击中的 object 不需要是一样的……之前是我搞错了

因为这里的 object 是三角面……所以其实都是那种很小的三角面,很容易会击中不一样的

所以直接考虑两次击中点的距离之间的是否比较小就行了

对比了一下我的和别人的,似乎是一样的

过亮 过度曝光?

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray& ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Vector3f hitColor = Vector3f(0.0, 0.0, 0.0);

if (inter_shade_point.happened) {

Material* mat_p = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f p = inter_shade_point.coords;

Vector3f normal_p = inter_shade_point.normal;

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf_x;

sampleLight(inter_sample_point, pdf_x);

Vector3f x = inter_sample_point.coords;

Vector3f dir_p2x = normalize(x - p);

Vector3f p_deviation = (dotProduct(ray.direction, normal_p) < 0) ?

p + normal_p * EPSILON :

p - normal_p * EPSILON;

// Shoot a ray from p to x'

Intersection inter_p2x = Scene::intersect(Ray(p_deviation, dir_p2x));

// If the ray is not blocked in the middle

if ((inter_p2x.coords - x).norm() < 0.01f) {

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

Vector3f L_x2p = inter_sample_point.emit;

Vector3f normal_x = inter_sample_point.normal;

Vector3f L_dir = L_x2p * mat_p->eval(ray.direction, dir_p2x, normal_p) * dotProduct(dir_p2x, normal_p) * dotProduct(-dir_p2x, normal_x) / pow((x - p).norm(), 2) / pdf_x;

hitColor += L_dir;

//std::cout << L_dir << std::endl;

}

// Contribution from other reflectors

float p_rr = get_random_float();

if (p_rr < RussianRoulette) {

Vector3f dir_o = mat_p->sample(ray.direction, normal_p);

Intersection inter_indir_point = Scene::intersect(Ray(p_deviation, dir_o));

if (inter_indir_point.happened) {

if (!inter_indir_point.obj->hasEmit()) {

float pdf_shade_point = mat_p->pdf(ray.direction, dir_o, normal_p);

if (pdf_shade_point > EPSILON) {

Vector3f L_indir = castRay(Ray(inter_indir_point.coords, dir_o), depth + 1) * mat_p->eval(ray.direction, dir_o, normal_p) * dotProduct(dir_o, normal_p) / pdf_shade_point / p_rr;

hitColor += L_indir;

}

//std::cout << L_indir << std::endl;

}

}

}

}

return hitColor;

}

看了 https://games-cn.org/forums/topic/games101-zuoye7-raokengyinlu-windows/

他说过曝的可能错误点在于,在间接采样的时候没有判断间接光击中的物体是否是发射光的

但是我明明已经用 if (!inter_indir_point.obj->hasEmit()) 判断过了……

再考虑到我与别人的差别就仅仅在于别人用的是材质里面的 hasEmission() 来判断的,而我用的是物体的 hasEmit() 来判断的

我觉得这两个没有区别啊……因为 Object 的 hasEmit() 里面也是调用的材质里面的 hasEmission()

之后也是改了

float p_rr = get_random_float();

if (p_rr < RussianRoulette) {

Vector3f dir_o = normalize(mat_p->sample(ray.direction, normal_p));

Intersection inter_indir_point = Scene::intersect(Ray(p_deviation, dir_o));

if (inter_indir_point.happened && !inter_indir_point.m->hasEmission()) {

float pdf_shade_point = mat_p->pdf(ray.direction, dir_o, normal_p);

if (pdf_shade_point > EPSILON) {

Vector3f L_indir = castRay(Ray(inter_indir_point.coords, dir_o), depth + 1) * mat_p->eval(ray.direction, dir_o, normal_p) * dotProduct(dir_o, normal_p) / pdf_shade_point / p_rr;

hitColor += L_indir;

}

}

}

确实没有区别

之后我才发现我的错误在于,我除以的俄罗斯轮盘赌的系数不对……

Vector3f L_indir = castRay(Ray(inter_indir_point.coords, dir_o), depth + 1) * mat_p->eval(ray.direction, dir_o, normal_p) * dotProduct(dir_o, normal_p) / (pdf_shade_point * RussianRoulette);

我应该一直除以 RussianRoulette 而不是 p_rr 的

最后得到的正确的

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray& ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Vector3f hitColor = Vector3f(0.0, 0.0, 0.0);

if (inter_shade_point.happened) {

Material* mat_p = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f p = inter_shade_point.coords;

Vector3f normal_p = inter_shade_point.normal;

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf_x;

sampleLight(inter_sample_point, pdf_x);

Vector3f x = inter_sample_point.coords;

Vector3f dir_p2x = normalize(x - p);

Vector3f p_deviation = (dotProduct(ray.direction, normal_p) < 0) ?

p + normal_p * EPSILON :

p - normal_p * EPSILON;

// Shoot a ray from p to x'

Intersection inter_p2x = Scene::intersect(Ray(p_deviation, dir_p2x));

// If the ray is not blocked in the middle

if ((inter_p2x.coords - x).norm() < 0.01f) {

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

Vector3f L_x2p = inter_sample_point.emit;

Vector3f normal_x = inter_sample_point.normal;

Vector3f L_dir = L_x2p * mat_p->eval(ray.direction, dir_p2x, normal_p) * dotProduct(dir_p2x, normal_p) * dotProduct(-dir_p2x, normal_x) / pow((x - p).norm(), 2) / pdf_x;

hitColor += L_dir;

}

// Contribution from other reflectors

float p_rr = get_random_float();

if (p_rr < RussianRoulette) {

Vector3f dir_o = normalize(mat_p->sample(ray.direction, normal_p));

Intersection inter_indir_point = Scene::intersect(Ray(p_deviation, dir_o));

if (inter_indir_point.happened && !inter_indir_point.m->hasEmission()) {

float pdf_shade_point = mat_p->pdf(ray.direction, dir_o, normal_p);

if (pdf_shade_point > EPSILON) {

Vector3f L_indir = castRay(Ray(inter_indir_point.coords, dir_o), depth + 1) * mat_p->eval(ray.direction, dir_o, normal_p) * dotProduct(dir_o, normal_p) / (pdf_shade_point * RussianRoulette);

hitColor += L_indir;

}

}

}

// make light visible

hitColor += mat_p->getEmission();

}

return hitColor;

}

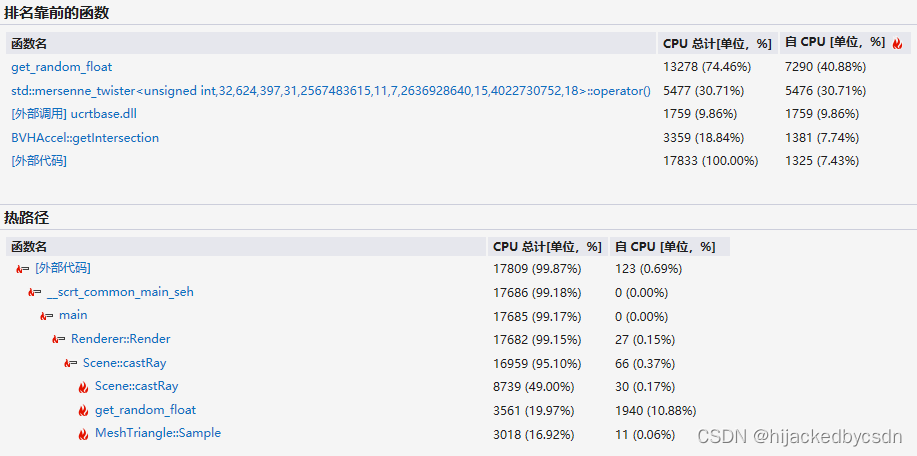

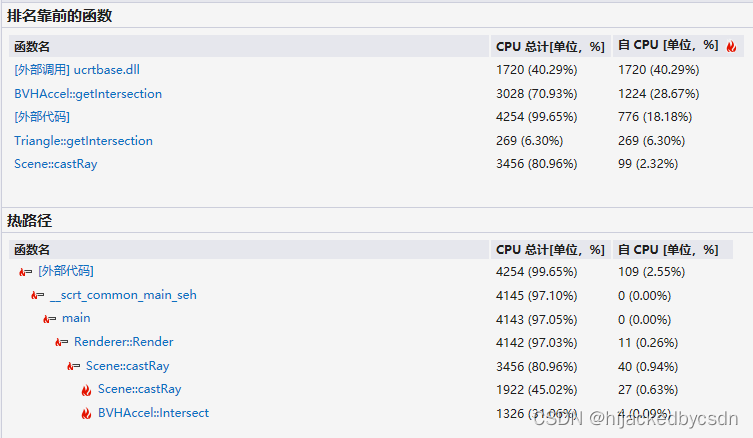

随机数生成

别人说性能瓶颈是随机数生成

https://games-cn.org/forums/topic/zuoyeqidexingnengpingjingshisuijishushengcheng/

我也用 C++ 的性能探查器看了,确实如此

但是我没有出现别人所说的那种每一次随机数都一样的情况

https://blog.csdn.net/weixin_43485513/article/details/122779134?spm=1001.2014.3001.5506

我自己也输出看了

似乎是已经修复了的问题

http://games-cn.org/forums/topic/games101-zuoye7-raokengyinlu-windows/

但是确实把那些需要构造的变量改成 static,只需要构造一次之后,随机数生成就不是瓶颈了

static std::random_device dev;

static std::mt19937 rng(dev());

static std::uniform_real_distribution<float> dist(0.f, 1.f);

inline float get_random_float()

{

return dist(rng);

}

多线程

之前我看到别人的帖子说

在spp循环里进行多线程调用castRay来更新framebuffer,而且线程应该要复用,不然每次创建一个线程都会有时间开销

https://games-cn.org/forums/topic/zuoyeqidejieguomeiyoushilizhongdeliangdugao/

但是之后我尝试着写了一下,发现我创建线程的时候就已经要分配好函数句柄了

这是一个不对的写法,只是展示一下我一开始的想法是,在 spp 循环里面分配多线程然后运行

之后我发现要是想这么做的话,首先就是我必须在 spp 里面创建了,不能复用了,又因为每一次的 x 和 y 是不一样的,所以我必须对每一个 x 和 y 都有创建线程的开销……这样更不好了

// multi thread

int spp = 16;

std::vector<std::thread> threads(spp);

std::mutex mtx;

// change the spp value to change sample ammount

int spp = 1;

std::cout << "SPP: " << spp << "\n";

for (uint32_t j = 0; j < scene.height; ++j) {

for (uint32_t i = 0; i < scene.width; ++i) {

// generate primary ray direction

float x = (2 * (i + 0.5) / (float)scene.width - 1) *

imageAspectRatio * scale;

float y = (1 - 2 * (j + 0.5) / (float)scene.height) * scale;

Vector3f dir = normalize(Vector3f(-x, y, 1));

for (int k = 0; k < spp; k++){

auto para_castray = [&]() {

Vector3f color = scene.castRay(Ray(eye_pos, dir), 0) / spp;

mtx.lock();

framebuffer[m] += color;

mtx.unlock();

};

threads[k](para_castray);

threads[k].join();

}

m++;

}

UpdateProgress(j / (float)scene.height);

}

UpdateProgress(1.f);

最终还是抄了别人的

https://blog.csdn.net/Xuuuuuuuuuuu/article/details/129001805

// change the spp value to change sample ammount

int spp = 32; //16

int thread_num = 8;//我的电脑有8核,所以开8个线程。注:屏幕的高度一定要是线程数的倍数

int thread_height = scene.height / thread_num;

std::vector<std::thread> threads(thread_num);

std::cout << "SPP: " << spp << "\n";

//多线程实现

std::mutex mtx;

float process = 0;

float Reciprocal_Scene_height = 1.f / (float)scene.height;

auto castRay = [&](int thread_index)

{

int height = thread_height * (thread_index + 1);

for (uint32_t j = height - thread_height; j < height; j++)

{

for (uint32_t i = 0; i < scene.width; ++i) {

// generate primary ray direction

float x = (2 * (i + 0.5) / (float)scene.width - 1) *

imageAspectRatio * scale;

float y = (1 - 2 * (j + 0.5) / (float)scene.height) * scale;

//eye的位置对结果有影响

Vector3f dir = normalize(Vector3f(-x, y, 1));

for (int k = 0; k < spp; k++)

{

framebuffer[j * scene.width + i] += scene.castRay(Ray(eye_pos, dir), 0) / spp;

}

}

mtx.lock();

process = process + Reciprocal_Scene_height;

UpdateProgress(process);

mtx.unlock();

}

};

for (int k = 0; k < thread_num; k++)

{

threads[k] = std::thread(castRay, k);

}

for (int k = 0; k < thread_num; k++)

{

threads[k].join();

}

UpdateProgress(1.f);

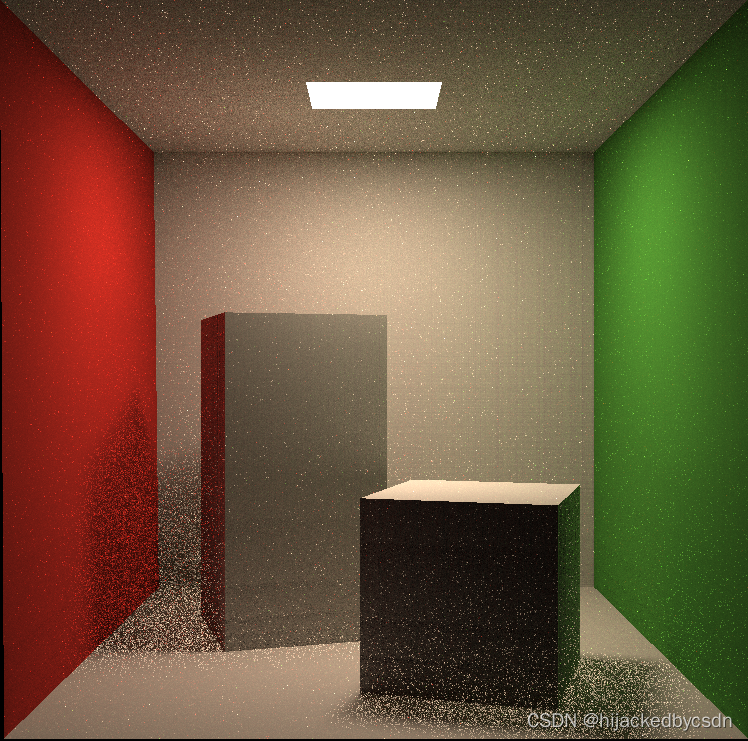

性能对比,1280 * 960 * 32 不开多线程 176 s,开 8 线程 22 s

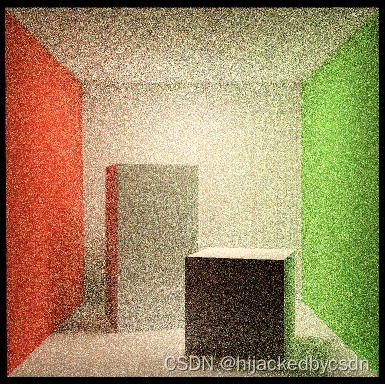

spp 很高时仍然有很多噪点

实现多线程之后,我做了 spp = 256 结果发现还是有很多噪点

1280 * 960 * 256

不管我怎么修改 EPSILON 的值,0.00016f 或者 0.0001 都不行

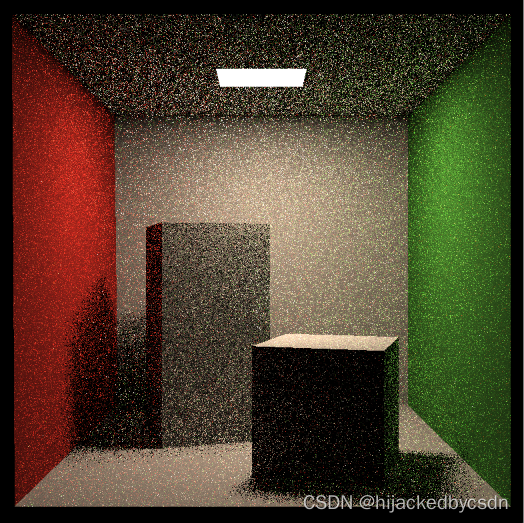

我看到别人有提到要钳制 hitColor 的值

https://blog.csdn.net/Xuuuuuuuuuuu/article/details/129001805

我试了一下,结果就正确了

马后炮一下的话,每一个点采样 spp 次,并且取平均,只要每一次采样的颜色都钳制到 0 到 1,最终平均出来的值也会钳制到 0 到 1

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray& ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Vector3f hitColor = Vector3f(0.0, 0.0, 0.0);

if (inter_shade_point.happened) {

Material* mat_p = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f p = inter_shade_point.coords;

Vector3f normal_p = inter_shade_point.normal;

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf_x;

sampleLight(inter_sample_point, pdf_x);

Vector3f x = inter_sample_point.coords;

Vector3f dir_p2x = normalize(x - p);

Vector3f p_deviation = (dotProduct(ray.direction, normal_p) < 0) ?

p + normal_p * EPSILON :

p - normal_p * EPSILON;

// Shoot a ray from p to x'

Intersection inter_p2x = Scene::intersect(Ray(p_deviation, dir_p2x));

// If the ray is not blocked in the middle

if ((inter_p2x.coords - x).norm() < 0.01f) {

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

Vector3f L_x2p = inter_sample_point.emit;

Vector3f normal_x = inter_sample_point.normal;

Vector3f L_dir = L_x2p * mat_p->eval(ray.direction, dir_p2x, normal_p) * dotProduct(dir_p2x, normal_p) * dotProduct(-dir_p2x, normal_x) / pow((x - p).norm(), 2) / pdf_x;

hitColor += L_dir;

}

// Contribution from other reflectors

float p_rr = get_random_float();

if (p_rr < RussianRoulette) {

Vector3f dir_o = normalize(mat_p->sample(ray.direction, normal_p));

Intersection inter_indir_point = Scene::intersect(Ray(p_deviation, dir_o));

if (inter_indir_point.happened && !inter_indir_point.m->hasEmission()) {

float pdf_shade_point = mat_p->pdf(ray.direction, dir_o, normal_p);

if (pdf_shade_point > EPSILON) {

Vector3f L_indir = castRay(Ray(inter_indir_point.coords, dir_o), depth + 1) * mat_p->eval(ray.direction, dir_o, normal_p) * dotProduct(dir_o, normal_p) / (pdf_shade_point * RussianRoulette);

hitColor += L_indir;

}

}

}

// make light visible

hitColor += mat_p->getEmission();

}

// avoid white noise

hitColor.x = (clamp(0, 1, hitColor.x));

hitColor.y = (clamp(0, 1, hitColor.y));

hitColor.z = (clamp(0, 1, hitColor.z));

return hitColor;

}

微表面模型

看到别人做了很详细的推导

https://zhuanlan.zhihu.com/p/434964126

之后他写了一大堆的公式,有点难以下手说实话

所以我还是自己去看原文章

https://www.pbr-book.org/3ed-2018/Reflection_Models/Microfacet_Models

物体的表面被视为由一些微小的细节(microfacets)组成的

这些细节一般由高度场来定义,

如果高度变化剧烈,那么表面看上去就会粗糙;如果高度变化缓慢,那么表面看上去就会光滑

微表面的模型的 BRDF 建立在这样一个假设上:任取物体表面一个微元,这个微元内部内有很多 microfacets,这个微元的入射反射行为是这些 microfacets 的统计意义上的表达

因此接下来的任务就是建立一个能够描述这个表达,同时又足够简单的计算式

-

Perfect mirror reflection 完美反射模型是最常用的微表面 BRDF 模型,将 microfacets 认为是理想镜面(进一步可以扩展到完全光滑的玻璃面,既可以完美反射也可以完美折射),适用于表述 translucent 半透明材质

-

Oren–Nayar model 模型将 microfacets 认为是 Lambertian 理想漫反射的,适用于完全粗糙的材质(比如塑料)

为了计算微表面模型的反射,需要考虑光线在 microfacets 之间的行为(感觉这句话的意思是,不能忽略 microfacets)的几何结构

-

masking

microfacets 上的某点 A 反射的光线可能被其他 microfacets 遮挡

也就是视线看不到点 A,光线可以打到点 A

-

shadowing

microfacets 上的某点 A 被其他 microfacets 遮挡,接受不到光线

也就是视线可以看到点 A,光线不能打到点 A

-

interreflection 相互反射

microfacets 上的某点 A 反射的光线打到其他 microfacets 上,再反射到人眼

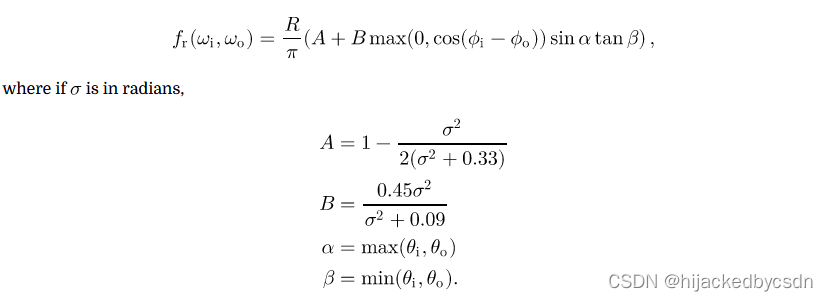

Oren–Nayar Diffuse Reflection

Oren和Nayar观察到,真实世界的物体并不(起码并不都)表现出Lambertian反射的特性,随着光照方法越来接近视角方向,很多 diffuse 表面都会变得越来越明亮。

基于这一个观测,Oren和Nayar用V-形微表面来描述粗糙表面,这些V-形微表面由球面高斯分布描述。该高斯分布只有一个参数——微表面法线与宏观表面法线夹角的标准差, 即粗糙程度。

因为假设成 V 形微表面,所以微表面之前的 interreflection 互相反射只与某一个微表面临近的微表面有关,所以这些 V 形槽的反射可以被归为一个平均的反射值

这样一个反射模型考虑到了 masking, shadowing, interreflection 没有一个闭的解(closed-form solution), Oren和Nayar推导出一个近似解:

这里的

ϕ

\phi

ϕ 是方位角

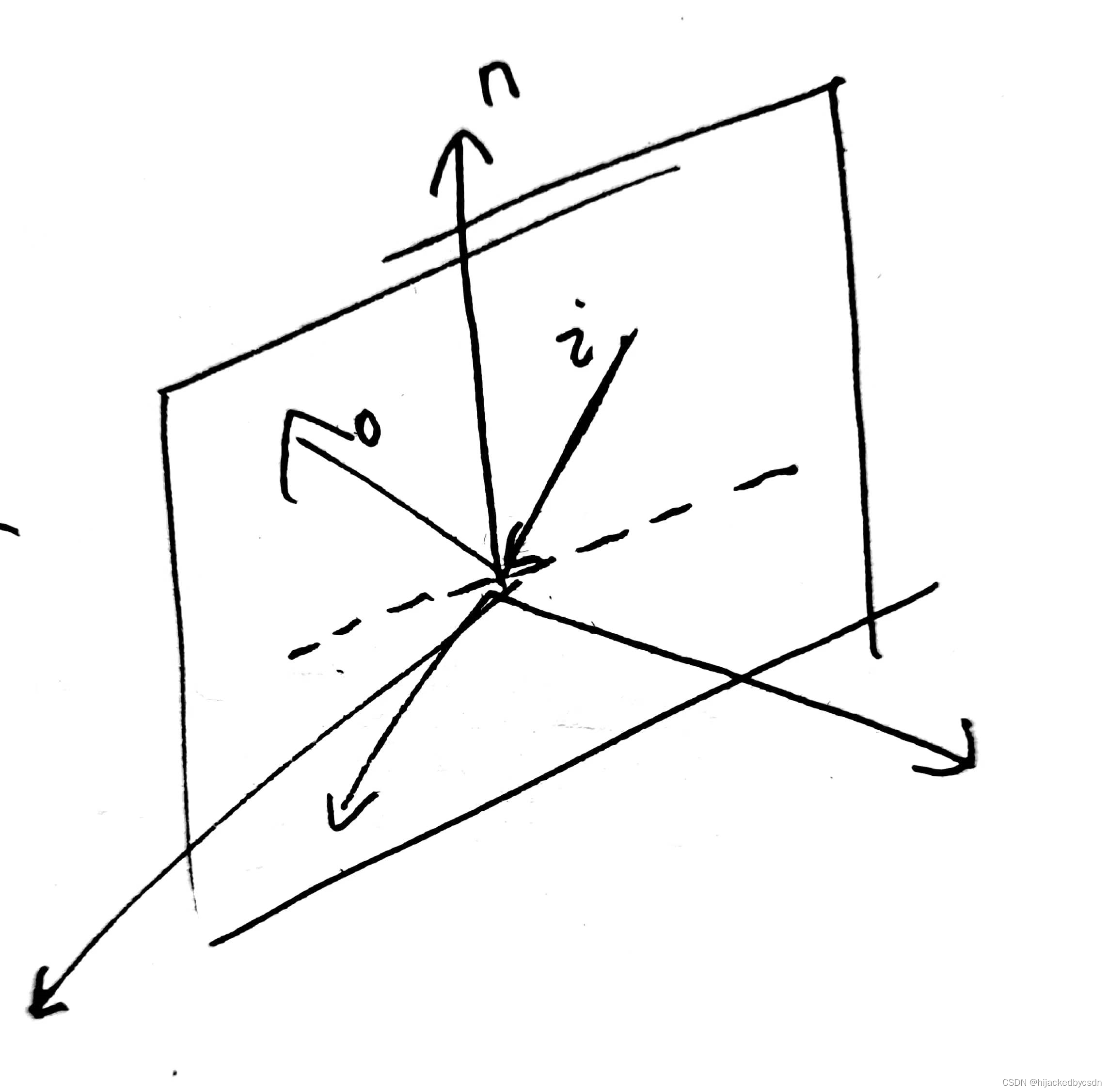

pbrt 这本书中的关于反射模型的话,向量都是在 stn 坐标系中的

https://www.pbr-book.org/3ed-2018/Reflection_Models#AbsCosTheta

问题就来了,我该怎么知道这个入射光和反射光在物体表面的方位角

我看了别人的计算,别人是说,如果 w i = ( w i x , w i y , w i z ) w_i = (w_{ix}, w_{iy}, w_{iz}) wi=(wix,wiy,wiz) 和 w o = ( w o x , w o y , w o z ) w_o = (w_{ox}, w_{oy}, w_{oz}) wo=(wox,woy,woz) 都定义在局部坐标系,这个局部坐标系就是定义物体表面为 xOy 平面那么 w i w_i wi 的投影就是 ( w i x , w i y , 0 ) (w_{ix}, w_{iy}, 0) (wix,wiy,0), w o w_o wo 的投影就是 ( w o x , w o y , 0 ) (w_{ox}, w_{oy}, 0) (wox,woy,0)

那么其实我们就知道了 ϕ i , ϕ o \phi_i,\phi_o ϕi,ϕo 的方向,但是不是方向向量,然后又因为两个向量点积包含这两个向量夹角的余弦,所以这两个投影点积,再除以这两个向量的模,就是 cos ( ϕ i − ϕ o ) \cos(\phi_i - \phi_o) cos(ϕi−ϕo)

但是现在我确实不知道这个入射的方向在局部坐标系中是什么样子的

而且我觉得他要是想正经考虑 cos ( ϕ i − ϕ o ) \cos(\phi_i - \phi_o) cos(ϕi−ϕo) 的话,那么入射方向和出射方向都应该是从着色点指向外面的,不然对于经典的反射,如果入射方向和出射方向都应该是从着色点指向外面的,那么方向角相差一个 pi,但是如果按照我们这个框架的写法,入射方向是从外面指向着色点的,那么这两个向量的方向角会是相同的,相差 0,这就不对了

所以我觉得如果真的要写的话,还需要注意一下这个入射方向

toWorld

之后我看到了这个框架里面对反射方向采样的函数

Vector3f Material::sample(const Vector3f &wi, const Vector3f &N){

switch(m_type){

case DIFFUSE:

{

// uniform sample on the hemisphere

float x_1 = get_random_float(), x_2 = get_random_float();

float z = std::fabs(1.0f - 2.0f * x_1);

float r = std::sqrt(1.0f - z * z), phi = 2 * M_PI * x_2;

Vector3f localRay(r*std::cos(phi), r*std::sin(phi), z);

return toWorld(localRay, N);

break;

}

}

}

逻辑很简单……就是局部坐标系下,半球上随机找一个方向,得到方向向量,然后转换到世界坐标

但是他这个转换到世界坐标系的方法我有点没看懂

Vector3f toWorld(const Vector3f &a, const Vector3f &N){

Vector3f B, C;

if (std::fabs(N.x) > std::fabs(N.y)){

float invLen = 1.0f / std::sqrt(N.x * N.x + N.z * N.z);

C = Vector3f(N.z * invLen, 0.0f, -N.x *invLen);

}

else {

float invLen = 1.0f / std::sqrt(N.y * N.y + N.z * N.z);

C = Vector3f(0.0f, N.z * invLen, -N.y *invLen);

}

B = crossProduct(C, N);

return a.x * B + a.y * C + a.z * N;

}

C 是先取的一个 与 N 垂直的向量

然后 B 是另一个与 C, N 垂直的向量,用叉乘求得

得到的 B C N 就组成了模型坐标系的三个基向量

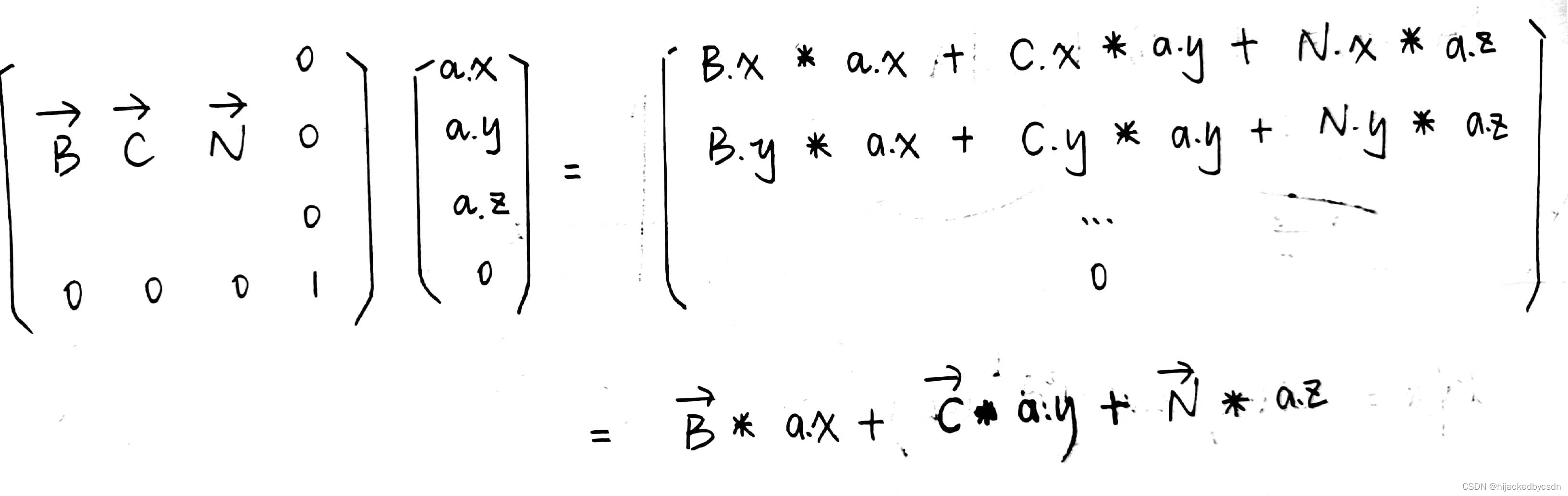

具体的变换算式,之后想到观测变换的矩阵才懂

把 B C N 的向量竖着写放一排,相当于从局部坐标系的 X = (1, 0, 0) Y = (0, 1, 0) Z = (0, 0, 1) 变换到 B C N 的坐标系中

虽然我知道了 B C N 是干啥的,但是我不知道为什么 C 要这么求

而且我感觉,变换到 B C N 坐标系之中后,这个坐标系他也不是世界坐标系啊……他只是以这个物体某一个三角面的法线为 Z 建立的一个坐标系而已啊

我感觉这个应该可以用 B C N 的表示都是在世界坐标系下的表示来解释

也就是 B = B x i + B y j + B z k B = B_x i + B_y j + B_z k B=Bxi+Byj+Bzk 这样来解释,这里的 i , j , k i,j,k i,j,k 是世界坐标系的基向量,那么变换之后的 a a a 的基向量其实也是 i , j , k i,j,k i,j,k,那就也是世界坐标系中的了

那剩下的问题就是为什么 C 的求法要先看 x 和 y 的大小了

之后看到了别人的注释

https://blog.csdn.net/qq_41835314/article/details/125166417

别人说是为了避免出现某一个轴为 0 的情况

比如判断到 std::fabs(N.x) > std::fabs(N.y) 的话,就说明 x 至少不为 0

那么用 x 和 z 来构造 C,一定不会出现 C 为零向量的情况

但是其实这里没有保证 z 不为 0

所以虽然我感觉如果真的要避免 C 为零向量的话,应该避免某两个轴都为 0 的情况……但是实际上单独判断一次 x 和 y 也能达到类似的效果,所以这很合理……

Oren–Nayar Diffuse Reflection 实现

原来的 DIFFUSE 模型

float Material::pdf(const Vector3f &wi, const Vector3f &wo, const Vector3f &N){

switch(m_type){

case DIFFUSE:

{

// uniform sample probability 1 / (2 * PI)

if (dotProduct(wo, N) > 0.0f)

return 0.5f / M_PI;

else

return 0.0f;

break;

}

}

}

照抄 pbrt 书本的

在 Material 类里面加了一些内联函数

// BSDF Inline Functions

// define in s t n coord

// n is normal vector s t are two tangent vector

inline float CosTheta(const Vector3f& w) { return w.z; }

inline float Cos2Theta(const Vector3f& w) { return w.z * w.z; }

inline float AbsCosTheta(const Vector3f& w) { return std::abs(w.z); }

inline float Sin2Theta(const Vector3f& w) {

return std::max(0.0f, 1.0f - Cos2Theta(w));

}

inline float SinTheta(const Vector3f& w) {

return std::sqrt(Sin2Theta(w));

}

inline float TanTheta(const Vector3f& w) {

return SinTheta(w) / CosTheta(w);

}

inline float Tan2Theta(const Vector3f& w) {

return Sin2Theta(w) / Cos2Theta(w);

}

inline float CosPhi(const Vector3f& w) {

float sinTheta = SinTheta(w);

return (sinTheta == 0) ? 1 : clamp(-1, 1, w.x / sinTheta);

}

inline float SinPhi(const Vector3f& w) {

float sinTheta = SinTheta(w);

return (sinTheta == 0) ? 0 : clamp(-1, 1, w.y / sinTheta);

}

inline float Cos2Phi(const Vector3f& w) {

return CosPhi(w) * CosPhi(w);

}

inline float Sin2Phi(const Vector3f& w) {

return SinPhi(w) * SinPhi(w);

}

添加一个世界坐标系到 stn 坐标系的函数

// world coord -> hemi coord

Vector3f toLocal(const Vector3f& a, const Vector3f& N) {

Vector3f B, C;

// ensure x != 0 -> ensure C != zero vector

if (std::fabs(N.x) > std::fabs(N.y)) {

float invLen = 1.0f / std::sqrt(N.x * N.x + N.z * N.z);

C = Vector3f(N.z * invLen, 0.0f, -N.x * invLen);

}

else {

float invLen = 1.0f / std::sqrt(N.y * N.y + N.z * N.z);

C = Vector3f(0.0f, N.z * invLen, -N.y * invLen);

}

B = crossProduct(C, N);

return Vector3f(dotProduct(B, a), dotProduct(C, a), dotProduct(N, a));

}

Oren–Nayar Diffuse Reflection 实现照抄书本

float Material::pdf(const Vector3f &wi, const Vector3f &wo, const Vector3f &N){

int sigma = 0.3;

switch(m_type){

case DIFFUSE:

{

// uniform sample probability 1 / (2 * PI)

// Oren–Nayar Diffuse Reflection

if (dotProduct(wo, N) > 0.0f) {

float R = 0.5f;

float A = 1.0f - sigma * sigma / (2.0f * (sigma * sigma + 0.33f));

float B = 0.45f * sigma * sigma / (sigma * sigma + 0.09f);

// input wi wo are both world coord

Vector3f wi_local = toLocal(wi, N), wo_local = toLocal(wo, N);

float sinThetaI = SinTheta(wi_local);

float sinThetaO = SinTheta(wo_local);

// Compute cosine term of Oren–Nayar model

float maxCos = 0;

if (sinThetaI > 1e-4 && sinThetaO > 1e-4) {

float sinPhiI = SinPhi(wi), cosPhiI = CosPhi(wi);

float sinPhiO = SinPhi(wo), cosPhiO = CosPhi(wo);

float dCos = cosPhiI * cosPhiO + sinPhiI * sinPhiO;

maxCos = std::max(0.0f, dCos);

}

// Compute sine and tangent terms of Oren–Nayar model

float sinAlpha, tanBeta;

if (AbsCosTheta(wi) > AbsCosTheta(wo)) {

sinAlpha = sinThetaO;

tanBeta = sinThetaI / AbsCosTheta(wi);

}

else {

sinAlpha = sinThetaI;

tanBeta = sinThetaO / AbsCosTheta(wo);

}

return R / M_PI * (A + B * maxCos * sinAlpha * tanBeta);

}

else

return 0.0f;

break;

}

}

}

这里暂时写死粗糙度,看看效果

说实话……渲染出来的跟原来的一样的hhh

Microfacet Distribution Functions

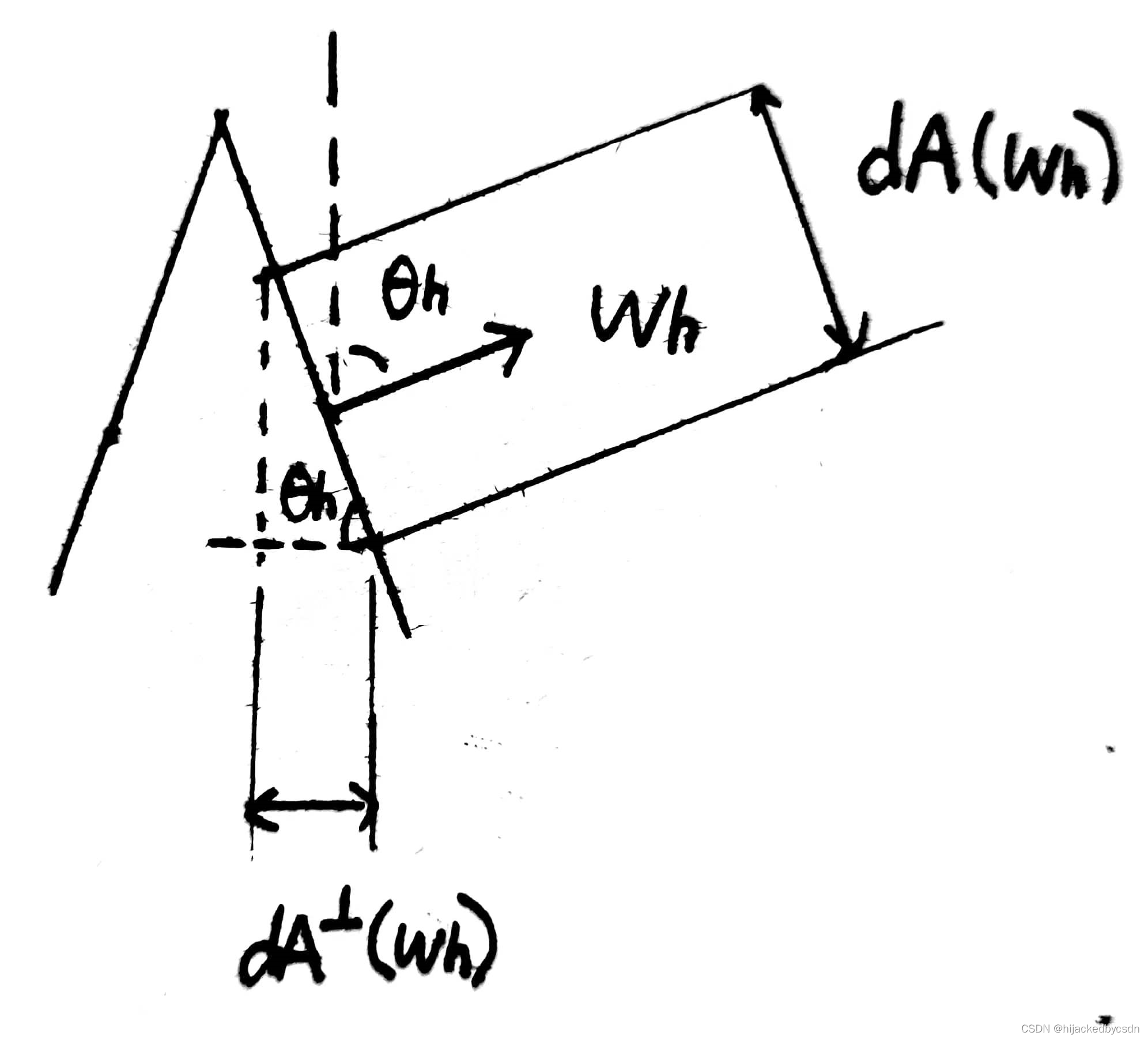

微表面特征可以用一个函数 D ( w h ) D(w_h) D(wh) 表示,其中 w h w_h wh 是法线向量,这个函数表示了 w h w_h wh 相关的微分面积

虽然我觉得这个 h 好容易和半程向量搞混

D ( w h ) D(w_h) D(wh) 本质上是面积微元 dA 上的法向为 h 的全部微表面的面积和与面积微元 dA 的比例

注:dA 是宏观表面上的面积微元。

不难发现, D ( w h ) D(w_h) D(wh) 实际上是针对物体宏观表面上的一个面积微元的,而不是整个物体。

如果要表示光滑的表面,可以写为 D ( w h ) = δ ( w h − ( 0 , 0 , 1 ) ) D(w_h) = \delta(w_h - (0,0,1)) D(wh)=δ(wh−(0,0,1))

因为 D ( w h ) D(w_h) D(wh) 本质是比例,所以为了保证物理正确,这个比例在自变量上积分应该是 1,也就是在法线向量立体角上积分 ∫ H 2 ( n ) D ( w h ) cos θ h d w h = 1 \int_{H^2(n)}D(w_h)\cos\theta_h\mathrm d w_h = 1 ∫H2(n)D(wh)cosθhdwh=1,其中 H 2 ( n ) H^2(n) H2(n) 表示以 n n n 为 z 轴的半球面

Beckmann–Spizzichino model

Beckmann–Spizzichino model 对 D ( w h ) D(w_h) D(wh) 的定义为

D ( w h ) = e − tan 2 θ h / α 2 π α 2 cos 4 θ h D(w_h) = \dfrac{e^{-\tan^2\theta_h/\alpha^2}}{\pi\alpha^2\cos^4\theta_h} D(wh)=πα2cos4θhe−tan2θh/α2

σ \sigma σ 是微表面的斜率的均方根 MSE,令 α = 2 σ \alpha = \sqrt 2 \sigma α=2σ

σ \sigma σ 的这个物理含义方便描述各向异性的材质,也就是在不同方向上 σ \sigma σ 不同

以 x 和 y 为例,各向异性的 D ( w h ) D(w_h) D(wh) 的定义为:

D ( w h ) = e − tan 2 θ h ( cos 2 ϕ h / α x 2 + sin 2 ϕ h / α y 2 ) π α x α y cos 4 θ h D(w_h) = \dfrac{e^{-\tan^2\theta_h(\cos^2\phi_h/\alpha_x^2 + \sin^2\phi_h/\alpha_y^2)}}{\pi\alpha_x\alpha_y\cos^4\theta_h} D(wh)=παxαycos4θhe−tan2θh(cos2ϕh/αx2+sin2ϕh/αy2)

σ x = σ y \sigma_x = \sigma_y σx=σy 时,上式退化为各向同性的式子

当 θ h \theta_h θh 趋于九十度的时候, D ( w h ) D(w_h) D(wh) 计算式会变成 0/0,而因为实际上这种情况是 grazing angle,也就是视线与物体表面平行的时候,所以这个时候的物理意义应该是没有法线方向为这个方向的微表面,好吧,或者说统计意义上,所以直接赋 0,这个做法是物理正确的

Trowbridge and Reitz

Trowbridge and Reitz 对各向异性的描述为

D ( w h ) = 1 π α x α y cos 4 θ h ( 1 + tan 2 θ h ( cos 2 ϕ h / α x 2 + sin 2 ϕ h / α y 2 ) ) 2 D(w_h) = \dfrac{1}{\pi\alpha_x\alpha_y\cos^4\theta_h(1+\tan^2\theta_h(\cos^2\phi_h/\alpha_x^2 + \sin^2\phi_h/\alpha_y^2))^2} D(wh)=παxαycos4θh(1+tan2θh(cos2ϕh/αx2+sin2ϕh/αy2))21

与 Beckmann–Spizzichino model 相比,Trowbridge–Reitz 在钟形部分衰减地更快,在尾部衰减地更慢,与现实更相符

Masking and Shadowing

单独描述微表面的分布还不足以完成整个微表面模型

还需要考虑微表面之间的遮挡,用函数 Smith’s masking-shadowing function G ( w , w h ) G(w,w_h) G(w,wh) 来表示,他描述了法线向量为 w h w_h wh 的微表面从方向 w w w 看的可见性

一般来说这个可见性与某一个微表面的法线方向无关,所以函数可以化简为 G ( w ) G(w) G(w)

那么从一个方向 w w w 看这个微表面的 d A \mathrm d A dA,看到的应该是 ( w ⋅ w h ) d A (w \cdot w_h)\mathrm d A (w⋅wh)dA

所以约束为 cos θ = ∫ H 2 ( n ) G 1 ( w , w h ) max ( 0 , w ⋅ w h ) D ( w h ) d w h \cos\theta = \int_{H^2(n)}G_1(w,w_h)\max(0,w \cdot w_h)D(w_h)\mathrm d w_h cosθ=∫H2(n)G1(w,wh)max(0,w⋅wh)D(wh)dwh

单独一个 D ( w h ) D(w_h) D(wh) 是无法确定好这个约束了,还有很多 G 1 ( w , w h ) G_1(w,w_h) G1(w,wh) 可以满足这个约束

G 1 ( w , w h ) G_1(w,w_h) G1(w,wh) 一般由高度场定义,虽然现实是, G 1 ( w , w h ) G_1(w,w_h) G1(w,wh) 和 D ( w h ) D(w_h) D(wh) 是有关系的,但是实际实验结果是 G 1 ( w , w h ) G_1(w,w_h) G1(w,wh) 表现地很好

所以也可以假设 G 1 ( w , w h ) G_1(w,w_h) G1(w,wh) 与 D ( w h ) D(w_h) D(wh) 互相独立

(但是我怎么感觉这句话是有点马后炮,是先写出的这个约束,再说 D ( w h ) D(w_h) D(wh) 不够

假设 A + ( w ) A^+(w) A+(w) 是在方向 w w w 下,微表面正面的投影面积; A − ( w ) A^-(w) A−(w) 是在方向 w w w 下,微表面背面的投影面积,那么

这里我稍微有点没看懂,他似乎说的是每一个微表面对其他微表面的遮盖是相等的,所以可以将 G ( w ) G(w) G(w) 写为

G 1 ( w ) = A + ( w ) − A − ( w ) A + ( w ) G_1(w) = \dfrac{A^+(w) - A^-(w)}{A^+(w)} G1(w)=A+(w)A+(w)−A−(w)

这里我也没看懂一个地方,他不知道为什么给出 cos θ = A + ( w ) − A − ( w ) \cos\theta = A^+(w) - A^-(w) cosθ=A+(w)−A−(w)

然后还可以定义一个衡量不可见性的函数

Λ ( w ) = A − ( w ) A + ( w ) − A − ( w ) = A − ( w ) cos θ \Lambda(w) = \dfrac{A^-(w)}{A^+(w) - A^-(w)} = \dfrac{A^-(w)}{\cos\theta} Λ(w)=A+(w)−A−(w)A−(w)=cosθA−(w)

还可以用 Λ ( w ) \Lambda(w) Λ(w) 表示 G ( w ) G(w) G(w)

G ( w ) = 1 1 + Λ ( w ) G(w) = \dfrac{1}{1+\Lambda(w)} G(w)=1+Λ(w)1

isotropic Beckmann–Spizzichino distribution

假设 G 1 ( w , w h ) G_1(w,w_h) G1(w,wh) 与 D ( w h ) D(w_h) D(wh) 互相独立,那么 isotropic Beckmann–Spizzichino distribution 给出的不可见性公式为

Λ ( w ) = 1 2 ( e r f ( a ) − 1 + e − a 2 a π ) \Lambda(w) = \dfrac{1}{2}(\mathrm{erf}(a)-1+\dfrac{e^{-a^2}}{a\sqrt\pi}) Λ(w)=21(erf(a)−1+aπe−a2)

其中 a = 1 / ( α tan θ ) a = 1/(\alpha\tan\theta) a=1/(αtanθ),erf 是误差函数 e r f ( x ) = 2 / x ∫ 0 x e − x ′ 2 d x ′ \mathrm{erf}(x) = 2/\sqrt x \int_0^x e^{-x'^2}\mathrm d x' erf(x)=2/x∫0xe−x′2dx′

使用有理多项式近似可以提升计算效率

Trowbridge–Reitz distribution

假设 G 1 ( w , w h ) G_1(w,w_h) G1(w,wh) 与 D ( w h ) D(w_h) D(wh) 互相独立,那么 Trowbridge–Reitz distribution 给出的不可见性公式为

Λ ( w ) = − 1 + 1 + α 2 tan 2 θ 2 \Lambda(w) = \dfrac{-1+\sqrt{1+\alpha^2\tan^2\theta}}{2} Λ(w)=2−1+1+α2tan2θ

各向异性的插值

如果已知在 x 和 y 上的各向异性的表示 α x , α y \alpha_x,\alpha_y αx,αy,那么对于中间方向,如果需要可以插值

G(w) 的进一步假设

一开始我们是假设了可见性与某一个微表面的法线方向无关,所以去掉了 w h w_h wh

现在可以有另外一个假设,就是某一个微表面的可见性与这个微表面的法线向量和观测角度都有关,但是这两个角度的影响是相互独立的,也就是 G ( w , w h ) = G ( w ) G ( w h ) G(w,w_h) = G(w)G(w_h) G(w,wh)=G(w)G(wh)

但是在现实中,这两个角度通常不是互相独立的,那么如果仍然使用这个独立性假设,算得的 G ( w , w h ) G(w,w_h) G(w,wh) 就会比实际值小

例如,入射方向等于出射方向的时候,这个时候,入射方向看到的微表面面积等于出射方向看到的微表面面积,也就是 G ( w i , w o ) = G ( w i ) = G ( w o ) G(w_i,w_o) = G(w_i)=G(w_o) G(wi,wo)=G(wi)=G(wo)。但是 0 < G ( w ) < = 1 0< G(w) <= 1 0<G(w)<=1,所以 G ( w i ) G ( w o ) < G ( w i , w o ) G(w_i)G(w_o) < G(w_i,w_o) G(wi)G(wo)<G(wi,wo)。除非 G ( w ) = 1 G(w) = 1 G(w)=1,这时 w = ( 0 , 0 , 1 ) w = (0,0,1) w=(0,0,1),也就是从法线方向看。

根据这个例子,可以发现,入射方向与出射方向之间越接近, G ( w i ) G(w_i) G(wi) 与 G ( w o ) G(w_o) G(wo) 越相关

另外一个更加准确的假设是,微表面上的点的高度越高 ,可见性越好

G ( w o , w i ) = 1 1 + Λ ( w o ) + Λ ( w i ) G(w_o,w_i) = \dfrac{1}{1+\Lambda(w_o)+\Lambda(w_i)} G(wo,wi)=1+Λ(wo)+Λ(wi)1

The Torrance–Sparrow Model

The Torrance–Sparrow Model 提出了一个早期的微表面模型,用于对金属建模

它们认为微表面都是完美的镜面。因为发生完美的镜面反射,所以微表面反射的半程向量都等于法线方向

或许这是为什么前面他们都在用 w h w_h wh 表示法线向量……?因为完美镜面反射的时候,半程向量就等于法线方向

但是问题是他前面好像也没有点出这一点……?

辐射通量的面积写法

前面已经知道了,辐射通量的微分形式:

d Φ h = L i ( w i ) d w d A ⊥ ( w h ) = L i ( w i ) d w cos θ h d A ( w h ) \mathrm d \Phi_h = L_i(w_i)\mathrm d w \mathrm d A^{\perp}(w_h) = L_i(w_i)\mathrm d w \cos\theta_h \mathrm d A(w_h) dΦh=Li(wi)dwdA⊥(wh)=Li(wi)dwcosθhdA(wh)

面积 d A ⊥ ( w h ) \mathrm d A^{\perp}(w_h) dA⊥(wh) 的话指的是 stn 坐标系的 xOy 面上的投影面积

D ( w h ) D(w_h) D(wh) 的理解

根据之前的说法:

D ( w h ) D(w_h) D(wh) 本质上是面积微元 dA 上的法向为 h 的全部微表面的面积和与面积微元 dA 的比例

注:dA 是宏观表面上的面积微元。

不难发现, D ( w h ) D(w_h) D(wh) 实际上是针对物体宏观表面上的一个面积微元的,而不是整个物体。

那么应该有 d A ( w h ) = D ( w h ) d A \mathrm d A(w_h) = D(w_h)\mathrm d A dA(wh)=D(wh)dA 才对

但是实际上这里应该写成 d A ( w h ) = D ( w h ) d w h d A \mathrm d A(w_h) = D(w_h)\mathrm d w_h \mathrm d A dA(wh)=D(wh)dwhdA

根据 https://zhuanlan.zhihu.com/p/152226698

在上文中,函数被解释为微观角度下微小镜面法线的分布函数,其函数接受一个参数为,即微平面的法线向量,更直观的来说, D ( w h ) D(w_h) D(wh) 的物理含义为所有法向为 w h w_h wh 的微平面的面积,没错就是面积,你没有看错,只不过我们要说明的是什么条件下的,法线方向为的微平面面积,需要加上限制:

(1) 第一,我们所关注的区域仅仅是一个着色点,或者说一个微分面积元,因此所指的应该是单位面积下,所有法向为 w h w_h wh 的微平面的面积。

(2) 第二, 微平面的法线是一个离散数值,而微平面法线分布是连续的,因此是几乎不可能精确的找到法线为某个固定值的微平面的,因此为完善第二个限制,即加上每单位立体角,使其变成一个范围,此时则变成了每单位立体角所有法向为 w h w_h wh 的微平面的面积。

(关于第二点限制可能有些不太好理解,其实这可以类比连续型随机变量取一点概率为0,道理是一样的)

好了,加上这两点限制之后,便得到了 D ( w h ) D(w_h) D(wh) 的真正的物理含义,即每单位面积,每单位立体角所有法向为的微平面的面积。

所以这里认为 D ( w h ) D(w_h) D(wh) 是类似概率密度函数一样,是对 w h w_h wh 的某个范围积分,得到单位面积下,所有法向在 w h w_h wh 的某个范围内的微平面的面积

这就是定义的问题咯……

求出射 radiance

将 d A ( w h ) = D ( w h ) d w h d A \mathrm d A(w_h) = D(w_h)\mathrm d w_h \mathrm d A dA(wh)=D(wh)dwhdA 代入辐射通量表达式,得

d Φ h = L i ( w i ) d w cos θ h D ( w h ) d w h d A \mathrm d \Phi_h = L_i(w_i)\mathrm d w \cos\theta_h D(w_h)\mathrm d w_h \mathrm d A dΦh=Li(wi)dwcosθhD(wh)dwhdA

根据菲涅尔定律,反射的辐射通量为:

d Φ o = F r ( w o ) d Φ h \mathrm d \Phi_o = F_r(w_o)\mathrm d \Phi_h dΦo=Fr(wo)dΦh

根据 Radiance 的定义:

L ( w o ) = d Φ o d w o cos θ o d A L(w_o) = \dfrac{\mathrm d \Phi_o}{\mathrm d w_o \cos\theta_o \mathrm d A} L(wo)=dwocosθodAdΦo

代入 d Φ o \mathrm d \Phi_o dΦo,得

L ( w o ) = F r ( w o ) L i ( w i ) d w i cos θ h D ( w h ) d w h d A d w o cos θ o d A L(w_o) = \dfrac{ F_r(w_o)L_i(w_i)\mathrm d w_i \cos\theta_h D(w_h)\mathrm d w_h \mathrm d A}{\mathrm d w_o \cos\theta_o \mathrm d A} L(wo)=dwocosθodAFr(wo)Li(wi)dwicosθhD(wh)dwhdA

根据光滑镜面可以推导出, d w h \mathrm d w_h dwh 与 d w o \mathrm d w_o dwo 之间的关系为

d w h = d w o 4 cos θ h \mathrm d w_h = \dfrac{\mathrm d w_o}{4\cos\theta_h} dwh=4cosθhdwo

化简 L ( w o ) L(w_o) L(wo) 得

L ( w o ) = F r ( w o ) L i ( w i ) D ( w h ) d w i 4 cos θ o L(w_o) = \dfrac{ F_r(w_o)L_i(w_i)D(w_h)\mathrm d w_i }{4 \cos\theta_o} L(wo)=4cosθoFr(wo)Li(wi)D(wh)dwi

求 Torrance–Sparrow BRDF

又根据 BRDF 定义:

f r ( w o , w i ) = d L o ( p , w o ) d E ( p , w i ) = d L o ( p , w o ) L i ( p , w i ) cos θ i d w i f_r(w_o,w_i) = \dfrac{\mathrm d L_o(p,w_o)}{\mathrm d E(p,w_i)} = \dfrac{\mathrm d L_o(p,w_o)}{L_i(p,w_i)\cos\theta_i\mathrm d w_i} fr(wo,wi)=dE(p,wi)dLo(p,wo)=Li(p,wi)cosθidwidLo(p,wo)

代入 L ( w o ) L(w_o) L(wo) 式:

f r ( w o , w i ) = d L o ( p , w o ) L i ( p , w i ) cos θ i d w i = F r ( w o ) L i ( w i ) D ( w h ) d w i 4 cos θ o L i ( p , w i ) cos θ i d w i = F r ( w o ) D ( w h ) 4 cos θ o cos θ i f_r(w_o,w_i) = \dfrac{\mathrm d L_o(p,w_o)}{L_i(p,w_i)\cos\theta_i\mathrm d w_i} \\ = \dfrac{\dfrac{ F_r(w_o)L_i(w_i)D(w_h)\mathrm d w_i }{4 \cos\theta_o}}{L_i(p,w_i)\cos\theta_i\mathrm d w_i} \\ = \dfrac{F_r(w_o)D(w_h)}{4 \cos\theta_o\cos\theta_i} fr(wo,wi)=Li(p,wi)cosθidwidLo(p,wo)=Li(p,wi)cosθidwi4cosθoFr(wo)Li(wi)D(wh)dwi=4cosθocosθiFr(wo)D(wh)

再乘上 G ( w o , w i ) G(w_o,w_i) G(wo,wi) 项,得到 Torrance–Sparrow BRDF

f r ( w o , w i ) = F r ( w o ) G ( w o , w i ) D ( w h ) 4 cos θ o cos θ i f_r(w_o,w_i) = \dfrac{F_r(w_o)G(w_o,w_i)D(w_h)}{4 \cos\theta_o\cos\theta_i} fr(wo,wi)=4cosθocosθiFr(wo)G(wo,wi)D(wh)

公式的推导不依赖于微表面特定的分布,也不依赖于特定的菲涅尔情况,所以可以用于导体或者绝缘体,但是 d w h \mathrm d w_h dwh 与 d w o \mathrm d w_o dwo 之间的关系要求微表面是完美镜面

推导 BTDF

考虑折射,这里与反射的推导过程不同的就是 d w h \mathrm d w_h dwh 与 d w o \mathrm d w_o dwo 之间的关系

对于折射,设入射介质的折射率为 η i \eta_i ηi,折射介质的折射率为 η o \eta_o ηo, d w h \mathrm d w_h dwh 与 d w o \mathrm d w_o dwo 之间的关系为:

d w h = η o 2 ∣ w o ⋅ w i ∣ d w o ( η i ( w i ⋅ w h ) + η o ( w o ⋅ w h ) ) 2 \mathrm d w_h = \dfrac{\eta_o^2|w_o\cdot w_i|\mathrm d w_o}{(\eta_i(w_i\cdot w_h)+\eta_o(w_o \cdot w_h))^2} dwh=(ηi(wi⋅wh)+ηo(wo⋅wh))2ηo2∣wo⋅wi∣dwo

代入 L ( w o ) L(w_o) L(wo)

L ( w o ) = F t ( w o ) L i ( w i ) d w i cos θ h D ( w h ) η o 2 ∣ w o ⋅ w i ∣ d w o ( η i ( w i ⋅ w h ) + η o ( w o ⋅ w h ) ) 2 d A d w o cos θ o d A = F t ( w o ) L i ( w i ) d w i cos θ h D ( w h ) η o 2 ∣ w o ⋅ w i ∣ ( η i ( w i ⋅ w h ) + η o ( w o ⋅ w h ) ) 2 cos θ o = F t ( w o ) L i ( w i ) d w i cos θ h D ( w h ) η o 2 ∣ w o ⋅ w i ∣ cos θ o ( η i ( w i ⋅ w h ) + η o ( w o ⋅ w h ) ) 2 L(w_o) = \dfrac{ F_t(w_o)L_i(w_i)\mathrm d w_i \cos\theta_h D(w_h)\dfrac{\eta_o^2|w_o\cdot w_i|\mathrm d w_o}{(\eta_i(w_i\cdot w_h)+\eta_o(w_o \cdot w_h))^2} \mathrm d A}{\mathrm d w_o \cos\theta_o \mathrm d A} \\ = \dfrac{ F_t(w_o)L_i(w_i)\mathrm d w_i \cos\theta_h D(w_h)\dfrac{\eta_o^2|w_o\cdot w_i|}{(\eta_i(w_i\cdot w_h)+\eta_o(w_o \cdot w_h))^2}}{ \cos\theta_o} \\ =\dfrac{ F_t(w_o)L_i(w_i)\mathrm d w_i \cos\theta_h D(w_h)\eta_o^2|w_o\cdot w_i|}{ \cos\theta_o(\eta_i(w_i\cdot w_h)+\eta_o(w_o \cdot w_h))^2} L(wo)=dwocosθodAFt(wo)Li(wi)dwicosθhD(wh)(ηi(wi⋅wh)+ηo(wo⋅wh))2ηo2∣wo⋅wi∣dwodA=cosθoFt(wo)Li(wi)dwicosθhD(wh)(ηi(wi⋅wh)+ηo(wo⋅wh))2ηo2∣wo⋅wi∣=cosθo(ηi(wi⋅wh)+ηo(wo⋅wh))2Ft(wo)Li(wi)dwicosθhD(wh)ηo2∣wo⋅wi∣

这里的折射的菲涅尔项记为 F t ( w o ) F_t(w_o) Ft(wo)

代入 f t ( w o , w i ) f_t(w_o,w_i) ft(wo,wi) 定义

f t ( w o , w i ) = d L o ( p , w o ) d E ( p , w i ) = F t ( w o ) L i ( w i ) d w i cos θ h D ( w h ) η o 2 ∣ w o ⋅ w i ∣ cos θ o ( η i ( w i ⋅ w h ) + η o ( w o ⋅ w h ) ) 2 L i ( p , w i ) cos θ i d w i = F t ( w o ) cos θ h D ( w h ) η o 2 ∣ w o ⋅ w i ∣ cos θ o ( η i ( w i ⋅ w h ) + η o ( w o ⋅ w h ) ) 2 cos θ i = F t ( w o ) cos θ h D ( w h ) η o 2 ∣ w o ⋅ w i ∣ cos θ i cos θ o ( η i ( w i ⋅ w h ) + η o ( w o ⋅ w h ) ) 2 f_t(w_o,w_i) = \dfrac{\mathrm d L_o(p,w_o)}{\mathrm d E(p,w_i)} = \dfrac{\dfrac{ F_t(w_o)L_i(w_i)\mathrm d w_i \cos\theta_h D(w_h)\eta_o^2|w_o\cdot w_i|}{ \cos\theta_o(\eta_i(w_i\cdot w_h)+\eta_o(w_o \cdot w_h))^2}}{L_i(p,w_i)\cos\theta_i\mathrm d w_i} \\ = \dfrac{\dfrac{ F_t(w_o)\cos\theta_h D(w_h)\eta_o^2|w_o\cdot w_i|}{ \cos\theta_o(\eta_i(w_i\cdot w_h)+\eta_o(w_o \cdot w_h))^2}}{\cos\theta_i} \\ =\dfrac{ F_t(w_o)\cos\theta_h D(w_h)\eta_o^2|w_o\cdot w_i|}{\cos\theta_i \cos\theta_o(\eta_i(w_i\cdot w_h)+\eta_o(w_o \cdot w_h))^2} ft(wo,wi)=dE(p,wi)dLo(p,wo)=Li(p,wi)cosθidwicosθo(ηi(wi⋅wh)+ηo(wo⋅wh))2Ft(wo)Li(wi)dwicosθhD(wh)ηo2∣wo⋅wi∣=cosθicosθo(ηi(wi⋅wh)+ηo(wo⋅wh))2Ft(wo)cosθhD(wh)ηo2∣wo⋅wi∣=cosθicosθo(ηi(wi⋅wh)+ηo(wo⋅wh))2Ft(wo)cosθhD(wh)ηo2∣wo⋅wi∣

上下提一个 η o 2 \eta_o^2 ηo2,得

f t ( w o , w i ) = F t ( w o ) cos θ h D ( w h ) ∣ w o ⋅ w i ∣ cos θ i cos θ o ( η ( w i ⋅ w h ) + ( w o ⋅ w h ) ) 2 f_t(w_o,w_i) = \dfrac{ F_t(w_o)\cos\theta_h D(w_h)|w_o\cdot w_i|}{\cos\theta_i \cos\theta_o(\eta(w_i\cdot w_h)+(w_o \cdot w_h))^2} ft(wo,wi)=cosθicosθo(η(wi⋅wh)+(wo⋅wh))2Ft(wo)cosθhD(wh)∣wo⋅wi∣

其中 η = η i η o \eta = \dfrac{\eta_i}{\eta_o} η=ηoηi

最终别人给出的式子是

f t ( w o , w i ) = F t ( w o ) D ( w h ) ( η ( w i ⋅ w h ) + ( w o ⋅ w h ) ) 2 ∣ w o ⋅ w h ∣ ∣ w i ⋅ w h ∣ cos θ o cos θ i f_t(w_o,w_i) = \dfrac{ F_t(w_o)D(w_h)}{(\eta(w_i\cdot w_h)+(w_o \cdot w_h))^2}\dfrac{|w_o \cdot w_h||w_i \cdot w_h|}{\cos\theta_o \cos\theta_i} ft(wo,wi)=(η(wi⋅wh)+(wo⋅wh))2Ft(wo)D(wh)cosθocosθi∣wo⋅wh∣∣wi⋅wh∣

除了乘上

G

(

w

o

,

w

i

)

G(w_o,w_i)

G(wo,wi) 项,

其中

cos

θ

h

=

∣

w

h

⋅

∣

\cos\theta_h = |w_h \cdot |

cosθh=∣wh⋅∣

嗯……中间浏览器崩了一次……

草稿丢了不详细写了……

之后就是模型的实现

castRay

// Implementation of Path Tracing

Vector3f Scene::castRay(const Ray& ray, int depth) const

{

// TO DO Implement Path Tracing Algorithm here

if (depth > this->maxDepth) {

return Vector3f(0.0, 0.0, 0.0);

}

Intersection inter_shade_point = Scene::intersect(ray);

Vector3f hitColor = Vector3f(0.0, 0.0, 0.0);

if (inter_shade_point.happened) {

Material* mat_p = inter_shade_point.m;

Object* hitObject = inter_shade_point.obj;

Vector3f p = inter_shade_point.coords;

Vector3f normal_p = inter_shade_point.normal;

switch (mat_p->getType())

{

case DIFFUSE:

case MICROFACET: {

// Contribution from the light source

// Uniformly sample the light at x' (pdf_light = 1 / A)

Intersection inter_sample_point;

float pdf_x;

sampleLight(inter_sample_point, pdf_x);

Vector3f x = inter_sample_point.coords;

Vector3f dir_p2x = normalize(x - p);

Vector3f p_deviation = (dotProduct(ray.direction, normal_p) < 0) ?

p + normal_p * EPSILON :

p - normal_p * EPSILON;

// Shoot a ray from p to x'

Intersection inter_p2x = Scene::intersect(Ray(p_deviation, dir_p2x));

// If the ray is not blocked in the middle

if ((inter_p2x.coords - x).norm() < 0.01f) {

// L_dir = L_i * f_r * cos(theta) * cos(theta') / |x' - p | ^ 2 / pdf_light

Vector3f L_x2p = inter_sample_point.emit;

Vector3f normal_x = inter_sample_point.normal;

Vector3f L_dir = L_x2p * mat_p->eval(ray.direction, dir_p2x, normal_p) * dotProduct(dir_p2x, normal_p) * dotProduct(-dir_p2x, normal_x) / pow((x - p).norm(), 2) / pdf_x;

hitColor += L_dir;

}

// Contribution from other reflectors

float p_rr = get_random_float();

if (p_rr < RussianRoulette) {

Vector3f dir_o = normalize(mat_p->sample(ray.direction, normal_p));

Intersection inter_indir_point = Scene::intersect(Ray(p_deviation, dir_o));

if (inter_indir_point.happened && !inter_indir_point.m->hasEmission()) {

float pdf_shade_point = mat_p->pdf(ray.direction, dir_o, normal_p);

if (pdf_shade_point > EPSILON) {

Vector3f L_indir = castRay(Ray(p_deviation, dir_o), depth + 1) * mat_p->eval(ray.direction, dir_o, normal_p) * dotProduct(dir_o, normal_p) / (pdf_shade_point * RussianRoulette);

hitColor += L_indir;

}

}

}

// make light visible

hitColor += mat_p->getEmission();

break;

}

case MIRROR: {

Vector3f p_deviation = (dotProduct(ray.direction, normal_p) < 0) ?

p + normal_p * EPSILON :

p - normal_p * EPSILON;

// Contribution from other reflectors

float p_rr = get_random_float();

if (p_rr < RussianRoulette) {

Vector3f dir_o = normalize(mat_p->sample(ray.direction, normal_p));

Intersection inter_indir_point = Scene::intersect(Ray(p_deviation, dir_o));

// don't only reflect light, but reflect everything

// if (inter_indir_point.happened && !inter_indir_point.m->hasEmission()) {

if (inter_indir_point.happened) {

float pdf_shade_point = mat_p->pdf(ray.direction, dir_o, normal_p);

if (pdf_shade_point > EPSILON) {

Vector3f L_indir = castRay(Ray(p_deviation, dir_o), depth + 1) * mat_p->eval(ray.direction, dir_o, normal_p) * dotProduct(dir_o, normal_p) / (pdf_shade_point * RussianRoulette);

hitColor += L_indir;

}

}

}

// make light visible

hitColor += mat_p->getEmission();

break;

}

}

}

// avoid white noise

hitColor.x = (clamp(0, 1, hitColor.x));

hitColor.y = (clamp(0, 1, hitColor.y));

hitColor.z = (clamp(0, 1, hitColor.z));

return hitColor;

}

模型的实现这里我之前有个地方写错了,间接光的起点应该是着色点

材质

材质的话就是

反射方向采样

概率密度函数

G 函数

D 函数

BRDF

//

// Created by LEI XU on 5/16/19.

//

#ifndef RAYTRACING_MATERIAL_H

#define RAYTRACING_MATERIAL_H

#include "Vector.hpp"

enum MaterialType { DIFFUSE, MIRROR, MICROFACET };

class Material{

private:

// Compute reflection direction

Vector3f reflect(const Vector3f &I, const Vector3f &N) const

{

return I - 2 * dotProduct(I, N) * N;

}

// Compute refraction direction using Snell's law

//

// We need to handle with care the two possible situations:

//

// - When the ray is inside the object

//

// - When the ray is outside.

//

// If the ray is outside, you need to make cosi positive cosi = -N.I

//

// If the ray is inside, you need to invert the refractive indices and negate the normal N

Vector3f refract(const Vector3f &I, const Vector3f &N, const float &ior) const

{

float cosi = clamp(-1, 1, dotProduct(I, N));

float etai = 1, etat = ior;

Vector3f n = N;

if (cosi < 0) { cosi = -cosi; } else { std::swap(etai, etat); n= -N; }

float eta = etai / etat;

float k = 1 - eta * eta * (1 - cosi * cosi);

return k < 0 ? 0 : eta * I + (eta * cosi - sqrtf(k)) * n;

}

// Compute Fresnel equation

//

// \param I is the incident view direction

//

// \param N is the normal at the intersection point

//

// \param ior is the material refractive index

//

// \param[out] kr is the amount of light reflected

void fresnel(const Vector3f &I, const Vector3f &N, const float &ior, float &kr) const

{

float cosi = clamp(-1, 1, dotProduct(I, N));

float etai = 1, etat = ior;

if (cosi > 0) { std::swap(etai, etat); }

// Compute sini using Snell's law

float sint = etai / etat * sqrtf(std::max(0.f, 1 - cosi * cosi));

// Total internal reflection

if (sint >= 1) {

kr = 1;

}

else {

float cost = sqrtf(std::max(0.f, 1 - sint * sint));

cosi = fabsf(cosi);

float Rs = ((etat * cosi) - (etai * cost)) / ((etat * cosi) + (etai * cost));

float Rp = ((etai * cosi) - (etat * cost)) / ((etai * cosi) + (etat * cost));

kr = (Rs * Rs + Rp * Rp) / 2;

}

// As a consequence of the conservation of energy, transmittance is given by:

// kt = 1 - kr;

}

// hemi coord -> world coord

Vector3f toWorld(const Vector3f &a, const Vector3f &N){

Vector3f B, C;

// ensure x != 0 -> ensure C != zero vector

if (std::fabs(N.x) > std::fabs(N.y)){

float invLen = 1.0f / std::sqrt(N.x * N.x + N.z * N.z);

C = Vector3f(N.z * invLen, 0.0f, -N.x *invLen);

}

else {

float invLen = 1.0f / std::sqrt(N.y * N.y + N.z * N.z);

C = Vector3f(0.0f, N.z * invLen, -N.y *invLen);

}

B = crossProduct(C, N);

return a.x * B + a.y * C + a.z * N;

}

// world coord -> hemi coord

Vector3f toLocal(const Vector3f& a, const Vector3f& N) {

Vector3f B, C;

// ensure x != 0 -> ensure C != zero vector

if (std::fabs(N.x) > std::fabs(N.y)) {

float invLen = 1.0f / std::sqrt(N.x * N.x + N.z * N.z);

C = Vector3f(N.z * invLen, 0.0f, -N.x * invLen);

}

else {

float invLen = 1.0f / std::sqrt(N.y * N.y + N.z * N.z);

C = Vector3f(0.0f, N.z * invLen, -N.y * invLen);

}

B = crossProduct(C, N);

return Vector3f(dotProduct(B, a), dotProduct(C, a), dotProduct(N, a));

}

public:

MaterialType m_type;

//Vector3f m_color;

Vector3f m_emission;

float ior;

Vector3f Kd, Ks;

float specularExponent;

//Texture tex;

inline Material(MaterialType t=DIFFUSE, Vector3f e=Vector3f(0,0,0));

inline MaterialType getType();

//inline Vector3f getColor();

inline Vector3f getColorAt(double u, double v);

inline Vector3f getEmission();

inline bool hasEmission();

// sample a ray by Material properties

inline Vector3f sample(const Vector3f &wi, const Vector3f &N);

// given a ray, calculate the PdF of this ray

inline float pdf(const Vector3f &wi, const Vector3f &wo, const Vector3f &N);

inline Vector3f G(const float& Roughness, const Vector3f& wi, const Vector3f& wo, const Vector3f& N);

inline float D(const float& Roughness, const Vector3f& h, const Vector3f& N);

// given a ray, calculate the contribution of this ray

inline Vector3f eval(const Vector3f& wi, const Vector3f& wo, const Vector3f& N);

};

Material::Material(MaterialType t, Vector3f e){

m_type = t;

//m_color = c;

m_emission = e;

}

MaterialType Material::getType(){return m_type;}

///Vector3f Material::getColor(){return m_color;}

Vector3f Material::getEmission() {return m_emission;}

bool Material::hasEmission() {

if (m_emission.norm() > EPSILON) return true;

else return false;

}

Vector3f Material::getColorAt(double u, double v) {

return Vector3f();

}

Vector3f Material::sample(const Vector3f &wi, const Vector3f &N){

switch(m_type){

case DIFFUSE:

{

// uniform sample on the hemisphere

float x_1 = get_random_float(), x_2 = get_random_float();

float z = std::fabs(1.0f - 2.0f * x_1);

float r = std::sqrt(1.0f - z * z), phi = 2 * M_PI * x_2;

Vector3f wo_local(r*std::cos(phi), r*std::sin(phi), z);

return toWorld(wo_local, N);

break;

}

case MIRROR:

{

Vector3f localRay = reflect(wi, N);

return localRay;

break;

}

case MICROFACET: {

// uniform sample on the hemisphere

float x_1 = get_random_float(), x_2 = get_random_float();

float z = std::fabs(1.0f - 2.0f * x_1);

float r = std::sqrt(1.0f - z * z), phi = 2 * M_PI * x_2;

Vector3f wo_local(r * std::cos(phi), r * std::sin(phi), z);

return toWorld(wo_local, N);

break;

}

}

}

float Material::pdf(const Vector3f &wi, const Vector3f &wo, const Vector3f &N){

int sigma = 0.3;

switch(m_type){

case DIFFUSE:

{

// uniform sample probability 1 / (2 * PI)

if (dotProduct(wo, N) > 0.0f)

return 0.5f / M_PI;

else

return 0.0f;

break;

}

case MIRROR:

{

if (dotProduct(wo, N) > EPSILON)

return 1.0f;

else

return 0.0f;

break;

}

case MICROFACET:

{

// uniform sample probability 1 / (2 * PI)

if (dotProduct(wo, N) > 0.0f)

return 0.5f / M_PI;

else

return 0.0f;

break;

}

}

}

Vector3f Material::G(const float& Roughness, const Vector3f& wi, const Vector3f& wo, const Vector3f& N) {

// Trowbridge and Reitz / GGX

float A_wi, A_wo;

A_wi = (-1 + sqrt(1 + Roughness * Roughness * pow(tan(acos(dotProduct(wi, N))), 2))) / 2;

A_wo = (-1 + sqrt(1 + Roughness * Roughness * pow(tan(acos(dotProduct(wo, N))), 2))) / 2;

float divisor = (1 + A_wi + A_wo);

if (divisor < 0.001)

return 1.f;

else

return 1.0f / divisor;

}

float Material::D(const float& Roughness, const Vector3f& h, const Vector3f& N) {

// Trowbridge and Reitz / GGX

float cos_sita = dotProduct(h, N);

float divisor = (M_PI * pow(1.0 + cos_sita * cos_sita * (Roughness * Roughness - 1), 2));

if (divisor < 0.001)

return 1.f;

else

return (Roughness * Roughness) / divisor;

}

Vector3f Material::eval(const Vector3f& wi, const Vector3f& wo, const Vector3f& N) {

switch (m_type) {

case DIFFUSE:

{

// calculate the contribution of diffuse model

float cosalpha = dotProduct(N, wo);

if (cosalpha > 0.0f) {

Vector3f diffuse = Kd / M_PI;

return diffuse;

}

else

return Vector3f(0.0f);

break;

}

case MIRROR:

{

float cosalpha = dotProduct(N, wo);

float kr;

if (cosalpha > EPSILON) {

fresnel(wi, N, ior, kr);

Vector3f mirror = 1 / cosalpha;

return kr * mirror;

}

else

return Vector3f(0.0f);

break;

}

case MICROFACET:

{

// Trowbridge and Reitz / GGX

float cosalpha = dotProduct(N, wo);

if (cosalpha > 0.0f)

{

// calculate the contribution of Microfacet model

float F;

fresnel(wi, N, ior, F);

float Roughness = 0.5f; // hyper para

// neg wi because ray.direction is from outside to shade point

Vector3f h = normalize(-wi + wo);

// energy balance

Vector3f diffuse = (Vector3f(1.0f) - F) * Kd / M_PI;

Vector3f specular;

float divisor = ((4 * (dotProduct(N, -wi)) * (dotProduct(N, wo))));

if (divisor < 0.001)

specular = Vector3f(1);

else

specular = F * G(Roughness, -wi, wo, N) * D(Roughness, h, N) / divisor;

return diffuse + specular;

}

else

return Vector3f(0.0f);

break;

}

}

}

#endif //RAYTRACING_MATERIAL_H

然后 Object 的要改掉那个没用的函数 evalDiffuseColor

球与光线的相交

球与光线的相交要改

Intersection getIntersection(Ray ray){

Intersection result;

result.happened = false;

Vector3f L = ray.origin - center;

float a = dotProduct(ray.direction, ray.direction);

float b = 2 * dotProduct(ray.direction, L);

float c = dotProduct(L, L) - radius2;

float t0, t1;

if (!solveQuadratic(a, b, c, t0, t1)) return result;

if (t0 < 100.0f * EPSILON) t0 = t1;

if (t0 < 100.0f * EPSILON) return result;

result.happened=true;

result.coords = Vector3f(ray.origin + ray.direction * t0);

result.normal = normalize(Vector3f(result.coords - center));

result.m = this->m;

result.obj = this;

result.distance = t0;

return result;

}

此外就没啥了……倦了……

1302

1302

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?