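Regression is a supervised learning algorithm.

It is one of the most widely used machine learning techniques, and it models the relationship between explanatory variables (the independent variables X) and observed values (the dependent variable Y). From a machine learning perspective, it builds a model (a function) that maps attributes (X) to labels (Y); during training, the algorithm searches for the function whose parameters fit the data best. The output of a regression model is a continuous value, and the input (the attribute values) is a d-dimensional attribute/numeric vector.

This post focuses on implementing linear regression in code. For the underlying theory, see the author's other article: Machine Learning (3): Linear Regression Principles (机器学习(三)线性回归原理).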
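For reference, the model, loss, and update rule that the code in this post implements can be written compactly as follows. The notation is an assumption made here for illustration and is not taken from the original post: $X$ is the $m \times (d+1)$ design matrix with a leading column of ones, $y$ is the $m \times 1$ label vector, $\theta$ is the parameter vector, and $\alpha$ is the learning rate.

$$h_\theta(X) = X\theta$$

$$J(\theta) = \frac{1}{2m}\,(X\theta - y)^{\top}(X\theta - y)$$

$$\theta \leftarrow \theta - \frac{\alpha}{m}\,X^{\top}(X\theta - y)$$

These correspond, in order, to the `hypothesis`, `loss_function`, and `gradient_step` methods of the `LinearRegression` class.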

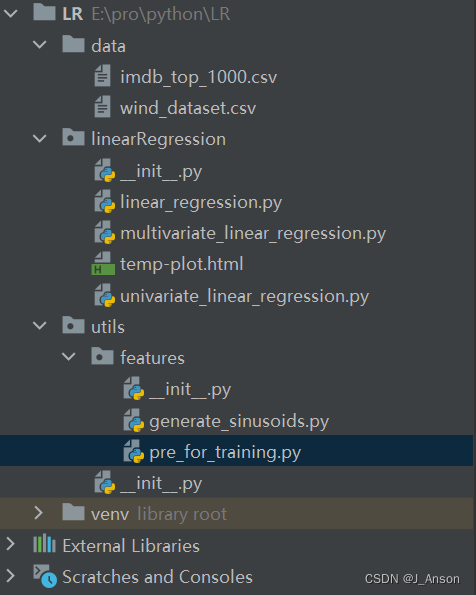

1. Directory structure

2. Data preprocessing

import numpy as np

from utils.features.generate_sinusoids import generate_sinusoids


def prepare(data, polynomial_degree=0, sinusoid_degree=0, normalize_data=True):
    # Number of examples (rows) in the dataset
    num_examples = data.shape[0]
    data_processed = np.copy(data)

    # Defaults returned when normalization is disabled
    features_mean = 0
    features_deviation = 0
    data_normalized = data_processed

    if normalize_data:
        (
            data_normalized,
            features_mean,
            features_deviation
        ) = normalize(data_processed)
        data_processed = data_normalized

    # Feature transformation: sinusoidal features
    if sinusoid_degree > 0:
        sinusoids = generate_sinusoids(data_normalized, sinusoid_degree)
        data_processed = np.concatenate((data_processed, sinusoids), axis=1)

    # Feature transformation: polynomial features (disabled, so polynomial_degree is currently unused)
    # if polynomial_degree > 0:
    #     polynomials = generate_polynomials(data_normalized, polynomial_degree, normalize_data)
    #     data_processed = np.concatenate((data_processed, polynomials), axis=1)

    # Prepend a column of ones for the bias (intercept) term
    data_processed = np.hstack((np.ones((num_examples, 1)), data_processed))

    return data_processed, features_mean, features_deviation


def normalize(features):
    features_normalized = np.copy(features).astype(float)

    # Compute the per-feature mean
    features_mean = np.mean(features, 0)

    # Compute the per-feature standard deviation
    features_deviation = np.std(features, 0)

    # Standardize: subtract the mean, then divide by the standard deviation
    if features.shape[0] > 1:
        features_normalized -= features_mean

    # Guard against division by zero
    features_deviation[features_deviation == 0] = 1
    features_normalized /= features_deviation

    return features_normalized, features_mean, features_deviation
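To make the effect of the preprocessing concrete, here is a minimal, self-contained sketch of the same idea on a toy array. The `standardize` helper and the toy data are hypothetical and exist only for this illustration; the helper mirrors the logic of `normalize` in simplified form (it always subtracts the mean) rather than importing the module above.

```python
import numpy as np


def standardize(features):
    """Column-wise z-score scaling (simplified stand-in for normalize())."""
    features = np.asarray(features, dtype=float)
    mean = features.mean(axis=0)
    std = features.std(axis=0)
    std[std == 0] = 1.0  # constant columns: avoid dividing by zero
    return (features - mean) / std, mean, std


# Hypothetical toy data: the second column is constant on purpose.
toy = np.array([[1.0, 5.0],
                [2.0, 5.0],
                [3.0, 5.0]])

scaled, mean, std = standardize(toy)

# Prepend the bias column of ones, as prepare() does.
design = np.hstack((np.ones((toy.shape[0], 1)), scaled))
print(design)
```

After scaling, the first feature has zero mean and unit standard deviation, while the constant feature becomes all zeros instead of producing NaN values.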

3. Defining the generate_sinusoids function

import numpy as np


def generate_sinusoids(dataset, sinusoid_degree):
    # Build sin(k * x) features for k = 1 .. sinusoid_degree
    num_examples = dataset.shape[0]

    # Start with zero columns and append one block of features per degree
    sinusoids = np.empty((num_examples, 0))

    for degree in range(1, sinusoid_degree + 1):
        sinusoid_features = np.sin(degree * dataset)
        sinusoids = np.concatenate((sinusoids, sinusoid_features), axis=1)

    return sinusoids
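A short usage sketch may help show what this function produces. The function is repeated here so the snippet runs on its own, and the input values are hypothetical, chosen so the sines are easy to check by hand.

```python
import numpy as np


def generate_sinusoids(dataset, sinusoid_degree):
    """Append sin(k * x) for k = 1..sinusoid_degree, column block by column block."""
    num_examples = dataset.shape[0]
    sinusoids = np.empty((num_examples, 0))
    for degree in range(1, sinusoid_degree + 1):
        sinusoids = np.concatenate((sinusoids, np.sin(degree * dataset)), axis=1)
    return sinusoids


# Hypothetical single-feature input: x = 0 and x = pi / 2.
x = np.array([[0.0],
              [np.pi / 2]])

features = generate_sinusoids(x, sinusoid_degree=2)

# One column per degree and per input feature: sin(x), then sin(2x).
print(features.shape)
print(np.round(features, 6))
```

With one input feature and `sinusoid_degree=2`, the result has two columns; in general the output has `sinusoid_degree` times as many columns as the input.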

4. Linear regression

import numpy as np

from utils.features import pre_for_training


class LinearRegression:
    def __init__(self,
                 data,
                 labels,
                 polynomial_degree=0,
                 sinusoid_degree=0,
                 normalize_data=True):
        """
        Preprocess the data, count the features, and initialize the parameter matrix.
        :param data: training features
        :param labels: training targets
        :param polynomial_degree: degree of the polynomial feature transformation
        :param sinusoid_degree: degree of the sinusoidal feature transformation
        :param normalize_data: whether to standardize the data
        """
        (data_processed, features_mean, features_deviation) = \
            pre_for_training.prepare(data=data, polynomial_degree=polynomial_degree,
                                     sinusoid_degree=sinusoid_degree, normalize_data=normalize_data)

        self.data = data_processed
        self.labels = labels
        self.features_mean = features_mean
        self.features_deviation = features_deviation
        self.polynomial_degree = polynomial_degree
        self.sinusoid_degree = sinusoid_degree
        self.normalize_data = normalize_data

        # One parameter per column of the processed data (including the bias column)
        num_features = self.data.shape[1]
        self.theta = np.zeros((num_features, 1))

    def train(self, alpha, num_iterations=500):
        """
        Run gradient descent.
        :param alpha: learning rate
        :param num_iterations: number of iterations
        :return: fitted parameters and the loss history
        """
        loss_history = self.gradient_descent(alpha=alpha, num_iterations=num_iterations)
        return self.theta, loss_history

    def gradient_descent(self, alpha, num_iterations):
        """
        Update the parameters iteratively.
        :param alpha: learning rate
        :param num_iterations: number of iterations
        :return: list of loss values, one per iteration
        """
        loss_history = list()
        for _ in range(num_iterations):
            self.gradient_step(alpha)
            loss_history.append(self.loss_function(self.data, self.labels))
        return loss_history

    def gradient_step(self, alpha):
        """
        Perform one gradient descent step; self.theta is updated in place.
        :param alpha: learning rate
        """
        num_examples = self.data.shape[0]
        predictions = LinearRegression.hypothesis(self.data, self.theta)
        delta = predictions - self.labels
        theta = self.theta
        theta = theta - alpha * (1 / num_examples) * (np.dot(delta.T, self.data)).T
        self.theta = theta

    def loss_function(self, data, labels):
        """
        Compute the loss.
        :param data: processed features
        :param labels: target values
        :return: loss value
        """
        num_examples = data.shape[0]
        # Use the data passed in (not self.data) so get_loss works on other datasets
        delta = LinearRegression.hypothesis(data=data, theta=self.theta) - labels
        loss = (1 / 2) * np.dot(delta.T, delta) / num_examples
        # print(loss, loss.shape)
        return loss[0][0]

    @staticmethod
    def hypothesis(data, theta):
        predictions = np.dot(data, theta)
        return predictions

    def get_loss(self, data, labels):
        data_processed = pre_for_training.prepare(data=data,
                                                  polynomial_degree=self.polynomial_degree,
                                                  sinusoid_degree=self.sinusoid_degree,
                                                  normalize_data=self.normalize_data)[0]
        return self.loss_function(data=data_processed, labels=labels)

    def predict(self, data):
        """
        Predict.
        :param data: input features
        :return: predictions
        """
        data_processed = pre_for_training.prepare(data=data, polynomial_degree=self.polynomial_degree,
                                                  sinusoid_degree=self.sinusoid_degree,
                                                  normalize_data=self.normalize_data)[0]
        predictions = LinearRegression.hypothesis(data=data_processed, theta=self.theta)
        return predictions
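One way to sanity-check a gradient descent implementation like the one above is to compare its result with the closed-form least-squares solution. The following is a minimal sketch under stated assumptions: the data is synthetic and hypothetical, the loop re-implements the same update as `gradient_step` instead of importing the class, and `np.linalg.lstsq` is used only as a reference solver.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical synthetic data: y = 4 + 3x plus a little noise.
x = rng.normal(size=(200, 1))
y = 4.0 + 3.0 * x + 0.1 * rng.normal(size=(200, 1))

# Design matrix with a bias column of ones, as prepare() builds it.
X = np.hstack((np.ones((x.shape[0], 1)), x))

# Batch gradient descent with the same update rule as gradient_step().
theta = np.zeros((X.shape[1], 1))
alpha, num_iterations = 0.1, 1000
for _ in range(num_iterations):
    delta = X @ theta - y
    theta = theta - alpha * (X.T @ delta) / X.shape[0]

# Closed-form least-squares solution for comparison.
theta_ls, *_ = np.linalg.lstsq(X, y, rcond=None)

print("gradient descent:", theta.ravel())
print("least squares:   ", theta_ls.ravel())
```

If the two parameter vectors disagree noticeably, the usual suspects are a learning rate that is too large, too few iterations, or unscaled features.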

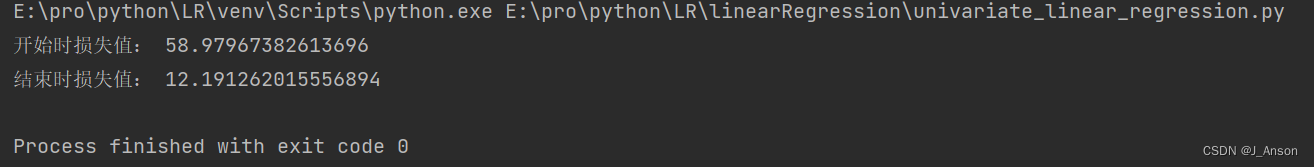

5. Single-feature prediction

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

from linearRegression import linear_regression
from utils.features import pre_for_training

data = pd.read_csv("../data/wind_dataset.csv")

# Fill missing values with the column mean; fillna returns a new Series,
# so the result must be assigned back to the column
for i in data.columns:
    if not np.all(pd.notnull(data[i])):
        data[i] = data[i].fillna(data[i].mean())

# 80/20 train/test split
train_data = data.sample(frac=0.8)
test_data = data.drop(train_data.index)

input_param_name = "RAIN"
output_param_name = "WIND"

x_train = train_data[[input_param_name]].values
y_train = train_data[[output_param_name]].values

x_test = test_data[[input_param_name]].values
y_test = test_data[[output_param_name]].values

num_iterations = 500
learning_rate = 0.01

linearRegression = linear_regression.LinearRegression(x_train, y_train)
(theta, loss_history) = linearRegression.train(alpha=learning_rate, num_iterations=num_iterations)

print("Initial loss:", loss_history[0])
print("Final loss:", loss_history[-1])

# Plot the loss over the iterations
plt.plot(range(num_iterations), loss_history)
plt.xlabel("Iter")
plt.ylabel("Loss")
plt.title("Gradient")
plt.show()
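Two details of the data preparation step are easy to get wrong: `fillna` returns a new object instead of modifying the data in place, and `sample` gives a different split on every run unless it is seeded. The sketch below illustrates both on a small, hypothetical DataFrame that stands in for the CSV file; the values and the `random_state` of 42 are assumptions made for this example.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for the CSV; column names follow the script above.
df = pd.DataFrame({
    "RAIN": [0.2, np.nan, 5.1, 0.0, 2.3],
    "WIND": [13.7, 11.5, np.nan, 9.8, 10.2],
})

# fillna returns a new object, so the result has to be assigned back.
df = df.fillna(df.mean(numeric_only=True))

# A fixed random_state makes the 80/20 split repeatable between runs.
train_df = df.sample(frac=0.8, random_state=42)
test_df = df.drop(train_df.index)

print(df.isnull().sum())
print(len(train_df), len(test_df))
```

Seeding the split is optional, but it makes the reported loss values comparable from one run to the next.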

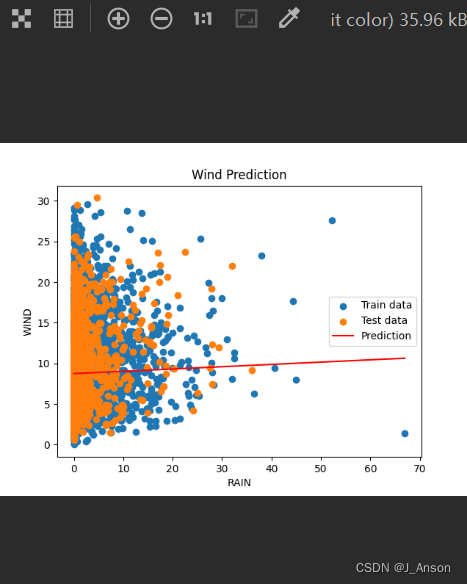

predictions_num = 100

# Evenly spaced inputs across the training range, used to draw the fitted line
x_predictions = np.linspace(x_train.min(), x_train.max(), predictions_num).reshape((predictions_num, 1))
y_predictions = linearRegression.predict(x_predictions)

plt.scatter(x_train, y_train, label="Train data")
plt.scatter(x_test, y_test, label="Test data")
plt.plot(x_predictions, y_predictions, "r", label="Prediction")
plt.xlabel(input_param_name)
plt.ylabel(output_param_name)
plt.title("Wind Prediction")
plt.legend()
plt.show()

6. Results
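Beyond the plots, the fit can be summarized numerically on the held-out data. Note that `predict` as written re-runs `prepare` on its input, so new data is standardized with its own mean and standard deviation and not with the training statistics. The sketch below shows one possible way to evaluate with the training statistics reused. All of it is an assumption made for illustration: the data is synthetic, the helper names (`fit_scaler`, `design_matrix`, `mse`, `r2_score`) are hypothetical, and `np.linalg.lstsq` stands in for the gradient descent training loop.

```python
import numpy as np


def fit_scaler(x):
    """Compute per-feature mean and standard deviation on the training set."""
    mean = x.mean(axis=0)
    std = x.std(axis=0)
    std[std == 0] = 1.0  # avoid dividing by zero for constant features
    return mean, std


def design_matrix(x, mean, std):
    """Standardize with the given statistics and prepend a bias column."""
    return np.hstack((np.ones((x.shape[0], 1)), (x - mean) / std))


def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))


def r2_score(y_true, y_pred):
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


# Hypothetical synthetic data: y = 2x + 1 plus noise.
rng = np.random.default_rng(1)
x_train = rng.uniform(0, 10, size=(80, 1))
y_train = 2.0 * x_train + 1.0 + rng.normal(scale=0.5, size=(80, 1))
x_test = rng.uniform(0, 10, size=(20, 1))
y_test = 2.0 * x_test + 1.0 + rng.normal(scale=0.5, size=(20, 1))

# Fit on the training set (least squares stands in for gradient descent here).
mean, std = fit_scaler(x_train)
theta, *_ = np.linalg.lstsq(design_matrix(x_train, mean, std), y_train, rcond=None)

# Evaluate on the test set using the training mean and standard deviation.
y_pred = design_matrix(x_test, mean, std) @ theta
print("test MSE:", mse(y_test, y_pred))
print("test R^2:", r2_score(y_test, y_pred))
```

The same two metrics could be computed for the model trained in this post by passing its test-set predictions and `y_test` to `mse` and `r2_score`.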
