Paper

1 Introduction

· Difference between multi-view and cross-view:

- Multi-view: all views are enrolled in the gallery

- Cross-view: only a single view is enrolled in the gallery

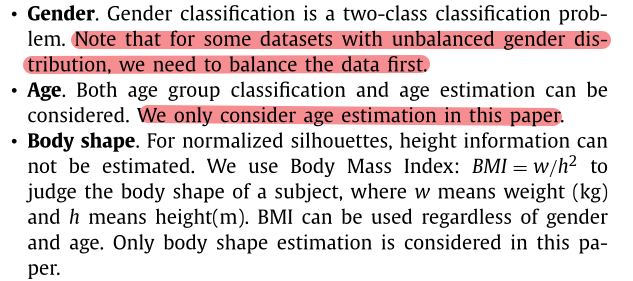
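The two protocols differ only in which samples are enrolled. A minimal sketch of that difference, assuming a hypothetical dataset of `(subject_id, view_angle)` pairs; the names `samples`, `build_gallery`, and `gallery_view` are illustrative and do not come from the paper:

```python
# Hypothetical samples: (subject_id, view_angle_in_degrees).
samples = [(sid, view) for sid in ("s1", "s2") for view in (0, 45, 90)]


def build_gallery(samples, protocol, gallery_view=90):
    """Select the samples that get enrolled in the gallery.

    multi-view: every view of every subject is enrolled.
    cross-view: only one view (gallery_view, an assumed parameter) is enrolled.
    """
    if protocol == "multi-view":
        return list(samples)
    if protocol == "cross-view":
        return [s for s in samples if s[1] == gallery_view]
    raise ValueError(f"unknown protocol: {protocol}")


multi = build_gallery(samples, "multi-view")
cross = build_gallery(samples, "cross-view", gallery_view=90)
print(len(multi), len(cross))  # 6 2
```

In the cross-view case a probe taken at 0° or 45° has to be matched against 90° gallery samples only, which is what makes that setting harder.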

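· Soft biometrics

Definition: a set of traits that provide some information for identifying an individual but, lacking distinctiveness and permanence, cannot uniquely distinguish individuals on their own. Examples: age and gender.

Role: fused with the primary biometric traits, they improve the recognition accuracy of a biometric system.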

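· Gaps in existing work

- Person identification and soft-biometric recognition are not unified in a single framework

- Where they are, the result is not efficient enough (high accuracy but slow recognition)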

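· Main contributions of the paper:

① Unifies gait recognition and soft-biometric recognition in one joint framework and achieves high accuracy

② Achieves fast training and testing and efficient storage

③ Analyzes how much different body parts contribute to soft-biometric recognition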

2 Related work

The paper focuses on appearance-based methods and mainly studies how view changes affect gait recognition.

3 Our method

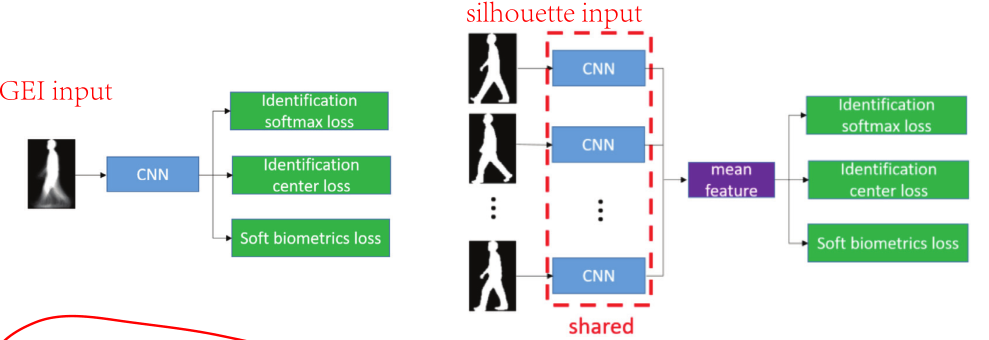

3.1 Gait Recognition

Gait recognition needs to capture both spatial and temporal information. Two problems have to be handled: cross-view and cross-walking-condition.

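Fusing temporal information through a fully connected layer has two benefits:

① Each sequence yields one compact feature vector, so recognition only requires computing distances between feature vectors

② The compressed feature vector carries semantic meaning; attributes are learned implicitly at the high level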
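Benefit ① means identification reduces to a nearest-neighbor search over feature vectors. A minimal sketch, assuming Euclidean distance and rank-1 matching (the notes do not state the metric); `rank1_match` and the toy 2-D features are illustrative only:

```python
import torch


def rank1_match(probe_feats, gallery_feats, gallery_ids):
    """Return, for each probe, the id of the nearest gallery feature.

    Assumption: Euclidean distance; the paper's metric may differ.
    """
    dists = torch.cdist(probe_feats, gallery_feats)  # (num_probe, num_gallery)
    nearest = dists.argmin(dim=1)                    # index of closest gallery entry
    return [gallery_ids[i] for i in nearest.tolist()]


# Toy 2-D features; a real model would produce e.g. 512-D vectors.
gallery_feats = torch.tensor([[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]])
gallery_ids = ["s1", "s2", "s3"]
probe_feats = torch.tensor([[0.9, 1.2], [3.5, 4.1]])

matches = rank1_match(probe_feats, gallery_feats, gallery_ids)
print(matches)  # ['s2', 's3']
```

Because each sequence is stored as a single vector, the gallery needs only one row per enrolled sequence, which is also where the storage efficiency comes from.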

The paper considers two temporal fusion methods:

① Image-level fusion (GEI, gait energy image)

② Feature-level fusion (fully connected layer)
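The two fusion options can be contrasted in a few lines. This is a sketch under assumptions: the silhouettes are random placeholders, and `frame_encoder` and `fc` are tiny stand-in layers, not the network from the paper. Averaging per-frame features before the fully connected layer mirrors what the sequence network in the Code section does.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Placeholder sequence: 30 binary silhouettes of size 64x64 (random, for illustration).
seq = (torch.rand(30, 64, 64) > 0.5).float()

# (1) Image-level fusion: the GEI is the per-pixel mean over all frames.
gei = seq.mean(dim=0)  # (64, 64), values in [0, 1]

# (2) Feature-level fusion: encode every frame, average the per-frame
#     features over time, then compress with a fully connected layer.
frame_encoder = nn.Sequential(          # hypothetical stand-in encoder
    nn.Conv2d(1, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveMaxPool2d(4),
)
fc = nn.Linear(8 * 4 * 4, 16)           # hypothetical output size

frame_feats = frame_encoder(seq.unsqueeze(1))        # (30, 8, 4, 4)
fused = fc(frame_feats.mean(dim=0).reshape(1, -1))   # (1, 16)

print(gei.shape, fused.shape)
```

Image-level fusion collapses time before any learning happens, so the network sees one image per sequence; feature-level fusion lets the network process each frame first and merge afterwards.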

3.2 Soft biometrics

Using the same framework as gait recognition, the paper predicts age, gender, and BMI, and trains the three tasks with multi-task learning.

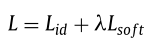

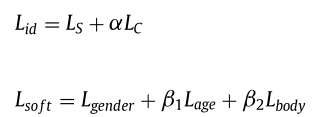
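A multi-task setup of this kind can be sketched as three small heads on top of a shared feature vector. Everything specific here is an assumption made for illustration: the 512-D feature size, treating age and BMI as regression with an L1 loss, gender as 2-way classification, the 0/1 gender coding, and the equal task weights. The notes do not give the paper's actual heads or losses.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SoftBiometricHeads(nn.Module):
    """Hypothetical task heads sharing one gait feature vector."""

    def __init__(self, feat_dim=512):
        super().__init__()
        self.age = nn.Linear(feat_dim, 1)     # assumed: regression
        self.gender = nn.Linear(feat_dim, 2)  # assumed: 2-way classification
        self.bmi = nn.Linear(feat_dim, 1)     # assumed: regression

    def forward(self, feat):
        return self.age(feat).squeeze(1), self.gender(feat), self.bmi(feat).squeeze(1)


def multitask_loss(preds, age, gender, bmi, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of per-task losses (the weights are illustrative)."""
    age_p, gender_p, bmi_p = preds
    return (weights[0] * F.l1_loss(age_p, age)
            + weights[1] * F.cross_entropy(gender_p, gender)
            + weights[2] * F.l1_loss(bmi_p, bmi))


torch.manual_seed(0)
feats = torch.randn(4, 512)  # stand-in for shared backbone features
heads = SoftBiometricHeads()
preds = heads(feats)
loss = multitask_loss(
    preds,
    age=torch.tensor([23.0, 35.0, 41.0, 60.0]),
    gender=torch.tensor([0, 1, 1, 0]),
    bmi=torch.tensor([21.5, 24.0, 27.3, 19.8]),
)
loss.backward()  # one backward pass updates all three heads
print(loss.item())
```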

3.3 Joint analysis

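The paper analyzes gait recognition and soft-biometric recognition jointly: the two tasks share the model's parameters, and each task gets its own loss function at the end of the network.

Explanation: soft biometrics shrink the search space and so improve identification performance, while identification supplies extra supervision for the soft-biometric tasks and so reduces overfitting.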
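The search-space intuition can be made concrete with an explicit filter. This only illustrates the intuition: in the framework described here the interaction happens implicitly through shared parameters, not through a filtering step. The gallery entries, the `identify` helper, and the gender labels are all hypothetical.

```python
import math

# Hypothetical gallery: one 2-D feature and a gender label per subject.
gallery = [
    {"id": "s1", "gender": "F", "feat": (0.0, 0.0)},
    {"id": "s2", "gender": "M", "feat": (1.0, 1.0)},
    {"id": "s3", "gender": "F", "feat": (1.2, 0.9)},
]


def identify(probe_feat, gallery, predicted_gender=None):
    """Nearest-neighbor match, optionally restricted to one predicted gender."""
    candidates = [g for g in gallery
                  if predicted_gender is None or g["gender"] == predicted_gender]
    best = min(candidates, key=lambda g: math.dist(probe_feat, g["feat"]))
    return best["id"], len(candidates)


probe = (1.0, 0.9)  # assume this probe actually belongs to s3
print(identify(probe, gallery))       # ('s2', 3)
print(identify(probe, gallery, "F"))  # ('s3', 2)
```

Without the soft-biometric cue the probe is matched to the slightly closer but wrong subject; restricting the candidates removes that confusion and leaves fewer entries to compare.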

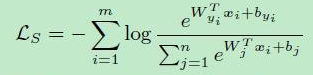

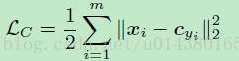

Joint loss function:

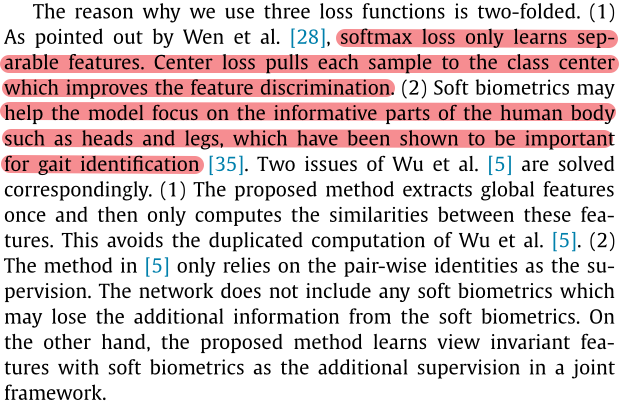

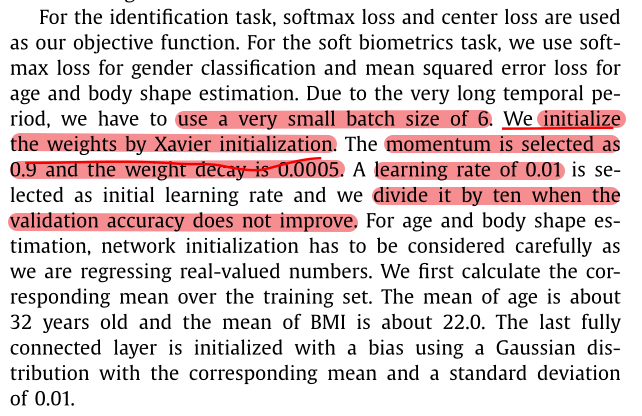

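For the identification task, softmax loss + center loss is used. The center loss reduces the sum of squared distances between each sample's feature in a batch and the center of that sample's class, which keeps intra-class distances small, while the softmax loss keeps the classes apart, so inter-class distances stay large.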
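A compact sketch of softmax loss + center loss follows. It is one common way to write center loss, not necessarily the paper's: the centers are treated as learnable parameters updated by the optimizer, and the weight `lam` and the sizes used in the example are assumed values.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CenterLoss(nn.Module):
    """0.5 * sum over the batch of squared distances to each sample's class center."""

    def __init__(self, num_classes, feat_dim):
        super().__init__()
        # Assumption: centers are learned by gradient descent.
        self.centers = nn.Parameter(torch.randn(num_classes, feat_dim))

    def forward(self, feats, labels):
        diff = feats - self.centers[labels]  # (batch, feat_dim)
        return 0.5 * diff.pow(2).sum()


def identification_loss(logits, feats, labels, center_loss, lam=0.01):
    """Softmax (cross-entropy) loss plus weighted center loss; lam is illustrative."""
    return F.cross_entropy(logits, labels) + lam * center_loss(feats, labels)


torch.manual_seed(0)
num_ids, feat_dim = 10, 512               # hypothetical sizes
center_loss = CenterLoss(num_ids, feat_dim)
classifier = nn.Linear(feat_dim, num_ids)

feats = torch.randn(8, feat_dim, requires_grad=True)  # stand-in for network features
labels = torch.randint(0, num_ids, (8,))
loss = identification_loss(classifier(feats), feats, labels, center_loss)
loss.backward()  # gradients reach the features, the classifier, and the centers
print(loss.item())
```

The cross-entropy term pushes features of different identities toward separable regions, and the center term pulls each feature toward its own identity's center.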

4 Network

Code

    '''Basic blocks'''
    import torch.nn as nn
    from torch.nn import functional as F


    class BasicConv2d(nn.Module):
        """Conv2d (stride 1) followed by BatchNorm2d and ReLU."""

        def __init__(self, in_channels, out_channels, kernel_size, padding):
            super(BasicConv2d, self).__init__()
            self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, padding=padding, stride=1)
            self.bn = nn.BatchNorm2d(out_channels)

        def forward(self, x):
            x = self.conv(x)
            x = self.bn(x)
            return F.relu(x, inplace=True)


    class SetBlock(nn.Module):
        """Apply a 2D block to every frame of a sequence tensor (n, s, c, h, w)."""

        def __init__(self, forward_block):
            super(SetBlock, self).__init__()
            self.forward_block = forward_block

        def forward(self, x):
            n, s, c, h, w = x.size()
            # Fold the sequence dimension into the batch dimension
            x = self.forward_block(x.view(-1, c, h, w))
            _, c, h, w = x.size()
            # Restore the (batch, sequence) layout
            return x.view(n, s, c, h, w)


    class PoolingBlock(nn.Module):
        """2x2 max pooling applied to every frame of a sequence tensor."""

        def __init__(self):
            super(PoolingBlock, self).__init__()
            self.pool2d = nn.MaxPool2d(2)

        def forward(self, x):
            n, s, c, h, w = x.size()
            x = x.view(-1, c, h, w)
            x = self.pool2d(x)
            _, c, h, w = x.size()
            return x.view(n, s, c, h, w)


    def conv3x3(in_planes, out_planes, stride=1):
        """3x3 convolution with padding"""
        return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                         padding=1, bias=False)


    def conv1x1(in_planes, out_planes, stride=1):
        """1x1 convolution"""
        return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)


    class BasicBlock(nn.Module):
        """ResNet-style residual block: two 3x3 convolutions plus a skip connection."""
        expansion = 1

        def __init__(self, inplanes, planes, stride=1, downsample=None):
            super(BasicBlock, self).__init__()
            self.conv1 = conv3x3(inplanes, planes, stride)
            self.bn1 = nn.BatchNorm2d(planes)
            self.relu = nn.ReLU(inplace=True)
            self.conv2 = conv3x3(planes, planes)
            self.bn2 = nn.BatchNorm2d(planes)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            identity = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            # Match the shape of the skip path when a downsample module is given
            if self.downsample is not None:
                identity = self.downsample(x)

            out += identity
            out = self.relu(out)
            return out
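The reshape used by `SetBlock` and `PoolingBlock` can be checked in isolation. The sketch below uses a standalone helper, `apply_per_frame` (a hypothetical name that mirrors the `SetBlock.forward` logic), and compares it with an explicit loop over frames for a plain convolution:

```python
import torch
import torch.nn as nn


def apply_per_frame(layer, x):
    """Run a 2D layer on every frame of x with shape (n, s, c, h, w)."""
    n, s, c, h, w = x.size()
    y = layer(x.reshape(n * s, c, h, w))  # frames become extra batch entries
    return y.reshape(n, s, *y.shape[1:])  # back to (n, s, c', h', w')


torch.manual_seed(0)
conv = nn.Conv2d(1, 4, kernel_size=3, padding=1)
x = torch.randn(2, 5, 1, 8, 8)  # 2 sequences of 5 single-channel frames

folded = apply_per_frame(conv, x)
looped = torch.stack([conv(x[:, t]) for t in range(x.size(1))], dim=1)

print(folded.shape)
print(torch.allclose(folded, looped, atol=1e-5))
```

For a convolution the two results agree, and the folded version needs a single forward call. With `BatchNorm2d` in training mode the folded version computes its statistics over all frames of all sequences at once, so it is not identical to normalizing each frame index separately.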

    '''Network for GEI input'''
    import torch.nn as nn

    from model.network.Basic_Blocks import BasicConv2d, BasicBlock


    class GEINet(nn.Module):
        def __init__(self):
            super(GEINet, self).__init__()

            # Conv
            _in_channel = 1
            _conv_channel = [64, 128, 256, 512]
            self.conv_layer1 = BasicConv2d(_in_channel, _conv_channel[0], 3, 1)
            self.conv_layer2 = BasicConv2d(_conv_channel[0], _conv_channel[0], 3, 1)
            self.conv_layer3 = BasicConv2d(_conv_channel[0], _conv_channel[1], 3, 1)
            self.conv_layer4 = BasicConv2d(_conv_channel[1], _conv_channel[2], 3, 1)
            self.conv_layer5 = BasicConv2d(_conv_channel[2], _conv_channel[3], 3, 1)

            # ResBlocks
            _res_channel = [64, 128, 256, 512]
            self.resblock1 = self._make_layer(BasicBlock, _res_channel[0], _res_channel[0], 1)
            self.resblock2 = self._make_layer(BasicBlock, _res_channel[1], _res_channel[1], 2)
            self.resblock3 = self._make_layer(BasicBlock, _res_channel[2], _res_channel[2], 5)
            self.resblock4 = self._make_layer(BasicBlock, _res_channel[3], _res_channel[3], 3)

            # Pooling
            self.pooling = nn.MaxPool2d(2)

            # Linear layer
            self.linear_layer1 = nn.Linear(512 * 4 * 4, 512)

            # Weight initialization
            for m in self.modules():
                if isinstance(m, (nn.Conv2d, nn.Conv1d)):
                    nn.init.xavier_uniform_(m.weight.data)
                elif isinstance(m, nn.Linear):
                    nn.init.xavier_uniform_(m.weight.data)
                    # constant_ replaces the deprecated nn.init.constant
                    nn.init.constant_(m.bias.data, 0.0)
                elif isinstance(m, (nn.BatchNorm2d, nn.BatchNorm1d)):
                    nn.init.normal_(m.weight.data, 1.0, 0.02)
                    nn.init.constant_(m.bias.data, 0.0)

        def _make_layer(self, block, inplanes, planes, blocks):
            """Stack `blocks` residual blocks with identical channel counts."""
            layers = []
            for _ in range(0, blocks):
                layers.append(block(inplanes, planes))
            return nn.Sequential(*layers)

        def forward(self, x):
            # Shape comments assume a batch of 8 GEIs of size 64x64: (8, 1, 64, 64)
            x = self.conv_layer1(x)  # (8, 64, 64, 64)
            x = self.conv_layer2(x)  # (8, 64, 64, 64)
            x = self.pooling(x)      # (8, 64, 32, 32)
            x = self.resblock1(x)    # (8, 64, 32, 32)

            x = self.conv_layer3(x)  # (8, 128, 32, 32)
            x = self.pooling(x)      # (8, 128, 16, 16)
            x = self.resblock2(x)    # (8, 128, 16, 16)

            x = self.conv_layer4(x)  # (8, 256, 16, 16)
            x = self.pooling(x)      # (8, 256, 8, 8)
            x = self.resblock3(x)    # (8, 256, 8, 8)

            x = self.conv_layer5(x)  # (8, 512, 8, 8)
            x = self.pooling(x)      # (8, 512, 4, 4)
            x = self.resblock4(x)    # (8, 512, 4, 4)

            x = x.reshape(x.shape[0], 512 * 4 * 4)  # (8, 512 * 4 * 4)
            x = self.linear_layer1(x)               # (8, 512)
            return x

    '''Network for sequence input'''
    import torch.nn as nn

    from model.network.Basic_Blocks import BasicConv2d, SetBlock, PoolingBlock, BasicBlock


    # Note: the class keeps the name GEINet from the GEI version,
    # but this variant takes silhouette sequences of shape (n, s, h, w).
    class GEINet(nn.Module):
        def __init__(self):
            super(GEINet, self).__init__()

            # Conv (each layer is wrapped in SetBlock so it runs on every frame)
            _in_channel = 1
            _conv_channel = [64, 128, 256, 512]
            self.conv_layer1 = SetBlock(BasicConv2d(_in_channel, _conv_channel[0], 3, 1))
            self.conv_layer2 = SetBlock(BasicConv2d(_conv_channel[0], _conv_channel[0], 3, 1))
            self.conv_layer3 = SetBlock(BasicConv2d(_conv_channel[0], _conv_channel[1], 3, 1))
            self.conv_layer4 = SetBlock(BasicConv2d(_conv_channel[1], _conv_channel[2], 3, 1))
            self.conv_layer5 = SetBlock(BasicConv2d(_conv_channel[2], _conv_channel[3], 3, 1))

            # ResBlocks
            _res_channel = [64, 128, 256, 512]
            self.resblock1 = SetBlock(self._make_layer(BasicBlock, _res_channel[0], _res_channel[0], 1))
            self.resblock2 = SetBlock(self._make_layer(BasicBlock, _res_channel[1], _res_channel[1], 2))
            self.resblock3 = SetBlock(self._make_layer(BasicBlock, _res_channel[2], _res_channel[2], 5))
            self.resblock4 = SetBlock(self._make_layer(BasicBlock, _res_channel[3], _res_channel[3], 3))

            # Pooling
            self.pooling = PoolingBlock()

            # Linear layer
            self.linear_layer1 = nn.Linear(512 * 4 * 4, 512)

            # Weight initialization
            for m in self.modules():
                if isinstance(m, (nn.Conv2d, nn.Conv1d)):
                    nn.init.xavier_uniform_(m.weight.data)
                elif isinstance(m, nn.Linear):
                    nn.init.xavier_uniform_(m.weight.data)
                    # constant_ replaces the deprecated nn.init.constant
                    nn.init.constant_(m.bias.data, 0.0)
                elif isinstance(m, (nn.BatchNorm2d, nn.BatchNorm1d)):
                    nn.init.normal_(m.weight.data, 1.0, 0.02)
                    nn.init.constant_(m.bias.data, 0.0)

        def _make_layer(self, block, inplanes, planes, blocks):
            """Stack `blocks` residual blocks with identical channel counts."""
            layers = []
            for _ in range(0, blocks):
                layers.append(block(inplanes, planes))
            return nn.Sequential(*layers)

        def forward(self, x):
            # Shape comments assume 8 sequences of 30 frames of size 64x64: (8, 30, 64, 64)
            x = x.unsqueeze(2)       # (8, 30, 1, 64, 64)

            x = self.conv_layer1(x)  # (8, 30, 64, 64, 64)
            x = self.conv_layer2(x)  # (8, 30, 64, 64, 64)
            x = self.pooling(x)      # (8, 30, 64, 32, 32)
            x = self.resblock1(x)    # (8, 30, 64, 32, 32)

            x = self.conv_layer3(x)  # (8, 30, 128, 32, 32)
            x = self.pooling(x)      # (8, 30, 128, 16, 16)
            x = self.resblock2(x)    # (8, 30, 128, 16, 16)

            x = self.conv_layer4(x)  # (8, 30, 256, 16, 16)
            x = self.pooling(x)      # (8, 30, 256, 8, 8)
            x = self.resblock3(x)    # (8, 30, 256, 8, 8)

            x = self.conv_layer5(x)  # (8, 30, 512, 8, 8)
            x = self.pooling(x)      # (8, 30, 512, 4, 4)
            x = self.resblock4(x)    # (8, 30, 512, 4, 4)

            # Temporal fusion: average the per-frame feature maps over the sequence
            x = x.mean(1)                           # (8, 512, 4, 4)
            x = x.reshape(x.shape[0], 512 * 4 * 4)  # (8, 512 * 4 * 4)
            x = self.linear_layer1(x)               # (8, 512)
            return x
