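Transfer Learning: Official Tutorial

Translated and adapted from: http://pytorch.org/tutorials/beginner/transfer_learning_tutorial.html#training-the-model

Prerequisite reading: PyTorch Deep Learning: A 60-Minute Introduction (Translation), Zhihu column

In practice, very few people train an entire convolutional network from scratch (with randomly initialized parameters), because it is hard to obtain enough training images and the network overfits easily. Instead, it is common to pretrain a ConvNet on a very large dataset (for example ImageNet, which contains 1.2 million images in 1000 categories) and then use that ConvNet either as an initialization or as a fixed feature extractor.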

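There are two transfer learning scenarios:

Finetuning the convnet:

Instead of random initialization, we initialize the network with the weights of a pretrained network. The rest of the training proceeds as usual. (This approach is usually called finetuning.)

ConvNet as fixed feature extractor:

We freeze the weights of the whole network except the final fully connected layer. That layer is replaced with a new, randomly initialized one, and only its parameters are updated during training. (In effect, the pretrained network serves as a feature extractor.)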
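The practical difference between the two scenarios is which parameters remain trainable. The following is a minimal sketch of that difference, assuming a tiny stand-in network built by a hypothetical helper `make_backbone` in place of a real pretrained ConvNet, so that nothing needs to be downloaded:

```python
import torch.nn as nn

def make_backbone():
    # Hypothetical stand-in for a pretrained network: one feature layer
    # followed by a final fully connected layer.
    return nn.Sequential(nn.Linear(8, 4), nn.ReLU(), nn.Linear(4, 3))

def count_trainable(model):
    # Number of scalar parameters that will receive gradient updates.
    return sum(p.numel() for p in model.parameters() if p.requires_grad)

# Scenario 1, finetuning: replace the final layer, keep everything trainable.
finetune = make_backbone()
finetune[2] = nn.Linear(4, 2)

# Scenario 2, fixed feature extractor: freeze everything first, then add a
# new final layer (freshly constructed modules are trainable by default).
extractor = make_backbone()
for p in extractor.parameters():
    p.requires_grad = False
extractor[2] = nn.Linear(4, 2)

n_finetune = count_trainable(finetune)    # (8*4 + 4) + (4*2 + 2) = 46
n_extractor = count_trainable(extractor)  # 4*2 + 2 = 10
print(n_finetune, n_extractor)
```

With a real network such as ResNet-18 the gap is far larger, since the final layer holds only a small fraction of all parameters.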

Required packages:

    # License: BSD
    # Author: Sasank Chilamkurthy

    from __future__ import print_function, division

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.autograd import Variable
    import numpy as np
    import torchvision
    from torchvision import datasets, models, transforms
    import matplotlib.pyplot as plt
    import time
    import copy
    import os

    plt.ion()   # interactive mode

Loading the data

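We use the torchvision and torch.utils.data packages to load the data.

The problem to solve is training a model that classifies ants and bees. We have about 120 training images each for ants and bees, and 75 validation images for each class. This would normally be a very small dataset if we were training from scratch, so we use transfer learning to get better results. (The dataset is a very small subset of ImageNet.)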
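`datasets.ImageFolder`, which the loading code in this tutorial relies on, takes the class of each image from the name of the subdirectory it sits in and numbers the classes in sorted order of those names. The sketch below mimics that convention using only the standard library. It is not torchvision's implementation, and the folder names `ants` and `bees` and the file name are assumptions made for illustration:

```python
import os
import tempfile

def find_classes(root):
    # Class names are the subdirectory names, sorted; the index is the position.
    classes = sorted(d for d in os.listdir(root)
                     if os.path.isdir(os.path.join(root, d)))
    class_to_idx = {name: i for i, name in enumerate(classes)}
    return classes, class_to_idx

with tempfile.TemporaryDirectory() as data_dir:
    # Assumed layout: <data_dir>/train/<class name>/<image files>
    for cls in ['bees', 'ants']:
        cls_dir = os.path.join(data_dir, 'train', cls)
        os.makedirs(cls_dir)
        open(os.path.join(cls_dir, 'example.jpg'), 'w').close()

    classes, class_to_idx = find_classes(os.path.join(data_dir, 'train'))

print(classes)
print(class_to_idx)
```

This is why the extracted dataset needs separate `train` and `val` folders, each with one subfolder per class.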

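Download the dataset from the link given in the original tutorial and extract it into the project directory. (Data augmentation and normalization are set up below.)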

    # Data augmentation and normalization for training
    # Just normalization for validation
    data_transforms = {
        'train': transforms.Compose([
            # Called RandomSizedCrop in early torchvision releases
            transforms.RandomResizedCrop(224),
            transforms.RandomHorizontalFlip(),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
        'val': transforms.Compose([
            # Called Scale in early torchvision releases
            transforms.Resize(256),
            transforms.CenterCrop(224),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
    }

    data_dir = 'hymenoptera_data'
    dsets = {x: datasets.ImageFolder(os.path.join(data_dir, x), data_transforms[x])
             for x in ['train', 'val']}
    dset_loaders = {x: torch.utils.data.DataLoader(dsets[x], batch_size=4,
                                                   shuffle=True, num_workers=4)
                    for x in ['train', 'val']}
    dset_sizes = {x: len(dsets[x]) for x in ['train', 'val']}
    dset_classes = dsets['train'].classes

    use_gpu = torch.cuda.is_available()

Visualization

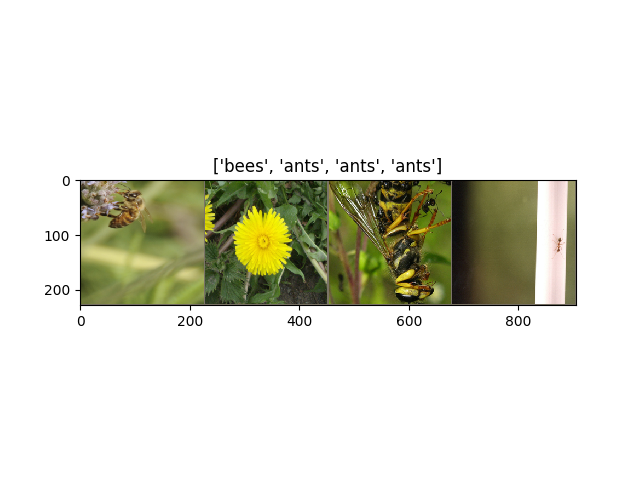

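Let's visualize a few training images to get a better feel for the data augmentation.

    def imshow(inp, title=None):
        """Imshow for Tensor."""
        # Convert from (channels, height, width) to (height, width, channels)
        inp = inp.numpy().transpose((1, 2, 0))
        mean = np.array([0.485, 0.456, 0.406])
        std = np.array([0.229, 0.224, 0.225])
        # Undo the normalization applied in data_transforms
        inp = std * inp + mean
        # Keep values in the [0, 1] range that plt.imshow expects for floats
        inp = np.clip(inp, 0, 1)
        plt.imshow(inp)
        if title is not None:
            plt.title(title)
        plt.pause(0.001)  # pause a bit so that plots are updated


    # Get a batch of training data
    inputs, classes = next(iter(dset_loaders['train']))

    # Make a grid from batch
    out = torchvision.utils.make_grid(inputs)

    imshow(out, title=[dset_classes[x] for x in classes])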
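`transforms.Normalize` computes `(x - mean) / std` for each channel, and `imshow` above reverses it with `std * x + mean` before plotting. A small standard-library sketch of that round trip for a single RGB pixel (the pixel values are made up for illustration):

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]

def normalize(pixel):
    # Per-channel (x - mean) / std, as applied to the input tensors
    return [(x - m) / s for x, m, s in zip(pixel, MEAN, STD)]

def denormalize(pixel):
    # Per-channel std * x + mean, as applied before plotting
    return [s * x + m for x, m, s in zip(pixel, MEAN, STD)]

pixel = [0.5, 0.25, 1.0]          # illustrative RGB values in [0, 1]
normalized = normalize(pixel)
restored = denormalize(normalized)
print(normalized)
print(restored)
```

Normalized values can fall well outside the [0, 1] range, which is why they have to be mapped back before being displayed as an image.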

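Training the model

Now let's write a general function to train a model. Here we will illustrate:

1. Scheduling the learning rate

In the code below, lr_scheduler(optimizer, epoch) is a function that modifies the optimizer so that the learning rate follows the schedule we specify.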
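The training function that follows also keeps the best-performing model, and it does so with `copy.deepcopy` rather than a plain assignment. Assignment only creates a second name for the same object, so later updates would overwrite the saved "best" state. A sketch with a plain dict standing in for a model's weights (the dict and its values are illustrative, not PyTorch objects):

```python
import copy

weights = {'fc': [0.1, 0.2]}       # stand-in for a model's parameters

alias = weights                    # same object under a second name
snapshot = copy.deepcopy(weights)  # independent copy

weights['fc'][0] = 9.9             # a later "training step" changes the model

print(alias['fc'][0])     # follows the change
print(snapshot['fc'][0])  # still the saved value
```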

    def train_model(model, criterion, optimizer, lr_scheduler, num_epochs=25):
        since = time.time()

        best_model = model
        best_acc = 0.0

        for epoch in range(num_epochs):
            print('Epoch {}/{}'.format(epoch, num_epochs - 1))
            print('-' * 10)

            # Each epoch has a training and validation phase
            for phase in ['train', 'val']:
                if phase == 'train':
                    optimizer = lr_scheduler(optimizer, epoch)
                    model.train(True)  # Set model to training mode
                else:
                    model.train(False)  # Set model to evaluate mode

                running_loss = 0.0
                running_corrects = 0

                # Iterate over data.
                for data in dset_loaders[phase]:
                    # get the inputs
                    inputs, labels = data

                    # wrap them in Variable
                    if use_gpu:
                        inputs, labels = Variable(inputs.cuda()), \
                            Variable(labels.cuda())
                    else:
                        inputs, labels = Variable(inputs), Variable(labels)

                    # zero the parameter gradients
                    optimizer.zero_grad()

                    # forward
                    outputs = model(inputs)
                    _, preds = torch.max(outputs.data, 1)
                    loss = criterion(outputs, labels)

                    # backward + optimize only if in training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                    # statistics
                    # .item() replaces the older loss.data[0], which fails on
                    # 0-dim tensors in later PyTorch releases
                    running_loss += loss.item()
                    running_corrects += torch.sum(preds == labels.data).item()

                epoch_loss = running_loss / dset_sizes[phase]
                epoch_acc = running_corrects / dset_sizes[phase]

                print('{} Loss: {:.4f} Acc: {:.4f}'.format(
                    phase, epoch_loss, epoch_acc))

                # deep copy the model
                if phase == 'val' and epoch_acc > best_acc:
                    best_acc = epoch_acc
                    best_model = copy.deepcopy(model)

            print()

        time_elapsed = time.time() - since
        print('Training complete in {:.0f}m {:.0f}s'.format(
            time_elapsed // 60, time_elapsed % 60))
        print('Best val Acc: {:4f}'.format(best_acc))
        return best_model

Learning rate schedule

Let's create the learning rate schedule. We decay the learning rate exponentially, multiplying it by 0.1 every few epochs.
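The decay rule can be worked out by hand before wiring it into an optimizer. The sketch below assumes the same defaults as the scheduler in this tutorial (initial rate 0.001, a factor of 0.1, a step every 7 epochs); the helper name `step_decay_lr` is made up for illustration:

```python
def step_decay_lr(epoch, init_lr=0.001, lr_decay_epoch=7):
    # Multiply the initial rate by 0.1 once per completed block of epochs.
    return init_lr * (0.1 ** (epoch // lr_decay_epoch))

# The rate stays flat inside each block of 7 epochs and drops at 7, 14, 21.
for epoch in [0, 6, 7, 13, 14, 21, 24]:
    print(epoch, step_decay_lr(epoch))

schedule = [step_decay_lr(e) for e in range(25)]
```

Because the value is computed in floating point, printed rates can carry rounding noise in the last digits, as in the "LR is set to 1.0000000000000003e-05" line of the training log.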

def exp_lr_scheduler(optimizer, epoch, init_lr=0.001, lr_decay_epoch=7):

"""Decay learning rate by a factor of 0.1 every lr_decay_epoch epochs."""

lr = init_lr * (0.1**(epoch // lr_decay_epoch))

if epoch % lr_decay_epoch == 0:

print('LR is set to {}'.format(lr))

for param_group in optimizer.param_groups:

param_group['lr'] = lr

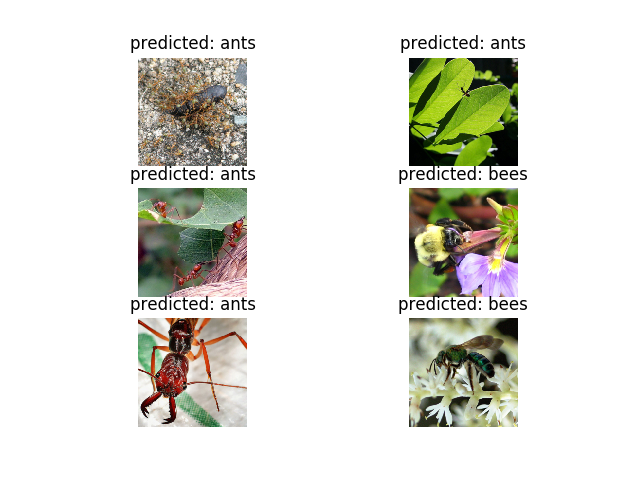

return optimizer可视化模型预测

    def visualize_model(model, num_images=6):
        images_so_far = 0
        fig = plt.figure()

        for i, data in enumerate(dset_loaders['val']):
            inputs, labels = data
            if use_gpu:
                inputs, labels = Variable(inputs.cuda()), Variable(labels.cuda())
            else:
                inputs, labels = Variable(inputs), Variable(labels)

            outputs = model(inputs)
            _, preds = torch.max(outputs.data, 1)

            for j in range(inputs.size()[0]):
                images_so_far += 1
                ax = plt.subplot(num_images//2, 2, images_so_far)
                ax.axis('off')
                # int() turns the predicted index into a plain Python integer
                ax.set_title('predicted: {}'.format(dset_classes[int(preds[j])]))
                imshow(inputs.cpu().data[j])

                # Stop once the requested number of images has been shown
                if images_so_far == num_images:
                    return

Finetuning the convnet

Load a pretrained model and reset the final fully connected layer.

    model_ft = models.resnet18(pretrained=True)
    num_ftrs = model_ft.fc.in_features
    # New final layer with 2 outputs (ants and bees)
    model_ft.fc = nn.Linear(num_ftrs, 2)

    if use_gpu:
        model_ft = model_ft.cuda()

    criterion = nn.CrossEntropyLoss()

    # Observe that all parameters are being optimized
    optimizer_ft = optim.SGD(model_ft.parameters(), lr=0.001, momentum=0.9)

Training and evaluation

    model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler,
                           num_epochs=25)

Output:

Epoch 0/24

----------

LR is set to 0.001

train Loss: 0.1504 Acc: 0.6762

val Loss: 0.0756 Acc: 0.8627

Epoch 1/24

----------

train Loss: 0.1742 Acc: 0.7664

val Loss: 0.1215 Acc: 0.8301

Epoch 2/24

----------

train Loss: 0.1583 Acc: 0.7500

val Loss: 0.1291 Acc: 0.8039

Epoch 3/24

----------

train Loss: 0.1580 Acc: 0.7541

val Loss: 0.0714 Acc: 0.8824

Epoch 4/24

----------

train Loss: 0.1135 Acc: 0.8115

val Loss: 0.1669 Acc: 0.7778

Epoch 5/24

----------

train Loss: 0.1102 Acc: 0.8279

val Loss: 0.0687 Acc: 0.9020

Epoch 6/24

----------

train Loss: 0.0814 Acc: 0.8730

val Loss: 0.0660 Acc: 0.9281

Epoch 7/24

----------

LR is set to 0.0001

train Loss: 0.1015 Acc: 0.8238

val Loss: 0.0612 Acc: 0.9281

Epoch 8/24

----------

train Loss: 0.0848 Acc: 0.8525

val Loss: 0.0614 Acc: 0.9281

Epoch 9/24

----------

train Loss: 0.1072 Acc: 0.8033

val Loss: 0.0620 Acc: 0.9150

Epoch 10/24

----------

train Loss: 0.0570 Acc: 0.9139

val Loss: 0.0616 Acc: 0.9216

Epoch 11/24

----------

train Loss: 0.0781 Acc: 0.8689

val Loss: 0.0657 Acc: 0.9085

Epoch 12/24

----------

train Loss: 0.0800 Acc: 0.8525

val Loss: 0.0595 Acc: 0.9020

Epoch 13/24

----------

train Loss: 0.0724 Acc: 0.8689

val Loss: 0.0536 Acc: 0.9150

Epoch 14/24

----------

LR is set to 1.0000000000000003e-05

train Loss: 0.0778 Acc: 0.8770

val Loss: 0.0519 Acc: 0.9216

Epoch 15/24

----------

train Loss: 0.0574 Acc: 0.9057

val Loss: 0.0577 Acc: 0.9216

Epoch 16/24

----------

train Loss: 0.0701 Acc: 0.8934

val Loss: 0.0631 Acc: 0.9216

Epoch 17/24

----------

train Loss: 0.0970 Acc: 0.8361

val Loss: 0.0536 Acc: 0.9216

Epoch 18/24

----------

train Loss: 0.0639 Acc: 0.8975

val Loss: 0.0655 Acc: 0.9150

Epoch 19/24

----------

train Loss: 0.0699 Acc: 0.9016

val Loss: 0.0494 Acc: 0.9281

Epoch 20/24

----------

train Loss: 0.0765 Acc: 0.8648

val Loss: 0.0540 Acc: 0.9346

Epoch 21/24

----------

LR is set to 1.0000000000000002e-06

train Loss: 0.0854 Acc: 0.8484

val Loss: 0.0562 Acc: 0.9216

Epoch 22/24

----------

train Loss: 0.0822 Acc: 0.8852

val Loss: 0.0513 Acc: 0.9216

Epoch 23/24

----------

train Loss: 0.0561 Acc: 0.9139

val Loss: 0.0634 Acc: 0.9085

Epoch 24/24

----------

train Loss: 0.0645 Acc: 0.8975

val Loss: 0.0507 Acc: 0.9281

Training complete in 1m 3s

Best val Acc: 0.934641

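Visualization:

    visualize_model(model_ft)

Training the network as a fixed feature extractor

Here we need to freeze all of the network except the final layer. Setting requires_grad = False freezes the parameters, so that no gradients are computed for them in the backward pass and they are never updated.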

    model_conv = torchvision.models.resnet18(pretrained=True)
    for param in model_conv.parameters():
        param.requires_grad = False

    # Parameters of newly constructed modules have requires_grad=True by default
    num_ftrs = model_conv.fc.in_features
    model_conv.fc = nn.Linear(num_ftrs, 2)

    if use_gpu:
        model_conv = model_conv.cuda()

    criterion = nn.CrossEntropyLoss()

    # Observe that only parameters of final layer are being optimized as
    # opposed to before.
    optimizer_conv = optim.SGD(model_conv.fc.parameters(), lr=0.001, momentum=0.9)

Training and evaluation

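On CPU this takes about half the time of the previous scenario, because gradients do not need to be computed for most of the network. The forward pass, however, still has to be computed in full.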
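The saving comes from autograd skipping frozen parameters during the backward pass. The sketch below checks this on a tiny stand-in network (an assumption made so that no pretrained weights need downloading): after one backward pass, the frozen layer has no gradient while the new final layer does.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for a pretrained network.
model = nn.Sequential(nn.Linear(8, 4), nn.ReLU(), nn.Linear(4, 3))
for p in model.parameters():
    p.requires_grad = False
model[2] = nn.Linear(4, 2)   # new final layer, trainable by default

inputs = torch.randn(5, 8)               # random batch of 5 samples
labels = torch.tensor([0, 1, 0, 1, 1])   # made-up class labels

# The forward pass still runs through every layer.
loss = nn.CrossEntropyLoss()(model(inputs), labels)
loss.backward()

frozen_has_grad = model[0].weight.grad is not None
head_has_grad = model[2].weight.grad is not None
print(frozen_has_grad, head_has_grad)
```

Only the final layer receives a gradient, which matches passing just `model_conv.fc.parameters()` to the optimizer above.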

    model_conv = train_model(model_conv, criterion, optimizer_conv,
                             exp_lr_scheduler, num_epochs=25)

Output:

Epoch 0/24

----------

LR is set to 0.001

train Loss: 0.1510 Acc: 0.6762

val Loss: 0.0555 Acc: 0.9281

Epoch 1/24

----------

train Loss: 0.0988 Acc: 0.8074

val Loss: 0.0454 Acc: 0.9412

Epoch 2/24

----------

train Loss: 0.1206 Acc: 0.7828

val Loss: 0.0967 Acc: 0.9020

Epoch 3/24

----------

train Loss: 0.1620 Acc: 0.7377

val Loss: 0.1390 Acc: 0.8039

Epoch 4/24

----------

train Loss: 0.1440 Acc: 0.7705

val Loss: 0.0607 Acc: 0.9216

Epoch 5/24

----------

train Loss: 0.1181 Acc: 0.7992

val Loss: 0.0762 Acc: 0.8758

Epoch 6/24

----------

train Loss: 0.1536 Acc: 0.7664

val Loss: 0.0454 Acc: 0.9608

Epoch 7/24

----------

LR is set to 0.0001

train Loss: 0.1109 Acc: 0.8074

val Loss: 0.0480 Acc: 0.9346

Epoch 8/24

----------

train Loss: 0.1045 Acc: 0.8115

val Loss: 0.0465 Acc: 0.9477

Epoch 9/24

----------

train Loss: 0.0973 Acc: 0.8238

val Loss: 0.0488 Acc: 0.9477

Epoch 10/24

----------

train Loss: 0.0723 Acc: 0.8730

val Loss: 0.0560 Acc: 0.9281

Epoch 11/24

----------

train Loss: 0.0867 Acc: 0.8525

val Loss: 0.0436 Acc: 0.9542

Epoch 12/24

----------

train Loss: 0.0941 Acc: 0.8443

val Loss: 0.0448 Acc: 0.9412

Epoch 13/24

----------

train Loss: 0.1037 Acc: 0.8074

val Loss: 0.0414 Acc: 0.9542

Epoch 14/24

----------

LR is set to 1.0000000000000003e-05

train Loss: 0.0874 Acc: 0.8320

val Loss: 0.0413 Acc: 0.9477

Epoch 15/24

----------

train Loss: 0.0893 Acc: 0.8484

val Loss: 0.0412 Acc: 0.9412

Epoch 16/24

----------

train Loss: 0.0585 Acc: 0.9098

val Loss: 0.0587 Acc: 0.9085

Epoch 17/24

----------

train Loss: 0.0708 Acc: 0.8770

val Loss: 0.0483 Acc: 0.9346

Epoch 18/24

----------

train Loss: 0.0915 Acc: 0.8361

val Loss: 0.0417 Acc: 0.9542

Epoch 19/24

----------

train Loss: 0.0751 Acc: 0.8648

val Loss: 0.0441 Acc: 0.9477

Epoch 20/24

----------

train Loss: 0.0717 Acc: 0.8852

val Loss: 0.0478 Acc: 0.9412

Epoch 21/24

----------

LR is set to 1.0000000000000002e-06

train Loss: 0.0865 Acc: 0.8279

val Loss: 0.0439 Acc: 0.9608

Epoch 22/24

----------

train Loss: 0.0764 Acc: 0.8443

val Loss: 0.0523 Acc: 0.9346

Epoch 23/24

----------

train Loss: 0.0790 Acc: 0.8648

val Loss: 0.0446 Acc: 0.9477

Epoch 24/24

----------

train Loss: 0.0850 Acc: 0.8566

val Loss: 0.0426 Acc: 0.9477

Training complete in 0m 37s

Best val Acc: 0.960784

Visualization:

    visualize_model(model_conv)

    plt.ioff()
    plt.show()
