Reproduction Guide

My environment: Ubuntu 20.04, CUDA 11.1, Python 3.8, torch==1.9.0, torchvision==0.10.0.

Then find the relevant section in the README and follow the steps described there.

Troubleshooting

Running

python grounded_sam_demo.py --config GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py --grounded_checkpoint groundingdino_swint_ogc.pth --sam_checkpoint sam_vit_h_4b8939.pth --input_image assets/demo1.jpg --output_dir "outputs" --box_threshold 0.3 --text_threshold 0.25 --text_prompt "bear" --device "cuda"

fails with the following error:

OSError: We couldn't connect to 'https://huggingface.co' to load this file, couldn't find it in the cached files and it looks like bert-base-uncased is not the path to a directory containing a file named config.json.

Checkout your internet connection or see how to run the library in offline mode at 'https://huggingface.co/docs/transformers/installation#offline-mode'.

Solution:

See the CSDN blog post 全网最好解决中国huggingface.co无法访问问题 ("The best fix for huggingface.co being unreachable from China").
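Fixes of this kind usually point the Hugging Face client at a mirror through the `HF_ENDPOINT` environment variable. The sketch below is an assumption about what that looks like here: the mirror URL `https://hf-mirror.com` is inferred from the host name in the timeout traceback further down, not quoted from the linked post, and `use_hf_mirror` is a made-up helper name. As far as I know the endpoint is read when `huggingface_hub` is first imported, so the variable has to be set before importing `transformers`.

```python
import os

# Assumed mirror URL, inferred from the host in the timeout traceback below.
DEFAULT_MIRROR = "https://hf-mirror.com"


def use_hf_mirror(endpoint: str = DEFAULT_MIRROR) -> str:
    """Point the Hugging Face client at a mirror by setting HF_ENDPOINT.

    Call this before importing transformers / huggingface_hub; setting the
    variable afterwards may have no effect in the current process.
    """
    os.environ["HF_ENDPOINT"] = endpoint.rstrip("/")
    return os.environ["HF_ENDPOINT"]


if __name__ == "__main__":
    print(use_hf_mirror())
```

Exporting the same variable in the shell before launching grounded_sam_demo.py should have the same effect and avoids touching the script.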

Next error: AttributeError: module 'torch' has no attribute 'frombuffer'

Solution: uninstall transformers and safetensors from the environment and reinstall them at transformers==4.29.2 and safetensors==0.3.0.
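The error most likely appears because torch 1.9.0 predates `torch.frombuffer` (added in torch 1.10, as far as I know), which newer library releases expect. A small sketch for confirming that the pinned versions are the ones actually installed; `check_pins`, `installed_version` and the `lookup` parameter are illustrative names, while `importlib.metadata.version` is standard library from Python 3.8 on.

```python
from importlib import metadata
from typing import Callable, Dict, Optional

# Versions reported to work in this post.
PINS: Dict[str, str] = {"transformers": "4.29.2", "safetensors": "0.3.0"}


def installed_version(name: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def check_pins(
    pins: Optional[Dict[str, str]] = None,
    lookup: Callable[[str], Optional[str]] = installed_version,
) -> Dict[str, str]:
    """Return {package: message} for every package that misses its pin."""
    problems: Dict[str, str] = {}
    for name, wanted in (PINS if pins is None else pins).items():
        found = lookup(name)
        if found != wanted:
            problems[name] = f"expected {wanted}, found {found or 'not installed'}"
    return problems


if __name__ == "__main__":
    # Prints one line per mismatching package; prints nothing if all pins match.
    for name, message in check_pins().items():
        print(f"{name}: {message}")
```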

Next error:

requests.exceptions.ReadTimeout: (ReadTimeoutError("HTTPSConnectionPool(host='cdn-lfs.hf-mirror.com', port=443): Read timed out. (read timeout=10)"), '(Request ID: 4935fe5f-696b-4709-b31a-9b242ff98d48)')

Solution: wait until the network connection improves, then try again.
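If retrying by hand gets tedious, the step that triggers the download can be wrapped in a retry loop. This is a generic sketch, not something the demo script provides: `retry` and all of its parameters are hypothetical. The default `retry_on=(OSError,)` should cover `requests.exceptions.ReadTimeout`, since `requests` exceptions derive from `OSError`.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(
    action: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 2.0,
    retry_on: Tuple[Type[BaseException], ...] = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `action` until it succeeds, doubling the wait after each failure."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

Here `action` would be a zero-argument callable around whatever loads the model; the `sleep` parameter exists only so the waiting can be replaced in tests.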

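Next error: the process was killed (the shell printed 已杀死, i.e. "Killed"), and no segmentation result was written to the output directory.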

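Solution: in my testing this happened because the model was too large (most likely the process ran out of memory), and switching to a smaller one fixed it. The official documentation uses the largest SAM model by default. The checkpoint files are listed below; b is the smallest and h is the largest.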

SAM:

vit_h: https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth

vit_l: https://dl.fbaipublicfiles.com/segment_anything/sam_vit_l_0b3195.pth

vit_b: https://dl.fbaipublicfiles.com/segment_anything/sam_vit_b_01ec64.pth

SAM-HQ:

vit_h: https://drive.google.com/file/d/1qobFYrI4eyIANfBSmYcGuWRaSIXfMOQ8/view?usp=sharing

vit_l: https://drive.google.com/file/d/1Uk17tDKX1YAKas5knI4y9ZJCo0lRVL0G/view?usp=sharing

vit_b: https://drive.google.com/file/d/11yExZLOve38kRZPfRx_MRxfIAKmfMY47/view?usp=sharing

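After switching models, remember to also update the following argument in grounded_sam_demo.py:

parser.add_argument(
    "--sam_version", type=str, default="vit_b", required=False, help="SAM ViT version: vit_b / vit_l / vit_h"
)

For example, to use the b model, change default="vit_h" to default="vit_b" (the snippet above shows the value after the change), then run: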

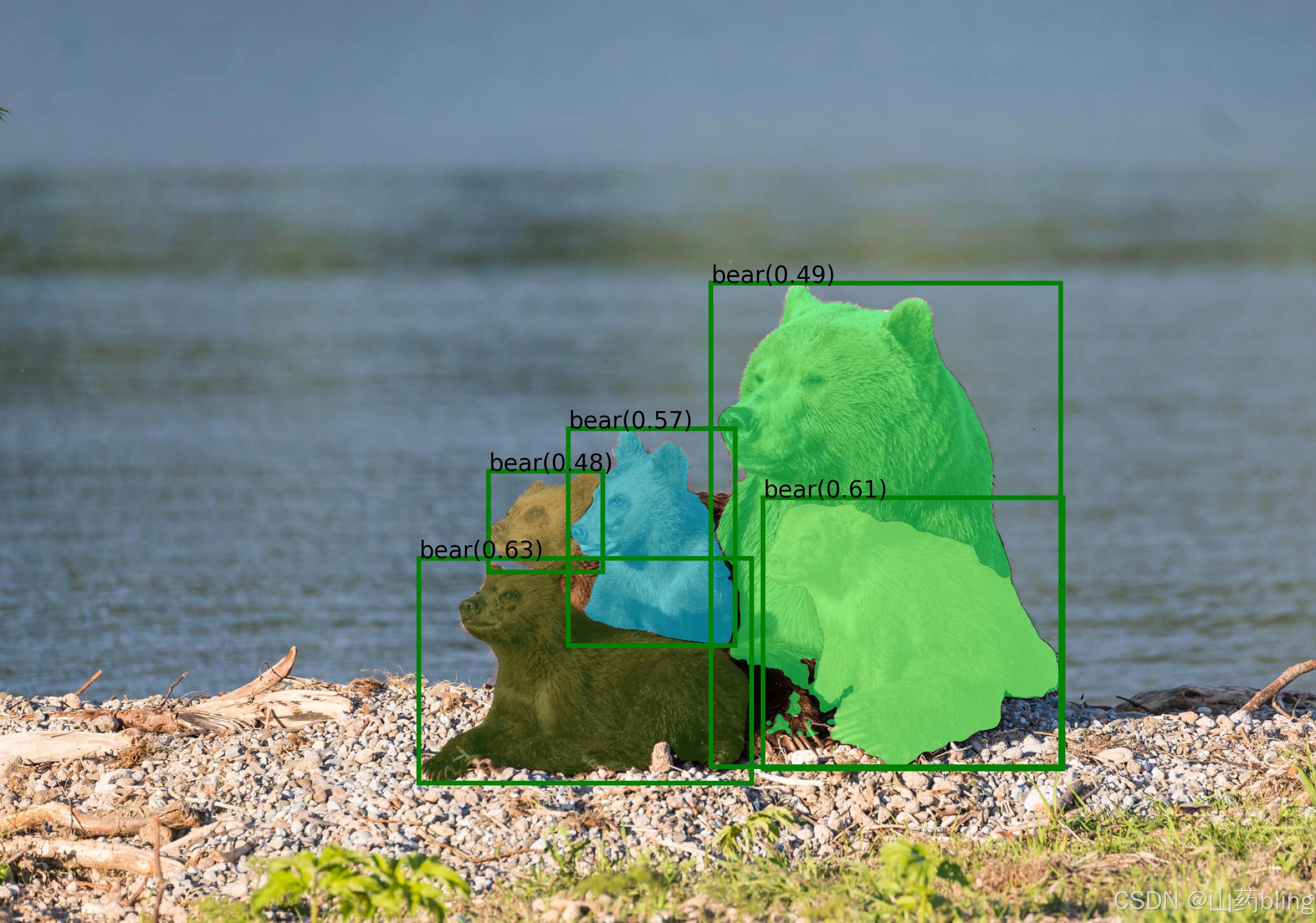

python grounded_sam_demo.py --config GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py --grounded_checkpoint groundingdino_swint_ogc.pth --sam_checkpoint sam_vit_b_01ec64.pth --input_image assets/demo1.jpg --output_dir "outputs" --box_threshold 0.3 --text_threshold 0.25 --text_prompt "bear" --device "cuda"

Finally, the results appear in the outputs folder.

There is also a mask.json file.
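Since `--sam_version` is an ordinary command-line argument, it can presumably also be passed explicitly instead of editing the default in the script. The sketch below infers the version from the checkpoint file name and assembles the argument list. `sam_version_from_checkpoint` and `build_command` are illustrative helpers, and the file-name pattern is assumed from the official SAM checkpoint names listed above.

```python
import os
import re
import shlex
import sys
from typing import List


def sam_version_from_checkpoint(path: str) -> str:
    """Infer vit_b / vit_l / vit_h from names like sam_vit_b_01ec64.pth."""
    match = re.search(r"vit_[blh]", os.path.basename(path))
    if match is None:
        raise ValueError(f"cannot infer SAM version from {path!r}")
    return match.group(0)


def build_command(sam_checkpoint: str, text_prompt: str = "bear") -> List[str]:
    """Build the demo invocation with --sam_version matching the checkpoint."""
    return [
        sys.executable, "grounded_sam_demo.py",
        "--config", "GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py",
        "--grounded_checkpoint", "groundingdino_swint_ogc.pth",
        "--sam_version", sam_version_from_checkpoint(sam_checkpoint),
        "--sam_checkpoint", sam_checkpoint,
        "--input_image", "assets/demo1.jpg",
        "--output_dir", "outputs",
        "--box_threshold", "0.3",
        "--text_threshold", "0.25",
        "--text_prompt", text_prompt,
        "--device", "cuda",
    ]


if __name__ == "__main__":
    # Print the command for inspection; pass the list to subprocess.run(...)
    # from the repository root to actually launch the demo.
    print(shlex.join(build_command("sam_vit_b_01ec64.pth")))
```

Keeping the version and the checkpoint in one place avoids the mismatch where a vit_b checkpoint is loaded with the vit_h default.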
