k-Nearest-Neighbor

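Algorithm outline:

- Compute the distance between every test image and every training image and store it in dists, where dists[i, j] is the distance between the i-th test point and the j-th training point.

- For the i-th test point, find the labels of its k nearest training images and store them in closest_y.

- Take the most frequent label in closest_y as y_pred[i], the predicted label of that test point.

- Compute the prediction accuracy on the test set.

- Use cross-validation to find the best value of k.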
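The first four steps can be sketched end to end in plain NumPy. This is a minimal illustration on a tiny hand-made dataset, not the cs231n KNearestNeighbor class; the function name knn_predict and the toy data are hypothetical and exist only for this example.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=1):
    """Hypothetical helper: predict labels for X_test with k-NN and L2 distance."""
    # dists[i, j] = L2 distance between test point i and training point j
    dists = np.sqrt(((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2))
    y_pred = np.zeros(X_test.shape[0], dtype=y_train.dtype)
    for i in range(X_test.shape[0]):
        # Labels of the k nearest training points
        closest_y = y_train[np.argsort(dists[i])[:k]]
        # Majority vote; on a tie, argmax picks the smaller label
        y_pred[i] = np.argmax(np.bincount(closest_y))
    return y_pred

# Toy data: two well-separated clusters labelled 0 and 1
X_train = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]])
y_train = np.array([0, 0, 1, 1])
X_test = np.array([[0.5, 0.5], [5.5, 5.0]])
y_test = np.array([0, 1])

y_pred = knn_predict(X_train, y_train, X_test, k=3)
accuracy = np.mean(y_pred == y_test)
print(y_pred, accuracy)
```

With k = 3 each test point sees two neighbours from its own cluster and one from the other, so the vote still recovers the correct label.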

knn.ipynb

import random

import numpy as np

from cs231n.data_utils import load_CIFAR10

import matplotlib.pyplot as plt

from past.builtins import xrange

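Python has two ways to import modules:

- import module1 [as alias1], module2 [as alias2], ...: imports whole modules.

- from module import member1 [as alias1], member2 [as alias2], ...: imports only the specified members of a module.

With the first form, members must be accessed with the module name or alias as a prefix; with the second form, members are used directly by their name or alias, with no prefix.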
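A small illustration of both forms, using the standard-library math module only because it is always available:

```python
# Form 1: import the whole module (here with an alias);
# members are accessed through the alias as a prefix.
import math as m

# Form 2: import specific members (optionally with an alias);
# members are used directly, with no prefix.
from math import sqrt as square_root, pi

print(m.sqrt(16.0))       # needs the "m." prefix
print(square_root(16.0))  # no prefix needed
print(pi == m.pi)         # both names refer to the same constant
```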

# Load the raw CIFAR-10 data.
cifar10_dir = 'cs231n/datasets/cifar-10-batches-py'

# Clean up variables to prevent loading the data multiple times (which may cause memory issues)
try:
    del X_train, y_train
    del X_test, y_test
    print('Clear previously loaded data.')
except NameError:
    # Nothing has been loaded yet
    pass

X_train, y_train, X_test, y_test = load_CIFAR10(cifar10_dir)

# As a sanity check, print the sizes of the training and test data
print('Training data shape: ', X_train.shape)
print('Training labels shape: ', y_train.shape)
print('Test data shape: ', X_test.shape)
print('Test labels shape: ', y_test.shape)

Output:

Training data shape: (50000, 32, 32, 3)

Training labels shape: (50000,)

Test data shape: (10000, 32, 32, 3)

Test labels shape: (10000,)

# Visualize some examples from the dataset.
# Show a few training images from each class.
classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']
num_classes = len(classes)
samples_per_class = 7
for y, cls in enumerate(classes):
    # Indices of all training images of class y, then a random sample of them
    idxs = np.flatnonzero(y_train == y)
    idxs = np.random.choice(idxs, samples_per_class, replace=False)
    for i, idx in enumerate(idxs):
        # Row i, column y of a samples_per_class x num_classes grid
        plt_idx = i * num_classes + y + 1
        plt.subplot(samples_per_class, num_classes, plt_idx)
        plt.imshow(X_train[idx].astype('uint8'))
        plt.axis('off')
        if i == 0:
            plt.title(cls)
plt.show()

Resulting plot:

# Subsample the data so that the code in this exercise runs more efficiently

num_training = 5000

mask = list(range(num_training))

X_train = X_train[mask]

y_train = y_train[mask]

num_test = 500

mask = list(range(num_test))

X_test = X_test[mask]

y_test = y_test[mask]

# Reshape the image data into rows: each image is flattened into one row vector

X_train = np.reshape(X_train, (X_train.shape[0], -1))

X_test = np.reshape(X_test, (X_test.shape[0], -1))

print(X_train.shape, X_test.shape)

(5000, 3072) (500, 3072)
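Each 32×32×3 image becomes a row of 32 × 32 × 3 = 3072 values; passing -1 lets NumPy infer that length. A quick check on a random array shaped like the CIFAR-10 data above (the array itself is made up):

```python
import numpy as np

# Fake batch shaped like the CIFAR-10 arrays: (N, 32, 32, 3)
images = np.random.rand(4, 32, 32, 3)

# Flatten every image into one row; -1 tells NumPy to infer 32*32*3 = 3072
rows = np.reshape(images, (images.shape[0], -1))
print(rows.shape)  # (4, 3072)

# Row i holds the values of image i in C (row-major) order
assert np.array_equal(rows[1], images[1].ravel())
```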

from cs231n.classifiers import KNearestNeighbor

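# Create a kNN classifier instance.
# Training a kNN classifier is a no-op:
# the classifier simply remembers the data and does no further processing.
classifier = KNearestNeighbor()
classifier.train(X_train, y_train)  # pass the data to the classifier ("training")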

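We now want to classify the test data with the kNN classifier. Recall that this process can be broken into two steps:

- First, compute the distances between all test examples and all training examples.

- Given these distances, for each test example find the k nearest examples and have them vote for the label.

Let's begin by computing the distance matrix between all training and test examples. For example, with Ntr training examples and Nte test examples, this stage should produce an Nte x Ntr matrix in which each element (i, j) is the distance between the i-th test example and the j-th training example.

First, open cs231n/classifiers/k_nearest_neighbor.py and implement the function compute_distances_two_loops, which uses a (very inefficient) double loop over all (test, train) pairs and computes the distance matrix one element at a time.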

k_nearest_neighbor.py

def compute_distances_two_loops(self, X):
    """
    Compute the distance between each test point in X and each training point
    in self.X_train using a nested loop over both the training data and the
    test data.

    Inputs:
    - X: A numpy array of shape (num_test, D) containing test data.

    Returns:
    - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
      is the distance between the i-th test point and the j-th training point.
    """
    num_test = X.shape[0]
    num_train = self.X_train.shape[0]
    dists = np.zeros((num_test, num_train))
    for i in range(num_test):
        for j in range(num_train):
            # L2 distance between the i-th test point and the j-th training point
            sub = np.subtract(X[i], self.X_train[j])
            dists[i, j] = np.sqrt(np.sum(np.power(sub, 2)))
    return dists

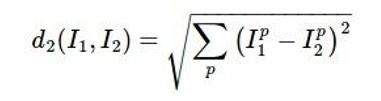

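L2 distance formula, summed over all pixels p:

$$d_2(I_1, I_2) = \sqrt{\sum_p \left(I_1^p - I_2^p\right)^2}$$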
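A worked example of the L2 distance on two made-up two-pixel "images", compared with np.linalg.norm, which computes the same Euclidean norm for a vector by default:

```python
import numpy as np

I1 = np.array([1.0, 2.0])
I2 = np.array([4.0, 6.0])

# sqrt((1 - 4)^2 + (2 - 6)^2) = sqrt(9 + 16) = 5
d_manual = np.sqrt(np.sum((I1 - I2) ** 2))
d_norm = np.linalg.norm(I1 - I2)
print(d_manual, d_norm)  # 5.0 5.0
```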

knn.ipynb

# Test your implementation:

dists = classifier.compute_distances_two_loops(X_test)

print(dists.shape)

Output:

(500, 5000)

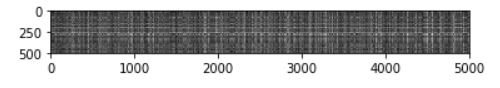

# We can visualize the distance matrix: each row holds the distances from one test example to all training examples

plt.imshow(dists, interpolation='none')

plt.show()


k_nearest_neighbor.py

def predict_labels(self, dists, k=1):
    """
    Given a matrix of distances between test points and training points,
    predict a label for each test point.

    Inputs:
    - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
      is the distance between the i-th test point and the j-th training point.

    Returns:
    - y: A numpy array of shape (num_test,) containing predicted labels for the
      test data, where y[i] is the predicted label for the test point X[i].
    """
    num_test = dists.shape[0]
    y_pred = np.zeros(num_test)
    for i in range(num_test):
        # Indices of the training points sorted by distance to the i-th test point
        index_sorted = np.argsort(dists[i])
        # Labels of the k nearest training points (an array of length k)
        closest_y = self.y_train[index_sorted[:k]]
        # Most frequent label among the k nearest neighbors;
        # on a tie, argmax picks the smallest label
        y_pred[i] = np.argmax(np.bincount(closest_y.ravel()))
    return y_pred
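The vote relies on np.bincount, which counts how often each non-negative integer label occurs, and np.argmax, which returns the first index of the maximum, so ties go to the smaller label. A standalone sketch; the helper name majority_vote is hypothetical:

```python
import numpy as np

def majority_vote(closest_y):
    """Hypothetical helper: most frequent label; ties go to the smallest label."""
    counts = np.bincount(closest_y)  # counts[c] = number of neighbors with label c
    return int(np.argmax(counts))    # argmax returns the first maximum

print(majority_vote(np.array([3, 1, 3, 2])))  # 3
print(majority_vote(np.array([2, 1])))        # tie between 1 and 2 -> 1
```

np.bincount only accepts non-negative integers, which holds for the CIFAR-10 labels 0 to 9.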

knn.ipynb

y_test_pred = classifier.predict_labels(dists, k=1)

# Compute and print the fraction of correctly predicted examples

num_correct = np.sum(y_test_pred == y_test)  # number of correct predictions on the test data

accuracy = float(num_correct) / num_test

print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

Got 137 / 500 correct => accuracy: 0.274000

# Accuracy with k = 5

y_test_pred = classifier.predict_labels(dists, k=5)

num_correct = np.sum(y_test_pred == y_test)

accuracy = float(num_correct) / num_test

print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

Got 139 / 500 correct => accuracy: 0.278000

k_nearest_neighbor.py

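Compute the distance matrix with a single loop over the test data:

def compute_distances_one_loop(self, X):
    """Compute the same distance matrix as above, looping only over the test data."""
    num_test = X.shape[0]
    num_train = self.X_train.shape[0]
    dists = np.zeros((num_test, num_train))
    for i in range(num_test):
        # X[i] (shape (D,)) is broadcast against self.X_train (shape (num_train, D));
        # summing over axis=1 gives the distances from test point i to every training point
        dists[i] = np.sqrt(np.sum(np.power(np.subtract(X[i], self.X_train), 2), axis=1))
    return dists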
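The single loop works because of NumPy broadcasting: a vector of shape (D,) minus a matrix of shape (num_train, D) yields a (num_train, D) matrix of differences. A self-contained check on random data with arbitrary sizes:

```python
import numpy as np

rng = np.random.default_rng(0)
X_train = rng.random((6, 4))  # 6 training points, D = 4
x = rng.random(4)             # one test point, shape (D,)

diff = x - X_train                        # broadcast to shape (6, 4)
row = np.sqrt(np.sum(diff ** 2, axis=1))  # one distance per training point, shape (6,)

# Same values as looping over the training points one at a time
loop_row = np.array([np.sqrt(np.sum((x - t) ** 2)) for t in X_train])
print(diff.shape, row.shape, np.allclose(row, loop_row))
```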

knn.ipynb

Check that the distance matrix computed with one loop matches the one computed with two loops:

dists_one = classifier.compute_distances_one_loop(X_test)

# One simple way to compare two matrices is the Frobenius norm of their difference
difference = np.linalg.norm(dists - dists_one, ord='fro')
print('One loop difference was: %f' % (difference, ))
if difference < 0.001:
    print('Good! The distance matrices are the same')
else:
    print('Uh-oh! The distance matrices are different')

One loop difference was: 0.000000

Good! The distance matrices are the same
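The Frobenius norm of a difference is the square root of the sum of squared element-wise differences, so it is 0 only when the two matrices agree entry by entry. A tiny illustration with made-up matrices:

```python
import numpy as np

A = np.array([[1.0, 2.0], [3.0, 4.0]])
B = np.array([[1.0, 2.0], [3.0, 7.0]])

fro = np.linalg.norm(A - B, ord='fro')
manual = np.sqrt(np.sum((A - B) ** 2))  # same quantity written out
print(fro, manual)  # 3.0 3.0
```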

k_nearest_neighbor.py

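Compute the distance matrix with no explicit loops (fully vectorized):

def compute_distances_no_loops(self, X):
    """Compute the same distance matrix as above without any explicit loops."""
    num_test = X.shape[0]
    num_train = self.X_train.shape[0]
    # Expand ||x - t||^2 = ||x||^2 + ||t||^2 - 2 x.t
    mul = np.dot(X, self.X_train.T)                                               # (num_test, num_train)
    new_X = np.sum(np.power(X, 2), axis=1).reshape(num_test, 1)                  # (num_test, 1)
    new_train = np.sum(np.power(self.X_train, 2), axis=1).reshape(1, num_train)  # (1, num_train)
    new_sum = new_X + new_train  # broadcasts to (num_test, num_train)
    # Clamp tiny negative values caused by floating-point round-off before the square root
    dists = np.sqrt(np.maximum(new_sum - 2 * mul, 0))
    return dists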
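The vectorized version rests on expanding the squared L2 distance, ‖x − t‖² = ‖x‖² + ‖t‖² − 2 x·t, so the whole matrix comes from one matrix product plus two broadcast vectors of squared norms. A self-contained sketch on random data, compared with the direct computation; l2_no_loops is a hypothetical helper name, and the np.maximum clamp guards against round-off producing small negative values (and hence NaN) before the square root:

```python
import numpy as np

def l2_no_loops(X, X_train):
    """Hypothetical helper: all pairwise L2 distances without Python loops."""
    test_sq = np.sum(X ** 2, axis=1).reshape(-1, 1)         # (num_test, 1)
    train_sq = np.sum(X_train ** 2, axis=1).reshape(1, -1)  # (1, num_train)
    cross = X @ X_train.T                                   # (num_test, num_train)
    sq = test_sq + train_sq - 2 * cross
    return np.sqrt(np.maximum(sq, 0))  # clamp round-off negatives to 0

rng = np.random.default_rng(1)
X = rng.random((3, 5))
X_train = rng.random((4, 5))

fast = l2_no_loops(X, X_train)
direct = np.sqrt(((X[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2))
print(fast.shape, np.allclose(fast, direct))  # (3, 4) True
```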

knn.ipynb

# Compare how fast the three implementations are
def time_function(f, *args):
    """
    Call a function f with args and return the time (in seconds) that it took to execute.
    """
    import time
    tic = time.time()
    f(*args)
    toc = time.time()
    return toc - tic

two_loop_time = time_function(classifier.compute_distances_two_loops, X_test)
print('Two loop version took %f seconds' % two_loop_time)

one_loop_time = time_function(classifier.compute_distances_one_loop, X_test)
print('One loop version took %f seconds' % one_loop_time)

no_loop_time = time_function(classifier.compute_distances_no_loops, X_test)
print('No loop version took %f seconds' % no_loop_time)

# You should see significantly faster performance with the fully vectorized implementation.
# NOTE: depending on the machine you are using, you might not see a speedup when going
# from two loops to one loop, and might even see a slow-down.

Two loop version took 30.061037 seconds

One loop version took 61.317346 seconds

No loop version took 0.233378 seconds
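time.time() is fine for coarse measurements, but the standard library provides time.perf_counter() as a high-resolution clock intended for measuring short durations. A variant of time_function built on it; the name time_function_perf, the repeat parameter and the np.dot workload are illustrative assumptions:

```python
import time
import numpy as np

def time_function_perf(f, *args, repeat=3):
    """Hypothetical variant: best wall-clock time (seconds) over `repeat` calls of f(*args)."""
    best = float('inf')
    for _ in range(repeat):
        tic = time.perf_counter()
        f(*args)
        best = min(best, time.perf_counter() - tic)
    return best

A = np.random.rand(200, 300)
t = time_function_perf(np.dot, A, A.T)
print('np.dot took %f seconds' % t)
```

Taking the minimum over a few runs reduces noise from other processes sharing the machine.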

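Cross-validation: split the training data into several folds, use each fold in turn as the validation set while training on the others, and average the results.

We use cross-validation to find the value of the hyperparameter k that gives the highest prediction accuracy.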
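A minimal sketch of the fold bookkeeping on a toy array: np.array_split cuts the data into num_folds nearly equal parts, and for fold i the remaining folds are concatenated into the training set. The generator name kfold_splits and the toy data are hypothetical:

```python
import numpy as np

def kfold_splits(X, y, num_folds):
    """Hypothetical helper: yield (X_tr, y_tr, X_val, y_val) for each validation fold."""
    X_folds = np.array_split(X, num_folds)
    y_folds = np.array_split(y, num_folds)
    for i in range(num_folds):
        # All folds except fold i form the training set
        X_tr = np.concatenate(X_folds[:i] + X_folds[i + 1:])
        y_tr = np.concatenate(y_folds[:i] + y_folds[i + 1:])
        yield X_tr, y_tr, X_folds[i], y_folds[i]

X = np.arange(10).reshape(10, 1)
y = np.arange(10)
for X_tr, y_tr, X_val, y_val in kfold_splits(X, y, num_folds=5):
    print(y_val, len(y_tr))
```

Every example lands in exactly one validation fold, so averaging over the folds uses all of the training data for validation once.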

num_folds = 5
k_choices = [1, 3, 5, 8, 10, 12, 15, 20, 50, 100]

# Split the training data into num_folds folds. np.array_split returns a list:
# X_train_folds[i] is the i-th fold of the data and y_train_folds[i] its label vector,
# so both are lists of length num_folds.
X_train_folds = np.array_split(X_train, num_folds, axis=0)
y_train_folds = np.array_split(y_train, num_folds, axis=0)

# A dictionary holding the accuracies for different values of k found when running
# cross-validation. Afterwards, k_to_accuracies[k] is a list of length num_folds
# with the accuracy obtained on each fold for that value of k.
k_to_accuracies = {}

# For each possible value of k, run the k-nearest-neighbor algorithm num_folds times;
# each time, use all folds except one as training data and the remaining fold as the
# validation set. Store the accuracies for all folds and all values of k in k_to_accuracies.
classifier = KNearestNeighbor()
for k in k_choices:
    k_to_accuracies[k] = []
    for i in range(num_folds):
        # Fold i is the validation set; the remaining folds form the training set
        X_val_fold = X_train_folds[i]
        y_val_fold = y_train_folds[i]
        X_tr = np.concatenate(X_train_folds[:i] + X_train_folds[i + 1:])
        y_tr = np.concatenate(y_train_folds[:i] + y_train_folds[i + 1:])

        classifier.train(X_tr, y_tr)
        dists_val = classifier.compute_distances_no_loops(X_val_fold)
        y_val_pred = classifier.predict_labels(dists_val, k=k)

        num_correct = np.sum(y_val_pred == y_val_fold)
        accuracy = float(num_correct) / len(y_val_fold)
        k_to_accuracies[k].append(accuracy)

# Print out the computed accuracies
for k in sorted(k_to_accuracies):
    for accuracy in k_to_accuracies[k]:
        print('k = %d, accuracy = %f' % (k, accuracy))

k = 1, accuracy = 0.263000

k = 1, accuracy = 0.257000

k = 1, accuracy = 0.264000

k = 1, accuracy = 0.278000

k = 1, accuracy = 0.266000

k = 3, accuracy = 0.239000

k = 3, accuracy = 0.249000

k = 3, accuracy = 0.240000

k = 3, accuracy = 0.266000

k = 3, accuracy = 0.254000

k = 5, accuracy = 0.248000

k = 5, accuracy = 0.266000

k = 5, accuracy = 0.280000

k = 5, accuracy = 0.292000

k = 5, accuracy = 0.280000

k = 8, accuracy = 0.262000

k = 8, accuracy = 0.282000

k = 8, accuracy = 0.273000

k = 8, accuracy = 0.290000

k = 8, accuracy = 0.273000

k = 10, accuracy = 0.265000

k = 10, accuracy = 0.296000

k = 10, accuracy = 0.276000

k = 10, accuracy = 0.284000

k = 10, accuracy = 0.280000

k = 12, accuracy = 0.260000

k = 12, accuracy = 0.295000

k = 12, accuracy = 0.279000

k = 12, accuracy = 0.283000

k = 12, accuracy = 0.280000

k = 15, accuracy = 0.252000

k = 15, accuracy = 0.289000

k = 15, accuracy = 0.278000

k = 15, accuracy = 0.282000

k = 15, accuracy = 0.274000

k = 20, accuracy = 0.270000

k = 20, accuracy = 0.279000

k = 20, accuracy = 0.279000

k = 20, accuracy = 0.282000

k = 20, accuracy = 0.285000

k = 50, accuracy = 0.271000

k = 50, accuracy = 0.288000

k = 50, accuracy = 0.278000

k = 50, accuracy = 0.269000

k = 50, accuracy = 0.266000

k = 100, accuracy = 0.256000

k = 100, accuracy = 0.270000

k = 100, accuracy = 0.263000

k = 100, accuracy = 0.256000

k = 100, accuracy = 0.263000

# Plot the raw observations
for k in k_choices:
    accuracies = k_to_accuracies[k]
    plt.scatter([k] * len(accuracies), accuracies)

# Plot the trend line with error bars that correspond to the standard deviation
accuracies_mean = np.array([np.mean(v) for k, v in sorted(k_to_accuracies.items())])
accuracies_std = np.array([np.std(v) for k, v in sorted(k_to_accuracies.items())])
plt.errorbar(k_choices, accuracies_mean, yerr=accuracies_std)
plt.title('Cross-validation on k')
plt.xlabel('k')
plt.ylabel('Cross-validation accuracy')
plt.show()
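The best k can also be picked programmatically by averaging each fold list and taking the maximum. Applied to the per-fold accuracies printed above (copied in below), the highest mean is at k = 10 (about 0.280), with k = 12 and k = 20 close behind:

```python
import numpy as np

# Per-fold accuracies copied from the cross-validation output above
k_to_accuracies = {
    1: [0.263, 0.257, 0.264, 0.278, 0.266],
    3: [0.239, 0.249, 0.240, 0.266, 0.254],
    5: [0.248, 0.266, 0.280, 0.292, 0.280],
    8: [0.262, 0.282, 0.273, 0.290, 0.273],
    10: [0.265, 0.296, 0.276, 0.284, 0.280],
    12: [0.260, 0.295, 0.279, 0.283, 0.280],
    15: [0.252, 0.289, 0.278, 0.282, 0.274],
    20: [0.270, 0.279, 0.279, 0.282, 0.285],
    50: [0.271, 0.288, 0.278, 0.269, 0.266],
    100: [0.256, 0.270, 0.263, 0.256, 0.263],
}

# Mean accuracy over the folds for each k, then the k with the highest mean
mean_acc = {k: np.mean(v) for k, v in k_to_accuracies.items()}
best_k = max(mean_acc, key=mean_acc.get)
print(best_k, round(mean_acc[best_k], 4))  # 10 0.2802
```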

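# Based on the cross-validation results above, choose the best value for k,
# retrain the classifier using all the training data, and test it on the test data.
# NOTE: by mean cross-validation accuracy, k = 10 scores highest above (about 0.280);
# the run shown below uses best_k = 1.
best_k = 1

classifier = KNearestNeighbor()
classifier.train(X_train, y_train)
y_test_pred = classifier.predict(X_test, k=best_k)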

# Compute and display the accuracy

num_correct = np.sum(y_test_pred == y_test)

accuracy = float(num_correct) / num_test

print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

Got 137 / 500 correct => accuracy: 0.274000
