Introduction

Source code: https://github.com/nmwsharp/diffusion-net

Paper: DiffusionNet: Discretization Agnostic Learning on Surfaces

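Compared with similar methods, it gives good results, runs fast, uses little memory (RAM and GPU memory), and is easy to use. It was published in TOG this year (2022).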

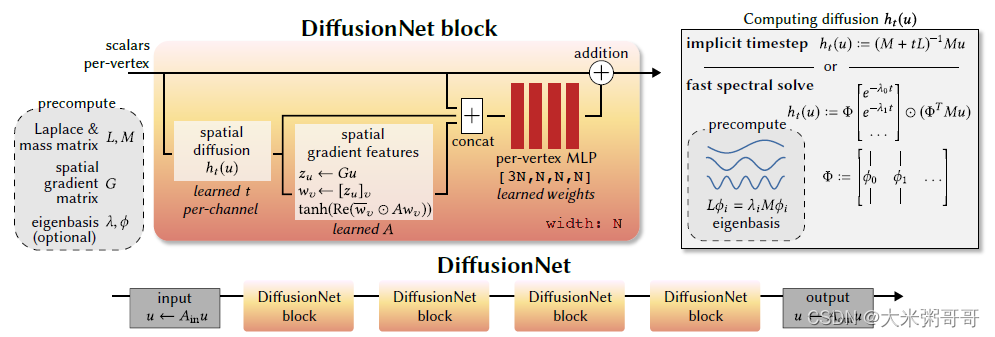

I. Method Overview

My personal take: the core of DiffusionNet is captured by one comment in its code: "Applies diffusion with learned per-channel t."

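It generalizes a classical algorithm to deep learning by turning the classical algorithm's parameters into learnable ones. (Convolution is the same story: most networks are based on, or derived from, classical methods; they model the classical method and learn certain specific parameters.) Math really does matter.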
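Concretely, the classical algorithm here is heat diffusion on the surface. In spectral form, each feature channel is projected onto the first k Laplacian eigenvectors Φ (using the vertex masses M), each coefficient is damped by exp(-λ_k t), and the result is projected back: x_out = Φ diag(exp(-λ t)) Φᵀ M x. Only the diffusion time t of each channel is learned. The numpy sketch below is my own re-implementation of that idea, not the library's `LearnedTimeDiffusion` class; the function names, the toy cycle-graph Laplacian standing in for a mesh Laplacian, and the `1e-8` clamp on t are assumptions for illustration.

```python
import numpy as np


def spectral_diffusion(x, evals, evecs, mass, t):
    """Diffuse each feature channel of x for its own time t[c].

    x:     (V, C) per-vertex features
    evals: (K,)   Laplacian eigenvalues
    evecs: (V, K) eigenvectors, orthonormal w.r.t. the mass matrix
    mass:  (V,)   lumped (diagonal) vertex masses
    t:     (C,)   diffusion time per channel (the learnable parameter)
    """
    t = np.maximum(t, 1e-8)                       # keep times positive
    x_spec = evecs.T @ (mass[:, None] * x)        # project: Phi^T M x -> (K, C)
    coefs = np.exp(-evals[:, None] * t[None, :])  # damping exp(-lambda_k * t_c) -> (K, C)
    return evecs @ (coefs * x_spec)               # back to the vertices -> (V, C)


def cycle_laplacian(n):
    """Graph Laplacian of an n-vertex cycle, a toy stand-in for a mesh Laplacian."""
    L = 2.0 * np.eye(n)
    for i in range(n):
        L[i, (i + 1) % n] = L[i, (i - 1) % n] = -1.0
    return L


n = 6
evals, evecs = np.linalg.eigh(cycle_laplacian(n))  # full eigenbasis, unit masses
mass = np.ones(n)
x = np.random.default_rng(0).normal(size=(n, 2))
out = spectral_diffusion(x, evals, evecs, mass, t=np.array([0.0, 1e3]))
# channel 0 (t ~ 0) is left unchanged; channel 1 (large t) is smoothed to its mean
```

Learning t instead of a filter kernel is what lets the same weights transfer across different meshings: the spatial extent of the operator is defined by the surface geometry, not by the vertex count.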

1.1 Input

Network input: xyz coordinates or hks (heat kernel signature) features, plus gradient features.

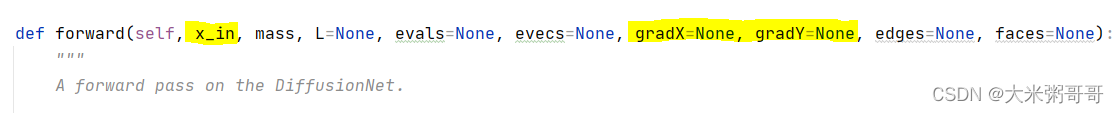

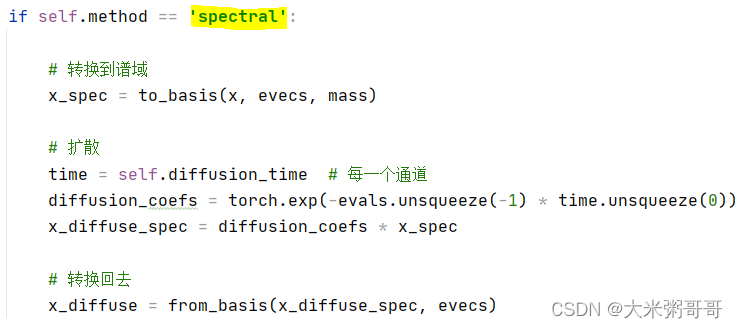
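For reference, the heat kernel signature of vertex x at time t is hks(x, t) = Σ_k exp(-λ_k t) φ_k(x)², i.e. how much heat stays at x after diffusing for time t. Below is a numpy sketch of a 16-scale HKS. The log-spaced times from 1e-2 to 1 are my assumption of a reasonable scale range, not values copied from `compute_hks_autoscale`, and the cycle-graph eigenbasis is only a toy input.

```python
import numpy as np


def hks(evals, evecs, times):
    """Heat kernel signature: hks[v, j] = sum_k exp(-evals[k] * times[j]) * evecs[v, k]**2."""
    weights = np.exp(-evals[None, :] * times[:, None])  # (T, K)
    return (evecs ** 2) @ weights.T                      # (V, T)


def cycle_laplacian(n):
    """Toy Laplacian of an n-vertex cycle graph."""
    L = 2.0 * np.eye(n)
    for i in range(n):
        L[i, (i + 1) % n] = L[i, (i - 1) % n] = -1.0
    return L


evals, evecs = np.linalg.eigh(cycle_laplacian(8))
times = np.logspace(-2.0, 0.0, num=16)  # assumed scale range; 16 channels, matching C_in = 16
features = hks(evals, evecs, times)
print(features.shape)  # (8, 16): one 16-dim descriptor per vertex
```

Because HKS only depends on the Laplacian spectrum, it is invariant to rigid motions, which is why the training scripts only enable random rotation augmentation for xyz input.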

1.2 Network

Core components:

- Overall structure: a linear layer at the front, a linear layer at the end, and a stack of DiffusionNetBlocks in between

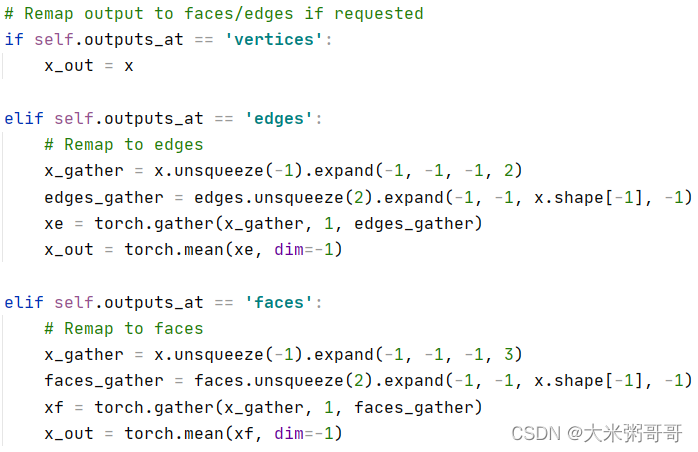

- The final conversion of features from vertices to edges or faces (much more elegant than the conversion code I wrote)

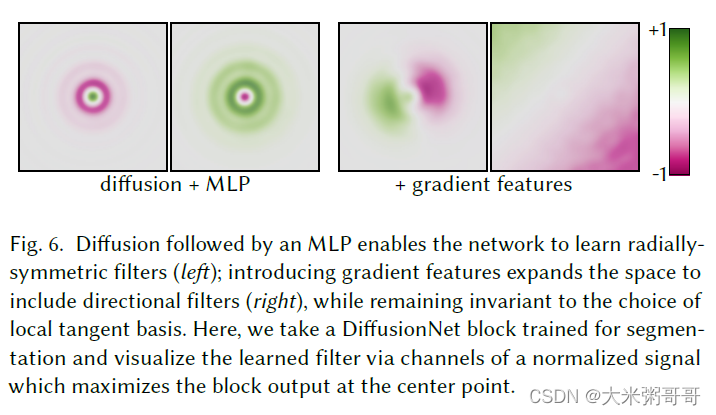
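The idea behind that conversion is simple: a face (or edge) feature is the average of the features of its vertices, and a global feature is a mass-weighted average over all vertices. Below is a numpy sketch of that idea; the function name is hypothetical, and as far as I can tell the library does the same with `torch.gather` on batched tensors, so treat this as an illustration rather than its code.

```python
import numpy as np


def remap_vertex_features(x, mass, faces=None, edges=None, outputs_at="vertices"):
    """x: (V, C) vertex features; mass: (V,) vertex areas; faces: (F, 3); edges: (E, 2)."""
    if outputs_at == "vertices":
        return x
    if outputs_at == "faces":
        return x[faces].mean(axis=1)   # (F, 3, C) -> (F, C)
    if outputs_at == "edges":
        return x[edges].mean(axis=1)   # (E, 2, C) -> (E, C)
    if outputs_at == "global_mean":
        return (mass[:, None] * x).sum(axis=0) / mass.sum()  # (C,)
    raise ValueError(f"unknown outputs_at: {outputs_at}")


x = np.array([[0.0], [3.0], [6.0], [9.0]])
faces = np.array([[0, 1, 2], [0, 2, 3]])
mass = np.array([1.0, 1.0, 1.0, 3.0])
print(remap_vertex_features(x, mass, faces=faces, outputs_at="faces"))  # faces: 3.0 and 5.0
print(remap_vertex_features(x, mass, outputs_at="global_mean"))         # 6.0
```

Fancy indexing `x[faces]` does the gather in one step; the mass weighting in the global mean keeps the result from depending on how densely a region happens to be sampled.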

A DiffusionNetBlock consists of three main parts: LearnedTimeDiffusion, SpatialGradientFeatures, and MiniMLP.
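How the three parts fit together, as I read the paper and code: diffuse the input, compute gradient features from the diffused signal, concatenate [input, diffused, gradient features] per vertex, run the per-vertex MLP, and add a residual connection. The numpy sketch below only shows that wiring; `diffusion_block`, the stubs, and the one-hidden-layer `mini_mlp` are hypothetical stand-ins, not the library's modules.

```python
import numpy as np


def mini_mlp(h, W1, b1, W2, b2):
    """Per-vertex two-layer MLP with ReLU: (V, 3C) -> (V, C)."""
    return np.maximum(h @ W1 + b1, 0.0) @ W2 + b2


def diffusion_block(x, diffuse, grad_features, mlp_params):
    """One block: diffusion -> gradient features -> concat -> MLP -> residual."""
    x_diffuse = diffuse(x)                               # stands in for LearnedTimeDiffusion, (V, C)
    x_grad = grad_features(x_diffuse)                    # stands in for SpatialGradientFeatures, (V, C)
    h = np.concatenate([x, x_diffuse, x_grad], axis=-1)  # (V, 3C)
    return x + mini_mlp(h, *mlp_params)                  # residual connection keeps width C


def mean_smoothing(f):
    """Stub 'diffusion': replace every vertex by the mean (the infinite-time limit)."""
    return np.repeat(f.mean(axis=0, keepdims=True), len(f), axis=0)


def zero_grad_features(f):
    """Stub gradient features: a constant signal has zero gradient."""
    return np.zeros_like(f)


rng = np.random.default_rng(0)
V, C, H = 5, 4, 8
x = rng.normal(size=(V, C))
mlp_params = (rng.normal(size=(3 * C, H)), np.zeros(H), rng.normal(size=(H, C)), np.zeros(C))
y = diffusion_block(x, mean_smoothing, zero_grad_features, mlp_params)
print(y.shape)  # (5, 4)
```

The MLP is applied independently at every vertex; all communication between vertices happens in the diffusion and gradient steps.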

The authors' analysis and visualization of the DiffusionNetBlock:

"The last building block in our method enables a larger space of filters by computing additional features from the spatial gradients of signal values at vertices" (in convolution terms: the receptive field gets larger).
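The gradient features can be sketched with complex numbers: at each vertex, write the gradient of channel c in the local tangent frame as g = gx + i·gy, mix channels with a learned complex matrix A (w = A g), and output tanh(Re(conj(g)·w)). Because g and w pick up the same phase when a tangent frame is rotated, the feature does not depend on the arbitrary choice of frame. This numpy version is my own sketch of that idea; the shapes and the random A are illustrative assumptions, and as far as I can tell the library uses separate real and imaginary linear layers instead of complex arrays.

```python
import numpy as np


def gradient_features(g, A):
    """g: (V, C) complex per-vertex gradients in each vertex's tangent frame.
    A: (C, C) complex channel-mixing matrix (the learnable part).
    Returns (V, C) real features tanh(Re(conj(g) * (A g)))."""
    w = g @ A.T                               # w[v] = A @ g[v]: mixes channels at each vertex
    return np.tanh(np.real(np.conj(g) * w))   # = tanh(gx * Re(w) + gy * Im(w))


rng = np.random.default_rng(0)
V, C = 6, 3
g = rng.normal(size=(V, C)) + 1j * rng.normal(size=(V, C))
A = rng.normal(size=(C, C)) + 1j * rng.normal(size=(C, C))
f = gradient_features(g, A)

# Rotate every tangent frame by a different angle: the features do not change.
theta = rng.uniform(0, 2 * np.pi, size=(V, 1))
f_rot = gradient_features(g * np.exp(1j * theta), A)
print(np.allclose(f, f_rot))  # True
```

The dot product between a gradient and a learned combination of gradients is what gives the block directional (anisotropic) filters, which pure isotropic diffusion cannot express.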

1.3 Loss Functions

Classification and segmentation use different loss functions:

- Classification: label smoothing, `label_smoothing_log_loss(preds, labels, label_smoothing_fac)`

- Segmentation: cross-entropy, computed as `torch.nn.functional.nll_loss(preds, labels)` on the log-softmax output
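With label smoothing, the one-hot target is softened: the true class gets 1 - ε and the remaining ε is spread over the other classes, which discourages over-confident predictions. The numpy sketch below spreads ε evenly over the n_class - 1 wrong classes; that choice and the function names are my assumptions for illustration, so check `diffusion_net.utils` for the exact definition used in training.

```python
import numpy as np


def log_softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def smoothed_log_loss(log_probs, label, smoothing=0.0):
    """Classification: one prediction (n_class,) for the whole mesh, one integer label."""
    n_class = log_probs.shape[-1]
    target = np.full(n_class, smoothing / (n_class - 1))
    target[label] = 1.0 - smoothing
    return -(target * log_probs).sum()


def nll_loss(log_probs, labels):
    """Segmentation: (F, n_class) per-face log-probabilities, (F,) integer labels."""
    return -log_probs[np.arange(len(labels)), labels].mean()


lp = log_softmax(np.array([2.0, 0.5, -1.0]))
print(smoothed_log_loss(lp, 0, 0.0), smoothed_log_loss(lp, 0, 0.2))  # smoothing raises the loss
```

With smoothing = 0 the classification loss reduces to plain NLL, so the two losses differ only in the target distribution and in predicting one label per mesh versus one label per face.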

II. Classification Experiments

For the classification dataset, see: [3D Geometry Learning] Triangular Mesh Classification Datasets

2.1 Results

| input \ width | 32 | 64 | 128 | 256 |

|---|---|---|---|---|

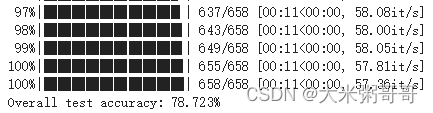

| hks | - | 78.723 | - | - |

| xyz | - | 85.562 | - | - |

Screenshot: diffusionNet_cubes_hks_64. Speed on the server fluctuated a lot: training ran at 10 to 34 it/s, testing at 20 to 58 it/s.

With feature width C_width = 64 the model slightly overfits Cubes; with C_width = 32 the accuracy is below 70%. A larger C_width might improve accuracy.

2.2 Code

cubes_dataset.py

```python
import os
import sys

import torch
from torch.utils.data import Dataset
import potpourri3d as pp3d

sys.path.append(os.path.join(os.path.dirname(__file__), "../../"))  # add the path to the DiffusionNet src
import src.diffusion_net as diffusion_net


def is_mesh_file(filename):
    return any(filename.endswith(extension) for extension in ['.obj', '.off'])


class CubesDataset(Dataset):
    def __init__(self, root_dir, phase, k_eig, op_cache_dir=None):
        self.root_dir = root_dir
        self.k_eig = k_eig
        self.op_cache_dir = op_cache_dir
        self.classes, self.class_to_idx = self.find_classes(self.root_dir)
        self.paths = self.make_dataset_by_class(self.root_dir, self.class_to_idx, phase)
        self.n_class = len(self.classes)

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, index):
        path = self.paths[index][0]   # mesh file path
        label = self.paths[index][1]  # class label
        verts, faces = pp3d.read_mesh(path)
        verts = torch.tensor(verts).float()
        faces = torch.tensor(faces)
        label = torch.tensor(label)
        # Compute (or load from op_cache_dir) the operators DiffusionNet needs
        frames, mass, L, evals, evecs, gradX, gradY = diffusion_net.geometry.get_operators(
            verts, faces, k_eig=self.k_eig, op_cache_dir=self.op_cache_dir)
        return verts, faces, frames, mass, L, evals, evecs, gradX, gradY, label

    @staticmethod
    def find_classes(dirs):
        # One sub-directory per class; classes are indexed in sorted order
        classes = [d for d in os.listdir(dirs) if os.path.isdir(os.path.join(dirs, d))]
        classes.sort()
        class_to_idx = {classes[i]: i for i in range(len(classes))}
        return classes, class_to_idx

    @staticmethod
    def make_dataset_by_class(dirs, class_to_idx, phase):
        meshes = []
        dirs = os.path.expanduser(dirs)
        for target in sorted(os.listdir(dirs)):
            d = os.path.join(dirs, target)
            if not os.path.isdir(d):
                continue
            for root, _, fnames in sorted(os.walk(d)):
                for fname in sorted(fnames):
                    # keep only meshes whose directory path contains the phase name ('train'/'test') once
                    if is_mesh_file(fname) and (root.count(phase) == 1):
                        path = os.path.join(root, fname)
                        item = (path, class_to_idx[target])
                        meshes.append(item)
        return meshes
```

classification_cubes.py

```python
import os
import sys
import argparse

import torch
from torch.utils.data import DataLoader
from tqdm import tqdm

sys.path.append(os.path.join(os.path.dirname(__file__), "../../"))  # add the path to the DiffusionNet src
import src.diffusion_net as diffusion_net
from experiments.cls_cubes_500.cubes_dataset import CubesDataset

# Parse a few args
parser = argparse.ArgumentParser()
parser.add_argument("--input_features", type=str, help="('xyz' or 'hks')", default='hks')
parser.add_argument("--features_width", type=int, choices={32, 64, 128, 256}, default=128)
args = parser.parse_args()

# system things
device = torch.device('cuda:0')
dtype = torch.float32

# model
input_features = args.input_features  # one of ['xyz', 'hks']
C_width = args.features_width
k_eig = 128

# training settings
n_epoch = 200
lr = 1e-3
decay_every = 50
decay_rate = 0.5
augment_random_rotate = (input_features == 'xyz')
label_smoothing_fac = 0.2

# === Load datasets
base_path = os.path.dirname(__file__)
dataset_path = os.path.join(base_path, "data", "cubes")     # dataset path
op_cache_dir = os.path.join(base_path, "data", "op_cache")  # operator cache path

# Train dataset
train_dataset = CubesDataset(dataset_path, 'train', k_eig=k_eig, op_cache_dir=op_cache_dir)
train_loader = DataLoader(train_dataset, batch_size=None, shuffle=True)

# Test dataset
test_dataset = CubesDataset(dataset_path, 'test', k_eig=k_eig, op_cache_dir=op_cache_dir)
test_loader = DataLoader(test_dataset, batch_size=None)
n_class = train_dataset.n_class

# === Create the model
C_in = {'xyz': 3, 'hks': 16}[input_features]  # dimension of input features
model = diffusion_net.layers.DiffusionNet(C_in=C_in,
                                          C_out=n_class,
                                          C_width=C_width,
                                          N_block=4,
                                          last_activation=lambda x: torch.nn.functional.log_softmax(x, dim=-1),
                                          outputs_at='global_mean',
                                          dropout=False)
model = model.to(device)

# === Optimize
optimizer = torch.optim.Adam(model.parameters(), lr=lr)


def train_epoch(epoch):
    # Implement lr decay
    if epoch > 0 and epoch % decay_every == 0:
        global lr
        lr *= decay_rate
        for param_group in optimizer.param_groups:
            param_group['lr'] = lr

    # Set model to 'train' mode
    model.train()
    optimizer.zero_grad()

    correct = 0
    total_num = 0
    for data in tqdm(train_loader):
        # Get data
        verts, faces, frames, mass, L, evals, evecs, gradX, gradY, labels = data

        # Move to device
        verts = verts.to(device)
        faces = faces.to(device)
        frames = frames.to(device)
        mass = mass.to(device)
        L = L.to(device)
        evals = evals.to(device)
        evecs = evecs.to(device)
        gradX = gradX.to(device)
        gradY = gradY.to(device)
        labels = labels.to(device)

        # Randomly rotate positions
        if augment_random_rotate:
            verts = diffusion_net.utils.random_rotate_points(verts)

        # Construct features
        if input_features == 'xyz':
            features = verts
        elif input_features == 'hks':
            features = diffusion_net.geometry.compute_hks_autoscale(evals, evecs, 16)

        # Apply the model
        preds = model(features, mass, L=L, evals=evals, evecs=evecs, gradX=gradX, gradY=gradY, faces=faces)

        # Evaluate loss
        loss = diffusion_net.utils.label_smoothing_log_loss(preds, labels, label_smoothing_fac)
        loss.backward()

        # track accuracy (one mesh per step)
        pred_labels = torch.max(preds, dim=-1).indices
        this_correct = pred_labels.eq(labels).sum().item()
        correct += this_correct
        total_num += 1

        # Step the optimizer
        optimizer.step()
        optimizer.zero_grad()

    train_acc = correct / total_num
    return train_acc


# Do an evaluation pass on the test dataset
def test():
    model.eval()
    correct = 0
    total_num = 0
    with torch.no_grad():
        for data in tqdm(test_loader):
            # Get data
            verts, faces, frames, mass, L, evals, evecs, gradX, gradY, labels = data

            # Move to device
            verts = verts.to(device)
            faces = faces.to(device)
            frames = frames.to(device)
            mass = mass.to(device)
            L = L.to(device)
            evals = evals.to(device)
            evecs = evecs.to(device)
            gradX = gradX.to(device)
            gradY = gradY.to(device)
            labels = labels.to(device)

            # Construct features
            if input_features == 'xyz':
                features = verts
            elif input_features == 'hks':
                features = diffusion_net.geometry.compute_hks_autoscale(evals, evecs, 16)

            # Apply the model
            preds = model(features, mass, L=L, evals=evals, evecs=evecs, gradX=gradX, gradY=gradY, faces=faces)

            # track accuracy
            pred_labels = torch.max(preds, dim=-1).indices
            this_correct = pred_labels.eq(labels).sum().item()
            correct += this_correct
            total_num += 1

    test_acc = correct / total_num
    return test_acc


print("Training...")
for epoch in range(n_epoch):
    train_acc = train_epoch(epoch)
    test_acc = test()
    print("Epoch {} - Train overall: {:06.3f}% Test overall: {:06.3f}%".format(epoch, 100*train_acc, 100*test_acc))

# Test
test_acc = test()
print("Overall test accuracy: {:06.3f}%".format(100*test_acc))
```

III. Segmentation Experiments

3.1 Results

| input \ C_width | 32 | 64 | 128 | 256 |
|---|---|---|---|---|
| hks | 80.393 | 78.496 | 78.533 | 80.304 |
| xyz | 83.200 | 82.544 | 81.026 | - |

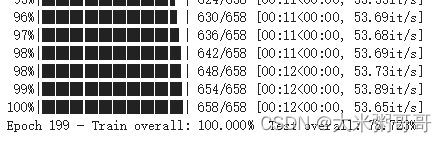

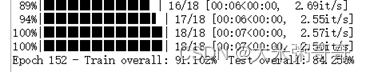

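Overfitting is severe: during training the accuracy reaches 84+%, and C_width = 16 reaches 85%. Reducing C_width may improve accuracy further, but it still falls short of the 90% reported in the paper.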

My question on GitHub (no reply as of this writing): Segmentation on the simplified human dataset (face label)

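On the following datasets, the network does not converge with xyz input (Aliens with width 32 reaches only 30%), so only hks results are listed: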

| input \ C_width | 32 | 64 | 128 | 256 |
|---|---|---|---|---|
| hks | 66.190 | 70.574 | 69.721 | 54.891 |

| input \ C_width | 32 | 64 | 128 | 256 |
|---|---|---|---|---|
| hks | - | 96.873 | - | 96.730 |

| input \ C_width | 32 | 64 | 128 | 256 |
|---|---|---|---|---|
| hks | - | 35.000 | - | 66.262 |

3.2 Code

Remember to change n_class and the paths to match your dataset.
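Since `nll_loss` requires every target label to lie in `[0, n_class - 1]`, a quick sanity check is to read all label files once and compare them with the `n_class` you set. The helper below is a hypothetical sketch (not part of DiffusionNet); it assumes each `.eseg` file holds integer labels that `np.loadtxt` can read, as `CosegDataset` does, and reports the number of distinct labels and their range.

```python
import os
import tempfile

import numpy as np


def label_stats(seg_paths):
    """Return (number of distinct labels, min label, max label) over all label files."""
    labels = np.concatenate([np.atleast_1d(np.loadtxt(p)).astype(np.int64) for p in seg_paths])
    uniq = np.unique(labels)
    return len(uniq), int(uniq.min()), int(uniq.max())


# Toy example with two label files
tmp = tempfile.mkdtemp()
paths = []
for name, labels in (("a.eseg", [0, 1, 1, 3]), ("b.eseg", [2, 2, 0])):
    path = os.path.join(tmp, name)
    np.savetxt(path, labels, fmt="%d")
    paths.append(path)

n_class, lo, hi = label_stats(paths)
print(n_class, lo, hi)  # 4 0 3
if lo != 0 or hi != n_class - 1:
    print("labels are not 0..n_class-1: shift or remap them before using nll_loss")
```

If labels in your files start at 1, `nll_loss` will fail on the largest label; shifting them by the minimum label is the usual fix.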

coseg_seg_dataset.py

```python
import os
import sys

import numpy as np
import torch
from torch.utils.data import Dataset
import potpourri3d as pp3d

sys.path.append(os.path.join(os.path.dirname(__file__), "../../"))  # add the path to the DiffusionNet src
import src.diffusion_net as diffusion_net


def is_mesh_file(filename):
    return any(filename.endswith(extension) for extension in ['.obj', '.off'])


class CosegDataset(Dataset):
    def __init__(self, root_dir, phase, k_eig=128, op_cache_dir=None):
        self.k_eig = k_eig
        self.root_dir = root_dir
        self.cache_dir = os.path.join(root_dir, "cache")
        self.op_cache_dir = op_cache_dir
        self.dir = os.path.join(self.root_dir, phase)
        self.paths = self.make_dataset(self.dir)
        self.seg_paths = self.get_seg_files(self.paths, os.path.join(self.root_dir, 'seg'))

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, index):
        path = self.paths[index]  # mesh file path
        label = np.loadtxt(self.seg_paths[index], dtype='float64')  # loadtxt opens and closes the file itself
        verts, faces = pp3d.read_mesh(path)
        verts = torch.tensor(verts).float()
        faces = torch.tensor(faces)
        label = torch.tensor(label).long()
        frames, mass, L, evals, evecs, gradX, gradY = diffusion_net.geometry.get_operators(
            verts, faces, k_eig=self.k_eig, op_cache_dir=self.op_cache_dir)
        return verts, faces, frames, mass, L, evals, evecs, gradX, gradY, label

    @staticmethod
    def get_seg_files(paths, seg_dir, seg_ext='.eseg'):
        # Label file for <name>.obj is <root_dir>/seg/<name>.eseg
        segs = []
        for path in paths:
            segfile = os.path.join(seg_dir, os.path.splitext(os.path.basename(path))[0] + seg_ext)
            assert os.path.isfile(segfile), segfile
            segs.append(segfile)
        return segs

    @staticmethod
    def make_dataset(path):
        meshes = []
        assert os.path.isdir(path), '%s is not a valid directory' % path
        for root, _, fnames in sorted(os.walk(path)):
            for fname in fnames:
                if is_mesh_file(fname):
                    meshes.append(os.path.join(root, fname))
        return meshes
```

seg_coseg.py

```python
import os
import sys
import argparse

import torch
from torch.utils.data import DataLoader
from tqdm import tqdm

sys.path.append(os.path.join(os.path.dirname(__file__), "../../"))  # add the path to the DiffusionNet src
import src.diffusion_net as diffusion_net
from experiments.seg_coseg.coseg_seg_dataset import CosegDataset

# Parse a few args
parser = argparse.ArgumentParser()
parser.add_argument("--input_features", type=str, help="('xyz' or 'hks')", default='hks')
parser.add_argument("--features_width", type=int, choices={32, 64, 128, 256}, default=128)
parser.add_argument("--data_name", type=str, choices={'aliens', 'vases', 'chairs'}, default='vases')
args = parser.parse_args()

# system things
device = torch.device('cuda:0')
dtype = torch.float32

# model
input_features = args.input_features  # one of ['xyz', 'hks']
C_width = args.features_width
k_eig = 128

# training settings
n_epoch = 200
lr = 1e-3
decay_every = 50
decay_rate = 0.5
augment_random_rotate = (input_features == 'xyz')

# === Load datasets
data_name = args.data_name  # aliens, vases or chairs
base_path = os.path.dirname(__file__)
op_cache_dir = os.path.join(base_path, "data", "op_cache", data_name)
dataset_path = os.path.join(base_path, "data", data_name)
n_class = 4  # number of segment labels: change this for your dataset

# Load the test dataset
test_dataset = CosegDataset(dataset_path, 'test', k_eig=k_eig, op_cache_dir=op_cache_dir)
test_loader = DataLoader(test_dataset, batch_size=None)

# Load the train dataset
train_dataset = CosegDataset(dataset_path, 'train', k_eig=k_eig, op_cache_dir=op_cache_dir)
train_loader = DataLoader(train_dataset, batch_size=None, shuffle=True)

# === Create the model
C_in = {'xyz': 3, 'hks': 16}[input_features]  # dimension of input features
model = diffusion_net.layers.DiffusionNet(C_in=C_in,
                                          C_out=n_class,
                                          C_width=C_width,
                                          N_block=4,
                                          last_activation=lambda x: torch.nn.functional.log_softmax(x, dim=-1),
                                          outputs_at='faces',
                                          dropout=True)
model = model.to(device)

# === Optimize
optimizer = torch.optim.Adam(model.parameters(), lr=lr)


def train_epoch(epoch):
    # Implement lr decay
    if epoch > 0 and epoch % decay_every == 0:
        global lr
        lr *= decay_rate
        for param_group in optimizer.param_groups:
            param_group['lr'] = lr

    # Set model to 'train' mode
    model.train()
    optimizer.zero_grad()

    correct = 0
    total_num = 0
    for data in tqdm(train_loader):
        # Get data
        verts, faces, frames, mass, L, evals, evecs, gradX, gradY, labels = data

        # Move to device
        verts = verts.to(device)
        faces = faces.to(device)
        frames = frames.to(device)
        mass = mass.to(device)
        L = L.to(device)
        evals = evals.to(device)
        evecs = evecs.to(device)
        gradX = gradX.to(device)
        gradY = gradY.to(device)
        labels = labels.to(device)

        # Randomly rotate positions
        if augment_random_rotate:
            verts = diffusion_net.utils.random_rotate_points(verts)

        # Construct features
        if input_features == 'xyz':
            features = verts
        elif input_features == 'hks':
            features = diffusion_net.geometry.compute_hks_autoscale(evals, evecs, 16)

        # Apply the model
        preds = model(features, mass, L=L, evals=evals, evecs=evecs, gradX=gradX, gradY=gradY, faces=faces)

        # Evaluate loss
        loss = torch.nn.functional.nll_loss(preds, labels)
        loss.backward()

        # track accuracy (per face)
        pred_labels = torch.max(preds, dim=1).indices
        this_correct = pred_labels.eq(labels).sum().item()
        this_num = labels.shape[0]
        correct += this_correct
        total_num += this_num

        # Step the optimizer
        optimizer.step()
        optimizer.zero_grad()

    train_acc = correct / total_num
    return train_acc


# Do an evaluation pass on the test dataset
def test():
    model.eval()
    correct = 0
    total_num = 0
    with torch.no_grad():
        for data in tqdm(test_loader):
            # Get data
            verts, faces, frames, mass, L, evals, evecs, gradX, gradY, labels = data

            # Move to device
            verts = verts.to(device)
            faces = faces.to(device)
            frames = frames.to(device)
            mass = mass.to(device)
            L = L.to(device)
            evals = evals.to(device)
            evecs = evecs.to(device)
            gradX = gradX.to(device)
            gradY = gradY.to(device)
            labels = labels.to(device)

            # Construct features
            if input_features == 'xyz':
                features = verts
            elif input_features == 'hks':
                features = diffusion_net.geometry.compute_hks_autoscale(evals, evecs, 16)

            # Apply the model
            preds = model(features, mass, L=L, evals=evals, evecs=evecs, gradX=gradX, gradY=gradY, faces=faces)

            # track accuracy (per face)
            pred_labels = torch.max(preds, dim=1).indices
            this_correct = pred_labels.eq(labels).sum().item()
            this_num = labels.shape[0]
            correct += this_correct
            total_num += this_num

    test_acc = correct / total_num
    return test_acc


print("Training...")
for epoch in range(n_epoch):
    train_acc = train_epoch(epoch)
    test_acc = test()
    print("Epoch {} - Train overall: {:06.3f}% Test overall: {:06.3f}%".format(epoch, 100*train_acc, 100*test_acc))

# Test
test_acc = test()
print("Overall test accuracy: {:06.3f}%".format(100*test_acc))
```

IV. Some Ideas

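DiffusionNet has some limitations (I am not sure how it behaves with batch_size > 1), and its accuracy on some datasets is unsatisfying. There seems to be room for improvement; some directions worth trying: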

4.1 Input

The paper uses per-vertex xyz coordinates and hks features as input.

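- Try other simple geometric features, such as area, dihedral angles, and normals.
- Try other spectral features, such as the WKS (wave kernel signature).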
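For the simple-geometry direction, such features can be computed directly from `verts` and `faces`. Below is a numpy sketch (function names are hypothetical) of face areas, area-weighted vertex normals, and, for each interior edge, the angle between the normals of its two faces. Note that this angle is π minus the interior dihedral angle, so it is 0 on a flat region. How to turn per-face or per-edge values into per-vertex network inputs (e.g. by averaging) is a design choice left open here.

```python
import numpy as np


def face_normals_and_areas(verts, faces):
    v0, v1, v2 = verts[faces[:, 0]], verts[faces[:, 1]], verts[faces[:, 2]]
    cross = np.cross(v1 - v0, v2 - v0)  # (F, 3), length = 2 * area
    norm = np.linalg.norm(cross, axis=1)
    return cross / np.maximum(norm, 1e-12)[:, None], 0.5 * norm


def vertex_normals(verts, faces):
    """Area-weighted average of the normals of the faces around each vertex."""
    normals, areas = face_normals_and_areas(verts, faces)
    vn = np.zeros(verts.shape, dtype=float)
    for k in range(3):
        np.add.at(vn, faces[:, k], normals * areas[:, None])
    return vn / np.maximum(np.linalg.norm(vn, axis=1, keepdims=True), 1e-12)


def edge_normal_angles(verts, faces):
    """{(i, j): angle between the normals of the two faces sharing edge i-j}, interior edges only."""
    normals, _ = face_normals_and_areas(verts, faces)
    edge_faces = {}
    for f, (a, b, c) in enumerate(faces.tolist()):
        for i, j in ((a, b), (b, c), (c, a)):
            edge_faces.setdefault((min(i, j), max(i, j)), []).append(f)
    return {e: float(np.arccos(np.clip(normals[fs[0]] @ normals[fs[1]], -1.0, 1.0)))
            for e, fs in edge_faces.items() if len(fs) == 2}


faces = np.array([[0, 1, 2], [0, 2, 3]])
flat = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]], dtype=float)
folded = flat.copy()
folded[3] = [0, 1, 1]                          # lift one corner out of the plane
print(face_normals_and_areas(flat, faces)[1])  # face areas: 0.5 and 0.5
print(edge_normal_angles(flat, faces))         # {(0, 2): 0.0}
print(edge_normal_angles(folded, faces))       # about 0.955 rad on edge (0, 2)
```

Unlike xyz coordinates, these quantities are invariant to rigid motions, so, like hks, they would not need random rotation augmentation.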

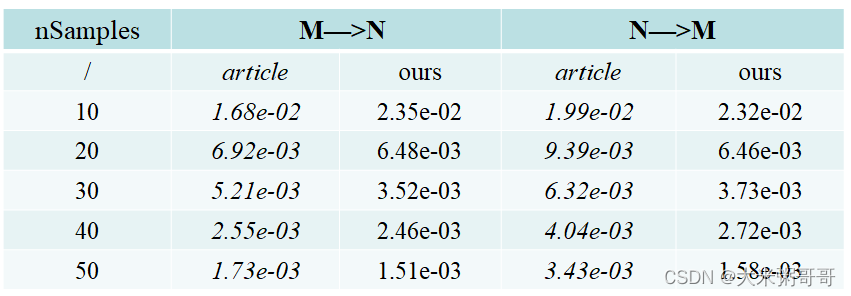

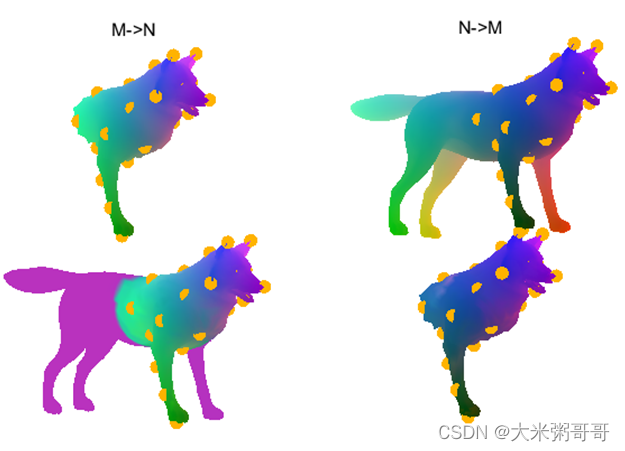

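This reminds me of a paper I reproduced earlier, Wavelet-based Heat Kernel Derivatives: Towards Informative Localized Shape Analysis. I changed a few of its details at the time; the results are shown in the figures above.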
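As an example of another spectral descriptor, the WKS replaces the HKS time scales with energy bands on a log-eigenvalue axis: wks(x, e) = Σ_k φ_k(x)² exp(-(e - log λ_k)² / 2σ²), normalized for each energy e by Σ_k exp(-(e - log λ_k)² / 2σ²). The numpy sketch below follows that formula; the 16 evenly spaced energies, σ = 7 times the energy step, and dropping the zero eigenvalue are common choices that I am assuming here, not values taken from DiffusionNet.

```python
import numpy as np


def wks(evals, evecs, n_energies=16, variance_factor=7.0):
    """Wave kernel signature, shape (V, n_energies). evals/evecs: Laplacian eigenpairs."""
    keep = evals > 1e-8                       # log(0) is undefined: drop the constant mode
    log_e, phi2 = np.log(evals[keep]), evecs[:, keep] ** 2
    energies = np.linspace(log_e.min(), log_e.max(), n_energies)
    sigma = variance_factor * (energies[1] - energies[0])
    weights = np.exp(-(energies[:, None] - log_e[None, :]) ** 2 / (2 * sigma ** 2))  # (E, K)
    return (phi2 @ weights.T) / weights.sum(axis=1)[None, :]


def cycle_laplacian(n):
    """Toy Laplacian of an n-vertex cycle graph."""
    L = 2.0 * np.eye(n)
    for i in range(n):
        L[i, (i + 1) % n] = L[i, (i - 1) % n] = -1.0
    return L


evals, evecs = np.linalg.eigh(cycle_laplacian(10))
w = wks(evals, evecs)
print(w.shape)  # (10, 16)
```

Swapping it in for hks would only change the feature construction step and C_in in the training scripts.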

4.2 Network

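- Use the DiffusionNetBlock as a plug-and-play non-local module and fuse it into a ResNet: convolution + DiffusionNetBlock, or Transformer + DiffusionNetBlock.
- Replace the MLP inside the DiffusionNetBlock with a point-cloud network, such as PointNet.
- Try batch_size > 1; would adding BatchNorm (BN) help in that case, or would LayerNorm (LN) work better?
- ... many more ideas worth trying.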
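One practical obstacle for batch_size > 1 is that meshes have different vertex counts. A common workaround, sketched below as an assumption rather than anything DiffusionNet provides, is to zero-pad per-vertex features to the largest mesh in the batch and carry a boolean mask, so that reductions such as the global mean (and any BN/LN statistics) only see real vertices. The precomputed operators (evecs, gradX, gradY) would need the same padding. Function names here are hypothetical.

```python
import numpy as np


def pad_batch(features):
    """List of (V_i, C) arrays -> padded (B, V_max, C) array and (B, V_max) boolean mask."""
    v_max = max(f.shape[0] for f in features)
    C = features[0].shape[1]
    batch = np.zeros((len(features), v_max, C))
    mask = np.zeros((len(features), v_max), dtype=bool)
    for b, f in enumerate(features):
        batch[b, :len(f)] = f
        mask[b, :len(f)] = True
    return batch, mask


def masked_mean(batch, mask):
    """Per-mesh mean over real vertices only: (B, V_max, C) -> (B, C)."""
    w = mask[..., None].astype(batch.dtype)
    return (batch * w).sum(axis=1) / w.sum(axis=1)


rng = np.random.default_rng(0)
meshes = [rng.normal(size=(3, 2)), rng.normal(size=(5, 2))]
batch, mask = pad_batch(meshes)
print(batch.shape, mask.sum(axis=1))  # (2, 5, 2) [3 5]
```

Without the mask, the padded zeros would pull the mean (and any normalization statistics) toward zero for the smaller meshes.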
