2. Writing the Model Training Code

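Open the samples folder inside the MaskRCNN folder; it contains four sample folders, one of which is shapes.

The training code below is adapted from train_shapes.ipynb in the shapes folder. (That file is a Jupyter notebook. I am not used to working in that format, so I converted it to a .py file.)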

2.1 Downloading the COCO pre-trained weights

    MODEL_DIR = os.path.join(ROOT_DIR, "logs")
    iter_num = 0
    COCO_MODEL_PATH = os.path.join(MODEL_DIR, "mask_rcnn_coco.h5")
    if not os.path.exists(COCO_MODEL_PATH):
        utils.download_trained_weights(COCO_MODEL_PATH)

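This snippet checks whether the COCO pre-trained weights file exists in the logs folder under the MaskRCNN directory and downloads it there if it does not. I recommend downloading the file yourself and placing it in that folder, because the automatic download goes through the download_trained_weights() function in utils.py in the mrcnn folder. (That function gave me trouble when I used it. I no longer remember the exact error; at the time I commented the call out and downloaded the weights file into the folder by hand.)

Download link for the Mask R-CNN COCO pre-trained weights file

Tip: once a COCO pre-trained weights file has been used for a training run, it is reportedly safer to download a fresh copy before starting a new run instead of reusing the old one. Other users say that reuse tends to cause errors. I have not verified this myself, so take it as a caution only.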
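If you place the weights file by hand as recommended above, it can help to fail early with a clear message when the file is missing, instead of falling back to the automatic download. The following is a minimal sketch of such a check; `require_weights` is a hypothetical helper name and the `logs/mask_rcnn_coco.h5` path is only an example, neither is part of the Mask_RCNN library.

```python
import os


def require_weights(path):
    """Return `path` if a non-empty weights file exists there, else raise."""
    if not os.path.isfile(path) or os.path.getsize(path) == 0:
        raise FileNotFoundError(
            "Pre-trained weights not found at %s; download mask_rcnn_coco.h5 "
            "manually and place it there." % path
        )
    return path


if __name__ == "__main__":
    # Hypothetical location; adjust it to your own ROOT_DIR.
    weights = os.path.join("logs", "mask_rcnn_coco.h5")
    try:
        print("Using weights:", require_weights(weights))
    except FileNotFoundError as err:
        print(err)
```

In the training script, the result of `require_weights(COCO_MODEL_PATH)` could replace the `os.path.exists` check and the download call.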

2.2 Modifying the Config class

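- GPU_COUNT: the number of GPUs; set it according to your own hardware.

- IMAGES_PER_GPU: the number of images processed on each GPU per step. The batch size is GPU_COUNT * IMAGES_PER_GPU.

- NUM_CLASSES: 1 + 1. The first 1 is the background; the second 1 is the number of object classes to segment.

- IMAGE_MIN_DIM = 960; IMAGE_MAX_DIM = 960: the image size.

- RPN_ANCHOR_SCALES: the side lengths of the RPN anchors. The idea is to use high-resolution features to detect small objects and low-resolution features to detect large objects.

- RPN_ANCHOR_RATIOS: the aspect ratios used when generating anchors.

- RPN_ANCHOR_STRIDE: the anchor sampling stride.

- RPN_NMS_THRESHOLD: the IoU threshold used for non-maximum suppression of RPN proposals.

- STEPS_PER_EPOCH = 28: each epoch has 28 steps (choose a value that suits your own setup).

- MAX_GT_INSTANCES = 100: the maximum number of ground-truth instances used per image (an instance count, not a class count).

- VALIDATION_STEPS = 5: the number of validation steps; a small value is fine.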
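The arithmetic behind some of these settings can be sketched in a few lines. The helper names below are hypothetical, and the dataset size of 56 images is an assumption chosen only so that the numbers line up with STEPS_PER_EPOCH = 28; the article does not state how many images were used.

```python
import math


def batch_size(gpu_count, images_per_gpu):
    """Images consumed per training step."""
    return gpu_count * images_per_gpu


def steps_for_one_pass(num_images, gpu_count, images_per_gpu):
    """Steps needed to see every image once per epoch."""
    return math.ceil(num_images / batch_size(gpu_count, images_per_gpu))


def scaled_anchor_scales(factor, base=(8, 16, 32, 64, 128)):
    """Multiply a base set of anchor side lengths (in pixels) by `factor`."""
    return tuple(s * factor for s in base)


print(batch_size(1, 2))              # 2
print(steps_for_one_pass(56, 1, 2))  # 28 (56 images is a hypothetical count)
print(scaled_anchor_scales(7))       # (56, 112, 224, 448, 896)
```

The last line reproduces the `(8*7, 16*7, 32*7, 64*7, 128*7)` tuple used for RPN_ANCHOR_SCALES in this article.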

    class ShapesConfig(Config):
        NAME = "shapes"
        GPU_COUNT = 1
        # Two images per GPU
        IMAGES_PER_GPU = 2
        # The first 1 is the background; the second 1 is the number of object classes to segment
        NUM_CLASSES = 1 + 1
        # Image size
        IMAGE_MIN_DIM = 960
        IMAGE_MAX_DIM = 960
        # Anchor scales adjusted to the image size
        RPN_ANCHOR_SCALES = (8*7, 16*7, 32*7, 64*7, 128*7)  # anchor side in pixels
        # Aspect ratios used when generating anchors: [0.5, 1, 2]
        RPN_ANCHOR_RATIOS = [0.5, 1, 2]
        # Anchor sampling stride: 1
        RPN_ANCHOR_STRIDE = 1
        # IoU threshold for non-maximum suppression of RPN proposals: 0.7
        RPN_NMS_THRESHOLD = 0.7
        RPN_TRAIN_ANCHORS_PER_IMAGE = 256
        TRAIN_ROIS_PER_IMAGE = 100
        # 28 steps per epoch
        STEPS_PER_EPOCH = 28
        # Maximum number of ground-truth instances used per image
        MAX_GT_INSTANCES = 100
        # Use small validation steps since the epoch is small
        VALIDATION_STEPS = 5

    config = ShapesConfig()
    config.display()

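2.3 Modifying the Dataset class

The main job of this part is to load the training data prepared earlier: the original images, the mask images, and the yaml file that belongs to each image.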

    class DrugDataset(utils.Dataset):
        # Number of instances in the mask image (the largest pixel label)
        def get_obj_index(self, image):
            n = np.max(image)
            return n

        # Parse the yaml file produced by labelme to get the label of each mask layer
        def from_yaml_get_class(self, image_id):
            info = self.image_info[image_id]
            with open(info['yaml_path']) as f:
                # PyYAML >= 5.1 warns when no Loader is passed, and PyYAML 6 requires one
                temp = yaml.load(f.read())
                labels = temp['label_names']
                del labels[0]  # drop the first entry (the background)
            return labels

        # Fill one mask channel per instance: pixel value k goes to channel k - 1
        def draw_mask(self, num_obj, mask, image, image_id):
            info = self.image_info[image_id]
            for index in range(num_obj):
                for i in range(info['width']):
                    for j in range(info['height']):
                        at_pixel = image.getpixel((i, j))
                        if at_pixel == index + 1:
                            mask[j, i, index] = 1
            return mask

        # Register the class and record path, mask_path and yaml_path for every image
        def load_shapes(self, count, img_folder, mask_folder, imglist, dataset_root_path):
            self.add_class("shapes", 1, "tank")
            for i in range(count):
                filestr = imglist[i].split(".")[0]
                mask_path = mask_folder + "/" + filestr + ".png"
                yaml_path = dataset_root_path + "labelme_json/" + filestr + "_json/info.yaml"
                # print(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
                cv_img = cv2.imread(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
                self.add_image("shapes", image_id=i, path=img_folder + "/" + imglist[i],
                               width=cv_img.shape[1], height=cv_img.shape[0],
                               mask_path=mask_path, yaml_path=yaml_path)

        # Build the instance masks and class ids for one image
        def load_mask(self, image_id):
            global iter_num
            print("image_id", image_id)
            info = self.image_info[image_id]
            count = 1  # number of objects
            img = Image.open(info['mask_path'])
            num_obj = self.get_obj_index(img)
            mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)
            mask = self.draw_mask(num_obj, mask, img, image_id)
            occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)
            # With count = 1 this range is empty, so no occlusion handling is applied
            for i in range(count - 2, -1, -1):
                mask[:, :, i] = mask[:, :, i] * occlusion
                occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))
            labels = []
            labels = self.from_yaml_get_class(image_id)
            labels_form = []
            for i in range(len(labels)):
                if labels[i].find("tank") != -1:
                    labels_form.append("tank")
            class_ids = np.array([self.class_names.index(s) for s in labels_form])
            return mask, class_ids.astype(np.int32)

    def get_ax(rows=1, cols=1, size=8):
        _, ax = plt.subplots(rows, cols, figsize=(size * cols, size * rows))
        return ax

(The get_ax() function is optional; nothing in this script actually calls it.)
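The draw_mask() method in the Dataset class visits every pixel once per instance with getpixel(), which is slow on 960-pixel images. As a possible alternative, the same per-instance split can be written with NumPy broadcasting. This is only a sketch under the assumption that the mask image is a single-channel label image (0 for background, 1..N for instances); `label_image_to_masks` is a hypothetical name, not a function of the library.

```python
import numpy as np


def label_image_to_masks(label_img):
    """Split an integer label image (0 = background, 1..N = instances)
    into an (H, W, N) uint8 stack with one channel per instance."""
    label_img = np.asarray(label_img)
    num_obj = int(label_img.max())
    ids = np.arange(1, num_obj + 1)
    # Broadcasting compares every pixel against every instance id at once.
    return (label_img[:, :, None] == ids).astype(np.uint8)


demo = np.array([[0, 1, 1],
                 [2, 2, 0]])
masks = label_image_to_masks(demo)
print(masks.shape)     # (2, 3, 2)
print(masks[:, :, 0])  # pixels belonging to instance 1
```

Inside load_mask(), the image opened with `Image.open(info['mask_path'])` could be passed straight to such a helper, since `np.asarray` accepts PIL images.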

2.4 Loading the data and starting training

    dataset_root_path = os.path.join(ROOT_DIR, "train_data/")
    img_folder = dataset_root_path + "pic"
    mask_folder = dataset_root_path + "cv2_mask"
    imglist = os.listdir(img_folder)
    count = len(imglist)

    # Prepare the training dataset
    dataset_train = DrugDataset()
    dataset_train.load_shapes(count, img_folder, mask_folder, imglist, dataset_root_path)
    dataset_train.prepare()

    # Validation dataset (reuses the first 10 images of the training list)
    dataset_val = DrugDataset()
    dataset_val.load_shapes(10, img_folder, mask_folder, imglist, dataset_root_path)
    dataset_val.prepare()

    model = modellib.MaskRCNN(mode="training", config=config, model_dir=MODEL_DIR)

    init_with = "coco"  # imagenet, coco, or last
    if init_with == "imagenet":
        model.load_weights(model.get_imagenet_weights(), by_name=True)
    elif init_with == "coco":
        # Skip the head layers whose shapes depend on the number of classes
        model.load_weights(COCO_MODEL_PATH, by_name=True,
                           exclude=["mrcnn_class_logits", "mrcnn_bbox_fc", "mrcnn_bbox", "mrcnn_mask"])
    elif init_with == "last":
        # Some Mask_RCNN releases return the path directly from find_last(); drop the [1] there
        model.load_weights(model.find_last()[1], by_name=True)

    # Stage 1: train only the head layers for 20 epochs
    model.train(dataset_train, dataset_val, learning_rate=config.LEARNING_RATE, epochs=20, layers='heads')
    # Stage 2: fine-tune all layers. In the matterport implementation `epochs` is the
    # cumulative epoch count, so 40 means 20 further epochs (20 would train nothing more)
    model.train(dataset_train, dataset_val, learning_rate=config.LEARNING_RATE / 10, epochs=40, layers="all")
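One detail of the two train() calls is easy to miss. As far as I can tell from the docstring of train() in the matterport Mask_RCNN implementation, the `epochs` argument is the total number of epochs to reach, not the number of additional epochs for that call. The small sketch below only illustrates this bookkeeping; `epochs_run_per_call` is a hypothetical helper and does not call the library.

```python
def epochs_run_per_call(epoch_targets, start_epoch=0):
    """Given the `epochs` argument of successive train() calls, return how
    many epochs each call actually runs when `epochs` is a cumulative target."""
    done = start_epoch
    runs = []
    for target in epoch_targets:
        runs.append(max(0, target - done))
        done = max(done, target)
    return runs


print(epochs_run_per_call([20, 20]))  # [20, 0]
print(epochs_run_per_call([20, 40]))  # [20, 20]
```

Under that assumption, a heads stage of 20 epochs followed by 20 epochs of full fine-tuning needs `epochs=20` in the first call and `epochs=40` in the second.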

至此,训练文件已经全部修改完毕,只需要在终端输入

python pretrain.py

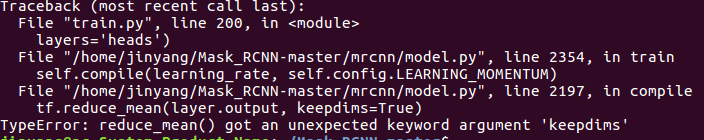

即可开始训练。如果一切顺利的话,那么你的模型已经开始训练,不过大多数情况下,第一次很难成功呀。so,这个时候你的终端可能会输出一系列的错误信息,最常见的一种如下:

出现这个错误主要是因为tensorflow版本造成的,只需要打开mrcnn文件夹下的model.py文件将keepdims都改为keep_dims即可,这个问题就迎刃而解。

tensorflow配置Mask-RCNN报错

还有一种错误

出现这个错误是因为你的mask和你的原始图片无法匹配成功造成的。有几种可能,一种是你在制作数据集时出现了问题导致两种图像文件不匹配,所以程序就找不到正确的image_id,还有一种可能就是笔者所犯的错误,就是在Dataset类下的load_mask()函数的形参中没有给image_id这个形参,只要加上就ok了。def load_mask(self, image_id):
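To rule out the first cause before training, the dataset folders can be checked for missing counterparts. The sketch below assumes the folder layout used by load_shapes() in this article (pic, cv2_mask, and labelme_json/<name>_json containing info.yaml and img.png); `find_unmatched` is a hypothetical helper name.

```python
import os


def find_unmatched(dataset_root):
    """Return (image_name, missing_path) pairs for every image in pic/
    that lacks its mask, info.yaml or img.png counterpart."""
    pic_dir = os.path.join(dataset_root, "pic")
    problems = []
    for name in sorted(os.listdir(pic_dir)):
        stem = name.split(".")[0]  # same stem rule as load_shapes()
        json_dir = os.path.join(dataset_root, "labelme_json", stem + "_json")
        expected = [
            os.path.join(dataset_root, "cv2_mask", stem + ".png"),
            os.path.join(json_dir, "info.yaml"),
            os.path.join(json_dir, "img.png"),
        ]
        for path in expected:
            if not os.path.isfile(path):
                problems.append((name, path))
    return problems


if __name__ == "__main__":
    root = "train_data"  # hypothetical location; adjust to your dataset root
    if os.path.isdir(os.path.join(root, "pic")):
        for name, path in find_unmatched(root):
            print("%s: missing %s" % (name, path))
```

An empty result means every image has all three companion files; it does not check that the mask contents are correct.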

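As for the NameError: name 'Image' is not defined reported further down in the error output, it appears because the code does not import the corresponding module. Adding

    from PIL import Image

fixes it.

I have not run into the other common errors myself, but while reading related blogs I collected a number of posts for reference.

Problems encountered when training Mask R-CNN:

Related blogs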

https://blog.csdn.net/lovebyz/article/details/80138261

https://blog.csdn.net/qq_38596190/article/details/88962794

https://blog.csdn.net/qq_34713831/article/details/85797622

https://blog.csdn.net/weixin_39970417/article/details/80494923

Complete training code: pretrain.py (version 1)

    # -*- coding: utf-8 -*-
    import os
    import sys

    # Remove the ROS Python 2.7 path, if present, so that the Python 3 cv2 is imported
    ROS_PATH = '/opt/ros/kinetic/lib/python2.7/dist-packages'
    if ROS_PATH in sys.path:
        sys.path.remove(ROS_PATH)

    import random
    import math
    import re
    import time
    import numpy as np
    import cv2
    import matplotlib
    import matplotlib.pyplot as plt
    import tensorflow as tf

    #ROOT_DIR = os.getcwd()
    ROOT_DIR = os.path.abspath("/home/***/Mask_RCNN-master/")
    sys.path.append(ROOT_DIR)

    from mrcnn.config import Config
    from mrcnn import utils
    from mrcnn import model as modellib
    from mrcnn import visualize
    import yaml
    from mrcnn.model import log
    from PIL import Image

    MODEL_DIR = os.path.join(ROOT_DIR, "logs")
    iter_num = 0
    COCO_MODEL_PATH = os.path.join(MODEL_DIR, "mask_rcnn_coco.h5")
    if not os.path.exists(COCO_MODEL_PATH):
        utils.download_trained_weights(COCO_MODEL_PATH)


    class ShapesConfig(Config):
        NAME = "shapes"
        GPU_COUNT = 1
        # Two images per GPU
        IMAGES_PER_GPU = 2
        # The first 1 is the background; the second 1 is the number of object classes to segment
        NUM_CLASSES = 1 + 1
        # Image size
        IMAGE_MIN_DIM = 960
        IMAGE_MAX_DIM = 960
        # Anchor scales adjusted to the image size
        RPN_ANCHOR_SCALES = (8*7, 16*7, 32*7, 64*7, 128*7)  # anchor side in pixels
        # Aspect ratios used when generating anchors: [0.5, 1, 2]
        RPN_ANCHOR_RATIOS = [0.5, 1, 2]
        # Anchor sampling stride: 1
        RPN_ANCHOR_STRIDE = 1
        # IoU threshold for non-maximum suppression of RPN proposals: 0.7
        RPN_NMS_THRESHOLD = 0.7
        RPN_TRAIN_ANCHORS_PER_IMAGE = 256
        TRAIN_ROIS_PER_IMAGE = 100
        # 28 steps per epoch
        STEPS_PER_EPOCH = 28
        # Maximum number of ground-truth instances used per image
        MAX_GT_INSTANCES = 100
        # Use small validation steps since the epoch is small
        VALIDATION_STEPS = 5


    config = ShapesConfig()
    config.display()


    class DrugDataset(utils.Dataset):
        # Number of instances in the mask image (the largest pixel label)
        def get_obj_index(self, image):
            n = np.max(image)
            return n

        # Parse the yaml file produced by labelme to get the label of each mask layer
        def from_yaml_get_class(self, image_id):
            info = self.image_info[image_id]
            with open(info['yaml_path']) as f:
                # PyYAML >= 5.1 warns when no Loader is passed, and PyYAML 6 requires one
                temp = yaml.load(f.read())
                labels = temp['label_names']
                del labels[0]  # drop the first entry (the background)
            return labels

        # Fill one mask channel per instance: pixel value k goes to channel k - 1
        def draw_mask(self, num_obj, mask, image, image_id):
            info = self.image_info[image_id]
            for index in range(num_obj):
                for i in range(info['width']):
                    for j in range(info['height']):
                        at_pixel = image.getpixel((i, j))
                        if at_pixel == index + 1:
                            mask[j, i, index] = 1
            return mask

        # Register the class and record path, mask_path and yaml_path for every image
        def load_shapes(self, count, img_folder, mask_folder, imglist, dataset_root_path):
            self.add_class("shapes", 1, "tank")
            for i in range(count):
                filestr = imglist[i].split(".")[0]
                mask_path = mask_folder + "/" + filestr + ".png"
                yaml_path = dataset_root_path + "labelme_json/" + filestr + "_json/info.yaml"
                # print(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
                cv_img = cv2.imread(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
                self.add_image("shapes", image_id=i, path=img_folder + "/" + imglist[i],
                               width=cv_img.shape[1], height=cv_img.shape[0],
                               mask_path=mask_path, yaml_path=yaml_path)

        # Build the instance masks and class ids for one image
        def load_mask(self, image_id):
            global iter_num
            print("image_id", image_id)
            info = self.image_info[image_id]
            count = 1  # number of objects
            img = Image.open(info['mask_path'])
            num_obj = self.get_obj_index(img)
            mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)
            mask = self.draw_mask(num_obj, mask, img, image_id)
            occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)
            # With count = 1 this range is empty, so no occlusion handling is applied
            for i in range(count - 2, -1, -1):
                mask[:, :, i] = mask[:, :, i] * occlusion
                occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))
            labels = []
            labels = self.from_yaml_get_class(image_id)
            labels_form = []
            for i in range(len(labels)):
                if labels[i].find("tank") != -1:
                    labels_form.append("tank")
            class_ids = np.array([self.class_names.index(s) for s in labels_form])
            return mask, class_ids.astype(np.int32)


    def get_ax(rows=1, cols=1, size=8):
        _, ax = plt.subplots(rows, cols, figsize=(size * cols, size * rows))
        return ax


    dataset_root_path = os.path.join(ROOT_DIR, "train_data/")
    img_folder = dataset_root_path + "pic"
    mask_folder = dataset_root_path + "cv2_mask"
    imglist = os.listdir(img_folder)
    count = len(imglist)

    # Prepare the training dataset
    dataset_train = DrugDataset()
    dataset_train.load_shapes(count, img_folder, mask_folder, imglist, dataset_root_path)
    dataset_train.prepare()

    # Validation dataset (reuses the first 10 images of the training list)
    dataset_val = DrugDataset()
    dataset_val.load_shapes(10, img_folder, mask_folder, imglist, dataset_root_path)
    dataset_val.prepare()

    model = modellib.MaskRCNN(mode="training", config=config, model_dir=MODEL_DIR)

    init_with = "coco"  # imagenet, coco, or last
    if init_with == "imagenet":
        model.load_weights(model.get_imagenet_weights(), by_name=True)
    elif init_with == "coco":
        # Skip the head layers whose shapes depend on the number of classes
        model.load_weights(COCO_MODEL_PATH, by_name=True,
                           exclude=["mrcnn_class_logits", "mrcnn_bbox_fc", "mrcnn_bbox", "mrcnn_mask"])
    elif init_with == "last":
        # Some Mask_RCNN releases return the path directly from find_last(); drop the [1] there
        model.load_weights(model.find_last()[1], by_name=True)

    # Stage 1: train only the head layers for 20 epochs
    #model.train(dataset_train, dataset_val,learning_rate=config.LEARNING_RATE,epochs=1,layers='heads')
    model.train(dataset_train, dataset_val, learning_rate=config.LEARNING_RATE, epochs=20, layers='heads')
    # Stage 2: fine-tune all layers. In the matterport implementation `epochs` is the
    # cumulative epoch count, so 40 means 20 further epochs (20 would train nothing more)
    model.train(dataset_train, dataset_val, learning_rate=config.LEARNING_RATE / 10, epochs=40, layers="all")
    #model.train(dataset_train, dataset_val,learning_rate=config.LEARNING_RATE / 10,epochs=1,layers="all")

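My Ubuntu environment has ROS installed. The ROS release I use (Kinetic, as the path below shows) only supports Python 2.7, and I source the ROS environment in my .bashrc file, whereas the model above is implemented in Python 3. As a result the program often fails to import the cv2 module. The fix is to add

    sys.path.remove('/opt/ros/kinetic/lib/python2.7/dist-packages')

before cv2 is imported, which temporarily removes the ROS Python path. If you do not have a ROS environment, ignore this issue. The error it avoids looks like this:

    ImportError: /opt/ros/kinetic/lib/python2.7/dist-packages/cv2.so: undefined symbol: PyCObject_Type

Resolving the Python path conflict with ROS on Ubuntu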
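A bare sys.path.remove() raises ValueError when the path is not in sys.path, for example on a machine without ROS. A slightly more forgiving variant filters the list instead. This is only a sketch: `strip_ros_paths` is a hypothetical helper, and the `/opt/ros/` marker assumes the default ROS install location.

```python
import sys


def strip_ros_paths(paths, marker="/opt/ros/"):
    """Return `paths` without entries that point into a ROS Python 2 tree."""
    return [p for p in paths if not (marker in p and "python2" in p)]


# Run this before `import cv2`; it is a no-op when ROS is not installed.
sys.path[:] = strip_ros_paths(sys.path)
```

Because the list is filtered rather than mutated with remove(), the same script runs unchanged with or without ROS on the machine.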

Complete training code: train.py (version 2)

    # -*- coding: utf-8 -*-
    import os
    import sys

    # Remove the ROS Python 2.7 path, if present, so that the Python 3 cv2 is imported
    ROS_PATH = '/opt/ros/kinetic/lib/python2.7/dist-packages'
    if ROS_PATH in sys.path:
        sys.path.remove(ROS_PATH)

    import random
    import math
    import re
    import time
    import numpy as np
    import cv2
    import matplotlib
    import matplotlib.pyplot as plt

    # Root directory of the project
    ROOT_DIR = os.path.abspath("/home/***/Mask_RCNN-master/")

    # Import Mask RCNN
    sys.path.append(ROOT_DIR)  # To find local version of the library
    from mrcnn.config import Config
    from mrcnn import utils
    import mrcnn.model as modellib
    from mrcnn import visualize
    import yaml
    from mrcnn.model import log
    from PIL import Image

    # Directory to save logs and trained model
    MODEL_DIR = os.path.join(ROOT_DIR, "logs")
    iter_num = 0

    # Local path to trained weights file (here in ROOT_DIR, not in logs)
    COCO_MODEL_PATH = os.path.join(ROOT_DIR, "mask_rcnn_coco.h5")
    # Download COCO trained weights from Releases if needed
    if not os.path.exists(COCO_MODEL_PATH):
        utils.download_trained_weights(COCO_MODEL_PATH)


    class ShapesConfig(Config):
        """Configuration for training on the custom single-class dataset.
        Derives from the base Config class and overrides values specific
        to this dataset.
        """
        # Give the configuration a recognizable name
        NAME = "shapes"

        # Train on 1 GPU with 1 image per GPU. Batch size is 1 (GPUs * images/GPU).
        GPU_COUNT = 1
        IMAGES_PER_GPU = 1

        # Number of classes (including background)
        NUM_CLASSES = 1 + 1  # background + 1 object class

        # Set the limits of the small side and the large side;
        # together they determine the image shape.
        IMAGE_MIN_DIM = 960
        IMAGE_MAX_DIM = 960

        # Anchor scales adjusted to the image size
        RPN_ANCHOR_SCALES = (8*7, 16*7, 32*7, 64*7, 128*7)  # anchor side in pixels

        # Reduce training ROIs per image because the images have
        # few objects. Aim to allow ROI sampling to pick 33% positive ROIs.
        TRAIN_ROIS_PER_IMAGE = 32

        # Use a small epoch since the data is simple
        STEPS_PER_EPOCH = 10

        # Use small validation steps since the epoch is small
        VALIDATION_STEPS = 5


    config = ShapesConfig()
    config.display()


    class DrugDataset(utils.Dataset):
        # Number of instances (objects) in the image
        def get_obj_index(self, image):
            n = np.max(image)
            return n

        # Parse the yaml file produced by labelme to get the label of each mask layer
        def from_yaml_get_class(self, image_id):
            info = self.image_info[image_id]
            with open(info['yaml_path']) as f:
                # PyYAML >= 5.1 warns when no Loader is passed, and PyYAML 6 requires one
                temp = yaml.load(f.read())
                labels = temp['label_names']
                del labels[0]  # drop the first entry (the background)
            return labels

        # Rewritten draw_mask: pixel value k goes to mask channel k - 1
        def draw_mask(self, num_obj, mask, image, image_id):
            info = self.image_info[image_id]
            for index in range(num_obj):
                for i in range(info['width']):
                    for j in range(info['height']):
                        at_pixel = image.getpixel((i, j))
                        if at_pixel == index + 1:
                            mask[j, i, index] = 1
            return mask

        # Rewritten load_shapes: registers your own classes (a single class, tank, here)
        # and adds path, mask_path and yaml_path to self.image_info
        def load_shapes(self, count, img_folder, mask_folder, imglist, dataset_root_path):
            """Register the first `count` images of `imglist`.
            count: number of images to register.
            Height and width are read from each image's labelme img.png.
            """
            # Add classes
            self.add_class("shapes", 1, "tank")
            for i in range(count):
                filestr = imglist[i].split(".")[0]
                # filestr = filestr.split("_")[1]
                mask_path = mask_folder + "/" + filestr + ".png"
                yaml_path = dataset_root_path + "labelme_json/" + filestr + "_json/info.yaml"
                cv_img = cv2.imread(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
                self.add_image("shapes", image_id=i, path=img_folder + "/" + imglist[i],
                               width=cv_img.shape[1], height=cv_img.shape[0],
                               mask_path=mask_path, yaml_path=yaml_path)

        # Rewritten load_mask
        def load_mask(self, image_id):
            """Generate instance masks for the given image ID."""
            global iter_num
            info = self.image_info[image_id]
            count = 1  # number of objects
            img = Image.open(info['mask_path'])
            num_obj = self.get_obj_index(img)
            mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)
            mask = self.draw_mask(num_obj, mask, img, image_id)
            occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)
            # With count = 1 this range is empty, so no occlusion handling is applied
            for i in range(count - 2, -1, -1):
                mask[:, :, i] = mask[:, :, i] * occlusion
                occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))
            labels = []
            labels = self.from_yaml_get_class(image_id)
            labels_form = []
            for i in range(len(labels)):
                if labels[i].find("tank") != -1:
                    labels_form.append("tank")
            class_ids = np.array([self.class_names.index(s) for s in labels_form])
            return mask, class_ids.astype(np.int32)


    # Basic settings
    dataset_root_path = os.path.join(ROOT_DIR, "train_data/")
    img_folder = dataset_root_path + "pic"
    mask_folder = dataset_root_path + "cv2_mask"
    imglist = os.listdir(img_folder)
    count = len(imglist)

    # Prepare the training dataset
    dataset_train = DrugDataset()
    dataset_train.load_shapes(count, img_folder, mask_folder, imglist, dataset_root_path)
    dataset_train.prepare()

    # Validation dataset (reuses the first 5 images of the training list)
    dataset_val = DrugDataset()
    dataset_val.load_shapes(5, img_folder, mask_folder, imglist, dataset_root_path)
    dataset_val.prepare()

    # Create model in training mode
    model = modellib.MaskRCNN(mode="training", config=config, model_dir=MODEL_DIR)

    # Which weights to start with?
    init_with = "coco"  # imagenet, coco, or last

    if init_with == "imagenet":
        model.load_weights(model.get_imagenet_weights(), by_name=True)
    elif init_with == "coco":
        # Load weights trained on MS COCO, but skip layers that
        # are different due to the different number of classes
        # See README for instructions to download the COCO weights
        model.load_weights(COCO_MODEL_PATH, by_name=True,
                           exclude=["mrcnn_class_logits", "mrcnn_bbox_fc",
                                    "mrcnn_bbox", "mrcnn_mask"])
    elif init_with == "last":
        # Load the last model you trained and continue training
        # (older Mask_RCNN releases return a tuple here; use find_last()[1] there)
        model.load_weights(model.find_last(), by_name=True)

    # Train the head branches
    # Passing layers="heads" freezes all layers except the head
    # layers. You can also pass a regular expression to select
    # which layers to train by name pattern.
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE,
                epochs=15,
                layers='heads')

    # Fine tune all layers
    # Passing layers="all" trains all layers. You can also
    # pass a regular expression to select which layers to
    # train by name pattern.
    # In the matterport implementation `epochs` is the cumulative epoch count,
    # so 30 means 15 further epochs (15 would train nothing more).
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE / 10,
                epochs=30,
                layers="all")

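The two versions do not differ in any essential way. Version 2 is based on the demo I used first. It produced some errors at the beginning, but after some changes both versions run. It is kept here for reference only.

I consulted a number of blogs while implementing this and have collected them here. The first four are the main ones to read; the demo in the first one in particular runs almost as-is.

Reference blog 1

Reference blog 2

Reference blog 3

Reference blog 4

Other blogs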

https://blog.csdn.net/qq_15969343/article/details/80167215

https://blog.csdn.net/weixin_42880443/article/details/93622552

https://blog.csdn.net/l297969586/article/details/79140840

https://www.cnblogs.com/roscangjie/p/10770667.html

The weights files produced by training are saved under the logs folder.
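To pick up the most recent checkpoint from that folder without browsing it by hand, something like the following could be used. It is a sketch under two assumptions: that checkpoints are .h5 files whose names start with mask_rcnn_ and sit somewhere below logs, and that the COCO weights file, which version 1 of the script also keeps in logs, should be skipped. `latest_weights` is a hypothetical helper name.

```python
import glob
import os


def latest_weights(logs_dir, pattern="mask_rcnn_*.h5", skip=("mask_rcnn_coco.h5",)):
    """Return the most recently modified checkpoint below `logs_dir`,
    or None if there is none. Files named in `skip` are ignored."""
    candidates = [
        p for p in glob.glob(os.path.join(logs_dir, "**", pattern), recursive=True)
        if os.path.basename(p) not in skip
    ]
    if not candidates:
        return None
    return max(candidates, key=os.path.getmtime)


if __name__ == "__main__":
    print(latest_weights("logs"))  # hypothetical relative path to the logs folder
```

The helper selects by modification time rather than by epoch number in the file name, so it also works when several training runs share the same logs folder.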

Use TensorBoard to view how each metric evolves during training:

tensorboard --logdir /home/***/workspace/Mask_R-CNN/cancer/logs

