FCN = Fully Convolutional Networks

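Ⅰ. What is a Fully Convolutional Network?

A fully convolutional network is built only from convolutional layers; it has no fully connected (FC) layers.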

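Ⅱ. How do we turn an image classification network into a segmentation network?

- A standard VGG network: convolution, pooling, and fully connected layers (the code is in the appendix)

The key step in going from classification to segmentation: remove the fully connected layers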

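- The difference between image classification and image segmentation

Image classification returns one set of class probabilities for the whole image

The biggest difference is that segmentation classifies every single pixel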
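To make the contrast concrete, here is a minimal NumPy sketch. The shapes and the random scores are made up for illustration: a classifier produces one score vector per image, while a segmentation network produces one score vector per pixel.

```python
import numpy as np

def softmax(x, axis):
    """Numerically stable softmax along the given axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
num_classes = 3  # hypothetical number of classes

# Classification: one score vector per image -> one label per image.
cls_logits = rng.normal(size=(2, num_classes))        # N x C
cls_probs = softmax(cls_logits, axis=1)
cls_labels = cls_probs.argmax(axis=1)                 # shape (N,)

# Segmentation: one score vector per pixel -> one label per pixel.
seg_logits = rng.normal(size=(2, num_classes, 4, 5))  # N x C x H x W
seg_probs = softmax(seg_logits, axis=1)
seg_labels = seg_probs.argmax(axis=1)                 # shape (N, H, W)

print(cls_labels.shape, seg_labels.shape)
```

In both cases the softmax runs over the class axis; only the number of positions being classified changes.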

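- How to turn an FC layer into a convolution: 1x1 convolution

1x1 convolution: the height and width of the input stay the same; only the number of channels changes

In essence, a 1x1 convolution reduces or increases the channel dimension

The best property of a fully convolutional network: it does not care how large the input image is; any input size works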
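The following NumPy sketch illustrates both points under simple assumptions (random weights, no activation; `conv1x1` is a helper written for this note, not a Paddle API). A 1x1 convolution is the same linear map applied independently at every pixel, so it changes only the channel count and accepts any spatial size.

```python
import numpy as np

def conv1x1(x, weight, bias):
    """1x1 convolution. x: (N, C_in, H, W); weight: (C_out, C_in); bias: (C_out,)."""
    return np.einsum('oc,nchw->nohw', weight, x) + bias[None, :, None, None]

rng = np.random.default_rng(0)
weight = rng.normal(size=(8, 16))   # 16 input channels -> 8 output channels
bias = rng.normal(size=8)

# The same weights work for any spatial size; only the channel count changes.
small = conv1x1(rng.normal(size=(1, 16, 7, 7)), weight, bias)
large = conv1x1(rng.normal(size=(1, 16, 20, 31)), weight, bias)
print(small.shape, large.shape)

# At a single pixel, the 1x1 convolution is exactly a fully connected layer
# applied to that pixel's channel vector.
x = rng.normal(size=(1, 16, 7, 7))
fc_at_pixel = weight @ x[0, :, 3, 4] + bias
conv_at_pixel = conv1x1(x, weight, bias)[0, :, 3, 4]
```

Because nothing in `conv1x1` depends on H or W, the fixed-input-size restriction of a flattened FC layer disappears.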

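- How to make the feature map larger:

1. Up-sampling: bilinear interpolation

- Advantage: fast

- Interpolating along x: the distances from x to x1 and x2 act as weights for averaging v(Q11) and v(Q21). Whichever of Q11 and Q21 the point R1 is closer to, the more the result leans toward that value, so the output stays close to the original image.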
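The weighting described above can be written out directly in NumPy. This is a minimal sketch for a single 2-D array that assumes corner alignment (the output corners map exactly onto the input corners, which is what `align_corners=True` means in the Paddle example that follows); `bilinear_resize` is a name chosen here for illustration.

```python
import numpy as np

def bilinear_resize(img, out_h, out_w):
    """Bilinear resize of a 2-D array with aligned corners."""
    in_h, in_w = img.shape
    # Source coordinates of every output row / column.
    ys = np.linspace(0, in_h - 1, out_h)
    xs = np.linspace(0, in_w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    # Distance to the lower neighbour is the weight of the upper neighbour.
    wy = (ys - y0)[:, None]
    wx = (xs - x0)[None, :]
    # Interpolate along x on the two neighbouring rows, then along y.
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

img = np.array([[1.0, 2.0], [3.0, 4.0]])
out = bilinear_resize(img, 4, 4)
print(out)
```

For the 2×2 input `[[1, 2], [3, 4]]`, each step to the right adds 1/3 and each step down adds 2/3, so the first row becomes 1, 1.333, 1.667, 2.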

Paddle code:

    import numpy as np
    import paddle
    import paddle.fluid as fluid
    from paddle.fluid.dygraph import to_variable


    def main():
        with fluid.dygraph.guard(fluid.CPUPlace()):
            data = np.array([[1, 2], [3, 4]]).astype(np.float32)
            # reshape array to N×C×H×W
            data = data[np.newaxis, np.newaxis, :, :]
            data = fluid.dygraph.to_variable(data)
            out = fluid.layers.interpolate(data, out_shape=(4, 4), align_corners=True)
            out = out.numpy()
            print(out.squeeze((0, 1)))


    if __name__ == '__main__':
        main()

Output:

    [[1.        1.3333333 1.6666667 2.       ]
     [1.6666666 2.        2.3333335 2.6666665]
     [2.3333333 2.6666665 3.        3.3333335]
     [3.        3.3333333 3.6666667 4.       ]]

2. Transpose Conv: transposed convolution (often called deconvolution)

Operation: pad the input, then convolve it with the kernel rotated by 180°.
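A small NumPy check of this statement, as a sketch under simplifying assumptions: one channel, stride 1, and "convolution" in the deep-learning sense (sliding window without an extra flip). The first function follows the definition of transposed convolution, where every input pixel stamps a scaled copy of the kernel onto the output; the second pads the input and slides the 180°-rotated kernel. Both function names are made up for this example.

```python
import numpy as np

def transpose_conv_scatter(x, k):
    """Definition: each input pixel adds a scaled copy of the kernel to the output."""
    h, w = x.shape
    kh, kw = k.shape
    out = np.zeros((h + kh - 1, w + kw - 1))
    for i in range(h):
        for j in range(w):
            out[i:i + kh, j:j + kw] += x[i, j] * k
    return out

def transpose_conv_pad_flip(x, k):
    """Same result: zero-pad by (kernel size - 1), then slide the rotated kernel."""
    kh, kw = k.shape
    padded = np.pad(x, ((kh - 1, kh - 1), (kw - 1, kw - 1)))
    flipped = k[::-1, ::-1]  # 180-degree rotation
    out_h = padded.shape[0] - kh + 1
    out_w = padded.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * flipped)
    return out

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 2))
k = rng.normal(size=(3, 3))
a = transpose_conv_scatter(x, k)
b = transpose_conv_pad_flip(x, k)
print(a.shape, np.allclose(a, b))
```

With a 2×2 input and a 3×3 kernel both versions produce a 4×4 output, which is how the feature map grows.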

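Extension: from convolution to transposed convolution (viewed as matrix multiplication)

- Convolution: flatten the input into a vector x; the convolution can then be written as y = Cx, where C is a sparse matrix built from the kernel

- Transpose convolution: multiply by the transposed matrix Cᵀ, which maps a vector with the output shape back to the input shape

  - Matrix transpose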
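The matrix view can be sketched in NumPy as follows, assuming a single channel, stride 1, and no padding (`conv_matrix` is a helper written for this note). The convolution of a 4×4 input with a 3×3 kernel becomes a 4×16 matrix C; multiplying by Cᵀ maps a 2×2 map back to 4×4.

```python
import numpy as np

def conv_matrix(k, in_h, in_w):
    """Build C so that C @ x.ravel() equals the valid sliding-window convolution of x with k."""
    kh, kw = k.shape
    out_h, out_w = in_h - kh + 1, in_w - kw + 1
    C = np.zeros((out_h * out_w, in_h * in_w))
    for i in range(out_h):
        for j in range(out_w):
            for a in range(kh):
                for b in range(kw):
                    C[i * out_w + j, (i + a) * in_w + (j + b)] = k[a, b]
    return C

rng = np.random.default_rng(0)
k = rng.normal(size=(3, 3))
x = rng.normal(size=(4, 4))

C = conv_matrix(k, 4, 4)                 # shape (4, 16)
y = (C @ x.ravel()).reshape(2, 2)        # convolution: 4x4 -> 2x2
x_up = (C.T @ y.ravel()).reshape(4, 4)   # transpose convolution: 2x2 -> 4x4

print(C.shape, y.shape, x_up.shape)
```

Note that Cᵀ restores the shape of the input, not its values: `x_up` is generally different from `x`, which is why "deconvolution" is a slightly misleading name.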

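3. Un-pooling

- The reverse of pooling: it needs the indices recorded during max pooling, and each max value is put back at its original position (all other positions are filled with zeros)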
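A minimal NumPy sketch of this idea for a single 2-D map, assuming non-overlapping windows; both function names are chosen for this example. The pooling step records where each maximum came from, and un-pooling writes the values back to those positions.

```python
import numpy as np

def max_pool_with_index(x, size=2):
    """Max pooling that also returns the flat index of each maximum in x."""
    h, w = x.shape
    oh, ow = h // size, w // size
    pooled = np.zeros((oh, ow))
    index = np.zeros((oh, ow), dtype=int)
    for i in range(oh):
        for j in range(ow):
            win = x[i * size:(i + 1) * size, j * size:(j + 1) * size]
            a, b = np.unravel_index(win.argmax(), win.shape)
            pooled[i, j] = win[a, b]
            index[i, j] = (i * size + a) * w + (j * size + b)
    return pooled, index

def max_unpool(pooled, index, out_shape):
    """Put each pooled value back at its recorded position; everything else is zero."""
    out = np.zeros(out_shape)
    out.flat[index.ravel()] = pooled.ravel()
    return out

x = np.arange(16, dtype=float).reshape(4, 4)
pooled, index = max_pool_with_index(x)
restored = max_unpool(pooled, index, x.shape)
print(pooled)
print(restored)
```

Only the maxima survive the round trip, so un-pooling recovers the location of strong activations but not the discarded values.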

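Ⅲ. FCN network architecture

1. Backbone: VGG

2. Implementation

- The actual FCN implementation differs somewhat from the paper and is a little more complex

- Upsampling the feature map by 32× in a single step does not give very accurate segmentation

- FCN combines feature maps from several layers; fusing more information gives better results

Specifically:

- FCN32s: the pool5 feature map is upsampled 32× directly and then passed through softmax to produce the segmentation prediction

- FCN16s: the pool5 feature map is upsampled 2× and added to the pool4 feature map, i.e. 2×pool5 + pool4

- FCN8s: building on FCN16s, (2×pool5 + pool4) is upsampled 2× and added to the pool3 feature map, i.e. 2× (2×pool5 + pool4) + pool3

3. Steps:

(1) Upsample FC7 by 2× so that it matches the size of Pool4, then add the two

(2) Upsample by 2× again so that the result matches the size of Pool3, then add again to fuse enough information

(3) Finally, upsample by 8× to the size of the original image; the result can then be used for segmentation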
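The three steps can be traced with a shape-only NumPy sketch. It makes several assumptions that differ from the real network: nearest-neighbour repetition stands in for the learned transposed convolutions, the score maps are random, and the input size is a multiple of 32 so that no cropping is needed.

```python
import numpy as np

def upsample(x, factor):
    """Nearest-neighbour upsampling of a (C, H, W) array; a stand-in for a learned transposed conv."""
    return x.repeat(factor, axis=1).repeat(factor, axis=2)

rng = np.random.default_rng(0)
num_classes, H, W = 5, 64, 96   # hypothetical class count and image size

# Per-class score maps at 1/8, 1/16 and 1/32 of the input resolution.
pool3 = rng.normal(size=(num_classes, H // 8, W // 8))
pool4 = rng.normal(size=(num_classes, H // 16, W // 16))
fc7 = rng.normal(size=(num_classes, H // 32, W // 32))

fcn32s = upsample(fc7, 32)             # 32x in one step
fuse16 = upsample(fc7, 2) + pool4      # step (1): 2 x FC7 + pool4
fcn16s = upsample(fuse16, 16)
fuse8 = upsample(fuse16, 2) + pool3    # step (2): 2 x (2 x FC7 + pool4) + pool3
fcn8s = upsample(fuse8, 8)             # step (3): final 8x upsampling

print(fcn32s.shape, fcn16s.shape, fcn8s.shape)
```

All three variants end at the input resolution; they differ only in how much fine-grained information from shallower layers is added before the final upsampling.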

4. FCN代码实现

import numpy as np

import paddle

import paddle.fluid.dygraph as fluid

from paddle.fluid.dygraph import Conv2D

from paddle.fluid.dygraph import Conv2DTranspose

from paddle.fluid.dygraph import Dropout

from paddle.fluid.dygraph import Pool2D

from paddle.fluid.dygraph.base import to_variable

from vgg import VGG16BN # load pretrained model

class FCN8s(fluid.dygraph.Layer):

def __init__(self, num_classes=59):

super(FCN8s, self).__init__() # 初始化父类

backbone = VGG16BN(pretrained=False)

self.layer1 = backbone.layer1

self.layer1[0].conv._padding = [100,100] # 官方FCN设计上的巧妙,要多加padding

self.pool1 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True) # 定义pooling层

self.layer2 = backbone.layer2

self.pool2 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True)

self.layer3 = backbone.layer3

self.pool3 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True)

self.layer4 = backbone.layer4

self.pool4 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True)

self.layer5 = backbone.layer5

self.pool5 = Pool2D(pool_size=2, pool_stride=2, ceil_mode=True)

# convolution 7×7

self.fc6 = Conv2D(512, 4096, 7, act='relu')

self.fc7 = Conv2D(4096, 4096, 1, act='relu')

self.drop6 = Dropout()

self.drop7 = Dropout()

# score是把feature map的通道数变成和分割图一样的类数

self.score = Conv2D(4096, num_classes, 1)

self.score_pool3 = Conv2D(256, num_classes, 1)

self.score_pool4 = Conv2D(512, num_classes, 1)

# 上采样(2倍、2倍、8倍)

self.up_output = Conv2DTranspose(num_channels=num_classes,

num_filters=num_classes,

filter_size=4,

stride=2,

bias_attr=False)

self.up_pool4 = Conv2DTranspose(num_channels=num_classes,

num_filters=num_classes,

filter_size=4,

stride=2,

bias_attr=False)

self.up_final = Conv2DTranspose(num_channels=num_classes,

num_filters=num_classes,

filter_size=16,

stride=8,

bias_attr=False)

def forward(self, inputs):

x = self.layer1(inputs) # 1/2

x = self.pool1(x)

x = self.layer2(x) # 1/4

x = self.pool2(x)

x = self.layer3(x) # 1/8

x = self.pool3(x)

pool3 = x

x = self.layer4(x) # 1/16

x = self.pool4(x)

pool4 = x

x = self.layer5(x) # 1/32

x = self.pool5(x)

x = self.fc6(x)

x = self.drop6(x)

x = self.fc7(x)

x = self.drop7(x)

x = self.score(x) # score是把FC7输出的feature map变成和分割图类别数一致

x = self.up_output(x) # 对应图中的 FC7 进行的2倍上采样操作

up_output = x # 保存FC上采样后的值 1/16

x = self.score_pool4(pool4) # 把pool4的feature map的通道变成和分割类别数一致

x = x[:, :, 5:5+up_output.shape[2], 5:5+up_output.shape[3]] # 相加之前做padding(实际为clop),主要目的是为了让模型的尺寸shape一样

up_pool4 = x # 保存和类别数通道一致的pool4

x = up_pool4 + up_output # FC7上采样后 + pool4,目的:融合更多的通道信息

x = self.up_pool4(x) # 再一次上采样:2 × (2 × FC7 + pool4)

up_pool4 = x # 保存 2 × (2 × FC7 + pool4)

x = self.score_pool3(pool3) # 把pool3的feature map的通道变成和分割类别数一致

x = x[:, :, 9:9+up_pool4.shape[2], 9:9+up_pool4.shape[3]] # padding,其中5、9、31是根据论文的设置的,0也可以

up_pool3 = x # 1/8 # 保存和类别数通道一致的pool3

x = up_pool3 + up_pool4 # pool3 + 2 × (2 × FC7 + pool4)

x = self.up_final(x) # 最终的8倍上采样:8 × (pool3 + 2 × (2 × FC7 + pool4))

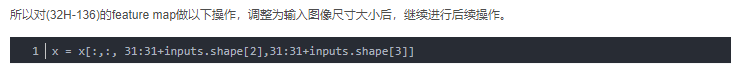

x = x[:, :, 31:31+inputs.shape[2], 31:31+inputs.shape[3]]

return x

def main():

with fluid.dygraph.guard():

x_data = np.random.rand(2, 3, 512, 512).astype(np.float32)

x = to_variable(x_data)

model = FCN8s(num_classes=59)

model.eval()

pred = model(x)

print(pred.shape)

if __name__ == '__main__':

main()

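Code details

Source: https://blog.csdn.net/qq_40680731/article/details/109270744

- Conv1 uses a padding of 100×100

- The crop start offsets are (5, 9, 31)

After the 8× upsampling (32H-136), the output is cropped back to the original image size (32H-200)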
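To see why the crops are needed and why these offsets fit, the spatial sizes in the FCN8s listing above can be traced with plain arithmetic. This is a sketch under stated assumptions: the remaining 3×3 convolutions keep the size, each pooling layer with `ceil_mode=True` maps s to ceil(s / 2), and a transposed convolution without padding maps s to (s - 1) × stride + filter_size. `fcn8s_sizes` is a helper written for this note.

```python
import math

def fcn8s_sizes(h):
    """Trace one spatial dimension through the FCN8s listing for an input of size h."""
    s = h + 2 * 100 - 3 + 1          # first 3x3 conv with padding 100
    pools = []
    for _ in range(5):               # five 2x2 poolings with ceil_mode=True
        s = math.ceil(s / 2)
        pools.append(s)
    pool3, pool4, pool5 = pools[2], pools[3], pools[4]
    fc = pool5 - 7 + 1               # fc6 is a 7x7 conv without padding
    up2 = (fc - 1) * 2 + 4           # up_output: filter 4, stride 2
    up4 = (up2 - 1) * 2 + 4          # up_pool4: filter 4, stride 2
    up_final = (up4 - 1) * 8 + 16    # up_final: filter 16, stride 8
    return {'pool3': pool3, 'pool4': pool4, 'pool5': pool5, 'fc': fc,
            'up2': up2, 'up4': up4, 'up_final': up_final}

sizes = fcn8s_sizes(512)
print(sizes)

# Each crop window must fit inside the tensor it is cut from.
fits_pool4 = 5 + sizes['up2'] <= sizes['pool4']
fits_pool3 = 9 + sizes['up4'] <= sizes['pool3']
fits_final = 31 + 512 <= sizes['up_final']
print(fits_pool4, fits_pool3, fits_final)
```

For a 512×512 input this gives pool4 = 45 against an upsampled FC7 of 36, and pool3 = 89 against 74, so the skip tensors are larger than the upsampled ones and have to be cropped before the additions; the final 600-pixel map is cropped to 512.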

5. Pros and cons of FCN

Ⅳ. Appendix: VGG architecture

VGG code:

    import numpy as np
    import paddle.fluid as fluid
    from paddle.fluid.dygraph import to_variable
    from paddle.fluid.dygraph import Conv2D
    from paddle.fluid.dygraph import Dropout
    from paddle.fluid.dygraph import BatchNorm
    from paddle.fluid.dygraph import Pool2D
    from paddle.fluid.dygraph import Linear

    model_path = {
        'vgg16': './vgg16',
        'vgg16bn': './vgg16_bn',
        'vgg19': './vgg19',
        'vgg19bn': './vgg19_bn'
    }


    # VGG16 can also use 1×1 convolutions; all convolutions here are 3×3
    class ConvBNLayer(fluid.dygraph.Layer):
        def __init__(self,
                     num_channels,
                     num_filters,
                     filter_size=3,
                     stride=1,
                     groups=1,
                     use_bn=True,
                     act='relu',
                     name=None):
            super(ConvBNLayer, self).__init__(name)
            self.use_bn = use_bn
            if use_bn:
                # the activation is applied after batch norm
                self.conv = Conv2D(num_channels=num_channels,
                                   num_filters=num_filters,
                                   filter_size=filter_size,
                                   stride=stride,
                                   padding=(filter_size-1)//2,
                                   groups=groups,
                                   act=None,
                                   bias_attr=None)
                self.bn = BatchNorm(num_filters, act=act)
            else:
                self.conv = Conv2D(num_channels=num_channels,
                                   num_filters=num_filters,
                                   filter_size=filter_size,
                                   stride=stride,
                                   padding=(filter_size-1)//2,
                                   groups=groups,
                                   act=act,
                                   bias_attr=None)

        def forward(self, inputs):
            y = self.conv(inputs)
            if self.use_bn:
                y = self.bn(y)
            return y


    class VGG(fluid.dygraph.Layer):
        def __init__(self, layers=16, use_bn=False, num_classes=1000):
            super(VGG, self).__init__()
            self.layers = layers
            self.use_bn = use_bn
            supported_layers = [16, 19]
            assert layers in supported_layers

            if layers == 16:
                depth = [2, 2, 3, 3, 3]
            elif layers == 19:
                depth = [2, 2, 4, 4, 4]

            # input channels of each stage
            num_channels = [3, 64, 128, 256, 512]
            # output channels of each stage
            num_filters = [64, 128, 256, 512, 512]

            self.layer1 = fluid.dygraph.Sequential(*self.make_layer(num_channels[0], num_filters[0], depth[0], use_bn, name='layer1'))
            self.layer2 = fluid.dygraph.Sequential(*self.make_layer(num_channels[1], num_filters[1], depth[1], use_bn, name='layer2'))
            self.layer3 = fluid.dygraph.Sequential(*self.make_layer(num_channels[2], num_filters[2], depth[2], use_bn, name='layer3'))
            self.layer4 = fluid.dygraph.Sequential(*self.make_layer(num_channels[3], num_filters[3], depth[3], use_bn, name='layer4'))
            self.layer5 = fluid.dygraph.Sequential(*self.make_layer(num_channels[4], num_filters[4], depth[4], use_bn, name='layer5'))

            self.classifier = fluid.dygraph.Sequential(
                Linear(input_dim=512*7*7, output_dim=4096, act='relu'),
                Dropout(),
                Linear(input_dim=4096, output_dim=4096, act='relu'),
                Dropout(),
                Linear(input_dim=4096, output_dim=num_classes)
            )
            self.out_dim = 512 * 7 * 7

        def make_layer(self, num_channels, num_filters, depth, use_bn, name=None):
            # NOTE: this helper is missing from the original listing; it is
            # reconstructed here from how it is called (an assumption):
            # one stage = `depth` stacked 3×3 ConvBNLayer blocks.
            blocks = [ConvBNLayer(num_channels, num_filters, use_bn=use_bn, name=f'{name}.0')]
            for i in range(1, depth):
                blocks.append(ConvBNLayer(num_filters, num_filters, use_bn=use_bn, name=f'{name}.{i}'))
            return blocks

        def forward(self, inputs):
            x = self.layer1(inputs)
            x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)
            x = self.layer2(x)
            x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)
            x = self.layer3(x)
            x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)
            x = self.layer4(x)
            x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)
            x = self.layer5(x)
            x = fluid.layers.pool2d(x, pool_size=2, pool_stride=2)
            x = fluid.layers.adaptive_pool2d(x, pool_size=(7, 7), pool_type='avg')
            # flatten
            x = fluid.layers.reshape(x, shape=[-1, self.out_dim])
            x = self.classifier(x)
            return x


    # def VGG16(pretrained=False):
    #     model = VGG(layers=16)
    #     if pretrained:
    #         model_dict, _ = fluid.load_dygraph(model_path['vgg16'])
    #         model.set_dict(model_dict)
    #     return model

    def VGG16BN(pretrained=False):
        model = VGG(layers=16, use_bn=True)
        if pretrained:
            model_dict, _ = fluid.load_dygraph(model_path['vgg16bn'])
            model.set_dict(model_dict)
        return model

    # def VGG19(pretrained=False):
    #     model = VGG(layers=19)
    #     if pretrained:
    #         model_dict, _ = fluid.load_dygraph(model_path['vgg19'])
    #         model.set_dict(model_dict)
    #     return model

    # def VGG19BN(pretrained=False):
    #     model = VGG(layers=19, use_bn=True)
    #     if pretrained:
    #         model_dict, _ = fluid.load_dygraph(model_path['vgg19bn'])
    #         model.set_dict(model_dict)
    #     return model


    def main():
        with fluid.dygraph.guard():
            # VGG expects a 224×224 input
            x_data = np.random.rand(2, 3, 224, 224).astype(np.float32)
            x = to_variable(x_data)
            model = VGG16BN()
            model.eval()
            pred = model(x)
            print('vgg16bn:pred.shape = ', pred.shape)


    if __name__ == '__main__':
        main()
