Introduction

Handwritten digit recognition on the MNIST dataset with an LSTM model implemented in PyTorch.

Usage

The code is hosted on GitHub: https://github.com/XavierJiezou/pytorch-lstm-mnist

git clone https://github.com/XavierJiezou/pytorch-lstm-mnist.git

cd pytorch-lstm-mnist

pip install -r requirements.txt

python ./code/train_on_mnist.py

Note: extract the mnist.7z archive before training.

Configuration

You can also adjust the settings in the configuration file.

data:
  data_root: ./data/mnist # Path to data
  train_ratio: 0.8 # Ratio of training set
  val_ratio: 0.1 # Ratio of validation set
  batch_size: 64 # How many samples per batch to load

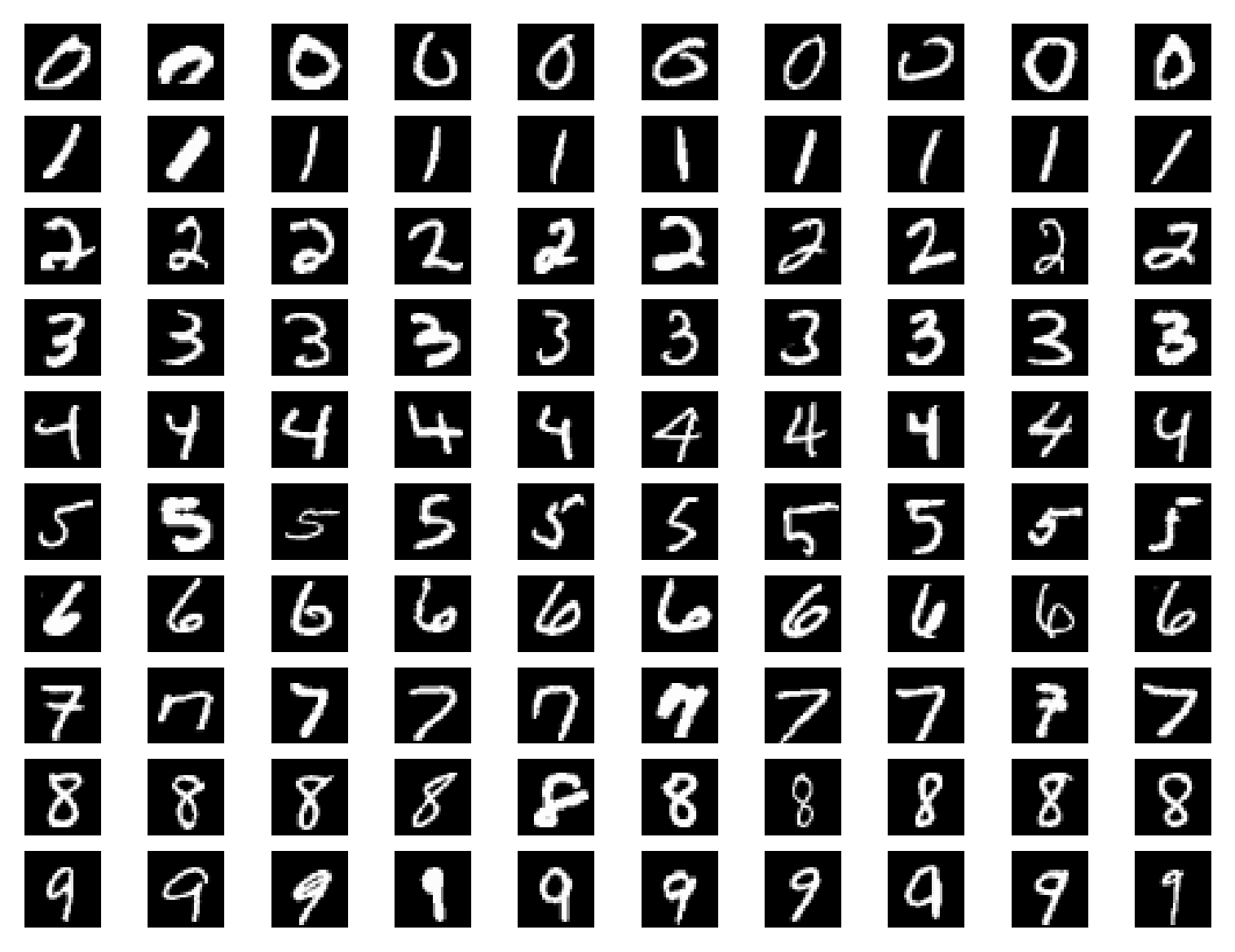

  visualize_data_save: ./image/training_data_mnist.png # Path to save the training data visualization

model:
  input_size: 28 # Number of expected features in the input
  hidden_size: 64 # Number of features in the hidden state
  num_layers: 1 # Number of recurrent layers
  output_size: 10 # Number of expected features in the output

train:
  num_epochs: 100 # Number of training epochs
  sequence_length: 28 # Length of the input sequence
  learning_rate: 0.001 # Initial learning rate
  device: cuda:0 # Device on which a `torch.Tensor` is or will be allocated
  save_path: ./checkpoint/mnist.pth # Path to save the trained model

log:
  sink: ./log/mnist.log # Path to save the logging file
  level: INFO # Logging level: DEBUG | INFO | WARNING | ERROR | SUCCESS | CRITICAL
  format: '{message}' # Logging output format. Example: '{time:YYYY-MM-DD at HH:mm:ss} {level} {message}'

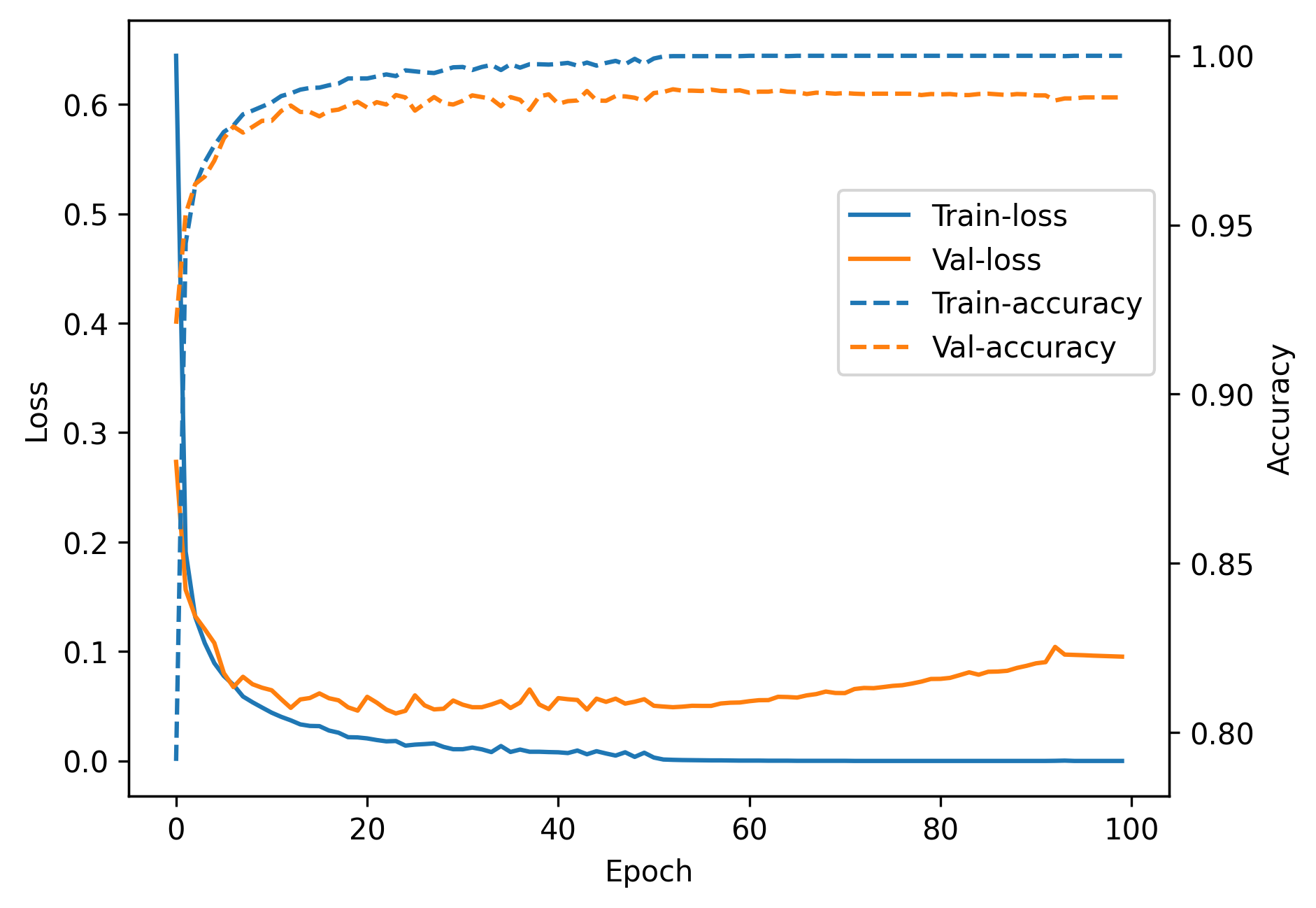

  visualize_log_save: ./image/training_log_mnist.png # Path to save the visualization result
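As a rough illustration of how `train_ratio` and `val_ratio` might translate into subset sizes, here is a minimal sketch. It assumes that whatever is left after the training and validation shares becomes the test set; the configuration does not say so explicitly. The function name `split_sizes` and the total of 70000 samples are hypothetical and are not taken from the repository.

```python
from typing import Tuple


def split_sizes(num_samples: int, train_ratio: float = 0.8,
                val_ratio: float = 0.1) -> Tuple[int, int, int]:
    """Return (train, val, test) sizes for a dataset of num_samples items.

    Assumption: the test set receives the remainder after the training
    and validation shares are taken.
    """
    if train_ratio < 0 or val_ratio < 0 or train_ratio + val_ratio > 1:
        raise ValueError("ratios must be non-negative and sum to at most 1")
    n_train = round(num_samples * train_ratio)
    n_val = round(num_samples * val_ratio)
    n_test = num_samples - n_train - n_val  # remainder goes to the test set
    return n_train, n_val, n_test


# Hypothetical total: 70000 samples split with the ratios from the config.
sizes = split_sizes(70000, train_ratio=0.8, val_ratio=0.1)
print(sizes)
```

Computing the test size as a remainder keeps the three subsets summing to the total even when the ratios do not divide the sample count evenly.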

Data

Results

| Dataset | Sequence length | Input size | Accuracy |
|---|---|---|---|
| MNIST | 28 | 28 | 0.9892 |
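A sequence length of 28 with an input size of 28 is consistent with reading each 28×28 image row by row, one row per time step. The sketch below shows one way such a model could look with the `model` settings from the configuration. The class name `LSTMClassifier`, the use of the last time step for classification, and the dummy batch are assumptions made for illustration; the repository's own model may be organised differently.

```python
import torch
from torch import nn


class LSTMClassifier(nn.Module):
    """Hypothetical LSTM classifier; not the repository's actual class."""

    def __init__(self, input_size=28, hidden_size=64, num_layers=1, output_size=10):
        super().__init__()
        # batch_first=True means inputs are (batch, sequence_length, input_size)
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        out, _ = self.lstm(x)          # out: (batch, sequence_length, hidden_size)
        return self.fc(out[:, -1, :])  # classify from the last time step


model = LSTMClassifier()
images = torch.rand(64, 1, 28, 28)   # dummy batch shaped like MNIST images
sequences = images.view(-1, 28, 28)  # drop the channel axis: 28 rows of 28 pixels
logits = model(sequences)            # one score per digit class
print(logits.shape)
```

Using only the final hidden output is a common choice for sequence classification; pooling over all time steps would be an equally plausible alternative.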

EPOCH: 001/100 LR: 0.0010 TRAIN-LOSS: 0.6444 TRAIN-ACC: 0.7916 VAL-LOSS: 0.2733 VAL-ACC: 0.9208 EPOCH-TIME: 0m10s

EPOCH: 002/100 LR: 0.0010 TRAIN-LOSS: 0.1911 TRAIN-ACC: 0.9446 VAL-LOSS: 0.1564 VAL-ACC: 0.9533 EPOCH-TIME: 0m10s

EPOCH: 003/100 LR: 0.0010 TRAIN-LOSS: 0.1317 TRAIN-ACC: 0.9617 VAL-LOSS: 0.1323 VAL-ACC: 0.9621 EPOCH-TIME: 0m9s

EPOCH: 004/100 LR: 0.0010 TRAIN-LOSS: 0.1077 TRAIN-ACC: 0.9686 VAL-LOSS: 0.1204 VAL-ACC: 0.9643 EPOCH-TIME: 0m9s

EPOCH: 005/100 LR: 0.0010 TRAIN-LOSS: 0.0894 TRAIN-ACC: 0.9735 VAL-LOSS: 0.1078 VAL-ACC: 0.9690 EPOCH-TIME: 0m9s

EPOCH: 006/100 LR: 0.0010 TRAIN-LOSS: 0.0776 TRAIN-ACC: 0.9775 VAL-LOSS: 0.0802 VAL-ACC: 0.9756 EPOCH-TIME: 0m9s

EPOCH: 007/100 LR: 0.0010 TRAIN-LOSS: 0.0695 TRAIN-ACC: 0.9794 VAL-LOSS: 0.0675 VAL-ACC: 0.9791 EPOCH-TIME: 0m9s

EPOCH: 008/100 LR: 0.0010 TRAIN-LOSS: 0.0589 TRAIN-ACC: 0.9827 VAL-LOSS: 0.0769 VAL-ACC: 0.9773 EPOCH-TIME: 0m9s

EPOCH: 009/100 LR: 0.0010 TRAIN-LOSS: 0.0536 TRAIN-ACC: 0.9838 VAL-LOSS: 0.0701 VAL-ACC: 0.9790 EPOCH-TIME: 0m9s

EPOCH: 010/100 LR: 0.0010 TRAIN-LOSS: 0.0488 TRAIN-ACC: 0.9850 VAL-LOSS: 0.0669 VAL-ACC: 0.9808 EPOCH-TIME: 0m10s

EPOCH: 011/100 LR: 0.0010 TRAIN-LOSS: 0.0441 TRAIN-ACC: 0.9862 VAL-LOSS: 0.0646 VAL-ACC: 0.9808 EPOCH-TIME: 0m10s

EPOCH: 012/100 LR: 0.0010 TRAIN-LOSS: 0.0403 TRAIN-ACC: 0.9881 VAL-LOSS: 0.0564 VAL-ACC: 0.9837 EPOCH-TIME: 0m10s

EPOCH: 013/100 LR: 0.0010 TRAIN-LOSS: 0.0371 TRAIN-ACC: 0.9889 VAL-LOSS: 0.0485 VAL-ACC: 0.9853 EPOCH-TIME: 0m9s

EPOCH: 014/100 LR: 0.0010 TRAIN-LOSS: 0.0334 TRAIN-ACC: 0.9900 VAL-LOSS: 0.0562 VAL-ACC: 0.9834 EPOCH-TIME: 0m10s

EPOCH: 015/100 LR: 0.0010 TRAIN-LOSS: 0.0320 TRAIN-ACC: 0.9905 VAL-LOSS: 0.0575 VAL-ACC: 0.9834 EPOCH-TIME: 0m9s

EPOCH: 016/100 LR: 0.0010 TRAIN-LOSS: 0.0318 TRAIN-ACC: 0.9906 VAL-LOSS: 0.0617 VAL-ACC: 0.9821 EPOCH-TIME: 0m9s

EPOCH: 017/100 LR: 0.0010 TRAIN-LOSS: 0.0278 TRAIN-ACC: 0.9913 VAL-LOSS: 0.0573 VAL-ACC: 0.9837 EPOCH-TIME: 0m10s

EPOCH: 018/100 LR: 0.0010 TRAIN-LOSS: 0.0258 TRAIN-ACC: 0.9918 VAL-LOSS: 0.0554 VAL-ACC: 0.9841 EPOCH-TIME: 0m9s

EPOCH: 019/100 LR: 0.0010 TRAIN-LOSS: 0.0217 TRAIN-ACC: 0.9933 VAL-LOSS: 0.0490 VAL-ACC: 0.9853 EPOCH-TIME: 0m10s

EPOCH: 020/100 LR: 0.0010 TRAIN-LOSS: 0.0215 TRAIN-ACC: 0.9933 VAL-LOSS: 0.0460 VAL-ACC: 0.9864 EPOCH-TIME: 0m10s

EPOCH: 021/100 LR: 0.0010 TRAIN-LOSS: 0.0206 TRAIN-ACC: 0.9933 VAL-LOSS: 0.0586 VAL-ACC: 0.9847 EPOCH-TIME: 0m10s

EPOCH: 022/100 LR: 0.0010 TRAIN-LOSS: 0.0191 TRAIN-ACC: 0.9939 VAL-LOSS: 0.0533 VAL-ACC: 0.9863 EPOCH-TIME: 0m10s

EPOCH: 023/100 LR: 0.0010 TRAIN-LOSS: 0.0179 TRAIN-ACC: 0.9945 VAL-LOSS: 0.0470 VAL-ACC: 0.9856 EPOCH-TIME: 0m10s

EPOCH: 024/100 LR: 0.0010 TRAIN-LOSS: 0.0182 TRAIN-ACC: 0.9940 VAL-LOSS: 0.0434 VAL-ACC: 0.9884 EPOCH-TIME: 0m10s

EPOCH: 025/100 LR: 0.0010 TRAIN-LOSS: 0.0140 TRAIN-ACC: 0.9957 VAL-LOSS: 0.0458 VAL-ACC: 0.9877 EPOCH-TIME: 0m10s

EPOCH: 026/100 LR: 0.0010 TRAIN-LOSS: 0.0149 TRAIN-ACC: 0.9954 VAL-LOSS: 0.0599 VAL-ACC: 0.9838 EPOCH-TIME: 0m10s

EPOCH: 027/100 LR: 0.0010 TRAIN-LOSS: 0.0154 TRAIN-ACC: 0.9951 VAL-LOSS: 0.0508 VAL-ACC: 0.9858 EPOCH-TIME: 0m10s

EPOCH: 028/100 LR: 0.0010 TRAIN-LOSS: 0.0160 TRAIN-ACC: 0.9949 VAL-LOSS: 0.0471 VAL-ACC: 0.9878 EPOCH-TIME: 0m10s

EPOCH: 029/100 LR: 0.0010 TRAIN-LOSS: 0.0128 TRAIN-ACC: 0.9957 VAL-LOSS: 0.0477 VAL-ACC: 0.9860 EPOCH-TIME: 0m10s

EPOCH: 030/100 LR: 0.0010 TRAIN-LOSS: 0.0107 TRAIN-ACC: 0.9966 VAL-LOSS: 0.0552 VAL-ACC: 0.9856 EPOCH-TIME: 0m10s

EPOCH: 031/100 LR: 0.0010 TRAIN-LOSS: 0.0107 TRAIN-ACC: 0.9967 VAL-LOSS: 0.0514 VAL-ACC: 0.9867 EPOCH-TIME: 0m9s

EPOCH: 032/100 LR: 0.0010 TRAIN-LOSS: 0.0122 TRAIN-ACC: 0.9958 VAL-LOSS: 0.0491 VAL-ACC: 0.9883 EPOCH-TIME: 0m10s

EPOCH: 033/100 LR: 0.0010 TRAIN-LOSS: 0.0106 TRAIN-ACC: 0.9967 VAL-LOSS: 0.0491 VAL-ACC: 0.9878 EPOCH-TIME: 0m9s

EPOCH: 034/100 LR: 0.0010 TRAIN-LOSS: 0.0081 TRAIN-ACC: 0.9973 VAL-LOSS: 0.0516 VAL-ACC: 0.9873 EPOCH-TIME: 0m10s

EPOCH: 035/100 LR: 0.0010 TRAIN-LOSS: 0.0136 TRAIN-ACC: 0.9958 VAL-LOSS: 0.0547 VAL-ACC: 0.9851 EPOCH-TIME: 0m9s

EPOCH: 036/100 LR: 0.0010 TRAIN-LOSS: 0.0082 TRAIN-ACC: 0.9975 VAL-LOSS: 0.0484 VAL-ACC: 0.9878 EPOCH-TIME: 0m10s

EPOCH: 037/100 LR: 0.0010 TRAIN-LOSS: 0.0104 TRAIN-ACC: 0.9965 VAL-LOSS: 0.0533 VAL-ACC: 0.9870 EPOCH-TIME: 0m9s

EPOCH: 038/100 LR: 0.0010 TRAIN-LOSS: 0.0084 TRAIN-ACC: 0.9975 VAL-LOSS: 0.0653 VAL-ACC: 0.9840 EPOCH-TIME: 0m10s

EPOCH: 039/100 LR: 0.0010 TRAIN-LOSS: 0.0084 TRAIN-ACC: 0.9975 VAL-LOSS: 0.0516 VAL-ACC: 0.9880 EPOCH-TIME: 0m9s

EPOCH: 040/100 LR: 0.0010 TRAIN-LOSS: 0.0081 TRAIN-ACC: 0.9974 VAL-LOSS: 0.0474 VAL-ACC: 0.9886 EPOCH-TIME: 0m9s

EPOCH: 041/100 LR: 0.0010 TRAIN-LOSS: 0.0079 TRAIN-ACC: 0.9976 VAL-LOSS: 0.0574 VAL-ACC: 0.9858 EPOCH-TIME: 0m9s

EPOCH: 042/100 LR: 0.0010 TRAIN-LOSS: 0.0072 TRAIN-ACC: 0.9979 VAL-LOSS: 0.0564 VAL-ACC: 0.9866 EPOCH-TIME: 0m9s

EPOCH: 043/100 LR: 0.0010 TRAIN-LOSS: 0.0095 TRAIN-ACC: 0.9971 VAL-LOSS: 0.0557 VAL-ACC: 0.9868 EPOCH-TIME: 0m10s

EPOCH: 044/100 LR: 0.0010 TRAIN-LOSS: 0.0061 TRAIN-ACC: 0.9980 VAL-LOSS: 0.0469 VAL-ACC: 0.9896 EPOCH-TIME: 0m10s

EPOCH: 045/100 LR: 0.0010 TRAIN-LOSS: 0.0089 TRAIN-ACC: 0.9971 VAL-LOSS: 0.0569 VAL-ACC: 0.9868 EPOCH-TIME: 0m10s

EPOCH: 046/100 LR: 0.0010 TRAIN-LOSS: 0.0068 TRAIN-ACC: 0.9979 VAL-LOSS: 0.0540 VAL-ACC: 0.9867 EPOCH-TIME: 0m9s

EPOCH: 047/100 LR: 0.0010 TRAIN-LOSS: 0.0049 TRAIN-ACC: 0.9985 VAL-LOSS: 0.0569 VAL-ACC: 0.9881 EPOCH-TIME: 0m10s

EPOCH: 048/100 LR: 0.0010 TRAIN-LOSS: 0.0079 TRAIN-ACC: 0.9975 VAL-LOSS: 0.0524 VAL-ACC: 0.9880 EPOCH-TIME: 0m9s

EPOCH: 049/100 LR: 0.0010 TRAIN-LOSS: 0.0037 TRAIN-ACC: 0.9991 VAL-LOSS: 0.0541 VAL-ACC: 0.9876 EPOCH-TIME: 0m9s

EPOCH: 050/100 LR: 0.0010 TRAIN-LOSS: 0.0075 TRAIN-ACC: 0.9976 VAL-LOSS: 0.0564 VAL-ACC: 0.9866 EPOCH-TIME: 0m9s

EPOCH: 051/100 LR: 0.0001 TRAIN-LOSS: 0.0031 TRAIN-ACC: 0.9992 VAL-LOSS: 0.0504 VAL-ACC: 0.9890 EPOCH-TIME: 0m9s

EPOCH: 052/100 LR: 0.0001 TRAIN-LOSS: 0.0013 TRAIN-ACC: 0.9998 VAL-LOSS: 0.0497 VAL-ACC: 0.9893 EPOCH-TIME: 0m10s

EPOCH: 053/100 LR: 0.0001 TRAIN-LOSS: 0.0010 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0491 VAL-ACC: 0.9901 EPOCH-TIME: 0m10s

EPOCH: 054/100 LR: 0.0001 TRAIN-LOSS: 0.0008 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0496 VAL-ACC: 0.9897 EPOCH-TIME: 0m9s

EPOCH: 055/100 LR: 0.0001 TRAIN-LOSS: 0.0007 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0504 VAL-ACC: 0.9897 EPOCH-TIME: 0m9s

EPOCH: 056/100 LR: 0.0001 TRAIN-LOSS: 0.0006 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0503 VAL-ACC: 0.9896 EPOCH-TIME: 0m10s

EPOCH: 057/100 LR: 0.0001 TRAIN-LOSS: 0.0005 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0503 VAL-ACC: 0.9900 EPOCH-TIME: 0m10s

EPOCH: 058/100 LR: 0.0001 TRAIN-LOSS: 0.0005 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0525 VAL-ACC: 0.9896 EPOCH-TIME: 0m10s

EPOCH: 059/100 LR: 0.0001 TRAIN-LOSS: 0.0004 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0532 VAL-ACC: 0.9896 EPOCH-TIME: 0m10s

EPOCH: 060/100 LR: 0.0001 TRAIN-LOSS: 0.0003 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0534 VAL-ACC: 0.9898 EPOCH-TIME: 0m10s

EPOCH: 061/100 LR: 0.0001 TRAIN-LOSS: 0.0003 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0546 VAL-ACC: 0.9891 EPOCH-TIME: 0m10s

EPOCH: 062/100 LR: 0.0001 TRAIN-LOSS: 0.0003 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0555 VAL-ACC: 0.9894 EPOCH-TIME: 0m10s

EPOCH: 063/100 LR: 0.0001 TRAIN-LOSS: 0.0002 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0556 VAL-ACC: 0.9894 EPOCH-TIME: 0m10s

EPOCH: 064/100 LR: 0.0001 TRAIN-LOSS: 0.0002 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0586 VAL-ACC: 0.9898 EPOCH-TIME: 0m9s

EPOCH: 065/100 LR: 0.0001 TRAIN-LOSS: 0.0002 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0584 VAL-ACC: 0.9894 EPOCH-TIME: 0m10s

EPOCH: 066/100 LR: 0.0001 TRAIN-LOSS: 0.0001 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0579 VAL-ACC: 0.9893 EPOCH-TIME: 0m9s

EPOCH: 067/100 LR: 0.0001 TRAIN-LOSS: 0.0001 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0599 VAL-ACC: 0.9887 EPOCH-TIME: 0m9s

EPOCH: 068/100 LR: 0.0001 TRAIN-LOSS: 0.0001 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0611 VAL-ACC: 0.9891 EPOCH-TIME: 0m10s

EPOCH: 069/100 LR: 0.0001 TRAIN-LOSS: 0.0001 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0634 VAL-ACC: 0.9890 EPOCH-TIME: 0m9s

EPOCH: 070/100 LR: 0.0001 TRAIN-LOSS: 0.0001 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0621 VAL-ACC: 0.9888 EPOCH-TIME: 0m9s

EPOCH: 071/100 LR: 0.0001 TRAIN-LOSS: 0.0001 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0620 VAL-ACC: 0.9890 EPOCH-TIME: 0m10s

EPOCH: 072/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0657 VAL-ACC: 0.9888 EPOCH-TIME: 0m10s

EPOCH: 073/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0667 VAL-ACC: 0.9887 EPOCH-TIME: 0m10s

EPOCH: 074/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0665 VAL-ACC: 0.9888 EPOCH-TIME: 0m10s

EPOCH: 075/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0675 VAL-ACC: 0.9888 EPOCH-TIME: 0m10s

EPOCH: 076/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0686 VAL-ACC: 0.9888 EPOCH-TIME: 0m10s

EPOCH: 077/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0692 VAL-ACC: 0.9888 EPOCH-TIME: 0m9s

EPOCH: 078/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0707 VAL-ACC: 0.9888 EPOCH-TIME: 0m10s

EPOCH: 079/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0725 VAL-ACC: 0.9884 EPOCH-TIME: 0m10s

EPOCH: 080/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0749 VAL-ACC: 0.9887 EPOCH-TIME: 0m10s

EPOCH: 081/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0750 VAL-ACC: 0.9886 EPOCH-TIME: 0m10s

EPOCH: 082/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0759 VAL-ACC: 0.9887 EPOCH-TIME: 0m9s

EPOCH: 083/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0784 VAL-ACC: 0.9884 EPOCH-TIME: 0m10s

EPOCH: 084/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0810 VAL-ACC: 0.9884 EPOCH-TIME: 0m10s

EPOCH: 085/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0788 VAL-ACC: 0.9887 EPOCH-TIME: 0m10s

EPOCH: 086/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0815 VAL-ACC: 0.9888 EPOCH-TIME: 0m10s

EPOCH: 087/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0817 VAL-ACC: 0.9886 EPOCH-TIME: 0m10s

EPOCH: 088/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0824 VAL-ACC: 0.9884 EPOCH-TIME: 0m10s

EPOCH: 089/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0850 VAL-ACC: 0.9887 EPOCH-TIME: 0m9s

EPOCH: 090/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0869 VAL-ACC: 0.9886 EPOCH-TIME: 0m10s

EPOCH: 091/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0892 VAL-ACC: 0.9883 EPOCH-TIME: 0m9s

EPOCH: 092/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0903 VAL-ACC: 0.9883 EPOCH-TIME: 0m10s

EPOCH: 093/100 LR: 0.0001 TRAIN-LOSS: 0.0001 TRAIN-ACC: 1.0000 VAL-LOSS: 0.1042 VAL-ACC: 0.9868 EPOCH-TIME: 0m9s

EPOCH: 094/100 LR: 0.0001 TRAIN-LOSS: 0.0003 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0972 VAL-ACC: 0.9874 EPOCH-TIME: 0m10s

EPOCH: 095/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0969 VAL-ACC: 0.9874 EPOCH-TIME: 0m9s

EPOCH: 096/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0966 VAL-ACC: 0.9877 EPOCH-TIME: 0m9s

EPOCH: 097/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0962 VAL-ACC: 0.9877 EPOCH-TIME: 0m9s

EPOCH: 098/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0959 VAL-ACC: 0.9877 EPOCH-TIME: 0m9s

EPOCH: 099/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0956 VAL-ACC: 0.9877 EPOCH-TIME: 0m9s

EPOCH: 100/100 LR: 0.0001 TRAIN-LOSS: 0.0000 TRAIN-ACC: 1.0000 VAL-LOSS: 0.0953 VAL-ACC: 0.9877 EPOCH-TIME: 0m10s
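Because the log uses a fixed line layout, it can be read back with a regular expression, for example to find the epoch with the highest validation accuracy. The following is a minimal sketch that assumes the lines look exactly like the ones above; the helper names `parse_log` and `best_epoch` are hypothetical, and the sample text reuses three lines from the log.

```python
import re

# Pattern for one log line in the layout shown above.
LINE_RE = re.compile(
    r"EPOCH: (?P<epoch>\d+)/\d+ LR: (?P<lr>[\d.]+) "
    r"TRAIN-LOSS: (?P<train_loss>[\d.]+) TRAIN-ACC: (?P<train_acc>[\d.]+) "
    r"VAL-LOSS: (?P<val_loss>[\d.]+) VAL-ACC: (?P<val_acc>[\d.]+)"
)


def parse_log(text):
    """Turn log text into a list of dicts, one per epoch line."""
    records = []
    for match in LINE_RE.finditer(text):
        fields = match.groupdict()
        record = {name: float(value) for name, value in fields.items()}
        record["epoch"] = int(fields["epoch"])
        records.append(record)
    return records


def best_epoch(records):
    """Return the record with the highest validation accuracy."""
    return max(records, key=lambda record: record["val_acc"])


sample = """
EPOCH: 050/100 LR: 0.0010 TRAIN-LOSS: 0.0075 TRAIN-ACC: 0.9976 VAL-LOSS: 0.0564 VAL-ACC: 0.9866 EPOCH-TIME: 0m9s
EPOCH: 051/100 LR: 0.0001 TRAIN-LOSS: 0.0031 TRAIN-ACC: 0.9992 VAL-LOSS: 0.0504 VAL-ACC: 0.9890 EPOCH-TIME: 0m9s
EPOCH: 053/100 LR: 0.0001 TRAIN-LOSS: 0.0010 TRAIN-ACC: 0.9999 VAL-LOSS: 0.0491 VAL-ACC: 0.9901 EPOCH-TIME: 0m10s
"""

records = parse_log(sample)
best = best_epoch(records)
print(best["epoch"], best["val_acc"])
```

In the full log above, validation accuracy peaks at 0.9901 in epoch 53, shortly after the learning rate drops from 0.0010 to 0.0001 at epoch 51, while validation loss rises steadily in the later epochs.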

References

- pytorch: https://github.com/pytorch/pytorch

- loguru: https://github.com/Delgan/loguru

- tqdm: https://github.com/tqdm/tqdm

- numpy: https://github.com/numpy/numpy

- pillow: https://github.com/python-pillow/Pillow

- matplotlib: https://github.com/matplotlib/matplotlib

- easydict: https://github.com/makinacorpus/easydict
