1. Three Elements of a Deep Learning Model

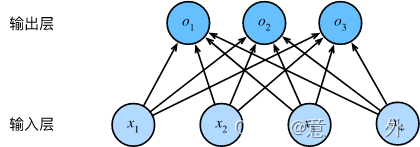

(1) Linear Model

$$
\begin{aligned}
\mathbf{O} &= \mathbf{X}\mathbf{W} + \mathbf{b}, \\
\hat{\mathbf{Y}} &= \mathrm{softmax}(\mathbf{O}).
\end{aligned}
$$
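Here is a minimal plain-Python sketch of the two equations above. It assumes a toy batch of n = 2 examples with d = 2 features and q = 3 classes, and all numbers are made up for illustration. It shows two things: the bias vector **b** is added to every row of **XW** (broadcasting), and each row of **Ŷ** is a probability distribution. The helpers `matmul`, `softmax` and `linear_softmax` are hypothetical names, not Paddle APIs.

```python
import math


def matmul(X, W):
    """Plain-Python product of an n x d matrix X and a d x q matrix W."""
    return [[sum(x_i * W[i][j] for i, x_i in enumerate(row)) for j in range(len(W[0]))]
            for row in X]


def softmax(O):
    """Row-wise softmax: exponentiate each score, then divide by the row sum."""
    result = []
    for row in O:
        exps = [math.exp(o) for o in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result


def linear_softmax(X, W, b):
    # O = XW + b: the length-q vector b is added to every row (broadcast over the batch)
    O = [[o + b_j for o, b_j in zip(row, b)] for row in matmul(X, W)]
    return O, softmax(O)


X = [[1.0, 2.0], [0.0, -1.0]]              # n = 2 examples, d = 2 features
W = [[0.5, -0.5, 0.0], [0.25, 0.0, 1.0]]   # d = 2 rows, q = 3 columns (classes)
b = [0.0, 0.1, -0.1]
O, Y_hat = linear_softmax(X, W, b)
print(O)       # unnormalized scores (logits), shape 2 x 3
print(Y_hat)   # each row is non-negative and sums to 1
```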

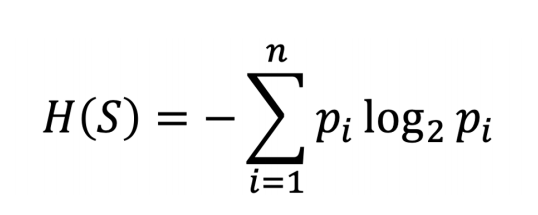

(2) Loss Function
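For softmax regression the usual loss is the cross-entropy, and it is also what the `cross_entropy` function in section 2 computes. For one example with a one-hot label vector $\mathbf{y}$ and predicted probabilities $\hat{\mathbf{y}}$ over $q$ classes:

$$
l(\mathbf{y}, \hat{\mathbf{y}}) = -\sum_{j=1}^{q} y_j \log \hat{y}_j .
$$

Only the true class index $c$ has $y_c = 1$, so the sum reduces to $-\log \hat{y}_c$. This is the negative log of the probability the model assigns to the correct class.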

(3) Accuracy

Accuracy is the ratio of the number of correct predictions to the total number of predictions.
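A small worked example in plain Python, using made-up scores. The predicted class is the argmax of each row, and accuracy is the number of matches divided by the number of predictions. `accuracy_ratio` is a hypothetical helper. Note that the Paddle `accuracy` function in section 2 returns the *count* of correct predictions, and the training loop takes the ratio later.

```python
def accuracy_ratio(y_hat, y):
    """Fraction of rows whose highest-scoring class equals the true label."""
    preds = [max(range(len(row)), key=row.__getitem__) for row in y_hat]
    correct = sum(1 for p, t in zip(preds, y) if p == t)
    return correct / len(y)


y_hat = [[0.1, 0.3, 0.6],   # predicted class 2
         [0.3, 0.2, 0.5],   # predicted class 2
         [0.7, 0.2, 0.1]]   # predicted class 0
y = [2, 0, 0]
print(accuracy_ratio(y_hat, y))  # 2 of 3 predictions are correct
```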

2. Implementation

(1) Reading the Dataset

```python
import paddle
from paddle import vision
from paddle.io import DataLoader
from paddle.vision import transforms
import matplotlib.pyplot as plt


def get_dataloader_workers():
    """Use 4 worker processes to read the data."""
    return 4


def load_data_fashion_mnist(batch_size, resize=None):
    """Download the Fashion-MNIST dataset, then load it into memory."""
    trans = [transforms.ToTensor()]  # convert each image to a float32 tensor in CHW layout
    if resize:
        trans.insert(0, transforms.Resize(resize))
    trans = transforms.Compose(trans)
    mnist_train = vision.datasets.FashionMNIST(mode='train', transform=trans, download=True)
    mnist_test = vision.datasets.FashionMNIST(mode='test', transform=trans, download=True)
    return (DataLoader(mnist_train, batch_size=batch_size, shuffle=True,
                       num_workers=get_dataloader_workers()),
            DataLoader(mnist_test, batch_size=batch_size, shuffle=False,
                       num_workers=get_dataloader_workers()))


def get_fashion_mnist_labels(labels):
    """Return the text labels of the Fashion-MNIST dataset."""
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]


def show_images(imgs, num_rows, num_cols, titles=None, scale=1.5):
    """Plot a list of images."""
    figsize = (num_cols * scale, num_rows * scale)
    _, axes = plt.subplots(num_rows, num_cols, figsize=figsize)
    axes = axes.flatten()
    for i, (ax, img) in enumerate(zip(axes, imgs)):
        if paddle.is_tensor(img):
            # Image tensor
            ax.imshow(img.numpy())
        else:
            # PIL image
            ax.imshow(img)
        ax.axes.get_xaxis().set_visible(False)
        ax.axes.get_yaxis().set_visible(False)
        if titles:
            ax.set_title(titles[i])
    return axes


batch_size = 256
train_iter, test_iter = load_data_fashion_mnist(batch_size)
```
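Fashion-MNIST has 60,000 training images and 10,000 test images. `paddle.io.DataLoader` keeps the final partial batch by default (`drop_last=False`). The arithmetic below uses a hypothetical plain-Python `batch_sizes` helper to show what that means for `batch_size = 256`. The last training batch holds only 96 images. This is why a parameter update should divide by the actual batch size `X.shape[0]` rather than the constant `batch_size`.

```python
def batch_sizes(num_examples, batch_size):
    """Sizes of the minibatches a loader that keeps the last partial batch yields per epoch."""
    full, rest = divmod(num_examples, batch_size)
    return [batch_size] * full + ([rest] if rest else [])


train_batches = batch_sizes(60000, 256)
test_batches = batch_sizes(10000, 256)
print(len(train_batches), train_batches[-1])  # 235 96
print(len(test_batches), test_batches[-1])    # 40 16
```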

(2) Linear Model

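```python
def softmax(X):
    X_exp = paddle.exp(X)
    # axis=1 sums each row; keepdim=True keeps the result as shape (batch_size, 1)
    partition = X_exp.sum(1, keepdim=True)
    return X_exp / partition  # broadcasting divides every row by its own sum


def net(X):
    # reshape((-1, W.shape[0])) flattens each 1x28x28 image into a 784-element row;
    # -1 lets Paddle infer the batch dimension.
    # W and b are the global parameters created in the training section.
    return softmax(paddle.matmul(X.reshape((-1, W.shape[0])), W) + b)
```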
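The `softmax` above exponentiates the raw scores directly. That works for small scores, but in float32 `exp` overflows to `inf` once a score exceeds roughly 88.7, and `inf / inf` then yields `nan`. A common remedy, which the original code does not use, is to subtract each row's maximum before exponentiating. This leaves the result unchanged, because the numerator and denominator are both scaled by the same factor exp(-max). Below is a plain-Python sketch with hypothetical function names. Python floats raise `OverflowError` instead of returning `inf`.

```python
import math


def softmax_naive(row):
    exps = [math.exp(o) for o in row]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_stable(row):
    # Subtracting the row maximum scales numerator and denominator by the same
    # factor exp(-max), so the probabilities are unchanged, but every exponent
    # is now <= 0 and exp() cannot overflow.
    m = max(row)
    exps = [math.exp(o - m) for o in row]
    total = sum(exps)
    return [e / total for e in exps]


small = [1.0, 2.0, 3.0]
print(softmax_naive(small))
print(softmax_stable(small))   # same values up to rounding

large = [1000.0, 1001.0, 1002.0]
try:
    softmax_naive(large)
except OverflowError:
    print("naive softmax overflowed")
print(softmax_stable(large))   # identical to softmax_stable(small)
```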

(3) Loss Function

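```python
def cross_entropy(y_hat, y):
    # Labels arrive with shape (batch_size, 1); flatten them to (batch_size,)
    y = y.reshape(shape=[y.shape[0]])
    # For row i, pick y_hat[i, y[i]]: the predicted probability of the true class
    return - paddle.log(y_hat[list(range(len(y_hat))), y])
```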
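The index expression `y_hat[list(range(len(y_hat))), y]` pairs row i with column y[i]. It therefore selects the predicted probability of each example's true class, and the loss is the negative log of that value. Here is the same selection in plain Python with made-up probabilities. `cross_entropy_py` is a hypothetical name chosen to avoid clashing with the Paddle function.

```python
import math


def cross_entropy_py(y_hat, y):
    """Per-example loss -log(y_hat[i][y[i]]): the true-class probability, negated in log space."""
    return [-math.log(row[label]) for row, label in zip(y_hat, y)]


y_hat = [[0.1, 0.3, 0.6],
         [0.3, 0.2, 0.5]]
y = [0, 2]                      # true classes: column 0 for row 0, column 2 for row 1
losses = cross_entropy_py(y_hat, y)
print(losses)                   # -log(0.1) and -log(0.5), roughly 2.3026 and 0.6931
```

A confident wrong prediction (0.1 on the true class) costs far more than a hesitant right one (0.5).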

(4) Classification Accuracy

```python
def accuracy(y_hat, y):
    """Count the number of correct predictions."""
    y = y.reshape(shape=[y.shape[0]])
    # If y_hat is a 2-D matrix with more than one column (one score per class),
    # take the highest-scoring class in each row as the prediction
    if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:
        y_hat = y_hat.argmax(axis=1)
    # cmp is a boolean tensor: True where the prediction matches the label
    cmp = y_hat.astype(y.dtype) == y
    return float(cmp.astype(y.dtype).sum())


class Accumulator:
    """Accumulate sums over n variables."""
    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def reset(self):
        self.data = [0.0] * len(self.data)

    def __getitem__(self, idx):
        return self.data[idx]
```
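Why accumulate counts instead of averaging per-batch accuracies? When batch sizes differ (the last batch of an epoch is smaller), the mean of per-batch ratios over-weights the small batch. The plain-Python sketch below repeats the `Accumulator` class so it runs on its own. The per-batch numbers are invented for illustration.

```python
class Accumulator:
    """Accumulate sums over n variables (same logic as the class above)."""
    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def __getitem__(self, idx):
        return self.data[idx]


# Hypothetical per-batch results: (number correct, batch size)
batches = [(200, 256), (230, 256), (48, 96)]

metric = Accumulator(2)            # [total correct, total examples]
for correct, size in batches:
    metric.add(correct, size)

overall = metric[0] / metric[1]                                    # 478 / 608
mean_of_batch_acc = sum(c / s for c, s in batches) / len(batches)  # over-weights the 96-image batch
print(overall, mean_of_batch_acc)
```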

(5) Training

```python
num_inputs = 784   # each 28x28 image is flattened into a 784-element vector
num_outputs = 10   # 10 clothing classes

W = paddle.normal(0, 0.01, shape=(num_inputs, num_outputs))
W.stop_gradient = False
b = paddle.zeros(shape=[num_outputs])
b.stop_gradient = False

lr = 0.2
num_epochs = 20

for epoch in range(num_epochs):
    if isinstance(net, paddle.nn.Layer):
        net.train()
    # Sum of training loss, number of correct predictions, number of examples
    metric = Accumulator(3)
    for X, y in train_iter:
        y_hat = net(X)
        l = cross_entropy(y_hat, y)
        # Compute the gradients of the summed loss
        l.sum().backward()
        # Hand-written minibatch SGD step. Divide by the actual batch size:
        # the last batch of an epoch can be smaller than batch_size.
        with paddle.no_grad():
            W -= lr * W.grad / X.shape[0]
            b -= lr * b.grad / X.shape[0]
        W.clear_grad()
        b.clear_grad()
        W.stop_gradient = False
        b.stop_gradient = False
        metric.add(float(l.sum()), accuracy(y_hat, y), y.numel())
    # Average loss = summed loss / number of examples;
    # training accuracy = correct predictions / number of examples
    print(f'epoch {epoch + 1}, loss {metric[0] / metric[2]:.4f}, '
          f'train acc {metric[1] / metric[2]:.3f}')
```
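`l.sum().backward()` computes the gradients that the update step then consumes. For softmax with cross-entropy, the gradient of one example's loss with respect to its score $o_j$ is $\hat{y}_j - \mathbb{1}[j = c]$, where $c$ is the true class. The sketch below writes that gradient out by hand in plain Python and trains on a tiny made-up 2-D dataset with three classes. It is an illustration under simplifying assumptions: it uses full-batch gradient descent (one batch containing all six examples) instead of minibatches, and the names `forward`, `train` and `predict` are hypothetical.

```python
import math


def softmax_row(o):
    # Numerically stable softmax of one row of scores
    m = max(o)
    exps = [math.exp(v - m) for v in o]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, W, b):
    # o_j = sum_i x_i * W[i][j] + b_j, followed by softmax
    o = [sum(x_i * W[i][j] for i, x_i in enumerate(x)) + b[j] for j in range(len(b))]
    return softmax_row(o)


def train(X, y, num_classes, lr=0.3, num_epochs=500):
    d, n = len(X[0]), len(X)
    W = [[0.0] * num_classes for _ in range(d)]
    b = [0.0] * num_classes
    history = []  # mean loss measured before each update
    for _ in range(num_epochs):
        grad_W = [[0.0] * num_classes for _ in range(d)]
        grad_b = [0.0] * num_classes
        total_loss = 0.0
        for x, label in zip(X, y):
            y_hat = forward(x, W, b)
            total_loss += -math.log(y_hat[label])
            for j in range(num_classes):
                # d(-log y_hat[label]) / d o_j = y_hat_j - 1[j == label]
                g = y_hat[j] - (1.0 if j == label else 0.0)
                grad_b[j] += g
                for i in range(d):
                    grad_W[i][j] += x[i] * g
        # Summed gradient divided by the number of examples in the batch
        for j in range(num_classes):
            b[j] -= lr * grad_b[j] / n
            for i in range(d):
                W[i][j] -= lr * grad_W[i][j] / n
        history.append(total_loss / n)
    return W, b, history


def predict(x, W, b):
    probs = forward(x, W, b)
    return max(range(len(probs)), key=probs.__getitem__)


# Toy, linearly separable data: three classes in 2-D
X = [[2.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 3.0], [-2.0, -2.0], [-3.0, -2.0]]
y = [0, 0, 1, 1, 2, 2]
W, b, history = train(X, y, num_classes=3)
acc = sum(predict(x, W, b) == t for x, t in zip(X, y)) / len(y)
print(f"loss {history[0]:.4f} -> {history[-1]:.4f}, train acc {acc:.2f}")
```

The first recorded loss is log 3, about 1.0986, because zero-initialized weights give every class probability 1/3.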

(6) Prediction

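```python
def predict_ch3(net, test_iter, n=10):  #@save
    """Predict labels (defined in Chapter 3)."""
    # Take the first minibatch from the test set
    for X, y in test_iter:
        break
    trues = get_fashion_mnist_labels(y)
    preds = get_fashion_mnist_labels(net(X).argmax(axis=1))
    # Each title shows the true label on the first line and the prediction on the second
    titles = [true + '\n' + pred for true, pred in zip(trues, preds)]
    show_images(
        X[0:n].reshape((n, 28, 28)), 1, n, titles=titles[0:n])


predict_ch3(net, test_iter)
plt.show()  # needed when running as a script; notebooks display the figure automatically
```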
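`predict_ch3` turns class indices into text with `get_fashion_mnist_labels` and stacks the true and predicted names into each title. Here is the same mapping in plain Python. The indices are invented and stand in for real predictions, and `make_titles` is a hypothetical helper. The example also shows how to list the positions that were misclassified.

```python
TEXT_LABELS = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
               'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']


def make_titles(true_ids, pred_ids):
    """Two-line 'true\\npred' titles, built the same way predict_ch3 builds them."""
    return [TEXT_LABELS[t] + '\n' + TEXT_LABELS[p] for t, p in zip(true_ids, pred_ids)]


true_ids = [9, 2, 1]
pred_ids = [9, 4, 1]
titles = make_titles(true_ids, pred_ids)
mistakes = [i for i, (t, p) in enumerate(zip(true_ids, pred_ids)) if t != p]
print(titles)
print(mistakes)  # [1]: a pullover predicted as a coat
```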
