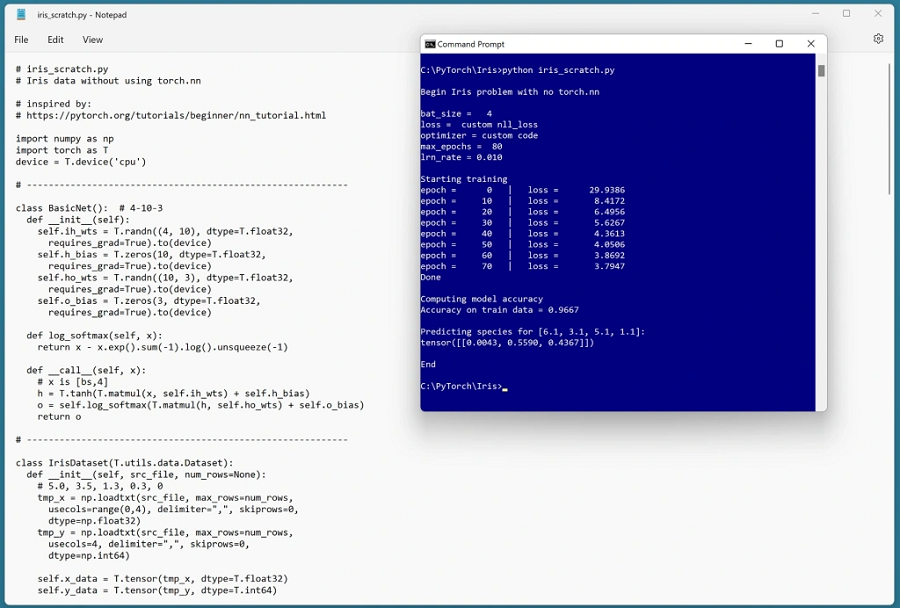

出于好奇,我决定尝试在低级层面实现 PyTorch 神经网络。我的意思是不使用封装了大量功能的 torch.nn 模块。

我编写了一个演示。需要处理的细节比我想象的要多得多,编写代码所花的时间也比我想象的要长得多。

NSDT工具推荐: Three.js AI纹理开发包 - YOLO合成数据生成器 - GLTF/GLB在线编辑 - 3D模型格式在线转换 - 可编程3D场景编辑器 - REVIT导出3D模型插件 - 3D模型语义搜索引擎 - Three.js虚拟轴心开发包 - 3D模型在线减面 - STL模型在线切割

这篇博文不涉及一般概念——它只涉及代码细节。我已经使用 C# 和 Python 从头实现了数百个神经网络,并且使用 PyTorch 实现了数百个神经网络。即便如此,我在不使用 torch.nn 模块的情况下实现 PyTorch 神经网络时还是遇到了很大困难。

但我从概念挑战中学到了很多东西,所以这段时间花得很值。

示例代码:

# iris_scratch.py

# Iris data without using torch.nn

# inspired by a similar experiment at:

# https://pytorch.org/tutorials/beginner/nn_tutorial.html

import numpy as np

import torch as T

device = T.device('cpu')

# -----------------------------------------------------------

class BasicNet(): # 4-10-3

def __init__(self):

self.ih_wts = T.randn((4, 10), dtype=T.float32,

requires_grad=True).to(device)

self.h_bias = T.zeros(10, dtype=T.float32,

requires_grad=True).to(device)

self.ho_wts = T.randn((10, 3), dtype=T.float32,

requires_grad=True).to(device)

self.o_bias = T.zeros(3, dtype=T.float32,

requires_grad=True).to(device)

def log_softmax(self, x):

return x - x.exp().sum(-1).log().unsqueeze(-1)

def __call__(self, x):

# x is [bs,4]

h = T.tanh(T.matmul(x, self.ih_wts) + self.h_bias)

o = self.log_softmax(T.matmul(h, self.ho_wts) +

self.o_bias)

return o

# -----------------------------------------------------------

class IrisDataset(T.utils.data.Dataset):

def __init__(self, src_file, num_rows=None):

# 5.0, 3.5, 1.3, 0.3, 0

tmp_x = np.loadtxt(src_file, max_rows=num_rows,

usecols=range(0,4), delimiter=",", skiprows=0,

dtype=np.float32)

tmp_y = np.loadtxt(src_file, max_rows=num_rows,

usecols=4, delimiter=",", skiprows=0,

dtype=np.int64)

self.x_data = T.tensor(tmp_x, dtype=T.float32).to(device)

self.y_data = T.tensor(tmp_y, dtype=T.int64).to(device)

def __len__(self):

return len(self.x_data)

def __getitem__(self, idx):

if T.is_tensor(idx):

idx = idx.tolist()

preds = self.x_data[idx]

spcs = self.y_data[idx]

return preds, spcs

# -----------------------------------------------------------

def nll_loss(predicted, target):

return -predicted[range(target.shape[0]), target].mean()

# -----------------------------------------------------------

def accuracy(model, dataset):

# assumes model.eval()

dataldr = T.utils.data.DataLoader(dataset, batch_size=1,

shuffle=False)

n_correct = 0; n_wrong = 0

for (_, batch) in enumerate(dataldr):

X = batch[0]

Y = batch[1] # already flattened by Dataset

with T.no_grad():

oupt = model(X) # logits form

big_idx = T.argmax(oupt)

# if big_idx.item() == Y.item():

if big_idx == Y:

n_correct += 1

else:

n_wrong += 1

acc = (n_correct * 1.0) / (n_correct + n_wrong)

return acc

# -----------------------------------------------------------

def main():

print("\nBegin Iris problem with no torch.nn ")

# 0. prepare

T.manual_seed(1)

np.random.seed(1)

# 1. load data

train_file = ".\\Data\\iris_train.txt"

train_ds = IrisDataset(train_file, num_rows=120)

bat_size = 4

train_ldr = T.utils.data.DataLoader(train_ds,

batch_size=bat_size, shuffle=True)

# 2. create network

net = BasicNet()

# 3. train model

max_epochs = 80

ep_log_interval = 10

lr = 0.01

loss_func = nll_loss # see above

# optimizer = T.optim.SGD(net.parameters(),

# lr=lrn_rate) # no parameters

print("\nbat_size = %3d " % bat_size)

print("loss = " + " custom nll_loss" )

print("optimizer = custom code")

print("max_epochs = %3d " % max_epochs)

print("lrn_rate = %0.3f " % lr)

print("\nStarting training")

for epoch in range(0, max_epochs):

epoch_loss = 0 # for one full epoch

for (batch_idx, batch) in enumerate(train_ldr):

X = batch[0] # [10,4]

Y = batch[1] # OK; alreay flattened

oupt = net(X)

loss_val = loss_func(oupt, Y) # a tensor

epoch_loss += loss_val.item() # accumulate

loss_val.backward() # compute gradients

# torch.optimizer.step()

# leaf Var in place

with T.no_grad(): # update weights

net.ih_wts -= net.ih_wts.grad * lr

net.h_bias -= net.h_bias.grad * lr

net.ho_wts -= net.ho_wts.grad * lr

net.o_bias -= net.o_bias.grad * lr

# torch.optimizer.zero_grad()

net.ih_wts.grad.zero_() # get ready for next update

net.h_bias.grad.zero_()

net.ho_wts.grad.zero_()

net.o_bias.grad.zero_()

if epoch % ep_log_interval == 0:

print("epoch = %6d | loss = %12.4f " % \

(epoch, epoch_loss) )

print("Done ")

# 4. evaluate model accuracy

print("\nComputing model accuracy")

acc = accuracy(net, train_ds) # item-by-item

print("Accuracy on train data = %0.4f" % acc)

# 5. make a prediction

print("\nPredicting species for [6.1, 3.1, 5.1, 1.1]: ")

unk = np.array([[6.1, 3.1, 5.1, 1.1]], dtype=np.float32)

unk = T.tensor(unk, dtype=T.float32).to(device)

with T.no_grad():

logits = net(unk) # values do not sum to 1.0

probs = T.softmax(logits, dim=1)

T.set_printoptions(precision=4)

print(probs)

print("\nEnd ")

# -----------------------------------------------------------

if __name__ == "__main__":

main()原文链接:不用nn.Module - BimAnt

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?