Reposted from https://blog.csdn.net/qq_21368481/article/details/80196819

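We start with FCN's net.py. net.py builds the network: running it directly generates the required train.prototxt and val.prototxt.

In other words, when the network needs to change there is no need to edit train.prototxt and val.prototxt by hand. Change the relevant part of net.py, rerun it, and both files are regenerated in full.

The analysis below uses the net.py file in voc-fcn32s under fcn.berkeleyvision.org-master. Its source is as follows: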

    import caffe
    from caffe import layers as L, params as P
    from caffe.coord_map import crop

    def conv_relu(bottom, nout, ks=3, stride=1, pad=1):
        conv = L.Convolution(bottom, kernel_size=ks, stride=stride,
            num_output=nout, pad=pad,
            param=[dict(lr_mult=1, decay_mult=1), dict(lr_mult=2, decay_mult=0)])
        return conv, L.ReLU(conv, in_place=True)

    def max_pool(bottom, ks=2, stride=2):
        return L.Pooling(bottom, pool=P.Pooling.MAX, kernel_size=ks, stride=stride)

    def fcn(split):
        n = caffe.NetSpec()
        pydata_params = dict(split=split, mean=(104.00699, 116.66877, 122.67892),
                seed=1337)
        if split == 'train':
            pydata_params['sbdd_dir'] = '../data/sbdd/dataset'
            pylayer = 'SBDDSegDataLayer'
        else:
            pydata_params['voc_dir'] = '../data/pascal/VOC2011'
            pylayer = 'VOCSegDataLayer'
        n.data, n.label = L.Python(module='voc_layers', layer=pylayer,
                ntop=2, param_str=str(pydata_params))

        # the base net
        n.conv1_1, n.relu1_1 = conv_relu(n.data, 64, pad=100)
        n.conv1_2, n.relu1_2 = conv_relu(n.relu1_1, 64)
        n.pool1 = max_pool(n.relu1_2)

        n.conv2_1, n.relu2_1 = conv_relu(n.pool1, 128)
        n.conv2_2, n.relu2_2 = conv_relu(n.relu2_1, 128)
        n.pool2 = max_pool(n.relu2_2)

        n.conv3_1, n.relu3_1 = conv_relu(n.pool2, 256)
        n.conv3_2, n.relu3_2 = conv_relu(n.relu3_1, 256)
        n.conv3_3, n.relu3_3 = conv_relu(n.relu3_2, 256)
        n.pool3 = max_pool(n.relu3_3)

        n.conv4_1, n.relu4_1 = conv_relu(n.pool3, 512)
        n.conv4_2, n.relu4_2 = conv_relu(n.relu4_1, 512)
        n.conv4_3, n.relu4_3 = conv_relu(n.relu4_2, 512)
        n.pool4 = max_pool(n.relu4_3)

        n.conv5_1, n.relu5_1 = conv_relu(n.pool4, 512)
        n.conv5_2, n.relu5_2 = conv_relu(n.relu5_1, 512)
        n.conv5_3, n.relu5_3 = conv_relu(n.relu5_2, 512)
        n.pool5 = max_pool(n.relu5_3)

        # fully conv
        n.fc6, n.relu6 = conv_relu(n.pool5, 4096, ks=7, pad=0)
        n.drop6 = L.Dropout(n.relu6, dropout_ratio=0.5, in_place=True)
        n.fc7, n.relu7 = conv_relu(n.drop6, 4096, ks=1, pad=0)
        n.drop7 = L.Dropout(n.relu7, dropout_ratio=0.5, in_place=True)
        n.score_fr = L.Convolution(n.drop7, num_output=21, kernel_size=1, pad=0,
            param=[dict(lr_mult=1, decay_mult=1), dict(lr_mult=2, decay_mult=0)])
        n.upscore = L.Deconvolution(n.score_fr,
            convolution_param=dict(num_output=21, kernel_size=64, stride=32,
                bias_term=False),
            param=[dict(lr_mult=0)])
        n.score = crop(n.upscore, n.data)
        n.loss = L.SoftmaxWithLoss(n.score, n.label,
                loss_param=dict(normalize=False, ignore_label=255))

        return n.to_proto()

    def make_net():
        with open('train.prototxt', 'w') as f:
            f.write(str(fcn('train')))

        with open('val.prototxt', 'w') as f:
            f.write(str(fcn('seg11valid')))

    if __name__ == '__main__':
        make_net()

1. The conv_relu() function

It calls Caffe's Convolution and ReLU functions, which define a convolution layer and a ReLU activation layer respectively.

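    # Defines a convolution layer together with its activation layer (ReLU is used throughout)
    '''
    bottom: the output of the previous layer
    nout:   number of outputs of this layer (for a convolution layer, the number of output feature maps)
    ks:     kernel size
    stride: stride
    pad:    amount of padding
    '''
    def conv_relu(bottom, nout, ks=3, stride=1, pad=1):
        conv = L.Convolution(bottom, kernel_size=ks, stride=stride,
            num_output=nout, pad=pad,
            param=[dict(lr_mult=1, decay_mult=1), dict(lr_mult=2, decay_mult=0)])
        # The first dict sets the learning-rate and weight-decay multipliers for the weights W;
        # the second sets the learning-rate multiplier for the bias b (no weight decay on the bias)
        # lr_mult is a learning-rate multiplier (the effective rate is lr_mult times base_lr in solver.prototxt)
        # decay_mult is a weight-decay multiplier (the effective decay is decay_mult times weight_decay in solver.prototxt)
        # Returns the layer and its activation layer (the activation's input and output are both the conv output)
        return conv, L.ReLU(conv, in_place=True)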
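
To make the two multipliers concrete, here is a minimal sketch of how the effective values come out. The helper `effective_hyperparams` is hypothetical, and the solver values `base_lr=0.001` and `weight_decay=0.0005` are illustrative assumptions, not numbers from this article; use whatever your own solver.prototxt specifies.

```python
def effective_hyperparams(base_lr, weight_decay, param_specs):
    """Hypothetical helper: (effective_lr, effective_decay) per param spec."""
    return [(base_lr * spec.get("lr_mult", 1.0),
             weight_decay * spec.get("decay_mult", 1.0))
            for spec in param_specs]

# The same param list that conv_relu() passes to L.Convolution.
conv_param = [dict(lr_mult=1, decay_mult=1), dict(lr_mult=2, decay_mult=0)]

# base_lr and weight_decay below are assumed example values.
weights, bias = effective_hyperparams(0.001, 0.0005, conv_param)
print("weights: lr=%g decay=%g" % weights)
print("bias:    lr=%g decay=%g" % bias)
```

With these settings the bias learns at twice the rate of the weights and is not regularised.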

2. The max_pool() function

It calls Caffe's Pooling function, which defines a pooling layer (Pooling.MAX selects max pooling).

    # Defines a pooling layer (max pooling is used throughout)
    def max_pool(bottom, ks=2, stride=2):
        return L.Pooling(bottom, pool=P.Pooling.MAX, kernel_size=ks, stride=stride)

3. The fcn() function

    # Generates the network written to train.prototxt and val.prototxt
    # The split argument is 'train' or a validation split ('seg11valid' in make_net());
    # the former is for training, the latter for testing
    def fcn(split):
        n = caffe.NetSpec()  # define the Net with pycaffe
        pydata_params = dict(split=split, mean=(104.00699, 116.66877, 122.67892),
                seed=1337)
        if split == 'train':
            pydata_params['sbdd_dir'] = '../data/sbdd/dataset'  # path of the training set
            pylayer = 'SBDDSegDataLayer'  # class name, see voc_layers.py
        else:
            pydata_params['voc_dir'] = '../data/pascal/VOC2011'  # path of the test/validation set
            pylayer = 'VOCSegDataLayer'
        '''
        Inputs
        module:    the module name, normally a .py file you wrote yourself to implement
                   the layer's behaviour (here, voc_layers.py)
        layer:     the name of the class defined in that module
        ntop:      the number of outputs of the layer (the first layer usually outputs data and label)
        param_str: the parameters the layer needs
        Outputs
        n.data and n.label, matching ntop=2
        '''
        n.data, n.label = L.Python(module='voc_layers', layer=pylayer,
                ntop=2, param_str=str(pydata_params))

        # the base net
        n.conv1_1, n.relu1_1 = conv_relu(n.data, 64, pad=100)  # conv1_1 convolution layer (ReLU activation)
        n.conv1_2, n.relu1_2 = conv_relu(n.relu1_1, 64)  # conv1_2 convolution layer (ReLU activation)
        n.pool1 = max_pool(n.relu1_2)  # pool1, the first max-pooling layer

The corresponding content in the .prototxt is:

    layer {
      name: "conv1_1"
      type: "Convolution"
      bottom: "data"
      top: "conv1_1"
      param {
        lr_mult: 1.0
        decay_mult: 1.0
      }
      param {
        lr_mult: 2.0
        decay_mult: 0.0
      }
      convolution_param {
        num_output: 64
        pad: 100
        kernel_size: 3
        stride: 1
      }
    }
    layer {
      name: "relu1_1"
      type: "ReLU"
      bottom: "conv1_1"
      top: "conv1_1"
    }
    layer {
      name: "conv1_2"
      type: "Convolution"
      bottom: "conv1_1"
      top: "conv1_2"
      param {
        lr_mult: 1.0
        decay_mult: 1.0
      }
      param {
        lr_mult: 2.0
        decay_mult: 0.0
      }
      convolution_param {
        num_output: 64
        pad: 1
        kernel_size: 3
        stride: 1
      }
    }
    layer {
      name: "relu1_2"
      type: "ReLU"
      bottom: "conv1_2"
      top: "conv1_2"
    }
    layer {
      name: "pool1"
      type: "Pooling"
      bottom: "conv1_2"
      top: "pool1"
      pooling_param {
        pool: MAX
        kernel_size: 2
        stride: 2
      }
    }
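
Returning briefly to the data layer at the top of fcn(): `param_str=str(pydata_params)` stores the whole parameter dict as a plain string in the prototxt, and the Python layer has to turn that string back into a dict when it is set up. The sketch below shows the round trip. `parse_param_str` is a hypothetical helper, and `ast.literal_eval` is used here as an assumption; it is a safer stand-in for a plain `eval` and not necessarily what voc_layers.py does.

```python
import ast

# Same parameters that fcn('train') builds.
pydata_params = dict(split='train', mean=(104.00699, 116.66877, 122.67892),
                     seed=1337)
pydata_params['sbdd_dir'] = '../data/sbdd/dataset'

# What net.py hands to L.Python(...) and what ends up in the prototxt.
param_str = str(pydata_params)

def parse_param_str(text):
    """Hypothetical helper: rebuild the dict from the param_str text."""
    return ast.literal_eval(text)

params = parse_param_str(param_str)
print(params['split'], params['mean'], params['seed'])
```

Because the string is just the dict's printed form, only plain literals (strings, numbers, tuples) survive the round trip, which is all this layer needs.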

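The first convolution layer, conv1_1, is padded with pad=100 to guard against input images that are too small; this is also why FCN can train and test on images of arbitrary size. The analysis is as follows.

As the code shows, every convolution layer in conv1 through conv5 uses kernel size k=3, padding p=1 (except conv1_1) and stride s=1, so the output of each of these layers has the same size as its input. (The derivation takes the height dimension as the example, first assumes that conv1_1 also uses a padding of 1, and allows fractional sizes, i.e. it drops the floor operation.)

The 40 or so extra pixels are the surplus in the output, and their number depends on the pad of conv1_1. The purpose of the score layer is to crop this surplus away. The crop is symmetric (it keeps the central part, because the padding was symmetric as well), so the offset is 19: cropping starts at the 20th pixel of every row and column and keeps a window the size of the original image, which gives an output the same size as the input. (The offset reads 19 rather than 20 because Caffe is written in C++, where array indices start at 0.) See the diagram below: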

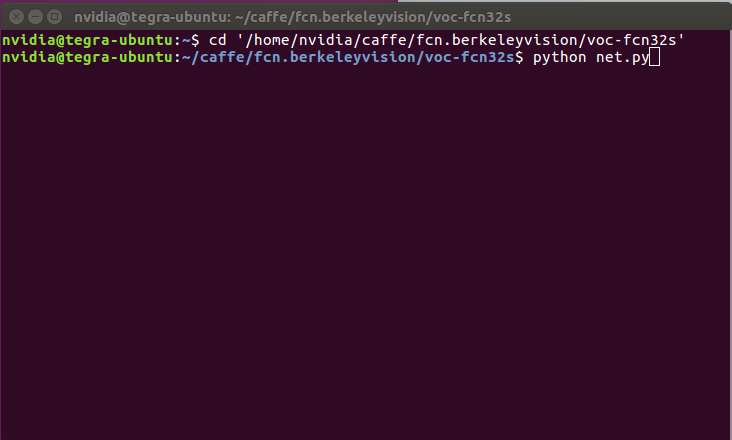
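
As a rough check of this reasoning, the sketch below redoes the size arithmetic for one spatial dimension with all rounding dropped, using exact fractions. The function `ideal_upscore_size` is a hypothetical helper written for this illustration; the layer parameters are the ones in net.py, and the deconvolution size is assumed to follow the usual stride * (in - 1) + kernel rule.

```python
from fractions import Fraction

def ideal_upscore_size(h, pad1=100):
    """Size of `upscore` for input size h, with every rounding step dropped."""
    size = Fraction(h)
    size = size + 2 * pad1 - 3 + 1   # conv1_1: k=3, s=1, pad=pad1
    # all other 3x3 convolutions use pad=1 and leave the size unchanged
    for _ in range(5):               # pool1..pool5: k=2, s=2, halves the size
        size = (size - 2) / 2 + 1
    size = size - 7 + 1              # fc6: k=7, pad=0 (fc7 and score_fr are 1x1)
    size = 32 * (size - 1) + 64      # upscore: deconvolution with k=64, s=32
    return size

for h in (375, 500):
    surplus = ideal_upscore_size(h) - h
    print(h, surplus, surplus / 2)

# With a conv1_1 pad of 1 the upsampled map is smaller than the input.
print(ideal_upscore_size(500, pad1=1))
```

Under these idealised assumptions the surplus is 38 for every input size, which is twice the offset of 19, and with a conv1_1 pad of 1 the output would come out 160 pixels smaller than the input, leaving nothing to crop from. Real Caffe sizes include rounding, so the actual surplus can differ by a few pixels.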

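If the logic in coord_map.py is still unclear, change the pad of conv1_1 yourself, run the modified net.py from a terminal with the command below, and watch how the offset of the score layer changes. This gives an indirect feel for what the conv1_1 pad and the offset do.

    python net.py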

        # The loss function is softmax
        n.loss = L.SoftmaxWithLoss(n.score, n.label,
                loss_param=dict(normalize=False, ignore_label=255))
        # Pixels whose label value is 255 are ignored

        return n.to_proto()  # return the whole generated network
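
To illustrate what `ignore_label=255` and `normalize=False` mean for the loss value, here is a small pure-Python sketch of a per-pixel softmax loss. It is not Caffe's implementation: `softmax_loss` is a hypothetical function, and it assumes that with normalize=False the summed loss is divided by the batch size only, not by the number of labelled pixels.

```python
import math

def softmax_loss(scores, labels, ignore_label=255, batch_size=1):
    """scores: one list of class scores per pixel; labels: one int per pixel."""
    total = 0.0
    for pixel_scores, label in zip(scores, labels):
        if label == ignore_label:
            continue  # ignored pixels contribute nothing to the loss
        peak = max(pixel_scores)  # subtract the max for numerical stability
        log_sum = peak + math.log(sum(math.exp(s - peak) for s in pixel_scores))
        total += log_sum - pixel_scores[label]  # -log softmax(label)
    # assumed normalize=False behaviour: divide by the batch size only
    return total / batch_size

# Three pixels, three classes; the last pixel carries the ignore label.
scores = [[2.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 5.0, 0.0]]
labels = [0, 1, 255]
loss = softmax_loss(scores, labels)
print(loss)
```

Changing the scores of the third pixel leaves the result untouched, which is the point of the ignore label: boundary pixels marked 255 in the VOC annotations do not influence training.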

4. The main function and its entry point

    # Create train.prototxt and val.prototxt and write the corresponding network into each
    def make_net():
        with open('train.prototxt', 'w') as f:
            f.write(str(fcn('train')))

        with open('val.prototxt', 'w') as f:
            f.write(str(fcn('seg11valid')))

    # Entry point of the script
    if __name__ == '__main__':
        make_net()

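Part of the training log is given below.

You can work out each layer's output size from its kernel size, stride and padding and compare it with what Caffe reports. One point needs explaining: Caffe rounds down when computing the output size of a convolution layer but rounds up for a pooling layer. I have not yet read the code closely enough to know the reason; my guess is that the two are complementary, with pooling giving back what convolution drops. This is why Caffe's output at pool2 is 144*175 rather than 143*174 (the latter is what rounding down everywhere, as the formula is usually taught, would give for the 287*349 input, but that is not what Caffe does).

For details see conv_layer.cpp and pooling_layer.cpp under caffe/src/caffe/layers. The relevant code is excerpted here for convenience:

(1) conv_layer.cpp (the integer division `/` rounds down)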

    template <typename Dtype>
    void ConvolutionLayer<Dtype>::compute_output_shape() {
      const int* kernel_shape_data = this->kernel_shape_.cpu_data();
      const int* stride_data = this->stride_.cpu_data();
      const int* pad_data = this->pad_.cpu_data();
      const int* dilation_data = this->dilation_.cpu_data();
      this->output_shape_.clear();
      for (int i = 0; i < this->num_spatial_axes_; ++i) {
        // i + 1 to skip channel axis
        const int input_dim = this->input_shape(i + 1);
        const int kernel_extent = dilation_data[i] * (kernel_shape_data[i] - 1) + 1;
        const int output_dim = (input_dim + 2 * pad_data[i] - kernel_extent)
            / stride_data[i] + 1;
        this->output_shape_.push_back(output_dim);
      }
    }

(2) pooling_layer.cpp (rounds up with the ceil function)

    template <typename Dtype>
    void PoolingLayer<Dtype>::Reshape(const vector<Blob<Dtype>*>& bottom,
          const vector<Blob<Dtype>*>& top) {
      CHECK_EQ(4, bottom[0]->num_axes()) << "Input must have 4 axes, "
          << "corresponding to (num, channels, height, width)";
      channels_ = bottom[0]->channels();
      height_ = bottom[0]->height();
      width_ = bottom[0]->width();
      if (global_pooling_) {
        kernel_h_ = bottom[0]->height();
        kernel_w_ = bottom[0]->width();
      }
      pooled_height_ = static_cast<int>(ceil(static_cast<float>(
          height_ + 2 * pad_h_ - kernel_h_) / stride_h_)) + 1;
      pooled_width_ = static_cast<int>(ceil(static_cast<float>(
          width_ + 2 * pad_w_ - kernel_w_) / stride_w_)) + 1;
      if (pad_h_ || pad_w_) {
        // If we have padding, ensure that the last pooling starts strictly
        // inside the image (instead of at the padding); otherwise clip the last.
        if ((pooled_height_ - 1) * stride_h_ >= height_ + pad_h_) {
          --pooled_height_;
        }
        if ((pooled_width_ - 1) * stride_w_ >= width_ + pad_w_) {
          --pooled_width_;
        }
        CHECK_LT((pooled_height_ - 1) * stride_h_, height_ + pad_h_);
        CHECK_LT((pooled_width_ - 1) * stride_w_, width_ + pad_w_);
      }
      top[0]->Reshape(bottom[0]->num(), channels_, pooled_height_,
          pooled_width_);
      if (top.size() > 1) {
        top[1]->ReshapeLike(*top[0]);
      }
      // If max pooling, we will initialize the vector index part.
      if (this->layer_param_.pooling_param().pool() ==
          PoolingParameter_PoolMethod_MAX && top.size() == 1) {
        max_idx_.Reshape(bottom[0]->num(), channels_, pooled_height_,
            pooled_width_);
      }
      // If stochastic pooling, we will initialize the random index part.
      if (this->layer_param_.pooling_param().pool() ==
          PoolingParameter_PoolMethod_STOCHASTIC) {
        rand_idx_.Reshape(bottom[0]->num(), channels_, pooled_height_,
          pooled_width_);
      }
    }
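
The two rounding rules can be replayed in a few lines of Python and compared with the shapes Caffe prints in the log. This is only a sketch of the two size formulas excerpted above (dilation 1, and pooling without padding); `conv_out`, `pool_out` and `trace` are names invented for this illustration.

```python
import math

def conv_out(size, kernel=3, stride=1, pad=1):
    """Convolution output size: integer division rounds down."""
    return (size + 2 * pad - kernel) // stride + 1

def pool_out(size, kernel=2, stride=2):
    """Pooling output size without padding: ceil rounds up."""
    return int(math.ceil(float(size - kernel) / stride)) + 1

def trace(size):
    """Sizes after conv1_1, pool1, pool2 and pool3 along one dimension."""
    sizes = []
    size = conv_out(size, pad=100)  # conv1_1; conv1_2 keeps the size
    sizes.append(size)
    for _ in range(3):              # pool1..pool3; the 3x3, pad-1 convs in between keep the size
        size = pool_out(size)
        sizes.append(size)
    return sizes

print(trace(375))  # height of the 375 x 500 input
print(trace(500))  # width of the 375 x 500 input
```

For the 375 x 500 image in the log this gives 573, 287, 144, 72 for the height and 698, 349, 175, 88 for the width, matching the `Top shape` lines of conv1_1, pool1, pool2 and pool3.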

The log output itself:

    I0505 15:40:16.159765 5317 layer_factory.hpp:77] Creating layer data
    I0505 15:40:16.174499 5317 net.cpp:84] Creating Layer data
    I0505 15:40:16.174676 5317 net.cpp:380] data -> data
    I0505 15:40:16.174806 5317 net.cpp:380] data -> label
    I0505 15:40:16.330996 5317 net.cpp:122] Setting up data
    I0505 15:40:16.331058 5317 net.cpp:129] Top shape: 1 3 375 500 (562500)
    I0505 15:40:16.331099 5317 net.cpp:129] Top shape: 1 1 375 500 (187500)
    I0505 15:40:16.331130 5317 net.cpp:137] Memory required for data: 3000000
    I0505 15:40:16.331173 5317 layer_factory.hpp:77] Creating layer data_data_0_split
    I0505 15:40:16.331220 5317 net.cpp:84] Creating Layer data_data_0_split
    I0505 15:40:16.331256 5317 net.cpp:406] data_data_0_split <- data
    I0505 15:40:16.331293 5317 net.cpp:380] data_data_0_split -> data_data_0_split_0
    I0505 15:40:16.331348 5317 net.cpp:380] data_data_0_split -> data_data_0_split_1
    I0505 15:40:16.331512 5317 net.cpp:122] Setting up data_data_0_split
    I0505 15:40:16.331542 5317 net.cpp:129] Top shape: 1 3 375 500 (562500)
    I0505 15:40:16.331569 5317 net.cpp:129] Top shape: 1 3 375 500 (562500)
    I0505 15:40:16.331596 5317 net.cpp:137] Memory required for data: 7500000
    I0505 15:40:16.331619 5317 layer_factory.hpp:77] Creating layer conv1_1
    I0505 15:40:16.331663 5317 net.cpp:84] Creating Layer conv1_1
    I0505 15:40:16.331687 5317 net.cpp:406] conv1_1 <- data_data_0_split_0
    I0505 15:40:16.331720 5317 net.cpp:380] conv1_1 -> conv1_1
    I0505 15:40:17.840531 5317 net.cpp:122] Setting up conv1_1
    I0505 15:40:17.840585 5317 net.cpp:129] Top shape: 1 64 573 698 (25597056)
    I0505 15:40:17.840610 5317 net.cpp:137] Memory required for data: 109888224
    I0505 15:40:17.840651 5317 layer_factory.hpp:77] Creating layer relu1_1
    I0505 15:40:17.840685 5317 net.cpp:84] Creating Layer relu1_1
    I0505 15:40:17.840703 5317 net.cpp:406] relu1_1 <- conv1_1
    I0505 15:40:17.840723 5317 net.cpp:367] relu1_1 -> conv1_1 (in-place)
    I0505 15:40:17.842114 5317 net.cpp:122] Setting up relu1_1
    I0505 15:40:17.842149 5317 net.cpp:129] Top shape: 1 64 573 698 (25597056)
    I0505 15:40:17.842166 5317 net.cpp:137] Memory required for data: 212276448
    I0505 15:40:17.842182 5317 layer_factory.hpp:77] Creating layer conv1_2
    I0505 15:40:17.842221 5317 net.cpp:84] Creating Layer conv1_2
    I0505 15:40:17.842236 5317 net.cpp:406] conv1_2 <- conv1_1
    I0505 15:40:17.842260 5317 net.cpp:380] conv1_2 -> conv1_2
    I0505 15:40:17.847681 5317 net.cpp:122] Setting up conv1_2
    I0505 15:40:17.847723 5317 net.cpp:129] Top shape: 1 64 573 698 (25597056)
    I0505 15:40:17.847745 5317 net.cpp:137] Memory required for data: 314664672
    I0505 15:40:17.847779 5317 layer_factory.hpp:77] Creating layer relu1_2
    I0505 15:40:17.847810 5317 net.cpp:84] Creating Layer relu1_2
    I0505 15:40:17.847826 5317 net.cpp:406] relu1_2 <- conv1_2
    I0505 15:40:17.847846 5317 net.cpp:367] relu1_2 -> conv1_2 (in-place)
    I0505 15:40:17.849138 5317 net.cpp:122] Setting up relu1_2
    I0505 15:40:17.849169 5317 net.cpp:129] Top shape: 1 64 573 698 (25597056)
    I0505 15:40:17.849186 5317 net.cpp:137] Memory required for data: 417052896
    I0505 15:40:17.849202 5317 layer_factory.hpp:77] Creating layer pool1
    I0505 15:40:17.849226 5317 net.cpp:84] Creating Layer pool1
    I0505 15:40:17.849241 5317 net.cpp:406] pool1 <- conv1_2
    I0505 15:40:17.849261 5317 net.cpp:380] pool1 -> pool1
    I0505 15:40:17.849390 5317 net.cpp:122] Setting up pool1
    I0505 15:40:17.849406 5317 net.cpp:129] Top shape: 1 64 287 349 (6410432)
    I0505 15:40:17.849421 5317 net.cpp:137] Memory required for data: 442694624
    I0505 15:40:17.849434 5317 layer_factory.hpp:77] Creating layer conv2_1
    I0505 15:40:17.849462 5317 net.cpp:84] Creating Layer conv2_1
    I0505 15:40:17.849475 5317 net.cpp:406] conv2_1 <- pool1
    I0505 15:40:17.849496 5317 net.cpp:380] conv2_1 -> conv2_1
    I0505 15:40:17.853972 5317 net.cpp:122] Setting up conv2_1
    I0505 15:40:17.854018 5317 net.cpp:129] Top shape: 1 128 287 349 (12820864)
    I0505 15:40:17.854041 5317 net.cpp:137] Memory required for data: 493978080
    I0505 15:40:17.854084 5317 layer_factory.hpp:77] Creating layer relu2_1
    I0505 15:40:17.854116 5317 net.cpp:84] Creating Layer relu2_1
    I0505 15:40:17.854133 5317 net.cpp:406] relu2_1 <- conv2_1
    I0505 15:40:17.854153 5317 net.cpp:367] relu2_1 -> conv2_1 (in-place)
    I0505 15:40:17.855494 5317 net.cpp:122] Setting up relu2_1
    I0505 15:40:17.855537 5317 net.cpp:129] Top shape: 1 128 287 349 (12820864)
    I0505 15:40:17.855561 5317 net.cpp:137] Memory required for data: 545261536
    I0505 15:40:17.855579 5317 layer_factory.hpp:77] Creating layer conv2_2
    I0505 15:40:17.855623 5317 net.cpp:84] Creating Layer conv2_2
    I0505 15:40:17.855640 5317 net.cpp:406] conv2_2 <- conv2_1
    I0505 15:40:17.855669 5317 net.cpp:380] conv2_2 -> conv2_2
    I0505 15:40:17.861979 5317 net.cpp:122] Setting up conv2_2
    I0505 15:40:17.862028 5317 net.cpp:129] Top shape: 1 128 287 349 (12820864)
    I0505 15:40:17.862051 5317 net.cpp:137] Memory required for data: 596544992
    I0505 15:40:17.862080 5317 layer_factory.hpp:77] Creating layer relu2_2
    I0505 15:40:17.862112 5317 net.cpp:84] Creating Layer relu2_2
    I0505 15:40:17.862129 5317 net.cpp:406] relu2_2 <- conv2_2
    I0505 15:40:17.862150 5317 net.cpp:367] relu2_2 -> conv2_2 (in-place)
    I0505 15:40:17.863425 5317 net.cpp:122] Setting up relu2_2
    I0505 15:40:17.863457 5317 net.cpp:129] Top shape: 1 128 287 349 (12820864)
    I0505 15:40:17.863476 5317 net.cpp:137] Memory required for data: 647828448
    I0505 15:40:17.863492 5317 layer_factory.hpp:77] Creating layer pool2
    I0505 15:40:17.863514 5317 net.cpp:84] Creating Layer pool2
    I0505 15:40:17.863529 5317 net.cpp:406] pool2 <- conv2_2
    I0505 15:40:17.863554 5317 net.cpp:380] pool2 -> pool2
    I0505 15:40:17.863689 5317 net.cpp:122] Setting up pool2
    I0505 15:40:17.863708 5317 net.cpp:129] Top shape: 1 128 144 175 (3225600)
    I0505 15:40:17.863723 5317 net.cpp:137] Memory required for data: 660730848
    I0505 15:40:17.863735 5317 layer_factory.hpp:77] Creating layer conv3_1
    I0505 15:40:17.863765 5317 net.cpp:84] Creating Layer conv3_1
    I0505 15:40:17.863780 5317 net.cpp:406] conv3_1 <- pool2
    I0505 15:40:17.863798 5317 net.cpp:380] conv3_1 -> conv3_1
    I0505 15:40:17.869124 5317 net.cpp:122] Setting up conv3_1
    I0505 15:40:17.869174 5317 net.cpp:129] Top shape: 1 256 144 175 (6451200)
    I0505 15:40:17.869195 5317 net.cpp:137] Memory required for data: 686535648
    I0505 15:40:17.869246 5317 layer_factory.hpp:77] Creating layer relu3_1
    I0505 15:40:17.869279 5317 net.cpp:84] Creating Layer relu3_1
    I0505 15:40:17.869299 5317 net.cpp:406] relu3_1 <- conv3_1
    I0505 15:40:17.869324 5317 net.cpp:367] relu3_1 -> conv3_1 (in-place)
    I0505 15:40:17.870867 5317 net.cpp:122] Setting up relu3_1
    I0505 15:40:17.870905 5317 net.cpp:129] Top shape: 1 256 144 175 (6451200)
    I0505 15:40:17.870934 5317 net.cpp:137] Memory required for data: 712340448
    I0505 15:40:17.870950 5317 layer_factory.hpp:77] Creating layer conv3_2
    I0505 15:40:17.870985 5317 net.cpp:84] Creating Layer conv3_2
    I0505 15:40:17.871001 5317 net.cpp:406] conv3_2 <- conv3_1
    I0505 15:40:17.871023 5317 net.cpp:380] conv3_2 -> conv3_2
    I0505 15:40:17.877616 5317 net.cpp:122] Setting up conv3_2
    I0505 15:40:17.877665 5317 net.cpp:129] Top shape: 1 256 144 175 (6451200)
    I0505 15:40:17.877687 5317 net.cpp:137] Memory required for data: 738145248
    I0505 15:40:17.877713 5317 layer_factory.hpp:77] Creating layer relu3_2
    I0505 15:40:17.877748 5317 net.cpp:84] Creating Layer relu3_2
    I0505 15:40:17.877765 5317 net.cpp:406] relu3_2 <- conv3_2
    I0505 15:40:17.877785 5317 net.cpp:367] relu3_2 -> conv3_2 (in-place)
    I0505 15:40:17.879271 5317 net.cpp:122] Setting up relu3_2
    I0505 15:40:17.879315 5317 net.cpp:129] Top shape: 1 256 144 175 (6451200)
    I0505 15:40:17.879339 5317 net.cpp:137] Memory required for data: 763950048
    I0505 15:40:17.879357 5317 layer_factory.hpp:77] Creating layer conv3_3
    I0505 15:40:17.879406 5317 net.cpp:84] Creating Layer conv3_3
    I0505 15:40:17.879428 5317 net.cpp:406] conv3_3 <- conv3_2
    I0505 15:40:17.879454 5317 net.cpp:380] conv3_3 -> conv3_3
    I0505 15:40:17.887154 5317 net.cpp:122] Setting up conv3_3
    I0505 15:40:17.887202 5317 net.cpp:129] Top shape: 1 256 144 175 (6451200)
    I0505 15:40:17.887228 5317 net.cpp:137] Memory required for data: 789754848
    I0505 15:40:17.887259 5317 layer_factory.hpp:77] Creating layer relu3_3
    I0505 15:40:17.887291 5317 net.cpp:84] Creating Layer relu3_3
    I0505 15:40:17.887310 5317 net.cpp:406] relu3_3 <- conv3_3
    I0505 15:40:17.887331 5317 net.cpp:367] relu3_3 -> conv3_3 (in-place)
    I0505 15:40:17.888519 5317 net.cpp:122] Setting up relu3_3
    I0505 15:40:17.888551 5317 net.cpp:129] Top shape: 1 256 144 175 (6451200)
    I0505 15:40:17.888572 5317 net.cpp:137] Memory required for data: 815559648
    I0505 15:40:17.888586 5317 layer_factory.hpp:77] Creating layer pool3
    I0505 15:40:17.888613 5317 net.cpp:84] Creating Layer pool3
    I0505 15:40:17.888628 5317 net.cpp:406] pool3 <- conv3_3
    I0505 15:40:17.888649 5317 net.cpp:380] pool3 -> pool3
    I0505 15:40:17.888797 5317 net.cpp:122] Setting up pool3
    I0505 15:40:17.888816 5317 net.cpp:129] Top shape: 1 256 72 88 (1622016)
    I0505 15:40:17.888833 5317 net.cpp:137] Memory required for data: 822047712
    I0505 15:40:17.888845 5317 layer_factory.hpp:77] Creating layer conv4_1
    I0505 15:40:17.888873 5317 net.cpp:84] Creating Layer conv4_1
    I0505 15:40:17.888887 5317 net.cpp:406] conv4_1 <- pool3
    I0505 15:40:17.888906 5317 net.cpp:380] conv4_1 -> conv4_1
    I0505 15:40:17.897431 5317 net.cpp:122] Setting up conv4_1
    I0505 15:40:17.897486 5317 net.cpp:129] Top shape: 1 512 72 88 (3244032)
    I0505 15:40:17.897514 5317 net.cpp:137] Memory required for data: 835023840
    I0505 15:40:17.897552 5317 layer_factory.hpp:77] Creating layer relu4_1
    I0505 15:40:17.897588 5317 net.cpp:84] Creating Layer relu4_1
    I0505 15:40:17.897605 5317 net.cpp:406] relu4_1 <- conv4_1
    I0505 15:40:17.897629 5317 net.cpp:367] relu4_1 -> conv4_1 (in-place)
    I0505 15:40:17.899286 5317 net.cpp:122] Setting up relu4_1
    I0505 15:40:17.899335 5317 net.cpp:129] Top shape: 1 512 72 88 (3244032)
    I0505 15:40:17.899360 5317 net.cpp:137] Memory required for data: 847999968
    I0505 15:40:17.899381 5317 layer_factory.hpp:77] Creating layer conv4_2
    I0505 15:40:17.899421 5317 net.cpp:84] Creating Layer conv4_2
    I0505 15:40:17.899440 5317 net.cpp:406] conv4_2 <- conv4_1
    I0505 15:40:17.899468 5317 net.cpp:380] conv4_2 -> conv4_2
    I0505 15:40:17.911787 5317 net.cpp:122] Setting up conv4_2
    I0505 15:40:17.911835 5317 net.cpp:129] Top shape: 1 512 72 88 (3244032)
    I0505 15:40:17.911859 5317 net.cpp:137] Memory required for data: 860976096
    I0505 15:40:17.911897 5317 layer_factory.hpp:77] Creating layer relu4_2
    I0505 15:40:17.911931 5317 net.cpp:84] Creating Layer relu4_2
    I0505 15:40:17.911952 5317 net.cpp:406] relu4_2 <- conv4_2
    I0505 15:40:17.911975 5317 net.cpp:367] relu4_2 -> conv4_2 (in-place)
    I0505 15:40:17.913126 5317 net.cpp:122] Setting up relu4_2
    I0505 15:40:17.913168 5317 net.cpp:129] Top shape: 1 512 72 88 (3244032)
    I0505 15:40:17.913190 5317 net.cpp:137] Memory required for data: 873952224
    I0505 15:40:17.913206 5317 layer_factory.hpp:77] Creating layer conv4_3
    I0505 15:40:17.913239 5317 net.cpp:84] Creating Layer conv4_3
    I0505 15:40:17.913257 5317 net.cpp:406] conv4_3 <- conv4_2
    I0505 15:40:17.913282 5317 net.cpp:380] conv4_3 -> conv4_3
    I0505 15:40:17.925925 5317 net.cpp:122] Setting up conv4_3
    I0505 15:40:17.925977 5317 net.cpp:129] Top shape: 1 512 72 88 (3244032)
    I0505 15:40:17.926003 5317 net.cpp:137] Memory required for data: 886928352
    I0505 15:40:17.926033 5317 layer_factory.hpp:
