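This deep-learning approach to 68-point facial landmark detection adapts VGG as its prototype (hereafter "mini VGG"). It is presented in three parts: dataset preparation, network construction, and the final training process. For the project source, see: GitHub link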

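I. Dataset Preparation

1. Collecting the datasets

The first category is public datasets:

Datasets for 68-point facial landmark detection usually come from ibug; the official site is:

https://ibug.doc.ic.ac.uk/resources/facial-point-annotations/

The datasets that include both images and annotations (for some datasets only the annotations are free to download, without the images) are:

300W, AFW, HELEN, LFPW, and IBUG, five datasets in total.

For 68-point landmark detection on video frames, the 300-VW dataset below can be used:

https://ibug.doc.ic.ac.uk/resources/300-VW/

For an introduction to the datasets above, see: https://yinguobing.com/facial-landmark-localization-by-deep-learning-data-and-algorithm/

The second category is self-annotated datasets:

This part consists of annotating images I collected myself with an annotation tool. My own tool produces a txt file containing the coordinates of the 68 points, which then has to be converted to the same pts format as the public datasets with the following script: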

from __future__ import division, print_function

import os

txt_dir = "/Users/camlin_z/Data/68landmark/txt/"
txt_new_dir = "/Users/camlin_z/Data/68landmark/landmark/"


def trans_label():
    """Convert each annotation txt file into an iBUG-style .pts file."""
    files = os.listdir(txt_dir)
    for file in files:
        flag = file.find(".")
        if flag > 0:
            txt_name = file[:flag] + ".pts"
            print(txt_name)
            with open(txt_dir + file, 'r') as line:
                for label in line:
                    # Each line holds 136 numbers: x1 y1 x2 y2 ... x68 y68.
                    label = [float(v) for v in label.strip().split()]
                    if not label:
                        continue  # skip blank lines
                    with open(txt_new_dir + txt_name, 'w') as file_new:
                        file_new.write("version: 1\n")
                        file_new.write("n_points: 68\n")
                        file_new.write("{\n")
                        for i in range(0, 135, 2):
                            file_new.write(str(label[i]) + " " + str(label[i + 1]) + "\n")
                        file_new.write("}")
        else:
            print(file, " not exist!")


if __name__ == '__main__':
    trans_label()

After the steps above, the dataset is organized in the following form:

The dataset above also has to be split into two parts, a training set and a test set.
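One simple way to do the split is to shuffle the sample names with a fixed seed and cut the list at a chosen ratio. The sketch below is only an illustration: the helper name `split_dataset`, the 10% test ratio, and the seed are assumptions, not values taken from the original project.

```python
import random


def split_dataset(names, test_ratio=0.1, seed=0):
    """Shuffle sample names reproducibly and return (train, test).

    test_ratio and seed are illustrative defaults, not project settings.
    """
    shuffled = sorted(names)  # sort first so the result ignores input order
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_ratio))
    return shuffled[n_test:], shuffled[:n_test]


if __name__ == "__main__":
    samples = ["img_%03d" % i for i in range(20)]
    train, test = split_dataset(samples)
    print(len(train), len(test))
```

Because the seed is fixed, running the split again yields the same partition, which keeps the test set stable across experiments.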

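2. Preprocessing the datasets

With the five datasets above ready, the next step is a series of processing steps. Landmark detection runs after a face detector has produced a bounding box: the image is cropped to the face region, and the landmarks are then detected on that crop. This removes much of the interference from the rest of the image and lets the network focus on the landmark detection task. A face-only region therefore has to be cropped from each image, based on the annotated landmark positions, for use in training.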
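The core idea can be sketched in a few lines: take the bounding box of the landmarks, make it square and slightly larger, then express the landmarks in the coordinates of the resized crop. This is a simplified illustration under assumptions; the helper names `square_box_from_points` and `remap_points` are hypothetical, and unlike the full script in this section it does not use a face detector or push the box back inside the image.

```python
def square_box_from_points(points, scale=1.2):
    """Square [left, top, right, bottom] box around the landmarks, enlarged by scale."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    cx = (min(xs) + max(xs)) / 2.0
    cy = (min(ys) + max(ys)) / 2.0
    half = max(max(xs) - min(xs), max(ys) - min(ys)) * scale / 2.0
    side = int(round(2 * half))
    left = int(round(cx - half))
    top = int(round(cy - half))
    return [left, top, left + side, top + side]


def remap_points(points, box, out_size=224):
    """Map landmarks from full-image coordinates into the crop resized to out_size."""
    left, top, right, bottom = box
    rw = out_size / float(right - left)
    rh = out_size / float(bottom - top)
    return [[(x - left) * rw, (y - top) * rh] for x, y in points]


if __name__ == "__main__":
    landmarks = [[100, 100], [200, 150]]
    box = square_box_from_points(landmarks)
    print(box)
    print(remap_points(landmarks, box))
```

The same subtract-then-scale step is what has to be applied to every landmark whenever the image is cropped and resized.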

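The processing mainly follows:

https://yinguobing.com/facial-landmark-localization-by-deep-learning-data-collate/

Preprocessing the image also changes the landmark positions, however, and the code shared by that author does not transform the landmark coordinates after processing the image. I therefore modified the original code so that it processes the landmark coordinates together with the image and produces the label format our network needs for training. The code is as follows: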

# -*- coding: utf-8 -*-
"""
This script shows how to read iBUG pts file and draw all the landmark points on image.
"""
from __future__ import division

import os

import cv2

import face_detector_image as fd

try:
    from compiler.ast import flatten  # Python 2 only
except ImportError:
    def flatten(nested):
        """Fallback for Python 3: flatten a list of [x, y] pairs."""
        return [value for pair in nested for value in pair]

# 0: test the pts of crop image
# 1: output the crop image
test_flag = 0

# List all the files
filelist_train = ["300W/trainset", "afw", "data2", "data3", "data4/trainset",
                  "helen/trainset", "landmark/trainset", "lfpw/trainset"]
filelist_test = ["300W/testset", "data4/testset", "helen/testset",
                 "landmark/testset", "lfpw/testset"]
filelist = filelist_train

def mkr(dr):
    if not os.path.exists(dr):
        os.mkdir(dr)


def read_points(file_name=None):
    """
    Read points from .pts file.
    """
    points = []
    with open(file_name) as file:
        line_count = 0
        for line in file:
            if "version" in line or "points" in line or "{" in line or "}" in line:
                continue
            else:
                loc_x, loc_y = line.strip().split()
                points.append([float(loc_x), float(loc_y)])
                line_count += 1
    return points


def draw_landmark_point(image, points):
    """
    Draw landmark point on image.
    """
    for point in points:
        cv2.circle(image, (int(point[0]), int(point[1])),
                   2, (0, 255, 0), -1, cv2.LINE_AA)
    return image


def points_are_valid(points, image):
    """Check if all points are in image"""
    min_box = get_minimal_box(points)
    if box_in_image(min_box, image):
        return True
    return False

def get_square_box(box):
    """Get the square boxes which are ready for CNN from the boxes"""
    left_x = box[0]
    top_y = box[1]
    right_x = box[2]
    bottom_y = box[3]

    box_width = right_x - left_x
    box_height = bottom_y - top_y

    # Check if box is already a square. If not, make it a square.
    diff = box_height - box_width
    delta = int(abs(diff) / 2)

    if diff == 0:  # Already a square.
        return box
    elif diff > 0:  # Height > width, a slim box.
        left_x -= delta
        right_x += delta
        if diff % 2 == 1:
            right_x += 1
    else:  # Width > height, a short box.
        top_y -= delta
        bottom_y += delta
        if diff % 2 == 1:
            bottom_y += 1

    # Make sure box is always square.
    assert ((right_x - left_x) == (bottom_y - top_y)), 'Box is not square.'

    return [left_x, top_y, right_x, bottom_y]


def get_minimal_box(points):
    """
    Get the minimal bounding box of a group of points.
    The coordinates are also converted to int numbers.
    """
    min_x = int(min([point[0] for point in points]))
    max_x = int(max([point[0] for point in points]))
    min_y = int(min([point[1] for point in points]))
    max_y = int(max([point[1] for point in points]))
    return [min_x, min_y, max_x, max_y]

def move_box(box, offset):
    """Move the box to direction specified by offset"""
    left_x = box[0] + offset[0]
    top_y = box[1] + offset[1]
    right_x = box[2] + offset[0]
    bottom_y = box[3] + offset[1]
    return [left_x, top_y, right_x, bottom_y]


def expand_box(square_box, scale_ratio=1.2):
    """Scale up the box"""
    assert (scale_ratio >= 1), "Scale ratio should be greater than 1."
    delta = int((square_box[2] - square_box[0]) * (scale_ratio - 1) / 2)
    left_x = square_box[0] - delta
    left_y = square_box[1] - delta
    right_x = square_box[2] + delta
    right_y = square_box[3] + delta
    return [left_x, left_y, right_x, right_y]


def points_in_box(points, box):
    """Check if box contains all the points"""
    minimal_box = get_minimal_box(points)
    return (box[0] <= minimal_box[0] and
            box[1] <= minimal_box[1] and
            box[2] >= minimal_box[2] and
            box[3] >= minimal_box[3])


def box_in_image(box, image):
    """Check if the box is in image"""
    rows = image.shape[0]
    cols = image.shape[1]
    return box[0] >= 0 and box[1] >= 0 and box[2] <= cols and box[3] <= rows


def box_is_valid(image, points, box):
    """Check if box is valid."""
    # Box contains all the points.
    points_is_in_box = points_in_box(points, box)

    # Box is in image.
    box_is_in_image = box_in_image(box, image)

    # Box is square.
    w_equal_h = (box[2] - box[0]) == (box[3] - box[1])

    # Return the result.
    return box_is_in_image and points_is_in_box and w_equal_h

def fit_by_shifting(box, rows, cols):
    """Method 1: Try to move the box."""
    # Face box points.
    left_x = box[0]
    top_y = box[1]
    right_x = box[2]
    bottom_y = box[3]

    # Check if moving is possible.
    if right_x - left_x <= cols and bottom_y - top_y <= rows:
        if left_x < 0:  # left edge crossed, move right.
            right_x += abs(left_x)
            left_x = 0
        if right_x > cols:  # right edge crossed, move left.
            left_x -= (right_x - cols)
            right_x = cols
        if top_y < 0:  # top edge crossed, move down.
            bottom_y += abs(top_y)
            top_y = 0
        if bottom_y > rows:  # bottom edge crossed, move up.
            top_y -= (bottom_y - rows)
            bottom_y = rows

    return [left_x, top_y, right_x, bottom_y]


def fit_by_shrinking(box, rows, cols):
    """Method 2: Try to shrink the box."""
    # Face box points.
    left_x = box[0]
    top_y = box[1]
    right_x = box[2]
    bottom_y = box[3]

    # The first step would be get the interlaced area.
    if left_x < 0:  # left edge crossed, set zero.
        left_x = 0
    if right_x > cols:  # right edge crossed, set max.
        right_x = cols
    if top_y < 0:  # top edge crossed, set zero.
        top_y = 0
    if bottom_y > rows:  # bottom edge crossed, set max.
        bottom_y = rows

    # Then find out which is larger: the width or height. This will
    # be used to decide in which dimension the size would be shrunk.
    width = right_x - left_x
    height = bottom_y - top_y
    delta = abs(width - height)

    # Find out which dimension should be altered.
    if width > height:  # x should be altered.
        if left_x != 0 and right_x != cols:  # shrink from center.
            left_x += int(delta / 2)
            right_x -= int(delta / 2) + delta % 2
        elif left_x == 0:  # shrink from right.
            right_x -= delta
        else:  # shrink from left.
            left_x += delta
    else:  # y should be altered.
        if top_y != 0 and bottom_y != rows:  # shrink from center.
            top_y += int(delta / 2) + delta % 2
            bottom_y -= int(delta / 2)
        elif top_y == 0:  # shrink from bottom.
            bottom_y -= delta
        else:  # shrink from top.
            top_y += delta

    return [left_x, top_y, right_x, bottom_y]

def fit_box(box, image, points):
    """
    Try to fit the box, make sure it satisfy following conditions:
    - A square.
    - Inside the image.
    - Contains all the points.
    If all above failed, return None.
    """
    rows = image.shape[0]
    cols = image.shape[1]

    # First try to move the box.
    box_moved = fit_by_shifting(box, rows, cols)

    # If moving failed, try to shrink.
    if box_is_valid(image, points, box_moved):
        return box_moved
    else:
        box_shrinked = fit_by_shrinking(box, rows, cols)

    # If shrink failed, return None
    if box_is_valid(image, points, box_shrinked):
        return box_shrinked

    # Finally, Worst situation.
    print("Fitting failed!")
    return None


def get_valid_box(image, points):
    """
    Try to get a valid face box which meets the requirements.
    The function follows these steps:
        1. Try method 1, if failed:
        2. Try method 0, if failed:
        3. Return None
    """
    # Try method 1 first.
    def _get_postive_box(raw_boxes, points):
        for box in raw_boxes:
            # Move box down.
            diff_height_width = (box[3] - box[1]) - (box[2] - box[0])
            offset_y = int(abs(diff_height_width / 2))
            box_moved = move_box(box, [0, offset_y])

            # Make box square.
            square_box = get_square_box(box_moved)

            # Remove false positive boxes.
            if points_in_box(points, square_box):
                return square_box
        return None

    # Try to get a positive box from face detection results.
    _, raw_boxes = fd.get_facebox(image, threshold=0.5)
    positive_box = _get_postive_box(raw_boxes, points)
    if positive_box is not None:
        if box_in_image(positive_box, image) is True:
            return positive_box
        return fit_box(positive_box, image, points)

    # Method 1 failed, Method 0
    min_box = get_minimal_box(points)
    sqr_box = get_square_box(min_box)
    epd_box = expand_box(sqr_box)
    if box_in_image(epd_box, image) is True:
        return epd_box
    return fit_box(epd_box, image, points)

def get_new_pts(facebox, raw_points, label_txt, image_file, flag, ratio_w, ratio_h):
    """
    generate a new pts file according to face box
    """
    x = facebox[0]
    y = facebox[1]
    new_point = []
    label_pts = flatten(raw_points)
    label_txt.write(flag + image_file + ".jpg ")
    for i in range(0, 135, 2):
        # Shift into crop coordinates, then scale to the resized crop.
        x_temp = int((label_pts[i] - x) * ratio_w)
        y_temp = int((label_pts[i + 1] - y) * ratio_h)
        new_point.append([x_temp, y_temp])
    # Write the 136 values separated by single spaces, without a trailing space.
    label_txt.write(" ".join(str(v) for point in new_point for v in point))
    label_txt.write("\n")
    return new_point

def preview(point_file, test_flag, bbox_new_file):
    """
    Preview points on image.
    """
    # label_txt, flag, DATA_DST and DATA_TEST_DST are not parameters; this
    # function expects them to exist as module-level globals.
    # Read the points from file.
    raw_points = read_points(point_file)

    # Safe guard, make sure point importing goes well.
    assert len(raw_points) == 68, "The landmarks should contain 68 points."

    # Read the image.
    head, tail = os.path.split(point_file)
    image_file = tail.split('.')[-2]
    img_jpeg = os.path.join(head, image_file + ".jpeg")
    img_jpg = os.path.join(head, image_file + ".jpg")
    img_png = os.path.join(head, image_file + ".png")
    if os.path.exists(img_jpg):
        img = cv2.imread(img_jpg)
        img_file = img_jpg
    elif os.path.exists(img_jpeg):
        img = cv2.imread(img_jpeg)
        img_file = img_jpeg
    else:
        img = cv2.imread(img_png)
        img_file = img_png
    print(image_file)

    # Fast check: all points are in image.
    if points_are_valid(raw_points, img) is False:
        return None

    # Get the valid facebox.
    facebox = get_valid_box(img, raw_points)
    if facebox is None:
        print("Using minimal box.")
        facebox = get_minimal_box(raw_points)

    # Extract valid image area.
    face_area = img[facebox[1]:facebox[3], facebox[0]:facebox[2]]
    rw = 1
    rh = 1

    # Check if resize is needed.
    width = facebox[2] - facebox[0]
    height = facebox[3] - facebox[1]
    print("%d %d" % (width, height))
    if width != height:
        print("opps! %d %d" % (width, height))
    if (width != 224) or (height != 224):
        face_area = cv2.resize(face_area, (224, 224))
        rw = 224 / width
        rh = 224 / height

    # generate a new pts file according to facebox
    new_point = get_new_pts(facebox, raw_points, label_txt,
                            image_file, flag, rw, rh)

    if test_flag == 0:
        # verify the crop image whether match to 68 point or not
        face_area = draw_landmark_point(face_area, new_point)
        cv2.imwrite(DATA_TEST_DST + image_file + ".jpg", face_area)
    else:
        cv2.imwrite(DATA_DST + image_file + ".jpg", face_area)

    # Show the result.
    cv2.imshow("Crop face", face_area)
    if cv2.waitKey(10) == 27:
        cv2.waitKey()

    # # Show whole image in window.
    # width, height = img.shape[:2]
    # max_height = 640
    # if height > max_height:
    #     img = cv2.resize(
    #         img, (max_height, int(width * max_height / height)))
    # cv2.imshow("preview", img)
    # cv2.waitKey()

def main():
    """
    The main entrance
    """
    # preview() reads these names as module-level globals, so declare them
    # global here instead of leaving them local to main().
    global DATA_DST, DATA_TEST_DST, label_txt, flag
    root = "/Users/camlin_z/Data/data/"
    for file_string in filelist:
        # Directory that holds the source images
        DATA_DIR = root + file_string + "/"
        # Directory for the cropped images
        DATA_DST = root + file_string + "_crop/"
        # Directory for cropped images with the transformed landmarks drawn
        # on them, used to verify that the coordinate transform is correct
        DATA_TEST_DST = root + file_string + "_pts/"
        # Path of the txt label file that the network is trained on
        point_new_file = root + file_string + ".txt"
        flag = file_string + "/"

        pts_file_list = []
        for file_path, _, file_names in os.walk(DATA_DIR):
            for file_name in file_names:
                if file_name.split(".")[-1] in ["pts"]:
                    pts_file_list.append(os.path.join(file_path, file_name))

        label_txt = open(point_new_file, 'w')
        mkr(DATA_DST)
        mkr(DATA_TEST_DST)

        # Show the image one by one.
        for file_name in pts_file_list:
            preview(file_name, test_flag, point_new_file)
        label_txt.close()


if __name__ == "__main__":
    main()

3. Data augmentation

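The datasets above total only about five thousand images, which is clearly not enough for a neural network that needs large amounts of training data, so the datasets are augmented. Because of the project's requirements, the augmentation consists mainly of rotating the original images.

The rotation can follow one of two strategies:

1. Rotate the original image while keeping its original size.

2. Rotate the original image and expand the four sides of the output outward, so that nothing of the original image is cut off.

Four rotation ranges are used: ±15°, ±30°, ±45°, and ±60°. Three problems have to be solved during augmentation:

(1) The black regions produced by the rotation may affect the features learned by the convolutions.

(2) Rotating the face-only images produced above may move some annotated landmarks outside the image, so those landmarks are lost.

(3) The landmark coordinates have to be regenerated after the rotation.

For the first problem:

See the method in https://blog.csdn.net/guyuealian/article/details/77993410 , which fills the black regions by interpolating from the border values. This processing can produce some odd edge artifacts, however, as shown in the figure below:

I worry that these odd features may affect what the convolutional network finally learns, but I have not yet found a suitable solution. If anyone knows one, please leave a comment.

For the second problem:

See the code in https://www.oschina.net/translate/opencv-rotation , which stores the rotated image using the new width and height that result from the rotation, so that the four corners of the original image are not cut off. The image shown above is the result of rotating by -60° with this method.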
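The size of the enlarged canvas follows directly from the rotation angle. The sketch below shows that calculation on its own, without OpenCV; the function name `rotated_size` is an assumption made for illustration, and the small epsilon only guards against floating-point noise at angles such as 90°.

```python
import math


def rotated_size(width, height, angle_deg):
    """Width and height of the smallest axis-aligned canvas that holds
    the whole image after rotating it by angle_deg degrees."""
    theta = math.radians(angle_deg)
    sin_t = abs(math.sin(theta))
    cos_t = abs(math.cos(theta))
    new_w = sin_t * height + cos_t * width
    new_h = cos_t * height + sin_t * width
    # Subtract a tiny epsilon so values like 50.000000000000006 do not round up.
    return int(math.ceil(new_w - 1e-9)), int(math.ceil(new_h - 1e-9))


if __name__ == "__main__":
    print(rotated_size(224, 224, -60))
    print(rotated_size(100, 50, 90))
```

For a 224 x 224 crop rotated by 60° in either direction the canvas grows to 306 x 306, which is why the rotated image is resized back to 224 x 224 afterwards.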

For the third problem:

See the explanation in https://blog.csdn.net/songzitea/article/details/51043743 for how to do the conversion.

Another well-written post worth reading: https://charlesnord.github.io/2017/04/01/rotation/
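As a minimal sketch of the coordinate conversion, the function below rotates points about a center directly in image coordinates (y axis pointing down), using the same sign convention as `cv2.getRotationMatrix2D`, where a positive angle turns the image counter-clockwise on screen. The names `rotate_points` and `all_inside` are hypothetical helpers, not part of the project code.

```python
import math


def rotate_points(points, center, angle_deg):
    """Rotate [x, y] points about center by angle_deg in image coordinates."""
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    cx, cy = center
    rotated = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        rotated.append([cos_t * dx + sin_t * dy + cx,
                        -sin_t * dx + cos_t * dy + cy])
    return rotated


def all_inside(points, width, height):
    """True if every point lies inside a width x height image."""
    return all(0 <= x < width and 0 <= y < height for x, y in points)


if __name__ == "__main__":
    pts = rotate_points([[212, 112]], (112, 112), 90)
    print(pts, all_inside(pts, 224, 224))
```

A check like `all_inside` is what decides whether a rotation at the original size keeps all 68 landmarks or whether the enlarged canvas is needed.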

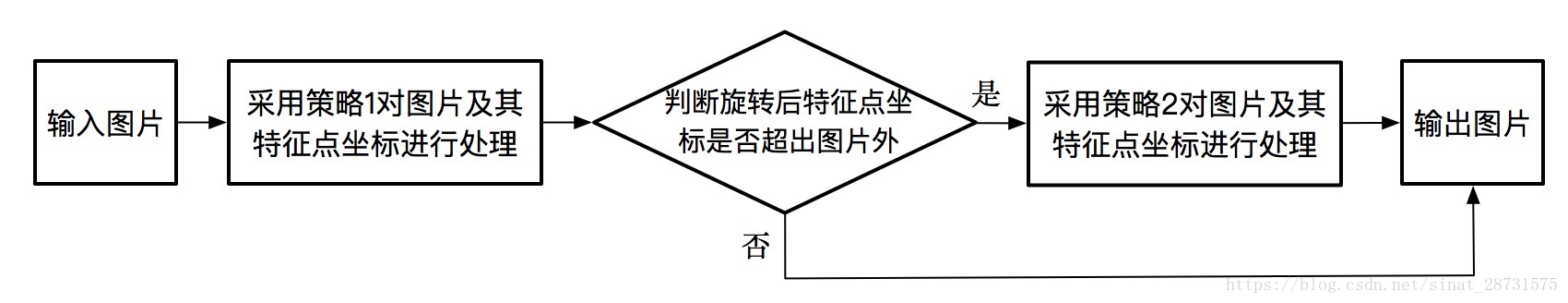

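With the problems above solved one by one, one point remains: strategy 2 produces the large regions of odd features shown in the image above, whereas strategy 1 does not. The overall logic of my rotation augmentation is therefore to rotate at the original size first and to fall back to the size-adjusting rotation only when a landmark ends up outside the image.

Following this process, the code below can be written: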

#-*- coding: UTF-8 -*-
from __future__ import division

import math
import os

import cv2
import numpy as np

filelist = ["300W/trainset", "afw/trainset", "data2/trainset", "data3/trainset",
            "data4/trainset", "helen/trainset", "landmark/trainset", "lfpw/trainset",
            "300W/testset", "afw/testset", "data2/testset", "data3/testset",
            "data4/testset", "helen/testset", "landmark/testset", "lfpw/testset"]

img_dir = "/Users/camlin_z/Data/data_output/"

angles = [15, 30, 45, 60]

def mkr(dr):
    if not os.path.exists(dr):
        os.mkdir(dr)


def read_points(file_name=None):
    """
    Read points from .pts file.
    """
    points = []
    with open(file_name) as file:
        line_count = 0
        for line in file:
            if "version" in line or "points" in line or "{" in line or "}" in line:
                continue
            else:
                loc_x, loc_y = line.strip().split()
                points.append([float(loc_x), float(loc_y)])
                line_count += 1
    return points


def draw_save_landmark(image, points, dst):
    """
    Draw landmark point on image.
    """
    for point in points:
        cv2.circle(image, (int(point[0]), int(point[1])),
                   2, (0, 255, 0), -1, cv2.LINE_AA)
    cv2.imwrite(dst, image)


def trans_label(txt, label):
    """Write the points to a .pts file."""
    with open(txt, 'w') as file_new:
        file_new.write("version: 1\n")
        file_new.write("n_points: 68\n")
        file_new.write("{\n")
        for point in label:
            file_new.write(str(point[0]) + " " + str(point[1]) + "\n")
        file_new.write("}")

def rotate_with_adjust_size(img, theta):
    img_raw = cv2.imread(img)
    height, width = img_raw.shape[:2]
    center = (width / 2, height / 2)
    scale = 1
    rangle = np.deg2rad(theta)  # angle in radians

    # now calculate new image width and height
    nw = (abs(np.sin(rangle) * height) + abs(np.cos(rangle) * width)) * scale
    nh = (abs(np.cos(rangle) * height) + abs(np.sin(rangle) * width)) * scale

    # ask OpenCV for the rotation matrix
    rot_mat = cv2.getRotationMatrix2D((nw * 0.5, nh * 0.5), theta, scale)

    # calculate the move from the old center to the new center combined
    # with the rotation
    rot_move = np.dot(rot_mat, np.array([(nw - width) * 0.5, (nh - height) * 0.5, 0]))

    # the move only affects the translation, so update the translation
    # part of the transform
    rot_mat[0, 2] += rot_move[0]
    rot_mat[1, 2] += rot_move[1]

    # Pass flags and borderMode by keyword: the fourth positional argument
    # of cv2.warpAffine is dst, not the interpolation flag.
    img_rotate = cv2.warpAffine(img_raw, rot_mat,
                                (int(math.ceil(nw)), int(math.ceil(nh))),
                                flags=cv2.INTER_LANCZOS4,
                                borderMode=cv2.BORDER_REFLECT)

    offset_w = (nw - width) / 2
    offset_h = (nh - height) / 2

    img_rotate = cv2.resize(img_rotate, (224, 224))
    rw = 224 / nw
    rh = 224 / nh
    return img_rotate, center, offset_w, offset_h, rw, rh


def rotate_with_original_size(img, theta):
    img_raw = cv2.imread(img)
    height, width = img_raw.shape[:2]
    center = (width / 2, height / 2)
    rot_mat = cv2.getRotationMatrix2D(center, theta, 1)
    # Keyword arguments for the same reason as in rotate_with_adjust_size.
    img_rotate = cv2.warpAffine(img_raw, rot_mat, (width, height),
                                flags=cv2.INTER_LANCZOS4,
                                borderMode=cv2.BORDER_REFLECT)
    return img_rotate, center

def rotate_pts_original_size(img, points, center, angle):
    """Rotate the points; flag is 1 if any point leaves the image."""
    flag = 0
    new_points = []
    height, width = img.shape[:2]
    theta = np.deg2rad(angle)
    for i in range(len(points)):
        [x_raw, y_raw] = points[i]
        # Flip y so that the rotation is computed with the y axis pointing up.
        y_raw = height - y_raw
        (center_x, center_y) = center
        center_y = height - center_y
        x = round((x_raw - center_x) * math.cos(theta) - (y_raw - center_y) * math.sin(theta) + center_x)
        y = round((x_raw - center_x) * math.sin(theta) + (y_raw - center_y) * math.cos(theta) + center_y)
        x = int(x)
        y = int(height - y)
        # Also check the right and bottom edges, not only the left and top.
        if x <= 0 or y <= 0 or x >= width or y >= height:
            flag = 1
            break
        new_points.append([x, y])
    return new_points, flag


def rotate_pts_adjust_size(img, points, center, angle, offset_w, offset_h, rate_w, rate_h):
    new_points = []
    height, width = img.shape[:2]
    theta = np.deg2rad(angle)
    for i in range(len(points)):
        [x_raw, y_raw] = points[i]
        y_raw = height - y_raw
        (center_x, center_y) = center
        center_y = height - center_y
        x = round((x_raw - center_x) * math.cos(theta) - (y_raw - center_y) * math.sin(theta) + center_x)
        y = round((x_raw - center_x) * math.sin(theta) + (y_raw - center_y) * math.cos(theta) + center_y)
        # Shift into the enlarged canvas, then scale to the resized image.
        x = int((x + offset_w) * rate_w)
        y = int((height - y + offset_h) * rate_h)
        new_points.append([x, y])
    return new_points

def main():
    for angle in angles:
        for file_string in filelist:
            out_dir = os.path.join(img_dir, file_string + "_" + str(abs(angle)))
            out_verify_dir = out_dir + "/out/"
            mkr(out_dir)
            mkr(out_verify_dir)
            for file_path, _, file_names in os.walk(os.path.join(img_dir, file_string)):
                for file_name in file_names:
                    if file_name.split(".")[-1] in ["jpg", "png", "jpeg"]:
                        print(file_name)
                        # Path of the image to read
                        img_file_path = os.path.join(img_dir, file_string, file_name)
                        # Path of the pts file to read
                        pts_file_name = file_name.split(".")[0] + ".pts"
                        pts_file_path = os.path.join(img_dir, file_string, pts_file_name)
                        # Path of the pts file to write
                        pts_new_dir = os.path.join(out_dir, pts_file_name)

                        ############ Rotate image and points at original size ############
                        # Pick a random rotation angle within the given range
                        if angle == 15:
                            theta_pos = np.random.randint(0, 15)
                            theta_neg = np.random.randint(-15, 0)
                        elif angle == 30:
                            theta_pos = np.random.randint(15, 30)
                            theta_neg = np.random.randint(-30, -15)
                        elif angle == 45:
                            theta_pos = np.random.randint(30, 45)
                            theta_neg = np.random.randint(-45, -30)
                        else:
                            theta_pos = np.random.randint(45, 60)
                            theta_neg = np.random.randint(-60, -45)
                        arr = np.random.randint(0, 2)
                        if arr == 0:
                            theta = theta_pos
                        else:
                            theta = theta_neg
                        print(theta)

                        # Rotate the image
                        img, center = rotate_with_original_size(img_file_path, theta)
                        # Adjust the landmark coordinates accordingly
                        points = read_points(pts_file_path)
                        new_points, flag = rotate_pts_original_size(img, points, center, theta)

                        # flag tells whether any point left the image;
                        # if so, rotate again with the size-adjusting method
                        if flag == 1:
                            print(img_file_path + " warning!!!")
                            img, center, offset_w, offset_h, rw, rh = rotate_with_adjust_size(img_file_path, theta)
                            new_points = rotate_pts_adjust_size(img, points, center, theta, offset_w, offset_h, rw, rh)

                        # Write the image to the output folder
                        cv2.imwrite(os.path.join(out_dir, file_name), img)
                        # Write the new pts file to the output folder
                        trans_label(pts_new_dir, new_points)
                        # Draw the points on the image to verify their positions
                        out_img_path = os.path.join(out_verify_dir, file_name)
                        draw_save_landmark(img, new_points, out_img_path)


if __name__ == '__main__':
    main()

Once the steps above are finished, all of the data preprocessing is complete.

II. Building the Network Model

Caffe's image input layer supports only a single label per image, so the Caffe image data layer used here was modified to accept 136 label values as input:

#ifdef USE_OPENCV

#include <opencv2/core/core.hpp>

#include <fstream> // NOLINT(readability/streams)

#include <iostream> // NOLINT(readability/streams)

#include <string>

#include <utility>

#include <vector>

#include "caffe/data_transformer.hpp"

#include "caffe/layers/base_data_layer.hpp"

#include "caffe/layers/image_data_layer.hpp"

#include "caffe/util/benchmark.hpp"

#include "caffe/util/io.hpp"

#include "caffe/util/math_functions.hpp"

#include "caffe/util/rng.hpp"

namespace caffe {

template <typename Dtype>

ImageDataLayer<Dtype>::~ImageDataLayer<Dtype>() {

this->StopInternalThread();

}

template <typename Dtype>

int ImageDataLayer<Dtype>::Rand(int n) {

if (n < 1) return 1;

caffe::rng_t* rng =

static_cast<caffe::rng_t*>(prefetch_rng_->generator());

return ((*rng)() % n);

}

template <typename Dtype>

void ImageDataLayer<Dtype>::DataLayerSetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

const int new_height = this->layer_param_.image_data_param().new_height();

const int new_width = this->layer_param_.image_data_param().new_width();

const bool is_color = this->layer_param_.image_data_param().is_color();

const bool shuffleflag = this->layer_param_.image_data_param().shuffle();

string root_folder = this->layer_param_.image_data_param().root_folder();

CHECK((new_height == 0 && new_width == 0) ||

(new_height > 0 && new_width > 0)) << "Current implementation requires "

"new_height and new_width to be set at the same time.";

// Read the file with filenames and labels

const string& source = this->layer_param_.image_data_param().source();

LOG(INFO) << "Opening file " << source;

std::ifstream infile(source.c_str());

string line;

int pos;

int label_dim = 0;

bool gfirst = true;

int rd = shuffleflag?4:0;

while (std::getline(infile, line)) {

if(line.find_last_of(' ')==line.size()-2) line.erase(line.find_last_not_of(' ')-1);

pos = line.find_first_of(' ');

string str = line.substr(0, pos);

int p0 = pos + 1;

vector<float> vl;

while (pos != -1){

pos = line.find_first_of(' ', p0);

vl.push_back(atof(line.substr(p0, pos).c_str()));

p0 = pos + 1;

}

if (shuffleflag) {

float minx = vl[0];

float maxx = minx;

float miny = vl[1];

float maxy = miny;

for (int i = 2; i < vl.size(); i += 2){

if (vl[i] < minx) minx = vl[i];

else if (vl[i] > maxx) maxx = vl[i];

if (vl[i + 1] < miny) miny = vl[i + 1];

else if (vl[i + 1] > maxy) maxy = vl[i + 1];

}

vl.push_back(minx);

vl.push_back(maxx + 1);

vl.push_back(miny);

vl.push_back(maxy + 1);

}

if (gfirst){

label_dim = vl.size();

gfirst = false;

LOG(INFO) << "label dim: " << label_dim - rd;

//LOG(INFO) << line;

}

CHECK_EQ(vl.size(), label_dim) << "label dim not match in: " << lines_.size()<<", "<<lines_[lines_.size()-1].first;

lines_.push_back(std::make_pair(str, vl));

}

CHECK(!lines_.empty()) << "File is empty";

if (shuffleflag) {

// randomly shuffle data

LOG(INFO) << "Shuffling data & randomly crop image";

const unsigned int prefetch_rng_seed = caffe_rng_rand();

prefetch_rng_.reset(new Caffe::RNG(prefetch_rng_seed));

ShuffleImages();

} else {

if (this->phase_ == TRAIN && Caffe::solver_rank() > 0 &&

this->layer_param_.image_data_param().rand_skip() == 0) {

LOG(WARNING) << "Shuffling or skipping recommended for multi-GPU";

}

}

LOG(INFO) << "A total of " << lines_.size() << " images.";

lines_id_ = 0;

// Check if we would need to randomly skip a few data points

if (this->layer_param_.image_data_param().rand_skip()) {

unsigned int skip = caffe_rng_rand() %

this->layer_param_.image_data_param().rand_skip();

LOG(INFO) << "Skipping first " << skip << " data points.";

CHECK_GT(lines_.size(), skip) << "Not enough points to skip";

lines_id_ = skip;

}

// Read an image, and use it to initialize the top blob.

cv::Mat cv_img = ReadImageToCVMat(root_folder + lines_[lines_id_].first,

0, 0, is_color);

CHECK(cv_img.data) << "Could not load " << lines_[lines_id_].first;

// Use data_transformer to infer the expected blob shape from a cv_image.

vector<int> top_shape(4);

top_shape[0] = 1;

top_shape[1] = cv_img.channels();

top_shape[2] = shuffleflag ? new_height : cv_img.rows;

top_shape[3] = shuffleflag ? new_width : cv_img.cols;

this->transformed_data_.Reshape(top_shape);

// Reshape prefetch_data and top[0] according to the batch_size.

const int batch_size = this->layer_param_.image_data_param().batch_size();

CHECK_GT(batch_size, 0) << "Positive batch size required";

top_shape[0] = batch_size;

for (int i = 0; i < this->prefetch_.size(); ++i) {

this->prefetch_[i]->data_.Reshape(top_shape);

}

top[0]->Reshape(top_shape);

LOG(INFO) << "output data size: " << top[0]->num() << ","

<< top[0]->channels() << "," << top[0]->height() << ","

<< top[0]->width();

// label

vector<int> label_shape(2, batch_size);

label_shape[1] = label_dim-rd;

top[1]->Reshape(label_shape);

for (int i = 0; i < this->prefetch_.size(); ++i) {

this->prefetch_[i]->label_.Reshape(label_shape);

}

}

template <typename Dtype>

void ImageDataLayer<Dtype>::ShuffleImages() {

caffe::rng_t* prefetch_rng =

static_cast<caffe::rng_t*>(prefetch_rng_->generator());

shuffle(lines_.begin(), lines_.end(), prefetch_rng);

}

// This function is called on prefetch thread

template <typename Dtype>

void ImageDataLayer<Dtype>::load_batch(Batch<Dtype>* batch) {

CPUTimer batch_timer;

batch_timer.Start();

double read_time = 0;

double trans_time = 0;

CPUTimer timer;

CHECK(batch->data_.count());

CHECK(this->transformed_data_.count());

ImageDataParameter image_data_param = this->layer_param_.image_data_param();

const int batch_size = image_data_param.batch_size();

const float rate_height = this->layer_param_.image_data_param().rate_height();

const float rate_width = this->layer_param_.image_data_param().rate_width();

const bool is_color = image_data_param.is_color();

const bool shuffleflag = this->layer_param_.image_data_param().shuffle();

string root_folder = image_data_param.root_folder();

// Reshape according to the first image of each batch

// on single input batches allows for inputs of varying dimension.

cv::Mat cv_img = ReadImageToCVMat(root_folder + lines_[lines_id_].first,

0, 0, is_color);

CHECK(cv_img.data) << "Could not load " << lines_[lines_id_].first;

const int new_height = shuffleflag ? image_data_param.new_height() : cv_img.rows;

const int new_width = shuffleflag ? image_data_param.new_width() : cv_img.cols;

// Use data_transformer to infer the expected blob shape from a cv_img.

vector<int> top_shape(4);

top_shape[0] = 1;

top_shape[1] = cv_img.channels();

top_shape[2] = new_height;

top_shape[3] = new_width;

this->transformed_data_.Reshape(top_shape);

// Reshape batch according to the batch_size.

top_shape[0] = batch_size;

batch->data_.Reshape(top_shape);

vector<int> top_shape1(4);

top_shape1[0] = batch_size;

top_shape1[1] = shuffleflag ? lines_[0].second.size() - 4 : lines_[0].second.size();

top_shape1[2] = 1;

top_shape1[3] = 1;

batch->label_.Reshape(top_shape1);

Dtype* prefetch_data = batch->data_.mutable_cpu_data();

Dtype* prefetch_label = batch->label_.mutable_cpu_data();

// datum scales

const int lines_size = lines_.size();

const float dh_2 = (new_height - 1)*0.5;

const float dw_2 = (new_width - 1)*0.5;

for (int item_id = 0; item_id < batch_size; ++item_id) {

// get a blob

timer.Start();

CHECK_GT(lines_size, lines_id_);

cv::Mat cv_img = ReadImageToCVMat(root_folder + lines_[lines_id_].first,

0, 0, is_color);

CHECK(cv_img.data) << "Could not load " << lines_[lines_id_].first;

read_time += timer.MicroSeconds();

timer.Start();

// Apply transformations (mirror, crop...) to the image

int x1 = 0;

int y1 = 0;

int x2 = cv_img.cols;

int y2 = cv_img.rows;

if (shuffleflag){

CHECK_GE(cv_img.rows, new_height) << lines_[lines_id_].first;

CHECK_GE(cv_img.cols, new_width) << lines_[lines_id_].first;

int minx = lines_[lines_id_].second[top_shape1[1]];

int maxx = lines_[lines_id_].second[top_shape1[1] + 1];

int miny = lines_[lines_id_].second[top_shape1[1] + 2];

int maxy = lines_[lines_id_].second[top_shape1[1] + 3];

x1 = Rand(2 * round(rate_width*cv_img.cols));

y1 = Rand(2 * round(rate_height*cv_img.rows));

x2 = x1 + new_width;

y2 = y1 + new_height;

if (x1 > minx){

x2 -= x1 - minx;

x1 = minx;

}

if (x2 < maxx){

x1 += maxx - x2;

x2 = maxx;

}

if (x1<0){

x2 += -x1;

x1 = 0;

}

if (x2 > cv_img.cols){

x1 -= x2 - cv_img.cols;

x2 = cv_img.cols;

}

if (y1 > miny){

y2 -= y1 - miny;

y1 = miny;

}

if (y2 < maxy){

y1 += maxy - y2;

y2 = maxy;

}

if (y1<0){

y2 += -y1;

y1 = 0;

}

if (y2>cv_img.rows){

y1 -= y2 - cv_img.rows;

y2 = cv_img.rows;

}

}

if (y2 - y1 != new_height || x2 - x1 != new_width){

printf("%s y1:%d, y2:%d, x1:%d, x2:%d\n", lines_[lines_id_].first.c_str(),y1,y2,x1,x2);

}

//

int offset = batch->data_.offset(item_id);

this->transformed_data_.set_cpu_data(prefetch_data + offset);

this->data_transformer_->Transform(cv_img(cv::Range(y1, y2), cv::Range(x1, x2)), &(this->transformed_data_));

trans_time += timer.MicroSeconds();

for (int i = 0; i < top_shape1[1]; i++){

if (i % 2 == 0) prefetch_label[item_id*top_shape1[1] + i] = (lines_[lines_id_].second[i] - x1 - dw_2) / dw_2;

else prefetch_label[item_id*top_shape1[1] + i] = (lines_[lines_id_].second[i] - y1 - dh_2) / dh_2;

}

// go to the next iter

lines_id_++;

if (lines_id_ >= lines_size) {

// We have reached the end. Restart from the first.

DLOG(INFO) << "Restarting data prefetching from start.";

lines_id_ = 0;

if (shuffleflag) {

ShuffleImages();

}

}

}

batch_timer.Stop();

DLOG(INFO) << "Prefetch batch: " << batch_timer.MilliSeconds() << " ms.";

DLOG(INFO) << " Read time: " << read_time / 1000 << " ms.";

DLOG(INFO) << "Transform time: " << trans_time / 1000 << " ms.";

}

INSTANTIATE_CLASS(ImageDataLayer);

REGISTER_LAYER_CLASS(ImageData);

} // namespace caffe

#endif // USE_OPENCV

After the modifications above, this Caffe can be compiled.
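To make the label handling in `load_batch` easier to follow, here is a small Python sketch of what the layer does to one line of the source file when no random crop is applied (`x1 = y1 = 0`): the line is split into a path and 136 values, and each value is mapped to roughly [-1, 1] using half of (size - 1), as in the `dw_2` and `dh_2` terms of the C++ code. The function names are hypothetical and only mirror the arithmetic; they are not part of Caffe.

```python
def parse_label_line(line):
    """Split 'path x1 y1 ... xN yN' into (path, list of floats)."""
    parts = line.strip().split()
    return parts[0], [float(v) for v in parts[1:]]


def normalize_labels(values, width, height):
    """Map pixel coordinates to [-1, 1]; even indices are x, odd are y."""
    half_w = (width - 1) * 0.5
    half_h = (height - 1) * 0.5
    return [(v - half_w) / half_w if i % 2 == 0 else (v - half_h) / half_h
            for i, v in enumerate(values)]


def denormalize_labels(values, width, height):
    """Inverse of normalize_labels: map network outputs back to pixels."""
    half_w = (width - 1) * 0.5
    half_h = (height - 1) * 0.5
    return [v * half_w + half_w if i % 2 == 0 else v * half_h + half_h
            for i, v in enumerate(values)]


if __name__ == "__main__":
    path, coords = parse_label_line("afw/1.jpg 0 0 223 223\n")
    norm = normalize_labels(coords, 224, 224)
    print(path, norm, denormalize_labels(norm, 224, 224))
```

The inverse mapping is what a test script has to apply to the network output before drawing the points on the image.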

For the network structure and solver.prototxt, see the contents of the landmark_detec folder on GitHub.
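The solver file also selects the learning-rate policy, and the training section of this post uses two of them, "fixed" and "multistep". As a rough sketch of how Caffe computes the rate under these two policies, the function below reproduces the formulas; the numbers in the usage example (base rate 0.01, gamma 0.1, steps at 20000 and 40000) are made-up illustrations, not the values from the project's solver.prototxt.

```python
def learning_rate(iteration, base_lr, policy="fixed", gamma=0.1, stepvalues=()):
    """Learning rate at a given iteration for two Caffe lr policies.

    fixed:     always base_lr
    multistep: base_lr * gamma ** (number of stepvalues already reached)
    """
    if policy == "fixed":
        return base_lr
    if policy == "multistep":
        steps_passed = sum(1 for step in stepvalues if iteration >= step)
        return base_lr * gamma ** steps_passed
    raise ValueError("unsupported policy: %s" % policy)


if __name__ == "__main__":
    for it in (0, 20000, 40000):
        print(it, learning_rate(it, 0.01, "multistep", 0.1, (20000, 40000)))
```

With "fixed" the rate never changes, which is one plausible reason for a loss plateau; "multistep" lowers the rate at chosen iterations so that training can keep making smaller adjustments.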

III. Training the Model

With all of the data and files above prepared, the following shell script can be used for training:

#!/bin/sh
cd ../

## MODIFY PATH for YOUR SETTING
CAFFE_DIR=/Users/camlin_z/Data/Project/caffe-68landmark
CONFIG_DIR=${CAFFE_DIR}/landmark_detec
CAFFE_BIN=${CAFFE_DIR}/build/tools/caffe
DEV_ID=0

sudo ${CAFFE_BIN} train \
    -solver=${CONFIG_DIR}/solver.prototxt \
    -weights=${CONFIG_DIR}/init.caffemodel \
    -gpu=${DEV_ID} \
    2>&1 | tee ${CONFIG_DIR}/train.log

Training started with the "fixed" learning-rate policy. After about 20,000 iterations the loss stopped decreasing, so I switched to the "multistep" policy. The resulting model works quite well, and inference takes roughly 80 ms on my Mac. The following script can be used for testing:

# coding=utf-8
import os
import time

import caffe
import cv2
import numpy as np
from PIL import Image, ImageDraw

blobname = "68point"
feature_dim = 136

root = "/Users/camlin_z/Data/Project/caffe-master-multilabel-normalize-randcrop-newloss/landmark_detec/"
deploy = root + "deploy.prototxt"
# caffe_model = root + "snapshot_all1/snapshot_iter_250000.caffemodel"
# caffe_model = root + "snapshot_part1/oldfinetune_iter_20000.caffemodel"
caffe_model = root + "init.caffemodel"
# caffe_model = root + "snapshot2/fine_iter_400000.caffemodel"
# caffe_model = root + "snapshot_final/final_iter_350000.caffemodel"

img_dir = "/Users/camlin_z/Data/data_fine/"
img_dir_out = "/Users/camlin_z/Data/data_fine/out/"
label_file = "/Users/camlin_z/Data/data_fine/label_test.txt"
img_path = "/Users/camlin_z/Data/data_fine/data2/2415.jpeg"

net = caffe.Net(deploy, caffe_model, caffe.TEST)
caffe.set_mode_cpu()

# Test a batch of images and report the average error over all of them
def detec_whole(img_dir, img_dir_out, label_file):
    time_sum = 0
    mser_sum = 0
    id_sum = 0
    with open(label_file, 'r') as fid:
        for line in fid:
            id_sum += 1
            flag = line.find(' ')
            # Read the label information from the file
            image_name = line[:flag]
            label_true = line[flag:].strip().split()
            label_true = np.array([float(v) for v in label_true], np.float32)
            imgname = os.path.basename(image_name)
            print(imgname)

            img = cv2.imread(img_dir + image_name)
            img_draw = img.copy()
            h, w = img.shape[:2]
            # Same half-size as dw_2 / dh_2 in the modified data layer.
            rw = (w - 1) / 2.0
            rh = (h - 1) / 2.0

            # The network below outputs the predicted coordinates of the 68 points
            img = np.array(img, np.float32)
            # Shape of the input blob (N, C, H, W)
            transformer = caffe.io.Transformer({'data': net.blobs['data'].data.shape})
            transformer.set_transpose('data', (2, 0, 1))
            transformer.set_mean('data', np.array([127.5, 127.5, 127.5]))
            net.blobs['data'].data[...] = transformer.preprocess('data', img)
            start = time.time()
            out = net.forward()
            landmark = out[blobname]
            elap = time.time() - start

            landmark = np.array(landmark, np.float32)
            # Undo the normalization: even indices are x, odd indices are y.
            landmark[0, 0::2] = landmark[0, 0::2] * rw + rw
            landmark[0, 1::2] = landmark[0, 1::2] * rh + rh

            time_sum += elap
            print("time: %s" % elap)

            for i in range(0, 136, 2):
                cv2.circle(img_draw, (int(landmark[0][i]), int(landmark[0][i + 1])),
                           2, (0, 255, 0), -1, cv2.LINE_AA)
            cv2.imwrite(img_dir_out + imgname, img_draw)

            # Euclidean distance per point, summed and divided by feature_dim
            v = label_true - landmark
            v = v * v
            v = v[0][0::2] + v[0][1::2]
            sv = np.power(v, 0.5)
            mser = sum(sv) / feature_dim
            mser_sum += mser
            print("mser: %s" % mser)

    print("Average time: %s" % (time_sum / id_sum))
    print("Average mser: %s" % (mser_sum / id_sum))

# Test a single image and draw the predicted landmark positions
def detec_single():
    img = cv2.imread(img_path)
    # draw = ImageDraw.Draw(img1)
    sh = img.shape
    print(sh)
    h = sh[0]
    w = sh[1]
    # Same half-size as dw_2 / dh_2 in the modified data layer.
    rw = (w - 1) / 2.0
    rh = (h - 1) / 2.0

    img = np.array(img, np.float32)
    img_copy = img.copy()
    transformer = caffe.io.Transformer({'data': net.blobs['data'].data.shape})
    transformer.set_transpose('data', (2, 0, 1))
    transformer.set_mean('data', np.array([127.5, 127.5, 127.5]))
    net.blobs['data'].data[...] = transformer.preprocess('data', img)
    start = time.time()
    out = net.forward()
    elap = time.time() - start
    print("time: %s" % elap)

    landmark = out[blobname]
    landmark = np.array(landmark, np.float32)
    # Undo the normalization: even indices are x, odd indices are y.
    landmark[0, 0::2] = landmark[0, 0::2] * rw + rw
    landmark[0, 1::2] = landmark[0, 1::2] * rh + rh

    for i in range(0, 136, 2):
        cv2.circle(img_copy, (int(landmark[0][i]), int(landmark[0][i + 1])),
                   2, (0, 255, 0), -1, cv2.LINE_AA)
    # draw.point(landmark[0], (225, 225, 255))
    # del draw
    cv2.imwrite(root + 'test.jpg', img_copy)
    # img1.show()


if __name__ == '__main__':
    detec_whole(img_dir, img_dir_out, label_file)
    # detec_single()

While writing the test code above, I found that on the Mac the caffe package conflicts with the cv2 package, so displaying an image with img.show() does not work properly. If anyone knows how to solve this, please let me know.
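The per-image error printed by `detec_whole` is based on the Euclidean distance between each predicted point and its ground-truth point. The sketch below computes the mean of that distance without Caffe or NumPy; `mean_point_error` is a hypothetical helper. Note that it divides by the number of points, whereas the script above divides the same sum by `feature_dim` (136), so the script's values are half of what this function returns for 68 points.

```python
import math


def mean_point_error(predicted, truth):
    """Mean Euclidean distance between paired landmarks.

    Both arguments are flat lists [x1, y1, x2, y2, ...] in pixels.
    """
    assert len(predicted) == len(truth) and len(predicted) % 2 == 0
    n_points = len(predicted) // 2
    total = 0.0
    for i in range(0, len(predicted), 2):
        total += math.hypot(predicted[i] - truth[i],
                            predicted[i + 1] - truth[i + 1])
    return total / n_points


if __name__ == "__main__":
    print(mean_point_error([0, 0, 3, 4], [0, 0, 0, 0]))
```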

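That is the whole process, and it is also the second complete project I did during my internship. If anything above is wrong or inaccurate, please point it out in the comments. Many thanks.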
