*部分内容转载于http://blog.csdn.net/songrotek/article/details/47662617

CMT,全称是Clustering of Static-Adaptive Correspondences for Deformable Object Tracking主要应用功能为对于物体的视觉跟踪。

视频跟踪常见的方法思路可以分为三大类:

第一类是基于整体的模型来跟踪,比如说TLD,通过不断的更新模型(也就是学习的过程)来实现对物体特征的良好表示。

第二类是基于物体的局部来跟踪,就是将物体分解为多个部分,对每一部分进行单独的跟踪,采用光流等方法

第三类是基于物体的特征点来跟踪,就是实时的监测物体的特征点,与一开始的特征点进行匹配的方法来实现物体的跟踪。

CMT算法采用的是第三种思路,就是利用特征点。其主要优势在于时间效率。

如果以神经网络来获取特征,那么那么多的参数,肯定会消耗大量的时间。而采用特征点的话,我们知道OpenCV中集成了很多检测特征点的算法,比如SIFT,FAST,BRISK等等,有的比如FAST速度很快的。而且这么做连滑动窗口检测都可以省掉啦。

那么问题在于如何判断下一帧的图像中哪些特征点是与当前的框中的特征点相匹配的问题了?只要能够很好地找到下一帧中物体的特征点,跟踪也就完成了。

因此,为了解决这个问题,作者做了一个看起来很简单的创新:就是计算特征点的相对位置,以框的中心来进行计算,对于不形变的物体而言,不管物体怎么移动旋转,其上面的特征点相对中心的距离是在缩放比例下是确定的,因此可以由此来排除不是的特征点。作者获取下一帧的特征点做了两部分工作:1个是计算前一帧的框中的特征点的光流,从而得到当前帧的特征点位置,另一个方法是直接计算当前帧的特征点,并与上一帧的特征点进行匹配,得到相匹配的特征点,然后把两个得到的特征点都融合在一起。就得到了下一帧的初步的特征点。然后在对特征点进行筛选,采用的就是上一段说的方法。

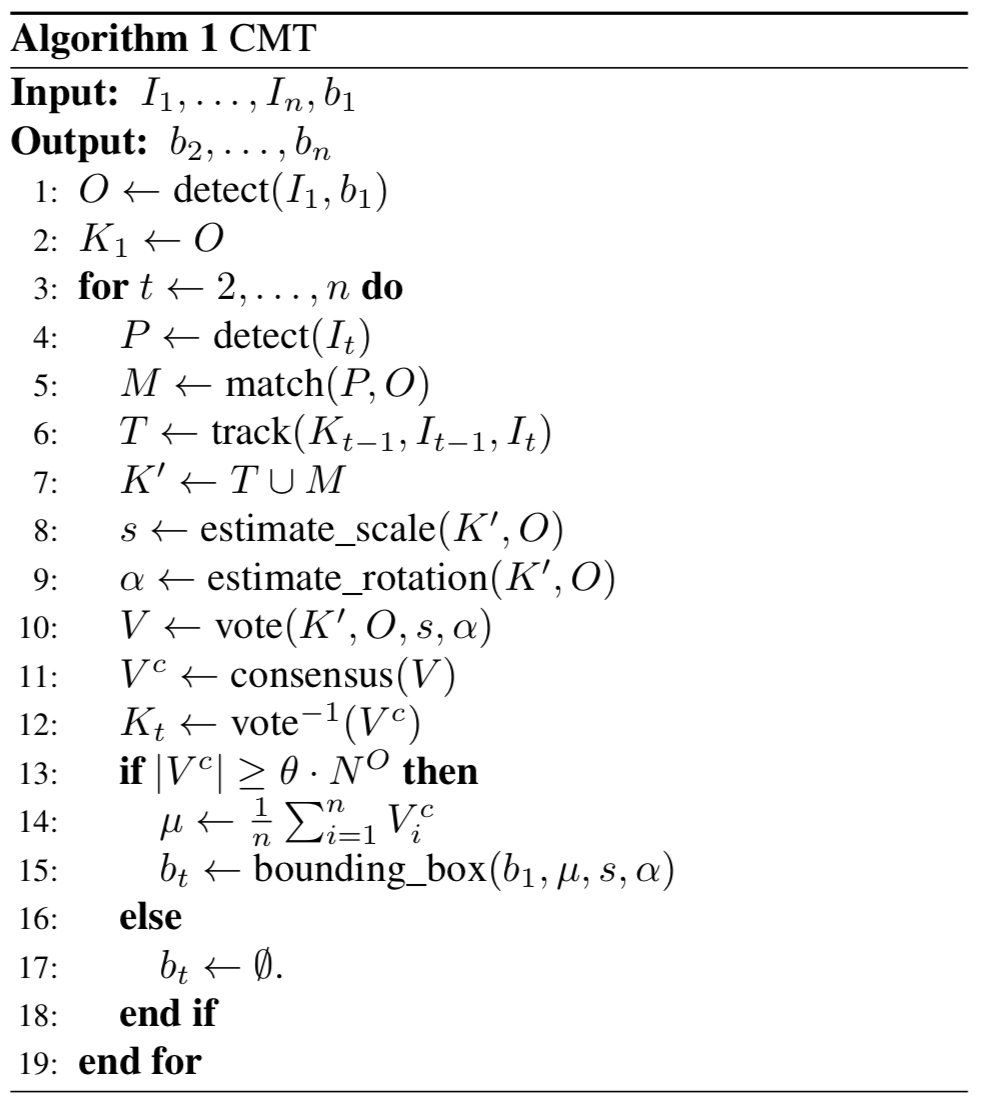

CMT算法流程

上文中的博客博主对其做了翻译。

Step 1:检测初始视频帧的所有特征点和特征描述,不仅仅是框内的点而是整个图像的点,所以在代码中可以看到,初始的database数据库的特征描述包含了前景(框内)和背景(框外)的特征

Step 2:将初始框内的特征描述赋给K1

Step 3:从第二帧开始

Step 4:检测视频帧的特征点P

Step 5:将特征点P与O匹配,获取匹配的特征点M

Step 6:利用上一帧的特征点使用光流法跟踪得到这一帧的特征点的位置T

Step 7:融合特征点M和特征点T得到这一帧的总的特征点K’

Step 8:根据K’估计特征点相对初始帧特征的缩放比例

Step 9:根据K’估计特征点相对初始帧特征的旋转比例

Step 10:根据Step7,8,9得到的数据计算每一个特征点的Vote

Step 11:采用聚类的方法选取最大的类也就是最一致的VoteC

Step 12:将VoteC转换回特征点得到最后这一帧的有效特征点

Step 13:判断VoteC的长度是否大于最小阈值,如果是则计算最后新的旋转矩形框的参数,如果不是也就是框太小了则输出0

首先是初始化,就是使用OpenCV做特征检测及特征描述。然后关键是存储有效的数据用于之后的匹配

void CMT::initialise(cv::Mat im_gray0, cv::Point2f topleft, cv::Point2f bottomright)

{

//Initialise detector, descriptor, matcher

detector = cv::Algorithm::create<cv::FeatureDetector>(detectorType.c_str());

descriptorExtractor = cv::Algorithm::create<cv::DescriptorExtractor>(descriptorType.c_str());

descriptorMatcher = cv::DescriptorMatcher::create(matcherType.c_str());

std::vector<std::string> list;

cv::Algorithm::getList(list);

//Get initial keypoints in whole image

std::vector<cv::KeyPoint> keypoints;

detector->detect(im_gray0, keypoints);

//Remember keypoints that are in the rectangle as selected keypoints

std::vector<cv::KeyPoint> selected_keypoints;

std::vector<cv::KeyPoint> background_keypoints;

inout_rect(keypoints, topleft, bottomright, selected_keypoints, background_keypoints);

descriptorExtractor->compute(im_gray0, selected_keypoints, selectedFeatures);

if(selected_keypoints.size() == 0)

{

printf("No keypoints found in selection");

return;

}

//Remember keypoints that are not in the rectangle as background keypoints

cv::Mat background_features;

descriptorExtractor->compute(im_gray0, background_keypoints, background_features);

//Assign each keypoint a class starting from 1, background is 0

selectedClasses = std::vector<int>();

for(int i = 1; i <= selected_keypoints.size(); i++)

selectedClasses.push_back(i);

std::vector<int> backgroundClasses;

for(int i = 0; i < background_keypoints.size(); i++)

backgroundClasses.push_back(0);

//Stack background features and selected features into database

featuresDatabase = cv::Mat(background_features.rows+selectedFeatures.rows, background_features.cols, background_features.type());

background_features.copyTo(featuresDatabase(cv::Rect(0,0,background_features.cols, background_features.rows)));

selectedFeatures.copyTo(featuresDatabase(cv::Rect(0,background_features.rows,selectedFeatures.cols, selectedFeatures.rows)));

//Same for classes

classesDatabase = std::vector<int>();

for(int i = 0; i < backgroundClasses.size(); i++)

classesDatabase.push_back(backgroundClasses[i]);

for(int i = 0; i < selectedClasses.size(); i++)

classesDatabase.push_back(selectedClasses[i]);

//Get all distances between selected keypoints in squareform and get all angles between selected keypoints

squareForm = std::vector<std::vector<double> >();

angles = std::vector<std::vector<double> >();

for(int i = 0; i < selected_keypoints.size(); i++)

{

std::vector<double> lineSquare;

std::vector<double> lineAngle;

for(int j = 0; j < selected_keypoints.size(); j++)

{

double dx = selected_keypoints[j].pt.x-selected_keypoints[i].pt.x;

double dy = selected_keypoints[j].pt.y-selected_keypoints[i].pt.y;

lineSquare.push_back(sqrt(dx*dx+dy*dy));

lineAngle.push_back(atan2(dy, dx));

}

squareForm.push_back(lineSquare);

angles.push_back(lineAngle);

}

//Find the center of selected keypoints

cv::Point2f center(0,0);

for(int i = 0; i < selected_keypoints.size(); i++)

center += selected_keypoints[i].pt;

center *= (1.0/selected_keypoints.size());

//Remember the rectangle coordinates relative to the center

centerToTopLeft = topleft - center;

centerToTopRight = cv::Point2f(bottomright.x, topleft.y) - center;

centerToBottomRight = bottomright - center;

centerToBottomLeft = cv::Point2f(topleft.x, bottomright.y) - center;

//Calculate springs of each keypoint

springs = std::vector<cv::Point2f>();

for(int i = 0; i < selected_keypoints.size(); i++)

springs.push_back(selected_keypoints[i].pt - center);

//Set start image for tracking

im_prev = im_gray0.clone();

//Make keypoints 'active' keypoints

activeKeypoints = std::vector<std::pair<cv::KeyPoint,int> >();

for(int i = 0; i < selected_keypoints.size(); i++)

activeKeypoints.push_back(std::make_pair(selected_keypoints[i], selectedClasses[i]));

//Remember number of initial keypoints

nbInitialKeypoints = selected_keypoints.size();

}

然后利用光流法计算关键点的当前位置,

基本的思路就是先使用上一帧的特征点points_prev通过光流计算这一帧的对应位置points_tracked,然后反过来使用points_tracked计算对应的上一帧的位置points_back,然后对比points_prev和points_back之间的距离,按道理应该是接近0才对,但是因为光流计算有误差,因此,有的可能比较大,因此作者设置了一个阈值thr_fb 30,如果大于该阈值,表示得到的数据有误,删掉该点。

这么做的目的是为了使跟踪得到的结果更可靠。

void track(cv::Mat im_prev, cv::Mat im_gray, const std::vector<std::pair<cv::KeyPoint, int> >& keypointsIN, std::vector<std::pair<cv::KeyPoint, int> >& keypointsTracked, std::vector<unsigned char>& status, int THR_FB)

{

//Status of tracked keypoint - True means successfully tracked

status = std::vector<unsigned char>();

//for(int i = 0; i < keypointsIN.size(); i++)

// status.push_back(false);

//If at least one keypoint is active

if(keypointsIN.size() > 0)

{

std::vector<cv::Point2f> pts;

std::vector<cv::Point2f> pts_back;

std::vector<cv::Point2f> nextPts;

std::vector<bool> status_back;

std::vector<float> err;

std::vector<float> err_back;

std::vector<float> fb_err;

for(int i = 0; i < keypointsIN.size(); i++)

pts.push_back(cv::Point2f(keypointsIN[i].first.pt.x,keypointsIN[i].first.pt.y));

//Calculate forward optical flow for prev_location

cv::calcOpticalFlowPyrLK(im_prev, im_gray, pts, nextPts, status, err);

//Calculate backward optical flow for prev_location

cv::calcOpticalFlowPyrLK(im_gray, im_prev, nextPts, pts_back, status_back, err_back);

//Calculate forward-backward error

for(int i = 0; i < pts.size(); i++)

{

cv::Point2d v = pts_back[i]-pts[i];

fb_err.push_back(sqrt(v.dot(v)));

}

//Set status depending on fb_err and lk error

for(int i = 0; i < status.size(); i++)

status[i] = fb_err[i] <= THR_FB & status[i];

keypointsTracked = std::vector<std::pair<cv::KeyPoint, int> >();

for(int i = 0; i < pts.size(); i++)

{

std::pair<cv::KeyPoint, int> p = keypointsIN[i];

if(status[i])

p.first.pt = nextPts[i];

keypointsTracked.push_back(p);

}

}

else keypointsTracked = std::vector<std::pair<cv::KeyPoint, int> >();

}我们也可以看到,这里

//Calculate forward optical flow for prev_location

cv::calcOpticalFlowPyrLK(im_prev, im_gray, pts, nextPts, status, err);

//Calculate backward optical flow for prev_location

cv::calcOpticalFlowPyrLK(im_gray, im_prev, nextPts, pts_back, status_back, err_back);

是双向的处理。机智如我~

接下来是估计缩放比率和旋转角度,一开始我们已经存储了初始的特征点,而且是正规化的特征点points_normalized,先计算两两之间的相对距离和相对角度那么对于新的特征点,同样也是计算他们的相对距离和相对角度,并与初始的数据相除或相减,就得到变化。 最后取他们的中位数作为整体的缩放比率和旋转。

void CMT::estimate(const std::vector<std::pair<cv::KeyPoint, int> >& keypointsIN, cv::Point2f& center, double& scaleEstimate, double& medRot, std::vector<std::pair<cv::KeyPoint, int> >& keypoints)

{

center = cv::Point2f(NAN,NAN);

scaleEstimate = NAN;

medRot = NAN;

//At least 2 keypoints are needed for scale

if(keypointsIN.size() > 1)

{

//sort

std::vector<PairInt> list;

for(int i = 0; i < keypointsIN.size(); i++)

list.push_back(std::make_pair(keypointsIN[i].second, i));

std::sort(&list[0], &list[0]+list.size(), comparatorPair<int>);

for(int i = 0; i < list.size(); i++)

keypoints.push_back(keypointsIN[list[i].second]);

std::vector<int> ind1;

std::vector<int> ind2;

for(int i = 0; i < list.size(); i++)

for(int j = 0; j < list.size(); j++)

{

if(i != j && keypoints[i].second != keypoints[j].second)

{

ind1.push_back(i);

ind2.push_back(j);

}

}

if(ind1.size() > 0)

{

std::vector<int> class_ind1;

std::vector<int> class_ind2;

std::vector<cv::KeyPoint> pts_ind1;

std::vector<cv::KeyPoint> pts_ind2;

for(int i = 0; i < ind1.size(); i++)

{

class_ind1.push_back(keypoints[ind1[i]].second-1);

class_ind2.push_back(keypoints[ind2[i]].second-1);

pts_ind1.push_back(keypoints[ind1[i]].first);

pts_ind2.push_back(keypoints[ind2[i]].first);

}

std::vector<double> scaleChange;

std::vector<double> angleDiffs;

for(int i = 0; i < pts_ind1.size(); i++)

{

cv::Point2f p = pts_ind2[i].pt - pts_ind1[i].pt;

//This distance might be 0 for some combinations,

//as it can happen that there is more than one keypoint at a single location

double dist = sqrt(p.dot(p));

double origDist = squareForm[class_ind1[i]][class_ind2[i]];

scaleChange.push_back(dist/origDist);

//Compute angle

double angle = atan2(p.y, p.x);

double origAngle = angles[class_ind1[i]][class_ind2[i]];

double angleDiff = angle - origAngle;

//Fix long way angles

if(fabs(angleDiff) > M_PI)

angleDiff -= sign(angleDiff) * 2 * M_PI;

angleDiffs.push_back(angleDiff);

}

scaleEstimate = median(scaleChange);

if(!estimateScale)

scaleEstimate = 1;

medRot = median(angleDiffs);

if(!estimateRotation)

medRot = 0;

votes = std::vector<cv::Point2f>();

for(int i = 0; i < keypoints.size(); i++)

votes.push_back(keypoints[i].first.pt - scaleEstimate * rotate(springs[keypoints[i].second-1], medRot));

//Compute linkage between pairwise distances

std::vector<Cluster> linkageData = linkage(votes);

//Perform hierarchical distance-based clustering

std::vector<int> T = fcluster(linkageData, thrOutlier);

//Count votes for each cluster

std::vector<int> cnt = binCount(T);

//Get largest class

int Cmax = argmax(cnt);

//Remember outliers

outliers = std::vector<std::pair<cv::KeyPoint, int> >();

std::vector<std::pair<cv::KeyPoint, int> > newKeypoints;

std::vector<cv::Point2f> newVotes;

for(int i = 0; i < keypoints.size(); i++)

{

if(T[i] != Cmax)

outliers.push_back(keypoints[i]);

else

{

newKeypoints.push_back(keypoints[i]);

newVotes.push_back(votes[i]);

}

}

keypoints = newKeypoints;

center = cv::Point2f(0,0);

for(int i = 0; i < newVotes.size(); i++)

center += newVotes[i];

center *= (1.0/newVotes.size());

}

}

}

最后分析去掉不好的特征点,vote的基本思想就是这些特征点相对中心的相对距离在把缩放旋转考虑进去之后是相对不变的,也就是按道理下一帧的特征点相对中心的位置是不变的。但是由于图像本身的变化,不可能得到完全一样的相对位置,这个时候,有一些会离中心近,有一些会偏差很大。那么,作者就采用聚类的方法,选择最大的一类作为最好的特征点。其他的不要。

完整代码:

CMT.h

#ifndef CMT_H

#define CMT_H

#include <opencv2/opencv.hpp>

#include <opencv2/features2d/features2d.hpp>

class CMT

{

public:

std::string detectorType;

std::string descriptorType;

std::string matcherType;

int descriptorLength;

int thrOutlier;

double thrConf;

double thrRatio;

bool estimateScale;

bool estimateRotation;

cv::Ptr<cv::FeatureDetector> detector;

cv::Ptr<cv::DescriptorExtractor> descriptorExtractor;

cv::Ptr<cv::DescriptorMatcher> descriptorMatcher;

cv::Mat selectedFeatures;

std::vector<int> selectedClasses;

cv::Mat featuresDatabase;

std::vector<int> classesDatabase;

std::vector<std::vector<double> > squareForm;

std::vector<std::vector<double> > angles;

cv::Point2f topLeft;

cv::Point2f topRight;

cv::Point2f bottomRight;

cv::Point2f bottomLeft;

cv::Rect_<float> boundingbox;

bool hasResult;

cv::Point2f centerToTopLeft;

cv::Point2f centerToTopRight;

cv::Point2f centerToBottomRight;

cv::Point2f centerToBottomLeft;

std::vector<cv::Point2f> springs;

cv::Mat im_prev;

std::vector<std::pair<cv::KeyPoint,int> > activeKeypoints;

std::vector<std::pair<cv::KeyPoint,int> > trackedKeypoints;

int nbInitialKeypoints;

std::vector<cv::Point2f> votes;

std::vector<std::pair<cv::KeyPoint, int> > outliers;

CMT();

void initialise(cv::Mat im_gray0, cv::Point2f topleft, cv::Point2f bottomright);

void estimate(const std::vector<std::pair<cv::KeyPoint, int> >& keypointsIN, cv::Point2f& center, double& scaleEstimate, double& medRot, std::vector<std::pair<cv::KeyPoint, int> >& keypoints);

void processFrame(cv::Mat im_gray);

};

class Cluster

{

public:

int first, second;//cluster id

double dist;

int num;

};

void inout_rect(const std::vector<cv::KeyPoint>& keypoints, cv::Point2d topleft, cv::Point2d bottomright, std::vector<cv::KeyPoint>& in, std::vector<cv::KeyPoint>& out);

void track(cv::Mat im_prev, cv::Mat im_gray, const std::vector<std::pair<cv::KeyPoint, int> >& keypointsIN, std::vector<std::pair<cv::KeyPoint, int> >& keypointsTracked, std::vector<unsigned char>& status, int THR_FB = 20);

cv::Point2f rotate(cv::Point2f p, float rad);

#endif // CMT_HCMT.cpp

// CMT.cpp : 定义控制台应用程序的入口点。

//

#include "stdafx.h"

#include "CMT.h"

//#define DEBUG_MODE

#ifdef DEBUG_MODE

#include <QDebug>

#endif

void num2str(char *str, int length, int num)

{

for(int i = 0; i < length-1; i++)

{

str[length-i-2] = '0'+num%10;

num /= 10;

}

str[length-1] = 0;

}

int main(int argc, char *argv[])

{

char *path = "sequences/cokecan/img";

char *ext = "png";

int numLength = 5;

char numString[6];

char filename[255];

int start = 0;

int end = 291;

cv::Point2f initTopLeft(150,82);

cv::Point2f initBottomDown(170,118);

CMT cmt;

//cmt.estimateRotation = false;

for(int i = start; i <= end; i++)

{

num2str(numString, numLength+1, i);

sprintf(filename, "%s%s.%s", path, numString, ext);

#ifdef DEBUG_MODE

qDebug() << filename;

#endif

cv::Mat img = cv::imread(filename);

cv::Mat im_gray;

cv::cvtColor(img, im_gray, CV_RGB2GRAY);

if(i == start)

cmt.initialise(im_gray, initTopLeft, initBottomDown);

cmt.processFrame(im_gray);

for(int i = 0; i<cmt.trackedKeypoints.size(); i++)

cv::circle(img, cmt.trackedKeypoints[i].first.pt, 3, cv::Scalar(255,255,255));

cv::line(img, cmt.topLeft, cmt.topRight, cv::Scalar(255,255,255));

cv::line(img, cmt.topRight, cmt.bottomRight, cv::Scalar(255,255,255));

cv::line(img, cmt.bottomRight, cmt.bottomLeft, cv::Scalar(255,255,255));

cv::line(img, cmt.bottomLeft, cmt.topLeft, cv::Scalar(255,255,255));

#ifdef DEBUG_MODE

qDebug() << "trackedKeypoints";

for(int i = 0; i<cmt.trackedKeypoints.size(); i++)

qDebug() << cmt.trackedKeypoints[i].first.pt.x << cmt.trackedKeypoints[i].first.pt.x << cmt.trackedKeypoints[i].second;

qDebug() << "box";

qDebug() << cmt.topLeft.x << cmt.topLeft.y;

qDebug() << cmt.topRight.x << cmt.topRight.y;

qDebug() << cmt.bottomRight.x << cmt.bottomRight.y;

qDebug() << cmt.bottomLeft.x << cmt.bottomLeft.y;

#endif

imshow("frame", img);

cv::waitKey(1);

}

return 0;

}

void inout_rect(const std::vector<cv::KeyPoint>& keypoints, cv::Point2d topleft, cv::Point2d bottomright, std::vector<cv::KeyPoint>& in, std::vector<cv::KeyPoint>& out)

{

for(int i = 0; i < keypoints.size(); i++)

{

if(keypoints[i].pt.x > topleft.x && keypoints[i].pt.y > topleft.y && keypoints[i].pt.x < bottomright.x && keypoints[i].pt.y < bottomright.y)

in.push_back(keypoints[i]);

else out.push_back(keypoints[i]);

}

}

void track(cv::Mat im_prev, cv::Mat im_gray, const std::vector<std::pair<cv::KeyPoint, int> >& keypointsIN, std::vector<std::pair<cv::KeyPoint, int> >& keypointsTracked, std::vector<unsigned char>& status, int THR_FB)

{

//Status of tracked keypoint - True means successfully tracked

status = std::vector<unsigned char>();

//for(int i = 0; i < keypointsIN.size(); i++)

// status.push_back(false);

//If at least one keypoint is active

if(keypointsIN.size() > 0)

{

std::vector<cv::Point2f> pts;

std::vector<cv::Point2f> pts_back;

std::vector<cv::Point2f> nextPts;

std::vector<bool> status_back;

std::vector<float> err;

std::vector<float> err_back;

std::vector<float> fb_err;

for(int i = 0; i < keypointsIN.size(); i++)

pts.push_back(cv::Point2f(keypointsIN[i].first.pt.x,keypointsIN[i].first.pt.y));

//Calculate forward optical flow for prev_location

cv::calcOpticalFlowPyrLK(im_prev, im_gray, pts, nextPts, status, err);

//Calculate backward optical flow for prev_location

cv::calcOpticalFlowPyrLK(im_gray, im_prev, nextPts, pts_back, status_back, err_back);

//Calculate forward-backward error

for(int i = 0; i < pts.size(); i++)

{

cv::Point2d v = pts_back[i]-pts[i];

fb_err.push_back(sqrt(v.dot(v)));

}

//Set status depending on fb_err and lk error

for(int i = 0; i < status.size(); i++)

status[i] = fb_err[i] <= THR_FB & status[i];

keypointsTracked = std::vector<std::pair<cv::KeyPoint, int> >();

for(int i = 0; i < pts.size(); i++)

{

std::pair<cv::KeyPoint, int> p = keypointsIN[i];

if(status[i])

p.first.pt = nextPts[i];

keypointsTracked.push_back(p);

}

}

else keypointsTracked = std::vector<std::pair<cv::KeyPoint, int> >();

}

cv::Point2f rotate(cv::Point2f p, float rad)

{

if(rad == 0)

return p;

double s = sin(rad);

double c = cos(rad);

return cv::Point2f(c*p.x-s*p.y,s*p.x+c*p.y);

}

CMT::CMT()

{

detectorType = "Feature2D.BRISK";

descriptorType = "Feature2D.BRISK";

matcherType = "BruteForce-Hamming";

thrOutlier = 20;

thrConf = 0.75;

thrRatio = 0.8;

descriptorLength = 512;

estimateScale = true;

estimateRotation = true;

}

void CMT::initialise(cv::Mat im_gray0, cv::Point2f topleft, cv::Point2f bottomright)

{

//Initialise detector, descriptor, matcher

detector = cv::Algorithm::create<cv::FeatureDetector>(detectorType.c_str());

descriptorExtractor = cv::Algorithm::create<cv::DescriptorExtractor>(descriptorType.c_str());

descriptorMatcher = cv::DescriptorMatcher::create(matcherType.c_str());

std::vector<std::string> list;

cv::Algorithm::getList(list);

//Get initial keypoints in whole image

std::vector<cv::KeyPoint> keypoints;

detector->detect(im_gray0, keypoints);

//Remember keypoints that are in the rectangle as selected keypoints

std::vector<cv::KeyPoint> selected_keypoints;

std::vector<cv::KeyPoint> background_keypoints;

inout_rect(keypoints, topleft, bottomright, selected_keypoints, background_keypoints);

descriptorExtractor->compute(im_gray0, selected_keypoints, selectedFeatures);

if(selected_keypoints.size() == 0)

{

printf("No keypoints found in selection");

return;

}

//Remember keypoints that are not in the rectangle as background keypoints

cv::Mat background_features;

descriptorExtractor->compute(im_gray0, background_keypoints, background_features);

//Assign each keypoint a class starting from 1, background is 0

selectedClasses = std::vector<int>();

for(int i = 1; i <= selected_keypoints.size(); i++)

selectedClasses.push_back(i);

std::vector<int> backgroundClasses;

for(int i = 0; i < background_keypoints.size(); i++)

backgroundClasses.push_back(0);

//Stack background features and selected features into database

featuresDatabase = cv::Mat(background_features.rows+selectedFeatures.rows, background_features.cols, background_features.type());

background_features.copyTo(featuresDatabase(cv::Rect(0,0,background_features.cols, background_features.rows)));

selectedFeatures.copyTo(featuresDatabase(cv::Rect(0,background_features.rows,selectedFeatures.cols, selectedFeatures.rows)));

//Same for classes

classesDatabase = std::vector<int>();

for(int i = 0; i < backgroundClasses.size(); i++)

classesDatabase.push_back(backgroundClasses[i]);

for(int i = 0; i < selectedClasses.size(); i++)

classesDatabase.push_back(selectedClasses[i]);

//Get all distances between selected keypoints in squareform and get all angles between selected keypoints

squareForm = std::vector<std::vector<double> >();

angles = std::vector<std::vector<double> >();

for(int i = 0; i < selected_keypoints.size(); i++)

{

std::vector<double> lineSquare;

std::vector<double> lineAngle;

for(int j = 0; j < selected_keypoints.size(); j++)

{

double dx = selected_keypoints[j].pt.x-selected_keypoints[i].pt.x;

double dy = selected_keypoints[j].pt.y-selected_keypoints[i].pt.y;

lineSquare.push_back(sqrt(dx*dx+dy*dy));

lineAngle.push_back(atan2(dy, dx));

}

squareForm.push_back(lineSquare);

angles.push_back(lineAngle);

}

//Find the center of selected keypoints

cv::Point2f center(0,0);

for(int i = 0; i < selected_keypoints.size(); i++)

center += selected_keypoints[i].pt;

center *= (1.0/selected_keypoints.size());

//Remember the rectangle coordinates relative to the center

centerToTopLeft = topleft - center;

centerToTopRight = cv::Point2f(bottomright.x, topleft.y) - center;

centerToBottomRight = bottomright - center;

centerToBottomLeft = cv::Point2f(topleft.x, bottomright.y) - center;

//Calculate springs of each keypoint

springs = std::vector<cv::Point2f>();

for(int i = 0; i < selected_keypoints.size(); i++)

springs.push_back(selected_keypoints[i].pt - center);

//Set start image for tracking

im_prev = im_gray0.clone();

//Make keypoints 'active' keypoints

activeKeypoints = std::vector<std::pair<cv::KeyPoint,int> >();

for(int i = 0; i < selected_keypoints.size(); i++)

activeKeypoints.push_back(std::make_pair(selected_keypoints[i], selectedClasses[i]));

//Remember number of initial keypoints

nbInitialKeypoints = selected_keypoints.size();

}

typedef std::pair<int,int> PairInt;

typedef std::pair<float,int> PairFloat;

template<typename T>

bool comparatorPair ( const std::pair<T,int>& l, const std::pair<T,int>& r)

{

return l.first < r.first;

}

template<typename T>

bool comparatorPairDesc ( const std::pair<T,int>& l, const std::pair<T,int>& r)

{

return l.first > r.first;

}

template <typename T>

T sign(T t)

{

if( t == 0 )

return T(0);

else

return (t < 0) ? T(-1) : T(1);

}

template<typename T>

T median(std::vector<T> list)

{

T val;

std::nth_element(&list[0], &list[0]+list.size()/2, &list[0]+list.size());

val = list[list.size()/2];

if(list.size()%2==0)

{

std::nth_element(&list[0], &list[0]+list.size()/2-1, &list[0]+list.size());

val = (val+list[list.size()/2-1])/2;

}

return val;

}

double findMinSymetric(const std::vector<std::vector<double> >& dist, const std::vector<bool>& used, int limit, int &i, int &j)

{

double min = dist[0][0];

i = 0;

j = 0;

for(int x = 0; x < limit; x++)

{

if(!used[x])

{

for(int y = x+1; y < limit; y++)

if(!used[y] && dist[x][y] <= min)

{

min = dist[x][y];

i = x;

j = y;

}

}

}

return min;

}

std::vector<Cluster> linkage(const std::vector<cv::Point2f>& list)

{

double inf = 10000000;0;

std::vector<bool> used;

for(int i = 0; i < 2*list.size(); i++)

used.push_back(false);

std::vector<std::vector<double> > dist;

for(int i = 0; i < list.size(); i++)

{

std::vector<double> line;

for(int j = 0; j < list.size(); j++)

{

if(i == j)

line.push_back(inf);

else

{

cv::Point2f p = list[i]-list[j];

line.push_back(sqrt(p.dot(p)));

}

}

for(int j = 0; j < list.size(); j++)

line.push_back(inf);

dist.push_back(line);

}

for(int i = 0; i < list.size(); i++)

{

std::vector<double> line;

for(int j = 0; j < 2*list.size(); j++)

line.push_back(inf);

dist.push_back(line);

}

std::vector<Cluster> clusters;

while(clusters.size() < list.size()-1)

{

int x, y;

double min = findMinSymetric(dist, used, list.size()+clusters.size(), x, y);

Cluster cluster;

cluster.first = x;

cluster.second = y;

cluster.dist = min;

cluster.num = (x < list.size() ? 1 : clusters[x-list.size()].num) + (y < list.size() ? 1 : clusters[y-list.size()].num);

used[x] = true;

used[y] = true;

int limit = list.size()+clusters.size();

for(int i = 0; i < limit; i++)

{

if(!used[i])

dist[i][limit] = dist[limit][i] = std::min(dist[i][x], dist[i][y]);

}

clusters.push_back(cluster);

}

return clusters;

}

void fcluster_rec(std::vector<int>& data, const std::vector<Cluster>& clusters, double threshold, const Cluster& currentCluster, int& binId)

{

int startBin = binId;

if(currentCluster.first >= data.size())

fcluster_rec(data, clusters, threshold, clusters[currentCluster.first - data.size()], binId);

else data[currentCluster.first] = binId;

if(startBin == binId && currentCluster.dist >= threshold)

binId++;

startBin = binId;

if(currentCluster.second >= data.size())

fcluster_rec(data, clusters, threshold, clusters[currentCluster.second - data.size()], binId);

else data[currentCluster.second] = binId;

if(startBin == binId && currentCluster.dist >= threshold)

binId++;

}

std::vector<int> binCount(const std::vector<int>& T)

{

std::vector<int> result;

for(int i = 0; i < T.size(); i++)

{

while(T[i] >= result.size())

result.push_back(0);

result[T[i]]++;

}

return result;

}

int argmax(const std::vector<int>& list)

{

int max = list[0];

int id = 0;

for(int i = 1; i < list.size(); i++)

if(list[i] > max)

{

max = list[i];

id = i;

}

return id;

}

std::vector<int> fcluster(const std::vector<Cluster>& clusters, double threshold)

{

std::vector<int> data;

for(int i = 0; i < clusters.size()+1; i++)

data.push_back(0);

int binId = 0;

fcluster_rec(data, clusters, threshold, clusters[clusters.size()-1], binId);

return data;

}

void CMT::estimate(const std::vector<std::pair<cv::KeyPoint, int> >& keypointsIN, cv::Point2f& center, double& scaleEstimate, double& medRot, std::vector<std::pair<cv::KeyPoint, int> >& keypoints)

{

center = cv::Point2f(NAN,NAN);

scaleEstimate = NAN;

medRot = NAN;

//At least 2 keypoints are needed for scale

if(keypointsIN.size() > 1)

{

//sort

std::vector<PairInt> list;

for(int i = 0; i < keypointsIN.size(); i++)

list.push_back(std::make_pair(keypointsIN[i].second, i));

std::sort(&list[0], &list[0]+list.size(), comparatorPair<int>);

for(int i = 0; i < list.size(); i++)

keypoints.push_back(keypointsIN[list[i].second]);

std::vector<int> ind1;

std::vector<int> ind2;

for(int i = 0; i < list.size(); i++)

for(int j = 0; j < list.size(); j++)

{

if(i != j && keypoints[i].second != keypoints[j].second)

{

ind1.push_back(i);

ind2.push_back(j);

}

}

if(ind1.size() > 0)

{

std::vector<int> class_ind1;

std::vector<int> class_ind2;

std::vector<cv::KeyPoint> pts_ind1;

std::vector<cv::KeyPoint> pts_ind2;

for(int i = 0; i < ind1.size(); i++)

{

class_ind1.push_back(keypoints[ind1[i]].second-1);

class_ind2.push_back(keypoints[ind2[i]].second-1);

pts_ind1.push_back(keypoints[ind1[i]].first);

pts_ind2.push_back(keypoints[ind2[i]].first);

}

std::vector<double> scaleChange;

std::vector<double> angleDiffs;

for(int i = 0; i < pts_ind1.size(); i++)

{

cv::Point2f p = pts_ind2[i].pt - pts_ind1[i].pt;

//This distance might be 0 for some combinations,

//as it can happen that there is more than one keypoint at a single location

double dist = sqrt(p.dot(p));

double origDist = squareForm[class_ind1[i]][class_ind2[i]];

scaleChange.push_back(dist/origDist);

//Compute angle

double angle = atan2(p.y, p.x);

double origAngle = angles[class_ind1[i]][class_ind2[i]];

double angleDiff = angle - origAngle;

//Fix long way angles

if(fabs(angleDiff) > M_PI)

angleDiff -= sign(angleDiff) * 2 * M_PI;

angleDiffs.push_back(angleDiff);

}

scaleEstimate = median(scaleChange);

if(!estimateScale)

scaleEstimate = 1;

medRot = median(angleDiffs);

if(!estimateRotation)

medRot = 0;

votes = std::vector<cv::Point2f>();

for(int i = 0; i < keypoints.size(); i++)

votes.push_back(keypoints[i].first.pt - scaleEstimate * rotate(springs[keypoints[i].second-1], medRot));

//Compute linkage between pairwise distances

std::vector<Cluster> linkageData = linkage(votes);

//Perform hierarchical distance-based clustering

std::vector<int> T = fcluster(linkageData, thrOutlier);

//Count votes for each cluster

std::vector<int> cnt = binCount(T);

//Get largest class

int Cmax = argmax(cnt);

//Remember outliers

outliers = std::vector<std::pair<cv::KeyPoint, int> >();

std::vector<std::pair<cv::KeyPoint, int> > newKeypoints;

std::vector<cv::Point2f> newVotes;

for(int i = 0; i < keypoints.size(); i++)

{

if(T[i] != Cmax)

outliers.push_back(keypoints[i]);

else

{

newKeypoints.push_back(keypoints[i]);

newVotes.push_back(votes[i]);

}

}

keypoints = newKeypoints;

center = cv::Point2f(0,0);

for(int i = 0; i < newVotes.size(); i++)

center += newVotes[i];

center *= (1.0/newVotes.size());

}

}

}

//todo : n*log(n) by sorting the second array and dichotomic search instead of n^2

std::vector<bool> in1d(const std::vector<int>& a, const std::vector<int>& b)

{

std::vector<bool> result;

for(int i = 0; i < a.size(); i++)

{

bool found = false;

for(int j = 0; j < b.size(); j++)

if(a[i] == b[j])

{

found = true;

break;

}

result.push_back(found);

}

return result;

}

void CMT::processFrame(cv::Mat im_gray)

{

trackedKeypoints = std::vector<std::pair<cv::KeyPoint, int> >();

std::vector<unsigned char> status;

track(im_prev, im_gray, activeKeypoints, trackedKeypoints, status);

cv::Point2f center;

double scaleEstimate;

double rotationEstimate;

std::vector<std::pair<cv::KeyPoint, int> > trackedKeypoints2;

estimate(trackedKeypoints, center, scaleEstimate, rotationEstimate, trackedKeypoints2);

trackedKeypoints = trackedKeypoints2;

//Detect keypoints, compute descriptors

std::vector<cv::KeyPoint> keypoints;

cv::Mat features;

detector->detect(im_gray, keypoints);

descriptorExtractor->compute(im_gray, keypoints, features);

//Create list of active keypoints

activeKeypoints = std::vector<std::pair<cv::KeyPoint, int> >();

//For each keypoint and its descriptor

for(int i = 0; i < keypoints.size(); i++)

{

cv::KeyPoint keypoint = keypoints[i];

//First: Match over whole image

//Compute distances to all descriptors

std::vector<cv::DMatch> matches;

descriptorMatcher->match(featuresDatabase,features.row(i), matches);

//Convert distances to confidences, do not weight

std::vector<float> combined;

for(int i = 0; i < matches.size(); i++)

combined.push_back(1 - matches[i].distance / descriptorLength);

std::vector<int>& classes = classesDatabase;

//Sort in descending order

std::vector<PairFloat> sorted_conf;

for(int i = 0; i < combined.size(); i++)

sorted_conf.push_back(std::make_pair(combined[i], i));

std::sort(&sorted_conf[0], &sorted_conf[0]+sorted_conf.size(), comparatorPairDesc<float>);

//Get best and second best index

int bestInd = sorted_conf[0].second;

int secondBestInd = sorted_conf[1].second;

//Compute distance ratio according to Lowe

float ratio = (1-combined[bestInd]) / (1-combined[secondBestInd]);

//Extract class of best match

int keypoint_class = classes[bestInd];

//If distance ratio is ok and absolute distance is ok and keypoint class is not background

if(ratio < thrRatio && combined[bestInd] > thrConf && keypoint_class != 0)

activeKeypoints.push_back(std::make_pair(keypoint, keypoint_class));

//In a second step, try to match difficult keypoints

//If structural constraints are applicable

if(!(isnan(center.x) | isnan(center.y)))

{

//Compute distances to initial descriptors

std::vector<cv::DMatch> matches;

descriptorMatcher->match(selectedFeatures, features.row(i), matches);

//Convert distances to confidences

std::vector<float> confidences;

for(int i = 0; i < matches.size(); i++)

confidences.push_back(1 - matches[i].distance / descriptorLength);

//Compute the keypoint location relative to the object center

cv::Point2f relative_location = keypoint.pt - center;

//Compute the distances to all springs

std::vector<float> displacements;

for(int i = 0; i < springs.size(); i++)

{

cv::Point2f p = (scaleEstimate * rotate(springs[i], -rotationEstimate) - relative_location);

displacements.push_back(sqrt(p.dot(p)));

}

//For each spring, calculate weight

std::vector<float> combined;

for(int i = 0; i < confidences.size(); i++)

combined.push_back((displacements[i] < thrOutlier)*confidences[i]);

std::vector<int>& classes = selectedClasses;

//Sort in descending order

std::vector<PairFloat> sorted_conf;

for(int i = 0; i < combined.size(); i++)

sorted_conf.push_back(std::make_pair(combined[i], i));

std::sort(&sorted_conf[0], &sorted_conf[0]+sorted_conf.size(), comparatorPairDesc<float>);

//Get best and second best index

int bestInd = sorted_conf[0].second;

int secondBestInd = sorted_conf[1].second;

//Compute distance ratio according to Lowe

float ratio = (1-combined[bestInd]) / (1-combined[secondBestInd]);

//Extract class of best match

int keypoint_class = classes[bestInd];

//If distance ratio is ok and absolute distance is ok and keypoint class is not background

if(ratio < thrRatio && combined[bestInd] > thrConf && keypoint_class != 0)

{

for(int i = activeKeypoints.size()-1; i >= 0; i--)

if(activeKeypoints[i].second == keypoint_class)

activeKeypoints.erase(activeKeypoints.begin()+i);

activeKeypoints.push_back(std::make_pair(keypoint, keypoint_class));

}

}

}

//If some keypoints have been tracked

if(trackedKeypoints.size() > 0)

{

//Extract the keypoint classes

std::vector<int> tracked_classes;

for(int i = 0; i < trackedKeypoints.size(); i++)

tracked_classes.push_back(trackedKeypoints[i].second);

//If there already are some active keypoints

if(activeKeypoints.size() > 0)

{

//Add all tracked keypoints that have not been matched

std::vector<int> associated_classes;

for(int i = 0; i < activeKeypoints.size(); i++)

associated_classes.push_back(activeKeypoints[i].second);

std::vector<bool> notmissing = in1d(tracked_classes, associated_classes);

for(int i = 0; i < trackedKeypoints.size(); i++)

if(!notmissing[i])

activeKeypoints.push_back(trackedKeypoints[i]);

}

else activeKeypoints = trackedKeypoints;

}

//Update object state estimate

std::vector<std::pair<cv::KeyPoint, int> > activeKeypointsBefore = activeKeypoints;

im_prev = im_gray;

topLeft = cv::Point2f(NAN,NAN);

topRight = cv::Point2f(NAN,NAN);

bottomLeft = cv::Point2f(NAN,NAN);

bottomRight = cv::Point2f(NAN,NAN);

boundingbox = cv::Rect_<float>(NAN,NAN,NAN,NAN);

hasResult = false;

if(!(isnan(center.x) | isnan(center.y)) && activeKeypoints.size() > nbInitialKeypoints / 10)

{

hasResult = true;

topLeft = center + scaleEstimate*rotate(centerToTopLeft, rotationEstimate);

topRight = center + scaleEstimate*rotate(centerToTopRight, rotationEstimate);

bottomLeft = center + scaleEstimate*rotate(centerToBottomLeft, rotationEstimate);

bottomRight = center + scaleEstimate*rotate(centerToBottomRight, rotationEstimate);

float minx = std::min(std::min(topLeft.x,topRight.x),std::min(bottomRight.x, bottomLeft.x));

float miny = std::min(std::min(topLeft.y,topRight.y),std::min(bottomRight.y, bottomLeft.y));

float maxx = std::max(std::max(topLeft.x,topRight.x),std::max(bottomRight.x, bottomLeft.x));

float maxy = std::max(std::max(topLeft.y,topRight.y),std::max(bottomRight.y, bottomLeft.y));

boundingbox = cv::Rect_<float>(minx, miny, maxx-minx, maxy-miny);

}

}最后说一下自己对于CMT算法应用方面的理解

其优点在于代码简单,方便移植,效率非常的快,适用于静态物体。

缺点在于物体角度变化大或者移动幅度较大时很难定位特征点。

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?