Directed Broadcast and Limited Broadcast

Neural Style Transfer, Evolution

In their seminal work, “Image Style Transfer Using Convolutional Neural Networks,” Gatys et al. [R1] demonstrate the efficacy of CNNs in separating and recombining image content and style to create composite artistic images. Using features extracted from intermediate layers of a pre-trained CNN, they define separate content and style loss functions and pose style transfer as an optimization problem. We start from a random image and update its pixel values so that a weighted sum of the content and style losses is minimized. For more details, please refer to this article.
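
To make the optimization view concrete, here is a minimal NumPy sketch of the per-image procedure. It rests on deliberately toy assumptions that are not from [R1]:

- The "feature extractor" is the identity, so an image's features are its raw (C, M) pixel array rather than activations from a pre-trained CNN.
- There is a single layer.
- The loss normalizations and the weights `alpha` and `beta` are chosen for convenience.

The Gram matrix (channel correlations) stands in for style. The pixels of a random image are updated by plain gradient descent using a hand-derived gradient, where a real implementation would use automatic differentiation. The names `gram`, `total_loss`, `total_grad` and `stylize` are hypothetical.

```python
import numpy as np


def gram(features):
    """Gram matrix of a (C, M) feature map, normalized by the M positions."""
    return features @ features.T / features.shape[1]


def total_loss(f, p, a, alpha, beta):
    """alpha * content loss + beta * style loss for generated features f.

    p: features of the content image; a: Gram matrix of the style image.
    """
    content = 0.5 * np.sum((f - p) ** 2)
    style = 0.25 * np.sum((gram(f) - a) ** 2)
    return alpha * content + beta * style


def total_grad(f, p, a, alpha, beta):
    """Analytic gradient of total_loss with respect to f."""
    d = gram(f) - a
    return alpha * (f - p) + beta * (d @ f) / f.shape[1]


def stylize(content, style, alpha=1.0, beta=10.0, lr=0.05, steps=500, seed=0):
    """Gradient descent on the pixels of a randomly initialized image.

    Toy assumption: the feature extractor is the identity, so an image's
    "features" are just its (C, M) pixel array instead of CNN activations.
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(size=content.shape)  # start from a random image
    a = gram(style)                     # the style target is fixed up front
    history = []
    for _ in range(steps):
        history.append(total_loss(x, content, a, alpha, beta))
        x = x - lr * total_grad(x, content, a, alpha, beta)  # update pixels only
    return x, history


# Toy 3-channel "images" with 8x8 = 64 pixels, flattened to shape (3, 64).
rng = np.random.default_rng(1)
content = rng.normal(size=(3, 64))
style = rng.normal(size=(3, 64)) * np.array([[2.0], [1.0], [0.5]])
image, history = stylize(content, style)
print(f"loss: {history[0]:.2f} -> {history[-1]:.2f}")
```

Each new content/style pair requires rerunning the whole loop from scratch. In the real method, every step also involves a forward and backward pass through the CNN.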

One obvious caveat of this approach is that it is slow. It takes many optimization iterations to tune the random image until it matches the content and style of two different reference images. To tackle this inefficiency, Johnson et al. [R2] propose a feed-forward neural network that approximates the solution of the style transfer optimization problem [R1].

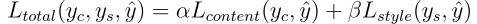

Essentially, they propose an image transformation network (f_W): a deep residual convolutional neural network that transforms input images x into output images ŷ. The goal of style transfer is to generate an image ŷ that combines the content of a target content image y_c with the style of a target style image y_s.
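
The "residual" part of the transformation network refers to blocks that add a learned correction to an identity shortcut, so each block preserves the shape of its input. The sketch below isolates that idea in NumPy under simplifying assumptions of our own: 1×1 convolutions (pure channel mixing) and no normalization layers. Real blocks use spatial (for example 3×3) kernels and normalization. The helpers `conv1x1`, `relu` and `residual_block` are hypothetical names.

```python
import numpy as np


def conv1x1(x, w, b):
    """1x1 convolution of a (C_in, H, W) tensor: mixes channels at each pixel."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, b1, w2, b2):
    """out = x + conv(relu(conv(x))).

    The identity shortcut means the block only has to learn a residual,
    and the output keeps the input's (C, H, W) shape.
    """
    h = relu(conv1x1(x, w1, b1))
    return x + conv1x1(h, w2, b2)


rng = np.random.default_rng(0)
c = 4
x = rng.normal(size=(c, 8, 8))
params = [rng.normal(scale=0.1, size=(c, c)), np.zeros(c),
          rng.normal(scale=0.1, size=(c, c)), np.zeros(c)]
y = residual_block(x, *params)
print(y.shape)  # (4, 8, 8)
```

If the second convolution's weights are all zero, the block reduces exactly to the identity. This makes it easy for a deep stack of such blocks to start out close to "copy the input".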

During training, for a fixed style target y_s, the image transformation network takes a random target content image y_c (which is also the input x) and generates the image ŷ. The network's weights are then tuned to minimize the style transfer loss (refer to Fig. 2). Over the course of many iterations, the network sees many different content images but only one fixed style image. As a result, the image transformation network learns to combine the content of any reference image with the style of one particular reference image.
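
The training scheme can be illustrated with a tiny stand-in, and this stand-in is our assumption rather than the architecture of [R2]:

- The "transformation network" is a single per-pixel linear map `w`.
- The loss network is the identity.
- The content target is the input itself (y_c = x).

Each step draws a new random content image while the style Gram target stays fixed. After training, stylizing an unseen image is a single forward pass (`w @ x`) instead of an optimization loop. The function names and hyperparameters below are hypothetical.

```python
import numpy as np


def gram(f):
    """Gram matrix of a (C, M) feature map, normalized by the M positions."""
    return f @ f.T / f.shape[1]


def loss_and_grad(w, x, a, alpha=1.0, beta=1.0):
    """Style transfer loss of y_hat = w @ x and its gradient with respect to w.

    Toy assumptions: the transformation network is one per-pixel linear map w
    (C x C), the loss network is the identity, and the content target y_c is
    the input x itself.
    """
    m = x.shape[1]
    y_hat = w @ x
    d = gram(y_hat) - a
    loss = 0.5 * alpha * np.sum((y_hat - x) ** 2) / m + 0.25 * beta * np.sum(d ** 2)
    dy = alpha * (y_hat - x) / m + beta * (d @ y_hat) / m  # dLoss / dy_hat
    return loss, dy @ x.T                                  # chain rule through w @ x


def train(style, steps=300, lr=0.1, pixels=64, seed=0):
    """Fit w for ONE fixed style; every step sees a new random content image."""
    rng = np.random.default_rng(seed)
    channels = style.shape[0]
    a = gram(style)          # fixed style target y_s
    w = np.eye(channels)     # start from the identity map
    for _ in range(steps):
        x = rng.normal(size=(channels, pixels))  # random content image y_c (= x)
        _, g = loss_and_grad(w, x, a)
        w = w - lr * g
    return w


rng = np.random.default_rng(42)
style = rng.normal(size=(3, 64)) * np.array([[2.0], [1.0], [0.5]])
a = gram(style)
held_out = [rng.normal(size=(3, 64)) for _ in range(20)]


def mean_loss(w):
    """Average loss over content images the network never saw in training."""
    return np.mean([loss_and_grad(w, x, a)[0] for x in held_out])


w = train(style)
print(f"held-out loss: identity {mean_loss(np.eye(3)):.3f}, trained {mean_loss(w):.3f}")
```

Because `w` is fitted against a single style Gram matrix, switching to another style means training a new `w`. This mirrors the one-style-per-network restriction of the real method.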

In conclusion, this approach performs style transfer about three orders of magnitude faster than per-image optimization, but it is restricted to one style per network.

Source: https://towardsdatascience.com/fast-and-restricted-style-transfer-bbfc383cccd6
