Ensemble Learning: Model Fusion Methods

India has a famous game show named “Kaun Banega Crorepati (KBC)”, inspired by “Who Wants to Be a Millionaire”. It is a quiz show with multiple-choice answers. A participant who chooses the right option for every question can win 10 million Indian rupees. A participant who is unsure about a question has a few options to fall back on. One of them is to take an audience poll and go with the majority choice.

The questions could come from a variety of subjects: sports, mythology, politics, music, movies, culture, science, and so on. The audience members were not experts in any of these subjects. Interestingly, more often than not the majority opinion turned out to be the correct answer. This is the central concept behind ensembling.

Seems like magic, right? Let’s look at the mathematical intuition behind it.

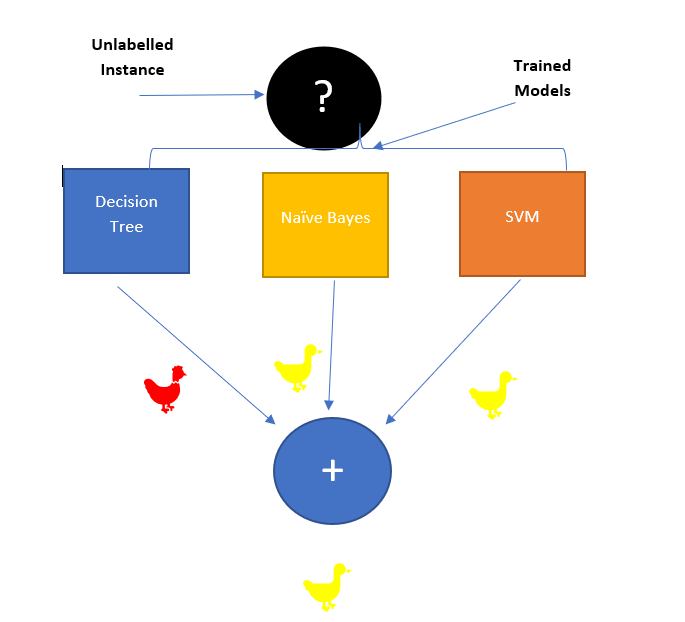

The simple diagram above presents the idea in the context of ML. Each person is now equivalent to a classifier and, just as a diverse audience helps, the more uncorrelated the classifiers are, the better.

We train three classifiers on the same set of data. At prediction time, we check what the majority of the classifiers say. Assume this is a duck-versus-hen problem. Since two of the three say it is a duck, the final decision of the ensemble model is duck.
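
The majority rule described above can be sketched in a few lines of Python. This is only an illustration: the `majority_vote` helper and the hard-coded label list are hypothetical stand-ins for real classifier outputs, not part of any library.

```python
from collections import Counter

def majority_vote(labels):
    """Return the label predicted by the most classifiers (hypothetical helper)."""
    # most_common(1) returns a one-element list [(label, count)]
    return Counter(labels).most_common(1)[0][0]

# Assumed predictions of three classifiers for a single sample
predictions = ["duck", "duck", "hen"]
print(majority_vote(predictions))  # duck
```

With two classes and an odd number of classifiers there can be no tie, which is one reason three classifiers is a convenient starting point.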

Let’s say we have a classifier with a classification accuracy of 70%. If we add more classifiers, can the accuracy increase?

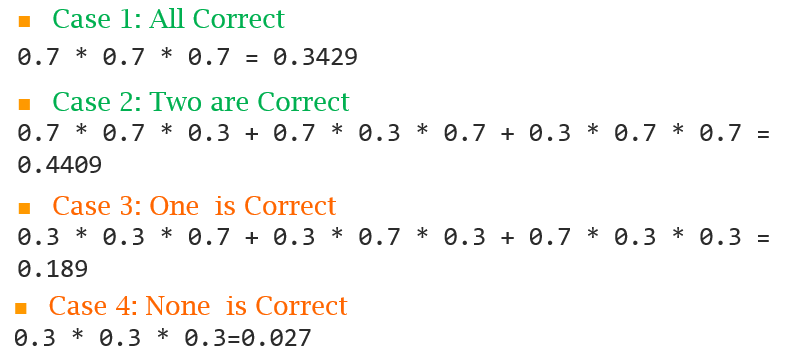

Let’s assume that we have 3 people/classifiers to start with. Whenever at least two of them are right about the prediction, majority voting gives the correct result. If the classifiers are independent, then the probability of C1 and C2 being correct and C3 being incorrect is 0.7 * 0.7 * 0.3 = 0.147. Let’s look at a more general calculation.

The diagram above lays out all the cases; Case 1 (all three classifiers correct) and Case 2 (exactly two correct) are the ones where the ensemble prediction is correct. The revised overall accuracy is therefore 0.343 + 0.441 = 0.784, or 78.4%.
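
As a worked version of this calculation, assume three independent classifiers that are each correct with probability $p = 0.7$. The majority vote is correct when all three are right or when exactly two are right, which is in line with the figures quoted above:

$$
P(\text{majority correct})
= \underbrace{p^{3}}_{\text{all three correct}}
+ \underbrace{\binom{3}{2}\,p^{2}(1-p)}_{\text{exactly two correct}}
= 0.7^{3} + 3 \cdot 0.7^{2} \cdot 0.3
= 0.343 + 0.441
= 0.784
$$

The factor $\binom{3}{2} = 3$ counts the three ways of choosing which single classifier is wrong.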

Just think about it: simply by adding more classifiers, the classification accuracy improves from 70% to about 78.4%.

If we add even more classifiers (more people in the crowd), the accuracy goes up further, as shown in the figure below.
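
The trend can also be checked numerically. The sketch below assumes that every classifier is independent and correct with the same probability, which real classifiers trained on the same data rarely satisfy, so it should be read as an upper-level intuition rather than a guarantee. The function name `majority_accuracy` is our own.

```python
from math import comb

def majority_accuracy(n, p):
    """Probability that more than half of n independent classifiers are correct.

    Assumes each classifier is correct with the same probability p and that
    n is odd, so the vote cannot end in a tie.
    """
    k_min = n // 2 + 1  # smallest number of correct votes that wins
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(k_min, n + 1))

# Accuracy of the majority vote for growing ensembles of 70%-accurate classifiers
for n in (1, 3, 5, 11, 21):
    print(n, round(majority_accuracy(n, 0.7), 4))
```

For `n = 3` this reproduces the 0.784 computed by hand, and the value keeps increasing as `n` grows, provided `p` is above 0.5.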

Hard Voting versus Soft Voting

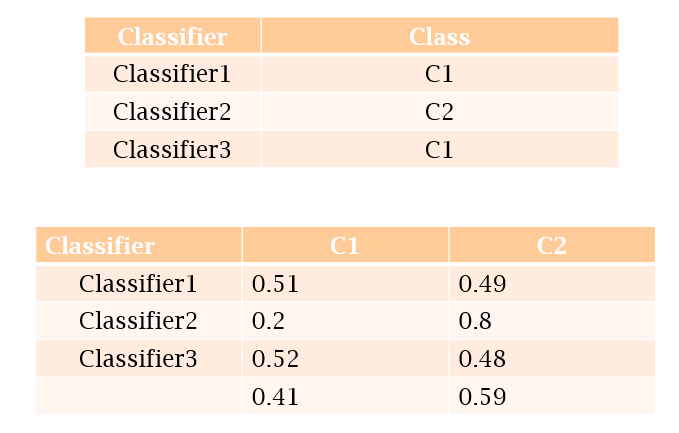

When all classifiers have an equal say in the final prediction, it is called hard voting, like the scheme shown in Figure 1. If we instead take into account each classifier’s confidence in its decision, or use some other scheme that gives different weights to different classifiers, it is called soft voting.

In hard voting only the predicted label is considered, whereas in soft voting we look at the predicted probabilities: we average the probability of each class across the classifiers and choose the class with the highest average.
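
To make the difference concrete, here is a small pure-Python sketch in which the two schemes disagree. The probabilities are made up for illustration, and `hard_vote` and `soft_vote` are hypothetical helpers, not library functions.

```python
# Assumed class probabilities [P(duck), P(hen)] from three classifiers for one sample
probas = [
    [0.45, 0.55],  # classifier 1 leans slightly towards hen
    [0.40, 0.60],  # classifier 2 leans slightly towards hen
    [0.95, 0.05],  # classifier 3 is very confident it is a duck
]
classes = ["duck", "hen"]

def hard_vote(probas, classes):
    """Each classifier votes for its most probable class; the majority label wins."""
    votes = [classes[row.index(max(row))] for row in probas]
    return max(classes, key=votes.count)

def soft_vote(probas, classes, weights=None):
    """Average the class probabilities (optionally weighted) and pick the largest."""
    weights = weights or [1.0] * len(probas)
    total = sum(weights)
    avg = [
        sum(w * row[j] for w, row in zip(weights, probas)) / total
        for j in range(len(classes))
    ]
    return classes[avg.index(max(avg))], avg

print(hard_vote(probas, classes))     # hen (two votes against one)
print(soft_vote(probas, classes)[0])  # duck (average P(duck) is about 0.6)
```

Hard voting ignores how confident the third classifier is, while soft voting lets that confidence outweigh two lukewarm votes. The optional `weights` argument illustrates the idea of giving different classifiers a different say.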

Soft voting is more fine-grained than hard voting.

Time for some implementation


from sklearn.ensemble import VotingClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.svm import SVC

from sklearn.neighbors import KNeighborsClassifier

#Initalize the classifier#Individual Classifiers

log_clf = LogisticRegression(random_state=42)

knn_clf = KNeighborsClassifier(n_neighbors=10)

svm_clf = SVC(gamma=”auto”, random_state=42, probability=True)#hardvoting

voting_clf = VotingClassifier(

estimators=[(‘lr’, log_clf), (‘knn’, knn_clf), (‘svc’, svm_clf)],

voting=’hard’)# Voting can be changed to ‘Soft’, however, the classifier must support predict the probability

#softvoting

voting_clf_soft = VotingClassifier(

estimators=[(‘lr’, log_clf), (‘knn’, knn_clf), (‘svc’, svm_clf)],

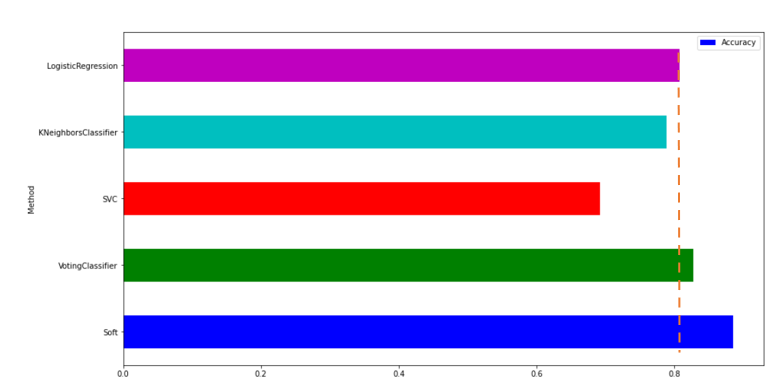

voting=’soft’)The result is shown in the below figure

结果如下图所示

This experiment was done on the ‘Sonar’ data set, and it can be seen that the soft-voting and hard-voting classifiers bring around a 7–8% improvement over the individual classifiers.

For further details, you can look at our notebook or the video here.

Conclusion:


In this tutorial, we gave a quick overview of how the crowd intelligence embodied in ensembles works. We discussed soft voting and hard voting. There are more popular methods such as random forests and boosting, which we will take up another day.


Translated from: https://towardsdatascience.com/how-and-why-of-the-ensemble-models-f869453bbe16
