1. Import packages

import tensorflow as tf

batch_size=256

2. Download the Fashion-MNIST dataset and load it into memory

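def load_data_fashion_mnist(batch_size, resize=None):
    """Download Fashion-MNIST and return (train, test) tf.data.Dataset objects."""
    mnist_train, mnist_test = tf.keras.datasets.fashion_mnist.load_data()
    # Divide all pixel values by 255 so they lie between 0 and 1, add a
    # trailing channel dimension, and cast the labels to int32.
    process = lambda X, y: (tf.expand_dims(X, axis=3) / 255,
                            tf.cast(y, dtype='int32'))
    # Optionally resize (with padding) each batch of images to resize x resize.
    resize_fn = lambda X, y: (
        tf.image.resize_with_pad(X, resize, resize) if resize else X, y)
    # Note: shuffle() is applied after batch(), so it reorders whole batches.
    return (
        tf.data.Dataset.from_tensor_slices(process(*mnist_train)).batch(
            batch_size).shuffle(len(mnist_train[0])).map(resize_fn),
        tf.data.Dataset.from_tensor_slices(process(*mnist_test)).batch(
            batch_size).map(resize_fn))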

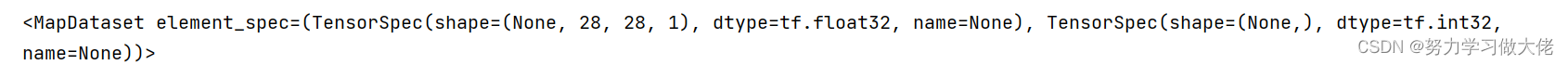

train_iter,test_iter=load_data_fashion_mnist(batch_size)

print(train_iter)
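As a rough check on what the two datasets yield, the number of batches follows from the dataset sizes. The sketch below assumes the standard Fashion-MNIST split of 60,000 training and 10,000 test images and that the final, smaller batch is kept (the default for `batch`). The helper name `batches_per_epoch` is made up for illustration and is not part of the original code.

```python
import math

def batches_per_epoch(num_examples, batch_size):
    """Return (number of batches, size of the last batch), keeping the remainder."""
    num_batches = math.ceil(num_examples / batch_size)
    last_batch = num_examples - (num_batches - 1) * batch_size
    return num_batches, last_batch

# Assumed dataset sizes: 60,000 training images and 10,000 test images.
train_batches = batches_per_epoch(60000, 256)
test_batches = batches_per_epoch(10000, 256)
print(train_batches, test_batches)  # (235, 96) (40, 16)
```

Because the last batch is smaller than the others, any per-example statistic has to be weighted by the batch size rather than averaged over batches.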

3. Define the network

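net=tf.keras.models.Sequential()
# Each input has shape (28, 28, 1) after preprocessing; flatten it to 784 values.
net.add(tf.keras.layers.Flatten(input_shape=(28,28,1)))
weight_initializer=tf.keras.initializers.RandomNormal(mean=0.0,stddev=0.01)
# 10 outputs, one per class; they are raw scores (logits), not probabilities.
net.add(tf.keras.layers.Dense(10,kernel_initializer=weight_initializer))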
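To make the two layers concrete, here is a minimal pure-Python sketch of what they compute: flatten a 28x28 image into 784 values, then apply one affine map to get 10 scores. It does not use TensorFlow. The helper names `flatten` and `dense` are illustrative, the image is random rather than a real sample, and the weights are stored one row per output, which is only a layout choice for this sketch.

```python
import random

def flatten(image):
    """Turn a 2-D list of pixels into a flat list."""
    return [pixel for row in image for pixel in row]

def dense(x, weights, bias):
    """Affine map: one weighted sum plus bias per output unit."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b
            for w_row, b in zip(weights, bias)]

random.seed(0)
num_inputs, num_outputs = 28 * 28, 10
# Weights drawn from a normal distribution with mean 0 and std 0.01; biases start at zero.
weights = [[random.gauss(0.0, 0.01) for _ in range(num_inputs)]
           for _ in range(num_outputs)]
bias = [0.0] * num_outputs

image = [[random.random() for _ in range(28)] for _ in range(28)]  # stand-in image
logits = dense(flatten(image), weights, bias)
num_params = num_inputs * num_outputs + num_outputs
print(len(logits), num_params)  # 10 7850
```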

4. Define the cross-entropy loss and the gradient descent optimizer

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

trainer=tf.keras.optimizers.SGD(learning_rate=0.1)

num_epochs=10
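With `from_logits=True`, the loss expects raw scores and applies the softmax itself. The following pure-Python sketch shows the quantity being computed for one example and the batch mean that the loss object returns by default. The function names are hypothetical, and this illustrates the formula rather than how Keras implements it.

```python
import math

def softmax_cross_entropy(logits, label):
    """Cross-entropy of one example, computed directly from raw scores."""
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]  # equals -log(softmax(logits)[label])

def mean_loss(batch_logits, labels):
    """Average the per-example losses over the batch."""
    losses = [softmax_cross_entropy(z, y) for z, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)

# With all scores equal, every class has probability 1/10, so the loss is log(10).
uniform = softmax_cross_entropy([0.0] * 10, 3)
print(uniform, math.log(10))
```

A freshly initialized network with tiny weights produces nearly equal scores, so a first-batch loss close to log(10), about 2.3, is a reasonable sanity check.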

5. Accumulator for running sums

class Accumulator:
    """Accumulate sums over n variables."""
    def __init__(self,n):
        self.data=[0.0]*n
    def add(self,*args):
        # Add each argument to the running sum in the same position.
        self.data=[a+float(b) for a,b in zip(self.data,args)]
    def reset(self):
        self.data=[0.0]*len(self.data)
    def __getitem__(self,idx):
        return self.data[idx]
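A short usage sketch may help here. It repeats the class so that it runs on its own, and the counts passed to `add` are made-up numbers, not results from the model.

```python
class Accumulator:
    """Accumulate sums over n variables."""
    def __init__(self, n):
        self.data = [0.0] * n
    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]
    def reset(self):
        self.data = [0.0] * len(self.data)
    def __getitem__(self, idx):
        return self.data[idx]

# Slot 0: correct predictions so far; slot 1: examples seen so far.
metric = Accumulator(2)
metric.add(200, 256)  # hypothetical first batch
metric.add(180, 256)  # hypothetical second batch
ratio = metric[0] / metric[1]
print(metric[0], metric[1], ratio)  # 380.0 512.0 0.7421875
```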

6. Compute the accuracy

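def accuracy(y_hat,y):
    """Return the number of correct predictions."""
    # If y_hat holds one score per class, take the highest-scoring class.
    if len(y_hat.shape)>1 and y_hat.shape[1]>1:
        y_hat=tf.argmax(y_hat,axis=1)
    cmp=tf.cast(y_hat,y.dtype)==y
    return float(tf.reduce_sum(tf.cast(cmp,y.dtype)))

def evaluate_accuracy(net,data_iter):
    """Return the accuracy of net on the dataset data_iter."""
    metric=Accumulator(2)  # number of correct predictions, number of predictions
    for X,y in data_iter:
        metric.add(accuracy(net(X),y),tf.size(y))
    return metric[0]/metric[1]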
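The counting logic in `accuracy` can be checked by hand on a tiny example. This pure-Python sketch mirrors it without TensorFlow: take the index of the largest score in each row and compare it with the label. The name `count_correct` and the scores are made up for illustration.

```python
def count_correct(scores, labels):
    """Count rows whose highest-scoring class matches the label."""
    correct = 0
    for row, label in zip(scores, labels):
        predicted = max(range(len(row)), key=row.__getitem__)  # argmax of the row
        if predicted == label:
            correct += 1
    return float(correct)

scores = [[0.1, 0.3, 0.6],   # predicts class 2
          [0.3, 0.2, 0.5],   # predicts class 2
          [0.8, 0.1, 0.1]]   # predicts class 0
labels = [0, 2, 0]
num_correct = count_correct(scores, labels)
print(num_correct / len(labels))
```

Like `accuracy`, the function returns a count, not a fraction, so the division by the number of examples happens once at the end.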

7. Training

def train_epoch_ch3(net,train_iter,loss,updater):
    """Train net for one epoch."""
    metric=Accumulator(3)  # total training loss, correct predictions, examples
    for X,y in train_iter:
        with tf.GradientTape() as tape:
            y_hat=net(X)
            l=loss(y,y_hat)
        params=net.trainable_variables
        grads=tape.gradient(l,params)
        updater.apply_gradients(zip(grads,params))
        # The Keras loss returns the batch mean, so scale it back to a sum.
        l_sum=l*float(tf.size(y))
        metric.add(l_sum,accuracy(y_hat,y),tf.size(y))
    return metric[0]/metric[2],metric[1]/metric[2]  # return the training loss and training accuracy
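The multiplication by `tf.size(y)` matters when batches differ in size. The sketch below uses made-up batch sizes and mean losses to compare the per-example average, which is what `train_epoch_ch3` returns, with a plain average of the batch means.

```python
# Hypothetical batch sizes and per-batch mean losses, for illustration only.
batch_sizes = [256, 256, 96]
mean_losses = [0.9, 0.7, 0.5]

# Scale each batch mean back to a sum, then divide by the total example count.
total_loss = sum(l * n for l, n in zip(mean_losses, batch_sizes))
num_examples = sum(batch_sizes)
per_example = total_loss / num_examples

# Averaging the batch means directly gives the small last batch too much weight.
naive = sum(mean_losses) / len(mean_losses)
print(per_example, naive)
```

Here the per-example average is higher than the naive one because the small final batch happens to have the lowest loss.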

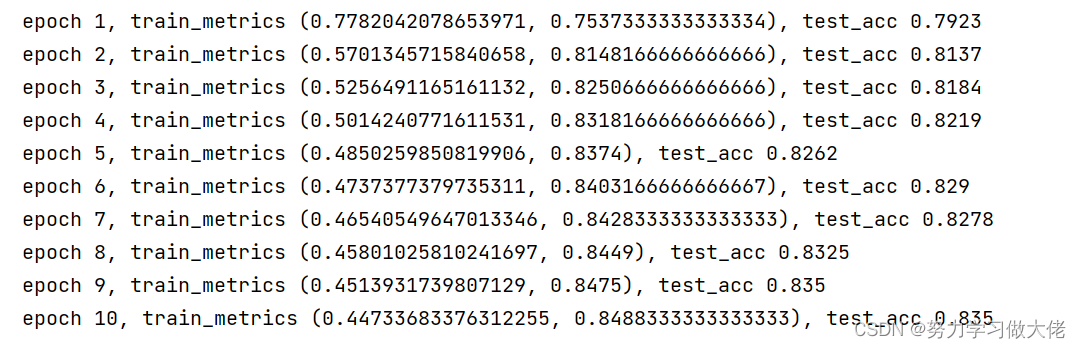

def train_ch3(net,train_iter,test_iter,loss,num_epochs,updater):
    """Train net for num_epochs epochs and report the metrics after each one."""
    for epoch in range(num_epochs):
        train_metrics=train_epoch_ch3(net,train_iter,loss,updater)
        test_acc=evaluate_accuracy(net,test_iter)
        print(f'epoch {epoch+1}, train_metrics {train_metrics}, test_acc {test_acc}')

train_ch3(net,train_iter,test_iter,loss,num_epochs,trainer)

8. Return the text labels of the Fashion-MNIST dataset

def get_fashion_mnist_labels(labels):
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]
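As a quick usage example, the function maps numeric class indices to their names. The block repeats the function so that it runs on its own, and the indices are arbitrary.

```python
def get_fashion_mnist_labels(labels):
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]

names = get_fashion_mnist_labels([9, 0, 2])
print(names)  # ['ankle boot', 't-shirt', 'pullover']
```

The `int(i)` conversion is what lets the same function accept the scalar tensors produced by `tf.argmax` as well as plain integers.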

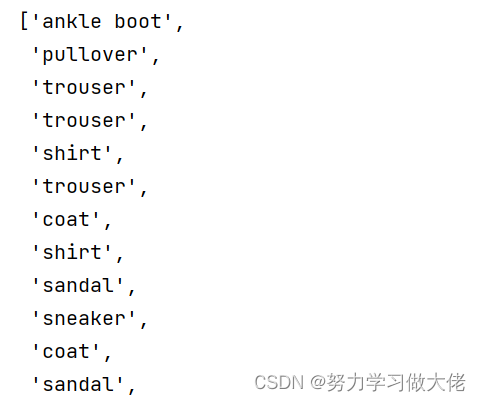

9. Prediction

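def predict_ch3(net,test_iter):
    """Return the predicted text labels for the first batch of test_iter."""
    for X,y in test_iter:
        break  # take only the first batch
    preds=get_fashion_mnist_labels(tf.argmax(net(X),axis=1))
    return preds

predict_ch3(net,test_iter)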
