有关于Transformer、BERT及其各种变体的详细介绍请参照笔者另一篇博客:最火的几个全网络预训练模型梳理整合(BERT、ALBERT、XLNet详解)。

本文基于对T5一文的理解,再重新回顾一下有关于auto-encoder、auto-regressive等常见概念,以及Transformer-based model的decoder结构。

1. Auto-encoder & Auto-regressive Language Model

1.1 Auto-encoder

类似于BERT、ALBERT、RoBERTa这类,encoder-only的language model。

优点:

- 能够保证同时上下文。

缺点:

- token之间的条件独立假设。违反自然语言生成的直觉性。

- encoder-only的时候,预训练目标不能和很多生成任务一致。

而结合预训练的时候的objective,像BERT这类Masked Language Model (MLM)又可以叫做denoised Auto-encoder(去噪自编码)。

1.2 Auto-regressive

类似于ELMO、GPT这类,时序LM和decoder-only LM。

传统的时序Language Model ,类似于RNN、ELMO,严格意义上不能叫做decoder;只是后来出现了大量基于transformer的auto-regressive LM,比方说GPT等,它们都是用作文本生成直接解码输出结果。所以在”transformer时代“下,Auto-regressive现在很多时候也被简单地理解为decoder-only。大致概念可以按下图理解:

优点:

- 无条件独立假设。

- 预训练可以直接做生成任务,符合下游生成任务的objective。

缺点:

- 不能同时双向编码信息(像ELMO这种是”伪双向“,而且容易“透露答案”)。

而目前自然语言处理的auto-regressive结构,大多基于Transformer;像传统时序LM,ELMO这种也已经快被遗忘了。

2. Transformer-based Model 结构概览

如前文所述,我们目前理解LM (autoencoder & autoregressive),大多是基于transformer的结构的。所以这一节暂时不讨论传统时序LM,ELMO这种。

T5一文1曾对Transformer的经典结构进行过概述,主要分为以下三种:

- Encoder-only Language Model:也即Auto-encoder。如第一节所述,特点是,能同时双向编码。代表有:

BERT、RoBERTa等。 - Decoder-only Language Model(上图,中):也即Auto Regressive (不包括传统的时序模型),可以简单理解成只有Decoder。特点是,只能看到前文信息(因为decoder-only)。代表有:

GPT等。 - Encoder-Decoder:也即Auto-encoder + Auto regressive(上图,左)。最原始的Transformer结构,encoder和decoder都有self-attentino支持,decoder还有额外的cross-attention用来结合encoder的输出信息。特点是,encoder能够同时看到上下文双向信息,而decoder只能看到前文信息。代表有:

BART、T5等,这也使得这类模型特别适合生成式任务。 - Prefix LM:可以简单地理解为Encoder-Decoder结构的变形(上图,右)。特点是,一部分像 Encoder 一样,能看到上下文信息;而其余部分则和 Decoder 一样,只能看到过去信息。代表有

UniLM等·。

而Transformer结构,如果想要实现所谓的,“同时上下文信息”、“只看到前文信息”,则需要依赖masked attention,因为transformer的self-attention,默认是全文计算attention,想要部分不可见,就得mask。这一点和传统时序LM不一样,像RNN这种,下一个token依赖于前文的hidden state,天然地就只能看到前文信息。

下面就以Encoder-Decoder LM为例,讲一下如何使用attention,实现encoder看到全文,而decoder只看到前文。 顺带介绍一下encoder的decoder的具体运作区别,因为区别之一就是这个attention机制。

3. Encoder / Decoder间的区别 & Masked Attention机制

这里以T5的代码为例。Transformer中的mask其实分为两种:1)padding mask;2)sequence mask。

3.1 Padding mask vs. Sequence mask

padding mask很简单,就是我们常用的同一个batch里面,把那些较短的样本,补至最长的样本长度。因为这些填充的位置,其实是没什么意义的,所以Attention机制不应该把注意力放在这些位置上,自然会在padding 位置进行attention mask。这个操作在encoder和decoder中都有用到,只是一个简单的tensor批量运算操作。

sequence mask是为了使得Decoder只能看见上文信息。所以当前step之后的文本信息,都会被mask掉。这个操作仅在decoder中使用。

总而言之,上述两种mask方式中,sequence mask是用于实现模型是否可见后文信息的关键。

3.2 Encoder

Encoder用的是全部的上下文信息,所以这边的sequence mask全部为0,形状为【batch_size, 1,1, seq_len】:

3.3 Decoder

Dncoder用的是前文的信息,所以这边的attention mask是一个矩阵 (seq_len * seq_len),其中下三角全0,迫使模型只能看到输入中的前文信息。如下图所示,sequence mask的实际形状为【batch_size, 1, seq_len, seq_len】

3.4 Encoder 和Decoder的区别

这里最后再简单总结一下Encoder-Decoder结构的LM,其Encoder和Decoder之间的区别。

首先,decoder有三层,sub-layer[1]计算self-attention,sub-layer[2]计算cross-attention,sub-layer[3]则是Linear层把最终hidden印射为vocab_size的logits。

sub_layer_1 (self-attention):

- inputs:

- [when doing train]: decoder_input_ids + sequence_mask_attention

- [when doing test]: predicted tokens

- outputs: hidden_states_1

sub_layer_2 (cross-attention):

- inputs: hidden_state_1 (query) and encoder_hidden_states (key & value)

- outputs: hidden_states_2

sub_layer_3 (linear+softmax):

- inputs: hidden_states_2

- outputs: logits (vocab probability)

所以decoder相较于encoder,其大部分结构和计算都是一样的,只不过多出这三个部分:

- sequence mask:Decoder在计算sub-layer[1]的self-attention时,有sequence mask机制,确保只看到前文信息;而Encoder则没有这部分mask机制,计算self-attention时默认看到全文。(这里指的训练阶段,decoder在预测阶段也没有sequence mask,具体参见本文第4节)

- cross-attention:Decoder的sub_layer[2]会计算cross-attention。具体来讲,这一层会使用encoder的output hidden作为k,v,而sub-layer[1]的self-attention的output作为q,来进行self-attention的计算。这是为了让decoder充分融合encoder端的全部上下文信息,所以名为“cross-attention”。

- linear+softmax:最后sub_layer[3]会有一个映射层,输出每个token的词表概率预测。

4. Decoder 在训练、预测前后的区别

Encoder在训练和测试时,没有计算上的变化。

但是Decoder结构,在模型推理时,和先前训练时,工作方式会存在一定的区别。

为了方便理解,首先介绍更简单的推理过程,然后引出训练过程的不同。

4.1 推理

auto-regressive LM在模型test的时候,预测下一个词,会基于之前的所有预测结果。

而一开始,为了能够生成第一个词,需要传入一个special token,我们称之为"start letter"。不同模型的start letter不一样 (e.g., T5就是ids为0的padding token;其他很多模型,像BERT就是BOS token)。

如上图,模型预测了第一个词的词表概率分布之后,我们取最高概率的那个词作为预测结果 (i.e., greedy decode, 也可以使用multinomial sampling,beam search等其他方案)。

比方说,这里就是“Ich”这个词。

然后第二步,我们就把start letter + "ich"这两个词作为新的输入,让模型预测下一个词。

同样的,下一个词预测出来之后,我们重复上述的步骤,继续把先前预测的所有词(start letter + predicted tokens)作为新的输入,继续让模型预测。直到模型预测出"ending letter" (e.g., EOS),结束预测。

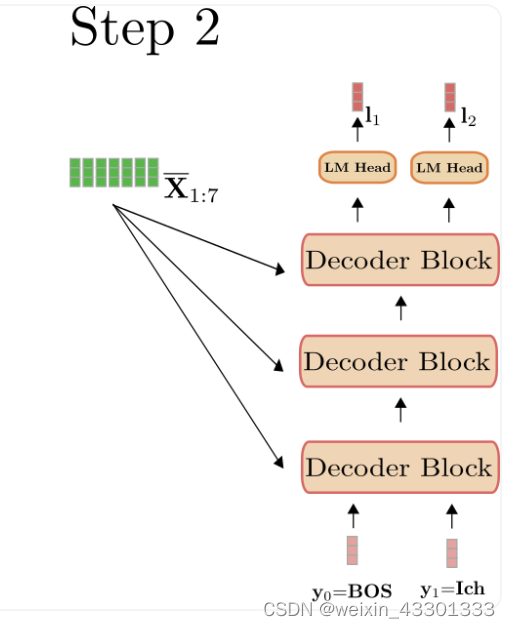

总而言之,如下图所示,右手边的decoder,会利用先前已经生成的tokens作为新的输入,来预测下一个词。而下一个词的概率,则是上一个词的输出结果。比方说,下图中的“Ich”这个词,是由BOS的输出结果“I1”概率分布决定的。

由于decoder的目标就是利用已有的tokens,预测下一个token,所以decoder这里会有一个input和output的"错位"(如上图,y1 == “Ich” 对应的输出其实是“I2”),理解的时候需要稍微注意一下。

有关于transformer框架,如果利用常规的

forward()接口实现推理时,需要额外注意的就是start letter,必须有start letter,而且也必须是正确的start letter。

所以,在使用decoder模型进行预测推理的时候,官方推荐调用统一接口generate(),会自动帮你的input加上模型的start letter。

具体请参照官网:Text Generation,查看更多有关于generate()的参数,以及sampling策略。

4.2 训练

训练时,会采用经典的teacher forcing 操作。

其核心思想在于:对于auto-regressive LM,下一个词的预测是需要基于前面所有已经预测的词;而在训练的过程中,如果让模型利用自己的预测结果,来预测下一个词,模型很难预测对(误差传播),这样越往后的token,loss就越大。总之,这样会造成优化困难。

所以,在训练的时候,我们会给模型提供正确答案作为decoder的输入,目的是为了只惩罚当前待预测的token,而不把先前模型预测的误差计算进来。 更多有关于teacher forcing的细节,详见这篇博客:教师强制.

4.2.1 具体实现

从transformer框架的代码实现来讲,不同于预测推理,训练时的decoder会有一个额外的输入,称之为decoder_input_ids。也就是先前提到的,我们会给模型提供正确的答案作为输入。

它大概长这个样子:

如上图,我们会发现,decoder_input_ids和正确答案labels的内容几乎是一摸一样的。

唯一的差别,就在于:labels是tokens + ending letter;而decoder_input_ids是start letter + tokens。照应之前提到过的,decoder的输入、输出“错位”。

有人就会说,这里一开始就把所有正确的tokens都作为输入了,不就把正确答案都透露给模型了吗。这里的话,就照应前面提到过的sequence mask,在预测下一个词的时候,我们只提供前面的序列,而后面的序列(包括待预测的这个词),我们都会把输入进行mask。

比方说:

- step1

decoder_input_ids = [0,10747,7,15]

sequence_mask = [0,1,1,1]

labels = [10747,7,15,1]

这样一来,decoder的self-attention就只看到第一个词,也就是0(start letter)。目标是为了预测第二个词的概率。这个概率后续和labels的第一个词,即10747,进行cross entropy的计算。

- step2

decoder_input_ids = [0,10747,7,15]

sequence_mask = [0,0,1,1] ## sequence mask changed

labels = [10747,7,15,1]

随后,mask发生变化,decoder的self-attention就看到两个词,一个还是0(start letter),另一个就是我们给他提供的正确答案10747。

这里也就照应前文第三节,为什么sequence mask是一个下三角全0的矩阵。 因为每次预测下一个词,都对应sequence mask矩阵的一行。

有没有发现,其实和推理的过程是一样的,唯一的区别就在于,我们不是给模型提供了它自己的已预测结果,而是提供了对应的答案。然后用sequence mask确保之后的答案不被泄露。

- step n

decoder_input_ids = [0,10747,7,15]

sequence_mask = [0,0,0,0] ## all decoder_input_ids can be seen

labels = [10747,7,15,1]

一直重复上述,直到达到最大长度(teacher forcing的思想,我们已经知道答案的最终长度,不是让模型预测出ending letter才结束)。

这时,模型已经可见所有的decoder_input_ids,目标是为了预测最后一个词的概率。用这个概率和labels的最后一个词,也就是1 (ending letter)进行loss的计算。

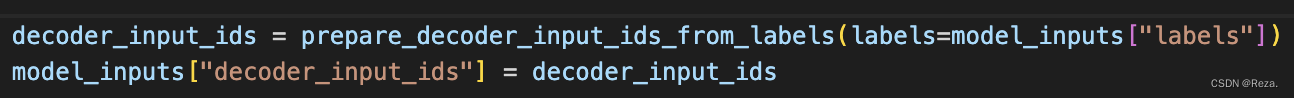

带decoder的模型,一般都内带一个名为prepare_decoder_input_ids_from_labels的方法,可以将labels构造成decoder_input_ids(如上所述,其实就是shift,把labels错位了一下)。

具体可以参见官网:https://huggingface.co/docs/transformers/v4.23.1/en/model_doc/plbart#transformers.PLBartTokenizer.build_inputs_with_special_tokens

这里再额外推荐一篇又关于大语言模型的survey, 其中也包含了很多对于语言模型encoder、decoder的总结:

另外,再放点tweet上的一些讨论 (两个人的观点其实都是对的,只不过描述的角度不太一样;相对而言,Yi tay的描述更加清楚):

参考

- [1] Raffel C, Shazeer N, Roberts A, et al. Exploring the limits of transfer learning with a unified text-to-text transformer[J]. J. Mach. Learn. Res., 2020, 21(140): 1-67.;paper

- [2] 知乎——T5 模型:NLP Text-to-Text 预训练模型超大规模探索

- [3] 知乎——【精华】BERT,Transformer,Attention(中)

- [4] CSDN——最火的几个全网络预训练模型梳理整合(BERT、ALBERT、XLNet详解)

- [5] Huggingface 官方对于encoder-decoder的讲解 (官方提供的非常好的一篇notebook)

7754

7754

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?