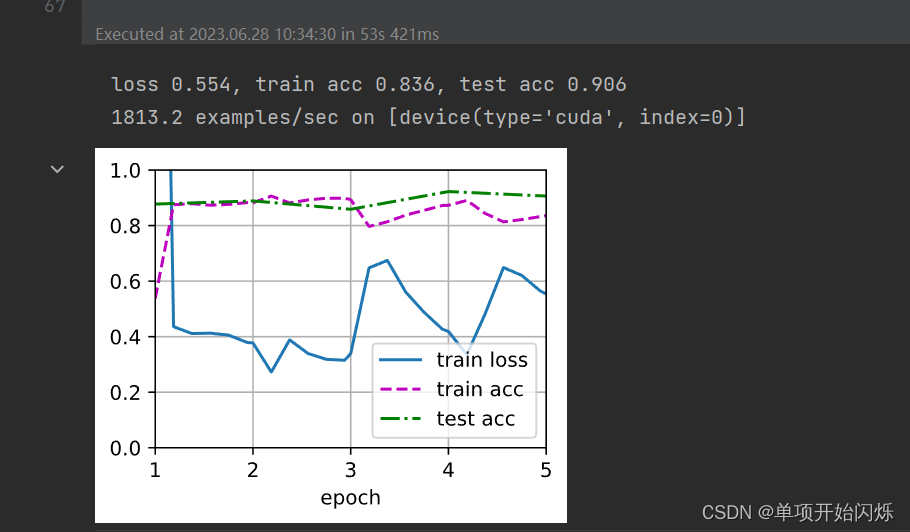

1. If we keep increasing the learning rate of finetune_net, how does the model's accuracy change?

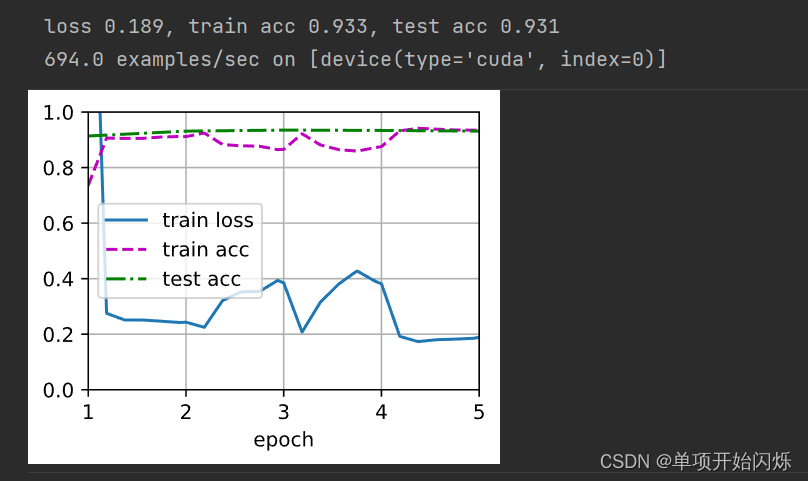

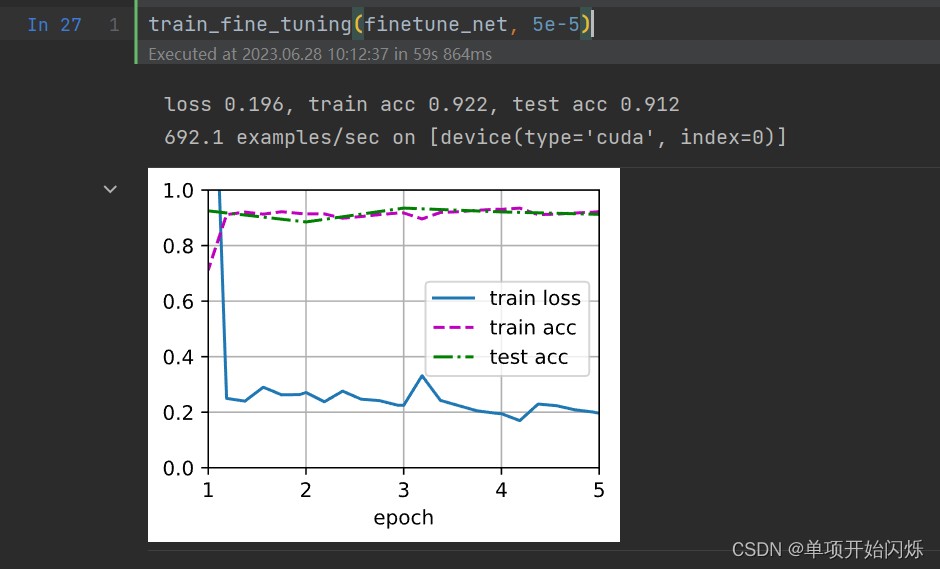

Original experiment settings: lr=5e-5, batch_size=128, num_epochs=5

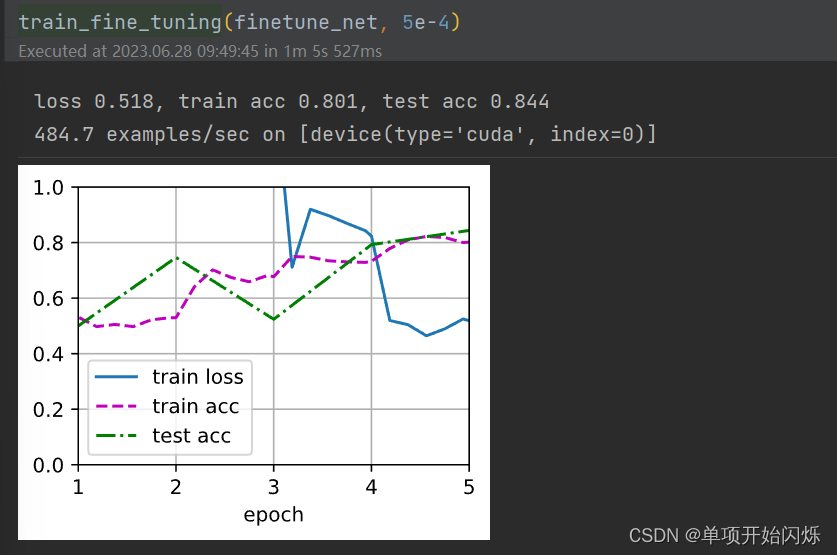

lr = 5e-4

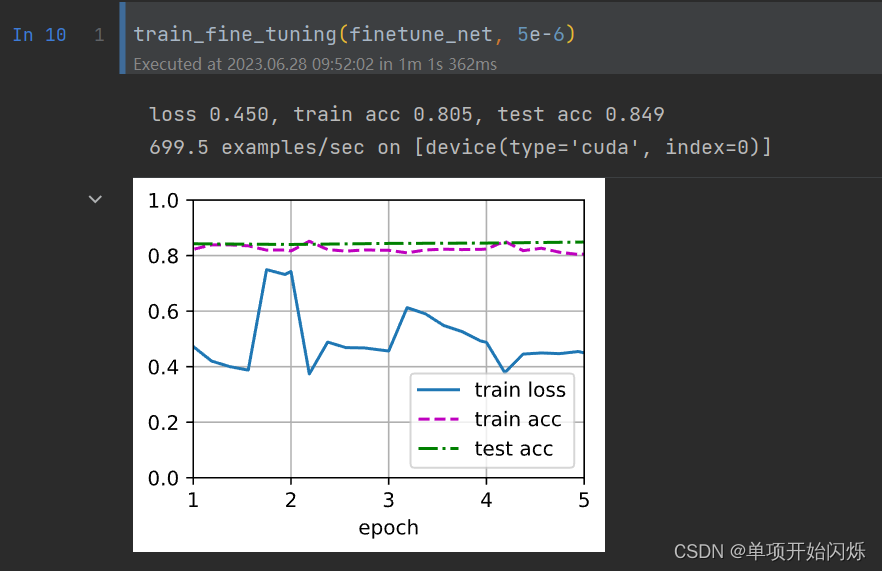

lr = 5e-6
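One detail matters when reading these runs. In the train_fine_tuning function used in this post, the base `lr` applies to the pretrained layers and the output layer gets `lr * 10`. Changing `lr` therefore moves both rates together: going from 5e-5 to 5e-4 also raises the output layer's rate from 5e-4 to 5e-3.

The sketch below checks this on a toy model. It assumes a hypothetical two-layer `TinyNet` with an `fc` head in place of ResNet-18, and `make_trainer` is a hypothetical helper that copies the post's grouping rule.

```python
import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical stand-in for ResNet-18: a feature extractor plus an `fc` head."""

    def __init__(self):
        super().__init__()
        self.body = nn.Linear(8, 4)
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return self.fc(torch.relu(self.body(x)))


def make_trainer(net, lr):
    # Same grouping rule as train_fine_tuning: non-fc parameters use lr,
    # the new output layer uses 10 * lr
    params_1x = [p for name, p in net.named_parameters()
                 if name not in ['fc.weight', 'fc.bias']]
    return torch.optim.SGD([{'params': params_1x},
                            {'params': net.fc.parameters(), 'lr': lr * 10}],
                           lr=lr, weight_decay=0.001)


for lr in [5e-6, 5e-5, 5e-4]:
    trainer = make_trainer(TinyNet(), lr)
    # Effective learning rate of each parameter group
    print(lr, [group['lr'] for group in trainer.param_groups])
```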

2. Further tune the hyperparameters of finetune_net and scratch_net in the comparison experiment. Do their accuracies still differ?

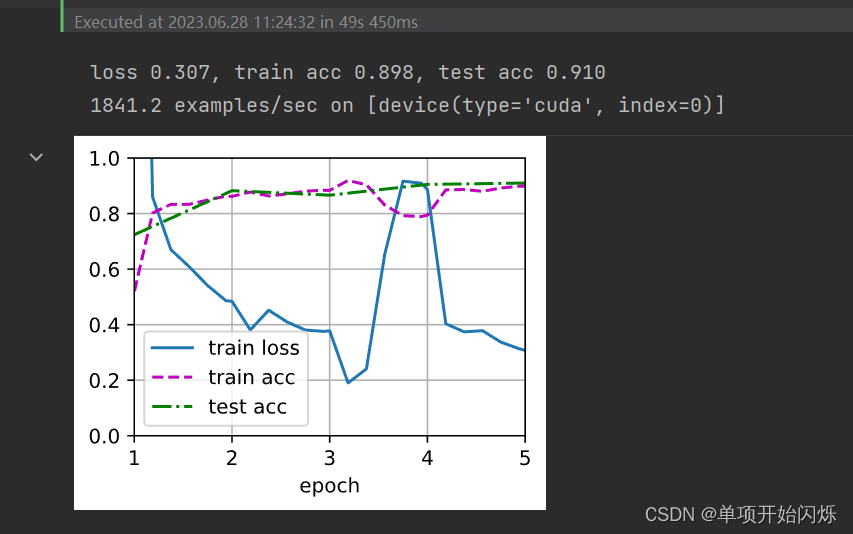

scratch_net, num_epochs=5

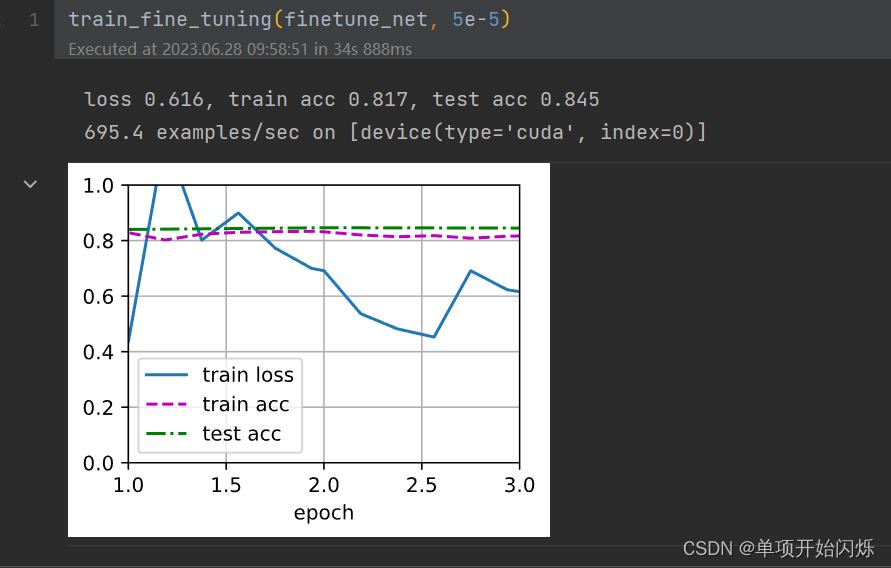

num_epochs changed to 3

scratch_net:

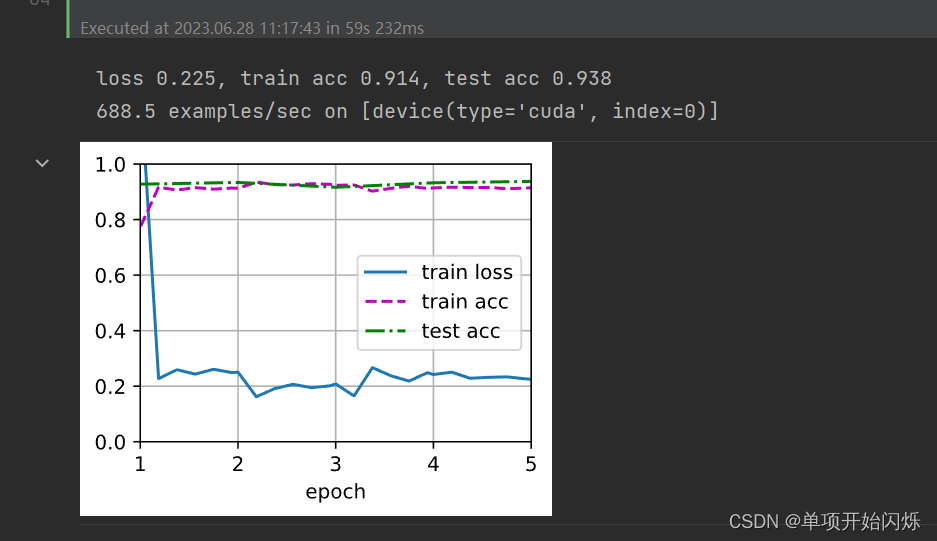

finetune_net:

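3. Set the parameters of finetune_net before the output layer to those of the source model and do not update them during training. How does the model's accuracy change?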

```python
# 13.2 Exercise 3
%matplotlib inline
import os
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from d2l import torch as d2l

data_dir = '../data/hotdog'
train_imgs = torchvision.datasets.ImageFolder(os.path.join(data_dir, 'train'))
test_imgs = torchvision.datasets.ImageFolder(os.path.join(data_dir, 'test'))

# Normalize each RGB channel with the ImageNet mean and standard deviation
normalize = torchvision.transforms.Normalize(
    [0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

train_augs = torchvision.transforms.Compose([
    torchvision.transforms.RandomResizedCrop(224),
    torchvision.transforms.RandomHorizontalFlip(),
    torchvision.transforms.ToTensor(),
    normalize])

test_augs = torchvision.transforms.Compose([
    torchvision.transforms.Resize([256, 256]),
    torchvision.transforms.CenterCrop(224),
    torchvision.transforms.ToTensor(),
    normalize])

# pretrained_net = torchvision.models.resnet18(pretrained=True)
finetune_net = torchvision.models.resnet18(pretrained=True)
# Replace the 1000-class ImageNet output layer with a 2-class layer
finetune_net.fc = nn.Linear(finetune_net.fc.in_features, 2)
nn.init.xavier_uniform_(finetune_net.fc.weight)


def train_fine_tuning(net, lr, batch_size=128, num_epochs=5,
                      param_group=True):
    train_iter = DataLoader(torchvision.datasets.ImageFolder(
        os.path.join(data_dir, 'train'), transform=train_augs),
        batch_size=batch_size, shuffle=True)
    test_iter = DataLoader(torchvision.datasets.ImageFolder(
        os.path.join(data_dir, 'test'), transform=test_augs),
        batch_size=batch_size)
    devices = d2l.try_all_gpus()
    loss = nn.CrossEntropyLoss(reduction='none')
    if param_group:
        # Freeze every pretrained parameter; only the new output layer (fc) is trained.
        # BatchNorm running statistics are buffers, not parameters, so they
        # still update while the network is in training mode.
        param_pre = []
        for name, param in net.named_parameters():
            if name not in ['fc.weight', 'fc.bias']:
                param.requires_grad = False
                param_pre.append(param)
        # Frozen parameters receive no gradient, so SGD skips them;
        # the output layer is updated with 10 * lr
        trainer = torch.optim.SGD([
            {'params': param_pre},
            {'params': net.fc.parameters(), 'lr': lr * 10}],
            lr=lr, weight_decay=0.001)
    else:
        trainer = torch.optim.SGD(net.parameters(), lr=lr,
                                  weight_decay=0.001)
    d2l.train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs,
                   devices)


train_fine_tuning(finetune_net, 5e-5)
```

Result: 53s

Before the change: 59s
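The freezing trick above can be checked on a toy model. This is a minimal sketch, assuming a hypothetical `TinyNet` with a BatchNorm layer standing in for ResNet-18.

After one SGD step, three things should hold:

- The frozen weights are unchanged.
- The output layer has moved.
- The BatchNorm running mean has still changed, because it is a buffer updated in training mode rather than a parameter.

```python
import torch
from torch import nn

torch.manual_seed(0)


class TinyNet(nn.Module):
    """Hypothetical stand-in: a 'pretrained' body with BatchNorm plus a new fc head."""

    def __init__(self):
        super().__init__()
        self.body = nn.Sequential(nn.Linear(8, 4), nn.BatchNorm1d(4), nn.ReLU())
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return self.fc(self.body(x))


net = TinyNet()
# Freeze everything except the output layer, as in train_fine_tuning
for name, p in net.named_parameters():
    if name not in ['fc.weight', 'fc.bias']:
        p.requires_grad = False

body_w_before = net.body[0].weight.detach().clone()
fc_w_before = net.fc.weight.detach().clone()
bn_mean_before = net.body[1].running_mean.clone()

# All parameters are passed in; SGD skips those whose .grad is None
trainer = torch.optim.SGD(net.parameters(), lr=0.1, weight_decay=0.001)
x, y = torch.randn(16, 8), torch.randint(0, 2, (16,))
net.train()
loss = nn.CrossEntropyLoss()(net(x), y)
trainer.zero_grad()
loss.backward()
trainer.step()

print(torch.equal(net.body[0].weight, body_w_before))        # True: frozen
print(torch.equal(net.fc.weight, fc_w_before))               # False: trained
print(torch.equal(net.body[1].running_mean, bn_mean_before))  # False: buffer updated
```

If the BatchNorm statistics should stay fixed as well, one option is to switch the BatchNorm modules to eval mode. Note that calling `net.train()` puts them back into training mode.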

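4. In fact, the ImageNet dataset has a "hotdog" class. We can obtain its weight parameter in the output layer with the following code, but how can we make use of this weight parameter?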

```python
# 13.2 Exercise 4
%matplotlib inline
import os
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from d2l import torch as d2l

data_dir = '../data/hotdog'
train_imgs = torchvision.datasets.ImageFolder(os.path.join(data_dir, 'train'))
test_imgs = torchvision.datasets.ImageFolder(os.path.join(data_dir, 'test'))

# Normalize each RGB channel with the ImageNet mean and standard deviation
normalize = torchvision.transforms.Normalize(
    [0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

train_augs = torchvision.transforms.Compose([
    torchvision.transforms.RandomResizedCrop(224),
    torchvision.transforms.RandomHorizontalFlip(),
    torchvision.transforms.ToTensor(),
    normalize])

test_augs = torchvision.transforms.Compose([
    torchvision.transforms.Resize([256, 256]),
    torchvision.transforms.CenterCrop(224),
    torchvision.transforms.ToTensor(),
    normalize])

# pretrained_net = torchvision.models.resnet18(pretrained=True)
finetune_net = torchvision.models.resnet18(pretrained=True)
# Row 934 of the ImageNet output layer holds the weights of the "hotdog" class
hotdog_w = finetune_net.fc.weight[934].detach().clone()
finetune_net.fc = nn.Linear(finetune_net.fc.in_features, 2)

# Initialize with the ImageNet-pretrained hotdog-class weights.
# Xavier-initialize the whole 2 x 512 matrix first (xavier_uniform_ rejects
# 1-D tensors), then overwrite row 0. ImageFolder sorts class folders
# alphabetically, so 'hotdog' is class 0 and 'not-hotdog' is class 1.
nn.init.xavier_uniform_(finetune_net.fc.weight)
with torch.no_grad():
    finetune_net.fc.weight[0] = hotdog_w


def train_fine_tuning(net, lr, batch_size=128, num_epochs=5,
                      param_group=True):
    train_iter = DataLoader(torchvision.datasets.ImageFolder(
        os.path.join(data_dir, 'train'), transform=train_augs),
        batch_size=batch_size, shuffle=True)
    test_iter = DataLoader(torchvision.datasets.ImageFolder(
        os.path.join(data_dir, 'test'), transform=test_augs),
        batch_size=batch_size)
    devices = d2l.try_all_gpus()
    loss = nn.CrossEntropyLoss(reduction='none')
    if param_group:
        param_pre = []
        for name, param in net.named_parameters():
            if name not in ['fc.weight', 'fc.bias']:
                # param.requires_grad = False  # uncomment to freeze (Exercise 3 setting)
                param_pre.append(param)
        # Pretrained layers use lr; the output layer uses 10 * lr
        trainer = torch.optim.SGD([
            {'params': param_pre},
            {'params': net.fc.parameters(), 'lr': lr * 10}],
            lr=lr, weight_decay=0.001)
    else:
        trainer = torch.optim.SGD(net.parameters(), lr=lr,
                                  weight_decay=0.001)
    d2l.train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs,
                   devices)


train_fine_tuning(finetune_net, 5e-5)
```

Results:

Original results

With the parameters before the output layer frozen:
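Why does copying the hotdog row help? Before any training, the new layer's class-0 score is exactly the pretrained network's "hotdog" score, so the classifier starts from an informed guess instead of a random one.

Here is a minimal sketch with small made-up sizes, assuming a hypothetical 10-class `old_fc` whose class 7 plays the role of ImageNet class 934. Unlike the code above, it also copies the bias. That makes the two logits match exactly; with only the weight copied, they differ by a constant offset.

```python
import torch
from torch import nn

torch.manual_seed(0)
# Hypothetical stand-in for the ImageNet head: 16 features, 10 classes,
# with class 7 playing the role of "hotdog" (index 934 in ResNet-18's fc)
old_fc = nn.Linear(16, 10)
HOTDOG = 7

# New 2-class head: Xavier-initialize the full matrix, then overwrite row 0
new_fc = nn.Linear(16, 2)
nn.init.xavier_uniform_(new_fc.weight)
with torch.no_grad():
    new_fc.weight[0] = old_fc.weight[HOTDOG]
    new_fc.bias[0] = old_fc.bias[HOTDOG]  # extra step, not in the code above

x = torch.randn(4, 16)
# Before training, the class-0 logit equals the pretrained hotdog logit
print(torch.allclose(new_fc(x)[:, 0], old_fc(x)[:, HOTDOG]))  # True
```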
