本专栏参考崔华坤的《VINS论文推导及代码解析》的PDF,按照这个框架,对VINS-Mono的原理进行详解,并附加代码解析~

总体内容如下:

VINS-Mono原理推导与代码解析(五)初始化

VINS-Mono原理推导与代码解析(六)边缘化Maginalization和FEJ

VINS-Mono原理推导与代码解析(七)闭环检测与优化

VINS-Mono原理推导与代码解析(八)其他补充~

先认识一下重要的变量~

- prev_img:上一次发布的帧的图像数据

- cur_img:光流跟踪的前一帧的图像数据

- forw_img:光流跟踪的后一帧的图像数据

先来看看PDF中对前端视觉处理的讲解。

特征点检测

Frame 1: goodFeaturesToTrack 检测MAX_CNT个特征点,设置forw_pts如下:

| ids | forw_pts | track_cnt |

| 4 | [600,400] | 1 |

| 3 | [500,300] | 1 |

| 2 | [400,200] | 1 |

| 1 | [300,100] | 1 |

| 0 | [100,50] | 1 |

特征点跟踪

Frame 2: calcOpticalFlowPyrLK 跟踪,将跟踪失败的点删除,跟踪成功的点点跟踪计数+1,并调用 goodFeaturesToTrack 检测出MAX_CNT - forw_pts.size()个特征点,并添加到forw_pts中,并调用updateID 更新ids,最后得到的forw_pts如下:

| ids | forw_pts | track_cnt |

| 6 | [200,150] | 1 |

| 5 | [100,100] | 1 |

| 4 | [580,400] | 2 |

| 1 | [280,100] | 2 |

| 0 | [700,50] | 2 |

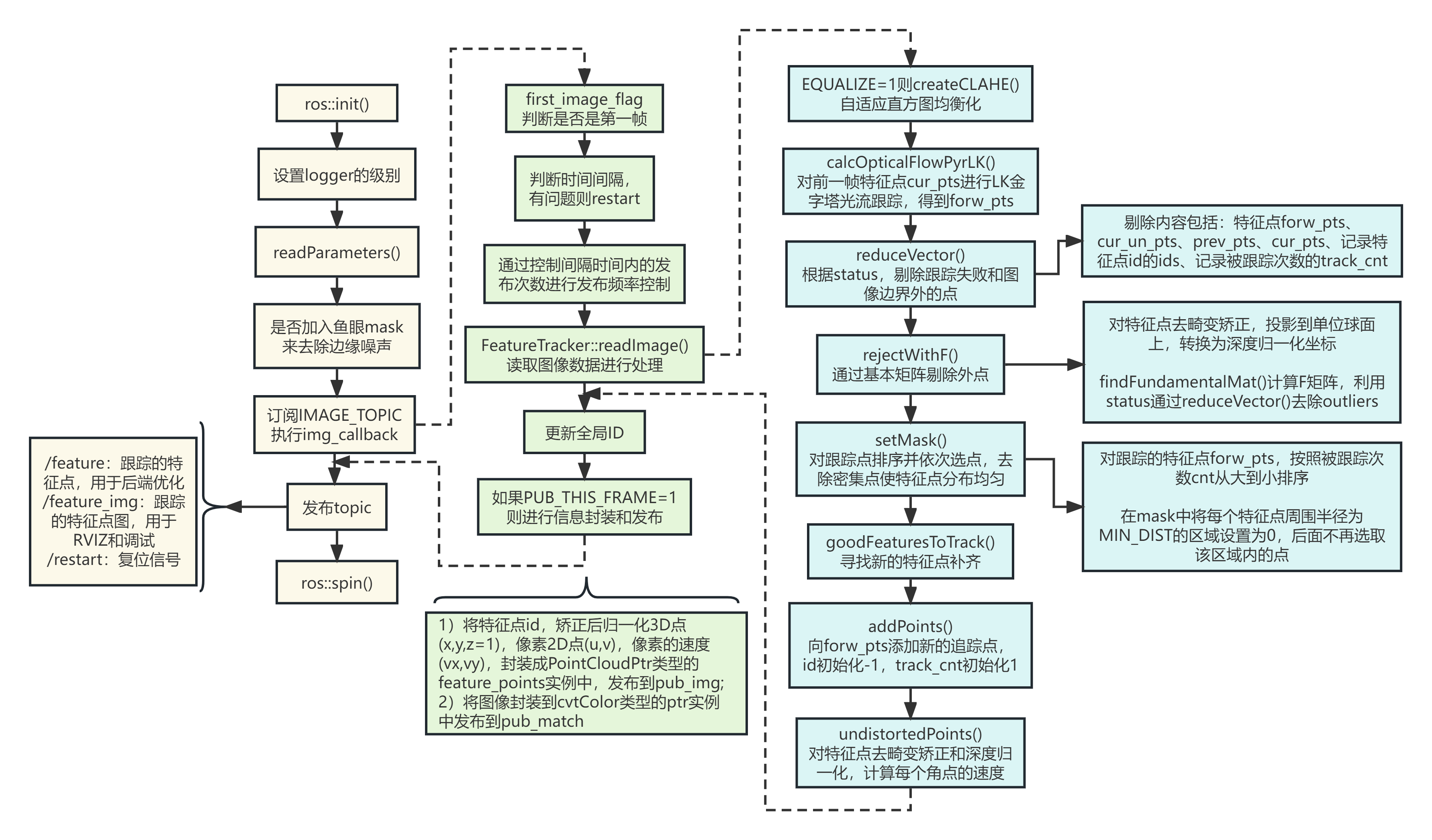

然后来看看代码。按照下面的流程图,来理通代码思路。

流程图来源:VINS-Mono代码解读——视觉跟踪 feature_trackers_rejectwithf-CSDN博客

1. main()函数

该部分对应流程图黄色那一列~ 步骤已在代码中注释出来了。

int main(int argc, char **argv)

{

// 1.ros::init()

ros::init(argc, argv, "feature_tracker");

ros::NodeHandle n("~");

// 2.设置logger的级别

ros::console::set_logger_level(ROSCONSOLE_DEFAULT_NAME, ros::console::levels::Info);

// 3. readParameters()读取参数

readParameters(n);

for(int i=0; i<NUM_OF_CAM; i++)

trackerData[i].readIntrinsicParameter(CAM_NAMES[i]);

// 4.判断是否加入鱼眼mask来去除边缘噪声

if(FISHEYE)

{

for(int i=0; i<NUM_OF_CAM; i++)

{

trackerData[i].fisheye_mask = cv::imread(FISHEYE_MASK, 0);

if(!trackData[i].fisheye_mask.data)

{

ROS_INFO("load mask fail");

ROS_BREAK();

}

else

ROS_INFO("loas mask success");

}

}

// 5.订阅IMAGE_TOPIC执行img_callback

ros::Subscriber sub_img = n.subscribe(IMAGE_TOPIC, 100, img_callback);

// 6. 发布的三个topics

pub_img = n.advertise<sensor_msgs::PointCloud>("feature", 1000);

pub_match = n.advertise<sensor_msgs::Image>("feature_img", 1000);

pub_restart = n.advertise<std_msgs::Bool>("restart", 1000);

// 7. ros::spin()

ros::spin();

return 0;

}2.IMAGE_TOPIC执行img_callback

这一部分对应流程图中的绿色部分~ 执行图像订阅的回调函数

void img_callback(const sensor_msgs::ImageConstPtr &img_msg)

{

// 1. 判断是否是第1帧

if(first_image_flag)

{

first_image_flag = false;

first_image_time = img_msg->header.stamp.toSec();

last_image_time = img_msg->header.stamp.toSec();

return;

}

// 2. 判断时间间隔,有问题则restart

if(img_msg->header.stamp.toSec() - last_image_time > 1.0 || img_msg->header.stamp.toSec() < last_image_time)

{

ROS_WARN("image discontinue! reset the feature tracker!");

first_image_flag = true;

last_image_time = 0;

pub_count = 1;

std::msgs::Bool restart_flag;

restart_flag.data = true;

pub_restart.publish(restart_flag);

return;

}

last_image_time = img_msg->header.stamp.toSec();

// 3. 通过控制间隔时间内的发布次数进行发布频率控制

if(round(1.0 * pub_count) / (img_msg->header.stamp.toSec() - first_image_time)) <= FREQ)

{

PUB_THIS_FRAME = true;

if(abs(1.0 *pub_count / (img_msg->header.stamp.toSec() - first_image_time) - FREQ) < 0.01 * FREQ)

{

first_image_time = img_msg->header.stamp.toSec();

pub_count = 0;

}

}

else

PUB_THIS_FRAME = false;

// 4. FeatureTracker::readImage()

....

// 这部分放到下一节讲解~

// 5. 更新全局ID

for(unsigned int i=0; ; i++)

{

bool completed = false;

for(int j=0; j<NUM_OF_CAM; j++)

if(j!=1 || !STEREO_TRACK)

completed |= trackerData[j].updateID(i);

if(!completed)

break;

}

// 6. 如果PUB_THIS_FRAMR=1,则进行信息封装与发布

if(PUB_THIS_FRAME)

{

pub_count++;

// 6.1 将特征点id,矫正后归一化3D点,像素2D点,像素速度,封装成PointCloudPtr类型的feature_points实例中,发布到pub_img

sensor_msgs::PointCloudPtr feature_points(new sensor_msgs::PointCloud);

sensor_msgs::ChannelFloat32 id_of_point; // 特征点id

sensor_msgs::ChannelFloat32 u_of_point; // 像素2D点

sensor_msgs::ChannelFloat32 v_of_point;

sensor_msgs::ChannelFloat32 velocity_x_of_point; // 像素速度

sensor_msgs::ChannelFloat32 velocity_y_of_point;

feature_points->header = img_msg->header;

feature_points->header.frame_id = "world";

vector<set<int>> hash_ids(NUM_OF_CAM);

for(int i=0; i<NUM_OF_CAM; i++)

{

auto &un_pts = trackerData[i].cur_un_pts;

auto &cur_pts = trackerData[i].cur_pts;

auto &id = trackerData[i].ids;

auto &pts_velocity = trackerData[i].pts_velocity;

for(unsigned int j=0; j<ids.size(); j++)

{

if(trackerData[i].track_cnt[j] > 1)

{

int p_id = ids[j];

hash_ids[i].insert(p_id);

geometry_msgs::Point32 p;

p.x = un_pts[j].x; // 归一化的3D点

p.y = un_pts[j].y;

p.z = 1;

feature_points->points.push_back(p);

id_of_point.values.push_back(p_id * NUM_OF_CAM + i);

u_of_point.values.push_back(cur_pts[j].x);

v_of_point.values.push_back(cur_pts[j].y);

velocity_x_of_point.values.push_back(pts_velocity[j].x);

velocity_y_of_point.values.push_back(pts_velocity[j].y);

}

}

}

feature_points->channels.push_back(id_of_point);

feature_points->channels.push_back(u_of_point);

feature_points->channels.push_back(v_of_point);

feature_points->channels.push_back(velocity_x_of_point);

feature_points->channels.push_back(velocity_y_of_point);

if(!init_pub)

init_pub = 1;

else

pub_img.publish(feature_points);

// 6.2 将图像封装到cvtColor类型的ptr实例中发布到pub_match

if(SHOW_TRACK)

{

ptr = cv_bridge::cvtColor(ptr, sensor_msgs::image_encoding::BGR8);

cv::Mat stereo_img = ptr->image;

for(int i=0; i<NUM_OF_CAM; i++)

{

cv::Mat tmp_img = stereo_img.rowRange(i*ROW, (i+1)*ROW);

cv::cvtColor(show_img, tmp_img, CV_GRAY2RGB);

for(unsigned int j=0; j<trackerData[i].cur_pts.size(); j++)

{

double len = std::min(1.0, 1.0 * trackerData[i].track_cnt[j] / WINDOW_SIZE);

cv::circle(tmp_img, trackerData[i].cur_pts[j], 2, cv::Scalar(255*(1-len), 0, 255*len), 2);

}

}

pub_match.publish(ptr->toImageMsg);

}

}

}3.FeatureTracker::readImage()

这一部分对应流程图中的蓝色部分~读取图像数据进行处理

void FeatureTracker::readImage(const cv::Mat &_img, double _cur_time)

{

cv::Mat img;

TicToc t_r;

cur_time = _cur_time;

// 1. EQUALIZE=1则createCLAHE()自适应直方图均衡化

if(EQUALIZE)

{

cv::Ptr<cv::CLAHE> clahe = cv::createCLAHE(3.0, cv::Size(8, 8));

TicToc t_c;

clahe->apply(_img, img);

ROS_DEBUG("CLAHE costs: %fms", t_c.toc());

}

else

img = _img;

// 图像帧更新

if(forw_img.empty())

prev_img = cur_img = forw_img = img;

else

forw_img = img;

forw_pts.clear();

// 2. calcOpticalFlowPyrLK()对前一帧特征点cur_pts进行LK金字塔光流跟踪,得到forw_pts

if(cur_pts.size()>0)

{

TicToc t_o;

vector<uchar> status;

vector<float> err;

cv::calcOpticalFlowPyrLK(cur_img, forw_img, cur_pts, forw_pts, status, err, cv::Size(21, 21), 3);

// 3. reduceVector()根据status,提出跟踪失败和图像边界的点

for(int i=0; i<int(forw_pts.size()); i++)

if(status[i] && !inBorder(forw_pts[i]))

status[i] = 0;

reduceVector(prev_pts, status); // 特征点prev_pts

reduceVector(cur_pts, status); // 特征点cur_pts

reduceVector(forw_pts, status); // 特征点forw_pts

reduceVector(ids, status); // 记录特征点id的ids

reduceVector(cur_un_pts, status); // 特征点cur_un_pts

reduceVector(track_cnt, status); // 记录被跟踪次数的track_cnt

}

for(auto &n : track_cnt)

n++;

if(PUB_THIS_FRAME)

{

// 4. rejectWithF()通过基本矩阵剔除外点

rejectWithF();

// 5. setMask()对跟踪点排序并依次选点,去除密集点使特征点分布均匀

setMask();

// detect feature begins

// 6. goodFeaturesToTrack()寻找新的特征点补齐

int n_max_cnt = MAX_CNT - static_cast<int>(forw_pts.size());

if(n_max_cnt > 0)

{

cv::goodFeaturesToTrack(forw_img, n_pts, MAX_CNT-forw_pts.size(), 0.01, MIN_DIST, mask);

}

else

n_pts.clear();

// 7. addPoints()向forw_pts添加新的追踪点,id初始化-1,track_cnt初始化1

addPoints();

}

prev_img = cur_img;

prev_pts = cur_pts;

prev_un_pts = cur_un_pts;

cur_img = forw_img;

cur_pts = forw_pts;

// 8. undistortedPoints()对特征点去畸变矫正和深度归一化,计算每个角点的深度

undistortedPoints();

prev_time = cur_time;

}然后看看FeatureTracker::readImage()中涉及到的函数,见下表。

| 函数 | 功能 |

| bool inBorder() | 判断跟踪的特征点是否在图像边界内 |

| void reduceVector() | 去除无法跟踪的特征点 |

| void FeatureTracker::setMask() | 对跟踪点进行排序并去除密集点 |

| void FeatureTracker::addPoints() | 添加新检测到的特征点n_pts,ID初始化-1,跟踪次数1 |

| void FeatureTracker::rejectWithF() | 通过F矩阵去除outliers |

| bool FetaureTracker::updateID() | 更新特征点id |

| void FeatureTracker::readIntrinsicParameter() | 读取相机内参 |

| void FeatureTracker::showUndistortion() | 显示去畸变矫正后的特征点 |

| void FeatureTracker::undistortedPoints() | 对特征点的图像坐标去畸变矫正,并计算每个角点的速度 |

3.1 createCLAHE()

使用的是opencv提供的函数

cv::createCLAHE(3.0, cv::Size(8, 8));3.2 calcOpticalFlowPyrLK()

使用的也是opencv提供的函数

cv::calcOpticalFlowPyrLK(cur_img, forw_img, cur_pts, forw_pts, status, err, cv::Size(21,21), 3);3.3 reduceVector()

void reduceVector(vector<cv::Point2f> &v, vector<uchar> status)

{

int j=0;

for(int i=0; i<int(v.size()); i++)

if(status[i])

v[j++]=v[i];

v.resize(j);

}

void reduceVector(vector<int> &v, vector<uchar> status)

{

int j=0;

for(int i=0; i<int(v.size()); i++)

if(status[i])

v[j++] = v[i];

v.resize(j);

}3.4 rejectWithF()

void FeatureTracker::rejectWithF()

{

if (forw_pts.size() >= 8)

{

ROS_DEBUG("FM ransac begins");

TicToc t_f;

vector<cv::Point2f> un_cur_pts(cur_pts.size()), un_forw_pts(forw_pts.size());

for (unsigned int i = 0; i < cur_pts.size(); i++)

{

Eigen::Vector3d tmp_p;

m_camera->liftProjective(Eigen::Vector2d(cur_pts[i].x, cur_pts[i].y), tmp_p);

tmp_p.x() = FOCAL_LENGTH * tmp_p.x() / tmp_p.z() + COL / 2.0;

tmp_p.y() = FOCAL_LENGTH * tmp_p.y() / tmp_p.z() + ROW / 2.0;

un_cur_pts[i] = cv::Point2f(tmp_p.x(), tmp_p.y());

m_camera->liftProjective(Eigen::Vector2d(forw_pts[i].x, forw_pts[i].y), tmp_p);

tmp_p.x() = FOCAL_LENGTH * tmp_p.x() / tmp_p.z() + COL / 2.0;

tmp_p.y() = FOCAL_LENGTH * tmp_p.y() / tmp_p.z() + ROW / 2.0;

un_forw_pts[i] = cv::Point2f(tmp_p.x(), tmp_p.y());

}

vector<uchar> status;

cv::findFundamentalMat(un_cur_pts, un_forw_pts, cv::FM_RANSAC, F_THRESHOLD, 0.99, status);

int size_a = cur_pts.size();

reduceVector(prev_pts, status);

reduceVector(cur_pts, status);

reduceVector(forw_pts, status);

reduceVector(cur_un_pts, status);

reduceVector(ids, status);

reduceVector(track_cnt, status);

ROS_DEBUG("FM ransac: %d -> %lu: %f", size_a, forw_pts.size(), 1.0 * forw_pts.size() / size_a);

ROS_DEBUG("FM ransac costs: %fms", t_f.toc());

}

}3.5 setMask()

void FeatureTracker::setMask()

{

if(FISHEYE)

mask = fisheye_mask.clone();

else

mask = cv::Mat(ROW, COL, CV_8UC1, cv::Scalar(255));

// prefer to keep features that are tracked for long time

vector<pair<int, pair<cv::Point2f, int>>> cnt_pts_id;

for (unsigned int i = 0; i < forw_pts.size(); i++)

cnt_pts_id.push_back(make_pair(track_cnt[i], make_pair(forw_pts[i], ids[i])));

sort(cnt_pts_id.begin(), cnt_pts_id.end(), [](const pair<int, pair<cv::Point2f, int>> &a, const pair<int, pair<cv::Point2f, int>> &b)

{

return a.first > b.first;

});

forw_pts.clear();

ids.clear();

track_cnt.clear();

for (auto &it : cnt_pts_id)

{

if (mask.at<uchar>(it.second.first) == 255)

{

forw_pts.push_back(it.second.first);

ids.push_back(it.second.second);

track_cnt.push_back(it.first);

cv::circle(mask, it.second.first, MIN_DIST, 0, -1);

}

}

}3.6 goodFeaturesToTrack()

这里用的也是opencv提供的函数

cv::goodFeaturesToTrack(forw_img, n_pts, MAX_CNT - forw_pts.size(), 0.01, MIN_DIST, mask);3.7 addPoints()

void FeatureTracker::addPoints()

{

for (auto &p : n_pts)

{

forw_pts.push_back(p);

ids.push_back(-1);

track_cnt.push_back(1);

}

}3.8 undistortedPoints()

void FeatureTracker::undistortedPoints()

{

cur_un_pts.clear();

cur_un_pts_map.clear();

//cv::undistortPoints(cur_pts, un_pts, K, cv::Mat());

for (unsigned int i = 0; i < cur_pts.size(); i++)

{

Eigen::Vector2d a(cur_pts[i].x, cur_pts[i].y);

Eigen::Vector3d b;

m_camera->liftProjective(a, b);

cur_un_pts.push_back(cv::Point2f(b.x() / b.z(), b.y() / b.z()));

cur_un_pts_map.insert(make_pair(ids[i], cv::Point2f(b.x() / b.z(), b.y() / b.z())));

//printf("cur pts id %d %f %f", ids[i], cur_un_pts[i].x, cur_un_pts[i].y);

}

// caculate points velocity

if (!prev_un_pts_map.empty())

{

double dt = cur_time - prev_time;

pts_velocity.clear();

for (unsigned int i = 0; i < cur_un_pts.size(); i++)

{

if (ids[i] != -1)

{

std::map<int, cv::Point2f>::iterator it;

it = prev_un_pts_map.find(ids[i]);

if (it != prev_un_pts_map.end())

{

double v_x = (cur_un_pts[i].x - it->second.x) / dt;

double v_y = (cur_un_pts[i].y - it->second.y) / dt;

pts_velocity.push_back(cv::Point2f(v_x, v_y));

}

else

pts_velocity.push_back(cv::Point2f(0, 0));

}

else

{

pts_velocity.push_back(cv::Point2f(0, 0));

}

}

}

else

{

for (unsigned int i = 0; i < cur_pts.size(); i++)

{

pts_velocity.push_back(cv::Point2f(0, 0));

}

}

prev_un_pts_map = cur_un_pts_map;

}好了,前端特征部分就到这里了~

2802

2802

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?