- 🍨 本文为🔗365天深度学习训练营 中的学习记录博客

- 🍖 原作者:K同学啊

一、前期工作

1. 设置GPU

from tensorflow import keras

from tensorflow.keras import layers,models

import os, PIL, pathlib

import matplotlib.pyplot as plt

import tensorflow as tf

import numpy as np

gpus = tf.config.list_physical_devices("GPU")

if gpus:

gpu0 = gpus[0] #如果有多个GPU,仅使用第0个GPU

tf.config.experimental.set_memory_growth(gpu0, True) #设置GPU显存用量按需使用

tf.config.set_visible_devices([gpu0],"GPU")

gpus

[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

2. 导入数据

data_dir = "F:/host/Data/好莱坞明星人脸识别识别数据/"

data_dir = pathlib.Path(data_dir)

3. 查看数据

image_count = len(list(data_dir.glob('*/*.jpg')))

print("图片总数为:",image_count)

图片总数为: 1800

roses = list(data_dir.glob('Jennifer Lawrence/*.jpg'))

PIL.Image.open(str(roses[0]))

二、数据预处理

1. 加载数据

使用image_dataset_from_directory方法将磁盘中的数据加载到tf.data.Dataset`中

测试集与验证集的关系:

- 验证集并没有参与训练过程梯度下降过程的,狭义上来讲是没有参与模型的参数训练更新的。

- 但是广义上来讲,验证集存在的意义确实参与了一个“人工调参”的过程,我们根据每一个epoch训练之后模型在valid data上的表现来决定是否需要训练进行early stop,或者根据这个过程模型的性能变化来调整模型的超参数,如学习率,batch_size等等。

- 因此,我们也可以认为,验证集也参与了训练,但是并没有使得模型去overfit验证集

batch_size = 32

img_height = 224

img_width = 224

label_mode:

int:标签将被编码成整数(使用的损失函数应为:sparse_categorical_crossentropy loss)。categorical:标签将被编码为分类向量(使用的损失函数应为:categorical_crossentropy loss)。

"""

关于image_dataset_from_directory()的详细介绍可以参考文章:https://mtyjkh.blog.csdn.net/article/details/117018789

"""

train_ds = tf.keras.preprocessing.image_dataset_from_directory(

data_dir,

validation_split=0.1,

subset="training",

label_mode = "categorical",

seed=123,

image_size=(img_height, img_width),

batch_size=batch_size)

Found 1800 files belonging to 17 classes.

Using 1620 files for training.

"""

关于image_dataset_from_directory()的详细介绍可以参考文章:https://mtyjkh.blog.csdn.net/article/details/117018789

"""

val_ds = tf.keras.preprocessing.image_dataset_from_directory(

data_dir,

validation_split=0.1,

subset="validation",

label_mode = "categorical",

seed=123,

image_size=(img_height, img_width),

batch_size=batch_size)

Found 1800 files belonging to 17 classes.

Using 180 files for validation.

我们可以通过class_names输出数据集的标签。标签将按字母顺序对应于目录名称。

class_names = train_ds.class_names

print(class_names)

['Angelina Jolie', 'Brad Pitt', 'Denzel Washington', 'Hugh Jackman', 'Jennifer Lawrence', 'Johnny Depp', 'Kate Winslet', 'Leonardo DiCaprio', 'Megan Fox', 'Natalie Portman', 'Nicole Kidman', 'Robert Downey Jr', 'Sandra Bullock', 'Scarlett Johansson', 'Tom Cruise', 'Tom Hanks', 'Will Smith']

2. 可视化数据

plt.figure(figsize=(20, 10))

for images, labels in train_ds.take(1):

for i in range(20):

ax = plt.subplot(5, 10, i + 1)

plt.imshow(images[i].numpy().astype("uint8"))

plt.title(class_names[np.argmax(labels[i])])

plt.axis("off")

3. 再次检查数据

Image_batch是形状的张量(32,224,224,3)。这是一批形状224x224x3的32张图片(最后一维指的是彩色通道RGB)。Label_batch是形状(32,)的张量,这些标签对应32张图片

for image_batch, labels_batch in train_ds:

print(image_batch.shape)

print(labels_batch.shape)

break

(32, 224, 224, 3)

(32, 17)

4. 配置数据集

- shuffle() :打乱数据,关于此函数的详细介绍可以参考:https://zhuanlan.zhihu.com/p/42417456

- prefetch() :预取数据,加速运行

AUTOTUNE = tf.data.AUTOTUNE

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

三、构建CNN网络

卷积神经网络(CNN)的输入是张量 (Tensor) 形式的 (image_height, image_width, color_channels),包含了图像高度、宽度及颜色信息。不需要输入batch size。color_channels 为 (R,G,B) 分别对应 RGB 的三个颜色通道(color channel)。在此示例中,我们的 CNN 输入的形状是 (224, 224, 3)即彩色图像。我们需要在声明第一层时将形状赋值给参数input_shape。

"""

关于卷积核的计算不懂的可以参考文章:https://blog.csdn.net/qq_38251616/article/details/114278995

layers.Dropout(0.4) 作用是防止过拟合,提高模型的泛化能力。

关于Dropout层的更多介绍可以参考文章:https://mtyjkh.blog.csdn.net/article/details/115826689

"""

model = models.Sequential([

layers.experimental.preprocessing.Rescaling(1./255, input_shape=(img_height, img_width, 3)),

layers.Conv2D(16, (3, 3), activation='relu', input_shape=(img_height, img_width, 3)), # 卷积层1,卷积核3*3

layers.AveragePooling2D((2, 2)), # 池化层1,2*2采样

layers.Conv2D(32, (3, 3), activation='relu'), # 卷积层2,卷积核3*3

layers.AveragePooling2D((2, 2)), # 池化层2,2*2采样

layers.Dropout(0.5),

layers.Conv2D(64, (3, 3), activation='relu'), # 卷积层3,卷积核3*3

layers.AveragePooling2D((2, 2)),

layers.Dropout(0.5),

layers.Conv2D(128, (3, 3), activation='relu'), # 卷积层3,卷积核3*3

layers.Dropout(0.5),

layers.Flatten(), # Flatten层,连接卷积层与全连接层

layers.Dense(128, activation='relu'), # 全连接层,特征进一步提取

layers.Dense(len(class_names)) # 输出层,输出预期结果

])

model.summary() # 打印网络结构

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

rescaling_1 (Rescaling) (None, 224, 224, 3) 0

conv2d_4 (Conv2D) (None, 222, 222, 16) 448

average_pooling2d_3 (Averag (None, 111, 111, 16) 0

ePooling2D)

conv2d_5 (Conv2D) (None, 109, 109, 32) 4640

average_pooling2d_4 (Averag (None, 54, 54, 32) 0

ePooling2D)

dropout_3 (Dropout) (None, 54, 54, 32) 0

conv2d_6 (Conv2D) (None, 52, 52, 64) 18496

average_pooling2d_5 (Averag (None, 26, 26, 64) 0

ePooling2D)

dropout_4 (Dropout) (None, 26, 26, 64) 0

conv2d_7 (Conv2D) (None, 24, 24, 128) 73856

dropout_5 (Dropout) (None, 24, 24, 128) 0

flatten_1 (Flatten) (None, 73728) 0

dense_2 (Dense) (None, 128) 9437312

dense_3 (Dense) (None, 17) 2193

=================================================================

Total params: 9,536,945

Trainable params: 9,536,945

Non-trainable params: 0

_________________________________________________________________

四、训练模型

1.设置动态学习率

# 设置初始学习率

initial_learning_rate = 1e-4

lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(

initial_learning_rate,

decay_steps=60, # 敲黑板!!!这里是指 steps,不是指epochs

decay_rate=0.96, # lr经过一次衰减就会变成 decay_rate*lr

staircase=True)

# 将指数衰减学习率送入优化器

optimizer = tf.keras.optimizers.Adam(learning_rate=lr_schedule)

model.compile(optimizer=optimizer,

loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

损失函数Loss详解:

1. binary_crossentropy(对数损失函数)

与 sigmoid 相对应的损失函数,针对于二分类问题。

2. categorical_crossentropy(对数损失函数)

与 softmax 相对应的损失函数,针对于多分类问题,如果是one-hot编码,则使用 categorical_crossentropy

调用方法一:

model.compile(optimizer="adam",

loss='categorical_crossentropy',

metrics=['accuracy']

)

调用方法二:

model.compile(optimizer=optimizer,

loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),

metrics=['accuracy']

)

3. sparse_categorical_crossentropy(稀疏性多分类的对数损失函数)

与 softmax 相对应的损失函数,针对于多分类问题,如果是整数编码,则使用 sparse_categorical_crossentropy

调用方法一:

model.compile(optimizer="adam",

loss='sparse_categorical_crossentropy',

metrics=['accuracy']

)

调用方法二:

model.compile(optimizer=optimizer,

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy']

)

函数原型

tf.keras.losses.SparseCategoricalCrossentropy(

from_logits=False,

reduction=losses.Reduction.AUTO,

name='sparse_categorical_crossentropy')

参数说明

-

from_logits:是否将预测值转换为概率值,即是否使用softmax函数。如果为True,则将预测值转换为概率值,即使用softmax函数。

如果为False,则不进行转换,直接使用预测值作为损失函数的输入。

默认值为False。

-

reduction:损失函数的计算方式。可选值为:

-

losses.Reduction.SUM:将所有样本的损失值相加,然后除以样本数量。 -

losses.Reduction.NONE:不进行任何归一化操作,直接返回每个样本的损失值。 -

losses.Reduction.AUTO:根据输入的形状自动选择计算方式。

默认值为

losses.Reduction.AUTO。 -

-

name:损失函数的名称。默认值为

sparse_categorical_crossentropy。

2.早停与保存最佳模型参数

关于ModelCheckpoint的详细介绍可参考文章:https://blog.csdn.net/qq_38251616/article/details/122318957

EarlyStopping()参数说明:

monitor: 被监测的数据。min_delta: 在被监测的数据中被认为是提升的最小变化, 例如,小于 min_delta 的绝对变化会被认为没有提升。patience: 没有进步的训练轮数,在这之后训练就会被停止。verbose: 详细信息模式。mode: {auto, min, max} 其中之一。 在 min 模式中, 当被监测的数据停止下降,训练就会停止;在 max 模式中,当被监测的数据停止上升,训练就会停止;在 auto 模式中,方向会自动从被监测的数据的名字中判断出来。baseline: 要监控的数量的基准值。 如果模型没有显示基准的改善,训练将停止。estore_best_weights: 是否从具有监测数量的最佳值的时期恢复模型权重。 如果为 False,则使用在训练的最后一步获得的模型权重。

关于EarlyStopping()的详细介绍可参考文章:https://blog.csdn.net/qq_38251616/article/details/122319538

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

epochs = 100

# 保存最佳模型参数

checkpointer = ModelCheckpoint('./model/好莱坞明星识别_best_model.h5',

monitor='val_accuracy',

verbose=1,

save_best_only=True,

save_weights_only=True)

# 设置早停

earlystopper = EarlyStopping(monitor='val_accuracy',

min_delta=0.001,

patience=20,

verbose=1)

3. 模型训练

history = model.fit(train_ds,

validation_data=val_ds,

epochs=epochs,

callbacks=[checkpointer, earlystopper])

Epoch 1/100

50/51 [============================>.] - ETA: 0s - loss: 2.8168 - accuracy: 0.1064

Epoch 1: val_accuracy improved from -inf to 0.14444, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 4s 40ms/step - loss: 2.8174 - accuracy: 0.1056 - val_loss: 2.8029 - val_accuracy: 0.1444

Epoch 2/100

50/51 [============================>.] - ETA: 0s - loss: 2.7704 - accuracy: 0.1159

Epoch 2: val_accuracy did not improve from 0.14444

51/51 [==============================] - 2s 31ms/step - loss: 2.7691 - accuracy: 0.1154 - val_loss: 2.7592 - val_accuracy: 0.1444

Epoch 3/100

50/51 [============================>.] - ETA: 0s - loss: 2.7197 - accuracy: 0.1379

Epoch 3: val_accuracy improved from 0.14444 to 0.21667, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 33ms/step - loss: 2.7186 - accuracy: 0.1377 - val_loss: 2.6696 - val_accuracy: 0.2167

Epoch 4/100

50/51 [============================>.] - ETA: 0s - loss: 2.5964 - accuracy: 0.1606

Epoch 4: val_accuracy did not improve from 0.21667

51/51 [==============================] - 2s 31ms/step - loss: 2.5905 - accuracy: 0.1648 - val_loss: 2.5789 - val_accuracy: 0.2056

Epoch 5/100

51/51 [==============================] - ETA: 0s - loss: 2.4681 - accuracy: 0.1975

Epoch 5: val_accuracy did not improve from 0.21667

51/51 [==============================] - 2s 31ms/step - loss: 2.4681 - accuracy: 0.1975 - val_loss: 2.5502 - val_accuracy: 0.1500

Epoch 6/100

50/51 [============================>.] - ETA: 0s - loss: 2.3424 - accuracy: 0.2361

Epoch 6: val_accuracy improved from 0.21667 to 0.24444, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 32ms/step - loss: 2.3382 - accuracy: 0.2364 - val_loss: 2.4447 - val_accuracy: 0.2444

Epoch 7/100

50/51 [============================>.] - ETA: 0s - loss: 2.2089 - accuracy: 0.2727

Epoch 7: val_accuracy did not improve from 0.24444

51/51 [==============================] - 2s 31ms/step - loss: 2.2096 - accuracy: 0.2710 - val_loss: 2.4229 - val_accuracy: 0.1944

Epoch 8/100

50/51 [============================>.] - ETA: 0s - loss: 2.1083 - accuracy: 0.3312

Epoch 8: val_accuracy did not improve from 0.24444

51/51 [==============================] - 2s 31ms/step - loss: 2.1141 - accuracy: 0.3296 - val_loss: 2.3777 - val_accuracy: 0.2167

Epoch 9/100

51/51 [==============================] - ETA: 0s - loss: 2.0169 - accuracy: 0.3302

Epoch 9: val_accuracy did not improve from 0.24444

51/51 [==============================] - 2s 31ms/step - loss: 2.0169 - accuracy: 0.3302 - val_loss: 2.4826 - val_accuracy: 0.2222

Epoch 10/100

50/51 [============================>.] - ETA: 0s - loss: 1.9355 - accuracy: 0.3703

Epoch 10: val_accuracy did not improve from 0.24444

51/51 [==============================] - 2s 32ms/step - loss: 1.9361 - accuracy: 0.3691 - val_loss: 2.3449 - val_accuracy: 0.2333

Epoch 11/100

50/51 [============================>.] - ETA: 0s - loss: 1.8232 - accuracy: 0.4144

Epoch 11: val_accuracy did not improve from 0.24444

51/51 [==============================] - 2s 31ms/step - loss: 1.8288 - accuracy: 0.4130 - val_loss: 2.3971 - val_accuracy: 0.2222

Epoch 12/100

50/51 [============================>.] - ETA: 0s - loss: 1.7745 - accuracy: 0.4263

Epoch 12: val_accuracy improved from 0.24444 to 0.28333, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 33ms/step - loss: 1.7787 - accuracy: 0.4265 - val_loss: 2.4528 - val_accuracy: 0.2833

Epoch 13/100

50/51 [============================>.] - ETA: 0s - loss: 1.6931 - accuracy: 0.4540

Epoch 13: val_accuracy improved from 0.28333 to 0.31667, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 33ms/step - loss: 1.6865 - accuracy: 0.4574 - val_loss: 2.4144 - val_accuracy: 0.3167

Epoch 14/100

51/51 [==============================] - ETA: 0s - loss: 1.5903 - accuracy: 0.5000

Epoch 14: val_accuracy did not improve from 0.31667

51/51 [==============================] - 2s 31ms/step - loss: 1.5903 - accuracy: 0.5000 - val_loss: 2.4333 - val_accuracy: 0.3000

Epoch 15/100

50/51 [============================>.] - ETA: 0s - loss: 1.5582 - accuracy: 0.5113

Epoch 15: val_accuracy did not improve from 0.31667

51/51 [==============================] - 2s 31ms/step - loss: 1.5563 - accuracy: 0.5117 - val_loss: 2.3228 - val_accuracy: 0.3000

Epoch 16/100

50/51 [============================>.] - ETA: 0s - loss: 1.4643 - accuracy: 0.5378

Epoch 16: val_accuracy improved from 0.31667 to 0.32778, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 33ms/step - loss: 1.4646 - accuracy: 0.5370 - val_loss: 2.3622 - val_accuracy: 0.3278

Epoch 17/100

50/51 [============================>.] - ETA: 0s - loss: 1.4028 - accuracy: 0.5542

Epoch 17: val_accuracy improved from 0.32778 to 0.33889, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 33ms/step - loss: 1.3961 - accuracy: 0.5586 - val_loss: 2.3537 - val_accuracy: 0.3389

Epoch 18/100

50/51 [============================>.] - ETA: 0s - loss: 1.3079 - accuracy: 0.5970

Epoch 18: val_accuracy improved from 0.33889 to 0.35556, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 33ms/step - loss: 1.3109 - accuracy: 0.5981 - val_loss: 2.3690 - val_accuracy: 0.3556

Epoch 19/100

50/51 [============================>.] - ETA: 0s - loss: 1.2587 - accuracy: 0.6026

Epoch 19: val_accuracy did not improve from 0.35556

51/51 [==============================] - 2s 31ms/step - loss: 1.2683 - accuracy: 0.5994 - val_loss: 2.4178 - val_accuracy: 0.3222

Epoch 20/100

51/51 [==============================] - ETA: 0s - loss: 1.1746 - accuracy: 0.6426

Epoch 20: val_accuracy did not improve from 0.35556

51/51 [==============================] - 2s 32ms/step - loss: 1.1746 - accuracy: 0.6426 - val_loss: 2.4300 - val_accuracy: 0.3056

Epoch 21/100

51/51 [==============================] - ETA: 0s - loss: 1.1117 - accuracy: 0.6488

Epoch 21: val_accuracy did not improve from 0.35556

51/51 [==============================] - 2s 32ms/step - loss: 1.1117 - accuracy: 0.6488 - val_loss: 2.4838 - val_accuracy: 0.3556

Epoch 22/100

51/51 [==============================] - ETA: 0s - loss: 1.0289 - accuracy: 0.6877

Epoch 22: val_accuracy improved from 0.35556 to 0.37222, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 34ms/step - loss: 1.0289 - accuracy: 0.6877 - val_loss: 2.5050 - val_accuracy: 0.3722

Epoch 23/100

51/51 [==============================] - ETA: 0s - loss: 1.0267 - accuracy: 0.6778

Epoch 23: val_accuracy did not improve from 0.37222

51/51 [==============================] - 2s 32ms/step - loss: 1.0267 - accuracy: 0.6778 - val_loss: 2.4621 - val_accuracy: 0.3333

Epoch 24/100

51/51 [==============================] - ETA: 0s - loss: 0.9193 - accuracy: 0.7074

Epoch 24: val_accuracy did not improve from 0.37222

51/51 [==============================] - 2s 33ms/step - loss: 0.9193 - accuracy: 0.7074 - val_loss: 2.4800 - val_accuracy: 0.3667

Epoch 25/100

51/51 [==============================] - ETA: 0s - loss: 0.8588 - accuracy: 0.7315

Epoch 25: val_accuracy did not improve from 0.37222

51/51 [==============================] - 2s 33ms/step - loss: 0.8588 - accuracy: 0.7315 - val_loss: 2.4512 - val_accuracy: 0.3722

Epoch 26/100

51/51 [==============================] - ETA: 0s - loss: 0.8278 - accuracy: 0.7272

Epoch 26: val_accuracy did not improve from 0.37222

51/51 [==============================] - 2s 33ms/step - loss: 0.8278 - accuracy: 0.7272 - val_loss: 2.6059 - val_accuracy: 0.3500

Epoch 27/100

51/51 [==============================] - ETA: 0s - loss: 0.7679 - accuracy: 0.7630

Epoch 27: val_accuracy did not improve from 0.37222

51/51 [==============================] - 2s 33ms/step - loss: 0.7679 - accuracy: 0.7630 - val_loss: 2.5223 - val_accuracy: 0.3722

Epoch 28/100

51/51 [==============================] - ETA: 0s - loss: 0.7020 - accuracy: 0.7722

Epoch 28: val_accuracy did not improve from 0.37222

51/51 [==============================] - 2s 34ms/step - loss: 0.7020 - accuracy: 0.7722 - val_loss: 2.6698 - val_accuracy: 0.3667

Epoch 29/100

51/51 [==============================] - ETA: 0s - loss: 0.6614 - accuracy: 0.7926

Epoch 29: val_accuracy improved from 0.37222 to 0.40000, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 35ms/step - loss: 0.6614 - accuracy: 0.7926 - val_loss: 2.5832 - val_accuracy: 0.4000

Epoch 30/100

51/51 [==============================] - ETA: 0s - loss: 0.6528 - accuracy: 0.7988

Epoch 30: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 33ms/step - loss: 0.6528 - accuracy: 0.7988 - val_loss: 2.6500 - val_accuracy: 0.3722

Epoch 31/100

51/51 [==============================] - ETA: 0s - loss: 0.5936 - accuracy: 0.8167

Epoch 31: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 33ms/step - loss: 0.5936 - accuracy: 0.8167 - val_loss: 2.7787 - val_accuracy: 0.3889

Epoch 32/100

51/51 [==============================] - ETA: 0s - loss: 0.5690 - accuracy: 0.8235

Epoch 32: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 34ms/step - loss: 0.5690 - accuracy: 0.8235 - val_loss: 2.7991 - val_accuracy: 0.3611

Epoch 33/100

51/51 [==============================] - ETA: 0s - loss: 0.5228 - accuracy: 0.8432

Epoch 33: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 34ms/step - loss: 0.5228 - accuracy: 0.8432 - val_loss: 2.8537 - val_accuracy: 0.3722

Epoch 34/100

51/51 [==============================] - ETA: 0s - loss: 0.5079 - accuracy: 0.8475

Epoch 34: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 34ms/step - loss: 0.5079 - accuracy: 0.8475 - val_loss: 2.7718 - val_accuracy: 0.4000

Epoch 35/100

51/51 [==============================] - ETA: 0s - loss: 0.4884 - accuracy: 0.8438

Epoch 35: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 34ms/step - loss: 0.4884 - accuracy: 0.8438 - val_loss: 2.8398 - val_accuracy: 0.3611

Epoch 36/100

51/51 [==============================] - ETA: 0s - loss: 0.4437 - accuracy: 0.8753

Epoch 36: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 34ms/step - loss: 0.4437 - accuracy: 0.8753 - val_loss: 2.8568 - val_accuracy: 0.3944

Epoch 37/100

51/51 [==============================] - ETA: 0s - loss: 0.4274 - accuracy: 0.8623

Epoch 37: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 34ms/step - loss: 0.4274 - accuracy: 0.8623 - val_loss: 2.8914 - val_accuracy: 0.3889

Epoch 38/100

51/51 [==============================] - ETA: 0s - loss: 0.3903 - accuracy: 0.8765

Epoch 38: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 34ms/step - loss: 0.3903 - accuracy: 0.8765 - val_loss: 2.9627 - val_accuracy: 0.3667

Epoch 39/100

51/51 [==============================] - ETA: 0s - loss: 0.3692 - accuracy: 0.8833

Epoch 39: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 34ms/step - loss: 0.3692 - accuracy: 0.8833 - val_loss: 3.0202 - val_accuracy: 0.3889

Epoch 40/100

51/51 [==============================] - ETA: 0s - loss: 0.3492 - accuracy: 0.8981

Epoch 40: val_accuracy did not improve from 0.40000

51/51 [==============================] - 2s 34ms/step - loss: 0.3492 - accuracy: 0.8981 - val_loss: 3.1077 - val_accuracy: 0.3889

Epoch 41/100

51/51 [==============================] - ETA: 0s - loss: 0.3307 - accuracy: 0.9037

Epoch 41: val_accuracy improved from 0.40000 to 0.41111, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 36ms/step - loss: 0.3307 - accuracy: 0.9037 - val_loss: 3.1079 - val_accuracy: 0.4111

Epoch 42/100

51/51 [==============================] - ETA: 0s - loss: 0.3219 - accuracy: 0.8994

Epoch 42: val_accuracy did not improve from 0.41111

51/51 [==============================] - 2s 36ms/step - loss: 0.3219 - accuracy: 0.8994 - val_loss: 3.0905 - val_accuracy: 0.3944

Epoch 43/100

51/51 [==============================] - ETA: 0s - loss: 0.2907 - accuracy: 0.9179

Epoch 43: val_accuracy did not improve from 0.41111

51/51 [==============================] - 2s 35ms/step - loss: 0.2907 - accuracy: 0.9179 - val_loss: 3.0915 - val_accuracy: 0.4000

Epoch 44/100

51/51 [==============================] - ETA: 0s - loss: 0.3048 - accuracy: 0.9154

Epoch 44: val_accuracy did not improve from 0.41111

51/51 [==============================] - 2s 35ms/step - loss: 0.3048 - accuracy: 0.9154 - val_loss: 3.1775 - val_accuracy: 0.3944

Epoch 45/100

51/51 [==============================] - ETA: 0s - loss: 0.2953 - accuracy: 0.9117

Epoch 45: val_accuracy did not improve from 0.41111

51/51 [==============================] - 2s 36ms/step - loss: 0.2953 - accuracy: 0.9117 - val_loss: 3.1419 - val_accuracy: 0.3889

Epoch 46/100

51/51 [==============================] - ETA: 0s - loss: 0.2644 - accuracy: 0.9340

Epoch 46: val_accuracy did not improve from 0.41111

51/51 [==============================] - 2s 35ms/step - loss: 0.2644 - accuracy: 0.9340 - val_loss: 3.2602 - val_accuracy: 0.3944

Epoch 47/100

51/51 [==============================] - ETA: 0s - loss: 0.2554 - accuracy: 0.9296

Epoch 47: val_accuracy did not improve from 0.41111

51/51 [==============================] - 2s 35ms/step - loss: 0.2554 - accuracy: 0.9296 - val_loss: 3.3103 - val_accuracy: 0.3833

Epoch 48/100

51/51 [==============================] - ETA: 0s - loss: 0.2667 - accuracy: 0.9167

Epoch 48: val_accuracy improved from 0.41111 to 0.42222, saving model to ./model\好莱坞明星识别_best_model.h5

51/51 [==============================] - 2s 37ms/step - loss: 0.2667 - accuracy: 0.9167 - val_loss: 3.1441 - val_accuracy: 0.4222

Epoch 49/100

51/51 [==============================] - ETA: 0s - loss: 0.2386 - accuracy: 0.9302

Epoch 49: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 35ms/step - loss: 0.2386 - accuracy: 0.9302 - val_loss: 3.2786 - val_accuracy: 0.3833

Epoch 50/100

51/51 [==============================] - ETA: 0s - loss: 0.2260 - accuracy: 0.9358

Epoch 50: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 35ms/step - loss: 0.2260 - accuracy: 0.9358 - val_loss: 3.2839 - val_accuracy: 0.3889

Epoch 51/100

51/51 [==============================] - ETA: 0s - loss: 0.2175 - accuracy: 0.9383

Epoch 51: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 35ms/step - loss: 0.2175 - accuracy: 0.9383 - val_loss: 3.3833 - val_accuracy: 0.3833

Epoch 52/100

51/51 [==============================] - ETA: 0s - loss: 0.2225 - accuracy: 0.9315

Epoch 52: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 36ms/step - loss: 0.2225 - accuracy: 0.9315 - val_loss: 3.3515 - val_accuracy: 0.3889

Epoch 53/100

51/51 [==============================] - ETA: 0s - loss: 0.1964 - accuracy: 0.9463

Epoch 53: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 36ms/step - loss: 0.1964 - accuracy: 0.9463 - val_loss: 3.3062 - val_accuracy: 0.4056

Epoch 54/100

51/51 [==============================] - ETA: 0s - loss: 0.2013 - accuracy: 0.9438

Epoch 54: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 37ms/step - loss: 0.2013 - accuracy: 0.9438 - val_loss: 3.3462 - val_accuracy: 0.3889

Epoch 55/100

51/51 [==============================] - ETA: 0s - loss: 0.1928 - accuracy: 0.9488

Epoch 55: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 35ms/step - loss: 0.1928 - accuracy: 0.9488 - val_loss: 3.4539 - val_accuracy: 0.3889

Epoch 56/100

51/51 [==============================] - ETA: 0s - loss: 0.1976 - accuracy: 0.9475

Epoch 56: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 37ms/step - loss: 0.1976 - accuracy: 0.9475 - val_loss: 3.4664 - val_accuracy: 0.3889

Epoch 57/100

51/51 [==============================] - ETA: 0s - loss: 0.1866 - accuracy: 0.9475

Epoch 57: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 37ms/step - loss: 0.1866 - accuracy: 0.9475 - val_loss: 3.4143 - val_accuracy: 0.3833

Epoch 58/100

51/51 [==============================] - ETA: 0s - loss: 0.2077 - accuracy: 0.9407

Epoch 58: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 35ms/step - loss: 0.2077 - accuracy: 0.9407 - val_loss: 3.5146 - val_accuracy: 0.4111

Epoch 59/100

51/51 [==============================] - ETA: 0s - loss: 0.1606 - accuracy: 0.9568

Epoch 59: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 35ms/step - loss: 0.1606 - accuracy: 0.9568 - val_loss: 3.4810 - val_accuracy: 0.3889

Epoch 60/100

51/51 [==============================] - ETA: 0s - loss: 0.1473 - accuracy: 0.9593

Epoch 60: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 36ms/step - loss: 0.1473 - accuracy: 0.9593 - val_loss: 3.4740 - val_accuracy: 0.4167

Epoch 61/100

51/51 [==============================] - ETA: 0s - loss: 0.1676 - accuracy: 0.9562

Epoch 61: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 38ms/step - loss: 0.1676 - accuracy: 0.9562 - val_loss: 3.4758 - val_accuracy: 0.4000

Epoch 62/100

51/51 [==============================] - ETA: 0s - loss: 0.1636 - accuracy: 0.9500

Epoch 62: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 36ms/step - loss: 0.1636 - accuracy: 0.9500 - val_loss: 3.5540 - val_accuracy: 0.3833

Epoch 63/100

51/51 [==============================] - ETA: 0s - loss: 0.1680 - accuracy: 0.9549

Epoch 63: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 36ms/step - loss: 0.1680 - accuracy: 0.9549 - val_loss: 3.5183 - val_accuracy: 0.3944

Epoch 64/100

51/51 [==============================] - ETA: 0s - loss: 0.1541 - accuracy: 0.9568

Epoch 64: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 36ms/step - loss: 0.1541 - accuracy: 0.9568 - val_loss: 3.5818 - val_accuracy: 0.4056

Epoch 65/100

51/51 [==============================] - ETA: 0s - loss: 0.1491 - accuracy: 0.9636

Epoch 65: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 36ms/step - loss: 0.1491 - accuracy: 0.9636 - val_loss: 3.5810 - val_accuracy: 0.3944

Epoch 66/100

51/51 [==============================] - ETA: 0s - loss: 0.1423 - accuracy: 0.9630

Epoch 66: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 36ms/step - loss: 0.1423 - accuracy: 0.9630 - val_loss: 3.4993 - val_accuracy: 0.4056

Epoch 67/100

51/51 [==============================] - ETA: 0s - loss: 0.1450 - accuracy: 0.9605

Epoch 67: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 36ms/step - loss: 0.1450 - accuracy: 0.9605 - val_loss: 3.4847 - val_accuracy: 0.4167

Epoch 68/100

51/51 [==============================] - ETA: 0s - loss: 0.1476 - accuracy: 0.9531

Epoch 68: val_accuracy did not improve from 0.42222

51/51 [==============================] - 2s 37ms/step - loss: 0.1476 - accuracy: 0.9531 - val_loss: 3.5448 - val_accuracy: 0.4111

Epoch 68: early stopping

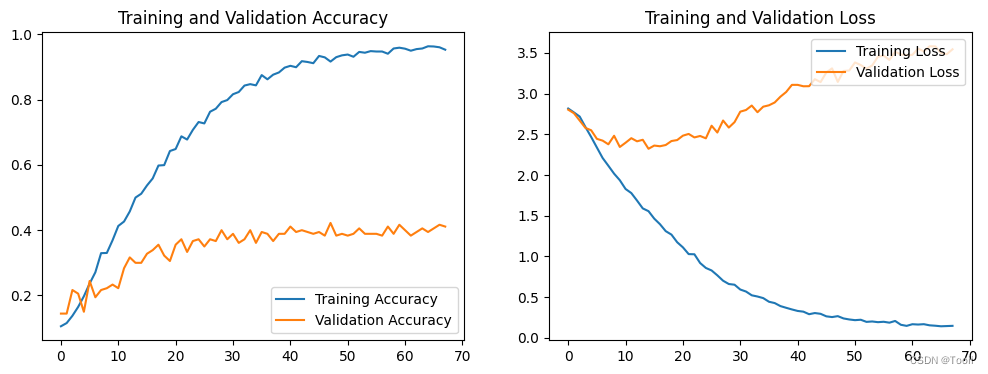

五、模型评估

1. Loss与Accuracy图

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs_range = range(len(loss))

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

2. 指定图片进行预测

# 加载效果最好的模型权重

model.load_weights('./model/好莱坞明星识别_best_model.h5')

from PIL import Image

import numpy as np

import tensorflow as tf

# 加载图像并调整大小

img = Image.open("F:/host/Data/好莱坞明星人脸识别识别数据/Tom Cruise/004_dc64d954.jpg")

img = img.resize((img_width, img_height)) # 调整图像大小以匹配模型输入尺寸

img_array = np.array(img) / 255.0 # 将图像转换为 NumPy 数组并归一化

# 将 NumPy 数组转换为 TensorFlow 张量

img_tensor = tf.convert_to_tensor(img_array, dtype=tf.float32)

img_tensor = tf.expand_dims(img_tensor, axis=0) # 在第一个维度上添加批处理维度

# 进行预测

predictions = model.predict(img_tensor)

predicted_class = np.argmax(predictions)

print("预测结果为:", class_names[predicted_class])

1/1 [==============================] - 0s 22ms/step

预测结果为: Tom Cruise

加载效果最好的模型权重

model.load_weights(‘./model/好莱坞明星识别_best_model.h5’)

```python

from PIL import Image

import numpy as np

import tensorflow as tf

# 加载图像并调整大小

img = Image.open("F:/host/Data/好莱坞明星人脸识别识别数据/Tom Cruise/004_dc64d954.jpg")

img = img.resize((img_width, img_height)) # 调整图像大小以匹配模型输入尺寸

img_array = np.array(img) / 255.0 # 将图像转换为 NumPy 数组并归一化

# 将 NumPy 数组转换为 TensorFlow 张量

img_tensor = tf.convert_to_tensor(img_array, dtype=tf.float32)

img_tensor = tf.expand_dims(img_tensor, axis=0) # 在第一个维度上添加批处理维度

# 进行预测

predictions = model.predict(img_tensor)

predicted_class = np.argmax(predictions)

print("预测结果为:", class_names[predicted_class])

1/1 [==============================] - 0s 22ms/step

预测结果为: Tom Cruise

个人收获

通过本次实践,我对深度学习中的环境配置、数据预处理、模型构建、训练优化以及评估预测等关键步骤有了更深入的理解和实践经验。我学会了如何高效利用GPU资源,掌握了数据集的加载和预处理技巧,以及如何通过调整卷积神经网络的结构和参数来提升模型性能。此外,我也认识到了Dropout和学习率调度在防止过拟合和加快收敛中的重要性,并通过实际操作加深了对早停法等回调函数的运用

2022

2022

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?