Jetson Nano YOLOv5 Environment Setup

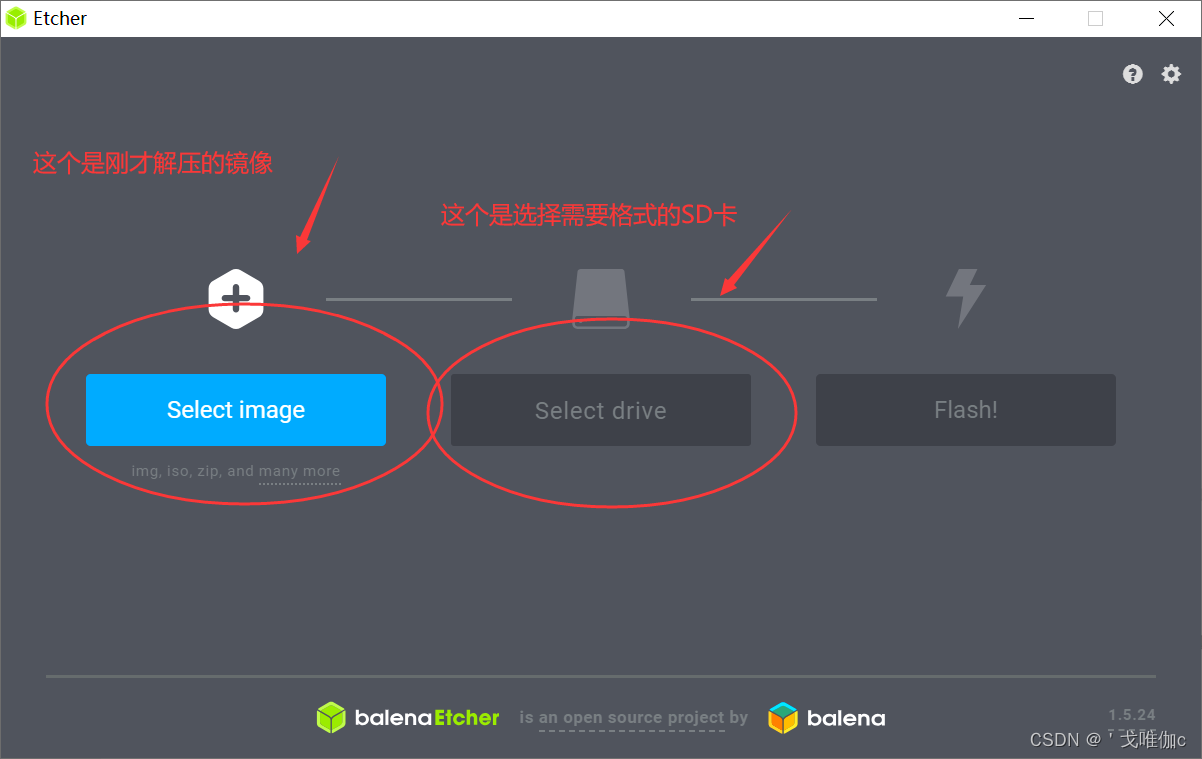

1. Download the Jetson Nano system image and flash it to a TF (microSD) card

Link: Baidu Netdisk (extraction code: qwer)

Flash it twice (about 20 minutes).

Choosing English as the system language is recommended.

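To switch back to Chinese later, first update the package list:

sudo apt-get update

then drag Chinese to the top of the list in the language settings.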

2. Configure CUDA

Run the following command in a terminal:

sudo gedit ~/.bashrc

Append the following lines to the end of the file:

export CUDA_HOME=/usr/local/cuda-10.2

export LD_LIBRARY_PATH=/usr/local/cuda-10.2/lib64:$LD_LIBRARY_PATH

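export PATH=/usr/local/cuda-10.2/bin:$PATH

Save the file, then verify the configuration:

nvcc -V

If the nvcc version information is not printed, close the terminal, open a new one and check again. If it still does not appear, the configuration did not succeed.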
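You can also check the three variables from a script instead of reading ~/.bashrc by eye. The sketch below inspects an environment mapping and reports what is missing. It assumes the `/usr/local/cuda-10.2` prefix used above, so adjust it if your JetPack ships a different CUDA version. `missing_cuda_settings` is a hypothetical helper name.

```python
import os
import shutil

# Assumption: the CUDA prefix written into ~/.bashrc above; change it if yours differs.
CUDA_HOME = "/usr/local/cuda-10.2"

def missing_cuda_settings(env):
    """Return a list describing which CUDA-related variables are missing or wrong."""
    problems = []
    if env.get("CUDA_HOME") != CUDA_HOME:
        problems.append("CUDA_HOME is not " + CUDA_HOME)
    # PATH-style variables are colon-separated on Linux
    if CUDA_HOME + "/bin" not in env.get("PATH", "").split(":"):
        problems.append("PATH does not contain " + CUDA_HOME + "/bin")
    if CUDA_HOME + "/lib64" not in env.get("LD_LIBRARY_PATH", "").split(":"):
        problems.append("LD_LIBRARY_PATH does not contain " + CUDA_HOME + "/lib64")
    return problems

if __name__ == "__main__":
    for problem in missing_cuda_settings(os.environ):
        print("problem:", problem)
    # shutil.which searches PATH the same way the shell does when you type nvcc
    print("nvcc:", shutil.which("nvcc"))
```

Run it in a freshly opened terminal. An empty problem list together with a non-None nvcc path corresponds to a working `nvcc -V`.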

3. Configure conda

Open a terminal and run:

wget https://github.com/Archiconda/build-tools/releases/download/0.2.3/Archiconda3-0.2.3-Linux-aarch64.sh

The download may take several attempts before it succeeds.

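If the download fails halfway, run the following command to raise the stack size limit:

ulimit -s 102400

Once the download has finished, run the installer: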

bash Archiconda3-0.2.3-Linux-aarch64.sh

After installation, add conda to the PATH:

sudo gedit ~/.bashrc

Append the following line at the bottom of the file:

export PATH=~/archiconda3/bin:$PATH

Verify that it works (in a new terminal):

conda -V

4. Create a virtual environment

conda create -n xxx python=3.6 # create a Python 3.6 virtual environment (replace xxx with your environment name)

conda activate xxx # enter the virtual environment

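conda deactivate # leave the virtual environment

The packages installed later only support Python 3.6. A guide to switching package mirrors is given in the Extras section below.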
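The wheels below are built for Python 3.6 (the cp36 tag). Before installing anything, confirm which interpreter and which conda environment are active. This minimal sketch assumes that `conda activate` sets the `CONDA_DEFAULT_ENV` variable, which standard conda does. `python_env_info` is a hypothetical helper name.

```python
import os
import sys

def python_env_info():
    """Summarize the running interpreter and the active conda environment."""
    return {
        "version": "%d.%d" % sys.version_info[:2],
        "prefix": sys.prefix,  # points inside archiconda3/envs/<name> when the env is active
        "conda_env": os.environ.get("CONDA_DEFAULT_ENV"),  # None outside conda
    }

if __name__ == "__main__":
    info = python_env_info()
    print(info)
    if info["version"] != "3.6":
        print("warning: the cp36 wheels used in this guide need Python 3.6")
```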

Install pip and upgrade it to the latest version:

sudo apt-get install python3-pip libopenblas-base libopenmpi-dev

pip3 install --upgrade pip

5. Download torch and torchvision

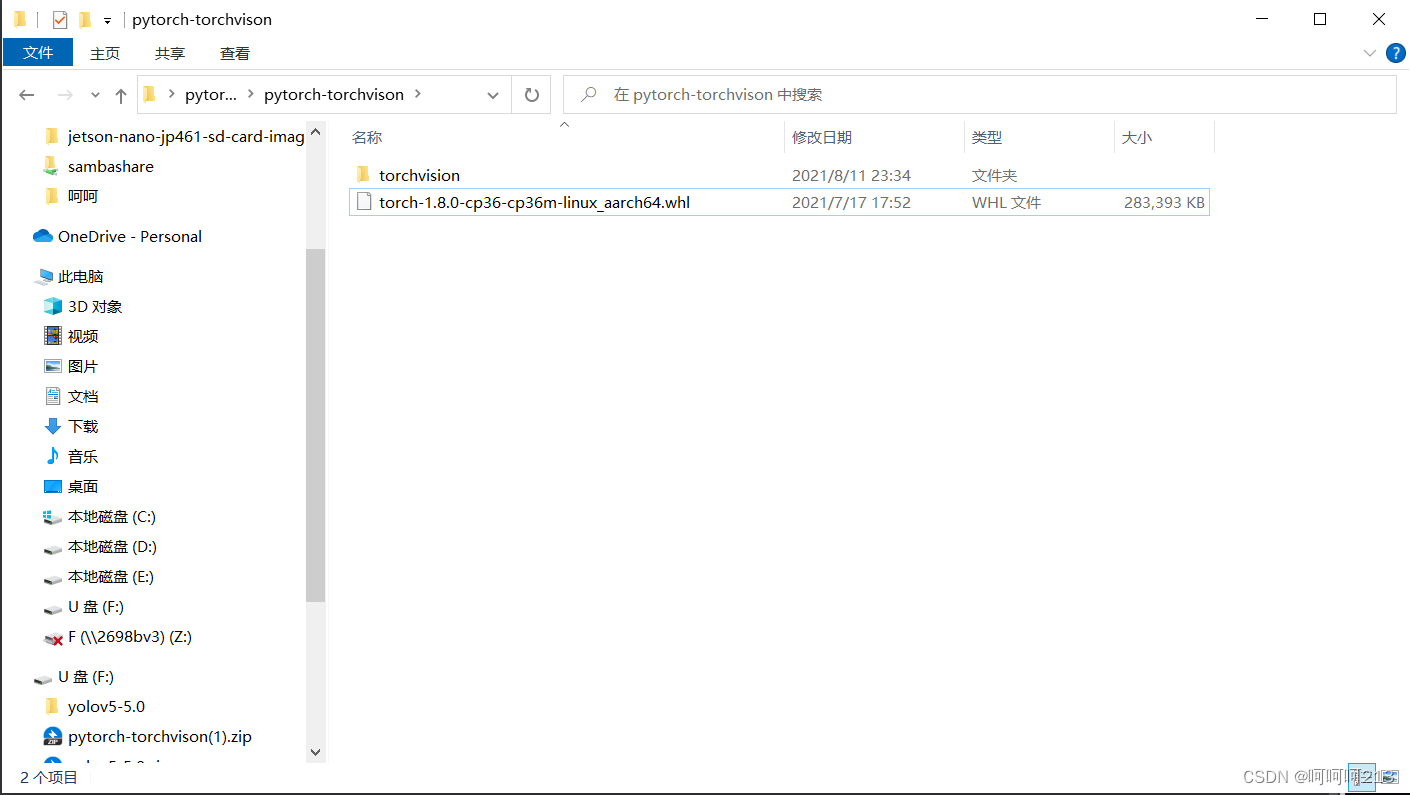

The package below contains torch 1.8.0 and torchvision 0.9.0 for Python 3.6, along with a torch/torchvision version compatibility table.

Link: Baidu Netdisk (extraction code: qwer)

Download the package and extract it to your home directory.
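If you use a different torch wheel, the torchvision version, and the BUILD_VERSION set when building it, must match it. The sketch below reads the version and Python tag out of a wheel filename and looks up the matching torchvision minor series. The pairing table covers only torch 1.6 to 1.10 at minor-version level, so check the exact patch release against the compatibility table. `parse_wheel_name` and `torchvision_series` are hypothetical helpers.

```python
# torch minor version -> torchvision minor version (torch 1.6 to 1.10 only)
TORCH_TO_TORCHVISION = {
    "1.6": "0.7",
    "1.7": "0.8",
    "1.8": "0.9",
    "1.9": "0.10",
    "1.10": "0.11",
}

def parse_wheel_name(filename):
    """Split 'name-version-pytag-abitag-platform.whl' (no build tag) into its parts."""
    stem = filename[:-len(".whl")]
    name, version, py_tag, abi_tag, platform = stem.split("-")
    return {"name": name, "version": version, "python": py_tag,
            "abi": abi_tag, "platform": platform}

def torchvision_series(torch_version):
    """Return the torchvision minor series matching a torch version such as '1.8.0'."""
    major, minor = torch_version.split("+")[0].split(".")[:2]
    key = "%s.%s" % (major, minor)
    if key not in TORCH_TO_TORCHVISION:
        raise ValueError("no pairing known for torch " + torch_version)
    return TORCH_TO_TORCHVISION[key]
```

For `torch-1.8.0-cp36-cp36m-linux_aarch64.whl` this gives Python tag cp36 (CPython 3.6) and torchvision 0.9, consistent with BUILD_VERSION=0.9.0.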

Install torch


On the Jetson Nano, go into this folder.

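Use cd to get there, or open the folder in the file manager and choose "Open in Terminal" from the right-click menu. Important: activate the virtual environment first!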

Run the following to install torch:

sudo apt-get install python3-pip libopenblas-base libopenmpi-dev

pip install torch-1.8.0-cp36-cp36m-linux_aarch64.whl

Verify that the installation succeeded:

python

import torch

print(torch.__version__)

If a version number is printed, the installation succeeded (remember to run exit() to leave Python).

If import torch fails with "Illegal instruction (core dumped)", run the following and verify again:

export OPENBLAS_CORETYPE=ARMV8

Install torchvision

Go into the torchvision folder and run:

export BUILD_VERSION=0.9.0

sudo python setup.py install

If this second command ends with an error, run the following as well:

python setup.py build

python setup.py install

Finally, verify the installation:

python

import torch

import torchvision

print(torch.cuda.is_available()) # if this prints True, the installation works (remember to run exit() to leave Python)
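The checks above can be combined into one script. This sketch is not part of the original steps. It sets OPENBLAS_CORETYPE from inside Python before torch is imported, which should have the same effect as the export command for the "Illegal instruction" case, because OpenBLAS reads the variable when the library is loaded. It also reports missing packages instead of crashing. `check_install` is a hypothetical name.

```python
import importlib
import os

# Must happen before torch (and its OpenBLAS) is loaded; mirrors `export OPENBLAS_CORETYPE=ARMV8`.
os.environ.setdefault("OPENBLAS_CORETYPE", "ARMV8")

def check_install():
    """Report torch/torchvision versions and CUDA availability (None if not installed)."""
    report = {"torch": None, "torchvision": None, "cuda": False}
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return report
    report["torch"] = torch.__version__
    report["cuda"] = bool(torch.cuda.is_available())
    try:
        report["torchvision"] = importlib.import_module("torchvision").__version__
    except ImportError:
        pass  # torch is present but torchvision is not
    return report

if __name__ == "__main__":
    print(check_install())
```

On a correctly set up Nano, run inside the virtual environment, you would expect torch 1.8.0, torchvision 0.9.0 and `cuda` set to True.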

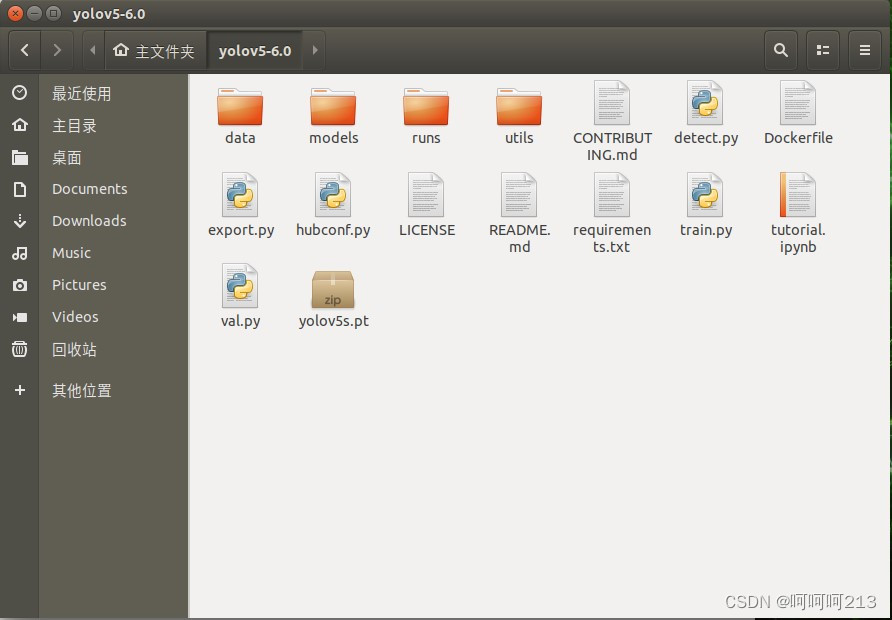

6. Download the YOLOv5 code and install its environment

Do NOT use the v5.0 release! It cannot use the n (nano) models and cannot do real-time camera detection.

The YOLOv5 code and weights can be downloaded from:

https://github.com/ultralytics/yolov5/releases/tag/v7.0

or from the netdisk link below, which contains both yolov5 and tensorrtx:

Link: Baidu Netdisk (extraction code: qwer)

Extract it to your home directory and put the weight files (e.g. yolov5n.pt) into the yolov5 folder as well.

Go into the yolov5 folder and install the requirements:


pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

The step "Building wheel for opencv-python (pyproject.toml)..." takes a long time.

When it finishes, test it (--source 0 uses camera 0):

python3 detect.py --weights yolov5n.pt --source 0

7. Accelerate inference with tensorrtx

1. Download tensorrtx

Download: https://github.com/wang-xinyu/tensorrtx.git

2. Generate the .wts file

Copy yolov5/gen_wts.py from the tensorrtx project into the original yolov5 folder and run:

python3 gen_wts.py -w yolov5n.pt -o yolov5n.wts

3. Build

cd ~/tensorrtx/yolov5/ # adjust the path to your own setup

mkdir build

cd build

Copy the generated yolov5n.wts file into the build directory.
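The .wts file being copied is plain text. As far as we can tell from tensorrtx's gen_wts.py and the loadWeights function it pairs with, the layout is as follows. The first line holds the number of tensors. Each following line holds a tensor name, its element count, and every float32 value as 8 big-endian hex digits. The sketch below writes and reads that layout for toy tensors, which can help when a file fails to load. `format_wts` and `parse_wts` are hypothetical names; the real gen_wts.py takes its tensors from the PyTorch state_dict.

```python
import struct

def format_wts(tensors):
    """Serialize {name: [float, ...]} into the text layout of a .wts file."""
    lines = [str(len(tensors))]
    for name, values in tensors.items():
        # each float32 is written as its 4 raw bytes in big-endian order, hex-encoded
        words = [struct.pack(">f", float(v)).hex() for v in values]
        lines.append(" ".join([name, str(len(values))] + words))
    return "\n".join(lines) + "\n"

def parse_wts(text):
    """Parse the same layout back into {name: [float, ...]}."""
    rows = text.splitlines()
    count = int(rows[0])
    tensors = {}
    for row in rows[1:1 + count]:
        parts = row.split()
        name, size = parts[0], int(parts[1])
        words = parts[2:2 + size]
        if len(words) != size:
            raise ValueError("tensor %s: expected %d values, got %d" % (name, size, len(words)))
        tensors[name] = [struct.unpack(">f", bytes.fromhex(w))[0] for w in words]
    return tensors
```

Parsing the real yolov5n.wts this way is slow in pure Python. It does let you list the tensor names the C++ code looks up, such as model.24.m.0.weight.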

cmake ..

make -j4
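The last argument of the engine-generation command that follows (n) selects the model scale. In tensorrtx it sets the depth multiple gd and the width multiple gw. The get_depth and get_width helpers in yolov5.cpp use these to shrink block repeats and channel counts. The multipliers below are those of the official yolov5n/s/m/l/x configs. This Python port of the two helpers is a sketch for checking layer sizes by hand.

```python
import math

# (depth_multiple gd, width_multiple gw) per model scale
SCALES = {
    "n": (0.33, 0.25),
    "s": (0.33, 0.50),
    "m": (0.67, 0.75),
    "l": (1.00, 1.00),
    "x": (1.33, 1.25),
}

def get_width(x, gw, divisor=8):
    """Channel count scaled by gw, rounded up to a multiple of divisor (as in yolov5.cpp)."""
    scaled = int(x * gw)
    if scaled % divisor == 0:
        return scaled
    return (int(x * gw / divisor) + 1) * divisor

def get_depth(x, gd):
    """Number of repeated blocks scaled by gd, never below 1 (as in yolov5.cpp)."""
    if x == 1:
        return 1
    # C's round() rounds halves away from zero; floor(v + 0.5) matches it for v > 0
    return max(int(math.floor(x * gd + 0.5)), 1)
```

For the n model, the first layer has 16 output channels instead of 64, and the 9-block C3 stages shrink to 3 blocks. The .wts file, the engine and the n argument therefore all have to refer to the same model.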

sudo ./yolov5 -s yolov5n.wts yolov5n.engine n # generate the engine file

4. Install pycuda

pip3 install pycuda

If it fails, install the following and try again:

sudo apt-get install python3-dev

sudo apt install python3-dev python3-pip libatlas-base-dev

Common errors and fixes:

cuda.h error:

export CUDA_HOME=/usr/local/cuda-10.2

export CPATH=${CUDA_HOME}/include:${CPATH}

export LIBRARY_PATH=${CUDA_HOME}/lib64:$LIBRARY_PATH

pyconfig.h not found:

sudo apt install libpython3.8-dev # install the package matching your Python version, e.g. libpython3.6-dev for Python 3.6

export CPLUS_INCLUDE_PATH=/usr/include/python3.x # replace 3.x with your Python version

nvcc not configured:

export PATH=/usr/local/cuda/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Other unknown dependency problems:

sudo apt install python-dev libxml2-dev libxslt-dev

sudo apt install python3-dev

sudo apt install python3.6-dev

5. Create a TensorRT symlink (needed if you use a virtual environment)

sudo ln -s /usr/lib/python3.6/dist-packages/tensorrt* /home/hehe/archiconda3/envs/yolo/lib/python3.6/site-packages # replace hehe and yolo with your user name and environment name

6. Test on images to check the result

python yolov5_trt.py # run detection on the test images and check the result

8. Switch to camera input

Replace the code in yolov5.cpp with the following

(To reach about 50 FPS, change the model input size in yololayer.h from 640 to 320.)
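Why 320 helps: the network's cost grows roughly with the number of input pixels, and a 320x320 input has a quarter of the pixels of a 640x640 one. The price is less detail for small objects. Each frame is first letterboxed to the input size by preprocess_img. The sketch below reproduces that geometry as we understand the tensorrtx implementation: scale by the smaller ratio, center the image, and pad the rest. The function names are hypothetical.

```python
def letterbox_geometry(img_w, img_h, input_w, input_h):
    """Return (new_w, new_h, pad_x, pad_y): the resized image size and its offset
    inside the input_w x input_h canvas, keeping the aspect ratio."""
    r_w = input_w / img_w
    r_h = input_h / img_h
    if r_h > r_w:  # width is the limiting side: pad above and below
        new_w, new_h = input_w, int(r_w * img_h)
        pad_x, pad_y = 0, (input_h - new_h) // 2
    else:          # height is the limiting side: pad left and right
        new_w, new_h = int(r_h * img_w), input_h
        pad_x, pad_y = (input_w - new_w) // 2, 0
    return new_w, new_h, pad_x, pad_y

def pixel_ratio(size_a, size_b):
    """How many times more input pixels a size_a x size_a network processes than size_b."""
    return (size_a * size_a) / (size_b * size_b)
```

At 640, a 1280x720 camera frame occupies 640x360 with 140 padding rows above and below; at 320 it becomes 320x180. get_rect has to undo this scaling to draw boxes on the original frame.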

Lines 12 and 342 were modified.

#include <iostream>
#include <chrono>
#include "cuda_utils.h"
#include "logging.h"
#include "common.hpp"
#include "utils.h"
#include "calibrator.h"

#define USE_FP32  // set USE_INT8 or USE_FP16 or USE_FP32
#define DEVICE 0  // GPU id
#define NMS_THRESH 0.4  // 0.4
#define CONF_THRESH 0.25  // confidence threshold; the default is 0.5, lowered to 0.25 because 0.5 gave poor results
#define BATCH_SIZE 1

// stuff we know about the network and the input/output blobs
static const int INPUT_H = Yolo::INPUT_H;
static const int INPUT_W = Yolo::INPUT_W;
static const int CLASS_NUM = Yolo::CLASS_NUM;
static const int OUTPUT_SIZE = Yolo::MAX_OUTPUT_BBOX_COUNT * sizeof(Yolo::Detection) / sizeof(float) + 1;  // we assume the yololayer outputs no more than MAX_OUTPUT_BBOX_COUNT boxes that conf >= 0.1
const char* INPUT_BLOB_NAME = "data";
const char* OUTPUT_BLOB_NAME = "prob";
static Logger gLogger;

// COCO class names, indexed by class_id (string literals must be const char* in C++11)
const char* my_classes[] = { "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
    "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
    "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
    "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
    "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
    "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
    "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
    "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
    "hair drier", "toothbrush" };

static int get_width(int x, float gw, int divisor = 8) {
    //return math.ceil(x / divisor) * divisor
    if (int(x * gw) % divisor == 0) {
        return int(x * gw);
    }
    return (int(x * gw / divisor) + 1) * divisor;
}

static int get_depth(int x, float gd) {
    if (x == 1) {
        return 1;
    } else {
        return round(x * gd) > 1 ? round(x * gd) : 1;
    }
}

ICudaEngine* build_engine(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt, float& gd, float& gw, std::string& wts_name) {
    INetworkDefinition* network = builder->createNetworkV2(0U);

    // Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME
    ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{ 3, INPUT_H, INPUT_W });
    assert(data);

    std::map<std::string, Weights> weightMap = loadWeights(wts_name);

    /* ------ yolov5 backbone------ */
    auto focus0 = focus(network, weightMap, *data, 3, get_width(64, gw), 3, "model.0");
    auto conv1 = convBlock(network, weightMap, *focus0->getOutput(0), get_width(128, gw), 3, 2, 1, "model.1");
    auto bottleneck_CSP2 = C3(network, weightMap, *conv1->getOutput(0), get_width(128, gw), get_width(128, gw), get_depth(3, gd), true, 1, 0.5, "model.2");
    auto conv3 = convBlock(network, weightMap, *bottleneck_CSP2->getOutput(0), get_width(256, gw), 3, 2, 1, "model.3");
    auto bottleneck_csp4 = C3(network, weightMap, *conv3->getOutput(0), get_width(256, gw), get_width(256, gw), get_depth(9, gd), true, 1, 0.5, "model.4");
    auto conv5 = convBlock(network, weightMap, *bottleneck_csp4->getOutput(0), get_width(512, gw), 3, 2, 1, "model.5");
    auto bottleneck_csp6 = C3(network, weightMap, *conv5->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(9, gd), true, 1, 0.5, "model.6");
    auto conv7 = convBlock(network, weightMap, *bottleneck_csp6->getOutput(0), get_width(1024, gw), 3, 2, 1, "model.7");
    auto spp8 = SPP(network, weightMap, *conv7->getOutput(0), get_width(1024, gw), get_width(1024, gw), 5, 9, 13, "model.8");

    /* ------ yolov5 head ------ */
    auto bottleneck_csp9 = C3(network, weightMap, *spp8->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.9");
    auto conv10 = convBlock(network, weightMap, *bottleneck_csp9->getOutput(0), get_width(512, gw), 1, 1, 1, "model.10");

    auto upsample11 = network->addResize(*conv10->getOutput(0));
    assert(upsample11);
    upsample11->setResizeMode(ResizeMode::kNEAREST);
    upsample11->setOutputDimensions(bottleneck_csp6->getOutput(0)->getDimensions());

    ITensor* inputTensors12[] = { upsample11->getOutput(0), bottleneck_csp6->getOutput(0) };
    auto cat12 = network->addConcatenation(inputTensors12, 2);
    auto bottleneck_csp13 = C3(network, weightMap, *cat12->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.13");
    auto conv14 = convBlock(network, weightMap, *bottleneck_csp13->getOutput(0), get_width(256, gw), 1, 1, 1, "model.14");

    auto upsample15 = network->addResize(*conv14->getOutput(0));
    assert(upsample15);
    upsample15->setResizeMode(ResizeMode::kNEAREST);
    upsample15->setOutputDimensions(bottleneck_csp4->getOutput(0)->getDimensions());

    ITensor* inputTensors16[] = { upsample15->getOutput(0), bottleneck_csp4->getOutput(0) };
    auto cat16 = network->addConcatenation(inputTensors16, 2);
    auto bottleneck_csp17 = C3(network, weightMap, *cat16->getOutput(0), get_width(512, gw), get_width(256, gw), get_depth(3, gd), false, 1, 0.5, "model.17");

    // yolo layer 0
    IConvolutionLayer* det0 = network->addConvolutionNd(*bottleneck_csp17->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.0.weight"], weightMap["model.24.m.0.bias"]);
    auto conv18 = convBlock(network, weightMap, *bottleneck_csp17->getOutput(0), get_width(256, gw), 3, 2, 1, "model.18");
    ITensor* inputTensors19[] = { conv18->getOutput(0), conv14->getOutput(0) };
    auto cat19 = network->addConcatenation(inputTensors19, 2);
    auto bottleneck_csp20 = C3(network, weightMap, *cat19->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.20");
    //yolo layer 1
    IConvolutionLayer* det1 = network->addConvolutionNd(*bottleneck_csp20->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.1.weight"], weightMap["model.24.m.1.bias"]);
    auto conv21 = convBlock(network, weightMap, *bottleneck_csp20->getOutput(0), get_width(512, gw), 3, 2, 1, "model.21");
    ITensor* inputTensors22[] = { conv21->getOutput(0), conv10->getOutput(0) };
    auto cat22 = network->addConcatenation(inputTensors22, 2);
    auto bottleneck_csp23 = C3(network, weightMap, *cat22->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.23");
    IConvolutionLayer* det2 = network->addConvolutionNd(*bottleneck_csp23->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.24.m.2.weight"], weightMap["model.24.m.2.bias"]);

    auto yolo = addYoLoLayer(network, weightMap, "model.24", std::vector<IConvolutionLayer*>{det0, det1, det2});
    yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);
    network->markOutput(*yolo->getOutput(0));

    // Build engine
    builder->setMaxBatchSize(maxBatchSize);
    config->setMaxWorkspaceSize(16 * (1 << 20));  // 16MB
#if defined(USE_FP16)
    config->setFlag(BuilderFlag::kFP16);
#elif defined(USE_INT8)
    std::cout << "Your platform support int8: " << (builder->platformHasFastInt8() ? "true" : "false") << std::endl;
    assert(builder->platformHasFastInt8());
    config->setFlag(BuilderFlag::kINT8);
    Int8EntropyCalibrator2* calibrator = new Int8EntropyCalibrator2(1, INPUT_W, INPUT_H, "./coco_calib/", "int8calib.table", INPUT_BLOB_NAME);
    config->setInt8Calibrator(calibrator);
#endif

    std::cout << "Building engine, please wait for a while..." << std::endl;
    ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);
    std::cout << "Build engine successfully!" << std::endl;

    // Don't need the network any more
    network->destroy();

    // Release host memory
    for (auto& mem : weightMap)
    {
        free((void*)(mem.second.values));
    }

    return engine;
}

ICudaEngine* build_engine_p6(unsigned int maxBatchSize, IBuilder* builder, IBuilderConfig* config, DataType dt, float& gd, float& gw, std::string& wts_name) {
    INetworkDefinition* network = builder->createNetworkV2(0U);

    // Create input tensor of shape {3, INPUT_H, INPUT_W} with name INPUT_BLOB_NAME
    ITensor* data = network->addInput(INPUT_BLOB_NAME, dt, Dims3{ 3, INPUT_H, INPUT_W });
    assert(data);

    std::map<std::string, Weights> weightMap = loadWeights(wts_name);

    /* ------ yolov5 backbone------ */
    auto focus0 = focus(network, weightMap, *data, 3, get_width(64, gw), 3, "model.0");
    auto conv1 = convBlock(network, weightMap, *focus0->getOutput(0), get_width(128, gw), 3, 2, 1, "model.1");
    auto c3_2 = C3(network, weightMap, *conv1->getOutput(0), get_width(128, gw), get_width(128, gw), get_depth(3, gd), true, 1, 0.5, "model.2");
    auto conv3 = convBlock(network, weightMap, *c3_2->getOutput(0), get_width(256, gw), 3, 2, 1, "model.3");
    auto c3_4 = C3(network, weightMap, *conv3->getOutput(0), get_width(256, gw), get_width(256, gw), get_depth(9, gd), true, 1, 0.5, "model.4");
    auto conv5 = convBlock(network, weightMap, *c3_4->getOutput(0), get_width(512, gw), 3, 2, 1, "model.5");
    auto c3_6 = C3(network, weightMap, *conv5->getOutput(0), get_width(512, gw), get_width(512, gw), get_depth(9, gd), true, 1, 0.5, "model.6");
    auto conv7 = convBlock(network, weightMap, *c3_6->getOutput(0), get_width(768, gw), 3, 2, 1, "model.7");
    auto c3_8 = C3(network, weightMap, *conv7->getOutput(0), get_width(768, gw), get_width(768, gw), get_depth(3, gd), true, 1, 0.5, "model.8");
    auto conv9 = convBlock(network, weightMap, *c3_8->getOutput(0), get_width(1024, gw), 3, 2, 1, "model.9");
    auto spp10 = SPP(network, weightMap, *conv9->getOutput(0), get_width(1024, gw), get_width(1024, gw), 3, 5, 7, "model.10");
    auto c3_11 = C3(network, weightMap, *spp10->getOutput(0), get_width(1024, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.11");

    /* ------ yolov5 head ------ */
    auto conv12 = convBlock(network, weightMap, *c3_11->getOutput(0), get_width(768, gw), 1, 1, 1, "model.12");
    auto upsample13 = network->addResize(*conv12->getOutput(0));
    assert(upsample13);
    upsample13->setResizeMode(ResizeMode::kNEAREST);
    upsample13->setOutputDimensions(c3_8->getOutput(0)->getDimensions());
    ITensor* inputTensors14[] = { upsample13->getOutput(0), c3_8->getOutput(0) };
    auto cat14 = network->addConcatenation(inputTensors14, 2);
    auto c3_15 = C3(network, weightMap, *cat14->getOutput(0), get_width(1536, gw), get_width(768, gw), get_depth(3, gd), false, 1, 0.5, "model.15");

    auto conv16 = convBlock(network, weightMap, *c3_15->getOutput(0), get_width(512, gw), 1, 1, 1, "model.16");
    auto upsample17 = network->addResize(*conv16->getOutput(0));
    assert(upsample17);
    upsample17->setResizeMode(ResizeMode::kNEAREST);
    upsample17->setOutputDimensions(c3_6->getOutput(0)->getDimensions());
    ITensor* inputTensors18[] = { upsample17->getOutput(0), c3_6->getOutput(0) };
    auto cat18 = network->addConcatenation(inputTensors18, 2);
    auto c3_19 = C3(network, weightMap, *cat18->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.19");

    auto conv20 = convBlock(network, weightMap, *c3_19->getOutput(0), get_width(256, gw), 1, 1, 1, "model.20");
    auto upsample21 = network->addResize(*conv20->getOutput(0));
    assert(upsample21);
    upsample21->setResizeMode(ResizeMode::kNEAREST);
    upsample21->setOutputDimensions(c3_4->getOutput(0)->getDimensions());
    ITensor* inputTensors21[] = { upsample21->getOutput(0), c3_4->getOutput(0) };
    auto cat22 = network->addConcatenation(inputTensors21, 2);
    auto c3_23 = C3(network, weightMap, *cat22->getOutput(0), get_width(512, gw), get_width(256, gw), get_depth(3, gd), false, 1, 0.5, "model.23");

    auto conv24 = convBlock(network, weightMap, *c3_23->getOutput(0), get_width(256, gw), 3, 2, 1, "model.24");
    ITensor* inputTensors25[] = { conv24->getOutput(0), conv20->getOutput(0) };
    auto cat25 = network->addConcatenation(inputTensors25, 2);
    auto c3_26 = C3(network, weightMap, *cat25->getOutput(0), get_width(1024, gw), get_width(512, gw), get_depth(3, gd), false, 1, 0.5, "model.26");

    auto conv27 = convBlock(network, weightMap, *c3_26->getOutput(0), get_width(512, gw), 3, 2, 1, "model.27");
    ITensor* inputTensors28[] = { conv27->getOutput(0), conv16->getOutput(0) };
    auto cat28 = network->addConcatenation(inputTensors28, 2);
    auto c3_29 = C3(network, weightMap, *cat28->getOutput(0), get_width(1536, gw), get_width(768, gw), get_depth(3, gd), false, 1, 0.5, "model.29");

    auto conv30 = convBlock(network, weightMap, *c3_29->getOutput(0), get_width(768, gw), 3, 2, 1, "model.30");
    ITensor* inputTensors31[] = { conv30->getOutput(0), conv12->getOutput(0) };
    auto cat31 = network->addConcatenation(inputTensors31, 2);
    auto c3_32 = C3(network, weightMap, *cat31->getOutput(0), get_width(2048, gw), get_width(1024, gw), get_depth(3, gd), false, 1, 0.5, "model.32");

    /* ------ detect ------ */
    IConvolutionLayer* det0 = network->addConvolutionNd(*c3_23->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.0.weight"], weightMap["model.33.m.0.bias"]);
    IConvolutionLayer* det1 = network->addConvolutionNd(*c3_26->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.1.weight"], weightMap["model.33.m.1.bias"]);
    IConvolutionLayer* det2 = network->addConvolutionNd(*c3_29->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.2.weight"], weightMap["model.33.m.2.bias"]);
    IConvolutionLayer* det3 = network->addConvolutionNd(*c3_32->getOutput(0), 3 * (Yolo::CLASS_NUM + 5), DimsHW{ 1, 1 }, weightMap["model.33.m.3.weight"], weightMap["model.33.m.3.bias"]);

    auto yolo = addYoLoLayer(network, weightMap, "model.33", std::vector<IConvolutionLayer*>{det0, det1, det2, det3});
    yolo->getOutput(0)->setName(OUTPUT_BLOB_NAME);
    network->markOutput(*yolo->getOutput(0));

    // Build engine
    builder->setMaxBatchSize(maxBatchSize);
    config->setMaxWorkspaceSize(16 * (1 << 20));  // 16MB
#if defined(USE_FP16)
    config->setFlag(BuilderFlag::kFP16);
#elif defined(USE_INT8)
    std::cout << "Your platform support int8: " << (builder->platformHasFastInt8() ? "true" : "false") << std::endl;
    assert(builder->platformHasFastInt8());
    config->setFlag(BuilderFlag::kINT8);
    Int8EntropyCalibrator2* calibrator = new Int8EntropyCalibrator2(1, INPUT_W, INPUT_H, "./coco_calib/", "int8calib.table", INPUT_BLOB_NAME);
    config->setInt8Calibrator(calibrator);
#endif

    std::cout << "Building engine, please wait for a while..." << std::endl;
    ICudaEngine* engine = builder->buildEngineWithConfig(*network, *config);
    std::cout << "Build engine successfully!" << std::endl;

    // Don't need the network any more
    network->destroy();

    // Release host memory
    for (auto& mem : weightMap)
    {
        free((void*)(mem.second.values));
    }

    return engine;
}

void APIToModel(unsigned int maxBatchSize, IHostMemory** modelStream, float& gd, float& gw, std::string& wts_name) {
    // Create builder
    IBuilder* builder = createInferBuilder(gLogger);
    IBuilderConfig* config = builder->createBuilderConfig();

    // Create model to populate the network, then set the outputs and create an engine
    ICudaEngine* engine = build_engine(maxBatchSize, builder, config, DataType::kFLOAT, gd, gw, wts_name);
    assert(engine != nullptr);

    // Serialize the engine
    (*modelStream) = engine->serialize();

    // Close everything down
    engine->destroy();
    builder->destroy();
    config->destroy();
}

void doInference(IExecutionContext& context, cudaStream_t& stream, void** buffers, float* input, float* output, int batchSize) {
    // DMA input batch data to device, infer on the batch asynchronously, and DMA output back to host
    CUDA_CHECK(cudaMemcpyAsync(buffers[0], input, batchSize * 3 * INPUT_H * INPUT_W * sizeof(float), cudaMemcpyHostToDevice, stream));
    context.enqueue(batchSize, buffers, stream, nullptr);
    CUDA_CHECK(cudaMemcpyAsync(output, buffers[1], batchSize * OUTPUT_SIZE * sizeof(float), cudaMemcpyDeviceToHost, stream));
    cudaStreamSynchronize(stream);
}

bool parse_args(int argc, char** argv, std::string& engine) {
    if (argc < 3) return false;
    if (std::string(argv[1]) == "-v" && argc == 3) {
        engine = std::string(argv[2]);
    } else {
        return false;
    }
    return true;
}

int main(int argc, char** argv) {

cudaSetDevice(DEVICE);

//std::string wts_name = "";

std::string engine_name = "";

//float gd = 0.0f, gw = 0.0f;

//std::string img_dir;

if (!parse_args(argc, argv, engine_name)) {

std::cerr << "arguments not right!" << std::endl;

std::cerr << "./yolov5 -v [.engine] // run inference with camera" << std::endl;

return -1;

}

std::ifstream file(engine_name, std::ios::binary);

if (!file.good()) {

std::cerr << " read " << engine_name << " error! " << std::endl;

return -1;

}

char* trtModelStream{ nullptr };

size_t size = 0;

file.seekg(0, file.end);

size = file.tellg();

file.seekg(0, file.beg);

trtModelStream = new char[size];

assert(trtModelStream);

file.read(trtModelStream, size);

file.close();

// prepare input data ---------------------------

static float data[BATCH_SIZE * 3 * INPUT_H * INPUT_W];

//for (int i = 0; i < 3 * INPUT_H * INPUT_W; i++)

// data[i] = 1.0;

static float prob[BATCH_SIZE * OUTPUT_SIZE];

IRuntime* runtime = createInferRuntime(gLogger);

assert(runtime != nullptr);

ICudaEngine* engine = runtime->deserializeCudaEngine(trtModelStream, size);

assert(engine != nullptr);

IExecutionContext* context = engine->createExecutionContext();

assert(context != nullptr);

delete[] trtModelStream;

assert(engine->getNbBindings() == 2);

void* buffers[2];

// In order to bind the buffers, we need to know the names of the input and output tensors.

// Note that indices are guaranteed to be less than IEngine::getNbBindings()

const int inputIndex = engine->getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine->getBindingIndex(OUTPUT_BLOB_NAME);

assert(inputIndex == 0);

assert(outputIndex == 1);

// Create GPU buffers on device

CUDA_CHECK(cudaMalloc(&buffers[inputIndex], BATCH_SIZE * 3 * INPUT_H * INPUT_W * sizeof(float)));

CUDA_CHECK(cudaMalloc(&buffers[outputIndex], BATCH_SIZE * OUTPUT_SIZE * sizeof(float)));

// Create stream

cudaStream_t stream;

CUDA_CHECK(cudaStreamCreate(&stream));

cv::VideoCapture capture("/home/cao-yolox/yolov5/tensorrtx-master/yolov5/samples/1.mp4"); #修改为自己要检测的视频或者图片,注意要写全路径,如果调用摄像头,则括号内的参数设为0,注意引号要去掉。

//cv::VideoCapture capture("../overpass.mp4");

//int fourcc = cv::VideoWriter::fourcc('M','J','P','G');

//capture.set(cv::CAP_PROP_FOURCC, fourcc);

if (!capture.isOpened()) {

std::cout << "Error opening video stream or file" << std::endl;

return -1;

}

int key;

int fcount = 0;

while (1)

{

cv::Mat frame;

capture >> frame;

if (frame.empty())

{

std::cout << "Fail to read image from camera!" << std::endl;

break;

}

fcount++;

//if (fcount < BATCH_SIZE && f + 1 != (int)file_names.size()) continue;

for (int b = 0; b < fcount; b++) {

//cv::Mat img = cv::imread(img_dir + "/" + file_names[f - fcount + 1 + b]);

cv::Mat img = frame;

if (img.empty()) continue;

cv::Mat pr_img = preprocess_img(img, INPUT_W, INPUT_H); // letterbox BGR to RGB

int i = 0;

for (int row = 0; row < INPUT_H; ++row) {

uchar* uc_pixel = pr_img.data + row * pr_img.step;

for (int col = 0; col < INPUT_W; ++col) {

data[b * 3 * INPUT_H * INPUT_W + i] = (float)uc_pixel[2] / 255.0;

data[b * 3 * INPUT_H * INPUT_W + i + INPUT_H * INPUT_W] = (float)uc_pixel[1] / 255.0;

data[b * 3 * INPUT_H * INPUT_W + i + 2 * INPUT_H * INPUT_W] = (float)uc_pixel[0] / 255.0;

uc_pixel += 3;

++i;

}

}

}

// Run inference

auto start = std::chrono::system_clock::now();

doInference(*context, stream, buffers, data, prob, BATCH_SIZE);

auto end = std::chrono::system_clock::now();

//std::cout << std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count() << "ms" << std::endl;

int fps = 1000.0 / std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count();

std::vector<std::vector<Yolo::Detection>> batch_res(fcount);

for (int b = 0; b < fcount; b++) {

auto& res = batch_res[b];

nms(res, &prob[b * OUTPUT_SIZE], CONF_THRESH, NMS_THRESH);

}

for (int b = 0; b < fcount; b++) {

auto& res = batch_res[b];

//std::cout << res.size() << std::endl;

//cv::Mat img = cv::imread(img_dir + "/" + file_names[f - fcount + 1 + b]);

for (size_t j = 0; j < res.size(); j++) {

cv::Rect r = get_rect(frame, res[j].bbox);

cv::rectangle(frame, r, cv::Scalar(0x27, 0xC1, 0x36), 2);

std::string label = my_classes[(int)res[j].class_id];

cv::putText(frame, label, cv::Point(r.x, r.y - 1), cv::FONT_HERSHEY_PLAIN, 1.2, cv::Scalar(0xFF, 0xFF, 0xFF), 2);

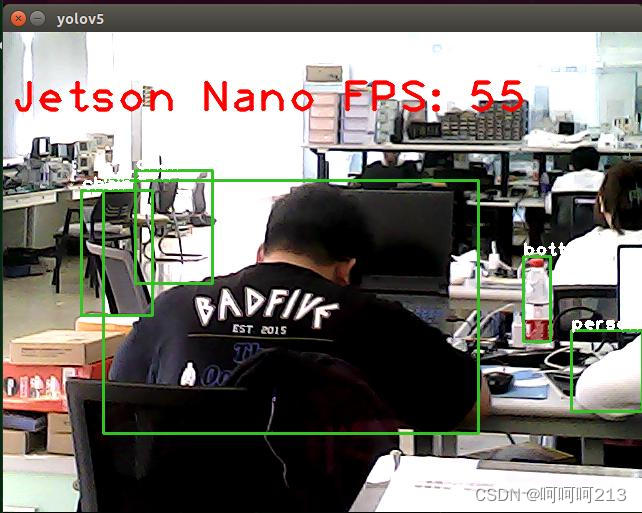

std::string jetson_fps = "Jetson Nano FPS: " + std::to_string(fps);

cv::putText(frame, jetson_fps, cv::Point(11, 80), cv::FONT_HERSHEY_PLAIN, 3, cv::Scalar(0, 0, 255), 2, cv::LINE_AA);

}

//cv::imwrite("_" + file_names[f - fcount + 1 + b], img);

}

cv::imshow("yolov5", frame);

key = cv::waitKey(1);

if (key == 'q') {

break;

}

fcount = 0;

}

capture.release();

// Release stream and buffers

cudaStreamDestroy(stream);

CUDA_CHECK(cudaFree(buffers[inputIndex]));

CUDA_CHECK(cudaFree(buffers[outputIndex]));

// Destroy the engine

context->destroy();

engine->destroy();

runtime->destroy();

return 0;

}到buid下重新make

sudo ./yolov5 -v yolov5n.engine # plug in the camera beforehand
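For each frame, TensorRT returns the detections as one flat float array, and `nms(res, &prob[b * OUTPUT_SIZE], CONF_THRESH, NMS_THRESH)` turns it into boxes. The sketch below mirrors that step in Python, as an illustration rather than the tensorrtx code. It assumes the following:

- prob[0] holds the number of boxes.
- Each box is 6 floats (center x, center y, width, height, confidence, class id), matching Yolo::Detection.
- At most 1000 boxes are stored (MAX_OUTPUT_BBOX_COUNT in yololayer.h). That would make OUTPUT_SIZE = 1000 * 6 + 1 = 6001.
- Suppression is greedy and per class.

```python
DET_SIZE = 6  # cx, cy, w, h, conf, class_id (assumed Yolo::Detection layout)

def decode_output(prob, conf_thresh, max_boxes=1000):
    """Read (box, conf, class_id) tuples from the flat output buffer."""
    count = min(int(prob[0]), max_boxes)
    dets = []
    for i in range(count):
        base = 1 + DET_SIZE * i
        cx, cy, w, h, conf, cls = prob[base:base + DET_SIZE]
        if conf > conf_thresh:  # boxes at or below CONF_THRESH are skipped
            dets.append(((cx, cy, w, h), conf, int(cls)))
    return dets

def iou(a, b):
    """Intersection over union of two (cx, cy, w, h) boxes."""
    ax1, ay1, ax2, ay2 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def nms(dets, nms_thresh):
    """Greedy per-class NMS: keep the most confident box, drop same-class boxes overlapping it."""
    kept = []
    for det in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(k[2] != det[2] or iou(k[0], det[0]) <= nms_thresh for k in kept):
            kept.append(det)
    return kept
```

The surviving boxes are still in letterboxed input coordinates. In the C++ loop, get_rect maps them back to the camera frame before drawing.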

Extras

Switching package mirrors (optional)

Edit the sources file:

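sudo vim /etc/apt/sources.list

Delete the entire contents and replace them with the following (Tsinghua University mirror). Back up the original contents first, for example by copying them into a new text file or with sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak.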

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic main multiverse restricted universe

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-security main multiverse restricted universe

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-updates main multiverse restricted universe

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-backports main multiverse restricted universe

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic main multiverse restricted universe

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-security main multiverse restricted universe

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-updates main multiverse restricted universe

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-backports main multiverse restricted universe

Then update the packages:

sudo apt update

sudo apt-get dist-upgrade

Installing PyCharm

Install the JDK first:

sudo apt-get install openjdk-11-jdk

Verify:

java -version

Then download PyCharm from the official website. Choose the Linux version; the free edition is enough.

File sharing on the same network: installing the Samba service


Install Samba:

sudo apt-get install samba -y

Create the shared folder in your home directory:

mkdir ~/sambashare

Edit the configuration file:

sudo vi /etc/samba/smb.conf

and add:

[sambashare]

comment = Samba on JetsonNano

path = /home/<your_username>/sambashare

read only = no

browsable = yes

Restart the Samba service:

sudo service smbd restart

Set a password for the shared folder:

sudo smbpasswd -a <your_username>

Then, on the Windows PC, type \\ip into the address bar of This PC,

for example \\192.168.1.123.

To find the Jetson's IP address, run ifconfig in a terminal.

USB flash drive (exFAT) support

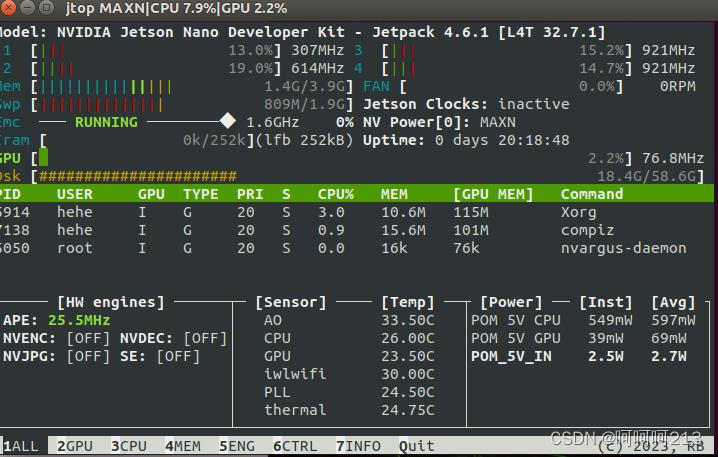

sudo apt-get install exfat-utils

Installing jtop (shows CPU/GPU utilization and more)

sudo -H pip3 install jetson-stats

sudo jtop # run jtop (it may fail the first time; the second time it works). Press q to quit.

Creating swap space

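- Create a file to use as swap space. Use the dd command to create a swap file. For example, the following command creates a 4 GB swap file named /swapfile:

sudo dd if=/dev/zero of=/swapfile bs=1M count=4096

- Set the file permissions. For security, only the superuser should be able to access the swap file:

sudo chmod 600 /swapfile

- Turn the file into swap space with the mkswap command:

sudo mkswap /swapfile

- Enable the swap space:

sudo swapon /swapfile

- Enable it permanently. To activate the swap file automatically at boot, add it to /etc/fstab. Open the file in a text editor:

sudo nano /etc/fstab

append the following line at the end, then save and exit:

/swapfile none swap sw 0 0

- Check the swap space. The following command shows the active swap areas:

sudo swapon --show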
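swapon --show reports the same information the kernel exposes in /proc/swaps, where sizes are given in KiB. To check swap from a script instead, a minimal parser could look like the one below. `parse_proc_swaps` and `swap_total_gib` are hypothetical helpers, and the parser assumes swap file names contain no spaces. On a Jetson Nano the list may also include zram devices set up by the system, not only /swapfile.

```python
def parse_proc_swaps(text):
    """Parse the contents of /proc/swaps into a list of dicts (sizes in KiB)."""
    entries = []
    for line in text.strip().splitlines()[1:]:  # skip the header line
        name, kind, size, used, priority = line.split()
        entries.append({
            "name": name,
            "type": kind,
            "size_kib": int(size),
            "used_kib": int(used),
            "priority": int(priority),
        })
    return entries

def swap_total_gib(entries):
    """Total configured swap in GiB."""
    return sum(e["size_kib"] for e in entries) / (1024 * 1024)
```

On the device, `parse_proc_swaps(open("/proc/swaps").read())` should list /swapfile once the steps above have been applied.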

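References

1. Configuring YOLOv5 on the Jetson Nano and reaching FPS=25, CSDN blog

2. Deploying YOLOv5 on the Jetson Nano with Tensorrtx acceleration (a record of going through the whole process myself), CSDN blog

3. https://github.com/wang-xinyu/tensorrtx/blob/master/yolov5/README.md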
