一句话区分on-policy and off-policy: 看behaviour policy和current policy是不是同一个就OK了!

我这篇文章主要想借着理解on-policy和off-policy的过程来加深对其他RL算法的认识。因为万事万物总是相互联系的,所以在自己探究,琢磨为什么有些算法是on-policy或者off-policy的过程中,对于它们的本质也有了更深的认识。

首先从DDPG说起,今天把DDPG这篇文章看完了,不得不感叹DeepMind不仅学术厉害,文章也写得好啊。读完DDPG,感觉就很容易理解,结论的得出也顺理成章。文章中虽然说,DDPG是基于DPG的,但是行文中也没有看到DPG推理过程的影子,而是另辟蹊径地从一个简单的fitted action-value function出发,轻松地推出了DPG,看完这个推理觉得没毛病。而且联想到为什么Sergey Levine在CS294中讲Advanced Q-learning algorithms的时候提到了DDPG,并且说它其实也可以看作是Q-learning with continuous actions的一个处理方法,就是learn an approximate maximizer,联系DDPG原文的阐述,这样看确实是有道理的!

下面的三行英文和公式就是DDPG原文的推导过程:

The action-value function is used in many reinforcement learning algorithms. It describes the expected return after taking an action

at

a

t

in state

st

s

t

and thereafter following policy

π

π

:

Qπ(st,at)=Eri≥t,si>t∼E,ai>t∼π[Rt|st,at] (1)

Q

π

(

s

t

,

a

t

)

=

E

r

i

≥

t

,

s

i

>

t

∼

E

,

a

i

>

t

∼

π

[

R

t

|

s

t

,

a

t

]

(

1

)

Many approaches in reinforcement learning make use of the recursive relationship known as the Bellman equation:

Qπ(st,at)=Ert,st+1∼E[r(st,at)+γEat+1∼π[Qπ(st+1,at+1)]] (2)

Q

π

(

s

t

,

a

t

)

=

E

r

t

,

s

t

+

1

∼

E

[

r

(

s

t

,

a

t

)

+

γ

E

a

t

+

1

∼

π

[

Q

π

(

s

t

+

1

,

a

t

+

1

)

]

]

(

2

)

If the target policy is deterministic we can describe it as a function

μ:S←A

μ

:

S

←

A

and avoid the inner expectation:

Qπ(st,at)=Ert,st+1∼E[r(st,at)+γQπ(st+1,μ(st+1))] (3)

Q

π

(

s

t

,

a

t

)

=

E

r

t

,

s

t

+

1

∼

E

[

r

(

s

t

,

a

t

)

+

γ

Q

π

(

s

t

+

1

,

μ

(

s

t

+

1

)

)

]

(

3

)

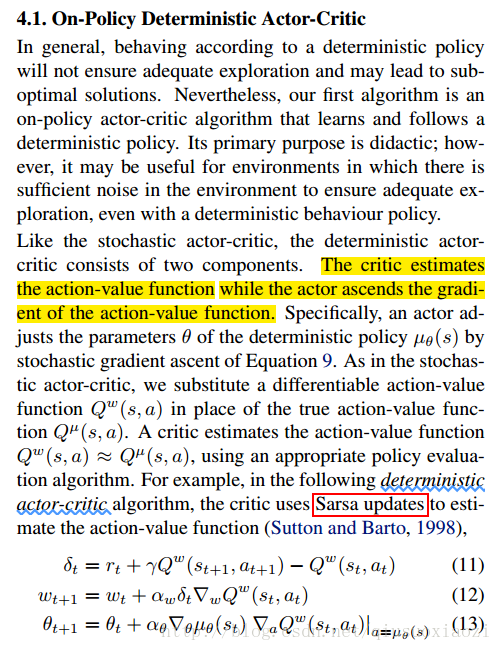

继续说DDPG,看完文章中(1)(2)(3)三个式子的推导,借着deterministic的名头,把原本对action的期望去掉了,正是由于这一招,DPG成功甩掉了on-policy的包袱(因为不需要sample from current policy来对action求期望了)。从此,谁要是说DPG是off-policy的,那么我也不敢反驳了。但是,说DPG是off-policy其实还是不严谨的。因为DPG只能算一个theorem,只能说从这个theorem出发的算法,不再像从PG theorme出发的算法那样必须戴上on-policy的枷锁。事实上,off-policy的算法也可以用on-policy的方式去训练,因为off-policy本来就对behaviour policy不做要求了,那么用current policy自然也可以。这样的例子,比如说Q-learning,如果你要用SARSA那样的方式去训练,那就成on-policy算法了。不得不感叹一句,off-policy真是自由的象征啊。再比如,下面的截图,来自于DPG原文,使用Sarsa updates,强行弄出一个On-Policy Deterministic Actor-Critic,作者说到on-policy的弊端是exploration不够,但是假如environments中的noise已经够多了,那么说不定on-policy也可以用(因为不需要再担心exploration不够的问题了)。

我当时看完DDPG用deterministic甩掉on-policy的包袱之后,一方面觉得推理顺畅,另一方面又在纳闷那么Q-learning呢?毕竟Q-learning也是在fit action-value function,那么Q-learning是怎么甩掉on-policy的包袱的呢?带着这样的问题,我又回看了CS294 fitted Q-iteration部分的PPT。我发现…fitted Q-iteration的推导也没毛病啊(笑哭)。感觉公说公有理婆说婆有理了。但是,后来我发现,其实仔细看,fitted Q-iteraion的算法过程中,它其实也会走到下面这一步:

Qπ(st,at)=Ert,st+1∼E[r(st,at)+γEat+1∼π[Qπ(st+1,at+1)]] (2) Q π ( s t , a t ) = E r t , s t + 1 ∼ E [ r ( s t , a t ) + γ E a t + 1 ∼ π [ Q π ( s t + 1 , a t + 1 ) ] ] ( 2 )

不过,由于fitted Q-iteration的current policy使用的是greedy policy,而不是一个stochastic policy,所以对于 at+1 a t + 1 的期望也用不着求了。某种程度上,fitted Q-iteration其实也可以看作是一个特殊的DPG,它的actor是 argmaxaQ(s,a) a r g m a x a Q ( s , a ) ,可以看作是一个deterministic actor。当然咯,这其实只能在脑海中这么理解,实际去证明的话还是不好证明的(至少我是这么感觉的)。原因是因为,DPG其实应该用在continuous aciton的控制中,不然 μ(st+1) μ ( s t + 1 ) 就不好解释了,如果非要用到discret action中,可能需要一些trick。

525

525

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?