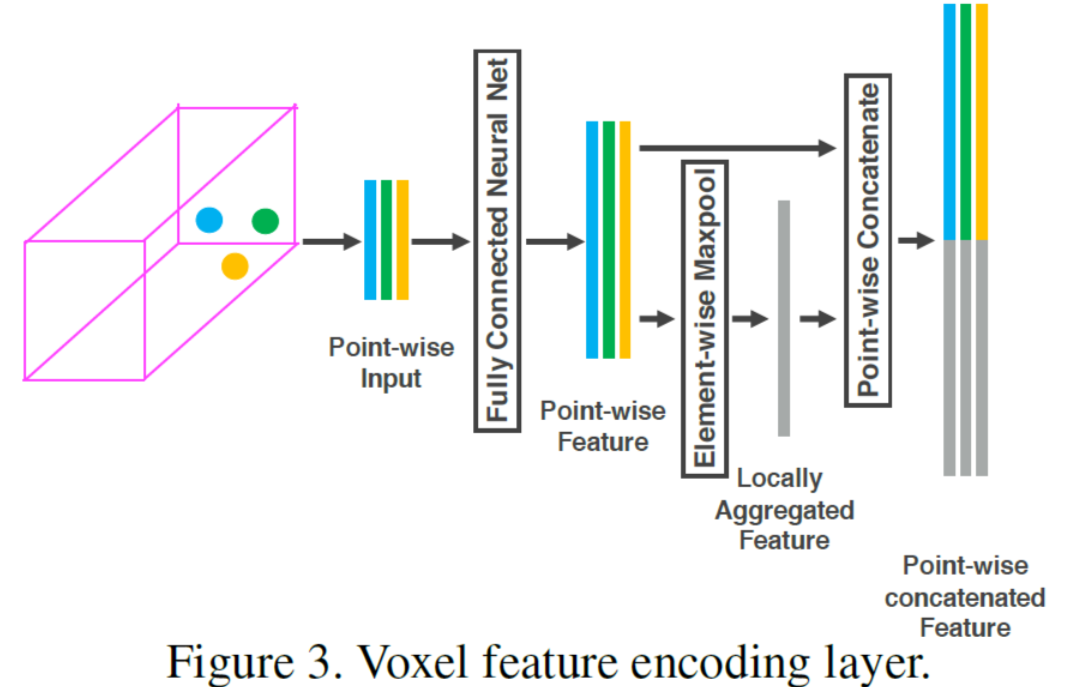

1.VFE

import torch

from .vfe_template import VFETemplate


class MeanVFE(VFETemplate):
    def __init__(self, model_cfg, num_point_features, **kwargs):
        super().__init__(model_cfg=model_cfg)
        self.num_point_features = num_point_features

    def get_output_feature_dim(self):
        return self.num_point_features

    def forward(self, batch_dict, **kwargs):
        """
        Args:
            batch_dict:
                voxels: (num_voxels, max_points_per_voxel, C)
                    num_voxels is the number of voxels, max_points_per_voxel is the
                    maximum number of points one voxel can hold, and C is the number
                    of features (attributes) per point.
                voxel_num_points: optional (num_voxels)
                    Number of valid points in each voxel; its length matches num_voxels.
                    It is marked optional here, but this forward reads it unconditionally.
            **kwargs:

        Returns:
            vfe_features: (num_voxels, C)
        """
        # Fetch the voxel tensor and the per-voxel point counts from batch_dict.
        voxel_features, voxel_num_points = batch_dict['voxels'], batch_dict['voxel_num_points']
        # Sum over dim=1 (the points inside each voxel); keepdim=False drops that
        # dimension, so the result has shape (num_voxels, C).
        points_mean = voxel_features[:, :, :].sum(dim=1, keepdim=False)
        # Normalizer: clamp the point count to at least 1 to avoid division by zero.
        normalizer = torch.clamp_min(voxel_num_points.view(-1, 1), min=1.0).type_as(voxel_features)
        # Mean of the point features (coordinates included) inside each voxel.
        points_mean = points_mean / normalizer
        # Store the processed voxel features back into batch_dict.
        batch_dict['voxel_features'] = points_mean.contiguous()
        return batch_dict
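To make the arithmetic in `MeanVFE.forward` concrete, here is a minimal sketch in plain Python, with nested lists standing in for tensors. The helper name `mean_vfe` and the toy data are made up for illustration, and the sketch assumes that unused point slots in a voxel are filled with zeros, so summing over all slots and dividing by the valid count gives the mean.

```python
def mean_vfe(voxels, voxel_num_points):
    """Average the point features in each voxel (hypothetical helper).

    voxels: list of voxels, each a list of max_points_per_voxel points,
            each point a list of C floats (unused slots assumed to be zeros).
    voxel_num_points: number of valid points in each voxel.
    """
    means = []
    for points, count in zip(voxels, voxel_num_points):
        num_features = len(points[0])
        # Sum over the points axis (dim=1 in the tensor version).
        sums = [sum(p[c] for p in points) for c in range(num_features)]
        # Clamp the divisor to at least 1, mirroring torch.clamp_min(..., min=1.0).
        divisor = max(float(count), 1.0)
        means.append([s / divisor for s in sums])
    return means


voxels = [
    [[1.0, 2.0, 3.0, 0.5], [3.0, 4.0, 5.0, 0.5], [0.0, 0.0, 0.0, 0.0]],  # 2 valid points
    [[0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]],  # empty voxel
]
print(mean_vfe(voxels, [2, 0]))
# [[2.0, 3.0, 4.0, 0.5], [0.0, 0.0, 0.0, 0.0]]
```

The second voxel shows why the clamp matters: with a count of 0 the division would otherwise fail, while clamping to 1 simply returns zeros.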

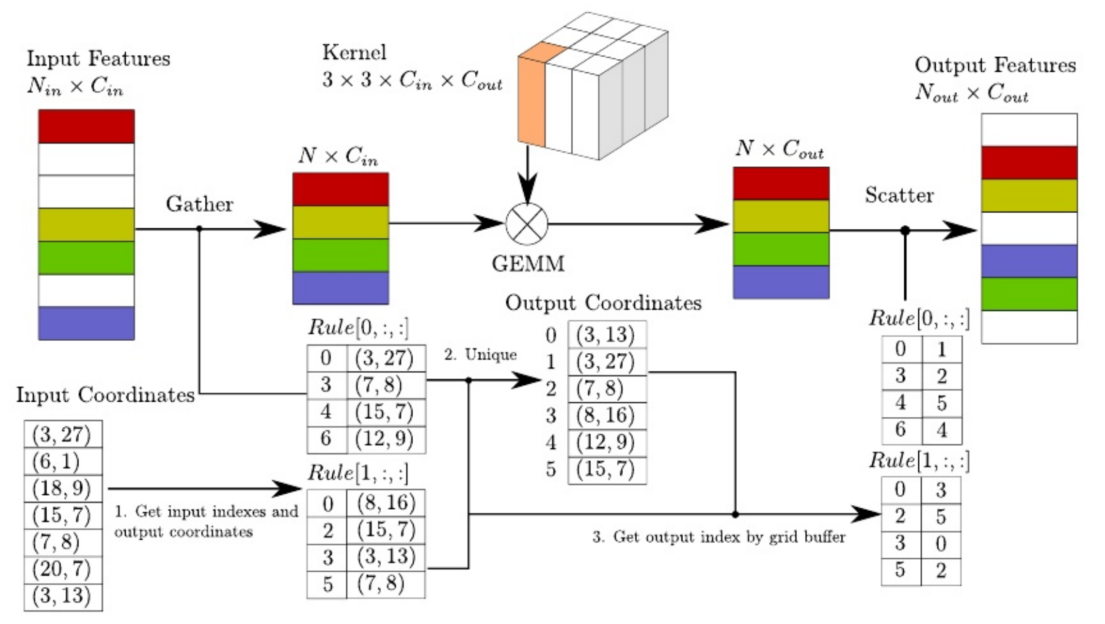

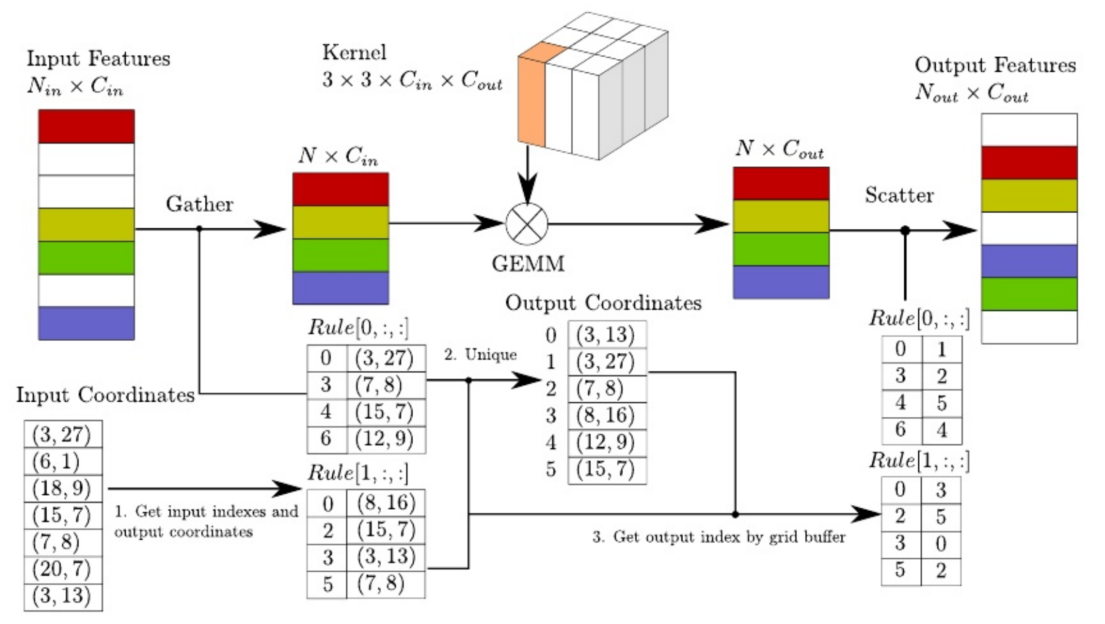

2.Sparse convolution

# Building block for the sparse convolution backbone
from functools import partial

import torch.nn as nn
import spconv.pytorch as spconv  # spconv 2.x; with spconv 1.x use "import spconv"


def post_act_block(in_channels, out_channels, kernel_size, indice_key=None, stride=1, padding=0,
                   conv_type='subm', norm_fn=None):
    # Post-activation block: pick the convolution named by conv_type and wrap it
    # together with a normalization layer and a ReLU.
    if conv_type == 'subm':
        conv = spconv.SubMConv3d(in_channels, out_channels, kernel_size, bias=False, indice_key=indice_key)
    elif conv_type == 'spconv':
        conv = spconv.SparseConv3d(in_channels, out_channels, kernel_size, stride=stride, padding=padding,
                                   bias=False, indice_key=indice_key)
    elif conv_type == 'inverseconv':
        conv = spconv.SparseInverseConv3d(in_channels, out_channels, kernel_size, indice_key=indice_key, bias=False)
    else:
        raise NotImplementedError

    m = spconv.SparseSequential(
        conv,
        norm_fn(out_channels),
        nn.ReLU(),
    )
    return m
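The `'subm'` and `'spconv'` branches differ mainly in which output positions become active. The following is a simplified 1D toy in plain Python, not the spconv API: the function names are hypothetical, and it only tracks active indices, not feature values. A regular sparse convolution activates every output whose kernel window touches an active input, while a submanifold convolution keeps the active set unchanged.

```python
def regular_active_outputs(active_inputs, length, kernel_size=3, stride=1, padding=1):
    """Output positions whose kernel window covers at least one active input."""
    out_length = (length + 2 * padding - kernel_size) // stride + 1
    active = set()
    for out_idx in range(out_length):
        start = out_idx * stride - padding
        window = range(start, start + kernel_size)
        if any(i in active_inputs for i in window):
            active.add(out_idx)
    return sorted(active)


def submanifold_active_outputs(active_inputs):
    """A submanifold convolution keeps exactly the input active set."""
    return sorted(active_inputs)


active = {3, 7}
print(regular_active_outputs(active, length=10))            # [2, 3, 4, 6, 7, 8]
print(submanifold_active_outputs(active))                   # [3, 7]
print(regular_active_outputs(active, length=10, stride=2))  # [1, 2, 3, 4]
```

This is one way to read the backbone layout: strided `'spconv'` blocks change resolution and let the active set grow, and the `'subm'` blocks that follow add depth without making the tensor any denser.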

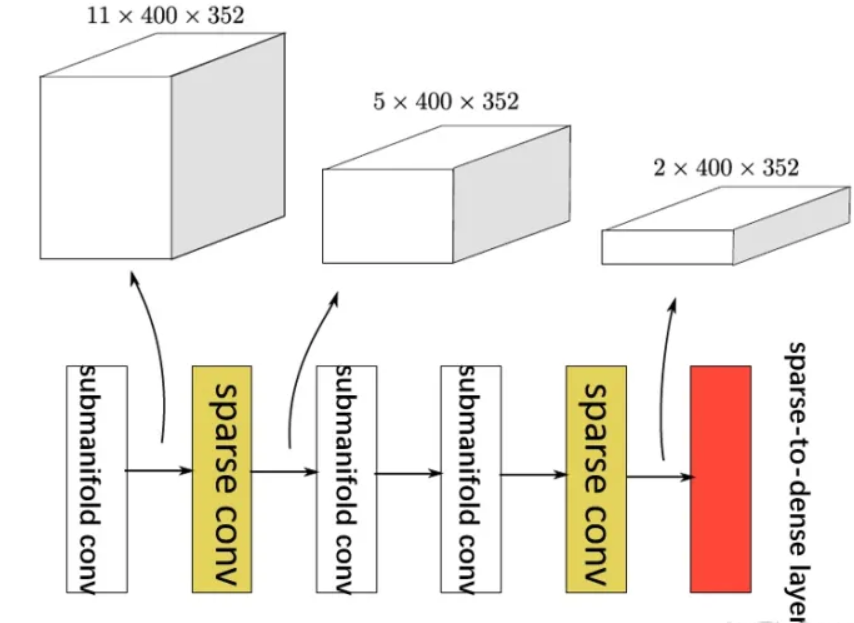

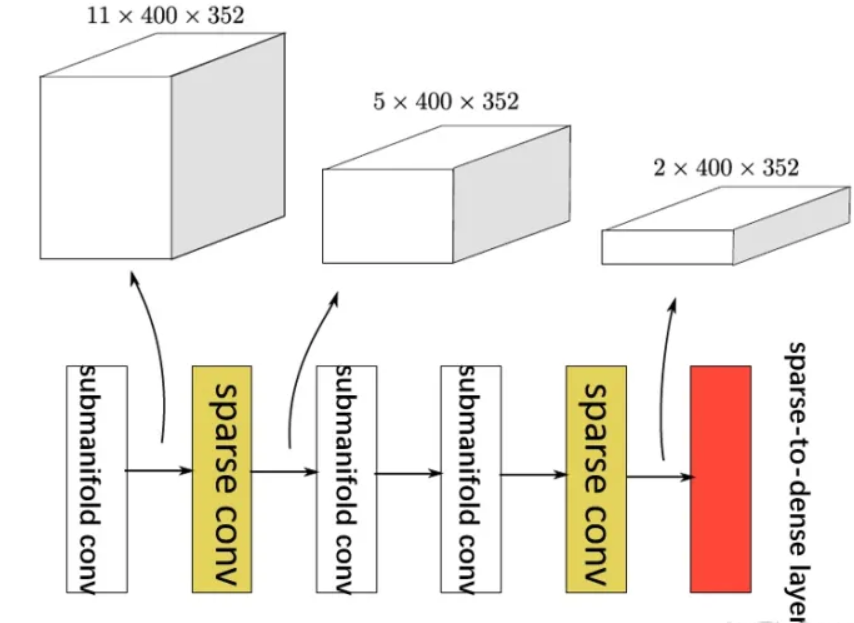

class VoxelBackBone8x(nn.Module):
    def __init__(self, model_cfg, input_channels, grid_size, **kwargs):
        super().__init__()
        self.model_cfg = model_cfg
        # Batch normalization with fixed eps and momentum.
        norm_fn = partial(nn.BatchNorm1d, eps=1e-3, momentum=0.01)

        # Sparse tensors order the spatial dimensions as (z, y, x), so grid_size[::-1]
        # turns (x, y, z) into (z, y, x). grid_size is a NumPy array here, so
        # "+ [1, 0, 0]" is an element-wise add that enlarges z by one (e.g. 40 -> 41);
        # it is not a list concatenation.
        self.sparse_shape = grid_size[::-1] + [1, 0, 0]

        self.conv_input = spconv.SparseSequential(
            # Submanifold sparse convolution layer
            spconv.SubMConv3d(input_channels, 16, 3, padding=1, bias=False, indice_key='subm1'),
            norm_fn(16),  # batch normalization over the 16 output channels
            nn.ReLU(),  # activation
        )
        block = post_act_block

        # conv1: a single submanifold block.
        self.conv1 = spconv.SparseSequential(
            block(16, 16, 3, norm_fn=norm_fn, padding=1, indice_key='subm1'),
        )

        # conv2: one strided sparse convolution followed by two submanifold blocks.
        self.conv2 = spconv.SparseSequential(
            # [1600, 1408, 41] -> [800, 704, 21]
            block(16, 32, 3, norm_fn=norm_fn, stride=2, padding=1, indice_key='spconv2', conv_type='spconv'),
            block(32, 32, 3, norm_fn=norm_fn, padding=1, indice_key='subm2'),
            block(32, 32, 3, norm_fn=norm_fn, padding=1, indice_key='subm2'),
        )

        # conv3: same layout as conv2.
        self.conv3 = spconv.SparseSequential(
            # [800, 704, 21] -> [400, 352, 11]
            block(32, 64, 3, norm_fn=norm_fn, stride=2, padding=1, indice_key='spconv3', conv_type='spconv'),
            block(64, 64, 3, norm_fn=norm_fn, padding=1, indice_key='subm3'),
            block(64, 64, 3, norm_fn=norm_fn, padding=1, indice_key='subm3'),
        )

        # conv4: same layout again, but with no padding along z.
        self.conv4 = spconv.SparseSequential(
            # [400, 352, 11] -> [200, 176, 5]
            block(64, 64, 3, norm_fn=norm_fn, stride=2, padding=(0, 1, 1), indice_key='spconv4', conv_type='spconv'),
            block(64, 64, 3, norm_fn=norm_fn, padding=1, indice_key='subm4'),
            block(64, 64, 3, norm_fn=norm_fn, padding=1, indice_key='subm4'),
        )

        last_pad = 0
        # Read 'last_pad' from the model config; if the key is missing, keep the default 0.
        last_pad = self.model_cfg.get('last_pad', last_pad)
        # conv_out: sparse convolution that downsamples z only, then batch norm and ReLU.
        self.conv_out = spconv.SparseSequential(
            # [200, 176, 5] -> [200, 176, 2]
            spconv.SparseConv3d(64, 128, (3, 1, 1), stride=(2, 1, 1), padding=last_pad,
                                bias=False, indice_key='spconv_down2'),
            norm_fn(128),
            nn.ReLU(),
        )
        self.num_point_features = 128
        self.backbone_channels = {
            'x_conv1': 16,
            'x_conv2': 32,
            'x_conv3': 64,
            'x_conv4': 64
        }

    def forward(self, batch_dict):
        """
        Args:
            batch_dict:
                batch_size: int
                vfe_features: (num_voxels, C)
                voxel_coords: (num_voxels, 4), [batch_idx, z_idx, y_idx, x_idx]
        Returns:
            batch_dict:
                encoded_spconv_tensor: sparse tensor
        """
        voxel_features, voxel_coords = batch_dict['voxel_features'], batch_dict['voxel_coords']
        batch_size = batch_dict['batch_size']
        # Build the sparse input tensor from features, indices, spatial shape and
        # batch size; this is what the sparse convolution layers consume.
        input_sp_tensor = spconv.SparseConvTensor(
            features=voxel_features,
            indices=voxel_coords.int(),
            spatial_shape=self.sparse_shape,
            batch_size=batch_size
        )

        x = self.conv_input(input_sp_tensor)

        x_conv1 = self.conv1(x)
        x_conv2 = self.conv2(x_conv1)  # [1600, 1408, 41] -> [800, 704, 21]
        x_conv3 = self.conv3(x_conv2)  # [800, 704, 21] -> [400, 352, 11]
        x_conv4 = self.conv4(x_conv3)  # [400, 352, 11] -> [200, 176, 5]

        # for detection head
        out = self.conv_out(x_conv4)  # [200, 176, 5] -> [200, 176, 2]

        # Add the outputs to batch_dict.
        batch_dict.update({
            'encoded_spconv_tensor': out,
            'encoded_spconv_tensor_stride': 8
        })
        batch_dict.update({
            'multi_scale_3d_features': {
                'x_conv1': x_conv1,
                'x_conv2': x_conv2,
                'x_conv3': x_conv3,
                'x_conv4': x_conv4,
            }
        })
        batch_dict.update({
            'multi_scale_3d_strides': {
                'x_conv1': 1,
                'x_conv2': 2,
                'x_conv3': 4,
                'x_conv4': 8,
            }
        })

        return batch_dict
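The shape comments in the backbone can be checked with the usual convolution output-size formula, floor((n + 2p - k) / s) + 1, applied per axis. The sketch below is plain Python. The point cloud range and voxel size are assumed KITTI-style values that the text above does not state; they were chosen because they reproduce the 1408 x 1600 x 41 grid in the comments. `last_pad` is taken as its default of 0. Shapes are printed in (z, y, x) order, the same order as `sparse_shape`.

```python
def conv_out_size(size, kernel, stride, padding):
    """Standard convolution output size along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumed KITTI-style settings (not stated in the text above).
point_cloud_range = [0.0, -40.0, -3.0, 70.4, 40.0, 1.0]  # x/y/z min, then x/y/z max
voxel_size = [0.05, 0.05, 0.1]                            # x, y, z

grid_size = [
    round((point_cloud_range[i + 3] - point_cloud_range[i]) / voxel_size[i])
    for i in range(3)
]  # (x, y, z) -> [1408, 1600, 40]

# Reverse to (z, y, x) and add 1 to z, as grid_size[::-1] + [1, 0, 0] does on an array.
sparse_shape = [g + o for g, o in zip(grid_size[::-1], [1, 0, 0])]

# (kernel, stride, padding) per axis in (z, y, x) order for each strided layer.
layers = {
    'conv2': ((3, 3, 3), (2, 2, 2), (1, 1, 1)),
    'conv3': ((3, 3, 3), (2, 2, 2), (1, 1, 1)),
    'conv4': ((3, 3, 3), (2, 2, 2), (0, 1, 1)),
    'conv_out': ((3, 1, 1), (2, 1, 1), (0, 0, 0)),  # last_pad assumed to be 0
}

shapes = {'input': sparse_shape}
shape = sparse_shape
for name, (kernel, stride, padding) in layers.items():
    shape = [conv_out_size(n, k, s, p) for n, k, s, p in zip(shape, kernel, stride, padding)]
    shapes[name] = shape

for name, shape in shapes.items():
    print(name, shape)
# input [41, 1600, 1408]
# conv2 [21, 800, 704]
# conv3 [11, 400, 352]
# conv4 [5, 200, 176]
# conv_out [2, 200, 176]
```

The extra 1 added to z pays off here: 41 halves to 21 and then 11 with padding 1, and the unpadded conv4 and conv_out steps bring it to 5 and finally 2.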

3.Sparse convolution middle extractor

import torch.nn as nn


# Compress the 3D features along the height (z) axis.
class HeightCompression(nn.Module):
    def __init__(self, model_cfg, **kwargs):
        super().__init__()
        self.model_cfg = model_cfg
        # Number of BEV feature channels after compression (C * D, i.e. 256 here).
        self.num_bev_features = self.model_cfg.NUM_BEV_FEATURES

    def forward(self, batch_dict):
        """
        Args:
            batch_dict:
                encoded_spconv_tensor: sparse tensor
        Returns:
            batch_dict:
                spatial_features:
        """
        # Sparse tensor produced by VoxelBackBone8x, read from batch_dict.
        encoded_spconv_tensor = batch_dict['encoded_spconv_tensor']
        # Convert the sparse tensor to a dense one of shape [batch_size, 128, 2, 200, 176]:
        # the batch index, spatial_shape, indices and features are combined to put
        # every feature back at its position in the dense tensor.
        spatial_features = encoded_spconv_tensor.dense()
        N, C, D, H, W = spatial_features.shape  # batch_size, 128, 2, 200, 176
        # Reshape the dense 3D tensor into a 2D bird's-eye-view (BEV) feature map:
        # the two depth slices are folded into the channel axis, giving
        # (batch_size, 256, 200, 176). Objects rarely stack on top of each other
        # along z, so this enlarges the receptive field along z, speeds up the
        # network and simplifies the design of the detection head.
        spatial_features = spatial_features.view(N, C * D, H, W)
        # Add the features and their stride to batch_dict.
        batch_dict['spatial_features'] = spatial_features
        # The feature map is downsampled 8x relative to the input grid.
        batch_dict['spatial_features_stride'] = batch_dict['encoded_spconv_tensor_stride']
        return batch_dict
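The `view(N, C * D, H, W)` call only reinterprets a row-major layout, so the depth slice `d` of channel `c` ends up at merged channel `c * D + d`. The sketch below shows that mapping for a single sample using nested lists. The helper name `height_compress` and the small sizes are made up for illustration.

```python
def height_compress(dense):
    """Fold the depth axis into channels: [C][D][H][W] -> [C * D][H][W].

    The merged channel index is c * D + d, matching a row-major view/reshape.
    """
    merged = []
    for channel in dense:            # iterate over C
        for depth_slice in channel:  # iterate over D
            merged.append(depth_slice)  # one (H, W) map
    return merged


C, D, H, W = 3, 2, 2, 2
# Label every cell with its (c, d) origin so the mapping is visible.
dense = [[[[(c, d)] * W for _ in range(H)] for d in range(D)] for c in range(C)]
bev = height_compress(dense)

print(len(bev))
# 6
print([plane[0][0] for plane in bev])
# [(0, 0), (0, 1), (1, 0), (1, 1), (2, 0), (2, 1)]
```

With C = 128 and D = 2, as in the backbone output, the two depth slices of each channel sit next to each other in the 256-channel BEV map.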

4.RPN

def forward(self, data_dict):
    """
    Args:
        data_dict:
            spatial_features
    Returns:
    """
    spatial_features = data_dict['spatial_features']
    ups = []
    ret_dict = {}
    x = spatial_features

    # Run the conv block and the deconv block of each branch in turn.
    # SECOND has two downsampling branches, and each gets its own deconvolution:
    #   branch 1: (batch, 128, 200, 176) --> (batch, 256, 200, 176)
    #   branch 2: (batch, 256, 100, 88)  --> (batch, 256, 200, 176)
    for i in range(len(self.blocks)):
        # Pass x through the i-th block and keep the result as the new x.
        x = self.blocks[i](x)

        # Downsampling factor relative to the input: the height H (shape[2]) of
        # spatial_features divided by the height of x.
        stride = int(spatial_features.shape[2] / x.shape[2])
        # Store x in ret_dict under a key that carries the stride.
        ret_dict['spatial_features_%dx' % stride] = x
        if len(self.deblocks) > 0:
            ups.append(self.deblocks[i](x))
        else:
            ups.append(x)

    if len(ups) > 1:
        # After upsampling, both scales have shape (batch, 256, 200, 176).
        # Concatenating along the channel axis (dim=1) gives (batch, 512, 200, 176).
        x = torch.cat(ups, dim=1)
    elif len(ups) == 1:
        x = ups[0]

    if len(self.deblocks) > len(self.blocks):
        x = self.deblocks[-1](x)

    data_dict['spatial_features_2d'] = x

    return data_dict
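The shapes quoted in the comments can be traced without running the network. The sketch below is plain Python; `bev_backbone_shapes` is a hypothetical helper. The strides and filter counts are assumptions chosen to reproduce the shapes in the comments, not values quoted from a config file. It also assumes that each block divides H and W exactly by its stride and that each deblock multiplies them by its upsample stride.

```python
def bev_backbone_shapes(in_shape, layer_strides, num_filters,
                        upsample_strides, num_upsample_filters):
    """Trace (C, H, W) shapes through the blocks, deblocks and concatenation."""
    _, in_h, in_w = in_shape
    h, w = in_h, in_w
    features, ups = {}, []
    for stride, filters, up_stride, up_filters in zip(
            layer_strides, num_filters, upsample_strides, num_upsample_filters):
        # Block i downsamples by its stride (sizes assumed divisible).
        h, w = h // stride, w // stride
        features['spatial_features_%dx' % (in_h // h)] = (filters, h, w)
        # Deblock i upsamples back to the input resolution.
        ups.append((up_filters, h * up_stride, w * up_stride))
    # torch.cat(dim=1) requires every branch to share H and W.
    assert len({(uh, uw) for _, uh, uw in ups}) == 1, 'branches must share H and W'
    out = (sum(c for c, _, _ in ups), ups[0][1], ups[0][2])
    return features, ups, out


features, ups, out = bev_backbone_shapes(
    in_shape=(256, 200, 176),
    layer_strides=[1, 2],             # assumed
    num_filters=[128, 256],           # assumed
    upsample_strides=[1, 2],          # assumed
    num_upsample_filters=[256, 256],  # assumed
)
print(features)
# {'spatial_features_1x': (128, 200, 176), 'spatial_features_2x': (256, 100, 88)}
print(ups)
# [(256, 200, 176), (256, 200, 176)]
print(out)
# (512, 200, 176)
```

The keys written to `ret_dict` follow from the ratio of input height to block output height, which is why the two branches appear as `spatial_features_1x` and `spatial_features_2x`.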
