0. Preface

Below I train BERT on the IMDB movie-review dataset using three different methods.

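1. What is WordPiece

Most of the stronger recent NLP models, such as OpenAI GPT and Google's BERT, split words into subword units during data preprocessing. Read literally, WordPiece means breaking a word into pieces, and that is exactly what it does.

WordPiece is closely related to BPE (Byte-Pair Encoding), one of the main ways of building a subword vocabulary. GPT uses BPE itself, while BERT uses WordPiece.

BPE can be understood as splitting a word further, so that the vocabulary becomes more compact and the meaning of each piece clearer.

Take the three words "loved", "loving" and "loves". All of them carry the meaning "love", but if the whole word is the unit they count as three different vocabulary entries. English has a great many such suffixed forms, so a word-level vocabulary becomes very large, training slows down, and the results are not as good.

After training, BPE can ideally split these three words into pieces such as "lov", "ed", "ing" and "es". This separates the core meaning of the word from its tense or inflection and effectively reduces the size of the vocabulary.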
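To make the merge idea concrete, here is a minimal toy sketch of BPE training in Python. It repeatedly merges the most frequent pair of adjacent symbols. The tiny corpus, its word frequencies and the function names (`get_pair_counts`, `merge_pair`, `learn_bpe`) are assumptions made up for illustration; a real tokenizer adds end-of-word markers, byte handling and a much larger corpus.

```python
import collections


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = collections.Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Rewrite every word so that each occurrence of `pair` becomes one symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def learn_bpe(word_freqs, num_merges):
    """Start from single characters and apply `num_merges` greedy merges."""
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


# Hypothetical word frequencies, chosen only for this demo.
word_freqs = {"loved": 5, "loving": 4, "loves": 3}
merges, vocab = learn_bpe(word_freqs, num_merges=2)
print(merges)
for symbols, freq in vocab.items():
    print(freq, symbols)
```

After two merges the shared stem `lov` has become a single symbol in all three words, while the suffix letters are still separate. Further merges depend entirely on the corpus statistics, so the clean "ed" / "ing" / "es" split described above is the idealized outcome rather than a guarantee.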

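BERT first checks whether the whole word is in the vocabulary. If it is not, BERT tries to split the word into subword pieces, greedily taking the longest piece that is in the vocabulary; pieces that continue a word are marked with a "##" prefix.

For example: "tokenizer" --> "token", "##izer"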
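The lookup described above can be sketched as a greedy longest-match-first loop. This is a simplified sketch, not the code from the BERT repository: the function name `wordpiece_tokenize` and the five-entry `toy_vocab` are assumptions for illustration, whereas the real vocabulary is the `vocab.txt` shipped with the model.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Split one word into pieces by always taking the longest match."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Shrink the candidate from the right until it is in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces get a ## prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            # No piece matches at this position: the whole word is unknown.
            return [unk_token]
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabulary, just large enough for the demo.
toy_vocab = {"token", "##izer", "##ization", "love", "##d"}

result_tokenizer = wordpiece_tokenize("tokenizer", toy_vocab)
result_loved = wordpiece_tokenize("loved", toy_vocab)
result_unknown = wordpiece_tokenize("xyz", toy_vocab)
print(result_tokenizer, result_loved, result_unknown)
```

Because the longest match wins, "tokenizer" is split after "token" rather than after some shorter prefix, which matches the example above.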

2. Method 1: train BERT with the code that google-research open-sourced on GitHub

2.1 Required environment

2.1.1 If you have a GPU:

    conda install python=3.6

    conda install tensorflow-gpu=1.11.0

If you have no GPU, the CPU build of TensorFlow also works; it just runs more slowly.

    pip install tensorflow==1.11.0

2.1.2 Download the BERT code open-sourced by google-research from GitHub

2.1.3 Download the BERT model weights uncased_L-12_H-768_A-12 and unzip them

https://storage.googleapis.com/bert_models/2018_10_18/uncased_L-12_H-768_A-12.zip

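2.2 Code changes and walkthrough

Open run_classifier.py and add the ImdbProcessor class below.

For the datasets imdb_train.npz, imdb_test.npz and imdb_val.npz, see my blog post below:

Introduction to and download of the IMDB movie-review dataset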
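The processor further down reads two arrays, `x` (review text) and `y` (label), from each .npz file. As a rough sketch of that layout, the snippet below writes a tiny stand-in file with `np.savez` and reads it back. The file name `imdb_toy.npz` and the two reviews are made up for illustration; the real files come from the blog post mentioned above.

```python
import os
import tempfile

import numpy as np

# Two hypothetical reviews with labels (1 = positive, 0 = negative).
reviews = np.array(["A wonderful little film.", "Dull and far too long."])
labels = np.array([1, 0])

# Write a stand-in archive using the same keys the processor expects.
path = os.path.join(tempfile.mkdtemp(), "imdb_toy.npz")
np.savez(path, x=reviews, y=labels)

# Read it back the same way the processor does, via the 'x' and 'y' keys.
with np.load(path) as data:
    pairs = [(str(text), int(label)) for text, label in zip(data["x"], data["y"])]

for text, label in pairs:
    print(label, text)
```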

    class ImdbProcessor(DataProcessor):
        """Processor for the IMDB movie-review data set."""

        # The .npz paths are hard-coded, so the data_dir argument is ignored.
        # np is NumPy: add `import numpy as np` at the top of run_classifier.py.

        def get_train_examples(self, data_dir):
            data = np.load('./data/imdb_train.npz')
            return self._create_examples(data, 'train')

        def get_dev_examples(self, data_dir):
            data = np.load('./data/imdb_val.npz')
            return self._create_examples(data, 'val')

        def get_test_examples(self, data_dir):
            data = np.load('./data/imdb_test.npz')
            return self._create_examples(data, 'test')

        def get_labels(self):
            """See base class."""
            return ["0", "1"]

        def _create_examples(self, train_data, set_type):
            """Creates examples for the train, dev and test sets."""
            X = train_data['x']  # review texts
            Y = train_data['y']  # labels
            examples = []
            for i, (data, label) in enumerate(zip(X, Y)):
                guid = "%s-%s" % (set_type, i)
                text_a = tokenization.convert_to_unicode(data)
                # Labels are compared as strings, see get_labels()
                label1 = tokenization.convert_to_unicode(str(label))
                examples.append(InputExample(guid=guid, text_a=text_a, label=label1))
            return examples

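Find the main() function in run_classifier.py and add the ImdbProcessor class you just wrote to its processors dictionary under the key "imdb", so that it matches --task_name=imdb in the command further down.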
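The registration step amounts to one new dictionary entry. The sketch below imitates the lookup that main() performs, using stub classes so that it runs on its own. The stub bodies and the helper `get_processor` are simplifications I made up; in the real file you only add the `"imdb": ImdbProcessor` line to the existing dictionary.

```python
class DataProcessor:
    """Stand-in for the base class defined in run_classifier.py."""

    def get_labels(self):
        raise NotImplementedError


class MrpcProcessor(DataProcessor):
    """Stub for one of the processors that ships with the script."""

    def get_labels(self):
        return ["0", "1"]


class ImdbProcessor(DataProcessor):
    """Stub for the IMDB processor from this article."""

    def get_labels(self):
        return ["0", "1"]


# Task name (lower case) -> processor class.
processors = {
    "mrpc": MrpcProcessor,
    "imdb": ImdbProcessor,  # the new entry
}


def get_processor(task_name):
    """Hypothetical helper: lower-case the task name, then look it up."""
    task_name = task_name.lower()
    if task_name not in processors:
        raise ValueError("Task not found: %s" % task_name)
    return processors[task_name]()


processor = get_processor("IMDB")
print(type(processor).__name__, processor.get_labels())
```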

Run run_classifier.py from the command line:

    export BERT_BASE_DIR=./data/uncased_L-12_H-768_A-12
    export DATASET=../data/

    python run_classifier.py \
      --data_dir=$DATASET \
      --task_name=imdb \
      --vocab_file=$BERT_BASE_DIR/vocab.txt \
      --bert_config_file=$BERT_BASE_DIR/bert_config.json \
      --output_dir=../output/ \
      --do_train=true \
      --do_eval=true \
      --init_checkpoint=$BERT_BASE_DIR/bert_model.ckpt \
      --max_seq_length=200 \
      --train_batch_size=16 \
      --learning_rate=5e-5 \
      --num_train_epochs=2.0

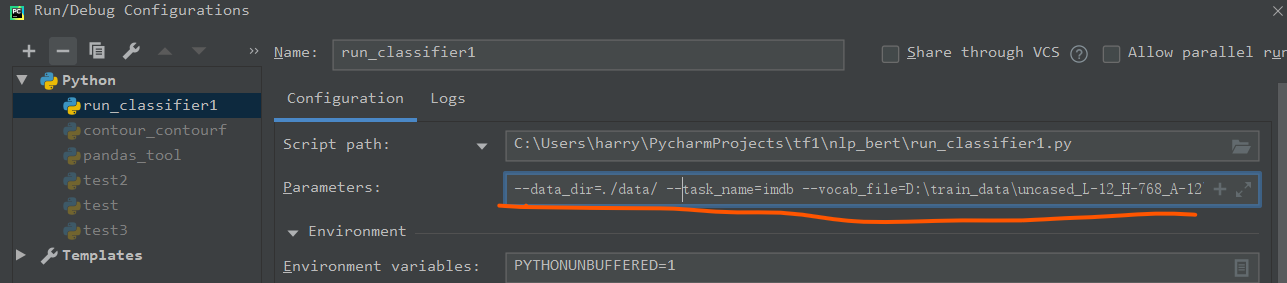

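Alternatively, run it from PyCharm: open the run configuration from the Run menu and enter the parameters below.

Note that you need to change two directory parameters. The first is the dataset directory (mine is ./data/), and the second is the model directory (mine is D:\train_data\uncased_L-12_H-768_A-12).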

--data_dir=./data/ --task_name=imdb --vocab_file=D:\train_data\uncased_L-12_H-768_A-12\vocab.txt --bert_config_file=D:\train_data\uncased_L-12_H-768_A-12\bert_config.json --output_dir=../output/ --do_train=true --do_eval=true --init_checkpoint=D:\train_data\uncased_L-12_H-768_A-12\bert_model.ckpt --max_seq_length=200 --train_batch_size=16 --learning_rate=5e-5 --num_train_epochs=2.0

Then run run_classifier.py.

On a GPU, training finishes in roughly 2 hours, with an accuracy of 92.24%.

3. Method 2: train BERT with tensorflow_hub and bert-tensorflow

3.1 Required environment

    conda install python=3.6

    conda install tensorflow-gpu=1.11.0

    conda install tensorflow-hub

    pip install bert-tensorflow

3.2 Code walkthrough

    import pandas as pd
    import numpy as np
    import tensorflow as tf
    import tensorflow_hub as hub
    from datetime import datetime

    import bert
    from bert import run_classifier
    from bert import optimization
    from bert import tokenization

    OUTPUT_DIR = 'output1'


    def download_and_load_datasets():
        # Despite the name, this only loads local .npz files; nothing is downloaded.
        train_data = np.load('./data/bert_train.npz')
        test_data = np.load('./data/bert_test.npz')
        train_df = pd.DataFrame({'sentence': train_data['x'], 'polarity': train_data['y']})
        test_df = pd.DataFrame({'sentence': test_data['x'], 'polarity': test_data['y']})
        return train_df, test_df


    # This is a path to an uncased (all lowercase) version of BERT
    BERT_MODEL_HUB = "D:/train_data/tf-hub/bert_uncased_L-12_H-768_A-12_1"


    def create_tokenizer_from_hub_module():
        """Get the vocab file and casing info from the Hub module."""
        with tf.Graph().as_default():
            bert_module = hub.Module(BERT_MODEL_HUB)
            tokenization_info = bert_module(signature="tokenization_info", as_dict=True)
            with tf.Session() as sess:
                vocab_file, do_lower_case = sess.run([tokenization_info["vocab_file"], tokenization_info["do_lower_case"]])
        return bert.tokenization.FullTokenizer(
            vocab_file=vocab_file, do_lower_case=do_lower_case)


    # We'll set sequences to be at most 128 tokens long.
    MAX_SEQ_LENGTH = 128

    # label_list is the list of labels, i.e. True, False or 0, 1 or 'dog', 'cat'
    label_list = [0, 1]

    def getDataSet():
        train, test = download_and_load_datasets()
        # Uncomment to train and test on a random subset only
        #train = train.sample(5000)
        #test = test.sample(5000)

        DATA_COLUMN = 'sentence'
        LABEL_COLUMN = 'polarity'

        # Use the InputExample class from BERT's run_classifier code to create examples from the data
        train_InputExamples = train.apply(lambda x: bert.run_classifier.InputExample(
            guid=None,  # Globally unique ID for bookkeeping, unused in this example
            text_a=x[DATA_COLUMN],
            text_b=None,
            label=x[LABEL_COLUMN]), axis=1)

        test_InputExamples = test.apply(lambda x: bert.run_classifier.InputExample(
            guid=None, text_a=x[DATA_COLUMN], text_b=None, label=x[LABEL_COLUMN]), axis=1)

        tokenizer = create_tokenizer_from_hub_module()
        # Quick sanity check of the tokenizer; the result is discarded
        tokenizer.tokenize("This here's an example of using the BERT tokenizer")

        # Convert our train and test features to InputFeatures that BERT understands.
        train_features = bert.run_classifier.convert_examples_to_features(train_InputExamples, label_list, MAX_SEQ_LENGTH, tokenizer)
        test_features = bert.run_classifier.convert_examples_to_features(test_InputExamples, label_list, MAX_SEQ_LENGTH, tokenizer)
        return train_features, test_features

    def create_model(is_predicting, input_ids, input_mask, segment_ids, labels,
                     num_labels):
        """Creates a classification model."""
        bert_module = hub.Module(BERT_MODEL_HUB, trainable=True)
        bert_inputs = dict(input_ids=input_ids, input_mask=input_mask, segment_ids=segment_ids)
        bert_outputs = bert_module(inputs=bert_inputs, signature="tokens", as_dict=True)

        # Use "pooled_output" for classification tasks on an entire sentence.
        # Use "sequence_outputs" for token-level output.
        output_layer = bert_outputs["pooled_output"]

        hidden_size = output_layer.shape[-1].value
        print('hidden_size=', hidden_size)

        # Create our own layer to tune for the IMDB sentiment data.
        output_weights = tf.get_variable("output_weights", [num_labels, hidden_size], initializer=tf.truncated_normal_initializer(stddev=0.02))
        output_bias = tf.get_variable("output_bias", [num_labels], initializer=tf.zeros_initializer())

        with tf.variable_scope("loss"):
            # Dropout helps prevent overfitting
            output_layer = tf.nn.dropout(output_layer, keep_prob=0.9)

            logits = tf.matmul(output_layer, output_weights, transpose_b=True)
            logits = tf.nn.bias_add(logits, output_bias)
            log_probs = tf.nn.log_softmax(logits, axis=-1)

            # Convert labels into one-hot encoding
            one_hot_labels = tf.one_hot(labels, depth=num_labels, dtype=tf.float32)

            predicted_labels = tf.squeeze(tf.argmax(log_probs, axis=-1, output_type=tf.int32))

            # If we're predicting, we want predicted labels and the probabilities.
            if is_predicting:
                return (predicted_labels, log_probs)

            # If we're train/eval, compute loss between predicted and actual label
            per_example_loss = -tf.reduce_sum(one_hot_labels * log_probs, axis=-1)
            loss = tf.reduce_mean(per_example_loss)
            return (loss, predicted_labels, log_probs)

    # model_fn_builder actually creates our model function
    # using the passed parameters for num_labels, learning_rate, etc.
    def model_fn_builder(num_labels, learning_rate, num_train_steps, num_warmup_steps):
        """Returns `model_fn` closure for Estimator."""

        def model_fn(features, labels, mode, params):  # pylint: disable=unused-argument
            """The `model_fn` for Estimator."""
            input_ids = features["input_ids"]
            input_mask = features["input_mask"]
            segment_ids = features["segment_ids"]
            label_ids = features["label_ids"]

            is_predicting = (mode == tf.estimator.ModeKeys.PREDICT)

            # TRAIN and EVAL
            if not is_predicting:
                (loss, predicted_labels, log_probs) = create_model(is_predicting, input_ids, input_mask, segment_ids, label_ids, num_labels)

                train_op = bert.optimization.create_optimizer(loss, learning_rate, num_train_steps, num_warmup_steps, use_tpu=False)

                # Calculate evaluation metrics.
                def metric_fn(label_ids, predicted_labels):
                    accuracy = tf.metrics.accuracy(label_ids, predicted_labels)
                    f1_score = tf.contrib.metrics.f1_score(label_ids, predicted_labels)
                    auc = tf.metrics.auc(label_ids, predicted_labels)
                    recall = tf.metrics.recall(label_ids, predicted_labels)
                    precision = tf.metrics.precision(label_ids, predicted_labels)
                    true_pos = tf.metrics.true_positives(label_ids, predicted_labels)
                    true_neg = tf.metrics.true_negatives(label_ids, predicted_labels)
                    false_pos = tf.metrics.false_positives(label_ids, predicted_labels)
                    false_neg = tf.metrics.false_negatives(label_ids, predicted_labels)
                    return {
                        "eval_accuracy": accuracy, "f1_score": f1_score, "auc": auc, "precision": precision, "recall": recall,
                        "true_positives": true_pos, "true_negatives": true_neg, "false_positives": false_pos, "false_negatives": false_neg
                    }

                eval_metrics = metric_fn(label_ids, predicted_labels)

                if mode == tf.estimator.ModeKeys.TRAIN:
                    return tf.estimator.EstimatorSpec(mode=mode, loss=loss, train_op=train_op)
                else:
                    return tf.estimator.EstimatorSpec(mode=mode, loss=loss, eval_metric_ops=eval_metrics)
            else:
                (predicted_labels, log_probs) = create_model(is_predicting, input_ids, input_mask, segment_ids, label_ids, num_labels)
                # Note: 'probabilities' holds log-probabilities (log_softmax output)
                predictions = {'probabilities': log_probs, 'labels': predicted_labels}
                return tf.estimator.EstimatorSpec(mode, predictions=predictions)

        # Return the actual model function in the closure
        return model_fn

    # Compute train and warmup steps from batch size
    # These hyperparameters are copied from this colab notebook (https://colab.sandbox.google.com/github/tensorflow/tpu/blob/master/tools/colab/bert_finetuning_with_cloud_tpus.ipynb)
    BATCH_SIZE = 16
    LEARNING_RATE = 2e-5
    NUM_TRAIN_EPOCHS = 3.0

    # Warmup is a period of time where the learning rate
    # is small and gradually increases--usually helps training.
    WARMUP_PROPORTION = 0.1

    # Model configs
    SAVE_CHECKPOINTS_STEPS = 500
    SAVE_SUMMARY_STEPS = 100


    def get_estimator(train_features):
        # Compute # train and warmup steps from batch size
        num_train_steps = int(len(train_features) / BATCH_SIZE * NUM_TRAIN_EPOCHS)
        num_warmup_steps = int(num_train_steps * WARMUP_PROPORTION)

        # Specify output directory and number of checkpoint steps to save
        run_config = tf.estimator.RunConfig(model_dir=OUTPUT_DIR, save_summary_steps=SAVE_SUMMARY_STEPS, save_checkpoints_steps=SAVE_CHECKPOINTS_STEPS)

        model_fn = model_fn_builder(num_labels=len(label_list), learning_rate=LEARNING_RATE, num_train_steps=num_train_steps, num_warmup_steps=num_warmup_steps)

        estimator = tf.estimator.Estimator(model_fn=model_fn, config=run_config, params={"batch_size": BATCH_SIZE})
        return estimator

    def train_bert_model(train_features):
        num_train_steps = int(len(train_features) / BATCH_SIZE * NUM_TRAIN_EPOCHS)
        estimator = get_estimator(train_features)

        # Create an input function for training. drop_remainder = True for using TPUs.
        train_input_fn = bert.run_classifier.input_fn_builder(features=train_features, seq_length=MAX_SEQ_LENGTH, is_training=True, drop_remainder=False)

        print('Beginning Training!')
        current_time = datetime.now()
        estimator.train(input_fn=train_input_fn, max_steps=num_train_steps)
        print("Training took time ", datetime.now() - current_time)


    def test_bert_model(test_features):
        # The step counts derived from test_features only configure the optimizer,
        # which evaluation does not run; the weights come from the checkpoint in OUTPUT_DIR.
        estimator = get_estimator(test_features)
        test_input_fn = run_classifier.input_fn_builder(features=test_features, seq_length=MAX_SEQ_LENGTH, is_training=False, drop_remainder=False)
        test_result = estimator.evaluate(input_fn=test_input_fn, steps=None)
        print(test_result)


    def getPrediction(in_sentences, train_features):
        estimator = get_estimator(train_features)
        labels = ["Negative", "Positive"]
        tokenizer = create_tokenizer_from_hub_module()
        # here, label=0 is just a dummy label
        input_examples = [run_classifier.InputExample(guid="", text_a=x, text_b=None, label=0) for x in in_sentences]
        input_features = run_classifier.convert_examples_to_features(input_examples, label_list, MAX_SEQ_LENGTH, tokenizer)
        predict_input_fn = run_classifier.input_fn_builder(features=input_features, seq_length=MAX_SEQ_LENGTH, is_training=False, drop_remainder=False)
        predictions = estimator.predict(predict_input_fn)
        return [(sentence, prediction['probabilities'], labels[prediction['labels']]) for sentence, prediction in zip(in_sentences, predictions)]


    def predict(train_features):
        pred_sentences = [
            "That movie was absolutely awful",
            "The acting was a bit lacking",
            "The film was creative and surprising",
            "Absolutely fantastic!"
        ]
        predictions = getPrediction(pred_sentences, train_features)
        print(predictions)


    def main(_):
        train_features, test_features = getDataSet()
        print(type(train_features), len(train_features))
        print(type(test_features), len(test_features))

        train_bert_model(train_features)
        test_bert_model(test_features)
        #predict(train_features)


    if __name__ == '__main__':
        tf.app.run()

Result of the run: accuracy 89.81%.

    {'auc': 0.89810693, 'eval_accuracy': 0.8981, 'f1_score': 0.897557, 'false_negatives': 501.0, 'false_positives': 518.0, 'loss': 0.52041256, 'precision': 0.8960257, 'recall': 0.8990936, 'true_negatives': 4517.0, 'true_positives': 4464.0, 'global_step': 6750}
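As a sanity check, the headline metrics can be recomputed from the four confusion-matrix counts in the result above. The sketch below uses only the standard definitions of accuracy, precision, recall and F1; the variable names are mine.

```python
# Confusion-matrix counts copied from the evaluation output above.
tp, tn, fp, fn = 4464, 4517, 518, 501

total = tp + tn + fp + fn             # number of evaluated reviews
accuracy = (tp + tn) / total          # share of correct predictions
precision = tp / (tp + fp)            # predicted positives that are truly positive
recall = tp / (tp + fn)               # true positives that were found
f1 = 2 * precision * recall / (precision + recall)

print("total     =", total)
print("accuracy  = %.4f" % accuracy)
print("precision = %.4f" % precision)
print("recall    = %.4f" % recall)
print("f1        = %.4f" % f1)
```

The recomputed values agree with the reported eval_accuracy, precision, recall and f1_score up to float32 rounding, and the counts add up to 10,000 evaluated reviews.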

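4. Method 3: train BERT with HuggingFace Transformers

4.1. Required environment

Install transformers:

    pip install transformers

Download the pretrained bert-base-uncased model files from the HuggingFace website.

Note that you only need one of bert-base-uncased-tf_model.h5 and bert-base-uncased-pytorch_model.bin.

The .h5 file holds the TensorFlow weights and the .bin file holds the PyTorch weights.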

https://cdn.huggingface.co/bert-base-uncased-tf_model.h5

https://cdn.huggingface.co/bert-base-uncased-pytorch_model.bin

https://cdn.huggingface.co/bert-base-uncased-vocab.txt

https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-config.json

After downloading, put the files in one folder, for example bert-base-uncased.

Rename each file:

bert-base-uncased-tf_model.h5 --> tf_model.h5

bert-base-uncased-pytorch_model.bin --> pytorch_model.bin

bert-base-uncased-vocab.txt --> vocab.txt

bert-base-uncased-config.json --> config.json
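If you prefer not to rename the files by hand, a small script can do it. This is only a sketch: the helper `rename_downloads` is my own, and the demo runs in a temporary folder with empty placeholder files instead of the real downloads. Files that are missing (for example the weights format you did not download) are simply skipped.

```python
import tempfile
from pathlib import Path

# Downloaded name -> name expected inside the model folder (from the list above).
RENAMES = {
    "bert-base-uncased-tf_model.h5": "tf_model.h5",
    "bert-base-uncased-pytorch_model.bin": "pytorch_model.bin",
    "bert-base-uncased-vocab.txt": "vocab.txt",
    "bert-base-uncased-config.json": "config.json",
}


def rename_downloads(folder):
    """Rename whichever of the downloaded files exist in `folder`."""
    folder = Path(folder)
    renamed = []
    for old_name, new_name in RENAMES.items():
        src = folder / old_name
        if src.exists():
            src.rename(folder / new_name)
            renamed.append(new_name)
    return renamed


# Demo with empty placeholder files in a throwaway folder.
demo = Path(tempfile.mkdtemp()) / "bert-base-uncased"
demo.mkdir()
for name in ("bert-base-uncased-vocab.txt", "bert-base-uncased-config.json"):
    (demo / name).touch()

result = rename_downloads(demo)
print(result)
```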

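4.2. Code walkthrough

For the imdb_train.npz and imdb_test.npz data files, see the blog post below:

Introduction to and download of the IMDB movie-review dataset

The Transformers package is HuggingFace's wrapper around the BERT that Google open-sourced, which makes it more convenient to use.

The code below does by hand what tokenizer.encode_plus() does.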

    from transformers import BertTokenizer

    bert_weight_folder = r'D:\train_data\bert\bert-base-uncased'
    tokenizer = BertTokenizer.from_pretrained(bert_weight_folder, do_lower_case=True)

    max_length_test = 20
    test_sentence = 'Test tokenization sentence. Followed by another sentence'

    # add special tokens
    test_sentence_with_special_tokens = '[CLS]' + test_sentence + '[SEP]'
    tokenized = tokenizer.tokenize(test_sentence_with_special_tokens)
    print('tokenized', tokenized)

    # convert tokens to ids in WordPiece
    input_ids = tokenizer.convert_tokens_to_ids(tokenized)

    # precalculation of pad length, so that we can reuse it later on
    padding_length = max_length_test - len(input_ids)

    # attention should focus just on the real (non-padded) tokens;
    # build the mask before padding input_ids so that it covers only the real tokens
    attention_mask = [1] * len(input_ids)

    # do not focus attention on padded tokens
    attention_mask = attention_mask + ([0] * padding_length)

    # add the pad token id (0) for text shorter than our max length
    input_ids = input_ids + ([0] * padding_length)

    # token types, needed for example for question answering, for our purpose we will just set 0 as we have just one sequence
    token_type_ids = [0] * max_length_test

    bert_input = {
        "token_ids": input_ids,
        "token_type_ids": token_type_ids,
        "attention_mask": attention_mask
    }
    print(bert_input)

    tokenized ['[CLS]', 'test', 'token', '##ization', 'sentence', '.', 'followed', 'by', 'another', 'sentence', '[SEP]']

    {
    'token_ids': [101, 3231, 19204, 3989, 6251, 1012, 2628, 2011, 2178, 6251, 102, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    'attention_mask': [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
    }
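The padding and masking logic can also be isolated in a small helper that needs no model files. This is a sketch under my own naming (`pad_and_mask`); it starts from the token ids printed above instead of calling the tokenizer, and its truncation is deliberately naive (a real tokenizer keeps the closing [SEP] when it truncates).

```python
def pad_and_mask(token_ids, max_length, pad_id=0):
    """Pad a list of token ids and build the matching attention mask."""
    token_ids = list(token_ids)[:max_length]       # naive truncation
    padding_length = max_length - len(token_ids)
    # 1 marks a real token, 0 marks padding the model should ignore.
    attention_mask = [1] * len(token_ids) + [0] * padding_length
    input_ids = token_ids + [pad_id] * padding_length
    token_type_ids = [0] * max_length              # single sequence: all zeros
    return {
        "input_ids": input_ids,
        "token_type_ids": token_type_ids,
        "attention_mask": attention_mask,
    }


# Token ids of the example sentence, copied from the output above.
ids = [101, 3231, 19204, 3989, 6251, 1012, 2628, 2011, 2178, 6251, 102]
encoded = pad_and_mask(ids, max_length=20)
print(encoded)
```

The mask is built from the unpadded length, so it contains exactly as many ones as there are real tokens.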

The complete code is as follows:

    import os
    import numpy as np
    import tensorflow as tf
    from transformers import BertTokenizer
    from transformers import TFBertForSequenceClassification
    from sklearn.model_selection import train_test_split

    max_length = 200
    batch_size = 16
    learning_rate = 2e-5
    number_of_epochs = 10

    bert_weight_folder = r'D:\train_data\bert\bert-base-uncased'
    tokenizer = BertTokenizer.from_pretrained(bert_weight_folder, do_lower_case=True)


    def convert_example_to_feature(review):
        return tokenizer.encode_plus(review,
                                     add_special_tokens=True,  # add [CLS], [SEP]
                                     max_length=max_length,  # max length of the text that can go to BERT
                                     pad_to_max_length=True,  # add [PAD] tokens
                                     return_attention_mask=True,  # add attention mask to not focus on pad tokens
                                     truncation=True
                                     )


    # map to the expected input to TFBertForSequenceClassification
    def map_example_to_dict(input_ids, attention_masks, token_type_ids, label):
        return {"input_ids": input_ids, "token_type_ids": token_type_ids, "attention_mask": attention_masks}, label


    def encode_examples(x, y, limit=-1):
        # prepare list, so that we can build up final TensorFlow dataset from slices.
        input_ids_list = []
        token_type_ids_list = []
        attention_mask_list = []
        label_list = []
        for review, label in zip(x, y):
            ...  # (the listing is cut off at this point in the source)
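The listing above breaks off inside the loop, so the author's own continuation is not available. Purely as an illustration of how such a loop commonly proceeds, here is a guess in pure Python. Everything in it is an assumption, not the author's code: the name `collect_features`, the meaning of `limit`, and especially `fake_encode`, a stand-in that returns the same three keys as `tokenizer.encode_plus()` so the sketch runs without model files.

```python
def collect_features(x, y, encode_fn, limit=-1):
    """Encode each review and gather the per-example fields into lists."""
    if limit > 0:  # assumed meaning of `limit`: keep only the first examples
        x, y = x[:limit], y[:limit]
    input_ids_list, token_type_ids_list = [], []
    attention_mask_list, label_list = [], []
    for review, label in zip(x, y):
        encoded = encode_fn(review)
        input_ids_list.append(encoded["input_ids"])
        token_type_ids_list.append(encoded["token_type_ids"])
        attention_mask_list.append(encoded["attention_mask"])
        label_list.append([label])
    features = {
        "input_ids": input_ids_list,
        "token_type_ids": token_type_ids_list,
        "attention_mask": attention_mask_list,
    }
    return features, label_list


def fake_encode(review, max_length=6):
    """Stand-in encoder: word lengths as ids, wrapped in 101 ... 102, padded with 0."""
    ids = [101] + [len(word) for word in review.split()][:max_length - 2] + [102]
    pad = max_length - len(ids)
    return {
        "input_ids": ids + [0] * pad,
        "token_type_ids": [0] * max_length,
        "attention_mask": [1] * len(ids) + [0] * pad,
    }


features, labels = collect_features(
    ["good film", "far too long and dull"], [1, 0], fake_encode)
print(features["input_ids"])
print(labels)
```

In a real run, `encode_fn` would be `convert_example_to_feature`, and the resulting lists could be handed to `tf.data.Dataset.from_tensor_slices` and mapped through `map_example_to_dict` to produce the input that TFBertForSequenceClassification expects.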


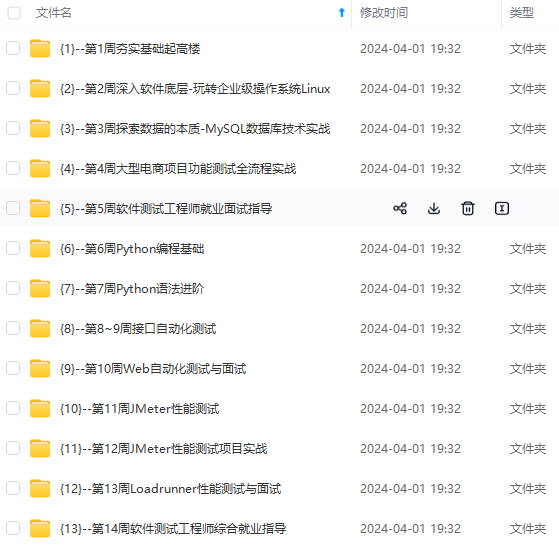

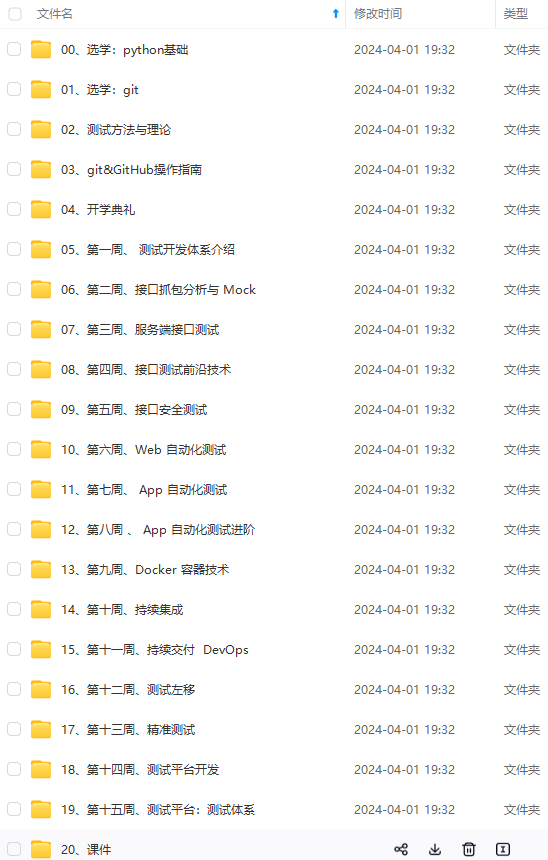

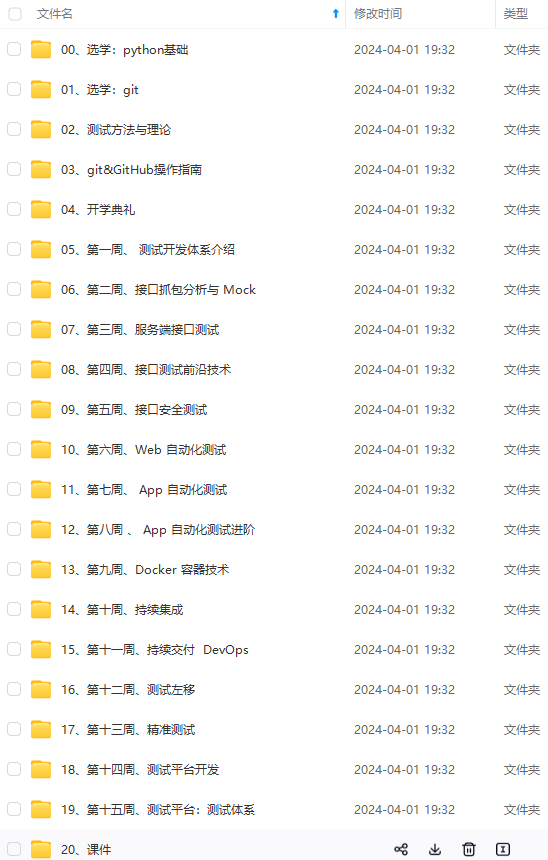

[外链图片转存中...(img-vhCTCmYc-1715898347816)]

[外链图片转存中...(img-dQ9UZRKj-1715898347816)]

**网上学习资料一大堆,但如果学到的知识不成体系,遇到问题时只是浅尝辄止,不再深入研究,那么很难做到真正的技术提升。**

**[需要这份系统化的资料的朋友,可以戳这里获取](https://bbs.csdn.net/topics/618631832)**

**一个人可以走的很快,但一群人才能走的更远!不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!**

2535

2535

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?