Excerpted from https://medium.com/activating-robotic-minds/up-sampling-with-transposed-convolution-9ae4f2df52d0

When we use neural networks to generate images, it usually involves up-sampling from low resolution to high resolution.

There are various ways to perform up-sampling:

- Nearest neighbor interpolation

- Bi-linear interpolation

- Bi-cubic interpolation

Each of these is a fixed interpolation scheme that we have to choose when designing the network architecture. It is like manual feature engineering: there is nothing for the network to learn.
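For contrast, here is a minimal NumPy sketch of nearest-neighbor up-sampling. The helper name `nearest_neighbor_upsample` is our own, not a library API. Every output value is a copy of an input value, so there are no weights for the network to learn.

```python
import numpy as np

def nearest_neighbor_upsample(x: np.ndarray, factor: int = 2) -> np.ndarray:
    """Repeat every value `factor` times along both axes (no learnable parameters)."""
    return np.repeat(np.repeat(x, factor, axis=0), factor, axis=1)

x = np.array([[1, 2],
              [3, 4]])
print(nearest_neighbor_upsample(x).tolist())
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```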

If we want the network to learn how best to up-sample, we can use a transposed convolution. It does not rely on a predefined interpolation method; instead, it has learnable parameters.

Convolution Operation

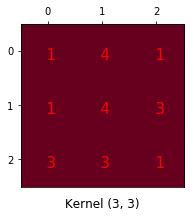

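In this example, a 3x3 kernel slides over a 4x4 input with stride 1 and no padding, producing a 2x2 output. At each position, the convolution operation computes the sum of the element-wise products between the kernel and the input values it covers.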

An important property of the convolution operation is the positional connectivity between input values and output values. More concretely, the 3x3 kernel connects 9 values in the input matrix to 1 value in the output matrix. A convolution therefore forms a many-to-one relationship.
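Here is a minimal NumPy sketch of this many-to-one operation, assuming stride 1 and no padding. As in most deep-learning libraries, "convolution" here means cross-correlation (the kernel is not flipped). The function name `conv2d` and the example values are our own.

```python
import numpy as np

def conv2d(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Stride-1, no-padding convolution (cross-correlation, kernel not flipped)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow), dtype=np.result_type(x, k))
    for i in range(oh):
        for j in range(ow):
            # Many-to-one: the 9 input values under the kernel -> 1 output value
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

x = np.array([[4, 5, 8, 7],
              [1, 8, 8, 8],
              [3, 6, 6, 4],
              [6, 5, 7, 8]])  # example 4x4 input
k = np.array([[1, 4, 1],
              [1, 4, 3],
              [3, 3, 1]])     # example 3x3 kernel
print(conv2d(x, k).tolist())  # [[122, 148], [126, 134]]
```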

Going Backward

Now suppose we want to go in the other direction: we want to associate 1 value in one matrix with 9 values in another matrix. This one-to-many relationship is like running the convolution operation backward, and it is the core idea of the transposed convolution.
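One way to picture the one-to-many direction is to scatter each input value: multiply it by the whole kernel and add the result into a 3x3 window of the output. The sketch below (hypothetical helper `conv_transpose2d`, stride 1, no padding, example values of our choosing) does this for a 2x2 input and a 3x3 kernel, producing a 4x4 output.

```python
import numpy as np

def conv_transpose2d(y: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Stride-1, no-padding transposed convolution computed by scattering."""
    kh, kw = k.shape
    oh, ow = y.shape[0] + kh - 1, y.shape[1] + kw - 1
    out = np.zeros((oh, ow), dtype=np.result_type(y, k))
    for i in range(y.shape[0]):
        for j in range(y.shape[1]):
            # One-to-many: 1 input value -> 9 output values (overlaps are summed)
            out[i:i + kh, j:j + kw] += y[i, j] * k
    return out

y = np.array([[2, 1],
              [4, 4]])    # example 2x2 input
k = np.array([[1, 4, 1],
              [1, 4, 3],
              [3, 3, 1]])  # example 3x3 kernel
print(conv_transpose2d(y, k).tolist())
# [[2, 9, 6, 1], [6, 29, 30, 7], [10, 29, 33, 13], [12, 24, 16, 4]]
```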

But how do we perform such an operation?

Convolution Matrix

We can express a convolution operation as a matrix. It is nothing but the kernel weights rearranged so that the convolution can be computed with a single matrix multiplication.

We rearrange the 3x3 kernel into a 4x16 matrix, with one row per output value (2x2 = 4) and one column per input value (4x4 = 16).

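This is the convolution matrix. Each row defines one convolution operation: it holds the 9 kernel weights in the columns of the input values covered by one kernel position, and zeros everywhere else.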

With the convolution matrix, you can go from 16 (4x4) to 4 (2x2): multiplying the 4x16 matrix by the input flattened into a 16x1 column vector gives a 4x1 vector, which we reshape to 2x2. Conversely, if you have a 16x4 matrix, you can go from 4 (2x2) to 16 (4x4).
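The sketch below builds the 4x16 convolution matrix for a 3x3 kernel and a 4x4 input, assuming stride 1, no padding and row-major flattening; `conv_matrix` is a hypothetical helper name and the values are examples. Multiplying it by the flattened input gives the same 2x2 result as sliding the kernel over the input directly.

```python
import numpy as np

def conv_matrix(k: np.ndarray, in_h: int, in_w: int) -> np.ndarray:
    """Rearrange kernel k into C so that C @ x.ravel() equals the stride-1,
    no-padding convolution of an in_h x in_w input (row-major flattening)."""
    kh, kw = k.shape
    oh, ow = in_h - kh + 1, in_w - kw + 1
    C = np.zeros((oh * ow, in_h * in_w), dtype=k.dtype)
    for i in range(oh):
        for j in range(ow):
            row = np.zeros((in_h, in_w), dtype=k.dtype)
            row[i:i + kh, j:j + kw] = k   # kernel placed over one input window
            C[i * ow + j] = row.ravel()   # one row = one convolution operation
    return C

k = np.array([[1, 4, 1],
              [1, 4, 3],
              [3, 3, 1]])
x = np.array([[4, 5, 8, 7],
              [1, 8, 8, 8],
              [3, 6, 6, 4],
              [6, 5, 7, 8]])
C = conv_matrix(k, 4, 4)
print(C.shape)                                 # (4, 16)
print((C @ x.ravel()).reshape(2, 2).tolist())  # [[122, 148], [126, 134]]
```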

Transposed Convolution Matrix

We want to go from 4 (2x2) to 16 (4x4). So, we use a 16x4 matrix. But there is one more thing here. We want to maintain the 1 to 9 relationship.

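Transposing the 4x16 convolution matrix gives such a 16x4 matrix. We multiply it by the 2x2 input flattened into a 4x1 column vector and reshape the resulting 16x1 vector into a 4x4 matrix. We have just up-sampled a smaller matrix (2x2) into a larger one (4x4). The transposed convolution maintains the 1-to-9 relationship because of the way it lays out the weights: each column holds the 9 kernel weights, so each input value contributes to 9 output values.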
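Here is a sketch of the 16x4 matrix for a 2x2 input and a 3x3 kernel, built column by column (hypothetical helper `transposed_conv_matrix`, stride 1, no padding, row-major flattening, example values). Column n holds the kernel placed where input value n spreads to; reading the matrix row-wise instead, its transpose is the 4x16 convolution-matrix layout. For a kernel with no zero entries, each column has exactly 9 non-zero weights, which is the 1-to-9 relationship.

```python
import numpy as np

def transposed_conv_matrix(k: np.ndarray, in_h: int, in_w: int) -> np.ndarray:
    """Build M so that (M @ y.ravel()).reshape(out_h, out_w) is the stride-1,
    no-padding transposed convolution of an in_h x in_w input y."""
    kh, kw = k.shape
    out_h, out_w = in_h + kh - 1, in_w + kw - 1
    M = np.zeros((out_h * out_w, in_h * in_w), dtype=k.dtype)
    for i in range(in_h):
        for j in range(in_w):
            window = np.zeros((out_h, out_w), dtype=k.dtype)
            window[i:i + kh, j:j + kw] = k       # where input (i, j) spreads to
            M[:, i * in_w + j] = window.ravel()  # one column per input value
    return M

k = np.array([[1, 4, 1],
              [1, 4, 3],
              [3, 3, 1]])
y = np.array([[2, 1],
              [4, 4]])
T = transposed_conv_matrix(k, 2, 2)
out = (T @ y.ravel()).reshape(4, 4)
print(T.shape)                          # (16, 4)
print(np.count_nonzero(T, axis=0).tolist())  # [9, 9, 9, 9]
print(out.tolist())
# [[2, 9, 6, 1], [6, 29, 30, 7], [10, 29, 33, 13], [12, 24, 16, 4]]
```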

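Jesse comment: Take the 2 in the input as an example. It is multiplied by the first column of the transposed convolution matrix, and that column has 9 non-zero values; this is the one-to-nine relationship here. What matters most is still how the parameters are laid out in the transposed convolution matrix. It really is a "deconvolution".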

NB: the actual weight values in the matrix do not have to come from the original convolution matrix. What matters is that the weight layout is transposed from that of the convolution matrix.

Summary

The transposed convolution operation forms the same connectivity as the normal convolution but in the backward direction.
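A quick numerical check of "same connectivity, backward direction": for any input x, any y of the output's shape and any kernel k, the inner product of conv(x) with y equals the inner product of x with the transposed convolution of y. In matrix terms this is `<Cx, y> = <x, C^T y>`, which is also why a transposed convolution computes the gradient of a convolution with respect to its input. The helpers are our own stride-1, no-padding sketches, and the random data is arbitrary.

```python
import numpy as np

def conv2d(x, k):
    """Stride-1, no-padding convolution (cross-correlation)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    return np.array([[np.sum(x[i:i + kh, j:j + kw] * k) for j in range(ow)]
                     for i in range(oh)])

def conv_transpose2d(y, k):
    """Stride-1, no-padding transposed convolution (scatter-add)."""
    kh, kw = k.shape
    out = np.zeros((y.shape[0] + kh - 1, y.shape[1] + kw - 1))
    for i in range(y.shape[0]):
        for j in range(y.shape[1]):
            out[i:i + kh, j:j + kw] += y[i, j] * k
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 4))
y = rng.standard_normal((2, 2))
k = rng.standard_normal((3, 3))
lhs = np.sum(conv2d(x, k) * y)            # <conv(x), y>
rhs = np.sum(x * conv_transpose2d(y, k))  # <x, conv_transpose(y)>
print(np.isclose(lhs, rhs))  # True
```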

We can use it to perform up-sampling. Moreover, the weights in the transposed convolution are learnable, so we do not need a predefined interpolation method.
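To make "learnable" concrete, here is a toy sketch that recovers a transposed-convolution kernel by plain gradient descent on a squared-error loss. Everything in it is an illustrative assumption: the helper names, the deliberately simple 2x2 input (an identity matrix, chosen so this tiny problem is well conditioned), the target kernel, the learning rate and the number of steps. Real frameworks obtain the same gradient through automatic differentiation.

```python
import numpy as np

def conv_transpose2d(y, k):
    """Stride-1, no-padding transposed convolution (scatter-add)."""
    kh, kw = k.shape
    out = np.zeros((y.shape[0] + kh - 1, y.shape[1] + kw - 1))
    for i in range(y.shape[0]):
        for j in range(y.shape[1]):
            out[i:i + kh, j:j + kw] += y[i, j] * k
    return out

def kernel_grad(y, g, kh, kw):
    """Gradient of 0.5 * sum((out - target)**2) w.r.t. the kernel, g = out - target."""
    grad = np.zeros((kh, kw))
    for i in range(y.shape[0]):
        for j in range(y.shape[1]):
            grad += y[i, j] * g[i:i + kh, j:j + kw]
    return grad

y = np.eye(2)                          # deliberately simple 2x2 input
k_true = np.array([[1., 4., 1.],
                   [1., 4., 3.],
                   [3., 3., 1.]])      # kernel we pretend not to know
target = conv_transpose2d(y, k_true)   # desired 4x4 output

k = np.zeros((3, 3))                   # learnable weights, initialized to zero
lr = 0.25
for step in range(200):
    g = conv_transpose2d(y, k) - target
    k -= lr * kernel_grad(y, g, 3, 3)  # one gradient-descent update

print(np.allclose(k, k_true))  # True
```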

The transposed convolution is not a convolution, but we can emulate it using a convolution. We up-sample the input by adding zeros between and around the values in the input matrix, in such a way that a direct convolution produces the same result as the transposed convolution. Some articles explain the transposed convolution this way. However, this approach is less efficient, because zeros must be added to up-sample the input before the convolution, and the convolution then spends multiplications on them.
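A sketch of this emulation for the stride-1, no-padding case (helper names are ours, values are examples). With stride 1, no zeros are needed between the input values: the input is only zero-padded by kernel size minus 1 on every side, and the kernel is rotated by 180 degrees so that the direct convolution lines up with the transposed one. With a larger stride, zeros would also be inserted between the input values. Here the 2x2 input becomes a 6x6 matrix in which 32 of the 36 values are zero, which is where the extra work comes from.

```python
import numpy as np

def conv2d(x, k):
    """Stride-1, no-padding convolution (cross-correlation)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    return np.array([[np.sum(x[i:i + kh, j:j + kw] * k) for j in range(ow)]
                     for i in range(oh)])

def conv_transpose_via_conv(y, k):
    """Emulate a stride-1 transposed convolution with a direct convolution."""
    kh, kw = k.shape
    padded = np.pad(y, ((kh - 1, kh - 1), (kw - 1, kw - 1)))  # zeros around the input
    return conv2d(padded, k[::-1, ::-1])                      # kernel rotated 180 degrees

y = np.array([[2, 1],
              [4, 4]])
k = np.array([[1, 4, 1],
              [1, 4, 3],
              [3, 3, 1]])
print(conv_transpose_via_conv(y, k).tolist())
# [[2, 9, 6, 1], [6, 29, 30, 7], [10, 29, 33, 13], [12, 24, 16, 4]]
```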

