Machine learning is being rapidly adopted for a range of applications in the financial services industry. The adoption of machine learning in financial services has been driven by both supply factors, such as technological advances in data storage, algorithms, and computing infrastructure, and by demand factors, such as profitability needs, competition with other firms, and supervisory and regulatory requirements. Machine learning in finance includes algorithmic trading, portfolio management, insurance underwriting保险承保, and fraud detection, just to name a few subject areas.

There are several types of machine learning algorithms, but the two main ones that you will commonly come across in machine learning literature are supervised and unsupervised machine learning. Our discussion in this chapter focuses on supervised learning. Supervised machine learning involves supplying both the input and output data to help the machine predict new input data. Supervised machine learning can be regression-based or classification-based. Regression-based machine learning algorithms predict continuous values, while classification-based machine learning algorithms predict a class or label.

In this chapter, we will be introduced to machine learning, study its concepts and applications in finance, and look at some practical examples for applying machine learning to assist in trading decisions. We will cover the following topics:

- Explore the uses of machine learning in finance

- Supervised and unsupervised machine learning

- Classification-based and regression-based machine learning

- Using scikit-learn for implementing machine learning algorithms

- Applying single-asset regression-based machine learning in predicting prices

- Understanding risk metrics风险指标 in measuring regression models

- Applying multi-asset regression-based machine learning in predicting returns

- Applying classification-based machine learning in predicting trends

- Understanding risk metrics in measuring classification models

Introduction to machine learning

Before machine learning algorithms became mature, many software application decisions were rule-based, consisting of a bunch of if and else statements to generate the appropriate response in exchange to some input data. A commonly cited引用 example is a spam filter function in email inboxeshttps://blog.csdn.net/Linli522362242/article/details/93034532, https://blog.csdn.net/Linli522362242/article/details/120259487. A mailbox may contain blacklisted words黑名单的词 defined by a mail server administrator or owner. Incoming emails have their contents scanned against blacklisted words, and should the blacklist condition hold true, the mail is marked as spammed and sent to the Junk folder. As the nature of unwanted emails continues to evolve to avoid detection, spam filter mechanisms must also continuously update themselves to keep up with doing a better job. However, with machine learning, spam filters can automatically learn from past email data and, given an incoming email, calculate the possibility of classifying whether the new email is spam or not.

The algorithms behind facial recognition and image detection largely work in the same way. Digital images stored in bits and bytes are collected, analyzed, and classified according to expected responses provided by the owner. This process is known as training, using a supervised learning approach. The trained data may subsequently be used for predicting the next set of input data as some output response with a certain level of confidence. On the other hand, when the training data does not contain the expected response, the machine learning algorithm is expected to learn from the training data, and this process is called unsupervised learning.

Uses of machine learning in finance

Machine learning is increasingly finding its uses in many areas of finance, such as data security, customer service, forecasting, and financial services. A number of use cases leverage big data and artificial intelligence (AI) as well; they are not exclusive to machine learning. In this section, we will examine some of the ways in which machine learning is transforming the financial sector.

Algorithmic trading

Machine learning algorithms study the statistical properties of the prices of highly correlated assets, measure their predictive power on historical data during backtesting, and forecast prices to within certain accuracy(Prediction involves generating best estimates of in-sample data. Forecasting involves generating best estimates of out-of-sample data.). Machine learning trading algorithms may involve the analysis of

- the order book,

- market depth and volume,

- news releases新闻发布, e

- arnings calls财报会议(财报电话会议),

- or financial statements财务报表,

- where the analysis translates into price movement possibilities and is taken into account for generating trading signals.

Portfolio management

The concept of robo advisors机器人顾问 has been gaining popularity in recent years to act as automated hedge fund managers. They aid with portfolio construction, optimization, allocation, and rebalancing, and even suggest to clients the instruments to invest in based on their risk tolerance and preferred choice of investment vehicle. These advisories serve as a platform for interacting with a digital financial planner, providing financial advice and portfolio management.

Supervisory and regulatory functions

Financial institutions and regulators are adopting the use of AI and machine learning to analyze, identify, and flag suspicious/səˈspɪʃəs/感觉可疑的 transactions that warrant further investigation. Supervisors such as the Securities and Exchange Commission (SEC) take a data-driven approach and employ AI, machine learning, and natural language processing to identify behavior that warrants enforcement授权执行. Worldwide, central authorities中央当局 are developing machine learning capabilities in regulatory/ˈreɡjələtɔːri /监管的 functions.

Insurance and loan underwriting保险和贷款承保

Insurance companies actively use AI and machine learning to augment some insurance sector区域,部分 functions, improve pricing and marketing of insurance products, and to reduce claims索赔 processing times and operational costs. In loan underwriting承保, many data points of a single consumer, such as age, income, and credit score, are compared against a database of candidates in building credit risk profiles, determining credit scores, and calculating the possibility of loan defaults. Such data relies on transaction and payment history from financial institutions. However, lenders are increasingly turning to social media activities, mobile phone usage, and messaging activities to capture a more holistic/hoʊˈlɪstɪk/全面的 view of creditworthiness /ˈkredɪtwɜːrðinəs/有资格接受信用贷款,好信誉 speed up lending decisions, limit incremental risk, and improve the rating accuracy of loans.

News sentiment analysis新闻情绪分析

Natural language processing, a subset of machine learning, may be used to analyze alternative data, financial statements, news announcements, and even Twitter feeds, in creating investment sentiment indicators used by hedge funds, high-frequency trading firms, social trading, and investment platforms for analyzing markets in real time. Politicians' speeches, or important new releases, such as those made by central banks, are also being analyzed in real time, where each and every word is being scrutinized/ˈskruːtənaɪzd/详细检查的 and calculated to predict which asset prices could move and by how much. Machine learning will not only understand the movement of stock prices and trades, but also understand social media feeds, news trends, and other data sourceshttps://blog.csdn.net/Linli522362242/article/details/121172551.cp8_Sentiment_urlretrieve_pyprind_tarfile_bag词袋_walk目录_regex_verbose_N-gram_Hash_colab_verbose_文本向量化_LIQING LIN的博客-CSDN博客

Machine learning beyond finance

Machine learning is increasingly being employed in areas of facial recognition, voice recognition, biometrics, trade settlement贸易结算, chatbots, sales recommendations, content creation, and more. As machine learning algorithms improve and their rate of adoption picks up, the list of use cases becomes even longer.

Let's begin our journey in machine learning by understanding some of the terminology you will come across in the machine learning literature.

Supervised and unsupervised learning

There are many types of machine learning algorithms, but the two main ones that you will commonly come across are supervised and unsupervised machine learning.

Supervised learning

Supervised learning predicts a certain output from given inputs. These pairings of input to output data are known as training data. The quality of the prediction entirely depends on the training data; incorrect training data reduces the effectiveness of the machine learning model. An example is a dataset of transactions with labels identifying which ones are fraudulent/ˈfrɔːdʒələnt/欺诈的 , and which are not. A model can then be built to predict whether a new transaction will be fraudulent.

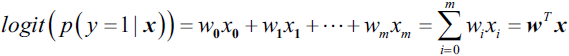

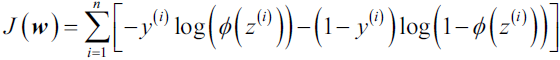

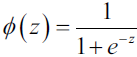

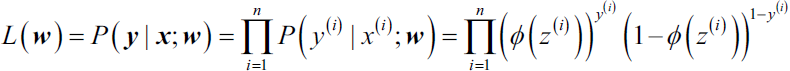

Some common algorithms in supervised learning are logistic regression, the support vector machine, and random forests.

Unsupervised learning

Unsupervised learning builds a model based on given input data that does not contain labels, but instead is asked to detect patterns in the data. This may involve identifying clusters of observations with similar underlying characteristics. Unsupervised learning aims to make accurate predictions to new, never-before-seen data.

For example, an unsupervised learning model may price illiquid非流动 securities by looking for a cluster of securities with similar characteristics. Common unsupervised learning algorithms include k-means clustering, principal component analysis, and autoencoders.

Classification and regression in supervised machine learning

There are two major types of supervised machine algorithms, mainly classification and regression.

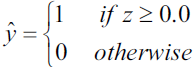

Classification machine learning models attempt to predict and classify responses from a list of predefined possibilities. These predefined possibilities may be binary classification (such as a Yes or No response to a question: Is this email spam?) or multiclass classification.

Regression machine learning models attempt to predict continuous output values. For example, predicting housing prices or the temperature expects a continuous range of output values. Common forms of regressions are ordinary least squares (OLS) regression, LASSO regression, ridge regression, and elastic net regularization.

Overfitting and underfitting models

Poor performance in machine learning models can be caused by overfitting or underfitting.

https://blog.csdn.net/Linli522362242/article/details/96480059

An overfitted machine learning model is one that is trained too well with the training data such that it leads to negative performance on new data. This occurs when the training data is fitted to every minor variation, including noise and random fluctuations. Unsupervised learning algorithms are highly susceptible/səˈseptəb(ə)l/易受影响的 to overfitting, since the model learns from every piece of data, both good and bad.

An underfitted machine learning model gives poor accuracy of prediction. It may be caused by too little training data being available to build an accurate model, or that the data is not suitable for extracting its underlying trends数据不适合提取其潜在趋势. Underfitting models are easy to detect since they give consistently poor performance. To improve such models, provide more training data or use another machine learning algorithm.

Feature engineering

A feature is an attribute of the data that defines its characteristic. By using domain knowledge of the data, features can be created to help machine learning algorithms increase their predictive performance. This can be as simple as grouping or bucketing related parts of the existing data to form defining features. Even removing unwanted features is also feature engineering即使删除不需要的特征也是特征工程.

As an example, suppose we have the following time series price data that looks like this:

Grouping the time series into buckets by the hour of the day and taking the last price action in each bucket, we end up with a feature like this:

The process of feature engineering involves these four steps:

- 1. Brainstorming features to include in the training model

- 2. Creating those features

- 3. Checking how the features work with the model

- 4. Repeating from step 1 until the features work perfectly

There are absolutely no hard and fast rules when it comes to what constitutes creating features. Feature engineering is considered more of an art than a science.

Scikit-learn for machine learning

Scikit-learn is a Python library designed for scientific computing and contains a number of state-of-the-art machine learning algorithms for classification, regression, clustering, dimensionality reduction, model selection, and preprocessing. Its name is derived from the SciPy Toolkit, which is an extension of the SciPy module. Comprehensive documentation on scikit-learn can be found at https://scikit-learn.org/stable/.

SciPy is a collection of Python modules for scientific computing, containing a number of core packages, such as NumPy, Matplotlib, IPython, and others.

In this chapter, we will be using scikit-learn's machine learning algorithms to predict securities movements. Scikit-learn require a working installation of NumPy and SciPy. Install scikit-learn with the pip package manager by using the following command:

pip install scikit-learnOR

conda install scikit-learnPredicting prices with a single-asset regression model使用单一资产回归模型预测价格

Pairs trading is a common statistical arbitrage trading strategy employed by traders using a pair of co-integrated协同整合 and highly positively correlated assets, though negatively correlated pairs can also be considered.

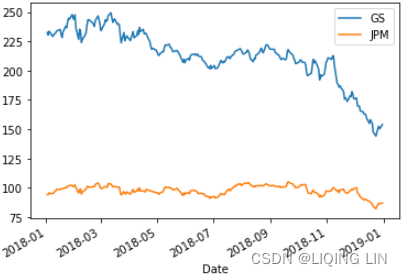

In this section, we will use machine learning to train regression-based models using the historical prices of a pair of securities that might be used in pairs trading. Given the current price of one security for a particular day, we predict the other security's price on a daily basis. The following examples uses the historical daily prices of Goldman Sachs (GS) and J.P. Morgan (JPM) traded on the New York Stock Exchange (NYSE). We will be predicting prices of JPM's stock price for the year 2018.

cointegration and correlation

to predict the prices of JPM using GS, with the assumption that the pair is cointegrated and highly correlated.

https://blog.csdn.net/Linli522362242/article/details/121721868

平稳性是很好用,但在现实中,绝大多数的股票都是非平稳的,那么我们是否还能够利用平稳性质进行获利呢?答案是肯定的,这时协整关系(cointegration)就出场了!如果两组序列是非平稳的,但它们的线性组合可以得到一个平稳序列,那么我们就说这两组时间序列数据具有协整的性质,我们同样可以把统计性质用到这个组合的序列上来。但是需要指出的一点,协整关系并不是相关关系(correlation)。

gs_jpm=df['Adj Close'].loc['2018-01-01':'2019-01-01']

gs_jpm

gs_jpm.plot()

plt.show()  两组序列是非平稳的

两组序列是非平稳的

cm = np.corrcoef( gs_jpm.values.T )

cm ![]() But, their daily prices are existing a correlation

But, their daily prices are existing a correlation

from statsmodels.tsa.stattools import coint

coin_t_statistic, pvalue, _ = coint( gs_jpm['GS'], gs_jpm['JPM'] )

pvalue![]() (the time series is not stationary) 显然,这两组数据都是非平稳的,因为均值随着时间的变化而变化。但这两组数据的daily change是具有协整关系(cointegration)的,因为他们的daily change 序列 是平稳的:

(the time series is not stationary) 显然,这两组数据都是非平稳的,因为均值随着时间的变化而变化。但这两组数据的daily change是具有协整关系(cointegration)的,因为他们的daily change 序列 是平稳的:

gs_jpm.pct_change().plot()

plt.axhline( ( gs_jpm['JPM'].pct_change()

#gs_jpm['GS'].pct_change()

).mean(),

color="b", linestyle="--"

)

plt.axhline( ( #gs_jpm['JPM'].pct_change()#-\

gs_jpm['GS'].pct_change()

).mean(),

color="k", linestyle="--"

)

# plt.axhline( ( gs_jpm['JPM'].pct_change()-\

# gs_jpm['GS'].pct_change()

# ).mean(),

# color="k", linestyle="-"

# )

plt.show()

# pct_change() : prices-prices.shift(1)/prices.shift(1)

plt.plot( gs_jpm['JPM'].pct_change()-\

gs_jpm['GS'].pct_change()

)

plt.axhline( ( gs_jpm['JPM'].pct_change()-\

gs_jpm['GS'].pct_change()

).mean(),

color="red", linestyle="--")

plt.xlabel("Time")

plt.ylabel("Price")

plt.legend(["JPM-GS", "Mean"])

plt.show()

上图中,可以看出蓝线 一直围绕均值波动。而均值不随时间变化(其实方差也不随时间变化)他们的daily change 差序列![]() 也是平稳的

也是平稳的

coin_t_statistic, pvalue, _ = coint( gs_jpm['GS'].pct_change().dropna(),

gs_jpm['JPM'].pct_change().dropna()

)

pvalue![]()

import numpy as np

cm = np.corrcoef( gs_jpm['GS'].pct_change().dropna(),

gs_jpm['JPM'].pct_change().dropna()

)

cm ![]() their daily change is highly correlated(so we can predict the prices of JPM using GS)

their daily change is highly correlated(so we can predict the prices of JPM using GS)

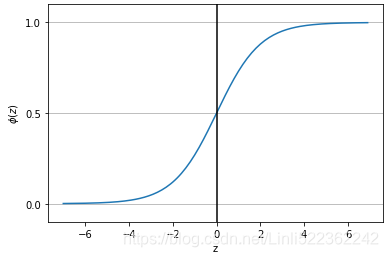

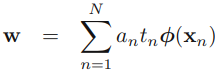

Linear regression by OLS

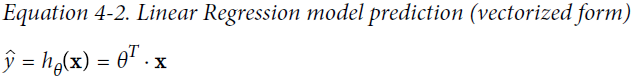

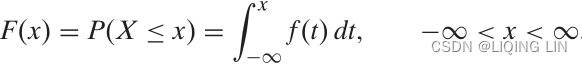

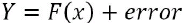

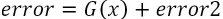

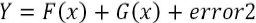

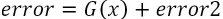

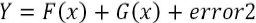

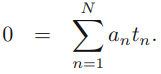

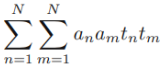

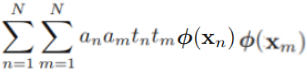

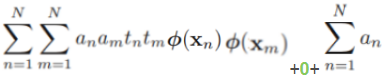

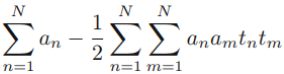

Let's begin our investigation of regression-based machine learning with a simple linear regression model. A straight line is in the following form: OR

OR

This attempts to fit the data by OLS:

is the coefficients(or the weight coefficients of the explanatory variable

) (coef_)

is the value of the y-intercept (intercept_) when

- x is a matrix containing all the features(excluding labels) of all instances in the dataset. There is one row per instance and the

row containing n features

is the predicted value from the straight line

- Our goal is to learn the weights of the linear equation to describe the relationship between the explanatory variable

and the target variable

, which can then be used to predict the responses

of new explanatory variables that were not part of the training dataset.

one feature

one feature two features

two features

The coefficients and intercept are determined by minimizing the cost function: =

![]() m: the number of instances

m: the number of instances

minimize the residual sum of squares between the observed targets in the dataset, and the targets predicted

(or

) by the linear approximation.

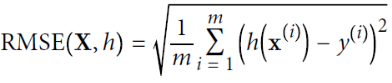

==>minimizes: MSE(Mean Square Error) cost function for a Linear Regression model

minimizes:![]()

==> minimizes RMSE(Root Mean Square Error)

minimizes:  ==> need to find the value of θ or

==> need to find the value of θ or that minimizes the RMSE

note: the RMSE is more sensitive to outliers than the MAE ( the sum of absolutes MAE(Mean Absolute Error, It is sometimes called the Manhattan norm) since the higher the norm index

( the sum of absolutes MAE(Mean Absolute Error, It is sometimes called the Manhattan norm) since the higher the norm index (the more it focuses on large values and neglects small ones). But when outliers are exponentially rare (like in a bell-shaped curve), the RMSE performs very well and is generally preferred.

y is the dataset of observed actual values(labels) used in performing a straight-line fit. In other words, we are performing a least sum of squared errors in finding the coefficients and

, from which we can predict the current period.

Before developing a model, let's download and prepare the required datasets.

Preparing the independent and target variables

Let's obtain the datasets of GS and JPM prices with the following code:

import yfinance as yf

df = yf.download( 'JPM GS')

dfGoldman Sachs (GS) and J.P. Morgan (JPM)

Let's prepare our independent variables with the following code:

import pandas as pd

df_x = pd.DataFrame({ 'GS': df['Adj Close']['GS'] }).dropna()

df_x

The adjusted closing prices of GS are extracted to a new DataFrame object, df_x. Next, obtain our target variables with the following code:

jpm_prices = df['Adj Close']['JPM']

jpm_prices

The adjusted closing prices of JPM are extracted to the jpm_prices variable as a pandas Series object. Having prepared our datasets for use in modeling, let's proceed to develop the linear regression model.

Writing the linear regression model

(使用1个特征值('GS')的连续多个之前日期的adjusted closing prices作为样本训练当前日期的model,使用该模型对当前的jpm closing price进行预测。每个日期都有一个model对该日期进行预测jpm closing price)

We will create a class for using a linear regression model to fit and predict values. This class also serves as a base class for implementing other models in this chapter. The following steps illustrates this process.

- 1. Declare a class named LinearRegressionModel as follows:

In the constructor of our new class, we declare a pandas DataFrame called df_result to store the actual and predicted values for plotting on a chart later on.

The get_model() method returns an instance of the LinearRegression class in the sklearn.linear_model module for fitting and predicting the data.

The set fit_intercept parameter is set to True as the data is not centered (around 0 on the x- and y-axes, that is).

fit_intercept bool, default=True

Whether to calculate the intercept for this model. If set to False, no intercept will be used in calculations (i.e. data is expected to be centered).

if set to True, we will append a feature column which is filled with 1s.

normalize bool, default=False

This parameter is ignored when fit_intercept is set to False. If True, the regressors X will be normalized before regression by subtracting the mean and dividing by the l2-norm. If you wish to standardize, please use StandardScaler before callingfiton an estimator withnormalize=False.

More information about the LinearRegression of scikit-learn can be found at https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LinearRegression.html

The get_prices_since() method slices a subset of the supplied dataset with the iloc command, from the given date index date_since and up to a number of earlier periods defined by the lookback value.from sklearn.linear_model import LinearRegression import numpy as np class LinearRegressionModel( object ): def __init__(self): self.df_result = pd.DataFrame( columns=['Actual', 'Predicted'] ) def get_model( self ): return LinearRegression( fit_intercept=False ) def get_prices_since( self, df, date_since, lookback ): index = df.index.get_loc( date_since ) return df.iloc[ index-lookback:index ] def learn( self, df, ys, start_date, end_date, lookback_period=20 ): model = self.get_model() df.sort_index( inplace=True ) for date in df[start_date:end_date].index: # Fit the model x = self.get_prices_since( df, date, lookback_period ) y = self.get_prices_since( ys, date, lookback_period ) model.fit( x.values, y.ravel() ) # Predict the current period x_current = df.loc[date].values # e.g. [232.54751587] [y_pred] = model.predict([x_current]) # [y_pred]=[93.2564989817077] # ==> y_pred = 93.2564989817077 # Store predictions new_index = pd.to_datetime( date, format='%Y-%m-%d' ) y_actual = ys.loc[date] self.df_result.loc[new_index] = [y_actual, y_pred] - 2. The learn() method serves as the entry point for running the model. It accepts the df_x and ys parameters as our independent and target variables, start_date and end_date as strings corresponding to the index of the dataset for the period we will be predicting, and the lookback_period parameter as the number of historical data points used for fitting the model in the current period.

The for loop simulates a backtest on a daily basis. The call to get_prices_since() fetches a subset of the dataset for fitting the model on the x- and y-axes with the fit() command. The ravel() command transforms the given pandas Series object into a flattened list of target values for fitting the model.

The x_current variable represents independent variable values for the specified date, fed into the predict() method. The predicted output is a list object, from which we extract the first value. Both the actual and predicted values are saved to the df_result DataFrame, indexed by the current date as a pandas object. - 3. Let's instantiate this class and run our machine learning model by issuing the following commands:

linear_reg_model = LinearRegressionModel() linear_reg_model.learn( df_x, jpm_prices, start_date='2018-01-01', end_date='2019-01-01', lookback_period=20 ) linear_reg_model.df_resultIn the learn() command, we provided our prepared datasets, df_x and jpm_prices , and specified the prediction for the year of 2018. For this example, we assumed there are 20 trading days in a month. Using a lookback_period value of 20 , we are using a past month's prices to fit our model for prediction daily.

- 4. Let's retrieve the resulting df_result DataFrame from the model and plot both the actual and predicted values:

%matplotlib inline import matplotlib.pyplot as plt linear_reg_model.df_result.plot( title='JPM prediction by OLS', style=['b-', 'g--'], figsize=(12,8), ) plt.show()In the style parameter, we specified that actual values are to be drawn as a solid line, and predicted values drawn as dotted lines. This gives us the following graph:

The chart shows our predicted results trailing closely behind the actual values up to a certain extent. How well does our model actually perform? In the next section, we will discuss several common risk metrics for measuring regression-based models.

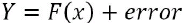

Risk metrics for measuring prediction performance

The sklearn.metrics module implements several regression metrics for measuring prediction performance. We will discuss the mean absolute error, the mean squared error, the explained variance score, and the score in subsequent sections.

Mean Absolute Error as a risk metric

The mean absolute error (MAE) is a risk metric that measures the average absolute prediction error and can be written as follows:  OR

OR

Here, y and are the actual and predicted lists of values, respectively, with the same length, m. and

are the predicted and

actual values, respectively, at the index i. Taking the absolute values of errors means that our output results in a positive decimal value. Low values of MAE are highly desired. A perfect score of 0 implies that our prediction powers are exactly aligned with actual values, since there are no differences between the two.

Obtain the MAE value of our predictions using the mean_abolute_error function of the sklearn.metrics module with the following code:

from sklearn.metrics import mean_absolute_error

actual = linear_reg_model.df_result['Actual']

predicted = linear_reg_model.df_result['Predicted']

mae = mean_absolute_error( actual, predicted )

print('Mean Absolute Error:', mae)![]()

![]()

The MAE of our linear regression model is 2.213.

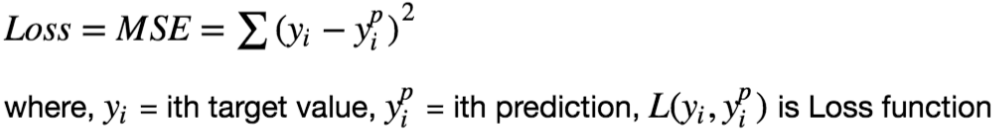

Mean Squared Error as a risk metric

Like the MAE, the mean squared error (MSE) is a risk metric that measures the average of the squares of the prediction errors and can be written as follows:

OR

![]()

Squaring the errors means that values of MSE are always positive, and low values of MSE are highly desired. A perfect MSE score of 0 implies that our prediction powers are exactly aligned with actual values, and that the squares of such differences are negligible. While the application of both the MSE and MAE helps determine the strength of our model's predictive powers, MSE triumphs/ˈtraɪʌmfs/ over击败,得胜,成功 MAE by penalizing errors that are farther away from the mean(MSE通过惩罚远离均值的错误来战胜MAE). Squaring the errors places a heavier bias on the risk metrics(对误差进行平方会使风险指标产生更大的偏差).

Obtain the MSE value of our predictions using the mean_squared_error function of the sklearn. metrics module with the following code:

from sklearn.metrics import mean_squared_error

mse = mean_squared_error( actual, predicted )

print( 'Mean Squared Error:',mse )![]()

![]()

The MSE of our linear regression model is 9.854 .

Explained variance score as a risk metric

The explained variance score explains the dispersion of errors of a given dataset解释给定数据集的误差分布, and the formula is written as follows:

Here, and

is the variance of prediction errors and actual values respectively. Scores close to 1.0 are highly desired, indicating better squares of standard deviations of errors.

Obtain the explained variance score of our predictions using the explained_variance_score function of the sklearn.metrics module with the following code:

from sklearn.metrics import explained_variance_score

eva = explained_variance_score( actual, predicted )

print( "Explained Variance Score:", eva )![]()

The explained variance score of our linear regression model is 0.533.

R^2 as a risk metric

as a risk metric

The score is also known as the coefficient of determination确定系数, and it measures how well future samples are likely to be predicted by the model. It is written as follows:

OR ![]() OR

OR ![]()

Here, SSE is the sum of squared errors(OR the sum of squared of residuals)

and SST is the total sum of squares: In other words, SST is simply the variance of the response.

In other words, SST is simply the variance of the response.

Here, is the mean of actual values and can be written as follows:

Let's quickly show that ![]() is indeed just a rescaled version of the MSE:

is indeed just a rescaled version of the MSE:

scores ranges from negative values to 1.0.

- A perfect

score of 1 implies that there is no error in the regression analysis(the model fits the data perfectly with a corresponding MSE = 0(since Var(y)>0)),

the closer the value to 1, the better the fit

value to 1, the better the fit - while a score of 0 indicates that the model always predicts the mean of target values.

the closer the value to 0, the worse the fit - A

score indicates that the prediction performs below average.

Negative values mean that the model fits worse than the baseline model. Models with negative values usually indicate issues in the training data or process and cannot be used.

Obtain thescore of our predictions using the r2_score function of the sklearn.metrics module with the following code:

from sklearn.metrics import r2_score

r2 = r2_score( actual, predicted )

print( 'r^2 score:', r2 ) ![]() OR

OR

The of our linear regression model is 0.416. This implies that 41.6% of the variability of the target variables have been accounted for.

Coefficient of determination, in statistics, ![]() (or R2), a measure that assesses the ability of a model to predict or explain an outcome in the linear regression setting. More specifically,

(or R2), a measure that assesses the ability of a model to predict or explain an outcome in the linear regression setting. More specifically, ![]() indicates the proportion of the variance方差 in the dependent variable 因变量(Y) that is predicted or explained by linear regression and the predictor variable (X, also known as the independent variable自变量).

indicates the proportion of the variance方差 in the dependent variable 因变量(Y) that is predicted or explained by linear regression and the predictor variable (X, also known as the independent variable自变量).

The coefficient of determination![]() shows only association. As with linear regression, it is impossible to use to determine whether one variable causes the other. In addition, the coefficient of determination shows only the magnitude of the association, not whether that association is statistically significant.

shows only association. As with linear regression, it is impossible to use to determine whether one variable causes the other. In addition, the coefficient of determination shows only the magnitude of the association, not whether that association is statistically significant.

In general, a high R2 value indicates that the model is a good fit for the data, although interpretations of fit depend on the context of analysis. An R2 of 0.35, for example, indicates that 35 percent of the variation变动,差异 in the outcome has been explained just by predicting the outcome using the covariates(协变量,  ) included in the model表明仅通过使用模型中包含的协变量, 预测结果即可解释结果差异的35%. That percentage might be a very high portion of variation to predict in a field such as the social sciences; in other fields, such as the physical sciences, one would expect R2 to be much closer to 100 percent. The theoretical minimum R2 is 0. However, since linear regression is based on the best possible fit, R2 will always be greater than zero, even when the predictor(X) and outcome variables(Y) bear no relationship to one another.

) included in the model表明仅通过使用模型中包含的协变量, 预测结果即可解释结果差异的35%. That percentage might be a very high portion of variation to predict in a field such as the social sciences; in other fields, such as the physical sciences, one would expect R2 to be much closer to 100 percent. The theoretical minimum R2 is 0. However, since linear regression is based on the best possible fit, R2 will always be greater than zero, even when the predictor(X) and outcome variables(Y) bear no relationship to one another.

R2 increases when a new predictor variable is added to the model, even if the new predictor is not associated with the outcome

increases when a new predictor variable is added to the model, even if the new predictor is not associated with the outcome . To account for that effect, the adjusted R2 (typically denoted with a bar over the R in R2) incorporates the same information as the usual but then also penalizes for the number(k) of predictor variables included in the model. As a result, R2 increases as new predictors are added to a multiple linear regression model, but the adjusted R2 increases only if the increase in R2 is greater than one would expect from chance alone

. To account for that effect, the adjusted R2 (typically denoted with a bar over the R in R2) incorporates the same information as the usual but then also penalizes for the number(k) of predictor variables included in the model. As a result, R2 increases as new predictors are added to a multiple linear regression model, but the adjusted R2 increases only if the increase in R2 is greater than one would expect from chance alone 仅当新项对模型的改进超出偶然的预期时,the adjusted R2 才会增加. It decreases when a predictor improves the model(decreases MSE or

仅当新项对模型的改进超出偶然的预期时,the adjusted R2 才会增加. It decreases when a predictor improves the model(decreases MSE or ) by less than expected by chance.

In such a model, the adjusted R2 is the most realistic estimate of the proportion of the variation that is predicted by the covariates included in the model.

In such a model, the adjusted R2 is the most realistic estimate of the proportion of the variation that is predicted by the covariates included in the model.

# lookback_period = 20

print( 'Adjusted r^2 score:',

1 - (mse/ np.std(actual)**2)*(len(actual)-1)/(len(actual)-20-1) )

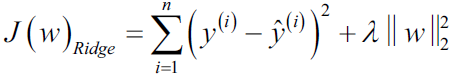

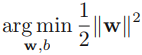

Ridge regression

regularization(e.g. L2 shrinkage or weight decay ) is one approach to tackling the problem of overfitting by adding additional information, and thereby shrinking the parameter values of the model to induce a penalty against complexity. The most popular approaches to regularized linear regression are the so-called Ridge Regression, least absolute shrinkage and selection operator (LASSO), and elastic Net.

) is one approach to tackling the problem of overfitting by adding additional information, and thereby shrinking the parameter values of the model to induce a penalty against complexity. The most popular approaches to regularized linear regression are the so-called Ridge Regression, least absolute shrinkage and selection operator (LASSO), and elastic Net.

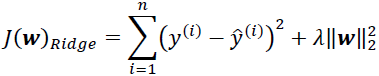

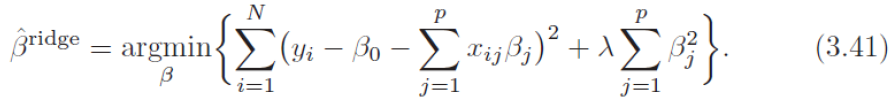

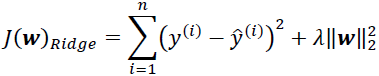

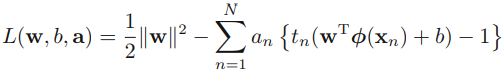

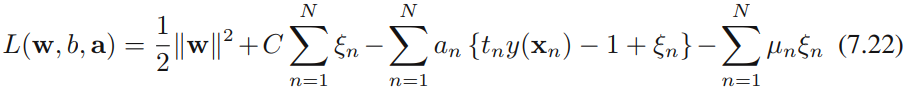

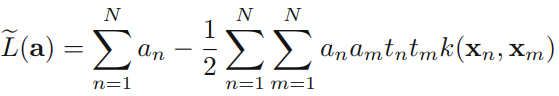

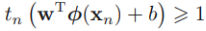

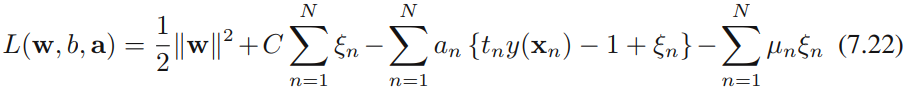

The ridge regression, or L2 regularization(ℓ2 norm), addresses some of the problems of OLS regression by imposing a penalty on the size of the coefficients. Ridge Regression is an L2 penalized model where we simply add a regularization term to our least-squares cost function. This forces the learning algorithm to not only fit the data but also keep the model weights as small as possible. Note that the regularization term should only be added to the cost function during training. Once the model is trained, you want to evaluate the model’s performance using the unregularized performance measure: OR

OR![]()

OR

OR

Here, the α or 𝜆 parameter is expected to be a positive value that controls the amount of shrinkage. Larger values of alpha give greater shrinkage, making the coefficients more robust to collinearity.

n instances ==>

n instances ==>

m instances

Ridge gradient vector :

The penalty term ![]() without using the term

without using the term ![]() for convenient computation

for convenient computation

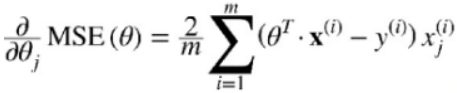

For Gradient Descent, just add 2αw to the MSE gradient vector (Equation 4-6, n features, m instances).

+αw (or +α

+αw (or +α![]() )

)![]() αw

αw

![]() Note: here

Note: here ![]() ==

==![]()

By increasing the value of hyperparameter α or 𝜆 , we increase the regularization strength and thereby shrink the weights of our model. Please note that we don't regularize the intercept term, or

. The hyperparameter 𝜆 controls how much you want to regularize the model. If 𝜆= 0 then Ridge Regression is just Linear Regression. If 𝜆 is very large, then all weights end up very close to zero

and the result is a flat line going through the data’s mean.https://scikit-learn.org/stable/auto_examples/linear_model/plot_ridge_path.html

and the result is a flat line going through the data’s mean.https://scikit-learn.org/stable/auto_examples/linear_model/plot_ridge_path.html

import numpy as np

import matplotlib.pyplot as plt

from sklearn import linear_model

# X is the 10x10 Hilbert matrix

X = 1.0 / (np.arange(1, 11) + np.arange(0, 10)[:, np.newaxis])

y = np.ones(10)

n_alphas = 200

alphas = np.logspace(-10, -2, n_alphas)

coefs = []

for a in alphas:

ridge = linear_model.Ridge(alpha=a, fit_intercept=False)

ridge.fit(X, y)

coefs.append(ridge.coef_)

ax = plt.gca()

ax.plot(alphas, coefs)

ax.set_xscale("log")

ax.set_xlim(ax.get_xlim()[::-1]) # reverse axis

plt.xlabel("alpha")

plt.ylabel("weights")

plt.title("Ridge coefficients as a function of the regularization")

plt.axis("tight")

plt.show()

将 (3.41) 的准则写成矩阵形式 ![]()

可以简单地看出岭回归的解为 ![]() (本质在自变量信息矩阵的主对角线元素上人为地加入一个非负因子

(本质在自变量信息矩阵的主对角线元素上人为地加入一个非负因子![]() (Ridge Regression closed-form solution)

(Ridge Regression closed-form solution)

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.preprocessing import PolynomialFeatures

np.random.seed(42)

m=20 #number of instances

X= 3*np.random.rand(m,1) #one feature #X=range(0,3)

#noise

y=1 + 0.5*X + np.random.randn(m,1)/1.5

X_new = np.linspace(0,3, 100).reshape(100,1)

from sklearn.linear_model import Ridge

def plot_model(model_class, polynomial, alphas, **model_kargs):

for alpha, style in zip( alphas, ("b-", "y--", "r:") ):

model = model_class(alpha, **model_kargs) if alpha>0 else LinearRegression()

if polynomial:

model = Pipeline([

("poly_features", PolynomialFeatures(degree=10, include_bias=False)),

("std_scaler", StandardScaler()),

("regul_reg", model) #regulized regression

])

model.fit(X,y)

y_new_regul = model.predict(X_new)

lw = 5 if alpha>0 else 1

plt.plot(X_new, y_new_regul, style, linewidth=lw, label=r"$\alpha = {}$".format(alpha) )

plt.plot(X,y, "b.", linewidth=3)

plt.legend(loc="upper left", fontsize=15)

plt.xlabel("$x_1$", fontsize=18)

plt.axis([0,3, 0,4])

plt.figure(figsize=(8,4) )

plt.subplot(121)

plot_model(Ridge, polynomial=False, alphas=(0,10,100), random_state=42)#plain Ridge models are used, leading to

plt.ylabel("$y$", rotation=0, fontsize=18)

plt.subplot(122)

plot_model(Ridge, polynomial=True, alphas=(0,10**-5, 1), random_state=42)

plt.title("Figure 4-17. Ridge Regression")

plt.show()

If α =0 or 𝜆=0 then Ridge Regression is just Linear Regression.

If α is very large, then all weights end up very close to zero and the result is a flat line going through the data’s mean

Figure 4-17 shows several Ridge models trained on some linear data using different α value. On the left, plain Ridge models are used, leading to linear predictions. On the right, the data is first expanded using PolynomialFeatures(degree=10), then it is scaled using a StandardScaler, and finally the Ridge models are applied to the resulting features: this is Polynomial Regression with Ridge regularization. Note how increasing α leads to flatter (i.e., less extreme, more reasonable) predictions; this reduces the model’s variance but increases its bias.

The Ridge class of the sklearn.linear_model module implements ridge regression. To implement this model, create a class named RidgeRegressionModel that extends the LinearRegressionModel class, and run the following code:

In the new class, the get_model() method is overridden to return the ridge regression model of scikit-learn while reusing the other methods in the parent class. The alpha value is set to 0.5, and the rest of the model parameters are left as defaults. The ridge_reg_model variable represents an instance of our ridge regression model, and the learn() command is run with the usual parameters values.

from sklearn.linear_model import Ridge

class RidgeRegressionModel(LinearRegressionModel):

def get_model(self):

return Ridge( alpha=.5 )

ridge_reg_model = RidgeRegressionModel()

ridge_reg_model.learn( df_x, jpm_prices,

start_date='2018', end_date='2019',

lookback_period=20

)

%matplotlib inline

import matplotlib.pyplot as plt

ridge_reg_model.df_result.plot( title='JPM prediction by OLS',

style=['b-', 'g--'],

figsize=(12,8),

)

plt.show()

ridge_reg_model.df_result

Create a function called print_regression_metrics() to print the various risk metrics covered earlier:

from sklearn.metrics import ( mean_absolute_error,

mean_squared_error,

explained_variance_score,

r2_score

)

def print_regression_metrics( df_result ):

# convert the pandas.core.series.Series object to a list object

actual = list( df_result['Actual'] )

predicted = list( df_result['Predicted'] )

print( 'Mean Absolute Error:',

mean_absolute_error(actual, predicted)

)

print( 'mean_squared_error:',

mean_squared_error(actual, predicted)

)

print( 'explained_variance_score:',

explained_variance_score(actual, predicted)

)

print( 'r2_score:',

r2_score(actual, predicted)

)

print_regression_metrics( ridge_reg_model. df_result )

Both Mean Error scores(MAE and MSE) of the ridge regression model are lower than the linear regression model and are closer to zero. The explained variance score and the score are higher than the linear regression model and are closer to 1. This indicates that our ridge regression model is doing a better job of prediction than the linear regression model. Besides having better performance, ridge regression computations are less costly than the original linear regression model.

Selecting meaningful features

If we notice that a model performs much better on a training dataset than on the test dataset, this observation is a strong indicator of overfitting. overfitting means the model fits the parameters too closely with regard to the particular observations in the training dataset, but does not generalize well to new data, and we say the model has a high variance. The reason for the overfitting is that our model is too complex for the given training data. Common solutions to reduce the generalization error are listed as follows:

- Collect more training data

- Introduce a penalty for complexity via regularization

- Choose a simpler model with fewer parameters

- Reduce the dimensionality of the data

L1 and L2 regularization as penalties against model complexity

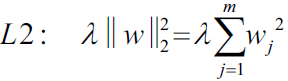

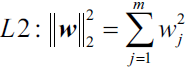

We recall from cp3 A Tour of ML Classifiers_stratify_bincount_likelihood_logistic regression_odds ratio_decay_L2 scikitlearnhttps://blog.csdn.net/Linli522362242/article/details/96480059, that L2 regularization is one approach to reduce the complexity of a model by penalizing large individual weights, where we defined the L2 norm of our weight vector w as follows:

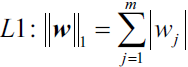

Another approach to reduce the model complexity is the related L1 regularization:

Here, we simply replaced the square of the weights by the sum of the absolute values of the weights. In contrast to L2 regularization, L1 regularization usually yields sparse[spɑrs] feature vectors; most feature weights will be zero. Sparsity['spɑ:sɪtɪ] can be useful in practice if we have a high-dimensional dataset with many features that are irrelevant[ɪˈreləvənt] especially cases where we have more irrelevant dimensions than samples. In this sense, L1 regularization can be understood as a technique for feature selection.

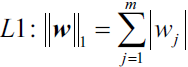

A geometric interpretation of L2 regularization and L1 regularization

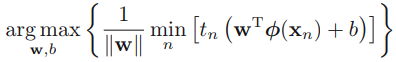

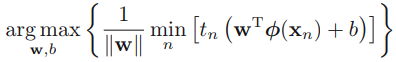

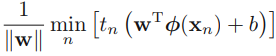

As mentioned in the previous section, L2 regularization adds a penalty term to the cost function that effectively results in less extreme weight values compared to a model trained with an unregularized cost function. To better understand how L1 regularization encourages sparsity, let's take a step back and take a look at a geometric interpretation of regularization. Let us plot the contours of a convex cost function for two weight coefficients  and

and  . Here, we will consider the Sum of Squared Errors (SSE) cost function that we used for Adaline in https://blog.csdn.net/Linli522362242/article/details/96429442, Training Simple Machine Learning Algorithms for Classification, since it is spherical [ˈsfɪrɪkəl, ˈsfɛr-] and easier to draw than the cost function of logistic regression; however, the same concepts apply to the latter. Remember that our goal is to find the combination of weight coefficients that minimize the cost function for the training data, as shown in the following figure (the point in the center of the ellipses):

. Here, we will consider the Sum of Squared Errors (SSE) cost function that we used for Adaline in https://blog.csdn.net/Linli522362242/article/details/96429442, Training Simple Machine Learning Algorithms for Classification, since it is spherical [ˈsfɪrɪkəl, ˈsfɛr-] and easier to draw than the cost function of logistic regression; however, the same concepts apply to the latter. Remember that our goal is to find the combination of weight coefficients that minimize the cost function for the training data, as shown in the following figure (the point in the center of the ellipses):

Now, we can think of regularization as adding a penalty term to the cost function to encourage smaller weights; or, in other words, we penalize large weights.

Thus, by increasing the regularization strength via the regularization parameter , we shrink the weights towards zero and decrease the dependence of our model on the training data. Let's illustrate this concept in the following figure for the L2 penalty term.

, we shrink the weights towards zero and decrease the dependence of our model on the training data. Let's illustrate this concept in the following figure for the L2 penalty term.

04_TrainingModels_02_regularization_L2_cost_Ridge_Lasso_Elastic Net_Early Stopping: https://blog.csdn.net/Linli522362242/article/details/104070847

The quadratic L2 regularization term is represented by the shaded ball. Here, our weight coefficients cannot exceed our regularization budget—the combination of the weight coefficients###W=w1, w2, w3...wn### cannot fall outside the shaded area. On the other hand, we still want to minimize the cost function(such as![]() The term

The term is just added for our convenience https://blog.csdn.net/Linli522362242/article/details/96480059). Under the penalty constraint, our best effort is to choose the point where the L2 ball intersects with the contours of the unpenalized cost function. The larger the value of the regularization parameter

is just added for our convenience https://blog.csdn.net/Linli522362242/article/details/96480059). Under the penalty constraint, our best effort is to choose the point where the L2 ball intersects with the contours of the unpenalized cost function. The larger the value of the regularization parameter  gets, the faster the penalized cost function grows, which leads to a narrower L2 ball. For example, if we increase the regularization parameter

gets, the faster the penalized cost function grows, which leads to a narrower L2 ball. For example, if we increase the regularization parameter towards infinity, the weight coefficients will become effectively zero, denoted by the center of the L2 ball. To summarize the main message of the example: our goal is to minimize the sum of the unpenalized cost function

towards infinity, the weight coefficients will become effectively zero, denoted by the center of the L2 ball. To summarize the main message of the example: our goal is to minimize the sum of the unpenalized cost function plus the penalty term

plus the penalty term, which can be understood as adding bias and preferring a simpler model to reduce the variance(try to underfit) in the absence of sufficient training data to fit the model.

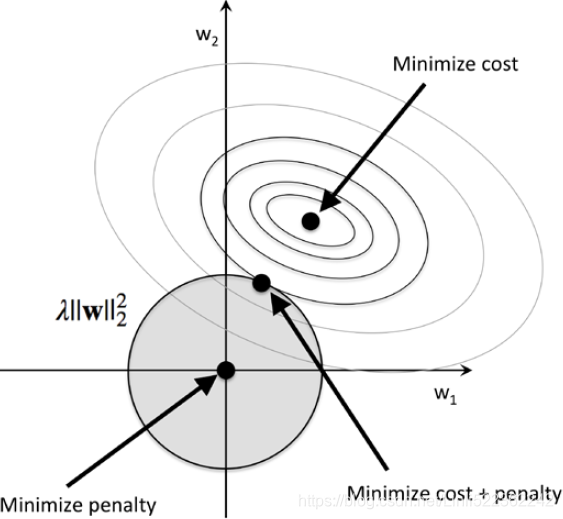

Now let's discuss L1 regularization and sparsity. The main concept behind L1 regularization is similar to what we have discussed here. However, since the L1 penalty is the sum of the absolute weight coefficients (remember that the L2 term is quadratic), we can represent it as a diamond shape budget, as shown in the following figure:

and sparsity. The main concept behind L1 regularization is similar to what we have discussed here. However, since the L1 penalty is the sum of the absolute weight coefficients (remember that the L2 term is quadratic), we can represent it as a diamond shape budget, as shown in the following figure:

In the preceding figure, we can see that the contour of the cost function touches the L1 diamond at  . Since the contours of an L1 regularized system are sharp, it is more likely that the optimum—that is, the intersection between the ellipses of the cost function and the boundary of the L1 diamond—is located on the axes, which encourages sparsity. The mathematical details of why L1 regularization can lead to sparse solutions are beyond the scope of this book. If you are interested, an excellent section on L2 versus L1 regularization can be found in section 3.4 of The Elements of Statistical Learning, Trevor Hastie, Robert Tibshirani, and Jerome Friedman, Springer.

. Since the contours of an L1 regularized system are sharp, it is more likely that the optimum—that is, the intersection between the ellipses of the cost function and the boundary of the L1 diamond—is located on the axes, which encourages sparsity. The mathematical details of why L1 regularization can lead to sparse solutions are beyond the scope of this book. If you are interested, an excellent section on L2 versus L1 regularization can be found in section 3.4 of The Elements of Statistical Learning, Trevor Hastie, Robert Tibshirani, and Jerome Friedman, Springer.

Other regression models

The sklearn.linear_model module contains various regression models that we can consider implementing in our model. The remaining sections briefly describe them. A full list of linear models is available at 1.1. Linear Models — scikit-learn 1.1.2 documentation

Lasso regression

Similar to ridge regression, Least Absolute Shrinkage and Selection Operator (LASSO) regression is also another form of regularization that involves penalizing the sum of absolute values of regression coefficients. It uses the L1 regularization(ℓ1 norm) technique. The cost function for the LASSO regression can be written as follows: OR

OR

Like ridge regression, the alpha parameter α controls the strength of the penalty. However, for geometric reasons, LASSO regression produces different results than ridge regression since it forces a majority of the coefficients to be set to zero. It is better suited for estimating sparse coefficients and models with fewer parameter values.

Figure 4-18 shows the same thing as Figure 4-17 but replaces Ridge models with Lasso models and uses smaller α values.

from sklearn.linear_model import Lasso

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.preprocessing import PolynomialFeatures

np.random.seed(42)

m=20 #number of instances

X= 3*np.random.rand(m,1) #one feature #X=range(0,3)

#noise

y=1 + 0.5*X + np.random.randn(m,1)/1.5

X_new = np.linspace(0,3, 100).reshape(100,1)

from sklearn.linear_model import Ridge

def plot_model(model_class, polynomial, alphas, **model_kargs):

for (alpha, style) in zip( alphas, ("b-", "y--", "r:") ):

model = model_class(alpha, **model_kargs) if alpha>0 else LinearRegression()

if polynomial:

model = Pipeline([

("poly_features", PolynomialFeatures(degree=10, include_bias=False)),

("std_scaler", StandardScaler()),

("regul_reg", model) #regulized regression

])

model.fit(X,y)

y_new_regul = model.predict(X_new)

lw = 5 if alpha>0 else 1

plt.plot(X_new, y_new_regul, style, linewidth=lw, label=r"$\alpha = {}$".format(alpha) )

plt.plot(X,y, "b.", linewidth=3)

plt.legend(loc="upper left", fontsize=15)

plt.xlabel("$x_1$", fontsize=18)

plt.axis([0,3, 0,4])

plt.figure(figsize=(8,4))

plt.subplot(121)

plot_model(Lasso, polynomial=False, alphas=(0, 0.1, 1), random_state=42)

plt.ylabel("$y$", rotation=0, fontsize=18)

plt.subplot(122)

plot_model(Lasso, polynomial=True, alphas=(0, 10**-7, 1), random_state=42)

plt.title("Figure 4-18. Lasso Regression")

plt.show()

An important characteristic of Lasso Regression is that it tends to completely eliminate the weights of the least important features最不重要 (i.e., set them to zero). For example, the dashed line in the right plot on Figure 4-18 (with α = ) looks quadratic, almost linear(compared to Figure 4-17 ):since all the weights for the high-degree polynomial features are equal to zero. In other words, Lasso Regression automatically performs feature selection and outputs a sparse model (i.e., with few nonzero feature weights).

==>

==>

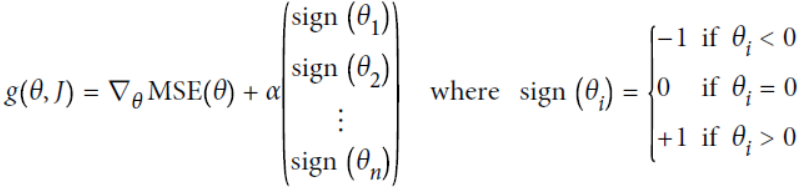

Lasso gradient vector:

![]() Note: here

Note: here ![]() ==

==![]()

from sklearn.linear_model import Lasso

class LassoRegressionModel(LinearRegressionModel):

def get_model(self):

return Lasso( alpha=.5 )

lasso_reg_model = LassoRegressionModel()

lasso_reg_model.learn( df_x, jpm_prices,

start_date='2018', end_date='2019',

lookback_period=20

)

%matplotlib inline

import matplotlib.pyplot as plt

lasso_reg_model.df_result.plot( title='JPM prediction by OLS',

style=['b-', 'g--'],

figsize=(12,8),

)

plt.show()

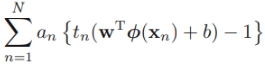

Elastic net

Elastic net is another regularized regression method that combines the L1 and L2 penalties of the LASSO and ridge regression methods. The cost function for elastic net can be written as follows: https://blog.csdn.net/Linli522362242/article/details/104070847

OR Note: ![]() is for convenient computation

is for convenient computation

The alpha values are explained here:

Here, alpha and l1_ratio are parameters of the ElasticNet function.

- When alpha is 0, the cost function is equivalent to an OLS( or

).

- When l1_ratio is 0, the penalty is a ridge or L2 penalty.

- When l1_ratio is 1, the penalty is a LASSO or L1 penalty.

- When l1_ratio is between 0 and 1, the penalty is a combination of L1 and L2.

So when should you use plain Linear Regression (i.e., without any regularization), Ridge, Lasso, or Elastic Net? It is almost always preferable to have at least a little bit of regularization, so generally you should avoid plain Linear Regression. Ridge is a good default, but if you suspect怀疑 that only a few features are actually useful, you should prefer Lasso or Elastic Net since they tend to reduce the useless features’ weights down to zero as we have discussed. In general, Elastic Net is preferred over Lasso since Lasso may behave erratically不规律地 when the number of features is greater than the number of training instances or when several features are strongly correlated.

from sklearn.linear_model import ElasticNet

class ElasticNetRegressionModel(LinearRegressionModel):

def get_model(self):

return ElasticNet( alpha=.5 )

elasticNet_reg_model = ElasticNetRegressionModel()

elasticNet_reg_model.learn( df_x, jpm_prices,

start_date='2018', end_date='2019',

lookback_period=20

)

%matplotlib inline

import matplotlib.pyplot as plt

elasticNet_reg_model.df_result.plot( title='JPM prediction by OLS',

style=['b-', 'g--'],

figsize=(12,8),

)

plt.show()

The ElasticNet class of sklearn.linear_model implements elastic net regression.

Conclusion

We used a single-asset, trend-following momentum strategy

(Momentum, also referred to as MOM, is an important measure of speed and magnitude of price moves.https://blog.csdn.net/Linli522362242/article/details/126443770

trend-following:https://blog.csdn.net/Linli522362242/article/details/121896073

https://blog.csdn.net/Linli522362242/article/details/126353102

) by regression to predict the prices of JPM(J.P. Morgan) using GS(Goldman Sachs (GS)), with the assumption that the pair is cointegrated and highly correlated. We can also consider cross-asset momentum to obtain better results from diversification. The next section explores multi-asset regression for predicting security returns.

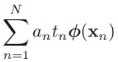

Predicting returns with a cross-asset momentum model

In this section, we will create a cross-asset momentum model by having the prices of four diversified assets predict the returns of JPM on a daily basis for the year of 2018. The prior 1-month, 3-month, 6-month, and 1-year of lagged returns of the S&P 500 stock index, 10-year treasury bond index, US dollar index, and gold prices will be used for fitting our model. This gives us a total of 16 features. Let's begin by preparing our datasets for developing our models.

(使用4个tickers形成的16个特征值(df_lagged)的 连续多个之前日期的adjusted closing prices作为样本训练当前日期的model,使用该模型对当前的jpm closing price进行预测。每个日期都有一个model对该日期进行预测jpm closing price)

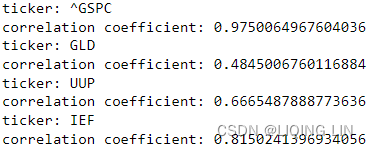

Preparing the independent variables

The ticker symbol for the S&P 500 stock index is SPX. We will use the SPDR Gold Trust (ticker symbol: GLD) to denote a share of the gold bullion/ˈbʊliən/金银 as a proxy for gold prices. The Invesco DB US Dollar Index Bullish/ˈbʊlɪʃ/看涨的 Fund (ticker symbol: UUP) will proxy the US dollar index. The iShares 7-10 Year Treasury Bond ETF (ticker symbol: IEF) will proxy the 10-year Treasury Bond Index. Run the following code to download our datasets:

# ^GSPC : SPX

# https://blog.csdn.net/Linli522362242/article/details/126269188

symbol_list = ['^GSPC','GLD','UUP','IEF']

symbols = ' '.join(symbol_list)

symbols![]()

import yfinance as yf

df = yf.download( symbols)

df

Combine the adjusted closing prices into a single pandas DataFrame named df_assets with the following codes and remove empty values with the dropna() command:

df_assets=df['Adj Close'].dropna()

df_assets

from statsmodels.tsa.stattools import coint

for tick in symbol_list:

x = df_assets[tick].loc['2008-02-29':].dropna()

y = jpm_prices.loc['2008-02-29':].dropna().values

cm = np.corrcoef( x,

y

)

print('ticker:', tick)

print('correlation coefficient:', cm[0,1]) Proved correlation!

Proved correlation!

Calculate the lagged percentage returns of our df_assets dataset with the following code:

On average, the number of trading days in a month is 21 days for the U.S market, but the number varies from month to month. For example, in 2021, January and February have 19 trading days, while March has 23 trading days, which is the most for any month.

On average, the number of trading days in a year is 252 days, which translates to 21 trading days on average each month and 63 trading days per quarter.

https://therobusttrader.com/how-many-trading-days-in-a-year/#:~:text=On%20average%2C%20the%20number%20of,63%20trading%20days%20per%20quarter.

# df_assets.columns : Index(['GLD', 'IEF', 'UUP', '^GSPC'], dtype='object')

# pct_change() : prices-prices.shift(1)/prices.shift(1)

# pct_change() : the percentage change over the prior period values

df_assets_1m = df_assets.pct_change( periods=21 )

df_assets_1m.columns = [ '%s_1m' % col

for col in df_assets.columns

]

df_assets_3m = df_assets.pct_change( periods=63 )

df_assets_3m.columns = [ '%s_3m' % col

for col in df_assets.columns

]

df_assets_6m = df_assets.pct_change( periods=126 )

df_assets_6m.columns = [ '%s_6m' % col

for col in df_assets.columns

]

df_assets_12m = df_assets.pct_change( periods=252 )

df_assets_12m.columns = [ '%s_12m' % col

for col in df_assets.columns

]

df_assets_1m  Time series objects cannot be directly normalized(Otherwise, trend changes cannot be captured), here we have done a certain degree of normalization using pct_change()

Time series objects cannot be directly normalized(Otherwise, trend changes cannot be captured), here we have done a certain degree of normalization using pct_change()

In the pct_change() command, the periods parameter specifies the number of periods to shift. We assumed 21 trading days in a month when calculating the lagged returns. Combine the four pandas DataFrame objects into a single DataFrame with the join() command:

df_lagged = df_assets_1m.join( df_assets_3m )\

.join( df_assets_6m )\

.join( df_assets_12m ).dropna()

df_lagged

Use the info() command to view its properties:

df_lagged.info()

The output is truncated, but you can see 16 features as our independent variables spanning the years 2008 to 2022. Let's continue to obtain the dataset for our target variables.

Preparing the target variables

The closing prices of JPM having been downloaded to the pandas Series object jpm_prices earlier, simply calculate the actual percentage returns with the following code:

jpm_prices = yf.download( 'JPM' )['Adj Close']

jpm_prices

y = jpm_prices.pct_change().loc['2008-02-29':]

y

We obtain a pandas Series object as our target variable y.

A multi-asset linear regression model

In the previous section, we used a single asset with the prices of GS for fitting our linear regression model. This same model, LinearRegressionModel, accommodates multiple assets(one instace martrix: 16 features x lookback_period ==> W [lookback_period x 16 features] in linear model). Run the following commands to create an instance of this model and use our new datasets:

# from sklearn.linear_model import LinearRegression

# class LinearRegressionModel(object):

# def __init__(self):

# self.df_result = pd.DataFrame(columns=['Actual', 'Predicted'])

# def get_model(self):

# return LinearRegression(fit_intercept=False)

# def learn(self, df, ys, start_date, end_date, lookback_period=20):

# model = self.get_model()

# df.sort_index(inplace=True)

# for date in df[start_date:end_date].index:

# # Fit the model

# x = self.get_prices_since(df, date, lookback_period)

# y = self.get_prices_since(ys, date, lookback_period)

# model.fit(x.values, y.ravel())

# # Predict the current period

# x_current = df.loc[date].values

# [y_pred] = model.predict([x_current])

# # Store predictions

# new_index = pd.to_datetime(date, format='%Y-%m-%d')

# y_actual = ys.loc[date]

# self.df_result.loc[new_index] = [y_actual, y_pred]

# def get_prices_since(self, df, date_since, lookback):

# index = df.index.get_loc(date_since)

# return df.iloc[index-lookback:index]

multi_linear_model = LinearRegressionModel()

multi_linear_model.learn( df_lagged, y,

start_date='2018',

end_date='2019',

lookback_period=10

)

multi_linear_model.df_result

In the linear regression model instance, multi_linear_model , the learn() command is supplied with the df_lagged dataset with 16 features and y as the percentage changes of JPM. The lookback_period value is reduced in consideration of the limited lagged returns data available. Let's plot the actual versus predicted percentage changes of JPM:

multi_linear_model.df_result.plot( title='JPM actual versus predicted percentage returns',

style=['-', '--'],

figsize=(12,8)

)

plt.show()This would give us the following graph in which the solid lines show the actual percentage returns of JPM, while the dotted lines show the predicted percentage returns:

(此外也 Proved there is a cointegration in daily price change : 一直围绕均值0波动。而均值不随时间变化; 如果两组序列是非平稳的,但它们的线性组合可以得到一个平稳序列,那么我们就说这两组时间序列数据具有协整的性质,我们同样可以把统计性质用到这个组合的序列上来)

(此外也 Proved there is a cointegration in daily price change : 一直围绕均值0波动。而均值不随时间变化; 如果两组序列是非平稳的,但它们的线性组合可以得到一个平稳序列,那么我们就说这两组时间序列数据具有协整的性质,我们同样可以把统计性质用到这个组合的序列上来)

How well did our model perform? Let's run the same performance metrics in the print_regression_metrics() function defined in the previous section:

print_regression_metrics( multi_linear_model.df_result )

The explained variance score and scores are in the negative range, suggesting that the model performs below average. Can we perform better? Let's explore more complex tree models used in regression.

-

A

score indicates that the prediction performs below average.

Negative values mean that the model fits worse than the baseline model. Models with negative values usually indicate issues in the training data or process and cannot be used.

But the performance issue here is not because of the preprocessing of the training data, but because we use a linear model

########## Proved : Don't do itfrom sklearn.preprocessing import MinMaxScaler mms = MinMaxScaler() df_lagged_mms = pd.DataFrame( mms.fit_transform( df_lagged ) ) df_lagged_mms.columns = df_lagged.columns df_lagged_mms.index = df_lagged.index multi_linear_model_mms = LinearRegressionModel() multi_linear_model_mms.learn( df_lagged_mms, y, start_date='2018', end_date='2019', lookback_period=10 ) multi_linear_model_mms.df_result.plot( title='JPM actual versus predicted percentage returns', style=['-', '--'], figsize=(12,8) ) plt.show()

print_regression_metrics( multi_linear_model_mms.df_result )

########## Don't do it

Learning with ensembles

Suppose you ask a complex question to thousands of random people, then aggregate their answers. In many cases you will find that this aggregated answer is better than an expert's answer. This is called the wisdom智慧 of the crowd. Similarly, if you aggregate the predictions of a group of predictors (such as classifiers or regressors), you will often get better predictions(more accurate and robust) than with the best individual predictor. A group of predictors is called an ensemble; thus, this technique is called Ensemble Learning, and an Ensemble Learning algorithm is called an Ensemble method.

we will focus on the most popular ensemble methods that use the majority voting principle. Majority voting simply means that we select the class label that has been predicted by the majority of classifiers, that is, received more than 50 percent of the votes. Strictly speaking, the term "majority vote" refers to binary class settings only. However, it is easy to generalize the majority voting principle to multiclass settings, which is called plurality voting. Here, we select the class label that received the most votes (the mode). The following diagram illustrates the concept of majority and plurality voting for an ensemble of 10 classifiers, where each unique symbol (triangle, square, and circle) represents a unique class label:

==

Using the training dataset, we start by training m different classifiers ( ). Depending on the technique, the ensemble can

). Depending on the technique, the ensemble can

- be built from different classification algorithms(This increases the chance that they will make very different types of errors, improving the ensemble's accuracy.), for example, decision trees, support vector machines, logistic regression classifiers, and so on.

Figure 7-1. Training diverse classifiers Figure

Figure 7-1. Training diverse classifiers Figure - Alternatively, we can also use the same base classification algorithm, fitting different subsets of the training dataset. One prominent example of this approach is the random forest algorithm, which combines different decision tree classifiers.

- The following figure illustrates the concept of a general ensemble approach using majority voting ( This majority-vote classifier is called a called hard voting classifier):

<==>

To predict a class label via simple majority or plurality voting, we can combine the predicted class labels of each individual classifier, , and select the class label,

, that received the most votes:

(In statistics, the mode is the most frequent event or result in a set. For example, mode{1, 2, 1, 1, 2, 4, 5, 4} = 1.)

For example, in a binary classification task where and

, we can write the majority vote prediction as follows:

To illustrate why ensemble methods can work better than individual classifiers alone, let's apply the simple concepts of combinatorics/ˌkɒmbɪnəˈtɒrɪks /组合学. For the following example, we will make the assumption that all n-base classifiers for a binary classification task have an equal error rate, 𝜀 . Furthermore, we will assume that the classifiers are independent and the error rates are not correlated

( if all classifiers trained on the same data that will make correlated errors. These classifiers are likely to make the same types of errors, so there will be many majority votes for the wrong class, reducing the ensemble's accuracy. https://blog.csdn.net/Linli522362242/article/details/104771157

).

Under those assumptions, we can simply express the error probability of an ensemble of base classifiers as a probability mass function of a binomial distribution:

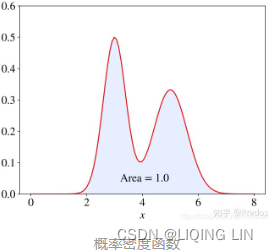

-

概率质量函数(Probability Mass Function, PMF,离散型数据)

是离散随机变量在各特定取值上的概率

是离散随机变量在各特定取值上的概率

概率质量函数PMF是对离散 随机变量定义的,本⾝代表该值的概率; - 概率密度函数(Probability Density Function, PDF, 连续型数据)

where n is the number of trials, p is the probability of success, and N is the number of successes.

where n is the number of trials, p is the probability of success, and N is the number of successes.

概率密度函数PDF是对连续 随机变量定义的,本⾝不是概率,只有对连续 随机变量的概率密度函数PDF在某区间内进⾏积分后才是概率 - The cumulative distribution function (cdf) or distribution function of a random variable X of the continuous type, defined in terms of the pdf of X, is given by

Here, again, F(x) accumulates (or, more simply, cumulates) all of the probability less

than or equal to x. From the fundamental theorem of calculus, we have, for x values

for which the derivative exists,

exists,  = f(x)=f(k) and we call f(x) the probability

= f(x)=f(k) and we call f(x) the probability

density function (pdf) of X.

Under those assumptions, we can simply express the error probability of an ensemble of base classifiers as a probability mass function of a binomial distribution:

Here,![]() is the binomial coefficient n choose k. In other words, we compute the probability that the prediction of the ensemble is wrong计算 集成预测错误的概率. Now, let's take a look at a more concrete example of 11 base classifiers (n = 11, k=6), where each classifier has an error rate of 0.25 (𝜀 = 0.25 ):

is the binomial coefficient n choose k. In other words, we compute the probability that the prediction of the ensemble is wrong计算 集成预测错误的概率. Now, let's take a look at a more concrete example of 11 base classifiers (n = 11, k=6), where each classifier has an error rate of 0.25 (𝜀 = 0.25 ):

:majority-vote (ensemble's prediction) requires at least 50% of predictors to be wrong

The binomial coefficient

The binomial coefficient refers to the number of ways we can choose subsets of k-unordered elements from a set of size n; thus, it is often called "n choose k." Since the order does not matter here, the binomial coefficient is also sometimes referred to as combination or combinatorial number, and in its unabbreviated form, it is written as follows:

Here, the symbol (!) stands for factorial—for example,

Here, the symbol (!) stands for factorial—for example,

3! = 3 ∙ 2 ∙ 1 = 6 .

As you can see, the error rate of the ensemble (0.034) is much lower than the error rate of each individual classifier (0.25) if all the assumptions are met. Note that, in this simplified illustration, a 50-50 split五五分 by an even number of classifiers, n, is treated as an error, whereas this is only true half of the time. To compare such an idealistic ensemble classifier to a base classifier over a range of different base error rates, let's implement the probability mass function in Python:

from scipy.special import comb

import math

def ensemble_error(n_classifier, error_rate):

# if n_classifier=11, then k_start=6

k_start = int( math.ceil(n_classifier/2.) )

probs = [ comb(n_classifier, k) *\

error_rate**k * (1-error_rate)**(n_classifier-k)

for k in range(k_start, n_classifier+1)

]

return sum(probs)

ensemble_error(n_classifier=11, error_rate=0.25)![]()

After we have implemented the ensemble_error function, we can compute the ensemble error rates for a range of different base errors from 0.0 to 1.0 to visualize the relationship between ensemble and base errors in a line graph:

import numpy as np

error_range = np.arange(0.0, 1.01, 0.01) # base errors from 0.0 to 1.0

ens_errors = [ ensemble_error(n_classifier=11, error_rate=error)

for error in error_range

]

import matplotlib.pyplot as plt

plt.plot(error_range, ens_errors, label="Ensemble error", lw=2)

plt.plot(error_range, error_range, ls='--', label="Base error", lw=2)

plt.xlabel('Base error')

plt.ylabel('Base/Ensemble error')

plt.legend(loc='upper left')

plt.grid(alpha=0.5)

plt.show() As you can see in the resulting plot, the error probability of an ensemble is always better than the error of an individual base classifier, as long as the base classifiers perform better than random guessing (𝜀 < 0.5 ). Note that the y axis depicts the base error (dotted line) as well as the ensemble error (continuous line):

Implementing a simple majority vote classifier

The algorithm that we are going to implement in this section will allow us to combine different classification algorithms associated with individual weights for confidence. Our goal is to build a stronger meta-classifier that balances out the individual classifiers' weaknesses on a particular dataset. In more precise mathematical terms, we can write the weighted majority vote as follows:

- Here,

is the predicted class label of the ensemble

is a weight associated with a base classifier,

is a weight associated with a base classifier, ;

is the set of unique class labels;

-

(Greek chi) is the characteristic function or indicator function, which returns 1 if the predicted class of the jth classifier matches the class label i (

and

). For equal weights, we can simplify this equation and write it as follows:

). For equal weights, we can simplify this equation and write it as follows:

To better understand the concept of weighting, we will now take a look at a more concrete example. Let's assume that we have an ensemble of three(m=3) base classifiers,![]() , and we want to predict the class label,

, and we want to predict the class label,![]() , of a given example, x. Two out of three base classifiers predict the class label

, of a given example, x. Two out of three base classifiers predict the class label , and one,

, predicts that the example belongs to class

.

- If we weight the predictions of each base classifier equally, the majority vote predicts that the example belongs to class

:

- Now, let's assign a weight of 0.6 to

, and

, and

let's weight and

and  by a coefficient of 0.2:

by a coefficient of 0.2: predicts that the example belongs to class 1

predicts that the example belongs to class 1 - More simply, since 3 × 0.2 = 0.6, we can say that the prediction made by

has three times more weight than the predictions by

has three times more weight than the predictions by  or

or  , which we can write as follows:

, which we can write as follows:

To translate the concept of the weighted majority vote into Python code, we can use NumPy's convenient argmax and bincount![]() functions:

functions:

# np.bincount( [0,0,1], weights=[0.2,0.2,0.6] ) ==> array([0.4, 0.6])

np.argmax(np.bincount([0,0,1], weights = [0.2,0.2,0.6]))

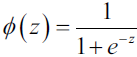

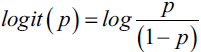

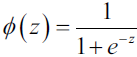

As you will remember from the discussion on logistic regression in Cp3, A Tour of Machine Learning Classifiers Using scikit-learn

https://blog.csdn.net/Linli522362242/article/details/96480059, certain classifiers in scikit-learn can also return the probability of a predicted class label via the predict_proba method. Using the predicted class probabilities instead of the class labels for majority voting can be useful if the classifiers in our ensemble are well calibrated['kælɪbreɪtɪd]校准. The modified version of the majority vote for predicting class labels from probabilities can be written as follows:

Here, is the predicted probability of the jth classifier for class label i.

To continue with our previous example, let's assume that we have a binary classification problem with class labels 𝑖 ∈ {0, 1} or![]() and an ensemble of three classifiers

and an ensemble of three classifiers ![]() .and Let's assume that the classifiers