In ts10_Univariate TS模型_circle mark pAcf_ETS_unpack product_darts_bokeh band interval_ljungbox_AIC_BIC_LIQING LIN的博客-CSDN博客ts10_2Univariate TS模型_pAcf_bokeh_AIC_BIC_combine seasonal_decompose twinx ylabel_bold partial title_LIQING LIN的博客-CSDN博客, Building Univariate Time Series Models Using Statistical Methods, you were introduced to exponential smoothing, non-seasonal ARIMA, and Seasonal ARIMA for building forecasting models. These are popular techniques and are referred to as classical or statistical forecasting methods. They are fast, simple to implement, and easy to interforecast.valuespret.

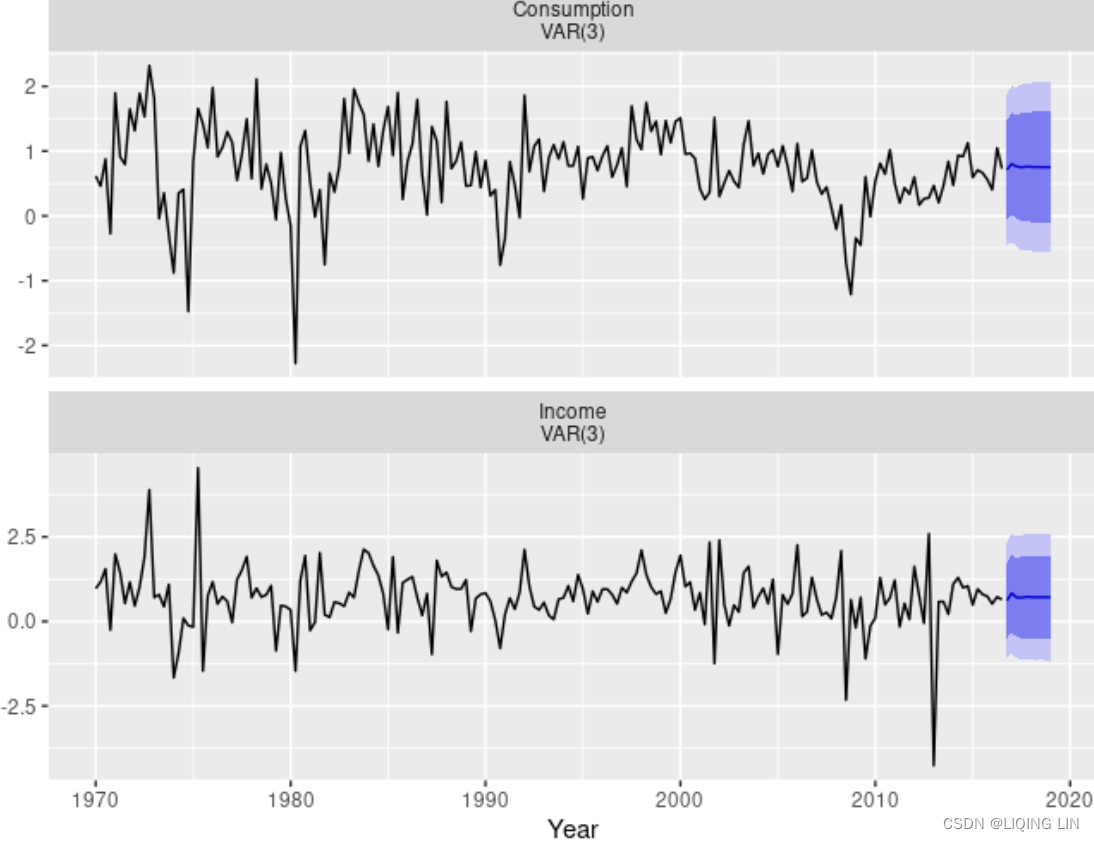

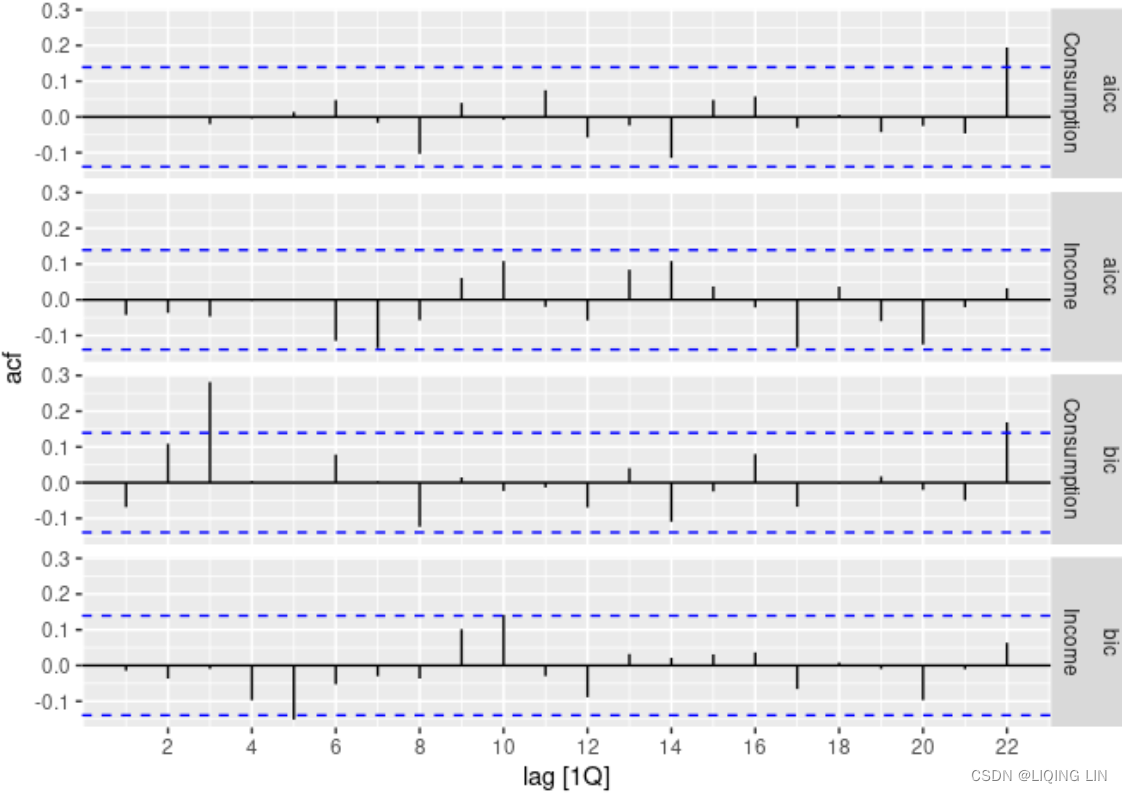

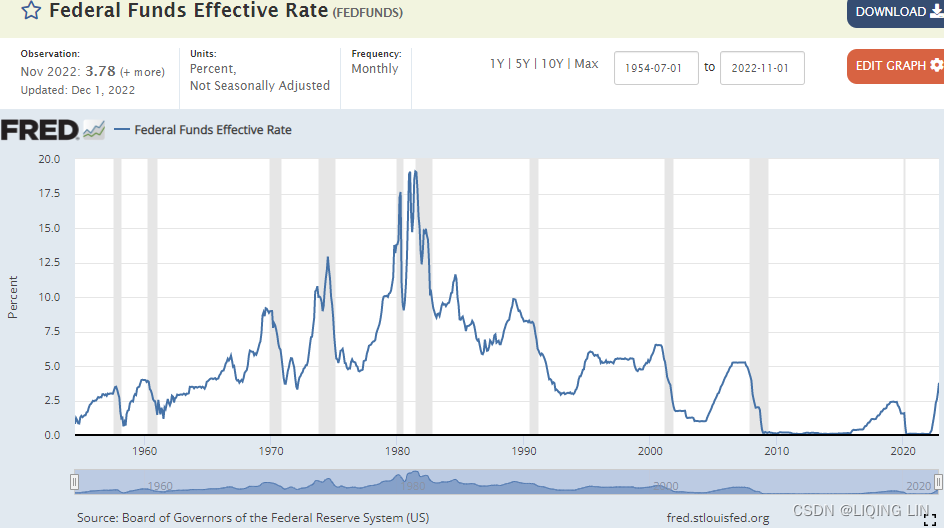

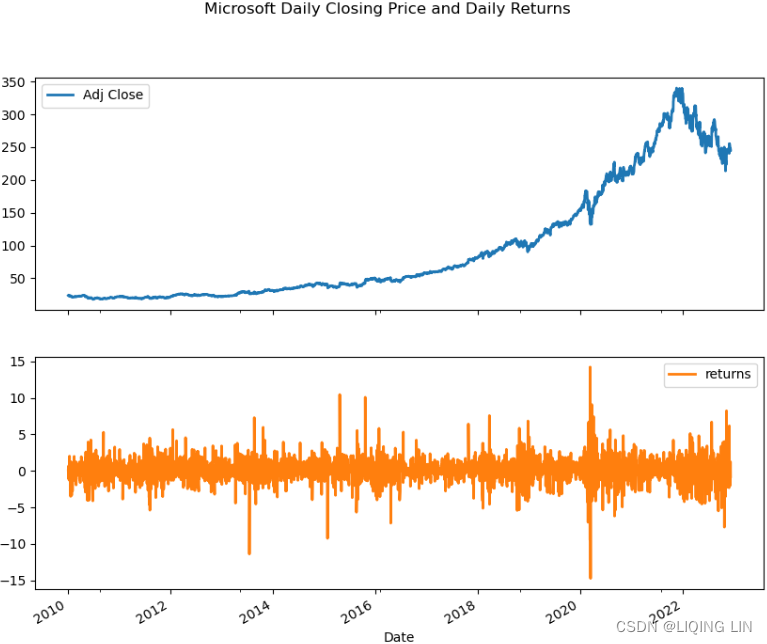

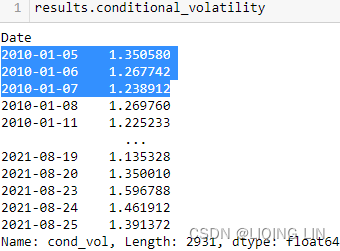

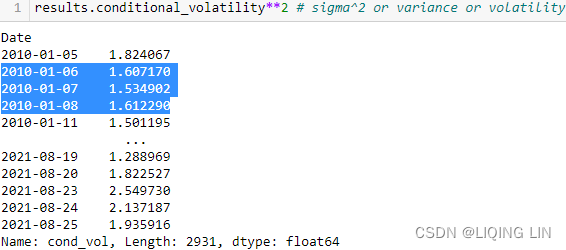

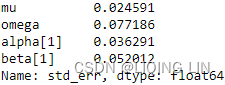

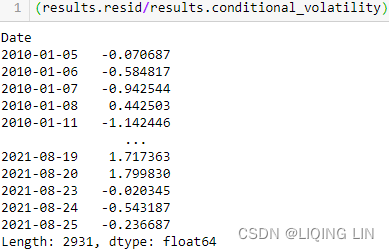

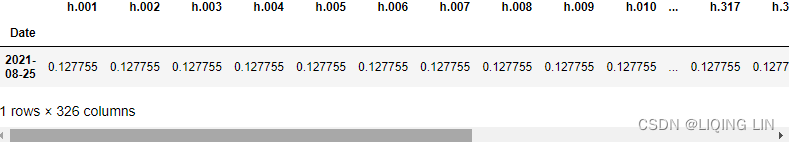

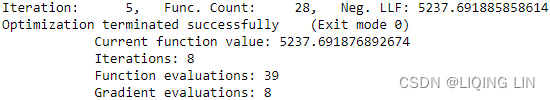

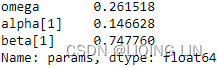

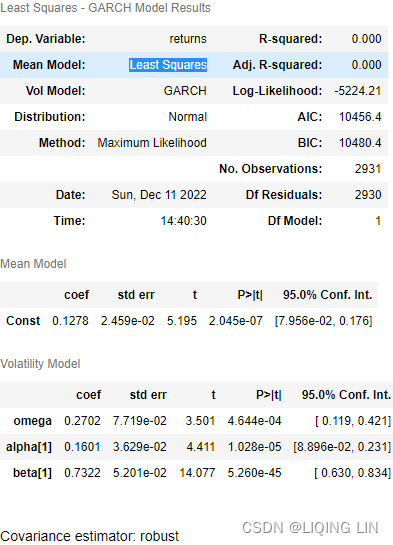

In this chapter, you will dive head-first and learn about additional statistical methods that build on the foundation you gained from the previous chapter. This chapter will introduce a few libraries that can automate time series forecasting and model optimization—for example, auto_arima and Facebook's Prophet library. Additionally, you will explore statsmodels' Vector AutoRegressive (VAR) class for working with multivariate time series and the arch library, which supports GARCH for modeling volatility in financial data.

The main goal of this chapter is to introduce you to the Prophet library (which is also an algorithm) and the concept of multivariate time series modeling with VAR models.

In this chapter, we will cover the following recipes:

- • Forecasting time series data using auto_arima

- • Forecasting time series data using Facebook Prophet

- • Forecasting multivariate time series data using VAR

- • Evaluating Vector AutoRegressive (VAR) models

- • Forecasting volatility in financial time series data with GARCH

Forecasting time series data using auto_arima

For this recipe, you must install pmdarima , a Python library that includes auto_arima for automating ARIMA hyperparameter optimization and model fitting. The auto_arima implementation in Python is inspired by the popular auto.arima from the forecast package in R.

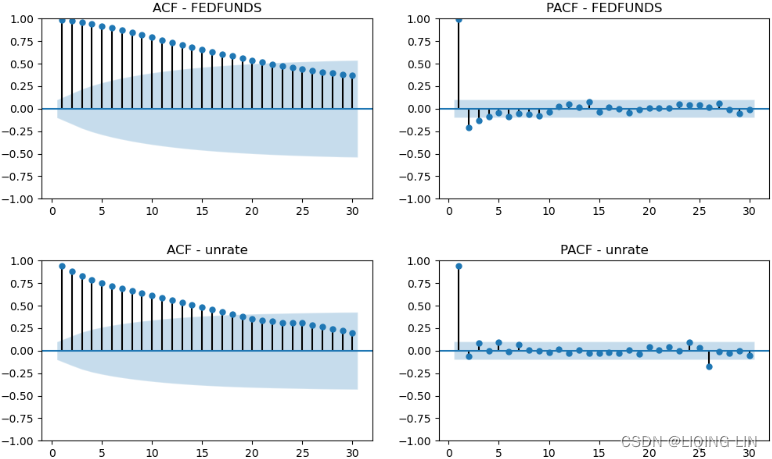

In Chapter 10, Building Univariate Time Series Models Using Statistical Methods, you learned that finding the proper orders for the AR and MA components is not simple. Although you explored useful techniques for estimating the orders, such as

- interpreting the Partial AutoCorrelation Function (PACF) and AutoCorrelation Function (ACF) plots,

- you may still need to train different models to find the optimal configurations (referred to as hyperparameter tuning).

This can be a time-consuming process and is where auto_arima shines.

Instead of the naive approach of training multiple models through grid search to cover every possible combination of parameter values, auto_arima automates the process for finding the optimal parameters. The auto_arima function uses a stepwise逐步 algorithm that is faster and more efficient than a full grid search or random search:

- • When stepwise=True, auto_arima performs a stepwise search (which is the default).

- • With stepwise=False, it performs a brute-force grid search (full search).

- • With random=True, it performs a random search.

The stepwise algorithm was proposed in 2008 by Rob Hyndman and Yeasmin Khandakar in the paper Automatic Time Series Forecasting: The forecast Package for R, which was published in the Journal of Statistical Sofware 27, no.3 (2008) ( https://www.jstatsoft.org/index.php/jss/article/view/v027i03/255 ). In a nutshell, stepwise is an optimization technique that

- utilizes grid search more efficiently.

- This is accomplished using unit root tests and minimizing information criteria (for example,

- Akaike Information Criterion (AIC) and

- Maximum Likelihood Estimation (MLE).

Additionally, auto_arima can handle Seasonal and non-seasonal ARIMA models. If seasonal ARIMA is desired, then you will need to set seasonal=True for auto_arima to optimize over the (P, D, Q) values.

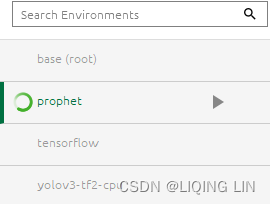

create a new virtual Python environment for Prophet

It is recommended that you create a new virtual Python environment for Prophet to avoid issues with your current environment.

conda create -n prophet python=3.8 -y

conda activate prophet![]()

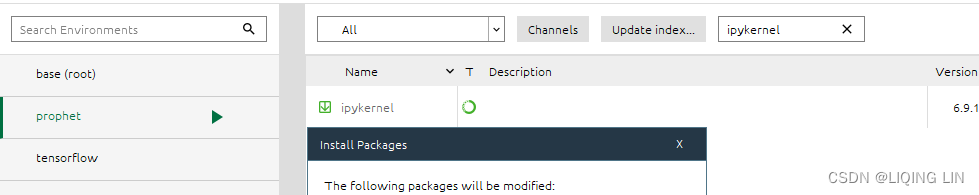

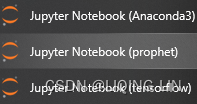

To make the new prophet environment visible within Jupyter, run the following code:

python -m ipykernel install --user --name prophet --display-name "fbProphet"I used

python -m ipykernel install --name prophet --display-name "fbProphet"![]()

To install Prophet using pip

To install Prophet using pip , you will need to install pystan first. Make sure you install the supported PyStan version. You can check the documentation at

https: //facebook.github.io/prophet/docs/installation.html#python for the latest instructions:

pip install pystan==2.19.1.1

Once installed, run the following command:

pip install prophet

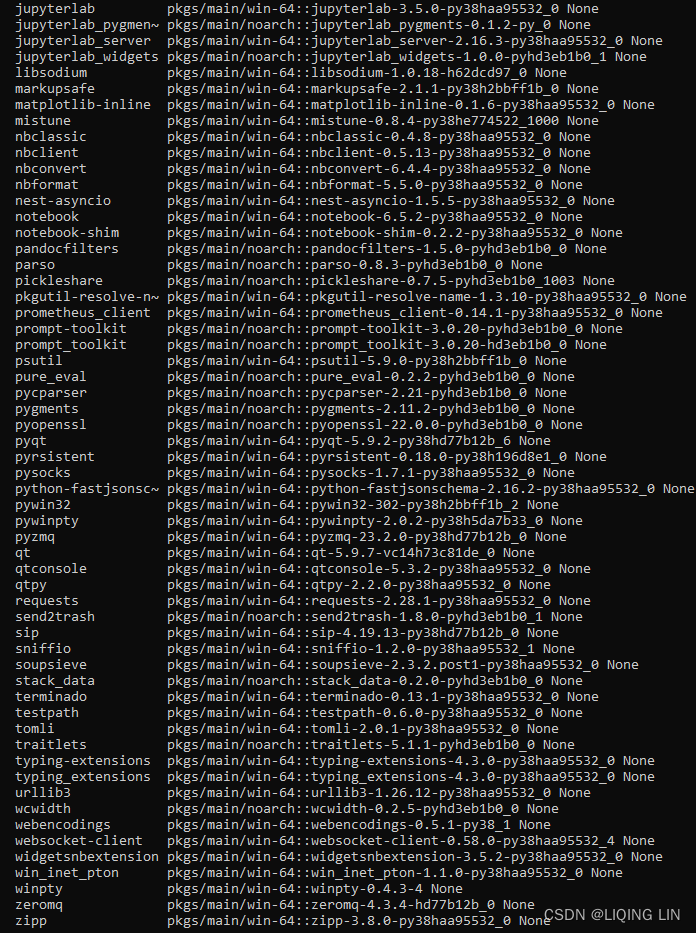

install Prophet use Conda

To install Prophet use Conda, which takes care of all the necessary dependencies, use the following command:

conda install -c conda-forge prophet you have to wait a few minutes

Jupyter notebook(prophet)

conda install jupyter notebook

==>

==>

You will need to install pmdarima before you can proceed with this recipe.

install pmdarima

To install it using pip, use the following command:

pip install pmdarima

OR To install it using conda , use the following command:

conda install -c conda-forge pmdarima

install hyplot

pip install hvplot

Note : restart kernel

install bokeh

pip install bokeh

install plotly

pip install plotly

install pandas_datareader

pip install pandas_datareader

Chapter 12 Advanced forecasting methods

12.1 Complex seasonality

So far, we have mostly considered relatively simple seasonal patterns such as quarterly and monthly data. However, higher frequency time series often exhibit more complicated seasonal patterns. For example,

- daily data may have a

- weekly pattern as well as an

- annual pattern.

- Hourly data usually has three types of seasonality:

- a daily pattern,

- a weekly pattern, and

- an annual pattern.

- Even weekly data can be challenging to forecast as there are not a whole number of weeks in a year, so the annual pattern has a seasonal period of 365.25/7≈52.179 on average.

Most of the methods we have considered so far are unable to deal with these seasonal complexities.

We don’t necessarily want to include all of the possible seasonal periods in our models — just the ones that are likely to be present in the data. For example, if we have only 180 days of data, we may ignore the annual seasonality. If the data are measurements of a natural phenomenon (e.g., temperature), we can probably safely ignore any weekly seasonality.

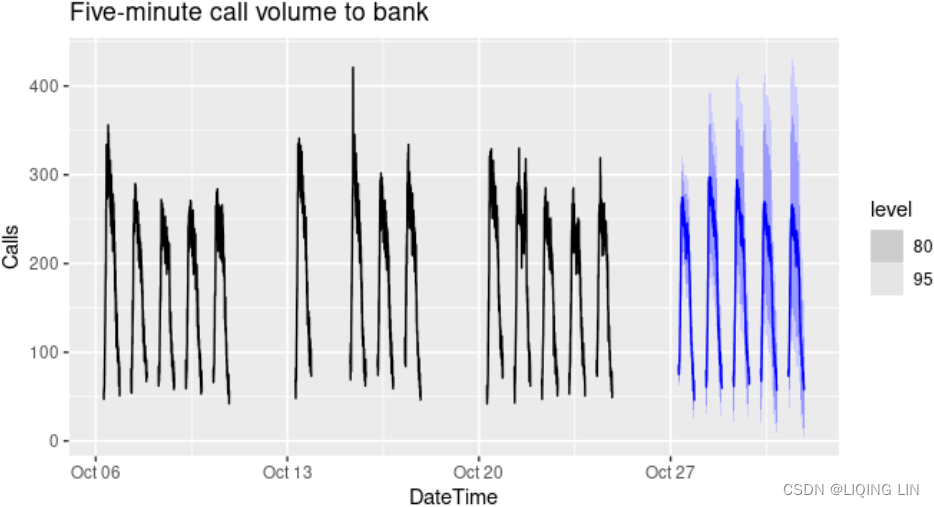

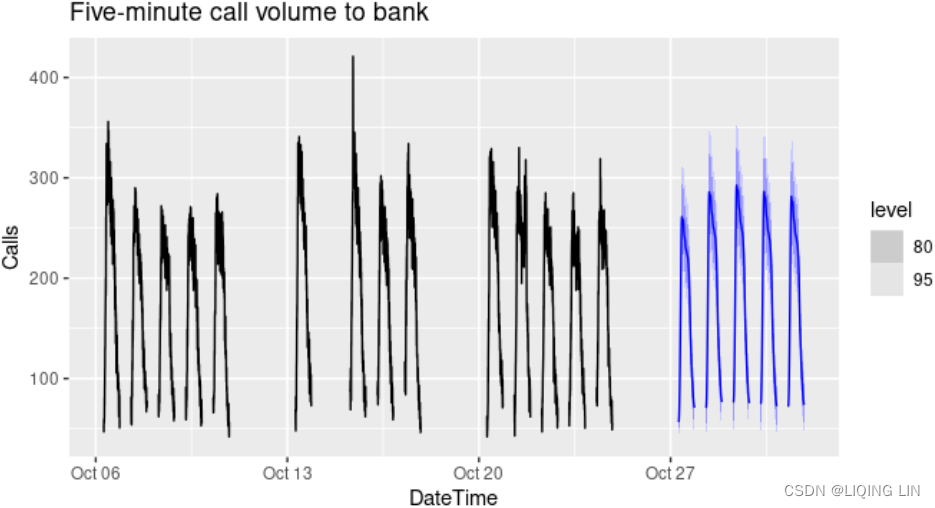

Figure 12.1: Five-minute call volume handled on weekdays between 7:00am and 9:05pm in a large North American commercial bank. Top panel: data from 3 March – 24 October 2003(weak weekly seasonal pattern). Bottom panel: first four weeks of data(strong daily seasonal pattern

.Call volumes on Mondays tend to be higher than the rest of the week.).

Figure 12.1 shows the number of calls to a North American commercial bank per 5-minute interval between 7:00am and 9:05pm each weekday over a 33 week period. The lower panel shows the first four weeks of the same time series. There is a strong daily seasonal pattern with period 169 (there are 169 5-minute intervals per day or 169(21.05-7)*60/5=168.6)(Call volumes on Mondays tend to be higher than the rest of the week.), and a weak weekly seasonal pattern with period 169×5=845. If a longer series of data were available, we may also have observed an annual seasonal pattern.

Apart from the multiple seasonal periods, this series has the additional complexity of missing values between the working periods.

STL with multiple seasonal periods

STL is an acronym/ ˈækrənɪm /首字母缩略词 for “Seasonal and Trend decomposition using Loess”, while Loess is a method for estimating nonlinear relationships.

The STL() function is designed to deal with multiple seasonality. It will return multiple seasonal components, as well as a trend and remainder component. In this case, we need to re-index the tsibble to avoid the missing values, and then explicitly give the seasonal periods.  Figure 12.2: STL decomposition with multiple seasonality for the call volume data.

Figure 12.2: STL decomposition with multiple seasonality for the call volume data.

There are two seasonal patterns shown, one for the time of day (the third panel: season_169), and one for the time of week (the fourth panel: season_845). To properly interpret this graph, it is important to notice the vertical scales. In this case, the trend and the weekly seasonality have wider bars (and therefore relatively narrower ranges) compared to the other components, because there is little trend seen in the data(the grey bar in trend panel is now much longer than either of the ones on the data or seasonal panel, indicating the variation attributed to the trend is much smaller than the seasonal component表明归因于趋势的变化比季节性成分小得多 and consequently only a small part of the variation in the data series.), and the weekly seasonality is weak.

The decomposition can also be used in forecasting, with

- each of the seasonal components forecast using a seasonal naïve method, and

- the seasonally adjusted data forecast using ETS.

The code is slightly more complicated than usual because we have to add back the time stamps that were lost when we re-indexed the tsibble to handle the periods of missing observations. The square root transformation used in the STL decomposition has ensured the forecasts remain positive.

Figure 12.3:

- Forecasts of the call volume data using an STL decomposition with

- the seasonal components forecast using a seasonal naïve method,

- and the seasonally adjusted data forecast using ETS.

# Forecasts from STL+ETS decomposition

my_dcmp_spec <- decomposition_model(

STL(sqrt(Calls) ~ season(period = 169) +

season(period = 5*169),

robust = TRUE),

ETS(season_adjust ~ season("N"))

)

fc <- calls %>%

model(my_dcmp_spec) %>%

forecast(h = 5 * 169)

# Add correct time stamps to fable

fc_with_times <- bank_calls %>%

new_data(n = 7 * 24 * 60 / 5) %>%

mutate(time = format(DateTime, format = "%H:%M:%S")) %>%

filter(

time %in% format(bank_calls$DateTime, format = "%H:%M:%S"),

wday(DateTime, week_start = 1) <= 5

) %>%

mutate(t = row_number() + max(calls$t)) %>%

left_join(fc, by = "t") %>%

as_fable(response = "Calls", distribution = Calls)

# Plot results with last 3 weeks of data

fc_with_times %>%

fill_gaps() %>%

autoplot(bank_calls %>% tail(14 * 169) %>% fill_gaps()) +

labs(y = "Calls",

title = "Five-minute call volume to bank")

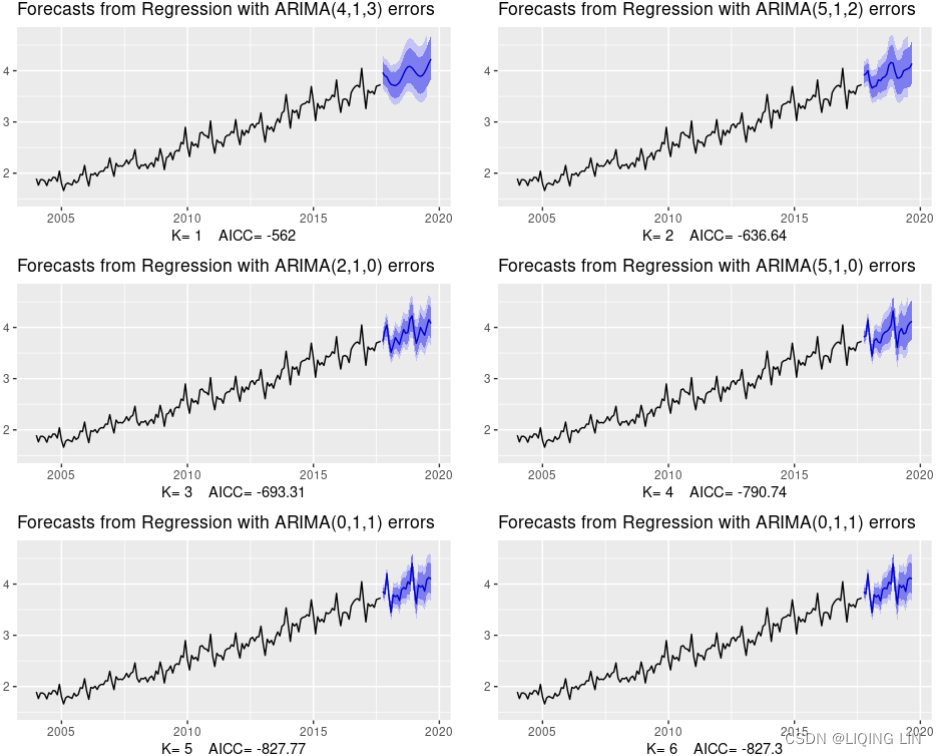

10.5 Dynamic harmonic regression

When there are long seasonal periods, a dynamic regression with Fourier terms is often better than other models we have considered in this book.

For example,

- daily data can have annual seasonality of length 365,

- weekly data has seasonal period of approximately 52,

- while half-hourly data can have several seasonal periods, the shortest of which is the daily pattern of period 48.

Seasonal versions of ARIMA and ETS models are designed for shorter periods such as 12 for monthly data or 4 for quarterly data. The ETS() model restricts seasonality to be a maximum period of 24 to allow hourly data but not data with a larger seasonal period. The problem is that there are m−1 parameters to be estimated for the initial seasonal states where m is the seasonal period. So for large m, the estimation becomes almost impossible.

The ARIMA() function will allow a seasonal period up to m=350, but in practice will usually run out of memory whenever the seasonal period is more than about 200. In any case, seasonal differencing of high order does not make a lot of sense — for daily data it involves comparing what happened today with what happened exactly a year ago and there is no constraint that the seasonal pattern is smooth.

So for such time series, we prefer a harmonic regression approach where the seasonal pattern is modelled using Fourier terms with short-term time series dynamics handled by an ARMA error.

The advantages of this approach are:

- it allows any length seasonality;

- for data with more than one seasonal period, Fourier terms of different frequencies can be included;

- the smoothness of the seasonal pattern can be controlled by K, the number of Fourier sin and cos pairs – the seasonal pattern is smoother for smaller values of K;

- the short-term dynamics are easily handled with a simple ARMA error.

The only real disadvantage (compared to a seasonal ARIMA model) is that the seasonality is assumed to be fixed — the seasonal pattern is not allowed to change over time(seasonal effect is additive:The seasonal magnitudes and variations over time seem to be steady). But in practice, seasonality is usually remarkably constant so this is not a big disadvantage except for long time series.

Example: Australian eating out expenditure

In this example we demonstrate combining Fourier terms for capturing seasonality with ARIMA errors capturing other dynamics in the data. For simplicity, we will use an example with monthly data. The same modelling approach using weekly data is discussed in Section13.1.

We use the total monthly expenditure on cafes, restaurants and takeaway food services in Australia ($billion) from 2004 up to 2016 and forecast 24 months ahead. We vary K, the number of Fourier sin and cos pairs, from K=1 to K=6 (which is equivalent to including seasonal dummies). Figure 10.11 shows the seasonal pattern projected forward as K increases. Notice that as K increases the Fourier terms capture and project a more “wiggly” seasonal pattern and simpler ARIMA models are required to capture other dynamics. The AICc value is minimised for K=5, with a significant jump going from K=4 to K=5, hence the forecasts generated from this model would be the ones used.

cafe04 <- window(auscafe, start=2004)

plots <- list()

for (i in seq(6)) {

fit <- auto.arima(cafe04, xreg = fourier(cafe04, K = i),

seasonal = FALSE, lambda = 0)

plots[[i]] <- autoplot(forecast(fit,

xreg=fourier(cafe04, K=i, h=24))) +

xlab(paste("K=",i," AICC=",round(fit[["aicc"]],2))) +

ylab("") + ylim(1.5,4.7)

}

gridExtra::grid.arrange(

plots[[1]],plots[[2]],plots[[3]],

plots[[4]],plots[[5]],plots[[6]], nrow=3) Figure 9.11: Using Fourier terms and ARIMA errors for forecasting monthly expenditure on eating out in Australia.

Figure 9.11: Using Fourier terms and ARIMA errors for forecasting monthly expenditure on eating out in Australia.

Dynamic harmonic regression with multiple seasonal periods

harmonic/ hɑːrˈmɑːnɪk /(有关)调和级数(或数列)的

With multiple seasonalities, we can use Fourier terms as we did in earlier chapters (see Sections 7.4 and 10.5). Because there are multiple seasonalities, we need to add Fourier terms for each seasonal period. In this case, the seasonal periods are 169 and 845, so the Fourier terms are of the form![]() for k=1,2,…. As usual, the

for k=1,2,…. As usual, the fourier() function can generate these for you.

We will fit a dynamic harmonic regression model with an ARIMA error structure. The total number of Fourier terms for each seasonal period could be selected to minimise the AICc. However, for high seasonal periods, this tends to over-estimate the number of terms required, so we will use a more subjective choice with 10 terms for the daily seasonality and 5 for the weekly seasonality. Again, we will use a square root transformation to ensure the forecasts and prediction intervals remain positive. We set D=d=0 in order to handle the non-stationarity through the regression terms, and P=Q=0 in order to handle the seasonality through the regression terms.

This is a large model, containing 33 parameters:

- 4(D=d=0 and P=Q=0) ARMA coefficients,

- 20(kx2=10x2) Fourier coefficients for period 169 (there are 169 5-minute intervals per day or 169

(21.05-7)*60/5=168.6), and

- 8(kx2=5x2=10 - 1x2 when k=5) Fourier coefficients for period 845=5*169. Not all of the Fourier terms for period 845 are used because there is some overlap with the terms of period 169 (since 845=5 weekdays ×169 for a week).

: is an ARIMA process.

fit <- calls %>%

model(

dhr = ARIMA(sqrt(Calls) ~ PDQ(0, 0, 0) + pdq(d = 0) +

fourier(period = 169, K = 10) +

fourier(period = 5*169, K = 5)))

fc <- fit %>% forecast(h = 5 * 169)

# Add correct time stamps to fable

fc_with_times <- bank_calls %>%

new_data(n = 7 * 24 * 60 / 5) %>%

mutate(time = format(DateTime, format = "%H:%M:%S")) %>%

filter(

time %in% format(bank_calls$DateTime, format = "%H:%M:%S"),

wday(DateTime, week_start = 1) <= 5

) %>%

mutate(t = row_number() + max(calls$t)) %>%

left_join(fc, by = "t") %>%

as_fable(response = "Calls", distribution = Calls)

# Plot results with last 3 weeks of data

fc_with_times %>%

fill_gaps() %>%

autoplot(bank_calls %>% tail(14 * 169) %>% fill_gaps()) +

labs(y = "Calls",

title = "Five-minute call volume to bank") Figure 12.4: Forecasts from a dynamic harmonic regression applied to the call volume data.

We will fit a dynamic harmonic regression model with an ARMA error structure. The total number of Fourier terms for each seasonal period have been chosen to minimise the AICc. We will use a log transformation (lambda=0) to ensure the forecasts and prediction intervals remain positive.

This is a large model, containing 40 parameters:

- 4(D=d=0 and P=Q=0) ARMA coefficients,

- 20(kx2=10x2) Fourier coefficients for period 169 (there are 169 5-minute intervals per day or 169

(21.05-7)*60/5=168.6), and

- 16(kx2=10x2=20 - 2x2 when k=5 and k=10) Fourier coefficients for period 845=5*169. Not all of the Fourier terms for period 845 are used because there is some overlap with the terms of period 169(when k=1, k=2) (since 845=5 weekdays ×169 for a week).

: is an ARIMA process.

fit <- auto.arima(calls, seasonal=FALSE, lambda=0,

xreg=fourier(calls, K=c(10,10)))

fit %>%

forecast(xreg=fourier(calls, K=c(10,10), h=2*169)) %>%

autoplot(include=5*169) +

ylab("Call volume") + xlab("Weeks") Figure 11.4: Forecasts from a dynamic harmonic regression applied to the call volume data.

Figure 11.4: Forecasts from a dynamic harmonic regression applied to the call volume data.

You will use the milk_production.csv data used in 'https://raw.githubusercontent.com/PacktPublishing/Time-Series-Analysis-with-Python-Cookbook/main/datasets/Ch10/milk_production.csv', Building Univariate Time Series Models Using Statistical Methods. Recall that the data contains both trend and seasonality, so you will be training a SARIMA model.

The pmdarima library wraps over the statsmodels library, so you will see familiar methods and attributes as you proceed.

1. Start by importing the necessary libraries and load the Monthly Milk Production dataset from the milk_production.csv fle:

import pmdarima as pm

import pandas as pd

milk_file='https://raw.githubusercontent.com/PacktPublishing/Time-Series-Analysis-with-Python-Cookbook/main/datasets/Ch10/milk_production.csv'

milk = pd.read_csv( milk_file,

index_col='month',

parse_dates=True,

)

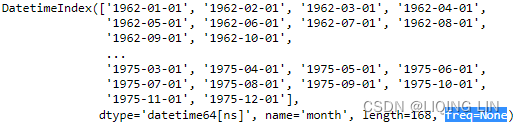

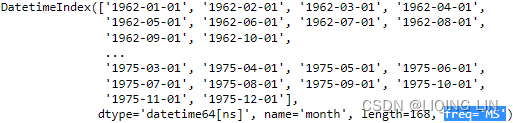

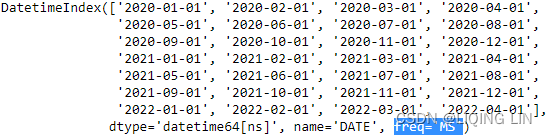

milk.index

Change the DataFrame's frequency to month start ( MS ) to refect how the data is being stored:

milk=milk.asfreq('MS') # OR milk.index.freq = 'MS'

milk.index

milk

2. Split the data into train and test sets using the train_test_split function from pmdarima . Tis is a wrapper to scikit-learn's train_test_split function but without shuffling. The following code shows how to use both; they will produce the same results:

from sklearn.model_selection import train_test_split

train, test = train_test_split(milk, test_size=0.10, shuffle=False)

print( f'Train: {train.shape}' )

print( f'Test: {test.shape}' )![]()

# same results using pmdarima : not shuffle

# https://alkaline-ml.com/pmdarima/modules/generated/pmdarima.model_selection.train_test_split.html

train, test = pm.model_selection.train_test_split( milk, test_size=0.10 )

print( f'Train: {train.shape}' )

print( f'Test: {test.shape}' ) ![]() There are 151 months in the training set and 17 months in the test set. You will use the test set to evaluate the model using out-of-sample data.

There are 151 months in the training set and 17 months in the test set. You will use the test set to evaluate the model using out-of-sample data.

pip install hvplot

Note : restart kernel

pip install bokeh

pip install plotly

from bokeh.plotting import figure, show

from bokeh.models import ColumnDataSource, HoverTool

#from bokeh.layouts import column

import numpy as np

import hvplot.pandas

hvplot.extension("bokeh")

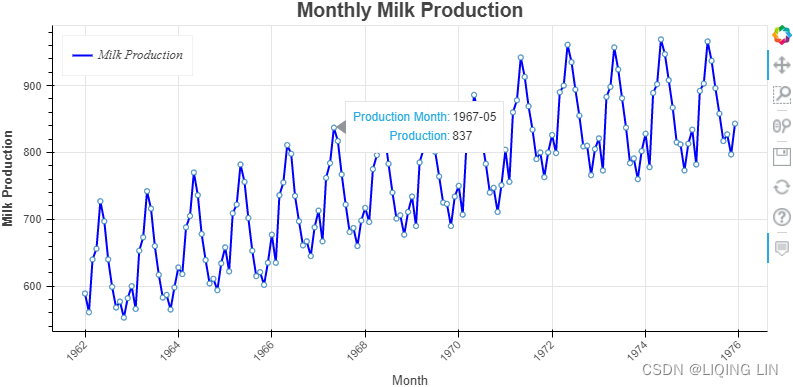

source = ColumnDataSource( data={'productionMonth':milk.index,

'production':milk['production'].values,

})

# def datetime(x):

# return np.array(x, dtype=datetime64)

p2 = figure( width=800, height=400,

title='Monthly Milk Production',

x_axis_type='datetime',

x_axis_label='Month', y_axis_label='Milk Production'

)

p2.xaxis.axis_label_text_font_style='normal'

p2.yaxis.axis_label_text_font_style='bold'

p2.xaxis.major_label_orientation=np.pi/4 # rotation

p2.title.align='center'

p2.title.text_font_size = '1.5em'

p2.line( x='productionMonth', y='production', source=source,

line_width=2, color='blue',

legend_label='Milk Production'

)

# https://docs.bokeh.org/en/latest/docs/first_steps/first_steps_3.html

p2.legend.location = "top_left"

p2.legend.label_text_font = "times"

p2.legend.label_text_font_style = "italic"

p2.circle(x='productionMonth', y='production', source=source,

fill_color='white', size=5

)

p2.add_tools( HoverTool( tooltips=[('Production Month', '@productionMonth{%Y-%m}'),

('Production', '@production{0}')

],

formatters={'@productionMonth':'datetime',

'@production':'numeral'

},

mode='vline'

)

)

show(p2)From Figure 10.1, we determined that both seasonality and trend exist(a positive (upward) trend and a repeating seasonality (every summer)). We could also see that the seasonal effect is additive(The seasonal magnitudes and variations over time seem to be steady).

import statsmodels.tsa.api as smt

from statsmodels.tsa.stattools import acf, pacf

from matplotlib.collections import PolyCollection

import numpy as np

import matplotlib.pyplot as plt

fig, axes = plt.subplots( 2,1, figsize=(14,13) )

lags=41 # including lag at 0: autocorrelation of the first observation on itself

acf_x=acf( milk, nlags=lags-1,alpha=0.05,

fft=False, qstat=False,

bartlett_confint=True,

adjusted=False,

missing='none',

)

acf_x, confint =acf_x[:2]

smt.graphics.plot_acf( milk, zero=False, ax=axes[0],

auto_ylims=True, lags=lags-1 )

for lag in [1,12, 24]:

axes[0].scatter( lag, acf_x[lag] , s=500 , facecolors='none', edgecolors='red' )

axes[0].text( lag-1.3, acf_x[lag]+0.1, 'Lag '+str(lag), color='red', fontsize='x-large')

pacf_x=pacf( milk, nlags=lags-1,alpha=0.05,

)

pacf_x, pconfint =pacf_x[:2]

smt.graphics.plot_pacf( milk, zero=False, ax=axes[1],

auto_ylims=False, lags=lags-1 )

for lag in [1,13,25,37]:

axes[1].scatter( lag, pacf_x[lag] , s=500 , facecolors='none', edgecolors='red' )

axes[1].text( lag-1.3, pacf_x[lag]-0.15, 'Lag '+str(lag), color='red', fontsize='large')

plt.show()

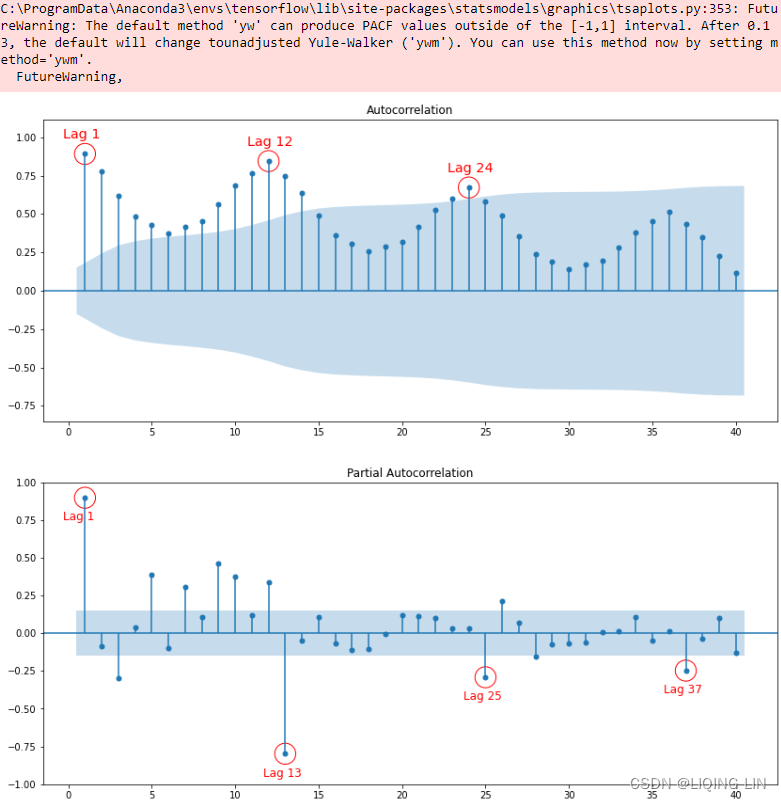

Figure 10.18 – ACF plot showing significant spikes at lags 1, 12, and 24, followed by a cut-off (no other significant lags afterward) + exponential decay in the following PACF plot==> MA

- [12,24] ==> S=12

- there is a significant spike at lag 1, followed by a cut-off, which represents the non-seasonal order for the MA process as q=1.

- The spike at lags 12 and 24 represents the seasonal order for the MA process as Q=1=12/S or Q=1=24/S.

The PACF plot :

- an exponential decay(which can be oscillating) at lags 13, 25, and 36 indicates an Seasonal MA model. So, the SARIMA model would be ARIMA (0, 0, 1) (0, 0, 1, 12) .

- SARIMA(p, d, q) (P, D, Q, S)

3. You will use the auto_arima function from pmdarima to optimize and find the best configuration for your SARIMA model. Prior knowledge about the milk data is key to obtaining the best results from auto_arima. You know the data has a seasonal pattern, so you will need to provide values for the two parameters: seasonal=True and m=12 (the number of periods in a season). If these values are not set, the model will only search for the non-seasonal orders (p, d, q).

###################ts9_annot_arrow_hvplot PyViz interacti_bokeh_STL_seasonal_decomp_HodrickP_KPSS_F-stati_Box-Cox_Ljung_LIQING LIN的博客-CSDN博客

You will explore two statistical tests, the Augmented Dickey-Fuller (ADF) test and the Kwiatkowski-Phillips-Schmidt-Shin (KPSS) test, using the statsmodels library. Both ADF and KPSS test for unit roots in a univariate time series process. Note that unit roots are just one cause for a time series to be non-stationary, but generally, the presence of unit roots indicates non-stationarity.

Both ADF and KPSS are based on linear regression and are a type of statistical hypothesis test. For example,

- the null hypothesis for ADF states that there is a unit root in the time series, and thus, it is non-stationary.

- On the other hand, KPSS has the opposite null hypothesis, which assumes the time series is stationary.

###################

The test parameter specifies the type of unit root test to use to detect stationarity to determine the differencing order ( d ). The default test is kpss . You will change the parameter to use adf instead (to be consistent with what you did in Chapter 10, Building Univariate Time Series Models Using Statistical Methods.) Similarly, seasonal_test is used to determine the order ( D ) for seasonal differencing. The default seasonal_test is OCSB (the Osborn-Chui-Smith-Birchenhall test), which you will keep as-is:

auto_model = pm.auto_arima( train,

seasonal=True, m=12,

test='adf',

stepwise=True # default: auto_arima performs a stepwise search

)

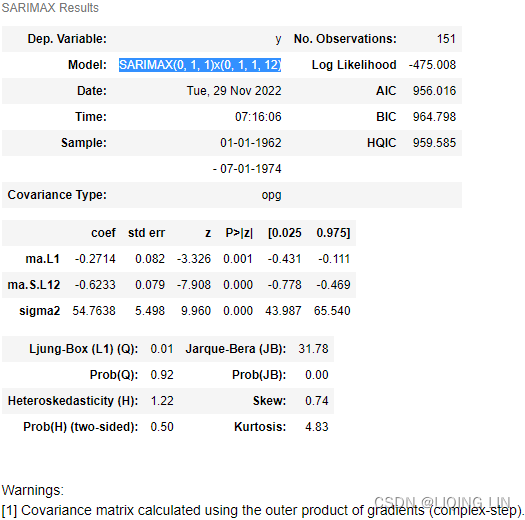

auto_model.summary() Figure 11.1 – Summary of the best SARIMA model selected using auto_arima.

Figure 11.1 – Summary of the best SARIMA model selected using auto_arima.

Interestingly, the best model is a SARIMA(0,1,1)(0,1,1,12), which is the same model you obtained in the Forecasting univariate time series data with seasonal ARIMA recipe in the previous chapter https://blog.csdn.net/Linli522362242/article/details/128067340. You estimated the non-seasonal order (p, q) and seasonal orders (P, Q) using the ACF and PACF plots.

4. If you want to observe the score of the trained model at each iteration, you can use trace=True :

trace : bool or int, optional (default=False)

Whether to print status on the fits. A value of False will print no debugging information. A value of True will print some. Integer values exceeding 1 will print increasing amounts of debug information at each fit

auto_model = pm.auto_arima( train,

seasonal=True, m=12,

test='adf',

stepwise=True,

trace=True

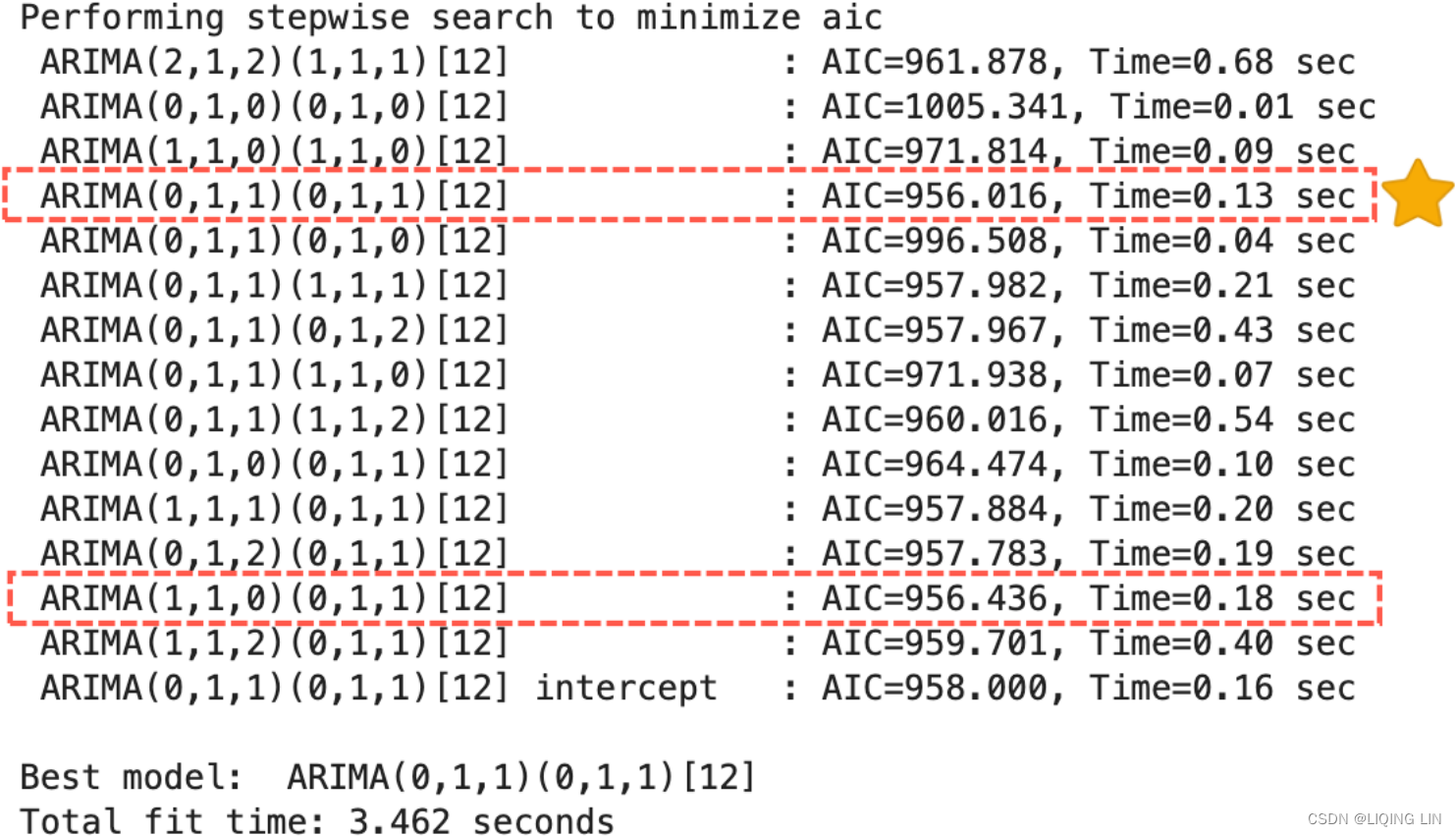

) This should print the AIC results for each model from the step-wise algorithm: Figure 11.2 – auto_arima evaluating different SARIMA models

Figure 11.2 – auto_arima evaluating different SARIMA models

The best model was selected based on AIC, which is controlled by the information_criterion parameter. This can be changed to any of the four supported information criterias aic (default), bic , hqic(Hannan–Quinn information criterion) , and oob (“out of bag”https://blog.csdn.net/Linli522362242/article/details/104771157).

In Figure 11.2 two models are highlighted with similar (close enough) AIC scores but with drastically/ˈdræstɪkli/ different截然不同 non-seasonal (p, q) orders.

- The winning model (marked with a star Seasonal ARIMA(0,1,1)(0,1,1,12)) does not have a non-seasonal AutoRegressive AR(p) component; instead, it assumes a moving average MA(q) process.

- On the other hand, the second highlighted model(Seasonal ARIMA(1,1,1)(0,1,1,12)) shows only an AR(p) process, for the non-seasonal component.

This demonstrates that even the best auto_arima still require your best judgment and analysis to evaluate the results.

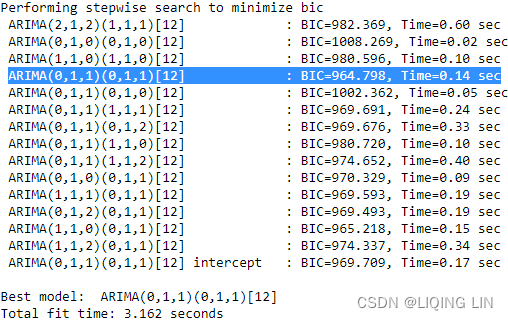

Choose BIC as the information criteria, as shown in the following code:

auto_model = pm.auto_arima( train,

seasonal=True, m=12,

test='adf',

information_criterion='bic',

stepwise=True,

trace=True

) The output will display the BIC for each iteration. Interestingly, the winning model will be the same as the one shown in Figure 11.2.

The output will display the BIC for each iteration. Interestingly, the winning model will be the same as the one shown in Figure 11.2.

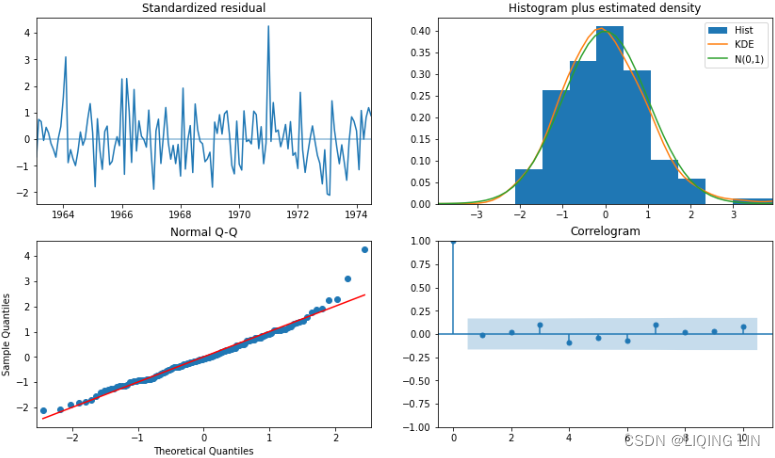

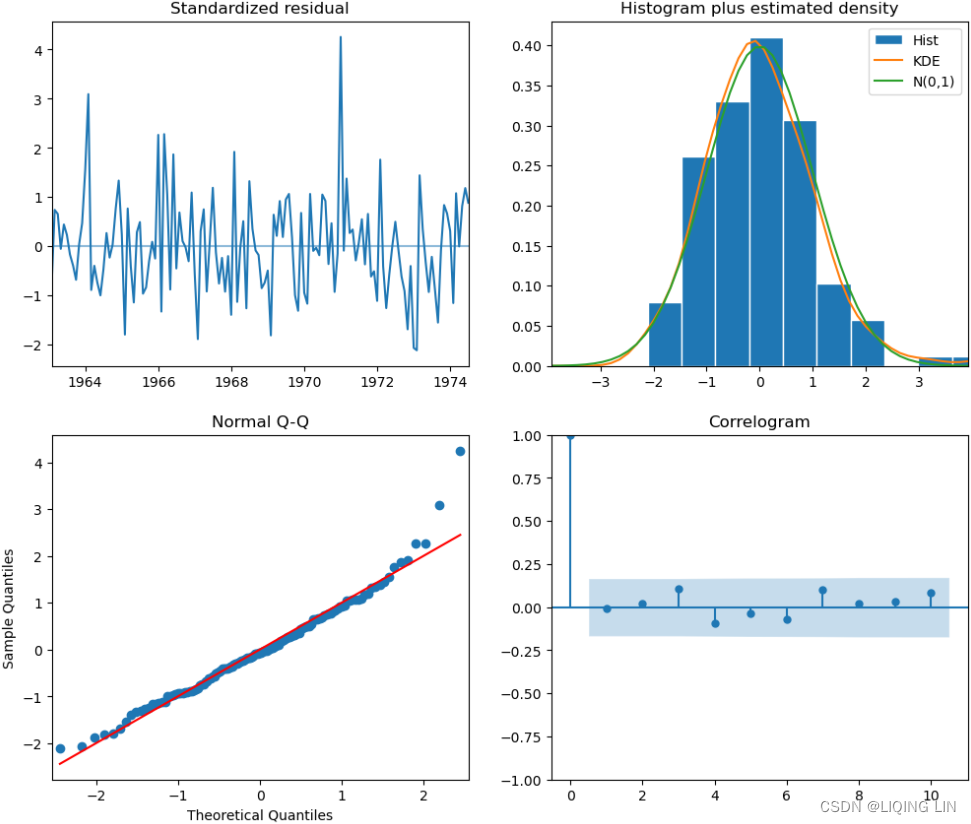

5. Inspect the residuals to gauge/ ɡeɪdʒ /估计,判断,测量 the overall performance of the model by using the plot_diagnostics method. This should produce the same results that were shown in Figure 10.24 from Chapter 10, Building Univariate Time Series Models Using Statistical Methods.

auto_model.plot_diagnostics( figsize=(14, 8) )

plt.show()

import matplotlib as mpl

mpl.rcParams['patch.force_edgecolor'] = True

mpl.rcParams['patch.edgecolor'] = 'white'

auto_model.plot_diagnostics( figsize=(12, 10) )

plt.show()

# https://alkaline-ml.com/pmdarima/modules/generated/pmdarima.arima.auto_arima.html

auto_model.scoring # return the default method ![]()

6. auto_model stored the winning SARIMA model.

auto_model![]()

New version:

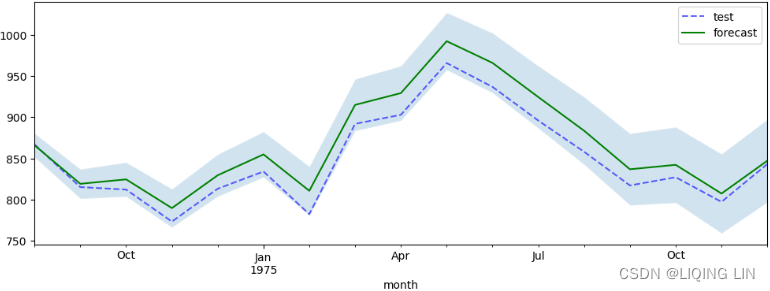

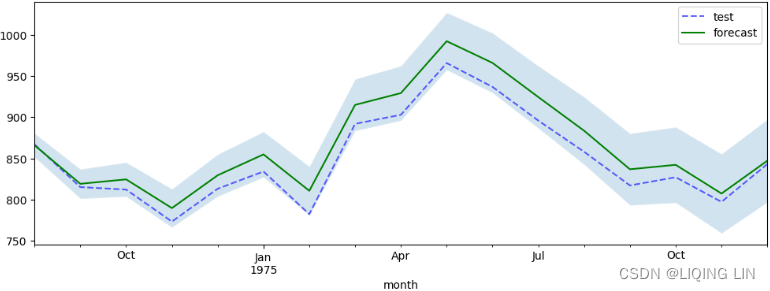

To make a prediction, you can use the predict method. You need to provide the number of periods to forecast forward into the future. You can obtain the confidence intervals with the prediction by updating the return_conf_int parameter from False to True . This allows you to plot the lower and upper confidence intervals using matplotlib's fill_between function. The following code uses the predict method, returns the confidence intervals, and plots the predicted values against the test set:

import pandas as pd

n = test.shape[0]

forecast, conf_intervals = auto_model.predict( n_periods=n,

return_conf_int=True

)# default alpha=0.05 : 95% confidence interval

lower_ci, upper_ci = zip( *conf_intervals ) # * https://blog.csdn.net/Linli522362242/article/details/118891490

index = test.index

ax = test.plot( style='--', color='blue',

alpha=0.6, figsize=(12,4)

)

pd.Series( forecast,

index=index

).plot( style='-', ax=ax, color='green' )

plt.fill_between( index, lower_ci, upper_ci, alpha=0.2 )

plt.legend( ['test', 'forecast'] )

plt.show()The shaded area is based on the lower and upper bounds of the confidence intervals:

Figure 11.3 – Plotting the forecast from auto_arima against actual test data with the confidence intervals

Figure 11.3 – Plotting the forecast from auto_arima against actual test data with the confidence intervals

The default confidence interval is set to 95% and can be controlled using the alpha parameter ( alpha=0.05 , which is 95% confidence interval) from the predict() method. The shaded area represents the likelihood that the real values would lie between this range. Ideally, you would prefer a narrower confidence interval range.

Notice that the forecast line lies in the middle of the shaded area. This represents the mean of the upper and lower bounds. The following code demonstrates this:

sum( forecast ) == sum( conf_intervals.mean(axis=1) )![]()

The auto_arima function from the pmdarima library is a wrapper for the statsmodels SARIMAX class. auto_arima intelligently attempts to automate the process of optimizing the training process to find the best model and parameters. There are three ways to do so:

- • When stepwise=True, auto_arima performs a stepwise search (which is the default).

- • With stepwise=False, it performs a brute-force grid search (full search).

- • With random=True, it performs a random search.

The pmdarima library offers a plethora/ˈpleθərə/过多,过剩 of useful functions to help you make informed decisions so that you can understand the data you are working with.

For example, the ndiff function performs stationarity tests to determine the differencing order, d , to make the time series stationary. The tests include

- the Augmented Dickey-Fuller ( ADF ) test,

In statistics and econometrics, an augmented Dickey–Fuller test (ADF) tests the null hypothesisof a unit root is present in a time series sample. The alternative hypothesis

is different depending on which version of the test is used, but is usually stationarity or trend-stationarity. It is an augmented version of the Dickey–Fuller test for a larger and more complicated set of time series models.t4_Trading Strategies(dual SMA_Naive_Turtle_pair_Pearson_z-scores_ARIMA_autoregressive_Dickey–Fuller_LIQING LIN的博客-CSDN博客

Here are some basic autoregression models for use in ADF testing:- No constant and no trend:

AR(1) :==>

the constant term c has been suppressed常数项已被抑制

Subtract from both sides==>

from both sides==>

OR

- if -1<

<1, the model would be stationary

<1, the model would be stationary  ==1, the series

==1, the series  is non-stationary,

is non-stationary,

>1,

>1,  , the series

, the series is divergent and the difference series is non-stationary

is divergent and the difference series is non-stationary- Therefore, judging whether a sequence is stable can be achieved by checking whether

is strictly less than 1.

is strictly less than 1. - A unit root is present if

=1. The model would be non-stationary in this case.

=1. The model would be non-stationary in this case.  is the first difference operator and

is the first difference operator and  This model can be estimated and testing for a unit root is equivalent to testing

This model can be estimated and testing for a unit root is equivalent to testing  Since the test is done over the residual term rather than raw data, it is not possible to use standard t-distribution to provide critical values. Therefore, this statistic t has a specific distribution simply known as the Dickey–Fuller table.

Since the test is done over the residual term rather than raw data, it is not possible to use standard t-distribution to provide critical values. Therefore, this statistic t has a specific distribution simply known as the Dickey–Fuller table.

https://blog.csdn.net/Linli522362242/article/details/121721868

- if -1<

- A constant without a trend:

- With a constant and trend:

Here, is the first difference operator and

is the first difference operator and

is the drift constant漂移常数,

is the coefficient on a time trend,

is the coefficient of our hypothesis, judging whether a sequence is stable can be achieved by checking whether

is strictly less than 0

is the lag order of the first-differences autoregressive process.

is white noise

- class

pmdarima.arima.ADFTest(alpha=0.05, k=None)

This test is generally used indirectly via the pmdarima.arima.ndiffs() function, which computes the differencing term,d.alpha : float, optional (default=0.05)

Level of the test

k : int, optional (default=None)

The drift parameter. Ifkis None, it will be set to:np.trunc( np.power(x.shape[0]-1, 1/3.0) )#The truncated value of the scalar x is the nearest integer i # which is closer to zero than x is. # In short, the fractional part of the signed number x is discarded. a = np.array([-1.7, -1.5, -0.2, 0.2, 1.5, 1.7, 2.0]) np.trunc(a) # array([-1., -1., -0., 0., 1., 1., 2.])

- No constant and no trend:

- the Kwiatkowski–Phillips–Schmidt–Shin ( kpss ) test,

In econometrics, Kwiatkowski–Phillips–Schmidt–Shin (KPSS) tests are used for testing a null hypothesisthat an observable time series is stationary around a deterministic trend (i.e. trend-stationary : A process that does not exhibit a trend.) against the alternative of a unit root.

This test is generally used indirectly via the pmdarima.arima.ndiffs() function, which computes the differencing term,d.alpha : float, optional (default=0.05)

Level of the test

null : str, optional (default=’level’)

Whether to fit the linear model on the one vector, or an arange. If

nullis ‘trend’, a linear model is fit on an arange, if ‘level’, it is fit on the one vector.lshort : bool, optional (default=True)

Whether or not to truncate the

lvalue in the C code. - and the Phillips-Perron

( pp : the Phillips-Perron test (named after Peter C. B. Phillips and Pierre Perron) is a unit root test. It is used in time series analysis to test the null hypothesisthat a time series is integrated of order 1 (trend d=1). It builds on the Dickey–Fuller test of the null hypothesis

or

in

, where

is the first difference operator :

is the first difference operator :  is non-stationary

is non-stationary) test. pmdarima.arima.PPTest — pmdarima 2.0.2 documentation

This test is generally used indirectly via the pmdarima.arima.ndiffs() function, which computes the differencing term,d.

This will return a boolean value(1 or 0), indicating whether the series is stationary or not. - class

pmdarima.arima.CHTest(m)

The Canova-Hansen test for seasonal differences. Canova and Hansen (1995) proposed a test statistic for the null hypothesis that the seasonal pattern is stable. The test statistic can be formulated in terms of seasonal dummies or seasonal cycles.

The former allows us to identify seasons (e.g. months or quarters) that are not stable, while the latter tests the stability of seasonal cycles (e.g. cycles of period 2 and 4 quarters in quarterly data).m : int

The seasonal differencing term. For monthly data, e.g., this would be 12. For quarterly, 4, etc. For the Canova-Hansen test to work,

mmust exceed 1.estimate_seasonal_differencing_term(x)

Estimate the seasonal differencing term. D - class

pmdarima.arima.OCSBTest(m, lag_method='aic', max_lag=3)

Compute the Osborn, Chui, Smith, and Birchenhall (OCSB) test for an input time series to determine whether it needs seasonal differencing. The regression equation may include lags of the dependent variable.

Whenlag_method= “fixed”, the lag order is fixed tomax_lag;

otherwise,max_lagis the maximum number of lags considered in a lag selection procedure that minimizes thelag_methodcriterion, which can be “aic”, “bic” or corrected AIC, “aicc”.

Critical values for the test are based on simulations, which have been smoothed over to produce critical values for all seasonal periodsm : int

The seasonal differencing term. For monthly data, e.g., this would be 12. For quarterly, 4, etc. For the OCSB test to work,

mmust exceed 1.lag_method : str, optional (default=”aic”)

The lag method to use. One of (“fixed”, “aic”, “bic”, “aicc”). The metric for assessing model performance after fitting a linear model.

max_lag : int, optional (default=3)

The maximum lag order to be considered by

lag_method.estimate_seasonal_differencing_term(x)

Estimate the seasonal differencing term. D pmdarima.arima.ndiffs(x, alpha=0.05, test='kpss', max_d=2, **kwargs)

Estimate ARIMA differencing term,d.

Perform a test of stationarity for different levels ofdto estimate the number of differences required to make a given time series stationary. Will select the maximum value ofdfor which the time series is judged stationary by the statistical test.

return d:

The estimated differencing term. This is the maximum value ofdsuch thatd <= max_dand the time series is judged stationary. If the time series is constant, will return 0.6. Tips to using auto_arima — pmdarima 2.0.2 documentation

Similarly, the nsdiff function helps estimate the number of seasonal differencing orders ( D ) that are needed. The implementation covers two tests–the Osborn-Chui-Smith-Birchenhall ( ocsb ) and Canova-Hansen ( ch ) tests:

from pmdarima.arima.utils import ndiffs, nsdiffs

# ADF test:

n_adf = ndiffs( milk, test='adf' )

# KPSS test:

# unitroot_ndiffs gives 'the number of differences' required

# to lead to a 'stationary' series based on the KPSS test

n_kpss = ndiffs( milk, test='kpss' )

# PP test:

n_pp = ndiffs( milk, test='pp' )

# OCSB test

oscb_max_D = nsdiffs(milk, test='ocsb', m=12, max_D=12)

# Ch test

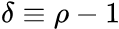

ch_max_D = nsdiffs(milk, test='ch', m=12, max_D=12)print( f'''

differencing (d) term using:

ADF: {n_adf},

KPSS: {n_kpss},

pp: {n_pp},

oscb: Seasonal differencing (D) term: {oscb_max_D},

ch_max_D: Seasonal differencing (D) term for oscb: {ch_max_D}

''') This is the maximum value of

This is the maximum value of d such that d<=max_d and the time series is judged stationary. If the time series is constant, will return 0.

auto_arima() gives you more control over the evaluation process by providing a min and max constraints. For example, you can provide min and max constraints for the non-seasonal autoregressive order, p , or the seasonal moving average, Q.

# https://alkaline-ml.com/pmdarima/modules/generated/pmdarima.arima.auto_arima.html

model = pm.auto_arima( train,

seasonal=True,

with_intercept = True, # If with_intercept is True, trend will be used.

d = 1, max_d=2,

start_p=0, max_p=2,

start_q=0, max_q=2,

m=12,

D = 1, max_D =2,

start_P=0, max_P=2,

start_Q=0, max_Q=2,

information_criterion='aic',

stepwise=False,

out_of_sample_size=25,

test='kpss',

score='mape', # Mean absolute percentage error

trace=True,

)With trace=True, executing the preceding code will show the AIC score for each fitted model. The following code shows the seasonal ARIMA model and the AIC scores for the all models:

Once completed, it should print out the best model.

Once completed, it should print out the best model.

If you run the preceding code, auto_arima will create different models for every combination of the parameter values based on the constraints you provided. Because stepwise is set to False , it becomes a brute-force grid search in which every permutation for the different variable combinations is tested one by one. Hence, this is generally a much slower process, but by providing these constraints, you can improve the search performance.

The approach that was taken here, with stepwise=False , should resemble the approach you took in Chapter 10, Building Univariate Time Series Models Using Statistical Methods, in the Forecasting univariate time series data with seasonal ARIMA recipe, in the There's more... section.

model.out_of_sample_size ![]()

The ARIMA class can fit only a portion of the data if specified, in order to retain an “out of bag”sample score. This is the number of examples from the tail of the time series to hold out and use as validation examples. The model will not be fit on these samples, but the observations will be added into the model’s endog and exog arrays so that future forecast values originate from the end of the endogenous vector.

model.summary()

model.plot_diagnostics( figsize=(12,7) )

plt.show()

Forecasting time series data using Facebook Prophet

The Prophet library is a popular open source project that was originally developed at Facebook (Meta) based on a 2017 paper that proposed an algorithm for time series forecasting titled Forecasting at Scale. The project soon gained popularity due to its simplicity, its ability to create compelling and performant forecasting models, and its ability to handle complex seasonality, holiday effects, missing data, and outliers. The Prophet library automates many aspects of designing a forecasting model while providing additional out-of-the-box visualizations开箱即用的可视化效果. The library offers additional capabilities, such as building growth models (saturated forecasts), working with uncertainty in trend and seasonality, and changepoint detection.

Overall, Prophet automated many aspects of building and optimizing the model. Building a complex model was straightforward with Prophet. However, you still had to provide some instructions initially to ensure the model was tuned properly. For example, you had to determine if the seasonal effect was additive or multiplicative when initializing the model. You also had to specify a monthly frequency when extending the periods into the future with freq=' MS'(Any valid frequency for pd.date_range,such as 'D' or 'M'.) in the make_future_dataframe method.

In this recipe, you will use the Milk Production dataset used in the previous recipe. This will help you understand the different forecasting approaches while using the same dataset for benchmarking.

The Prophet algorithm is an additive regression model that can handle non-linear trends and works well with strong seasonal effects. The algorithm decomposes a time series into three main components: trend, seasonality, and holidays. The model can be written as follows:

Here,

describes a piecewise-linear trend function, describes a piecewise-linear trend (or “growth term”)

represents the periodic seasonality function, describes the various seasonal patterns

- ℎ(

) captures the holiday effects, and

is the white noise error term (residual).

- The knots/nɑːts/ 结点 (or changepoints) for the piecewise-linear trend are automatically selected if not explicitly specified. Optionally, a logistic function can be used to set an upper bound on the trend.

- The seasonal component consists of Fourier terms傅立叶项 of the relevant periods. By default,

- order 10 is used for annual seasonality and

- order 3 is used for weekly seasonality.

-

Fourier terms are periodic and that makes them useful in describing a periodic pattern.

-

Fourier terms can be added linearly to describe more complex functions.

###############https://www.seas.upenn.edu/~kassam/tcom370/n99_2B.pdf

and:

assume:

assume:

##########https://sigproc.mit.edu/_static/fall19/lectures/lec02a.pdf

and:

assume:

assume:

if

:

###########################

We have for instance the following relationship with only two termsthe amplitude

and phase shift(相移)

.

The right side is not an easy function to work with. The phase shift θ makes the function non-linear and you can not use it in, for instance, linear regression.

With more Fourier terms you can describe more variations in the shape of function.

m : is the seasonal period,is an ARIMA process.

K : The total number of Fourier terms for each seasonal period could be selected to minimise the AICc.

If m is the seasonal period, then the first few Fourier terms are given by and so on. If we have monthly seasonality, and we use the first 11 of these predictor variables, then we will get exactly the same forecasts as using 11 dummy variables.

and so on. If we have monthly seasonality, and we use the first 11 of these predictor variables, then we will get exactly the same forecasts as using 11 dummy variables.

With Fourier terms, we often need fewer predictors than with dummy variables, especially when m is large. This makes them useful for weekly data, for example, where m≈52. For short seasonal periods (e.g., quarterly data, m=4), there is little advantage in using Fourier terms over seasonal dummy variables.

Trend

It is common for time series data to be trending. A linear trend can be modelled by simply usingas a predictor,

where t=1,…,T. A trend variable can be specified in theTSLM()function using thetrend()special. In Section 7.7 we discuss how we can also model nonlinear trends.

These Fourier terms are produced using thefourier()function. For example, the Australian beer quarterly data can be modelled like this.

TheKargument tofourier()specifies how many pairs of sin and cos terms to include. The maximum allowed is K=m/2 where m is the seasonal period. Because we have used the maximum here, the results are identical to those obtained when using seasonal dummy variables.fourier_beer <- recent_production %>% model(TSLM(Beer ~ trend() + fourier(K = 2))) report(fourier_beer) #> Series: Beer #> Model: TSLM #> #> Residuals: #> Min 1Q Median 3Q Max #> -42.90 -7.60 -0.46 7.99 21.79 #> #> Coefficients: #> Estimate Std. Error t value Pr(>|t|) #> (Intercept) 446.8792 2.8732 155.53 < 2e-16 *** #> trend() -0.3403 0.0666 -5.11 2.7e-06 *** #> fourier(K = 2)C1_4 8.9108 2.0112 4.43 3.4e-05 *** #> fourier(K = 2)S1_4 -53.7281 2.0112 -26.71 < 2e-16 *** #> fourier(K = 2)C2_4 -13.9896 1.4226 -9.83 9.3e-15 *** #> --- #> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 #> #> Residual standard error: 12.2 on 69 degrees of freedom #> Multiple R-squared: 0.924, Adjusted R-squared: 0.92 #> F-statistic: 211 on 4 and 69 DF, p-value: <2e-16If only the first two Fourier terms(K=1) are used (

and

), the seasonal pattern will follow a simple sine wave.

A regression model containing Fourier terms is often called a harmonic regression because the successive Fourier terms represent harmonics of the first two Fourier terms.

-

- Holiday effects are added as simple dummy variables.

Dummy variables

when forecasting daily sales and you want to take account of whether the day is a public holiday or not. So the predictor takes value “yes” on a public holiday, and “no” otherwise.

This situation can still be handled within the framework of multiple regression models by creating a “dummy variable” which takes value 1 corresponding to “yes” and 0 corresponding to “no”. A dummy variable is also known as an “indicator variable”.

For example, based on the gender variable, we can create a new variable that takes the form (3.26)

(3.26)

and use this variable as a predictor in the regression equation. This results in the model (3.27)

(3.27)

Nowcan be interpreted as the average credit card balance among males,

as the average credit card balance among females, and

as the average difference in credit card balance between females and males.

Table 3.7 displays the coefficient estimates and other information associated with the model (3.27). The average credit card debt for males is estimated to be $509.80, whereas females are estimated to carry $19.73 in additional debt for a total of $509.80 + $19.73 = $529.53.

displays the coefficient estimates and other information associated with the model (3.27). The average credit card debt for males is estimated to be $509.80, whereas females are estimated to carry $19.73 in additional debt for a total of $509.80 + $19.73 = $529.53. - The model is estimated using a Bayesian approach to allow for automatic selection of the changepoints and other model characteristics.

The algorithm uses Bayesian inferencing to automate many aspects of tuning the model and finding the optimized values for each component. Behind the scenes, Prophet uses Stan, a library for Bayesian inferencing, through the PyStan library as the Python interface to Stan.

The Prophet library is very opinionated when it comes to column names. The Prophet class does not expect a DatetimeIndex but rather a datetime column called ds and a variable column (that you want to forecast) called y. Note that if you have more than two columns, Prophet will only pick the ds and y columns and ignore the rest. If these two columns do not exist, it will throw an error. Follow these steps:

1. Start by reading the milk_productions.csv fle and rename the columns ds and y

milk_file='https://raw.githubusercontent.com/PacktPublishing/Time-Series-Analysis-with-Python-Cookbook/main/datasets/Ch10/milk_production.csv'

# milk = pd.read_csv( milk_file,

# index_col='month',

# parse_dates=True,

# )

# milk=milk.reset_index()

# milk.columns=['ds','y']

# OR

milk = pd.read_csv( milk_file,

parse_dates=['month']

)

milk.columns = ['ds', 'y'] # rename the column

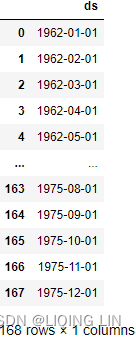

milk

Split the data into test and train sets. Let's go with a 90/10 split by using the following code:

idx = round( len(milk)*0.90 )

train, test = milk[:idx], milk[idx:]

print( f'Train: {train.shape}, Test: {test.shape}' ) ![]()

from bokeh.plotting import figure, show

from bokeh.models import ColumnDataSource, HoverTool

#from bokeh.layouts import column

import numpy as np

import hvplot.pandas

hvplot.extension("bokeh")

source = ColumnDataSource( data={'productionMonth':milk.ds.values,

'production':milk.y.values,

})

# def datetime(x):

# return np.array(x, dtype=datetime64)

p2 = figure( width=800, height=400,

title='Monthly Milk Production',

x_axis_type='datetime',

x_axis_label='ds', y_axis_label='Milk Production'

)

p2.xaxis.axis_label_text_font_style='normal'

p2.yaxis.axis_label_text_font_style='bold'

p2.xaxis.major_label_orientation=np.pi/4 # rotation

p2.title.align='center'

p2.title.text_font_size = '1.5em'

p2.line( x='productionMonth', y='production', source=source,

line_width=2, color='blue',

legend_label='y'

)

# https://docs.bokeh.org/en/latest/docs/first_steps/first_steps_3.html

p2.legend.location = "top_left"

p2.legend.label_text_font = "times"

p2.legend.label_text_font_style = "italic"

p2.circle(x='productionMonth', y='production', source=source,

fill_color='white', size=5

)

p2.add_tools( HoverTool( tooltips=[('Production Month', '@productionMonth{%Y-%m}'),

('Production', '@production{0}')

],

formatters={'@productionMonth':'datetime',

'@production':'numeral'

},

mode='vline'

)

)

show(p2)

Prophet

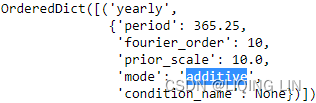

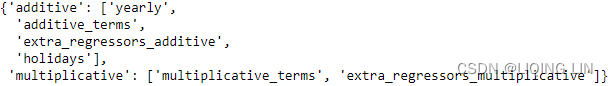

2. You can create an instance of the Prophet class and fit it on the train set in one line using the fit method. The milk production time series is monthly, with both trend and a steady seasonal fluctuation (additive: (The seasonal magnitudes and variations over time seem to be steady)).The default seasonality_mode in Prophet is additive, so leave it as-is:

from prophet import Prophet

model = Prophet().fit( train )![]() yearly_seasonality , weekly_seasonality , and daily_seasonality are set to auto by default, this allows Prophet to determine which ones to turn on or of based on the data.

yearly_seasonality , weekly_seasonality , and daily_seasonality are set to auto by default, this allows Prophet to determine which ones to turn on or of based on the data.

###########################

OR

from prophet.plot import plot_yearly

m = Prophet(yearly_seasonality=10).fit(train)

a = plot_yearly(m)

The default Fourier order for yearly seasonality is 10,

The default values are often appropriate, but they can be increased when the seasonality needs to fit higher-frequency changes, and generally be less smooth. The Fourier order can be specified for each built-in seasonality when instantiating the model, here it is increased to 20:

from prophet.plot import plot_yearly

m = Prophet(yearly_seasonality=20).fit(train)

a = plot_yearly(m)

###########################

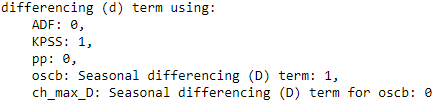

model.seasonalities

model.component_modes

3. Some setup needs to be done before you can use the model to make predictions. Use the make_future_dataframe method to extend the train DataFrame forward for a specific number of periods and at a specified frequency:

future = model.make_future_dataframe( len(test), #periods : number of periods to forecast forward

freq='MS', #Any valid frequency for pd.date_range,such as 'D' or 'M'.

include_history=True, # Boolean to include the historical

# dates in the data frame for predictions.

)

future.shape![]()

This extends the training data by 17 months (the number of periods in the test set : len(test) ). In total, you should have the exact number of periods that are in the milk DataFrame (train and test). The frequency is set to month start with freq=' MS' . The future object only contains one column, ds, of the datetime64[ns] type, which is used to populate the predicted values:

future

len( milk ) == len( future ) ![]()

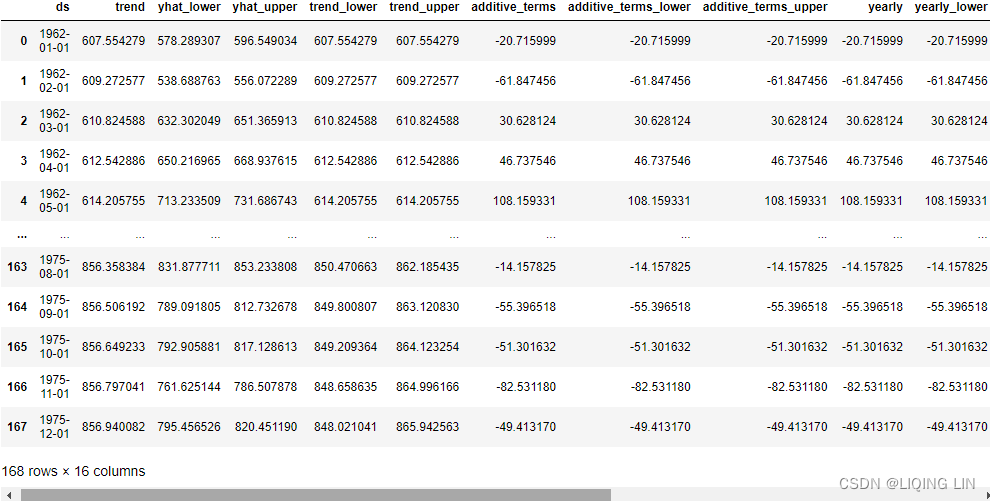

4. Now, use the predict method to take the future DataFrame and make predictions. The result will be a DataFrame that's the same length as forecast but now with additional columns:

forecast = model.predict( future )

forecast.columns

As per Prophet's documentation, there are three components for the uncertainty intervals (for example, yhat_lower and yhat_upper ):

- • Observation noise

- • Parameter uncertainty

- • Future trend uncertainty

By default, the uncertainty_samples parameter is set to 1000 , which is the number of simulations to estimate the uncertainty using the Hamiltonian Monte Carlo (HMC) algorithm. You can always reduce the number of samples that are simulated or set it to 0 or False to speed up the performance. If you set uncertainty_samples=0 or uncertainty_samples=False, the forecast's output will not contain any uncertainty interval calculations. For example, you will not have yhat_lower or yhat_upper.

help(Prophet)class Prophet(builtins.object)

| Prophet(growth='linear', changepoints=None, n_changepoints=25, changepoint_range=0.8, yearly_seasonality='auto', weekly_seasonality='auto', daily_seasonality='auto', holidays=None, seasonality_mode='additive', seasonality_prior_scale=10.0, holidays_prior_scale=10.0, changepoint_prior_scale=0.05, mcmc_samples=0, interval_width=0.8, uncertainty_samples=1000, stan_backend=None)

|

| Prophet forecaster.

|

| Parameters

| ----------

| growth: String 'linear', 'logistic' or 'flat' to specify a linear, logistic or

| flat trend.

| changepoints: List of dates at which to include potential changepoints. If

| not specified, potential changepoints are selected automatically.

| n_changepoints: Number of potential changepoints to include. Not used

| if input `changepoints` is supplied. If `changepoints` is not supplied,

| then n_changepoints potential changepoints are selected uniformly from

| the first `changepoint_range` proportion of the history.

| changepoint_range: Proportion of history in which trend changepoints will

| be estimated. Defaults to 0.8 for the first 80%. Not used if

| `changepoints` is specified.

| yearly_seasonality: Fit yearly seasonality.

| Can be 'auto', True, False, or a number of Fourier terms to generate.

| weekly_seasonality: Fit weekly seasonality.

| Can be 'auto', True, False, or a number of Fourier terms to generate.

| daily_seasonality: Fit daily seasonality.

| Can be 'auto', True, False, or a number of Fourier terms to generate.

| holidays: pd.DataFrame with columns holiday (string) and ds (date type)

| and optionally columns lower_window and upper_window which specify a

| range of days around the date to be included as holidays.

| lower_window=-2 will include 2 days prior to the date as holidays. Also

| optionally can have a column prior_scale specifying the prior scale for

| that holiday.

| seasonality_mode: 'additive' (default) or 'multiplicative'.

| seasonality_prior_scale: Parameter modulating the strength of the

| seasonality model. Larger values allow the model to fit larger seasonal

| fluctuations, smaller values dampen the seasonality. Can be specified

| for individual seasonalities using add_seasonality.

| holidays_prior_scale: Parameter modulating the strength of the holiday

| components model, unless overridden in the holidays input.

| changepoint_prior_scale: Parameter modulating the flexibility of the

| automatic changepoint selection. Large values will allow many

| changepoints, small values will allow few changepoints.

| mcmc_samples: Integer, if greater than 0, will do full Bayesian inference

| with the specified number of MCMC samples. If 0, will do MAP

| estimation.

| interval_width: Float, width of the uncertainty intervals provided

| for the forecast. If mcmc_samples=0, this will be only the uncertainty

| in the trend using the MAP estimate of the extrapolated generative

| model. If mcmc.samples>0, this will be integrated over all model

| parameters, which will include uncertainty in seasonality.

| uncertainty_samples: Number of simulated draws used to estimate

| uncertainty intervals. Settings this value to 0 or False will disable

| uncertainty estimation and speed up the calculation.

| stan_backend: str as defined in StanBackendEnum default: None - will try to

| iterate over all available backends and find the working one

Notice that Prophet returned a lot of details to help you understand how the model performs. Of interest are ds and the predicted value, yhat . Both yhat_lower and yhat_upper represent the uncertainty intervals for the prediction ( yhat ).

forecast

Create a cols object to store the columns of interest so that you can use them later:

cols = ['ds', 'yhat', 'yhat_lower', 'yhat_upper' ]visualize the datapoints, forecast and uncertainty interval

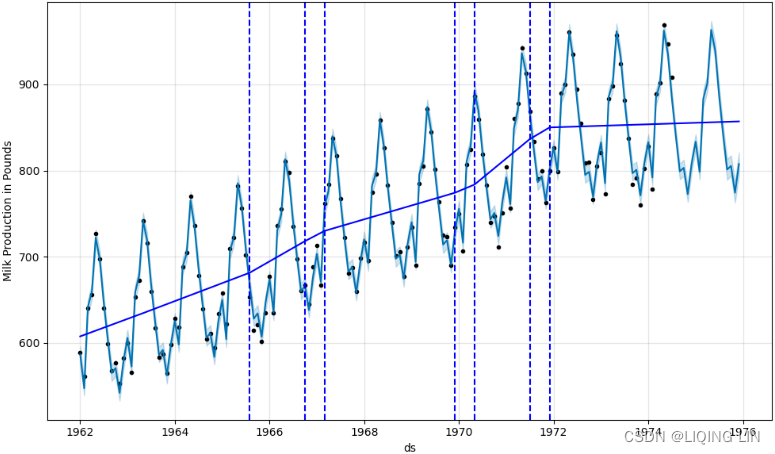

5. The model object provides two plotting methods: plot and plot_components . Start by using plot to visualize the forecast from Prophet:

fig, ax = plt.subplots(1,1, figsize=(10,6))

model.plot( forecast, ax=ax, ylabel='Milk Production in Pounds', include_legend=True )

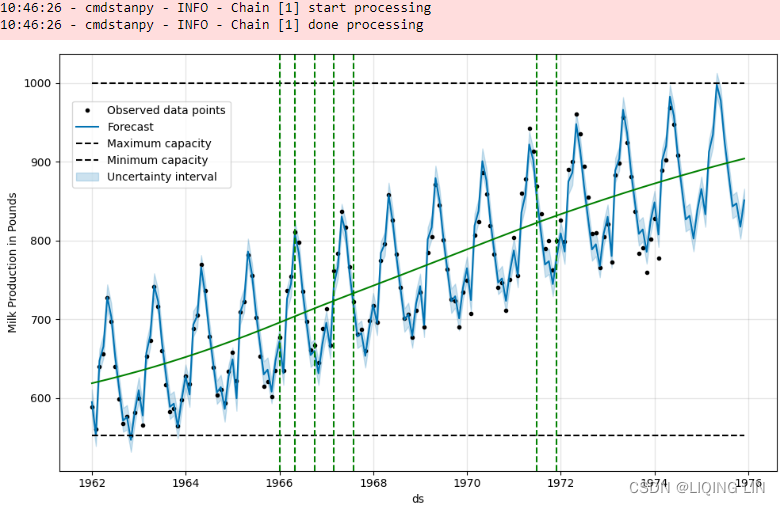

plt.show() This should produce a time series plot with two forecasts (predictions): the first segment can be distinguished by the dots (one for each training data point), while the line represents the estimated forecast for the historical data. This is followed by another segment that shows future predictions beyond the training data (no dots present): Figure 11.4 – Plotting the forecast (historical and future) using Prophet

Figure 11.4 – Plotting the forecast (historical and future) using Prophet

The shaded area in Figure 11.4 represents the uncertainty intervals. This is represented by the yhat_lower and yhat_upper columns in the forecast DataFrame.

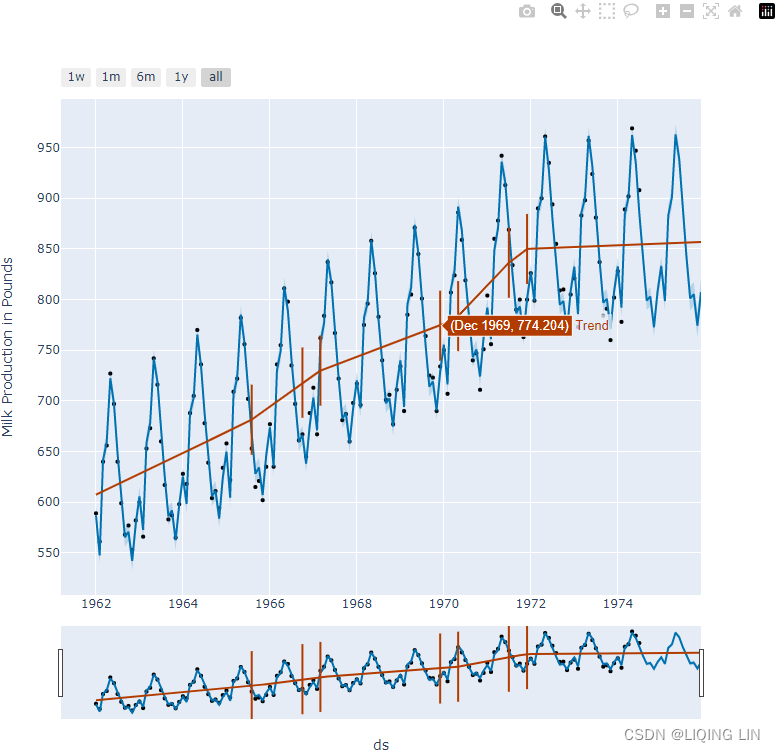

from prophet.plot import plot_plotly, plot_components_plotly

plot_plotly(model, forecast, figsize=(800,800))

Here, you can see the distinction between past and future estimates (forecast) more concretely by only plotting the future periods beyond the training set. You can accomplish this with the following code:

predicted = model.predict( test )

fig, ax = plt.subplots(1,1, figsize=(10,6))

model.plot( predicted, ax=ax, ylabel='Milk Production in Pounds', include_legend=True )

plt.show() This should produce a plot that's similar to the one shown in Figure 11.4, but it will only show the forecast line for the second segment(test set) – that is, the future forecast.

This should produce a plot that's similar to the one shown in Figure 11.4, but it will only show the forecast line for the second segment(test set) – that is, the future forecast.

plot_plotly( model, forecast[-len(test):],

ylabel='Milk Production in Pounds',figsize=(800, 600) )

plot identifed components(trend and seasonality (annual) ) in forecast

6. The next important plot deals with the forecast components. Use the plot_components method to plot the components:

model.plot_components( forecast, figsize=(10,6) )

plt.show()The number of subplots will depend on the number of components that have been identifed in the forecast. For example, if holiday was included, then it will show the holiday component. In our example, there will be two subplots: trend and yearly

The shaded area in the trend plot represents the uncertainty interval for estimating the trend. The data is stored in the trend_lower and trend_upper columns of the forecast DataFrame.

Figure 11.5 – Plotting the components showing trend and seasonality (annual)

Figure 11.5 – Plotting the components showing trend and seasonality (annual)

Figure 11.5 breaks down the trend and seasonality of the training data. If you look at Figure 11.4, you will see a positive upward trend that becomes steady (slows down) starting from 1972. The seasonal pattern shows an increase in production around summertime (this peaks in Figure 11.4).

from prophet.plot import plot_plotly, plot_components_plotly

plot_components_plotly(model, forecast, figsize=(800,400))The shaded area in the trend plot represents the uncertainty interval for estimating the trend. The data is stored in the trend_lower and trend_upper columns of the forecast DataFrame.

Actual VS Forecast

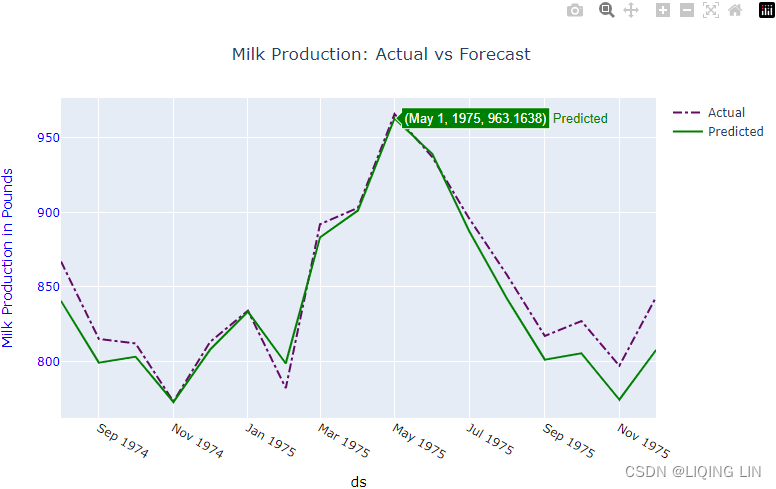

7. Finally, compare with out-of-sample data (the test data) to see how well the model performs:

ax = test.plot( x='ds', y='y',

label='Actual', style='-.', color='blue',

figsize=(12,8)

)

predicted.plot( x='ds', y='yhat',

label='Predicted', style='-', color='green',

ax=ax

)

plt.title('Milk Production: Actual vs Forecast')

plt.show()

test

import plotly.graph_objects as go

# predicted = model.predict( test )

# https://stackoverflow.com/questions/59953431/how-to-change-plotly-figure-size

layout=go.Layout(width=800, height=500,

title='Milk Production: Actual vs Forecast',

title_x=0.5, title_y=0.9,

xaxis=dict(title='ds', color='black', tickangle=30),

yaxis=dict(title='Milk Production in Pounds', color='blue')

)

fig = go.Figure(layout=layout)

# https://plotly.com/python/marker-style/#custom-marker-symbols

# circle-open-dot

# https://support.sisense.com/kb/en/article/changing-line-styling-plotly-python-and-r

fig.add_trace( go.Scatter( name='Actual',

mode ='lines', #marker_symbol='circle',

line=dict(shape = 'linear', color = 'rgb(100, 10, 100)',

width = 2, dash = 'dashdot'),

x=test['ds'], y=test['y'],

#title='Milk Production: Actual vs Forecast'

)

)

fig.add_trace( go.Scatter( name='Predicted',

mode ='lines',

marker_color='green', marker_line_color='green',

x=predicted['ds'], y=predicted['yhat'],

)

)

#fig.update_xaxes(showgrid=True, ticklabelmode="period")

fig.show() Figure 11.6 – Comparing Prophet's forecast against test data

Figure 11.6 – Comparing Prophet's forecast against test data

Compare this plot with Figure 11.3 to see how Prophet compares to the SARIMA model that you obtained using auto_arima

to see how Prophet compares to the SARIMA model that you obtained using auto_arima

Notice that for the highly seasonal milk production data, the model did a great job. Generally, Prophet shines when it's working with strong seasonal time series data一般来说, Prophet 在处理强季节性时间序列数据时表现出色.

Original data vs Changepoints

Prophet automatically detects changes or sudden fluctuations in the trend. Initially, it will place 25 points using the first 80% of the training data. You can change the number of changepoints with the n_changepoints parameter. Similarly, the proportion of past data to use to estimate the changepoints can be updated using changepoint_range , which defaults to 0.8 (or 80%). You can observe the 25 changepoints using the changepoints attribute from the model object. The following code shows the first five:

model.changepoint_range![]()

model.changepoints.shape![]()

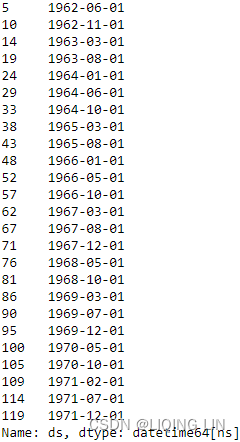

model.changepoints

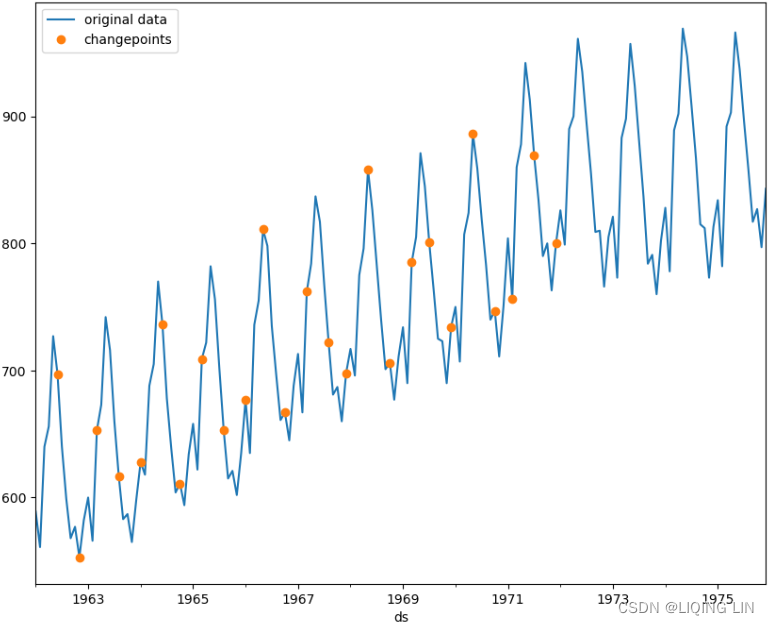

You can plot these points as well, as shown in the following code:

ax = milk.set_index('ds').plot( figsize=(10,8) )

milk.set_index('ds').loc[model.changepoints].plot( style='o',

ax=ax

)

plt.legend( ['original data', 'changepoints'] )

plt.show()This should produce a plot that contains the original time series data and the 25 potential changepoints that indicate changes in trend:

Figure 11.7 – The 25 potential changepoints, as identified by Prophet

Figure 11.7 – The 25 potential changepoints, as identified by Prophet

These potential changepoints were estimated from the first 80% of the training data.

Did you mean "domain"?

Valid properties:

anchor

If set to an opposite-letter axis id (e.g. `x2`, `y`),

this axis is bound to the corresponding opposite-letter

axis. If set to "free", this axis' position is

determined by `position`.

automargin

Determines whether long tick labels automatically grow

the figure margins.

autorange

Determines whether or not the range of this axis is

computed in relation to the input data. See `rangemode`

for more info. If `range` is provided, then `autorange`

is set to False.

autotypenumbers

Using "strict" a numeric string in trace data is not

converted to a number. Using *convert types* a numeric

string in trace data may be treated as a number during

automatic axis `type` detection. Defaults to

layout.autotypenumbers.

calendar

Sets the calendar system to use for `range` and `tick0`

if this is a date axis. This does not set the calendar

for interpreting data on this axis, that's specified in

the trace or via the global `layout.calendar`

categoryarray

Sets the order in which categories on this axis appear.

Only has an effect if `categoryorder` is set to

"array". Used with `categoryorder`.

categoryarraysrc

Sets the source reference on Chart Studio Cloud for

`categoryarray`.

categoryorder

Specifies the ordering logic for the case of

categorical variables. By default, plotly uses "trace",

which specifies the order that is present in the data

supplied. Set `categoryorder` to *category ascending*

or *category descending* if order should be determined

by the alphanumerical order of the category names. Set

`categoryorder` to "array" to derive the ordering from

the attribute `categoryarray`. If a category is not

found in the `categoryarray` array, the sorting

behavior for that attribute will be identical to the

"trace" mode. The unspecified categories will follow

the categories in `categoryarray`. Set `categoryorder`

to *total ascending* or *total descending* if order

should be determined by the numerical order of the

values. Similarly, the order can be determined by the

min, max, sum, mean or median of all the values.

color

Sets default for all colors associated with this axis

all at once: line, font, tick, and grid colors. Grid

color is lightened by blending this with the plot

background Individual pieces can override this.

constrain

If this axis needs to be compressed (either due to its

own `scaleanchor` and `scaleratio` or those of the

other axis), determines how that happens: by increasing

the "range", or by decreasing the "domain". Default is

"domain" for axes containing image traces, "range"

otherwise.

constraintoward

If this axis needs to be compressed (either due to its

own `scaleanchor` and `scaleratio` or those of the

other axis), determines which direction we push the

originally specified plot area. Options are "left",

"center" (default), and "right" for x axes, and "top",

"middle" (default), and "bottom" for y axes.

dividercolor

Sets the color of the dividers Only has an effect on

"multicategory" axes.

dividerwidth

Sets the width (in px) of the dividers Only has an

effect on "multicategory" axes.

domain

Sets the domain of this axis (in plot fraction).

dtick

Sets the step in-between ticks on this axis. Use with

`tick0`. Must be a positive number, or special strings

available to "log" and "date" axes. If the axis `type`

is "log", then ticks are set every 10^(n*dtick) where n

is the tick number. For example, to set a tick mark at

1, 10, 100, 1000, ... set dtick to 1. To set tick marks

at 1, 100, 10000, ... set dtick to 2. To set tick marks

at 1, 5, 25, 125, 625, 3125, ... set dtick to

log_10(5), or 0.69897000433. "log" has several special

values; "L<f>", where `f` is a positive number, gives

ticks linearly spaced in value (but not position). For

example `tick0` = 0.1, `dtick` = "L0.5" will put ticks

at 0.1, 0.6, 1.1, 1.6 etc. To show powers of 10 plus

small digits between, use "D1" (all digits) or "D2"

(only 2 and 5). `tick0` is ignored for "D1" and "D2".

If the axis `type` is "date", then you must convert the

time to milliseconds. For example, to set the interval

between ticks to one day, set `dtick` to 86400000.0.

"date" also has special values "M<n>" gives ticks

spaced by a number of months. `n` must be a positive

integer. To set ticks on the 15th of every third month,

set `tick0` to "2000-01-15" and `dtick` to "M3". To set

ticks every 4 years, set `dtick` to "M48"

exponentformat

Determines a formatting rule for the tick exponents.

For example, consider the number 1,000,000,000. If

"none", it appears as 1,000,000,000. If "e", 1e+9. If

"E", 1E+9. If "power", 1x10^9 (with 9 in a super

script). If "SI", 1G. If "B", 1B.

fixedrange

Determines whether or not this axis is zoom-able. If

true, then zoom is disabled.

gridcolor

Sets the color of the grid lines.

griddash

Sets the dash style of lines. Set to a dash type string

("solid", "dot", "dash", "longdash", "dashdot", or

"longdashdot") or a dash length list in px (eg

"5px,10px,2px,2px").

gridwidth

Sets the width (in px) of the grid lines.

hoverformat

Sets the hover text formatting rule using d3 formatting

mini-languages which are very similar to those in

Python. For numbers, see:

https://github.com/d3/d3-format/tree/v1.4.5#d3-format.

And for dates see: https://github.com/d3/d3-time-

format/tree/v2.2.3#locale_format. We add two items to

d3's date formatter: "%h" for half of the year as a

decimal number as well as "%{n}f" for fractional

seconds with n digits. For example, *2016-10-13

09:15:23.456* with tickformat "%H~%M~%S.%2f" would

display "09~15~23.46"

layer

Sets the layer on which this axis is displayed. If

*above traces*, this axis is displayed above all the

subplot's traces If *below traces*, this axis is

displayed below all the subplot's traces, but above the

grid lines. Useful when used together with scatter-like

traces with `cliponaxis` set to False to show markers

and/or text nodes above this axis.

linecolor

Sets the axis line color.

linewidth

Sets the width (in px) of the axis line.

matches

If set to another axis id (e.g. `x2`, `y`), the range

of this axis will match the range of the corresponding

axis in data-coordinates space. Moreover, matching axes

share auto-range values, category lists and histogram

auto-bins. Note that setting axes simultaneously in

both a `scaleanchor` and a `matches` constraint is

currently forbidden. Moreover, note that matching axes

must have the same `type`.

minexponent

Hide SI prefix for 10^n if |n| is below this number.

This only has an effect when `tickformat` is "SI" or

"B".

minor

:class:`plotly.graph_objects.layout.xaxis.Minor`

instance or dict with compatible properties

mirror

Determines if the axis lines or/and ticks are mirrored

to the opposite side of the plotting area. If True, the

axis lines are mirrored. If "ticks", the axis lines and

ticks are mirrored. If False, mirroring is disable. If

"all", axis lines are mirrored on all shared-axes

subplots. If "allticks", axis lines and ticks are

mirrored on all shared-axes subplots.

nticks

Specifies the maximum number of ticks for the

particular axis. The actual number of ticks will be

chosen automatically to be less than or equal to

`nticks`. Has an effect only if `tickmode` is set to

"auto".

overlaying

If set a same-letter axis id, this axis is overlaid on

top of the corresponding same-letter axis, with traces

and axes visible for both axes. If False, this axis

does not overlay any same-letter axes. In this case,

for axes with overlapping domains only the highest-

numbered axis will be visible.

position

Sets the position of this axis in the plotting space

(in normalized coordinates). Only has an effect if

`anchor` is set to "free".

range

Sets the range of this axis. If the axis `type` is

"log", then you must take the log of your desired range

(e.g. to set the range from 1 to 100, set the range

from 0 to 2). If the axis `type` is "date", it should

be date strings, like date data, though Date objects

and unix milliseconds will be accepted and converted to

strings. If the axis `type` is "category", it should be

numbers, using the scale where each category is

assigned a serial number from zero in the order it

appears.

rangebreaks

A tuple of

:class:`plotly.graph_objects.layout.xaxis.Rangebreak`

instances or dicts with compatible properties

rangebreakdefaults

When used in a template (as

layout.template.layout.xaxis.rangebreakdefaults), sets

the default property values to use for elements of

layout.xaxis.rangebreaks

rangemode