I. Environment setup (install any package not listed here with pip)

| Package | Version |
| --- | --- |
| python | 3.9.18 |
| torch | 2.1.1 |
| coppeliasim | 4.6.0 edu |

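1. Components needed for Python and V-REP (CoppeliaSim) co-simulation

2. Download the URDF

https://github.com/unitreerobotics/unitree_ros/tree/master/robots/a1_description

(Download both the mesh and the urdf folders from it.)

3. Modify the URDF

(1) Change the mesh file paths inside it to absolute paths.

(2) Change certain properties defined in the URDF to "static". The script is as follows: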

import xml.etree.ElementTree as ET

# Placeholder: set this to the location of the downloaded URDF file
urdf_file_path = 'to/your/own/urdf'

# Re-read the URDF content to make sure we have the latest version
with open(urdf_file_path, 'r') as file:
    urdf_content = file.read()

# Parse the URDF content again
tree = ET.ElementTree(ET.fromstring(urdf_content))
root = tree.getroot()

# Helper function to find and correct issues in the URDF
def correct_urdf_structure(root):
    # Iterate through all links and joints to ensure correct hierarchy and settings
    for link in root.findall('link'):
        # Check for respondable shapes and ensure they are set correctly
        collision_elements = link.findall('collision')
        for collision in collision_elements:
            # Assuming 'respondable' is an attribute we need to check and correct
            if 'respondable' in collision.attrib and collision.attrib['respondable'] == 'true':
                # Set respondable to false for static parts (links attached by a fixed joint)
                parent_joint = next((joint for joint in root.findall('joint') if joint.find('child').attrib['link'] == link.attrib['name']), None)
                if parent_joint is not None and parent_joint.get('type') == 'fixed':
                    collision.attrib['respondable'] = 'false'
    for joint in root.findall('joint'):
        joint_type = joint.get('type')
        if joint_type in ['revolute', 'prismatic', 'continuous']:
            # Dynamic joints should remain unchanged
            continue
        else:
            # Ensure all other joints are of type 'fixed'
            joint.set('type', 'fixed')
    return root

# Correct the URDF structure
corrected_root = correct_urdf_structure(root)

# Convert the corrected URDF back to a string
corrected_urdf_content = ET.tostring(corrected_root, encoding='unicode')

# Save the corrected URDF to a new file
corrected_urdf_file_path = 'to/your/own/path'
with open(corrected_urdf_file_path, 'w') as file:
    file.write(corrected_urdf_content)

II. V-REP side

1. Import the URDF file

2. Edit the script of the whole scene (the main script):

This makes the simulation of the whole scene start in sync with the Python side.

--lua
function sysCall_init()
    sim.setIntegerSignal('startSimulationSignal', 0)
    jointHandles = {}
    jointNames = {"FR_thigh_joint", "FL_thigh_joint", "RR_thigh_joint", "RL_thigh_joint", "FR_calf_joint", "FL_calf_joint", "RR_calf_joint", "RL_calf_joint"}
    for i = 1, #jointNames, 1 do
        local handle = sim.getObjectHandle(jointNames[i])
        table.insert(jointHandles, handle)
    end
end

function sysCall_actuation()
    -- When the Python side raises the signal, clear it and zero all joint target velocities
    local startSignal = sim.getIntegerSignal('startSimulationSignal')
    if startSignal == 1 then
        sim.setIntegerSignal('startSimulationSignal', 0)
        for i = 1, #jointHandles, 1 do
            sim.setJointTargetVelocity(jointHandles[i], 0)
        end
    end
end

-- The main script is not supposed to be modified, except in special cases.
require('defaultMainScript')

3. Attach a script to the robot

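Open the communication port in it:

function sysCall_init()
    simRemoteApi.start(19999)
end

4. Finally, add four walls around the simulation environment (otherwise the robot can disappear from the scene halfway through a run, and the resulting extreme values badly affect the reinforcement learning network)

Tick the options as shown so that the four walls stay fixed in place.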

DONE~

III. Python side

1. Import the third-party libraries

import sim

import sys

import rl_utils

from tqdm import tqdm

import torch.nn.functional as F

import time

import matplotlib.pyplot as plt

import torch

import numpy as np

from scipy.interpolate import CubicSpline

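import os

(1) rl_utils

This is a .py file that can be downloaded from the Hands-on Reinforcement Learning (动手学强化学习) website (https://hrl.boyuai.com/). Put it in the same folder as your code.

(2) sim

This is also a .py file. It can be found in the CoppeliaSim installation directory. Copy the following files into the same directory as your code (in CoppeliaSim releases the two Python files are named sim.py and simConst.py, which matches the import sim above; vrep.py and vrepConst.py are the names used by older V-REP releases):

- remoteApi.dll

- vrep.py

- vrepConst.py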
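Before connecting, it can save some debugging time to confirm that the binding files really sit next to the script. The following is a minimal sketch of such a check; the helper name `missing_files` and the file list passed to it are illustrative assumptions, so adjust the names to whatever your installation ships.

```python
from pathlib import Path


def missing_files(directory, required):
    """Return the names from `required` that are not regular files in `directory`."""
    folder = Path(directory)
    return [name for name in required if not (folder / name).is_file()]


# Hypothetical usage: check the current working directory for the binding files.
# The list below is an assumption; use the file names from your own install.
required_names = ["sim.py", "simConst.py", "remoteApi.dll"]
print("missing:", missing_files(".", required_names))
```

An empty list means every file was found. Anything printed in the list still has to be copied over before `import sim` can work.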

2. 两个比较好用的可视化方式

def print_progress_bar(j, total=200, length=50):

# check the steps progress in one episode

progress = j / total

block = int(length * progress)

bar = '█' * block + '-' * (length - block)

sys.stdout.write(f'\r[{bar}] {j}/{total}')

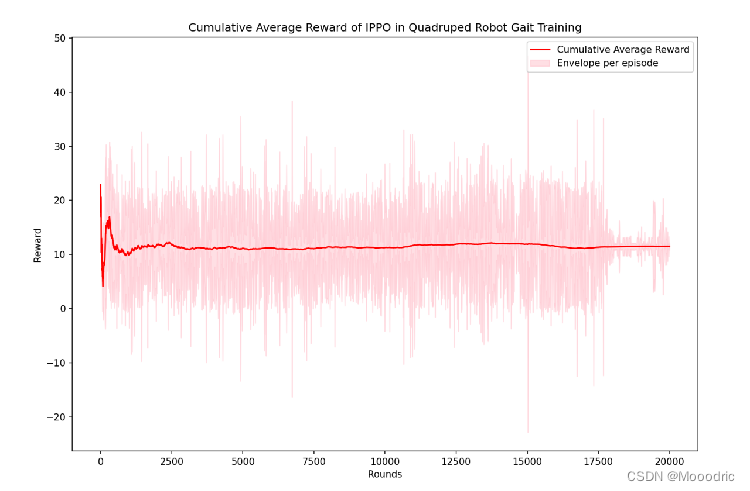

sys.stdout.flush()# cumulative reward with envelop curve

def cumulative_average_reward(episode_rewards, dis):

if len(episode_rewards) % dis:

pass

average_rewards = [np.mean(episode_rewards[i:i + dis]) for i in range(0, len(episode_rewards), dis)]

average_cumulative_rewards = np.cumsum(episode_rewards) / np.arange(1, len(episode_rewards) + 1)

rounds_dis = np.arange(dis, len(episode_rewards) + 1, dis)

diff = 0.6 * np.abs(np.array(average_rewards) - average_cumulative_rewards[rounds_dis - 1])

rounds_interpolated = np.linspace(rounds_dis.min(), rounds_dis.max(), num=len(episode_rewards))

cs_upper = CubicSpline(rounds_dis, average_cumulative_rewards[rounds_dis - 1] + diff)

cs_lower = CubicSpline(rounds_dis, average_cumulative_rewards[rounds_dis - 1] - diff)

upper_envelope = cs_upper(rounds_interpolated) + np.random.normal(1, 2, len(rounds_interpolated))

lower_envelope = cs_lower(rounds_interpolated) - np.random.normal(1, 2, len(rounds_interpolated))

plt.figure(figsize=(12, 8))

plt.plot(np.arange(1, len(episode_rewards) + 1), average_cumulative_rewards, label='Cumulative Average Reward', color='red')

# plt.plot(rounds_40, average_cumulative_rewards[rounds_40 - 1], 'o', label='Key Points for Envelope')

plt.fill_between(rounds_interpolated, upper_envelope, lower_envelope, color='pink', alpha=0.5,

label='Envelope per episode')

plt.legend()

plt.xlabel('Rounds')

plt.ylabel('Reward')

plt.title('Cumulative Average Reward of IPPO in Quadruped Robot Gait Training')

plt.show()3. 强化学习智能体的定义(基本上也是从动手学强化学习的网站上摘下来的)

# --------------------------------------------------
# reinforcement learning agent and policy network definition
class PolicyNet(torch.nn.Module):
    def __init__(self, state_dim, hidden_dim, action_dim):
        super(PolicyNet, self).__init__()
        self.fc1 = torch.nn.Linear(state_dim, hidden_dim)
        self.fc2 = torch.nn.Linear(hidden_dim, hidden_dim)
        self.fc3 = torch.nn.Linear(hidden_dim, action_dim)
        self.initialized = False

    def _init_weights(self, layer):
        if isinstance(layer, torch.nn.Linear):
            torch.nn.init.kaiming_uniform_(layer.weight)
            torch.nn.init.zeros_(layer.bias)

    def initialize_weights(self):
        if not self.initialized:
            self.apply(self._init_weights)
            self.initialized = True

    def forward(self, x):
        x = F.relu(self.fc1(x))
        if torch.any(torch.isnan(x)):
            print("NaN values found after fc1:", x)
        x = F.relu(self.fc2(x))
        if torch.any(torch.isnan(x)):
            print("NaN values found after fc2:", x)
        # Softmax over the action dimension gives one probability per discrete action
        x = F.softmax(self.fc3(x), dim=1)
        if torch.any(torch.isnan(x)):
            print("NaN values found after fc3:", x)
        return x

class ValueNet(torch.nn.Module):
    def __init__(self, state_dim, hidden_dim):
        super(ValueNet, self).__init__()
        self.fc1 = torch.nn.Linear(state_dim, hidden_dim)
        self.fc2 = torch.nn.Linear(hidden_dim, hidden_dim)
        self.fc3 = torch.nn.Linear(hidden_dim, 1)
        self.initialized = False

    def _init_weights(self, layer):
        if isinstance(layer, torch.nn.Linear):
            torch.nn.init.kaiming_uniform_(layer.weight)
            torch.nn.init.zeros_(layer.bias)

    def initialize_weights(self):
        if not self.initialized:
            self.apply(self._init_weights)
            self.initialized = True

    def forward(self, x):
        x = F.relu(self.fc1(x))
        if torch.any(torch.isnan(x)):
            print("NaN values found after fc1:", x)
        x = F.relu(self.fc2(x))
        if torch.any(torch.isnan(x)):
            print("NaN values found after fc2:", x)
        return self.fc3(x)

class PPO:
    def __init__(self, state_dim, hidden_dim, action_dim, actor_lr, critic_lr, lmbda, eps, gamma, device, save_path):
        self.actor = PolicyNet(state_dim, hidden_dim, action_dim).to(device)
        self.critic = ValueNet(state_dim, hidden_dim).to(device)
        self.actor_optimizer = torch.optim.Adam(self.actor.parameters(), lr=actor_lr)
        self.critic_optimizer = torch.optim.Adam(self.critic.parameters(), lr=critic_lr)
        self.gamma = gamma
        self.lmbda = lmbda
        self.eps = eps
        self.device = device
        self.save_path = save_path
        if os.path.exists(self.save_path):
            # Resume from the saved checkpoint; map_location lets a GPU checkpoint load on a CPU-only machine
            checkpoint = torch.load(self.save_path, map_location=self.device)
            self.actor.load_state_dict(checkpoint['actor_state_dict'])
            self.critic.load_state_dict(checkpoint['critic_state_dict'])
            self.actor_optimizer.load_state_dict(checkpoint['actor_optimizer_state_dict'])
            self.critic_optimizer.load_state_dict(checkpoint['critic_optimizer_state_dict'])
            print(f"Loaded model parameters from {self.save_path}")
        else:
            self.actor.initialize_weights()
            self.critic.initialize_weights()
            print(f"No saved model found at {self.save_path}, starting from scratch")

    def take_action(self, state):
        state = np.array(state)
        if np.any(np.isnan(state)) or np.any(np.isinf(state)):
            raise ValueError("Invalid state input: contains NaN or Inf values")
        state = torch.tensor([state], dtype=torch.float).to(self.device)
        probs = self.actor(state)
        if torch.any(torch.isnan(probs)):
            print("NaN values found in probs:", probs)
        # Sample one action index from the categorical distribution defined by probs
        action_dist = torch.distributions.Categorical(probs)
        action = action_dist.sample()
        return action.item()

    def update(self, transition_dict):
        # Stack the per-step arrays first so the tensors are built from a single ndarray
        states = torch.tensor(np.array(transition_dict['states']), dtype=torch.float).to(self.device)
        actions = torch.tensor(transition_dict['actions']).view(-1, 1).to(self.device)
        rewards = torch.tensor(transition_dict['rewards'], dtype=torch.float).view(-1, 1).to(self.device)
        next_states = torch.tensor(np.array(transition_dict['next_states']), dtype=torch.float).to(self.device)
        dones = torch.tensor(transition_dict['dones'], dtype=torch.float).view(-1, 1).to(self.device)
        td_target = rewards + self.gamma * self.critic(next_states) * (1 - dones)
        td_delta = td_target - self.critic(states)
        advantage = rl_utils.compute_advantage(self.gamma, self.lmbda, td_delta.cpu()).to(self.device)
        # Both log-probabilities are evaluated with the same parameters here,
        # so ratio equals 1 within this single update step
        old_log_probs = torch.log(self.actor(states).gather(1, actions)).detach()
        log_probs = torch.log(self.actor(states).gather(1, actions))
        ratio = torch.exp(log_probs - old_log_probs)
        surr1 = ratio * advantage
        surr2 = torch.clamp(ratio, 1 - self.eps, 1 + self.eps) * advantage
        actor_loss = torch.mean(-torch.min(surr1, surr2))
        critic_loss = torch.mean(
            F.mse_loss(self.critic(states), td_target.detach()))
        self.actor_optimizer.zero_grad()
        self.critic_optimizer.zero_grad()
        actor_loss.backward()
        critic_loss.backward()
        self.actor_optimizer.step()
        self.critic_optimizer.step()
        # Save networks and optimizer state after every update
        torch.save({
            'actor_state_dict': self.actor.state_dict(),
            'critic_state_dict': self.critic.state_dict(),
            'actor_optimizer_state_dict': self.actor_optimizer.state_dict(),
            'critic_optimizer_state_dict': self.critic_optimizer.state_dict(),
        }, self.save_path)

Some extra logic added on top:

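(1) The weights are saved after every update (the simulation runs on a separate end from the training code, V-REP essentially uses a fixed time step, and even a single episode is slow)

(2) The validity of the values is checked (NaN and Inf checks on the state and on the network outputs)

(3) Whether the networks have already been initialized is checked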
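Because the checkpoint is rewritten after every update, an interruption in the middle of a write could leave a damaged file that the next run then tries to load. One common way to guard against this is to write to a temporary file and rename it into place. The sketch below illustrates the pattern with the standard library only; `pickle` stands in for `torch.save`, and the helper names `save_checkpoint_atomic` and `load_or_init` are hypothetical, not part of the code above.

```python
import os
import pickle
import tempfile


def save_checkpoint_atomic(state, path):
    """Write `state` to `path` via a temp file so a crash never leaves a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as handle:
            pickle.dump(state, handle)
        # os.replace swaps the file in a single step when both paths are on one filesystem
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_or_init(path, init_fn):
    """Return (state, resumed): the saved state if `path` exists, otherwise init_fn()."""
    if os.path.exists(path):
        with open(path, "rb") as handle:
            return pickle.load(handle), True
    return init_fn(), False
```

The same resume-or-initialize branch appears in `PPO.__init__` above. Only the write path differs, and whether the extra rename is worth it depends on how often runs get interrupted.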

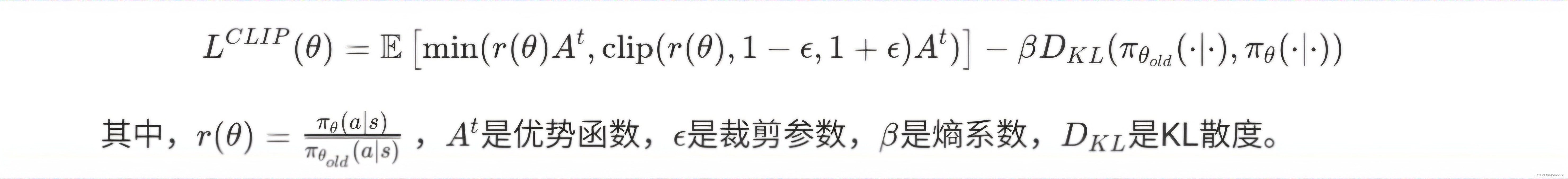

PPO优化目标:

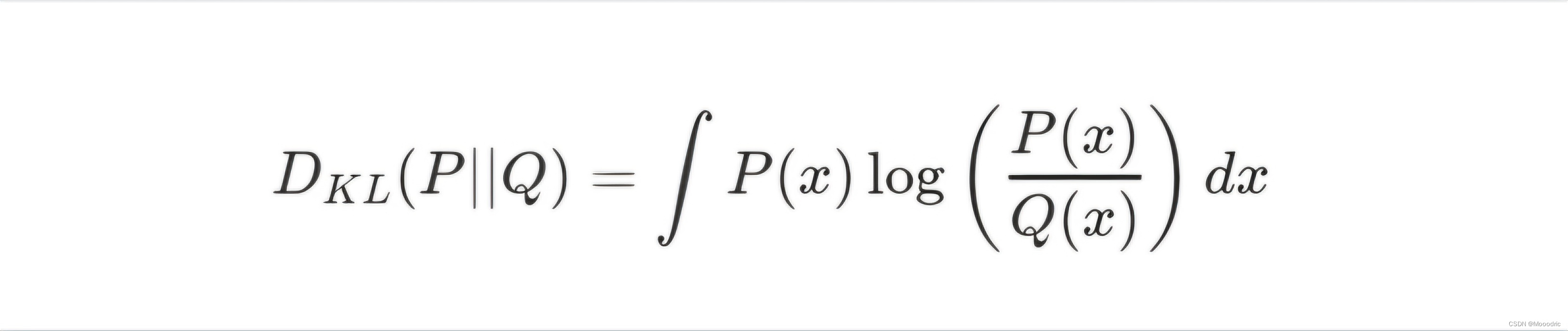

KL散度:

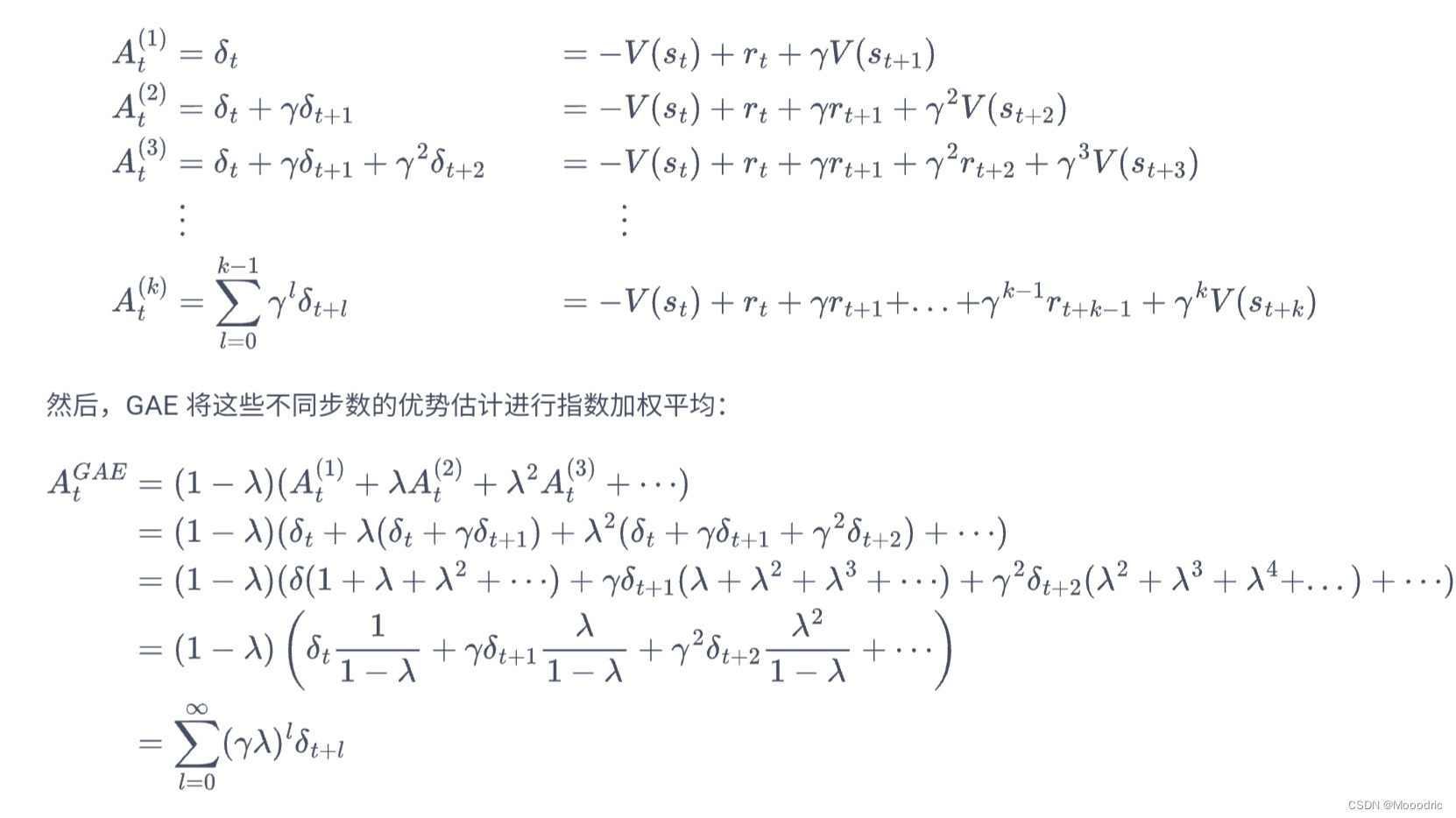

广义优势函数:

* lambda是平衡当前时刻未来时刻相对重要性的系数

以上图片均截自:https://hrl.boyuai.com/
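For readers who cannot see the screenshots, the quantities that the `update` method computes can be written out in the standard PPO-clip and GAE form. The notation here is chosen to match the code (`ratio`, `eps`, `gamma`, `lmbda`, `dones`) and may differ from the notation in the images.

```latex
% Probability ratio and clipped surrogate objective (ratio, surr1, surr2 in the code)
\[
\rho_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\mathrm{old}}}(a_t \mid s_t)},
\qquad
L^{\mathrm{CLIP}}(\theta) = \mathbb{E}_t\!\left[
  \min\!\big(\rho_t(\theta)\,A_t,\;
  \operatorname{clip}(\rho_t(\theta),\,1-\epsilon,\,1+\epsilon)\,A_t\big)
\right]
\]

% TD error and generalized advantage estimate (td_delta, advantage in the code)
\[
\delta_t = r_t + \gamma\,(1 - d_t)\,V(s_{t+1}) - V(s_t),
\qquad
A_t = \sum_{l \ge 0} (\gamma\lambda)^{l}\,\delta_{t+l}
\]
```

Here $r_t$ is the reward, $d_t$ the done flag, and $\epsilon$ the clipping range `eps`. The actor loss in the code is $-L^{\mathrm{CLIP}}$ averaged over the batch.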

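take_action: maps the state to a probability for each action, then samples from that categorical distribution so that actions are chosen according to these probabilities

update: builds the objective function, then back-propagates and takes a gradient-descent step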
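The advantage term used in `update` comes from `rl_utils.compute_advantage`. As a rough illustration of what such a routine does, here is a pure-Python sketch of the backward recursion for generalized advantage estimation. The function name `compute_gae` is an assumption made for this example, and the sketch works on plain lists rather than tensors.

```python
def compute_gae(td_deltas, gamma, lmbda):
    """Accumulate TD errors backwards: A_t = delta_t + gamma * lmbda * A_{t+1}."""
    advantages = []
    running = 0.0
    for delta in reversed(td_deltas):
        running = delta + gamma * lmbda * running
        advantages.append(running)
    advantages.reverse()  # restore chronological order
    return advantages


# Three-step toy trajectory with gamma * lmbda = 0.5
print(compute_gae([1.0, 0.0, -1.0], gamma=0.5, lmbda=1.0))  # [0.75, -0.5, -1.0]
```

With `lmbda = 0` each advantage is just its own TD error. With `lmbda = 1` the later errors are discounted only by `gamma`.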

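One point to note*:

old_log_probs and log_probs do evaluate to the same values here (apart from the missing gradient information), because both are computed from the same parameters inside a single call to update. As a result ratio equals 1 in this version and the clipping never becomes active, although the gradient still flows through log_probs.

old_log_probs is meant to hold the values from before the parameters change, much like a delay element. The reference code on https://hrl.boyuai.com/ repeats the update for several epochs on the same batch, and from the second epoch onward log_probs differs from old_log_probs.

Here is a short example for reference: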

import torch
import torch.nn as nn
import torch.optim as optim
import numpy as np

class Actor(nn.Module):
    def __init__(self, state_dim, action_dim):
        super(Actor, self).__init__()
        self.fc = nn.Linear(state_dim, action_dim)

    def forward(self, x):
        return torch.softmax(self.fc(x), dim=-1)

state_dim = 4
action_dim = 2
actor = Actor(state_dim, action_dim)
optimizer = optim.Adam(actor.parameters(), lr=1e-3)
states = torch.tensor(np.random.rand(10, state_dim), dtype=torch.float)
actions = torch.tensor(np.random.randint(0, action_dim, size=(10, 1)), dtype=torch.long)
log_probs = torch.log(actor(states).gather(1, actions))
old_log_probs = log_probs.detach()  # based on the initial parameters params_0
print("First call to update:")
print("log_probs (params_0):", log_probs)
print("old_log_probs (params_0):", old_log_probs)
optimizer.zero_grad()
loss = -log_probs.mean()
loss.backward()
optimizer.step()
log_probs = torch.log(actor(states).gather(1, actions))  # based on the updated parameters params_1
print("\nSecond call to update:")
print("log_probs (params_1):", log_probs)
print("old_log_probs (params_0):", old_log_probs)
ratio = torch.exp(log_probs - old_log_probs)
print("ratio:", ratio)
advantage = torch.tensor(np.random.rand(10, 1), dtype=torch.float)
surr1 = ratio * advantage
surr2 = torch.clamp(ratio, 1 - 0.2, 1 + 0.2) * advantage
actor_loss = torch.mean(-torch.min(surr1, surr2))
optimizer.zero_grad()
actor_loss.backward()
optimizer.step()
print("\nParameters after the policy update:")
for param in actor.parameters():
    print(param.data)

"""
final results:
log_probs (params_0): tensor([[-0.6989],
        [-0.5411],
        [-0.6948],
        [-0.7734],
        [-0.6519],
        [-0.6381],
        [-0.7840],
        [-0.7692],
        [-0.5858],
        [-0.5342]], grad_fn=<LogBackward0>)
old_log_probs (params_0): tensor([[-0.6989],
        [-0.5411],
        [-0.6948],
        [-0.7734],
        [-0.6519],
        [-0.6381],
        [-0.7840],
        [-0.7692],
        [-0.5858],
        [-0.5342]])

Second call to update:
log_probs (params_1): tensor([[-0.6953],
        [-0.5431],
        [-0.6913],
        [-0.7702],
        [-0.6485],
        [-0.6347],
        [-0.7806],
        [-0.7658],
        [-0.5882],
        [-0.5363]], grad_fn=<LogBackward0>)
old_log_probs (params_0): tensor([[-0.6989],
        [-0.5411],
        [-0.6948],
        [-0.7734],
        [-0.6519],
        [-0.6381],
        [-0.7840],
        [-0.7692],
        [-0.5858],
        [-0.5342]])
ratio: tensor([[1.0036],
        [0.9980],
        [1.0035],
        [1.0032],
        [1.0034],
        [1.0034],
        [1.0034],
        [1.0033],
        [0.9975],
        [0.9979]], grad_fn=<ExpBackward0>)

Parameters after the policy update:
tensor([[ 0.0480, 0.2244, 0.1085, -0.4448],
        [ 0.4112, -0.1155, -0.0652, -0.4690]])
tensor([0.0572, 0.1797])
"""

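P.S. This is a simplified version of the parameter-update flow. It shows that ratio is no longer always 1 once an optimizer step separates the two evaluations: old_log_probs was computed with the previous parameters, while log_probs uses the current ones.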
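To see what the clipping does once ratio moves away from 1, the per-sample surrogate can be evaluated by hand. This is a scalar sketch in plain Python. The function name `clipped_surrogate` is made up for the illustration, and it mirrors `surr1`, `surr2` and `torch.min` from the code above for a single sample.

```python
import math


def clipped_surrogate(log_prob, old_log_prob, advantage, eps=0.2):
    """Per-sample PPO-clip term: min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A)."""
    ratio = math.exp(log_prob - old_log_prob)
    clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped_ratio * advantage)


# Same parameters for both evaluations: ratio is 1 and the term is just the advantage
print(clipped_surrogate(-0.7, -0.7, advantage=2.0))
# Ratio of about 1.5 with a positive advantage: the gain is capped at (1 + eps) * A
print(clipped_surrogate(math.log(1.5), 0.0, advantage=2.0))
# Ratio of about 0.5 with a negative advantage: the more pessimistic, clipped value is kept
print(clipped_surrogate(math.log(0.5), 0.0, advantage=-1.0))
```

The first call corresponds to the single-step update discussed above, where the clip has no effect. The other two show the cap that limits how far one batch can push the policy.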

Done~

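4. Co-simulation interaction and information flow (action selection and parameter update)

* The diagrams were all drawn in PowerPoint from my own understanding, so please point out any mistakes. Any imprecision is down to the figure layout ( ′ 3`) sigh~

Read this part together with the earlier sections. For the details of the parameter update, see subsection 3.

The code (the handle-acquisition part is rather long):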

# -------------------------------------------------
# hyper-parameter definition
max_steps = 200
actor_lr = 3e-4
critic_lr = 1e-3
num_episodes = 100
hidden_dim = 64
gamma = 0.99
lmbda = 0.97
eps = 0.2
device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")
state_dim = 39
action_dim = 25
save_path = r"your/own/weights/path"
agent = PPO(state_dim, hidden_dim, action_dim, actor_lr, critic_lr, lmbda, eps, gamma, device, save_path)

# --------------------------------------------------
# simulation connection and handle acquisition
sim.simxFinish(-1)
clientID = sim.simxStart('127.0.0.1', 19999, True, True, 5000, 5)
if clientID != -1:
    print('Connected to remote API server, simulation start')
else:
    sys.exit('Failed connecting to remote API server')
_, trunk = sim.simxGetObjectHandle(clientID, 'trunk_respondable', sim.simx_opmode_oneshot_wait)
_, FR_hip = sim.simxGetObjectHandle(clientID, 'FR_hip_joint', sim.simx_opmode_oneshot_wait)
_, FL_hip = sim.simxGetObjectHandle(clientID, 'FL_hip_joint', sim.simx_opmode_oneshot_wait)
_, RR_hip = sim.simxGetObjectHandle(clientID, 'RR_hip_joint', sim.simx_opmode_oneshot_wait)
_, RL_hip = sim.simxGetObjectHandle(clientID, 'RL_hip_joint', sim.simx_opmode_oneshot_wait)
_, FR_thigh = sim.simxGetObjectHandle(clientID, 'FR_thigh_joint', sim.simx_opmode_oneshot_wait)
_, FL_thigh = sim.simxGetObjectHandle(clientID, 'FL_thigh_joint', sim.simx_opmode_oneshot_wait)
_, RR_thigh = sim.simxGetObjectHandle(clientID, 'RR_thigh_joint', sim.simx_opmode_oneshot_wait)
_, RL_thigh = sim.simxGetObjectHandle(clientID, 'RL_thigh_joint', sim.simx_opmode_oneshot_wait)
_, FR_calf = sim.simxGetObjectHandle(clientID, 'FR_calf_joint', sim.simx_opmode_oneshot_wait)
_, FL_calf = sim.simxGetObjectHandle(clientID, 'FL_calf_joint', sim.simx_opmode_oneshot_wait)
_, RR_calf = sim.simxGetObjectHandle(clientID, 'RR_calf_joint', sim.simx_opmode_oneshot_wait)
_, RL_calf = sim.simxGetObjectHandle(clientID, 'RL_calf_joint', sim.simx_opmode_oneshot_wait)
_, FR_foot = sim.simxGetObjectHandle(clientID, 'FR_foot_fixed', sim.simx_opmode_oneshot_wait)
_, FL_foot = sim.simxGetObjectHandle(clientID, 'FL_foot_fixed', sim.simx_opmode_oneshot_wait)
_, RR_foot = sim.simxGetObjectHandle(clientID, 'RR_foot_fixed', sim.simx_opmode_oneshot_wait)
_, RL_foot = sim.simxGetObjectHandle(clientID, 'RL_foot_fixed', sim.simx_opmode_oneshot_wait)

t_step = 0.1
# Discrete set of joint target velocities shared by thigh and calf joints
action_list = [0, 0.5, 1, -0.5, -1]
sim.simxSetFloatParam(clientID, sim.sim_floatparam_simulation_time_step, t_step, sim.simx_opmode_oneshot)
sim.simxSynchronous(clientID, enable=True)
sim.simxStartSimulation(clientID, sim.simx_opmode_oneshot)
time.sleep(1)
best_reward = -float('inf')
best_action_dict = {
    'actions_1': [],
    'actions_2': [],
}
episode_rewards = []

# Note: this outer loop repeats the whole training schedule for as long as the connection is alive
while (sim.simxGetConnectionId(clientID) != -1):
    """
    two models proposed:
    1. FL and FR legs share the same action instruction while RL and RR share the other,
       modeled after a walking gait
    2. legs that are both at the front or both at the back share the same action instruction
    Default settings:
    1. the hip joints are fixed
    2. the thigh joint and calf joint are regarded as a whole, so an action instruction must be
       a 2-dimensional vector
    3. the action set is discrete, and thigh joints and calf joints share the same set
    """
    for joint_handle in [FR_thigh, FL_thigh, RR_thigh, RL_thigh, FR_calf, FL_calf, RR_calf, RL_calf]:
        sim.simxSetJointTargetVelocity(clientID, joint_handle, 0, sim.simx_opmode_oneshot)
    V_FR_thigh = 0.
    V_FL_thigh = 0.
    V_RL_thigh = 0.
    V_RR_thigh = 0.
    v_FR_calf = 0.
    v_FL_calf = 0.
    v_RL_calf = 0.
    v_RR_calf = 0.
    sim.simxSetIntegerSignal(clientID, 'startSimulationSignal', 1, sim.simx_opmode_oneshot)
    for i in range(10):
        with tqdm(total=int(num_episodes / 10), desc='Iteration %d' % i) as pbar:
            # Reference positions used by the reward terms
            _, trunk_0 = sim.simxGetObjectPosition(clientID, trunk, -1, sim.simx_opmode_blocking)
            _, L_0 = sim.simxGetObjectPosition(clientID, FL_hip, -1, sim.simx_opmode_blocking)
            _, R_0 = sim.simxGetObjectPosition(clientID, RR_hip, -1, sim.simx_opmode_blocking)
            for episode in range(int(num_episodes / 10)):
                V_FR_thigh = V_FL_thigh = 0.
                V_RL_thigh = V_RR_thigh = 0.
                v_FR_calf = v_FL_calf = 0.
                v_RL_calf = v_RR_calf = 0.
                terminal = False
                transition_dict_1 = {
                    'states': [],
                    'actions': [],
                    'next_states': [],
                    'rewards': [],
                    'dones': []
                }
                transition_dict_2 = {
                    'states': [],
                    'actions': [],
                    'next_states': [],
                    'rewards': [],
                    'dones': []
                }
                s = []
                reward_list = []
                # State: world positions of 13 objects, flattened to 39 values (state_dim)
                for handle in [trunk, FR_thigh, FL_thigh, RR_thigh, RL_thigh, FR_calf, FL_calf, RR_calf, RL_calf,
                               FR_foot, FL_foot, RR_foot, RL_foot]:
                    err_code, position = sim.simxGetObjectPosition(clientID, handle, -1, sim.simx_opmode_blocking)
                    s.append(position)
                s = np.array(s)
                s = s.ravel()
                j = 0
                # Streak multipliers for the reward terms
                theta_s = 1.
                theta_l = 1.
                theta_r = 1.

                while (j < max_steps):
                    s_next = []
                    a_1 = agent.take_action(s)
                    a_2 = agent.take_action(s)
                    """
                    the agent outputs one index in [0, 25); it is decoded into a (thigh, calf)
                    pair of indices into action_list: index // 5 for the thigh, index % 5 for the calf
                    """
                    V_FR_thigh = action_list[a_1 // 5]
                    V_FL_thigh = action_list[a_1 // 5]
                    v_FR_calf = action_list[a_1 % 5]
                    v_FL_calf = action_list[a_1 % 5]
                    V_RR_thigh = action_list[a_2 // 5]
                    V_RL_thigh = action_list[a_2 // 5]
                    v_RR_calf = action_list[a_2 % 5]
                    v_RL_calf = action_list[a_2 % 5]
                    sim.simxSetJointTargetVelocity(clientID, FR_thigh, V_FR_thigh, sim.simx_opmode_oneshot)
                    sim.simxSetJointTargetVelocity(clientID, FL_thigh, V_FL_thigh, sim.simx_opmode_oneshot)
                    sim.simxSetJointTargetVelocity(clientID, RR_thigh, V_RR_thigh, sim.simx_opmode_oneshot)
                    sim.simxSetJointTargetVelocity(clientID, RL_thigh, V_RL_thigh, sim.simx_opmode_oneshot)
                    sim.simxSetJointTargetVelocity(clientID, FR_calf, v_FR_calf, sim.simx_opmode_oneshot)
                    sim.simxSetJointTargetVelocity(clientID, FL_calf, v_FL_calf, sim.simx_opmode_oneshot)
                    sim.simxSetJointTargetVelocity(clientID, RR_calf, v_RR_calf, sim.simx_opmode_oneshot)
                    sim.simxSetJointTargetVelocity(clientID, RL_calf, v_RL_calf, sim.simx_opmode_oneshot)
                    for handle in [trunk, FR_thigh, FL_thigh, RR_thigh, RL_thigh, FR_calf, FL_calf, RR_calf, RL_calf,
                                   FR_foot, FL_foot, RR_foot, RL_foot]:
                        _, position = sim.simxGetObjectPosition(clientID, handle, -1, sim.simx_opmode_blocking)
                        s_next.append(position)
                    s_next = np.array(s_next)
                    s_next = s_next.ravel()
                    transition_dict_1['actions'].append(a_1)
                    transition_dict_2['actions'].append(a_2)
                    transition_dict_1['states'].append(s)
                    transition_dict_2['states'].append(s)
                    transition_dict_1['next_states'].append(s_next)
                    transition_dict_2['next_states'].append(s_next)
                    # Current positions of the same objects that L_0 and R_0 were read from
                    _, trunk_i = sim.simxGetObjectPosition(clientID, trunk, -1, sim.simx_opmode_blocking)
                    _, L_i = sim.simxGetObjectPosition(clientID, FL_hip, -1, sim.simx_opmode_blocking)
                    _, R_i = sim.simxGetObjectPosition(clientID, RR_hip, -1, sim.simx_opmode_blocking)
                    if j:
                        # Double a multiplier (capped at 20) while the previous rewards stay positive
                        if float(transition_dict_1['rewards'][-1]) > 0 and float(transition_dict_2['rewards'][-1]) > 0:
                            theta_s = min(20., 2 * theta_s)
                        elif float(transition_dict_1['rewards'][-1]) > 0:
                            theta_l = min(20., 2 * theta_l)
                        elif float(transition_dict_2['rewards'][-1]) > 0:
                            theta_r = min(20., 2 * theta_r)
                        else:
                            theta_s = 1.
                            theta_l = 1.
                            theta_r = 1.
                    # Displacement from the reference pose, scaled by a weight and the streak multiplier
                    reward_s = (trunk_i[-1] - trunk_0[-1]) * 0.6 * theta_s
                    reward_l = (L_i[-1] - L_0[-1]) * 0.5 * theta_l
                    reward_r = (R_i[-1] - R_0[-1]) * 0.5 * theta_r
                    transition_dict_1['dones'].append(False)
                    transition_dict_2['dones'].append(False)
                    transition_dict_1['rewards'].append(reward_s + reward_l)
                    transition_dict_2['rewards'].append(reward_s + reward_r)
                    s = s_next
                    reward_list.append(reward_s)
                    j += 1
                    print_progress_bar(j)

                # terminal = all(done)
                # reset simulation environment after every episode
                sys.stdout.write('\n')
                temp = np.mean(np.array(reward_list))
                episode_rewards.append(temp)
                print(episode_rewards[-1])
                if episode_rewards[-1] > best_reward:
                    # Remember the best episode so far together with its action sequences
                    best_reward = episode_rewards[-1]
                    best_action_dict['actions_1'] = transition_dict_1['actions']
                    best_action_dict['actions_2'] = transition_dict_2['actions']
                sim.simxStopSimulation(clientID, sim.simx_opmode_oneshot_wait)
                agent.update(transition_dict_1)
                agent.update(transition_dict_2)
                time.sleep(2)
                if (episode + 1) % 10 == 0:
                    pbar.set_postfix({
                        'episode':
                            '%d' % (num_episodes / 10 * i + episode + 1),
                        'return_1':
                            '%.3f' % np.mean(transition_dict_1['rewards'][-100:]),
                        'return_2':
                            '%.3f' % np.mean(transition_dict_2['rewards'][-100:]),
                    })
                pbar.update(1)
                sim.simxStartSimulation(clientID, sim.simx_opmode_oneshot_wait)
sim.simxFinish(-1)
print(best_action_dict)

Done~
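The index decoding used in the loop above (`a // 5` for the thigh, `a % 5` for the calf) can be checked in isolation. The sketch below wraps it in a small helper. The name `decode_action` is an assumption made for this example, and `ACTION_LIST` simply copies the `action_list` values from the code.

```python
ACTION_LIST = [0, 0.5, 1, -0.5, -1]


def decode_action(index, action_list=ACTION_LIST):
    """Map a flat action index to a (thigh velocity, calf velocity) pair."""
    n = len(action_list)
    if not 0 <= index < n * n:
        raise ValueError(f"action index {index} is outside [0, {n * n})")
    thigh_idx, calf_idx = divmod(index, n)  # same as index // n and index % n
    return action_list[thigh_idx], action_list[calf_idx]


print(decode_action(0))   # (0, 0)
print(decode_action(7))   # (0.5, 1)
print(decode_action(24))  # (-1, -1)
```

Every one of the 25 indices maps to a distinct pair, which is why `action_dim` is 25 for a five-element velocity set.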

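IV. How should the episode reward curve be plotted?

My feeling is that this curve should not be plotted per episode, because each episode contains many steps; it should be plotted against every step of the iteration instead. If you have a better suggestion, please leave a comment!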
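One possible way to plot per step, offered only as a suggestion: collect the reward of every step from all episodes into one flat list and smooth it with a trailing moving average before plotting. The helper below is a plain-Python sketch. The name `moving_average` and the choice of window are assumptions, not something the training code above already does.

```python
def moving_average(values, window):
    """Trailing mean over the last `window` values (fewer at the start of the list)."""
    if window <= 0:
        raise ValueError("window must be positive")
    averages = []
    running_sum = 0.0
    for i, value in enumerate(values):
        running_sum += value
        if i >= window:
            running_sum -= values[i - window]  # drop the value that left the window
        averages.append(running_sum / min(i + 1, window))
    return averages


# Toy per-step rewards; in practice this would be the concatenated step rewards
print(moving_average([1, 2, 3, 4], window=2))  # [1.0, 1.5, 2.5, 3.5]
```

The smoothed list has one entry per step, so it can be passed straight to `plt.plot`. A window equal to `max_steps` would roughly recover a per-episode view.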
