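Classifying a Dataset with a BP Neural Network: Complete Code

For a step-by-step walkthrough of the code, see: Implementing a BP Neural Network by Hand (手动实现BP神经网络)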

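'''Build a 3-layer neural network (layer sizes 5, 2, 1) that can run with different optimizers, and use it to classify the "moons" dataset.

1) Classify the dataset without an optimizer and visualize the result.

2) Set the optimizer to gradient descent with momentum and visualize the classification result.

3) Set the optimizer to Adam and visualize the classification result.

4) Summarize the classification accuracy of each algorithm and the smoothness of its cost curve.

Note: all of the algorithms above are implemented by hand; the dataset-loading code and related helper functions are provided.'''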

# Import the required libraries
import numpy as np
import matplotlib.pyplot as plt
import sklearn
import sklearn.datasets
import math

# Lists that store the cost and accuracy values so the curves can be plotted later
loss = []
accuracy = []

################################################## Code provided with the assignment begins here ###########################################

def sigmoid(x):
    """
    Compute the sigmoid of x

    Arguments:
    x -- A scalar or numpy array of any size.

    Return:
    s -- sigmoid(x)
    """
    s = 1 / (1 + np.exp(-x))
    return s

def relu(x):
    """
    Compute the relu of x

    Arguments:
    x -- A scalar or numpy array of any size.

    Return:
    s -- relu(x)
    """
    s = np.maximum(0, x)
    return s

def forward_propagation(X, parameters):
    """
    Implements the forward propagation presented in Figure 2.

    Arguments:
    X -- input dataset, of shape (input size, number of examples)
    parameters -- python dictionary containing your parameters "W1", "b1", "W2", "b2", "W3", "b3":
                  W1 -- weight matrix of shape (n1, n_x)
                  b1 -- bias vector of shape (n1, 1)
                  W2 -- weight matrix of shape (n2, n1)
                  b2 -- bias vector of shape (n2, 1)
                  W3 -- weight matrix of shape (n_y, n2)
                  b3 -- bias vector of shape (n_y, 1)

    Returns:
    a3 -- sigmoid output of the last layer, of shape (n_y, number of examples)
    cache -- tuple of intermediate values needed by backward propagation
    """
    # retrieve parameters
    W1 = parameters["W1"]
    b1 = parameters["b1"]
    W2 = parameters["W2"]
    b2 = parameters["b2"]
    W3 = parameters["W3"]
    b3 = parameters["b3"]

    # LINEAR -> RELU -> LINEAR -> RELU -> LINEAR -> SIGMOID
    z1 = np.dot(W1, X) + b1
    a1 = relu(z1)
    z2 = np.dot(W2, a1) + b2
    a2 = relu(z2)
    z3 = np.dot(W3, a2) + b3
    a3 = sigmoid(z3)

    cache = (z1, a1, W1, b1, z2, a2, W2, b2, z3, a3, W3, b3)
    return a3, cache

def predict(X, y, parameters):
    """
    This function is used to predict the results of a n-layer neural network.

    Arguments:
    X -- data set of examples you would like to label
    y -- true labels, of shape (1, number of examples); used only to print the accuracy
    parameters -- parameters of the trained model

    Returns:
    p -- predictions for the given dataset X
    """
    m = X.shape[1]
    p = np.zeros((1, m), dtype=int)

    # Forward propagation
    a3, caches = forward_propagation(X, parameters)

    # convert probas to 0/1 predictions
    for i in range(0, a3.shape[1]):
        if a3[0, i] > 0.5:
            p[0, i] = 1
        else:
            p[0, i] = 0

    # print results
    # print ("predictions: " + str(p[0,:]))
    # print ("true labels: " + str(y[0,:]))
    print("Accuracy: " + str(np.mean((p[0, :] == y[0, :]))))
    return p

def predict_dec(X, parameters):
    """
    Used for plotting decision boundary.

    Arguments:
    parameters -- python dictionary containing your parameters
    X -- input data of shape (input size, number of examples)

    Returns
    predictions -- vector of predictions of our model (red: 0 / blue: 1)
    """
    # Predict using forward propagation and a classification threshold of 0.5
    a3, cache = forward_propagation(X, parameters)
    predictions = (a3 > 0.5)
    return predictions

def load_dataset(is_plot=True):
    np.random.seed(3)
    train_X, train_Y = sklearn.datasets.make_moons(n_samples=300, noise=.2)

    # Visualize the data
    if is_plot:
        plt.scatter(train_X[:, 0], train_X[:, 1], c=train_Y, s=40, cmap=plt.cm.Spectral)
        plt.show()  # show the "moons" data

    train_X = train_X.T
    train_Y = train_Y.reshape((1, train_Y.shape[0]))
    return train_X, train_Y

# Visualize the decision boundary
def plot_decision_boundary(model, X, y):
    # Set min and max values and give it some padding
    x_min, x_max = X[0, :].min() - 1, X[0, :].max() + 1
    y_min, y_max = X[1, :].min() - 1, X[1, :].max() + 1
    h = 0.01

    # Generate a grid of points with distance h between them
    xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))

    # Predict the function value for the whole grid
    Z = model(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)

    # Plot the contour and training examples
    plt.contourf(xx, yy, Z, cmap=plt.cm.Spectral)
    plt.ylabel('x2')
    plt.xlabel('x1')
    plt.scatter(X[0, :], X[1, :], c=np.squeeze(y), cmap=plt.cm.Spectral)
    plt.show()

############################################## Code provided with the assignment ends here ###########################################

# Initialize the model parameters
def initialize_parameters(n_x, n1, n2, n_y):
    """
    Arguments:
    n_x -- number of input-layer nodes (2)
    n1, n2 -- number of hidden-layer nodes (5 and 2)
    n_y -- number of output-layer nodes (1)

    Returns:
    parameters -- dictionary containing the parameters

    Note: results appear to be somewhat better if the node counts are allowed to change.
    """
    np.random.seed(3)  # fix the random seed

    W1 = np.random.randn(n1, n_x) * 1.0
    b1 = np.zeros((n1, 1))
    W2 = np.random.randn(n2, n1) * 2.0
    b2 = np.zeros((n2, 1))
    W3 = np.random.randn(n_y, n2) * 1.0
    b3 = np.zeros((n_y, 1))

    parameters = {"W1": W1,
                  "b1": b1,
                  "W2": W2,
                  "b2": b2,
                  "W3": W3,
                  "b3": b3}
    return parameters

# Backward propagation: compute the gradients
def backward_propagation(cache, X, Y):
    m = X.shape[1]
    (Z1, A1, W1, b1, Z2, A2, W2, b2, Z3, A3, W3, b3) = cache

    # Output layer: sigmoid + cross-entropy gives dZ3 = (A3 - Y) / m
    dZ3 = 1. / m * (A3 - Y)
    dW3 = np.dot(dZ3, A2.T)
    db3 = np.sum(dZ3, axis=1, keepdims=True)

    # Second hidden layer: the ReLU derivative is 1 where the activation is positive
    dA2 = np.dot(W3.T, dZ3)
    dZ2 = np.multiply(dA2, np.int64(A2 > 0))
    dW2 = np.dot(dZ2, A1.T)
    db2 = np.sum(dZ2, axis=1, keepdims=True)

    # First hidden layer
    dA1 = np.dot(W2.T, dZ2)
    dZ1 = np.multiply(dA1, np.int64(A1 > 0))
    dW1 = np.dot(dZ1, X.T)
    db1 = np.sum(dZ1, axis=1, keepdims=True)

    grads = {"dW3": dW3, "db3": db3,
             "dW2": dW2, "db2": db2,
             "dW1": dW1, "db1": db1}
    return grads

# Compute the cost (binary cross-entropy averaged over the batch)
def cost_computing(A, Y):
    m = Y.shape[1]
    logloss = np.multiply(np.log(A), Y) + np.multiply((1 - Y), np.log(1 - A))
    cost = - np.sum(logloss) / m
    return cost

# SGD optimizer
def sgd(parameters, grads, learning_rate):
    W1, W2, W3 = parameters["W1"], parameters["W2"], parameters["W3"]
    b1, b2, b3 = parameters["b1"], parameters["b2"], parameters["b3"]
    dW1, dW2, dW3 = grads["dW1"], grads["dW2"], grads["dW3"]
    db1, db2, db3 = grads["db1"], grads["db2"], grads["db3"]

    W1 = W1 - learning_rate * dW1
    b1 = b1 - learning_rate * db1
    W2 = W2 - learning_rate * dW2
    b2 = b2 - learning_rate * db2
    W3 = W3 - learning_rate * dW3
    b3 = b3 - learning_rate * db3

    parameters = {"W1": W1,
                  "b1": b1,
                  "W2": W2,
                  "b2": b2,
                  "W3": W3,
                  "b3": b3}
    return parameters

# Random mini-batch partitioning (adapted from another CSDN blogger's article)
def random_mini_batches(X, Y, mini_batch_size = 64, seed = 0):
    """
    Creates a list of random minibatches from (X, Y)

    Arguments:
    X -- input data, of shape (input size, number of examples)
    Y -- true "label" vector (1 for blue dot / 0 for red dot), of shape (1, number of examples)
    mini_batch_size -- size of the mini-batches, integer
    seed -- random seed used for the shuffle

    Returns:
    mini_batches -- list of synchronous (mini_batch_X, mini_batch_Y)
    """
    np.random.seed(seed)  # To make your "random" minibatches the same as ours
    m = X.shape[1]  # number of training examples
    mini_batches = []

    # Step 1: Shuffle (X, Y)
    permutation = list(np.random.permutation(m))
    shuffled_X = X[:, permutation]
    shuffled_Y = Y[:, permutation].reshape((1, m))

    # Step 2: Partition (shuffled_X, shuffled_Y). Minus the end case.
    num_complete_minibatches = math.floor(m / mini_batch_size)  # number of full-size mini-batches in the partition
    for k in range(0, num_complete_minibatches):
        mini_batch_X = shuffled_X[:, k*mini_batch_size : (k+1)*mini_batch_size]
        mini_batch_Y = shuffled_Y[:, k*mini_batch_size : (k+1)*mini_batch_size]
        mini_batch = (mini_batch_X, mini_batch_Y)
        mini_batches.append(mini_batch)

    # Handling the end case (last mini-batch < mini_batch_size)
    if m % mini_batch_size != 0:
        mini_batch_X = shuffled_X[:, num_complete_minibatches*mini_batch_size : m]
        mini_batch_Y = shuffled_Y[:, num_complete_minibatches*mini_batch_size : m]
        mini_batch = (mini_batch_X, mini_batch_Y)
        mini_batches.append(mini_batch)

    return mini_batches

# SGDM optimizer (two functions: initialization and the parameter update)
def initialize_sgdm(parameters):
    dW1 = np.zeros(parameters["W1"].shape)
    db1 = np.zeros(parameters["b1"].shape)
    dW2 = np.zeros(parameters["W2"].shape)
    db2 = np.zeros(parameters["b2"].shape)
    dW3 = np.zeros(parameters["W3"].shape)
    db3 = np.zeros(parameters["b3"].shape)

    v = {"dW1": dW1, "db1": db1,
         "dW2": dW2, "db2": db2,
         "dW3": dW3, "db3": db3}
    return v

def sgdm(parameters, grads, v, beta, learning_rate):
    # Velocity: exponentially weighted average of past gradients
    v["dW1"] = beta * v["dW1"] + (1-beta) * grads["dW1"]
    v["db1"] = beta * v["db1"] + (1-beta) * grads["db1"]
    v["dW2"] = beta * v["dW2"] + (1-beta) * grads["dW2"]
    v["db2"] = beta * v["db2"] + (1-beta) * grads["db2"]
    v["dW3"] = beta * v["dW3"] + (1-beta) * grads["dW3"]
    v["db3"] = beta * v["db3"] + (1-beta) * grads["db3"]

    # Step along the velocity instead of the raw gradient
    parameters["W1"] = parameters["W1"] - learning_rate * v["dW1"]
    parameters["b1"] = parameters["b1"] - learning_rate * v["db1"]
    parameters["W2"] = parameters["W2"] - learning_rate * v["dW2"]
    parameters["b2"] = parameters["b2"] - learning_rate * v["db2"]
    parameters["W3"] = parameters["W3"] - learning_rate * v["dW3"]
    parameters["b3"] = parameters["b3"] - learning_rate * v["db3"]
    return parameters, v

# Adam optimizer (two functions: initialization and the parameter update)
def initialize_adam(parameters):
    v = {}
    s = {}

    v["dW1"] = np.zeros(parameters["W1"].shape)
    v["db1"] = np.zeros(parameters["b1"].shape)
    s["dW1"] = np.zeros(parameters["W1"].shape)
    s["db1"] = np.zeros(parameters["b1"].shape)

    v["dW2"] = np.zeros(parameters["W2"].shape)
    v["db2"] = np.zeros(parameters["b2"].shape)
    s["dW2"] = np.zeros(parameters["W2"].shape)
    s["db2"] = np.zeros(parameters["b2"].shape)

    v["dW3"] = np.zeros(parameters["W3"].shape)
    v["db3"] = np.zeros(parameters["b3"].shape)
    s["dW3"] = np.zeros(parameters["W3"].shape)
    s["db3"] = np.zeros(parameters["b3"].shape)

    return v, s

# The v and s updates in this function are adapted from another CSDN author's code;
# the Adam hyperparameter values are those recommended by the authors of the Adam algorithm.
def adam(parameters, grads, v, s, t, learning_rate, epsilon, beta1 = 0.9, beta2 = 0.999):
    v_t = {}
    s_t = {}  # Adam keeps two moment dictionaries; each is initialized and bias-corrected separately

    # First moment: exponentially weighted average of the gradients
    v["dW1"] = beta1 * v["dW1"] + (1 - beta1) * grads['dW1']
    v["db1"] = beta1 * v["db1"] + (1 - beta1) * grads['db1']
    v["dW2"] = beta1 * v["dW2"] + (1 - beta1) * grads['dW2']
    v["db2"] = beta1 * v["db2"] + (1 - beta1) * grads['db2']
    v["dW3"] = beta1 * v["dW3"] + (1 - beta1) * grads['dW3']
    v["db3"] = beta1 * v["db3"] + (1 - beta1) * grads['db3']

    # Bias-corrected first moment
    v_t["dW1"] = v["dW1"] / (1 - beta1 ** t)
    v_t["db1"] = v["db1"] / (1 - beta1 ** t)
    v_t["dW2"] = v["dW2"] / (1 - beta1 ** t)
    v_t["db2"] = v["db2"] / (1 - beta1 ** t)
    v_t["dW3"] = v["dW3"] / (1 - beta1 ** t)
    v_t["db3"] = v["db3"] / (1 - beta1 ** t)

    # Second moment: exponentially weighted average of the squared gradients.
    # The previous value must be decayed by beta2; without that factor s only
    # accumulates and the bias correction below no longer applies.
    s["dW1"] = beta2 * s["dW1"] + (1 - beta2) * (grads['dW1'] ** 2)
    s["db1"] = beta2 * s["db1"] + (1 - beta2) * (grads['db1'] ** 2)
    s["dW2"] = beta2 * s["dW2"] + (1 - beta2) * (grads['dW2'] ** 2)
    s["db2"] = beta2 * s["db2"] + (1 - beta2) * (grads['db2'] ** 2)
    s["dW3"] = beta2 * s["dW3"] + (1 - beta2) * (grads['dW3'] ** 2)
    s["db3"] = beta2 * s["db3"] + (1 - beta2) * (grads['db3'] ** 2)

    # Bias-corrected second moment
    s_t["dW1"] = s["dW1"] / (1 - beta2 ** t)
    s_t["db1"] = s["db1"] / (1 - beta2 ** t)
    s_t["dW2"] = s["dW2"] / (1 - beta2 ** t)
    s_t["db2"] = s["db2"] / (1 - beta2 ** t)
    s_t["dW3"] = s["dW3"] / (1 - beta2 ** t)
    s_t["db3"] = s["db3"] / (1 - beta2 ** t)

    # Update direction: corrected first moment scaled by the root of the corrected second moment
    mdW1 = v_t["dW1"] / (np.sqrt(s_t["dW1"]) + epsilon)
    mdb1 = v_t["db1"] / (np.sqrt(s_t["db1"]) + epsilon)
    mdW2 = v_t["dW2"] / (np.sqrt(s_t["dW2"]) + epsilon)
    mdb2 = v_t["db2"] / (np.sqrt(s_t["db2"]) + epsilon)
    mdW3 = v_t["dW3"] / (np.sqrt(s_t["dW3"]) + epsilon)
    mdb3 = v_t["db3"] / (np.sqrt(s_t["db3"]) + epsilon)

    parameters["W1"] = parameters["W1"] - learning_rate * mdW1
    parameters["b1"] = parameters["b1"] - learning_rate * mdb1
    parameters["W2"] = parameters["W2"] - learning_rate * mdW2
    parameters["b2"] = parameters["b2"] - learning_rate * mdb2
    parameters["W3"] = parameters["W3"] - learning_rate * mdW3
    parameters["b3"] = parameters["b3"] - learning_rate * mdb3

    return parameters, v, s

def model(Xtrain, Ytrain, n1, n2, EPOCHS, optimizer, learning_rate, epsilon, mompara, beta2):
    """
    Arguments:
    Xtrain -- training data
    Ytrain -- training labels
    n1, n2 -- number of nodes in the two hidden layers
    EPOCHS -- number of passes over the training set
    optimizer -- optimizer to use: 'SGD', 'SGDM' or 'ADAM'
    learning_rate -- learning rate
    epsilon -- small constant that keeps the Adam update from dividing by zero
    mompara -- momentum coefficient (also passed to Adam as beta1)
    beta2 -- decay rate of Adam's second-moment estimate

    Returns:
    parameters -- parameters learned by the model; they can be used for prediction.

    Note: test accuracy is tracked with the module-level Xtest and Ytest,
    which must be defined before this function is called.
    """
    np.random.seed(3)  # fix the random seed
    n_x, n_y = Xtrain.shape[0], Ytrain.shape[0]
    t = 0
    v = {}
    s = {}
    seed = 10
    cost = 0

    parameters = initialize_parameters(n_x, n1, n2, n_y)

    # Initialize the momentum terms
    if optimizer == 'SGDM':
        v = initialize_sgdm(parameters)
    elif optimizer == 'ADAM':
        v, s = initialize_adam(parameters)
    else:
        pass  # plain SGD has no momentum to initialize

    print("================== Training the neural network ======================")

    for i in range(EPOCHS):
        seed = seed + 1  # reshuffle differently in every epoch
        minibatches = random_mini_batches(Xtrain, Ytrain, 64, seed)

        for minibatch in minibatches:
            (minibatch_X, minibatch_Y) = minibatch
            A, cache = forward_propagation(minibatch_X, parameters)
            cost = cost_computing(A, minibatch_Y)

            # Record test accuracy (in percent) and cost once per mini-batch
            predictions = predict(Xtest, Ytest, parameters)
            accuracy.append(float(np.mean(predictions == Ytest) * 100))
            loss.append(cost)

            grads = backward_propagation(cache, minibatch_X, minibatch_Y)

            # Apply the optimizer selected by `optimizer`
            if optimizer == "SGD":
                parameters = sgd(parameters, grads, learning_rate)
            elif optimizer == "SGDM":
                parameters, v = sgdm(parameters, grads, v, mompara, learning_rate)
            elif optimizer == "ADAM":
                t = t + 1  # Adam counter
                parameters, v, s = adam(parameters, grads, v, s, t, learning_rate, epsilon, mompara, beta2)

        if i % max(1, EPOCHS // 10) == 0:
            print("Epoch " + str(i) + ", cost: " + str(cost))

    return parameters

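# Load the dataset
train_X, train_Y = load_dataset(is_plot=True)
print(train_X.shape, train_Y.shape)

# Split the dataset into a training set and a test set
# (the test slice starts at 270 so that sample 270 is not dropped)
Xtrain = train_X[0:2, 0:270]
Ytrain = train_Y[0:1, 0:270]
Xtest = train_X[0:2, 270:300]
Ytest = train_Y[0:1, 270:300]

# Layer sizes required by the assignment. The output is a vector, so the output
# size defaults to 1 and can be obtained from Ytrain.shape[0].
n1 = 5
n2 = 2

# Settings: number of epochs, optimizer, learning rate, momentum coefficient.
# Change all parameters here.
EPOCHS = 1000
optimizer = 'ADAM'  # set to 'SGD', 'SGDM' or 'ADAM' to use a different optimizer
learning_rate = 0.1
mompara = 0.9
beta2 = 0.999
epsilon = 1e-8

# Train the network
parameters = model(Xtrain, Ytrain, n1, n2, EPOCHS, optimizer, learning_rate, epsilon, mompara, beta2)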

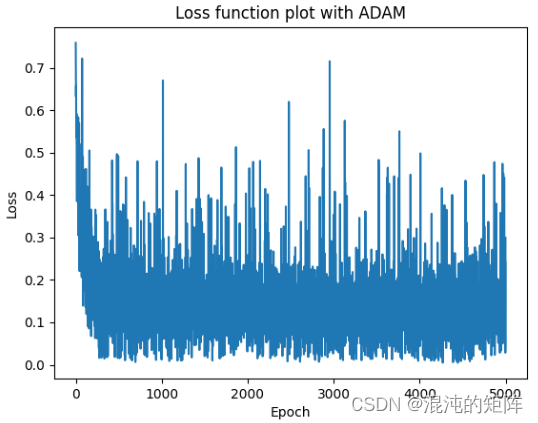

# Plot the loss curve (one value is recorded per mini-batch update)
plt.plot(np.array(loss))
plt.title('Loss function plot with ' + optimizer)
plt.xlabel('Iteration (mini-batch)')
plt.ylabel('Loss')
plt.show()

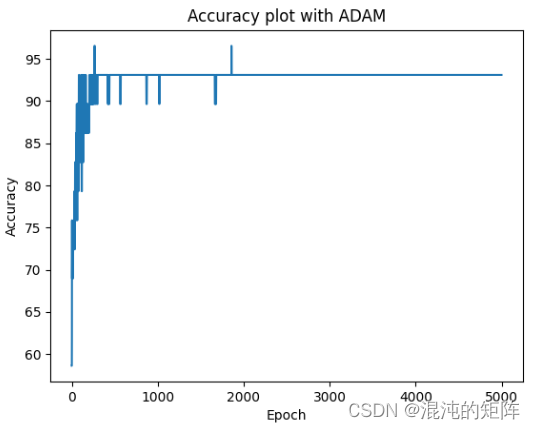

# Plot the accuracy curve (one value is recorded per mini-batch update)
plt.plot(np.array(accuracy))
plt.title('Accuracy plot with ' + optimizer)
plt.xlabel('Iteration (mini-batch)')
plt.ylabel('Accuracy (%)')
plt.show()

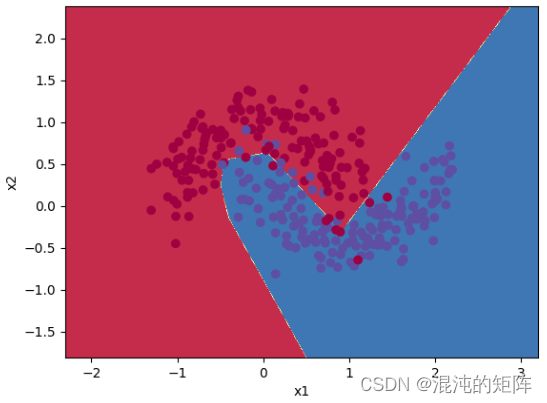

# Plot the decision boundary
print("=================================== Plotting the decision boundary ===================================")
axes = plt.gca()
plot_decision_boundary(lambda x: predict_dec(x.T, parameters), train_X, train_Y)

predictions = predict(Xtest, Ytest, parameters)
print('Accuracy: %d%%' % (np.mean(predictions == Ytest) * 100))
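As a quick sanity check on the Adam update used above, the following is a minimal sketch of the standard Adam step applied to a single array and a toy quadratic objective. The names `adam_step`, `target`, and `w` are illustrative and are not part of the original code; the hyperparameter defaults match the values set in this post. It is meant only to show the two moving averages and their bias correction in isolation, not to replace the `adam` function.

```python
import numpy as np

def adam_step(param, grad, v, s, t, learning_rate=0.1,
              beta1=0.9, beta2=0.999, epsilon=1e-8):
    """One Adam update for a single parameter array (illustrative helper)."""
    # Exponentially weighted averages of the gradient and the squared gradient
    v = beta1 * v + (1 - beta1) * grad
    s = beta2 * s + (1 - beta2) * grad ** 2
    # Bias correction compensates for v and s starting at zero
    v_hat = v / (1 - beta1 ** t)
    s_hat = s / (1 - beta2 ** t)
    param = param - learning_rate * v_hat / (np.sqrt(s_hat) + epsilon)
    return param, v, s

# Toy objective (an assumption for this sketch): f(w) = sum((w - target) ** 2)
target = np.array([1.0, -2.0])
w = np.zeros(2)
v = np.zeros(2)
s = np.zeros(2)
for t in range(1, 501):
    grad = 2 * (w - target)  # gradient of the toy objective
    w, v, s = adam_step(w, grad, v, s, t)

print("w after 500 Adam steps:", w)
```

On the very first step the bias-corrected ratio is approximately the sign of the gradient, so every coordinate moves by roughly `learning_rate` regardless of the gradient's magnitude. This is one reason Adam is less sensitive to gradient scale than plain SGD.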

Results (using Adam as an example):
