ex2: Linearly Separable Case

Logistic Regression

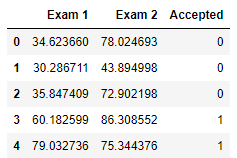

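In this part of the exercise, we build a logistic regression model to predict whether a student will be admitted to a university. Suppose you administer a university department and want to decide each applicant's admission from their scores on two exams. You have historical data from previous applicants that can serve as the training set for logistic regression. Each training example contains the applicant's scores on the two exams and the admission decision.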

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Each row: exam 1 score, exam 2 score, admission result (1 = admitted, 0 = rejected)
data = pd.read_csv('ex2data1.txt', names=['Exam 1', 'Exam 2', 'Accepted'])
data.head()

# Scatter plot of the two classes
fig, ax = plt.subplots()
ax.scatter(data[data['Accepted'] == 0]['Exam 1'], data[data['Accepted'] == 0]['Exam 2'], c='r', marker='x', label='y=0')
ax.scatter(data[data['Accepted'] == 1]['Exam 1'], data[data['Accepted'] == 1]['Exam 2'], c='blue', marker='o', label='y=1')
ax.legend()
ax.set(xlabel='exam1', ylabel='exam2')
plt.show()
```

# 获取数据集 X,y

def get_Xy(data):

data.insert(0,'ones',1)

cols = data.shape[1] #读取矩阵的第二个维度

X = data.iloc[:,:-1]

y = data.iloc[:,cols-1:cols]

X = np.matrix(X.values)

y = np.matrix(y.values)

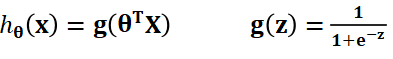

return X,y# 激活函数

def sigmoid(z):

return 1/(1+np.exp(-z))

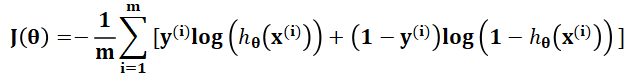
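The sigmoid above evaluates `np.exp(-z)` directly. For large negative z (roughly z < -709 in float64), `np.exp` overflows to inf and NumPy emits a RuntimeWarning, although the returned value 0.0 is still correct. The sketch below shows one way to avoid the overflow. It is a hypothetical `stable_sigmoid` that is not from the original post and is written for plain ndarrays. It only ever exponentiates -|z|.

```python
import numpy as np

def sigmoid(z):
    # Same formula as in the post: 1 / (1 + e^(-z))
    return 1 / (1 + np.exp(-z))

def stable_sigmoid(z):
    # Hypothetical helper: e = e^(-|z|) cannot overflow because -|z| <= 0
    z = np.asarray(z, dtype=float)
    e = np.exp(-np.abs(z))
    # z >= 0: 1 / (1 + e^(-z));  z < 0: e^z / (1 + e^z); both expressed through e
    return np.where(z >= 0, 1 / (1 + e), e / (1 + e))

z = np.array([-1000.0, -2.0, 0.0, 2.0, 1000.0])
with np.errstate(over="ignore"):
    naive = sigmoid(z)      # np.exp(1000) overflows to inf, so the first entry is 1/(1+inf) = 0.0
stable = stable_sigmoid(z)
print(np.allclose(naive, stable))   # True
print(stable_sigmoid(0.0))          # 0.5
```

Both versions return the same values here. The stable one simply never produces an intermediate inf.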

# 代价函数

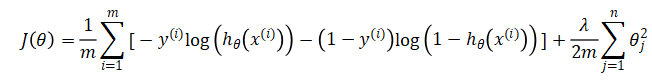

def costFunction(X,y,theta):

A = sigmoid(X*theta)

first = np.multiply(y,np.log(A))

second = np.multiply((1-y),np.log(1-A))

return -np.sum(first+second)/len(X)
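Written out, `costFunction` computes the cross-entropy cost below. Here m = len(X), g is the sigmoid, and h_θ(x) = g(θᵀx). At the initial θ = 0 every prediction is g(0) = 1/2, so the cost reduces to ln 2 ≈ 0.6931, which agrees with the `cost_init` value printed after training is set up.

$$
J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\Big[y^{(i)}\log h_\theta\big(x^{(i)}\big) + \big(1-y^{(i)}\big)\log\big(1-h_\theta\big(x^{(i)}\big)\big)\Big],
\qquad h_\theta(x) = g(\theta^{T}x) = \frac{1}{1+e^{-\theta^{T}x}}
$$

$$
\theta = 0:\quad h_\theta\big(x^{(i)}\big) = \tfrac{1}{2}
\;\Rightarrow\;
J(0) = -\frac{1}{m}\sum_{i=1}^{m}\Big[y^{(i)}\log\tfrac{1}{2} + \big(1-y^{(i)}\big)\log\tfrac{1}{2}\Big] = \ln 2 \approx 0.6931
$$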

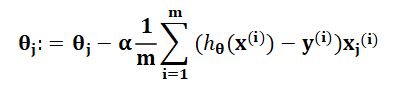
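Differentiating J(θ) with g'(z) = g(z)(1 - g(z)) gives the gradient below. Stacking the examples as rows of X turns the sum into a matrix product. That product is exactly the update line `theta - (alpha/m) * X.T * (A - y)` in `gradientDescent`, with A = g(Xθ):

$$
\frac{\partial J(\theta)}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_j^{(i)}
\qquad\Longrightarrow\qquad
\theta := \theta - \frac{\alpha}{m}\,X^{T}\big(g(X\theta) - y\big)
$$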

```python
# Gradient descent
def gradientDescent(X, y, theta, alpha, iters):
    m = len(X)
    costs = []
    for i in range(iters):
        A = sigmoid(X * theta)
        # Vectorized update: theta := theta - (alpha / m) * X^T (h - y)
        theta = theta - (alpha / m) * X.T * (A - y)
        cost = costFunction(X, y, theta)
        costs.append(cost)
        # if i % 1000 == 0:
        #     print(cost)
    return costs, theta
```

```python
X, y = get_Xy(data)
theta = np.matrix(np.zeros((3, 1)))
cost_init = costFunction(X, y, theta)
cost_init
# 0.6931471805599453
```

```python
alpha = 0.004
iters = 500000
costs, theta_final = gradientDescent(X, y, theta, alpha, iters)
theta_final
# matrix([[-24.32515839],
#         [  0.19954416],
#         [  0.19470508]])
```

The decision boundary is where θᵀx = 0, that is, where the predicted probability g(θᵀx) is exactly 0.5.
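With θᵀx = θ0 + θ1·x1 + θ2·x2, solving θᵀx = 0 for x2 gives the straight line that the next cell plots. There, `coef1` is the intercept and `coef2` the slope. The numbers below come from the `theta_final` values printed above:

$$
x_2 = -\frac{\theta_0}{\theta_2} - \frac{\theta_1}{\theta_2}\,x_1
= \frac{24.32515839}{0.19470508} - \frac{0.19954416}{0.19470508}\,x_1
\approx 124.93 - 1.025\,x_1
$$

For example, at an exam-1 score of 45 the boundary lies at an exam-2 score of about 78.8. Because θ2 > 0, higher exam-2 scores fall on the predicted-admit side.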

```python
# Solve theta0 + theta1*x1 + theta2*x2 = 0 for x2:  x2 = coef1 + coef2 * x1
coef1 = -theta_final[0, 0] / theta_final[2, 0]
coef2 = -theta_final[1, 0] / theta_final[2, 0]
x = np.linspace(data['Exam 1'].min(), data['Exam 1'].max(), 100)
f = coef1 + coef2 * x

fig, ax = plt.subplots()
ax.scatter(data[data['Accepted'] == 0]['Exam 1'], data[data['Accepted'] == 0]['Exam 2'], c='r', marker='x', label='y=0')
ax.scatter(data[data['Accepted'] == 1]['Exam 1'], data[data['Accepted'] == 1]['Exam 2'], c='blue', marker='o', label='y=1')
ax.legend()
ax.set(xlabel='exam1', ylabel='exam2')
ax.plot(x, f, c='g')   # decision boundary
plt.show()
```

```python
# Accuracy on the training set
def predict(X, theta):
    prob = sigmoid(X * theta)
    return [1 if x >= 0.5 else 0 for x in prob]   # threshold the probability at 0.5

y_ = np.array(predict(X, theta_final))
y_pre = y_.reshape(len(y_), 1)
acc = np.mean(y_pre == y)
acc
# 0.89
```
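To score a new applicant, prepend the bias term 1 to the two exam scores and apply the sigmoid to θᵀx. The sketch below hard-codes the `theta_final` values printed above. The applicant's scores (45 and 85) and the helper `admission_probability` are hypothetical illustration values, not part of the post.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

# theta_final as printed after 500000 gradient-descent iterations with alpha = 0.004
theta = np.array([-24.32515839, 0.19954416, 0.19470508])

def admission_probability(exam1, exam2, theta):
    # Hypothetical helper: prepend the bias 1, like the 'ones' column added in get_Xy
    x = np.array([1.0, exam1, exam2])
    return sigmoid(x @ theta)

p = admission_probability(45, 85, theta)   # hypothetical applicant
print(round(p, 3))       # 0.769
print(int(p >= 0.5))     # 1, i.e. predicted admitted
```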

ex2: Non-Linearly Separable Case

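Suppose you are the production manager of a factory. For each microchip you have the results of two tests, and these results determine whether the chip is accepted or rejected. You have historical data with which to build a logistic regression model for this decision.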

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Each row: test 1 result, test 2 result, whether the chip was accepted (1) or rejected (0)
data = pd.read_csv('ex2data2.txt', names=['Test 1', 'Test 2', 'Accepted'])
data.head()

fig, ax = plt.subplots()
ax.scatter(data[data['Accepted'] == 0]['Test 1'], data[data['Accepted'] == 0]['Test 2'], c='r', marker='x', label='y=0')
ax.scatter(data[data['Accepted'] == 1]['Test 1'], data[data['Accepted'] == 1]['Test 2'], c='blue', marker='o', label='y=1')
ax.legend()
ax.set(xlabel='Test1', ylabel='Test2')
plt.show()
```

```python
# Feature mapping: every monomial x1^(i-j) * x2^j with total degree i <= power
def feature_mapping(x1, x2, power):
    data = {}
    for i in np.arange(power + 1):
        for j in np.arange(i + 1):
            data['F{}{}'.format(i - j, j)] = np.power(x1, i - j) * np.power(x2, j)
    return pd.DataFrame(data)

x1 = data['Test 1']
x2 = data['Test 2']
data2 = feature_mapping(x1, x2, 6)   # 28 columns; F00 is the constant bias term
data2.head()

X = np.matrix(data2.values)
cols = data.shape[1]
y = data.iloc[:, cols-1:cols].values
```

```python
# Activation function
def sigmoid(z):
    return 1 / (1 + np.exp(-z))
```
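`feature_mapping` generates the monomials x1^(i-j)·x2^j for total degree i = 0..power, in the order F00, F10, F01, F20, F11, F02, and so on. For power = 6 that gives 1 + 2 + ... + 7 = 28 terms, which is why theta is initialized with shape (28, 1). The NumPy-only sketch below reproduces the same ordering on a single point so that the column layout can be checked by hand. It uses hypothetical helpers `mapped_terms` and `map_point` and does not need pandas.

```python
import numpy as np

def mapped_terms(power):
    # Same loop order as feature_mapping: exponent pairs (i - j, j) for i = 0..power, j = 0..i
    return [(i - j, j) for i in range(power + 1) for j in range(i + 1)]

def map_point(x1, x2, power):
    # Evaluate each monomial x1^a * x2^b at a single point
    return np.array([x1 ** a * x2 ** b for a, b in mapped_terms(power)], dtype=float)

print(['F{}{}'.format(a, b) for a, b in mapped_terms(2)])
# ['F00', 'F10', 'F01', 'F20', 'F11', 'F02']
print(map_point(2.0, 3.0, 2))                          # [1. 2. 3. 4. 6. 9.]
print(len(mapped_terms(6)), (6 + 1) * (6 + 2) // 2)    # 28 28
```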

```python
# Cost function (regularized)
def costFunction(X, y, theta, lamda):
    A = sigmoid(X * theta)
    first = np.multiply(y, np.log(A))
    second = np.multiply((1 - y), np.log(1 - A))
    # L2 penalty; theta[0] (the bias) is not regularized
    reg = np.sum(np.power(theta[1:], 2)) * (lamda / (2 * len(X)))
    return -np.sum(first + second) / len(X) + reg
```
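With the penalty, `costFunction` computes the expression below. Here λ is `lamda`, and n = 27 is the number of non-bias parameters among the 28 mapped features. The penalty sum starts at j = 1 because `theta[1:]` skips θ0. At θ = 0 the penalty vanishes, so the initial cost is again ln 2 ≈ 0.6931. The corresponding gradient, used by `gradientDescent`, adds (λ/m)θj only for j ≥ 1:

$$
J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\Big[y^{(i)}\log h_\theta\big(x^{(i)}\big) + \big(1-y^{(i)}\big)\log\big(1-h_\theta\big(x^{(i)}\big)\big)\Big] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^{2}
$$

$$
\frac{\partial J}{\partial \theta_0} = \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)x_0^{(i)},
\qquad
\frac{\partial J}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)x_j^{(i)} + \frac{\lambda}{m}\theta_j \quad (j \ge 1)
$$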

# 梯度下降

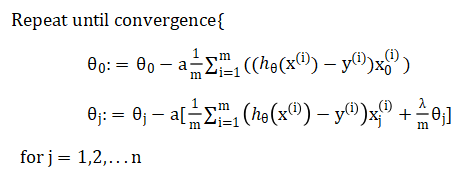

def gradientDescent(X, y, theta, alpha, iters,lamda):

costs = []

for i in range(iters):

reg = theta[1:]*(lamda/len(X))

reg = np.insert(reg,0,values=0,axis=0)

A = sigmoid(X * theta)

theta = theta-X.T*(A-y)*alpha/len(X)- reg*alpha

cost = costFunction(X,y,theta,lamda)

costs.append(cost)

if i%1000==0:

print(cost)

return costs,thetatheta = np.matrix(np.zeros((28,1)))

lamda = 1

cost_init = costFunction(X,y,theta,lamda)

cost_init

# 0.6931471805599454alpha = 0.001

iters = 200000

lamda = 1

costs,theta_final = gradientDescent(X, y, theta, alpha, iters,lamda)

theta_final
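A finite-difference check is a quick way to confirm that the update in `gradientDescent` uses the correct regularized gradient. The sketch below is an illustration under stated assumptions and is not part of the original exercise. It rewrites the regularized cost and gradient with plain NumPy arrays (the `@` operator instead of `np.matrix`) and uses a small synthetic dataset. It then compares the analytic gradient with central differences. The helpers `cost_reg`, `grad_reg` and `numerical_grad` are hypothetical names.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost_reg(X, y, theta, lamda):
    # Regularized cross-entropy; theta[0] (bias) is not penalized
    m = len(X)
    h = sigmoid(X @ theta)
    data_term = -np.sum(y * np.log(h) + (1 - y) * np.log(1 - h)) / m
    return data_term + lamda / (2 * m) * np.sum(theta[1:] ** 2)

def grad_reg(X, y, theta, lamda):
    # Analytic gradient matching gradientDescent: X^T (h - y) / m plus (lamda / m) * theta_j for j >= 1
    m = len(X)
    grad = X.T @ (sigmoid(X @ theta) - y) / m
    reg = lamda / m * theta
    reg[0] = 0.0
    return grad + reg

def numerical_grad(f, theta, eps=1e-6):
    # Central differences, perturbing one coordinate at a time
    g = np.zeros_like(theta)
    for k in range(len(theta)):
        step = np.zeros_like(theta)
        step[k] = eps
        g[k] = (f(theta + step) - f(theta - step)) / (2 * eps)
    return g

rng = np.random.default_rng(0)
X = np.hstack([np.ones((20, 1)), rng.normal(size=(20, 3))])   # synthetic data with a bias column
y = rng.integers(0, 2, size=(20, 1)).astype(float)
theta = rng.normal(size=(4, 1))
lamda = 1.0

analytic = grad_reg(X, y, theta, lamda)
numeric = numerical_grad(lambda t: cost_reg(X, y, t, lamda), theta)
print(np.max(np.abs(analytic - numeric)))   # should be tiny, far below 1e-6
```

A large discrepancy here would point to a sign or scaling error in the update step, such as accidentally regularizing θ0.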

```
matrix([[ 1.2052072 ],
        [ 0.5825871 ],
        [ 1.13133192],
        [-1.92011714],
        [-0.83055737],
        [-1.28494641],
        [ 0.10189184],
        [-0.34252061],
        [-0.3413474 ],
        [-0.18126064],
        [-1.40955679],
        [-0.06604286],
        [-0.58032027],
        [-0.2460958 ],
        [-1.14671096],
        [-0.24322073],
        [-0.20113005],
        [-0.05872139],
        [-0.25848869],
        [-0.27166559],
        [-0.4910192 ],
        [-1.01575376],
        [ 0.01109593],
        [-0.28015729],
        [ 0.00479456],
        [-0.30974881],
        [-0.12601945],
        [-0.94013426]])
```

```python
# Accuracy on the training set
def predict(X, theta):
    prob = sigmoid(X * theta)
    return [1 if x >= 0.5 else 0 for x in prob]   # threshold the probability at 0.5

y_ = np.array(predict(X, theta_final))
y_pre = y_.reshape(len(y_), 1)
acc = np.mean(y_pre == y)
acc
```

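The training-set accuracy is 0.8305084745762712 (about 83%). The non-linear decision boundary is the zero contour of θᵀx, evaluated on the mapped features over a grid:

```python
# Decision boundary: zero contour of theta^T * feature_mapping(x1, x2) over a grid
x = np.linspace(-1.2, 1.2, 200)
xx, yy = np.meshgrid(x, x)
z = feature_mapping(xx.ravel(), yy.ravel(), 6).values   # shape (40000, 28)
zz = z @ np.asarray(theta_final)                         # theta^T x at every grid point, shape (40000, 1)
zz = zz.reshape(xx.shape)

fig, ax = plt.subplots()
ax.scatter(data[data['Accepted'] == 0]['Test 1'], data[data['Accepted'] == 0]['Test 2'], c='r', marker='x', label='y=0')
ax.scatter(data[data['Accepted'] == 1]['Test 1'], data[data['Accepted'] == 1]['Test 2'], c='blue', marker='o', label='y=1')
ax.legend()
ax.set(xlabel='Test1', ylabel='Test2')
ax.contour(xx, yy, zz, levels=[0])   # draw only the level theta^T x = 0 (an int would set the number of levels)
plt.show()
```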
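To score a single new chip with the trained parameters, map its two test results using the same monomial ordering as `feature_mapping` and take the sigmoid of θᵀx. The sketch below copies the `theta_final` values printed above. Its helpers `map_point` and `chip_probability` are hypothetical. At the origin every mapped feature except F00 = 1 is zero, so the probability reduces to g(θ0) = g(1.2052072) ≈ 0.769, and the chip is predicted to be accepted.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def map_point(x1, x2, power=6):
    # Same monomial order as feature_mapping: F00, F10, F01, F20, F11, F02, ...
    return np.array([x1 ** (i - j) * x2 ** j
                     for i in range(power + 1) for j in range(i + 1)], dtype=float)

# theta_final as printed above (alpha = 0.001, 200000 iterations, lamda = 1)
theta = np.array([
    1.2052072, 0.5825871, 1.13133192, -1.92011714, -0.83055737, -1.28494641,
    0.10189184, -0.34252061, -0.3413474, -0.18126064, -1.40955679, -0.06604286,
    -0.58032027, -0.2460958, -1.14671096, -0.24322073, -0.20113005, -0.05872139,
    -0.25848869, -0.27166559, -0.4910192, -1.01575376, 0.01109593, -0.28015729,
    0.00479456, -0.30974881, -0.12601945, -0.94013426,
])

def chip_probability(test1, test2):
    # Hypothetical helper: probability that a chip with these test results is accepted
    return sigmoid(map_point(test1, test2) @ theta)

# At the origin only F00 = 1 is non-zero, so p = sigmoid(theta_0)
p0 = chip_probability(0.0, 0.0)
print(round(p0, 3))   # 0.769
```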
