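The following example uses Keras and OpenCV for initial preprocessing and then uses TensorFlow to perform unsupervised learning on a video. In this example, a video (.mp4 format) is converted into 256-column vectors so that it can be used further in an advertising recommendation system. The implementation of the program file Main.ipynb proceeds as follows.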

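(1) Prepare the frames of the MP4 video to be processed: list the frame images and resize each one to 96×64 pixels. The code is as follows.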

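    import os

    from PIL import Image

    # Directory that holds the frames of the video
    path = "/home/til/Video/code/Images/"
    # Second directory from the original listing (resize() below does not write to it)
    path1 = "/home/til/Video/code/resize/"

    # sorted() orders the file names lexicographically
    dirs = sorted(os.listdir(path))
    print(dirs)

    def resize():
        """Resize every frame to 96x64 and save it as a JPEG next to the original."""
        for item in dirs:
            if os.path.isfile(path + item):
                im = Image.open(path + item).convert("RGB")
                f, e = os.path.splitext(path + item)
                # Image.LANCZOS is the current name of the former Image.ANTIALIAS filter
                imResize = im.resize((96, 64), Image.LANCZOS)
                imResize.save(f + '.jpg', 'JPEG', quality=90)

    resize()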
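One detail of the listing above is worth noting: `sorted(os.listdir(path))` orders names lexicographically, so `frame10.jpg` comes before `frame2.jpg` and the frames are no longer in playback order. If temporal order matters in later steps, a natural-sort key is one way to restore it. The sketch below is only an illustration; the helper name `natural_key` is hypothetical and is not part of the original notebook.

```python
import re

def natural_key(name):
    """Split a file name into text and integer chunks so numbers compare numerically."""
    return [int(part) if part.isdigit() else part.lower()
            for part in re.split(r'(\d+)', name)]

# A few names in the lexicographic order that sorted() produces by default
names = ['frame0.jpg', 'frame1.jpg', 'frame10.jpg', 'frame11.jpg', 'frame2.jpg']

# Sorting with the natural key puts frame2 before frame10
ordered = sorted(names, key=natural_key)
print(ordered)
```

In the notebook this would correspond to `dirs = sorted(os.listdir(path), key=natural_key)`, assuming all frame files follow the `frameN.jpg` naming pattern.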

Running this code prints the list of frame files:

    ['frame0.jpg', 'frame1.jpg', 'frame10.jpg', 'frame11.jpg', 'frame12.jpg', 'frame13.jpg', 'frame14.jpg', 'frame15.jpg', 'frame16.jpg', 'frame17.jpg', 'frame18.jpg', 'frame19.jpg', 'frame2.jpg', 'frame20.jpg', 'frame21.jpg', 'frame22.jpg', 'frame23.jpg', 'frame24.jpg', 'frame25.jpg', 'frame26.jpg', 'frame27.jpg', 'frame28.jpg', 'frame29.jpg', 'frame3.jpg', 'frame30.jpg', 'frame31.jpg', 'frame32.jpg', 'frame33.jpg', 'frame34.jpg', 'frame35.jpg', 'frame36.jpg', 'frame37.jpg', 'frame38.jpg', 'frame39.jpg', 'frame4.jpg', 'frame40.jpg', 'frame41.jpg', 'frame42.jpg', 'frame43.jpg', 'frame44.jpg', 'frame45.jpg', 'frame46.jpg', 'frame47.jpg', 'frame48.jpg', 'frame49.jpg', 'frame5.jpg', 'frame50.jpg', 'frame51.jpg', 'frame52.jpg', 'frame53.jpg', 'frame54.jpg', 'frame55.jpg', 'frame56.jpg', 'frame57.jpg', 'frame58.jpg', 'frame59.jpg', 'frame6.jpg', 'frame60.jpg', 'frame61.jpg', 'frame62.jpg', 'frame7.jpg', 'frame8.jpg', 'frame9.jpg']

(3) Load and inspect one of the frames. After normalization, the frames can be converted to JPG format or to array format.

    import cv2
    import numpy as np
    from keras.preprocessing.image import load_img

    # Load one frame
    img = load_img('/home/til/Video/code/Images/frame1.jpg')

    # Report details about the image
    print(type(img))
    print(img.format)
    print(img.mode)
    print(img.size)

    # Display the image
    img.show()

    images = []
    for item in dirs:
        if os.path.isfile(path + item):
            # Read the frame with OpenCV and convert it to grayscale
            img = cv2.imread(path + item)
            img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            X = np.array(img_gray)
            X = X.astype('float32')
            # Normalize X to the range [0, 1]
            X /= 255.0
            images.append(X)

    # Convert to RGB if needed
    # img = img.convert('RGB')

    # Convert the list of frames to a single array
    images = np.array(images)
    print(images.shape)

Running this code outputs:

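    <class 'PIL.JpegImagePlugin.JpegImageFile'>
    JPEG
    RGB
    (96, 64)
    (63, 64, 96)

(4) Begin setting up training on the images: set the training hyperparameters, create the encoder model, and call summary() to print an overview of the model.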

    import random
    import warnings

    import numpy as np
    from keras.layers import Input, Dense, Conv2D, MaxPooling2D, UpSampling2D, Flatten, Reshape
    from keras.models import Model

    x_train = images

    warnings.filterwarnings('ignore', category=UserWarning, module='skimage')

    # Seed the random number generators; seed() must be called, not assigned to
    seed = 42
    random.seed(seed)
    np.random.seed(seed)

    IMG_WIDTH = 96
    IMG_HEIGHT = 64
    IMG_CHANNELS = 1  # the frames were converted to grayscale in step (3)
    INPUT_SHAPE = (IMG_HEIGHT, IMG_WIDTH, IMG_CHANNELS)  # (64, 96, 1)

    def Encoder():
        inp = Input(shape=INPUT_SHAPE)
        x = Conv2D(128, (4, 4), activation='elu', padding='same', name='encode1')(inp)
        x = Conv2D(64, (3, 3), activation='elu', padding='same', name='encode2')(x)
        x = MaxPooling2D((2, 2), padding='same')(x)
        x = Conv2D(64, (3, 3), activation='elu', padding='same', name='encode3')(x)
        x = Conv2D(32, (2, 2), activation='elu', padding='same', name='encode4')(x)
        x = MaxPooling2D((2, 2), padding='same')(x)
        x = Conv2D(64, (3, 3), activation='elu', padding='same', name='encode5')(x)
        x = Conv2D(32, (2, 2), activation='elu', padding='same', name='encode6')(x)
        x = MaxPooling2D((2, 2), padding='same')(x)
        x = Conv2D(64, (3, 3), activation='elu', padding='same', name='encode7')(x)
        x = Conv2D(32, (2, 2), activation='elu', padding='same', name='encode8')(x)
        x = MaxPooling2D((2, 2), padding='same')(x)
        x = Conv2D(32, (3, 3), activation='elu', padding='same', name='encode9')(x)
        # Flatten the 4x6x32 feature map and compress it to a 128-value code
        x = Flatten()(x)
        x = Dense(256, activation='elu', name='encode10')(x)
        encoded = Dense(128, activation='sigmoid', name='encode11')(x)
        return Model(inp, encoded)

    encoder = Encoder()
    encoder.summary()

Running this code outputs:

    Model: "model_9"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_7 (InputLayer)         (None, 64, 96, 1)         0
    encode1 (Conv2D)             (None, 64, 96, 128)       2176
    encode2 (Conv2D)             (None, 64, 96, 64)        73792
    max_pooling2d_17 (MaxPooling (None, 32, 48, 64)        0
    encode3 (Conv2D)             (None, 32, 48, 64)        36928
    encode4 (Conv2D)             (None, 32, 48, 32)        8224
    max_pooling2d_18 (MaxPooling (None, 16, 24, 32)        0
    encode5 (Conv2D)             (None, 16, 24, 64)        18496
    encode6 (Conv2D)             (None, 16, 24, 32)        8224
    max_pooling2d_19 (MaxPooling (None, 8, 12, 32)         0
    encode7 (Conv2D)             (None, 8, 12, 64)         18496
    encode8 (Conv2D)             (None, 8, 12, 32)         8224
    max_pooling2d_20 (MaxPooling (None, 4, 6, 32)          0
    encode9 (Conv2D)             (None, 4, 6, 32)          9248
    flatten_5 (Flatten)          (None, 768)               0
    encode10 (Dense)             (None, 256)               196864
    encode11 (Dense)             (None, 128)               32896
    =================================================================
    Total params: 413,568
    Trainable params: 413,568
    Non-trainable params: 0
    _________________________________________________________________
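The parameter counts in the summary can be checked by hand. A Conv2D layer has kernel height × kernel width × input channels × filters weights plus one bias per filter, a Dense layer has inputs × units weights plus one bias per unit, and each 2×2 max-pooling layer with padding='same' halves the height and width, rounding up. The following sketch recomputes the numbers from the layer definitions in Encoder(); the helper names are illustrative and do not come from the notebook.

```python
import math

def conv2d_params(kernel, in_channels, filters):
    """Weights plus biases of a Conv2D layer with a (kh, kw) kernel."""
    kh, kw = kernel
    return kh * kw * in_channels * filters + filters

def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units

# (name, kernel, filters) for encode1..encode9; 'pool' marks a 2x2 MaxPooling2D
layers = [
    ('encode1', (4, 4), 128), ('encode2', (3, 3), 64), 'pool',
    ('encode3', (3, 3), 64), ('encode4', (2, 2), 32), 'pool',
    ('encode5', (3, 3), 64), ('encode6', (2, 2), 32), 'pool',
    ('encode7', (3, 3), 64), ('encode8', (2, 2), 32), 'pool',
    ('encode9', (3, 3), 32),
]

height, width, channels = 64, 96, 1   # the input shape (64, 96, 1)
counts = {}
for layer in layers:
    if layer == 'pool':
        # A 2x2 pool with padding='same' gives ceil(size / 2) in each dimension
        height, width = math.ceil(height / 2), math.ceil(width / 2)
        continue
    name, kernel, filters = layer
    counts[name] = conv2d_params(kernel, channels, filters)
    channels = filters                # padding='same' keeps height and width

flat = height * width * channels      # number of values after Flatten
counts['encode10'] = dense_params(flat, 256)
counts['encode11'] = dense_params(256, 128)
total = sum(counts.values())

print(flat, total)
```

The flattened size and the total agree with the `flatten_5` row and the `Total params` line of the summary.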

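(5) To reduce the learning rate during training and thereby improve the model, this example uses the Keras callback ReduceLROnPlateau(). ModelCheckpoint() saves the best model seen during training, and EarlyStopping() halts training once the validation loss stops improving.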

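    from keras.callbacks import EarlyStopping, ModelCheckpoint, ReduceLROnPlateau

    # Halve the learning rate when val_loss has not improved for 4 epochs
    learning_rate_reduction = ReduceLROnPlateau(monitor='val_loss',
                                                patience=4,
                                                verbose=1,
                                                factor=0.5,
                                                min_lr=0.00001)

    # Save the model each time val_loss reaches a new minimum
    checkpoint = ModelCheckpoint("Dancer_Auto_Model.hdf5",
                                 save_best_only=True,
                                 monitor='val_loss',
                                 mode='min')

    # Stop after 8 epochs without improvement and restore the best weights
    early_stopping = EarlyStopping(monitor='val_loss',
                                   patience=8,
                                   verbose=1,
                                   mode='min',
                                   restore_best_weights=True)

(6) Define a callback that, at the end of every training epoch, uses imshow() to display the model's output for a sample frame, reshaped to the specified size. The sample frame itself is displayed first.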

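    import matplotlib.pyplot as plt
    # The source does not show where imshow comes from; matplotlib's is assumed here
    from matplotlib.pyplot import imshow
    from keras.callbacks import Callback

    class ImgSample(Callback):
        def __init__(self):
            super().__init__()

        def on_epoch_end(self, epoch, logs=None):
            # Run one training frame through the model and show the result
            sample_img = x_train[50]
            sample_img = sample_img.reshape(1, IMG_HEIGHT, IMG_WIDTH, 1)
            sample_img = self.model.predict(sample_img)[0]
            imshow(sample_img.reshape(IMG_HEIGHT, IMG_WIDTH))
            plt.show()

    imgsample = ImgSample()
    model_callbacks = [learning_rate_reduction, checkpoint, early_stopping, imgsample]

    # Show the original sample frame
    imshow(x_train[50].reshape(IMG_HEIGHT, IMG_WIDTH))

The result is shown in Figure 12-1.

Figure 12-1 Execution result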

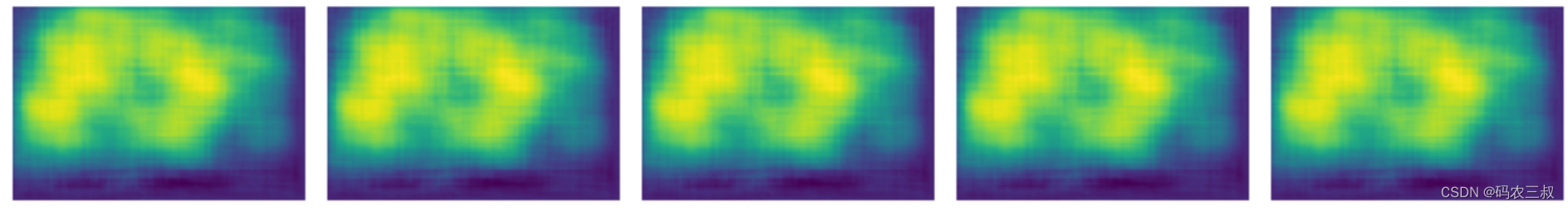
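To make the learning-rate schedule from step (5) more concrete, the pure-Python sketch below mimics the core rule of ReduceLROnPlateau with the settings used there (factor=0.5, patience=4, min_lr=0.00001). It is a simplified model, not the Keras implementation: it ignores the cooldown option, and the function name, the starting learning rate of 0.001, and the validation-loss values are all made up for illustration.

```python
def simulate_reduce_lr(val_losses, lr=0.001, factor=0.5, patience=4,
                       min_lr=0.00001, min_delta=1e-4):
    """Return the learning rate in effect after each epoch."""
    best = float('inf')
    wait = 0
    history = []
    for loss in val_losses:
        if loss < best - min_delta:
            # Improvement: remember it and reset the patience counter
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                # Plateau: scale the rate down, but never below min_lr
                lr = max(lr * factor, min_lr)
                wait = 0
        history.append(lr)
    return history

# Hypothetical validation losses: one plateau after epoch 2, another after epoch 7
losses = [0.50, 0.40, 0.41, 0.42, 0.40, 0.43, 0.39, 0.39, 0.39, 0.39, 0.39]
rates = simulate_reduce_lr(losses)
for epoch, (loss, rate) in enumerate(zip(losses, rates), start=1):
    print(epoch, loss, rate)
```

With these made-up losses the rate is halved at epoch 6 and again at epoch 11, each time after four consecutive epochs without improvement. EarlyStopping in step (5) uses a longer patience of 8, so the learning rate gets a chance to drop before training is stopped.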

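(7) Load the previously trained weights, call the function decoder.predict() to run prediction on the test set, and finally display the decoded predicted images.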

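    # decoder and x1 are not defined in this listing; they are assumed to be
    # created earlier in the notebook (the decoder model and the input frames)
    encoder = Encoder()
    # Load the trained weights into the layers with matching names
    encoder.load_weights("Auto_Weights.hdf5", by_name=True)
    encoder.save('Encoder_Model.hdf5')

    decoder.save_weights("Decoder_Weights.hdf5")
    encoder.save_weights("Encoder_Weights.hdf5")

    # Encode every frame and store the resulting vectors
    encoder_imgs = encoder.predict(x1)
    print(encoder_imgs.shape)
    np.save('Encoded.npy', encoder_imgs)

    # Decode the first 11 encoded frames
    decoded_imgs = decoder.predict(encoder_imgs[0:11])

    plt.figure(figsize=(20, 4))
    for i in range(5, 10):
        # reconstruction
        plt.subplot(1, 10, i + 1)
        plt.imshow(decoded_imgs[i].reshape(IMG_HEIGHT, IMG_WIDTH))
        plt.axis('off')

    plt.tight_layout()
    plt.show()

The result is shown in Figure 12-2.

Figure 12-2 Predicted images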
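The encoded vectors saved to Encoded.npy are the features intended for later use, for example in the recommendation system mentioned at the start. The source does not show that part. As one hypothetical illustration, the per-frame vectors of a video could be averaged into a single video-level vector and two videos compared with cosine similarity. Everything in this sketch (the function names, the averaging step, and the tiny 3-value vectors standing in for real encoder outputs) is an assumption made for illustration.

```python
import math

def mean_vector(frame_vectors):
    """Average a list of equal-length per-frame vectors into one vector."""
    n = len(frame_vectors)
    return [sum(column) / n for column in zip(*frame_vectors)]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up per-frame codes for three short videos (two frames each)
video_a = [[0.9, 0.1, 0.2], [0.7, 0.3, 0.2]]
video_b = [[0.8, 0.2, 0.2], [0.8, 0.2, 0.2]]
video_c = [[0.1, 0.9, 0.8], [0.1, 0.7, 0.8]]

vec_a, vec_b, vec_c = (mean_vector(v) for v in (video_a, video_b, video_c))
sim_ab = cosine_similarity(vec_a, vec_b)
sim_ac = cosine_similarity(vec_a, vec_c)
print(sim_ab, sim_ac)
```

In this toy data, videos a and b average to nearly the same vector and score close to 1, while video c scores much lower against a. Whether simple averaging is adequate for real encoder outputs would need to be checked on actual data.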

(8) Create the LSTM network: set the training hyperparameters, create the encoder model, and call the function summary() to print an overview. The listing and output for this step are the same as in step (4): the seed and image-size settings, the Encoder() definition, and the encoder.summary() output for model_9 with 413,568 parameters.

(9) After creating the model, save it, then use the function imshow() to display an image of the specified size. The listing for this step is the same as the callback definitions in step (5) (ReduceLROnPlateau, ModelCheckpoint, and EarlyStopping), followed by the ImgSample callback and the imshow() call from step (6).
