0. Statement. 😄

Today, I summarize the details of the problems I encountered through the NLP experiments I did before. Although it is a small detail, it will determine whether the experiment can go on or not, so it is very important for us. 🏫

1. Complete the classification problem of the iris dataset using LSTM + Embedding. 😇

import torch

import numpy as np

import pandas as pd

from sklearn.metrics import accuracy_score, f1_score

from torch.utils.data.dataset import Dataset

from torch.utils.data.dataloader import DataLoader

from torch import nn

# 设置随机种子

seed = 1

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

torch.cuda.manual_seed_all(seed) # if you are using multi-GPU.

np.random.seed(seed) # Numpy module.

torch.manual_seed(seed)

# 超参数

Max_epoch = 100

batch_size = 6

learning_rate = 0.05

path = "Iris.csv"

train_rate = 0.8

df = pd.read_csv(path)

# pandas 打乱数据

df = df.sample(frac=1)

cols_feature = ['SepalLengthCm', 'SepalWidthCm', 'PetalLengthCm', 'PetalWidthCm']

df_features = df[cols_feature]

df_categories = df['Species']

# 类别字符串转换为数字。

categories_digitization = []

for i in df_categories:

if i == 'Iris-setosa':

categories_digitization.append(0)

elif i == 'Iris-versicolor':

categories_digitization.append(1)

else:

categories_digitization.append(2)

features = torch.from_numpy(np.float32(df_features.values))

print(features)

categories_digitization = np.array(categories_digitization)

categories_digitization = torch.from_numpy(categories_digitization)

print("categories_digitization",categories_digitization)

# 定义MyDataset类,继承Dataset方法,并重写__getitem__()和__len__()方法

class MyDataset(Dataset):

# 初始化函数,得到数据

def __init__(self, datas, labels):

self.datas = datas

self.labels = labels

# print(df_categories_digitization)

# index是根据batchsize划分数据后得到的索引,最后将data和对应的labels进行一起返回

def __getitem__(self, index):

features = self.datas[index]

categories_digitization = self.labels[index]

return features, categories_digitization

# 该函数返回数据大小长度,目的是DataLoader方便划分,如果不知道大小,DataLoader会一脸懵逼

def __len__(self):

return len(self.labels)

num_train = int(features.shape[0] * train_rate)

train_x = features[:num_train]

train_y = categories_digitization[:num_train]

test_x = features[num_train:]

test_y = categories_digitization[num_train:]

# 通过MyDataset将数据进行加载,返回Dataset对象,包含data和labels

train_data = MyDataset(train_x, train_y)

test_data = MyDataset(test_x, test_y)

# 读取数据

train_dataloader = DataLoader(train_data, batch_size=batch_size, shuffle=True, drop_last=False, num_workers=0)

test_dataloader = DataLoader(test_data, batch_size=batch_size, shuffle=True, drop_last=False, num_workers=0)

class MyModel(nn.Module):

def __init__(self):

super().__init__()

self.embedding = nn.Embedding(num_embeddings=100, embedding_dim=300)

self.lstm = nn.LSTM(input_size=300, hidden_size=128, num_layers=2, batch_first=True)

self.fc = nn.Linear(128, 3)

def forward(self, x):

print("刚输入进网络",x.shape)

x = self.embedding(x)

print("embedding 后",x.shape)

x, _ = self.lstm(x)

print("lstm 后", x.shape)

x = self.fc(x[:, -1, :])

return x

# 实例化模型

model = MyModel()

print(model)

# 定义损失函数和优化器

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model.parameters())

# 训练模型

for epoch in range(10):

for step, batch in enumerate(test_dataloader):

batch_x, batch_y = batch

# 获取训练数据,并进行预处理

# inputs, targets = get_data()

# inputs = torch.LongTensor(inputs)

print("batch_x.dtype:",batch_x.dtype)

batch_x = batch_x.type(torch.LongTensor)

print("batch_x.dtype:",batch_x.dtype)

x = torch.LongTensor(batch_x)

# targets = torch.FloatTensor(targets)

print(batch_y.dtype)

batch_y = batch_y.type(torch.LongTensor)

print(batch_y.dtype)

y = torch.LongTensor(batch_y)

print("y.shape(类别classes):",y.shape)

# 清零梯度

optimizer.zero_grad()

# 前向传播

print("x.shape:",x.shape)

outputs = model(x)

print("outputs.shape:", outputs.shape)

# 计算损失

loss = criterion(outputs, y)

# 反向传播

loss.backward()

# 更新参数

optimizer.step()

# 输出每个 epoch 的 loss

print(f"epoch {epoch+1}: loss = {loss.item()}")

model.eval() #测试的时候需要net.eval()

test_pred = []

test_true = []

for step, batch in enumerate(test_dataloader):

batch_x, batch_y = batch

batch_x = batch_x.type(torch.LongTensor)

x = torch.LongTensor(batch_x)

logistics = model(x)

# 取每个[]中最大的值。

pred_y = logistics.argmax(1)

test_true.extend(batch_y.numpy().tolist())

test_pred.extend(pred_y.detach().numpy().tolist())

print(f"测试集预测{test_pred}")

print(f"测试集准确{test_true}")

accuracy = accuracy_score(test_true, test_pred)

f1 = f1_score(test_true, test_pred, average = "macro")

print(f"Accuracy的值为:{accuracy}")

print(f"F1的值为:{f1}")

print(model)

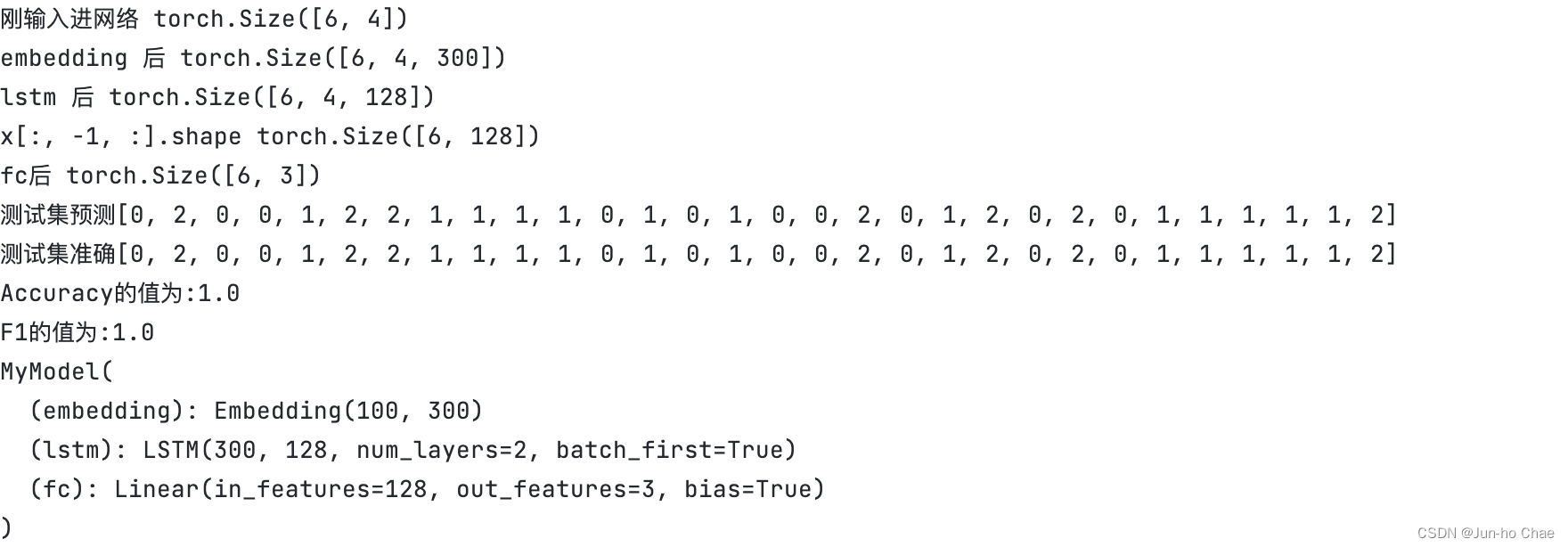

2. The shape of the embedding layer. 🤠

The input shape of the embedding layer is (batch_size, sequence_length). In this case, batch_size represents the number of samples in the batch and sequence_length represents the length of the sequence in each sample. For example, for a text classification task, the sequence may contain words or sentences of words, and sequence_length is the number of words in the sentence.

For example, in the following iris dataset👇, I extract the first 6 samples as a batch, so batch_size = 6, and there are 4 features in the dataset except for Id, so sequence_length is 4. In summary, (batch_size, sequence_length) = (6, 4).

Id, SepalLengthCm, SepalWidthCm, PetalLengthCm, PetalWidthCm, Species

1,5.1,3.5,1.4,0.2,Iris-setosa

2,4.9,3.0,1.4,0.2,Iris-setosa

3,4.7,3.2,1.3,0.2,Iris-setosa

4,4.6,3.1,1.5,0.2,Iris-setosa

5,5.0,3.6,1.4,0.2,Iris-setosa

6,5.4,3.9,1.7,0.4,Iris-setosa

3. The shape of the LSTM layer. 🥸

When batch_first=True, the input shape of the LSTM layer is (batch_size, sequence_length, input_dim). In this case, batch_size denotes the number of samples in the batch, sequence_length denotes the length of the sequence in each sample, and input_dim denotes the number of features in each cell. For example, for a text classification task, the sequence may contain words or sentences composed of words. input_dim is the number of features in each word (e.g., the dimensionality of the word vector).

The size of num_embeddings is related to the size of the vocabulary. num_embeddings refers to the number of different words in the vocabulary and is used to initialize the word embedding matrix, so if the vocabulary is large, then num_embeddings should be increased accordingly.👇

4. Why use x = self.fc(x[:, -1, :])? 🤓

The reason for using x[:, -1, :] is that the LSTM layer processes each cell in the sequence through a circular mechanism and remembers the previous information in the sequence, so the last cell in the sequence is usually considered to contain the information in the whole sequence and is more easily classified by the classifier.

Finally 🤩

Thank you for the current age of knowledge sharing and the people willing to share it, thank you!

1683

1683

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?