We know that images can be treated as 2D matrices. But we can also treat an image as a function: given the location of a pixel (x, y), it returns the intensity of that pixel.

One simple way to denoise an image is a moving average. Imagine we have the image below:

Most of the pixels are dark (intensity 0) while the center is bright (intensity 90), but there are two noise points. We choose a window size (usually odd, like 3x3), take the average of the pixels inside the window, and write the result to the output at the window's center cell. Then we move the window one column over and take the average again, and so on across the whole image.

(A little thought: why an odd size rather than an even size? Because we always need an exact center cell!)
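A minimal pure-Python sketch of the moving average, assuming a grayscale image stored as a list of rows and computing outputs only where the whole k x k window fits inside the image (this border handling is our own simplification). The example image, a single hypothetical noise pixel of intensity 90 on a dark background, is made up for illustration:

```python
def moving_average(image, k=3):
    """Average every full k x k window ('valid' positions only).

    image is a list of rows; k should be odd so each window has a center cell.
    """
    h, w = len(image), len(image[0])
    r = k // 2  # distance from the center cell to the window edge
    out = []
    for y in range(r, h - r):
        row = []
        for x in range(r, w - r):
            total = sum(image[y + dy][x + dx]
                        for dy in range(-r, r + 1)
                        for dx in range(-r, r + 1))
            row.append(total / (k * k))  # result goes to the window's center
        out.append(row)
    return out


# Hypothetical example: one noise pixel of intensity 90 on a dark background
noisy = [[0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 90, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0]]
print(moving_average(noisy))  # [[10.0, 10.0, 10.0], [10.0, 10.0, 10.0], [10.0, 10.0, 10.0]]
```

Every 3x3 window that still fits contains the spike, so its value of 90 is spread over the neighborhood and drops to 10: averaging suppresses isolated noise at the cost of blurring.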

Finally, we get:

Keep this simple example in mind, because this is basically how convolution works in computer vision: a window moves around the image and we perform some operation inside the window.

Convolution is an example of a linear filter.

Here we take a function F and convolve it with G (the star sign * denotes convolution).

It works just like the process above: a window moves around the image (the function F), G is exactly the same size as that window, and at each position we take the inner product of G and the window.

This is why convolution is so powerful: we can fill the filter G with whatever numbers we like to achieve a specific goal.

Interpretation: when we convolve F with G, the output at location (x, y) is a sum over the "window" (the size of the filter G). It is basically the inner product of two vectors, one taken from F and the other from G.

(If we have a 3x3 window, then i = 1, 2, 3 and j = 1, 2, 3.)
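The formula itself was an image that did not survive. One standard way to write it (our reconstruction, not necessarily the exact notation of the original figure) indexes a (2k+1) x (2k+1) filter from -k to k, so that G(0, 0) is the filter's center. For a 3x3 filter, k = 1 and i, j run over -1, 0, 1, which are the same three positions as i = 1, 2, 3 above, just relabeled around the center:

$$
(F * G)(x, y) = \sum_{i=-k}^{k} \sum_{j=-k}^{k} G(i, j)\, F(x - i,\; y - j)
$$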

********

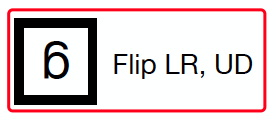

Note: the minus sign in x-i, y-j actually flips the filter (left to right and upside down).

We have a kernel G and an image F, but when we convolve them, we are actually sliding the flipped version of G around the image:

This might change the result we want if the filter is not symmetric, but we will talk about this later.

Takeaway: convolution is basically an inner product that you slide around the image.
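A minimal sketch of this sliding inner product in plain Python, with images as lists of rows indexed `image[y][x]`. The function name `convolve2d` and the zero padding at the border are our own choices, not from the original. The minus signs from the formula appear directly in the index arithmetic. Convolving a single bright pixel with a non-symmetric filter shows the flip at work: the output reproduces the filter in its original orientation, whereas cross-correlation (no flip) would produce it flipped.

```python
def convolve2d(image, kernel):
    """Same-size 2D convolution with zero padding outside the image.

    out[y][x] = sum over (i, j) of kernel[i][j] * image[y - i][x - j],
    where i, j are offsets from the kernel's center (odd-sized kernel assumed).
    """
    h, w = len(image), len(image[0])
    ry, rx = len(kernel) // 2, len(kernel[0]) // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for i in range(-ry, ry + 1):
                for j in range(-rx, rx + 1):
                    yy, xx = y - i, x - j  # the minus sign: this is the flip
                    if 0 <= yy < h and 0 <= xx < w:  # pixels outside count as 0
                        acc += kernel[i + ry][j + rx] * image[yy][xx]
            out[y][x] = acc
    return out


impulse = [[0, 0, 0],
           [0, 1, 0],
           [0, 0, 0]]
kernel = [[1, 2, 3],
          [4, 5, 6],
          [7, 8, 9]]
print(convolve2d(impulse, kernel))  # [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
```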


Let's see some examples of different filters on the same image:

With the identity filter (1 in the center, 0 everywhere else), the output is exactly the same as the input.

------

This filter shifts the image one pixel to the right. (Not to the left, because of the minus sign discussed in the note above.)

How? Take, for example, the window around the eyeball in the image. We are actually computing the inner product with the flipped filter, so every number in the window is multiplied by 0 except the one on the left, and that result is written to the center of the window. In other words, each output pixel takes the value of its left neighbor, which moves the image one pixel to the right. Doing this for every pixel in the image gives a right-translation filter.
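A sketch of the identity and shift filters in plain Python, assuming the shift filter in the missing figure has its 1 just to the right of center (which is what the explanation above implies). `convolve2d` is a helper written for this example only, using zero padding at the border:

```python
def convolve2d(image, kernel):
    # Same-size convolution, zero padding: out[y][x] = sum K[i][j] * I[y - i][x - j]
    h, w = len(image), len(image[0])
    r = len(kernel) // 2  # assumes a square, odd-sized kernel
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for i in range(-r, r + 1):
                for j in range(-r, r + 1):
                    if 0 <= y - i < h and 0 <= x - j < w:
                        out[y][x] += kernel[i + r][j + r] * image[y - i][x - j]
    return out


identity = [[0, 0, 0],
            [0, 1, 0],
            [0, 0, 0]]
shift = [[0, 0, 0],
         [0, 0, 1],  # 1 on the right; the flip turns it into "take the left neighbor"
         [0, 0, 0]]

img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
print(convolve2d(img, identity))  # [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(convolve2d(img, shift))     # [[0, 1, 2], [0, 4, 5], [0, 7, 8]]
```

The zeros in the first column appear because the pixels shifted in from outside the image are treated as 0.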

------

-----

This one is tricky. In this rotation, the center of the eye barely moves, but the nose moves far away. Each pixel is moved differently, so this rotation cannot be done by convolution: convolution is a linear filter that does the same thing at every pixel.

------

This is a cool filter. We multiply the image by 2 (brightening it) and then subtract a blurred version of the image. Let's look at this filter another way:


We get the detail image by subtracting the blurred image from the original. Then we add this detail back onto the original image, which amplifies the detail. That's how we get a sharpened image.
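A 1D sketch of the same idea (1D keeps the numbers easy to follow; images work the same way row by row and column by column). The blur kernel `[0.25, 0.5, 0.25]` and the test signal are arbitrary choices for illustration. It computes detail = original - blurred and sharpened = original + detail, then checks that a single filter, 2 x identity - blur, gives the same result:

```python
def convolve1d(signal, kernel):
    # Zero-padded, same-length 1D convolution (odd-length kernel assumed).
    n, r = len(signal), len(kernel) // 2
    out = []
    for x in range(n):
        acc = 0.0
        for i in range(-r, r + 1):
            if 0 <= x - i < n:
                acc += kernel[i + r] * signal[x - i]
        out.append(acc)
    return out


signal = [0, 0, 4, 4, 4, 0, 0]
blur = [0.25, 0.5, 0.25]            # a small blur kernel (our choice)
blurred = convolve1d(signal, blur)  # [0, 1, 3, 4, 3, 1, 0]
detail = [s - b for s, b in zip(signal, blurred)]    # [0, -1, 1, 0, 1, -1, 0]
sharpened = [s + d for s, d in zip(signal, detail)]  # [0, -1, 5, 4, 5, -1, 0]

# The same result from one filter: 2 * identity - blur
sharpen = [2 * d - b for d, b in zip([0, 1, 0], blur)]  # [-0.25, 1.5, -0.25]
assert convolve1d(signal, sharpen) == sharpened
```

The overshoot on both sides of each edge (the -1 and the 5) is exactly the amplified detail that makes edges look crisper.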


Here are some properties of convolution. We will not prove them; they are just good to know.

The first three properties hold because of the minus sign mentioned above.

-- Commutative property: this one is a little counter-intuitive. We use the filter to go through the image, so how can we use the image to move over the filter? The answer is, we don't actually do that. But the property is mathematically true.

-- Associative property: an important rule when we want to make our code faster, since one order of operations may be cheaper than another.

-- Distributive property: this also makes things faster. Convolution is computationally expensive, so instead of convolving the image with several filters and adding the results, we can add the filters first and convolve only once.

-- Shift invariance: shifting the image and then applying the filter is equivalent to applying the filter and then shifting the filtered image.
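These properties are easy to check numerically. A minimal sketch using full 1D convolution on small integer sequences (1D keeps it short; the same identities hold in 2D). The sequences `f`, `g`, `h` are arbitrary examples:

```python
def conv_full(f, g):
    """Full 1D convolution: (f * g)[n] = sum_k f[k] * g[n - k]."""
    out = [0] * (len(f) + len(g) - 1)
    for k, fk in enumerate(f):
        for m, gm in enumerate(g):
            out[k + m] += fk * gm  # f[k] * g[m] contributes to index n = k + m
    return out


f, g, h = [1, 2, 3], [0, 1, -1], [2, 0, 1]

# Commutative: f * g == g * f
assert conv_full(f, g) == conv_full(g, f)
# Associative: (f * g) * h == f * (g * h)
assert conv_full(conv_full(f, g), h) == conv_full(f, conv_full(g, h))
# Distributive: f * (g + h) == f * g + f * h  (g and h have equal length here)
g_plus_h = [a + b for a, b in zip(g, h)]
assert conv_full(f, g_plus_h) == [a + b for a, b in zip(conv_full(f, g), conv_full(f, h))]
# Shift invariance: shifting f by one sample shifts f * g by one sample
assert conv_full([0] + f, g) == [0] + conv_full(f, g)
print(conv_full(f, g))  # [0, 1, 1, 1, -3]
```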

Little thoughts:

In convolution, we do the same thing at every pixel as the filter moves around, so there is a lot of redundancy. How do we make this more efficient? This is why GPUs are so important in machine learning. GPUs are hardware designed to do this kind of work in parallel: each window can be handled by a separate thread, and thousands of these jobs run at the same time. That's why they are so fast.

The only difference between cross-correlation and convolution is that cross-correlation does not flip the filter.
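A 1D sketch of the difference, assuming zero padding and an odd-length filter (the function names are our own). On a single bright sample, correlation writes the filter out reversed while convolution writes it out as-is, and correlating with the reversed filter gives exactly the convolution:

```python
def correlate1d(signal, kernel):
    """Cross-correlation: out[x] = sum_i kernel[i] * signal[x + i] (no flip)."""
    n, r = len(signal), len(kernel) // 2
    return [sum(kernel[i + r] * signal[x + i]
                for i in range(-r, r + 1) if 0 <= x + i < n)
            for x in range(n)]


def convolve1d(signal, kernel):
    """Convolution: out[x] = sum_i kernel[i] * signal[x - i] (filter flipped)."""
    n, r = len(signal), len(kernel) // 2
    return [sum(kernel[i + r] * signal[x - i]
                for i in range(-r, r + 1) if 0 <= x - i < n)
            for x in range(n)]


impulse = [0, 0, 1, 0, 0]
k = [1, 2, 3]                   # not symmetric, so the flip matters
print(correlate1d(impulse, k))  # [0, 3, 2, 1, 0]
print(convolve1d(impulse, k))   # [0, 1, 2, 3, 0]
assert correlate1d(impulse, k[::-1]) == convolve1d(impulse, k)
```

For a symmetric filter such as the Gaussian, `k[::-1] == k`, so the two operations give the same result.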

(Gaussian Filter is symmetric)


As sigma increases, the image gets blurrier.

If the mean is not zero, the filter causes a shift plus a blur.
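A sketch of building a sampled 2D Gaussian filter, normalized so its weights sum to 1. The grid `radius` and the optional `mean` offset are hypothetical parameters added for illustration: a larger `sigma` spreads the weight away from the center (more blur), and a nonzero mean moves the peak off the center cell, which is what produces the extra shift:

```python
import math


def gaussian_kernel(sigma, radius, mean=(0.0, 0.0)):
    """Sampled 2D Gaussian on a (2*radius+1) x (2*radius+1) grid, summing to 1.

    mean = (mx, my) is a hypothetical parameter that moves the peak off the
    center cell, only to illustrate the 'shift plus blur' remark.
    """
    mx, my = mean
    k = [[math.exp(-((x - mx) ** 2 + (y - my) ** 2) / (2 * sigma ** 2))
          for x in range(-radius, radius + 1)]
         for y in range(-radius, radius + 1)]
    total = sum(sum(row) for row in k)
    return [[v / total for v in row] for row in k]


narrow = gaussian_kernel(sigma=1.0, radius=3)
wide = gaussian_kernel(sigma=2.0, radius=3)
print(narrow[3][3] > wide[3][3])  # True: larger sigma leaves less weight in the center

shifted = gaussian_kernel(sigma=1.0, radius=3, mean=(1.0, 0.0))
# The largest weight is now one cell right of center (row 3, column 4).
```

With zero mean the kernel is unchanged by flipping it left-right and up-down, so convolution and cross-correlation with it agree.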

For a single window, we have to multiply every number in it, so with an m x m filter that is m^2 multiplications. We do this for every pixel, and an n x n image has n^2 pixels. So the time complexity is O(n^2 m^2), which is really expensive.

Here is one way to speed this up. It does not work for all filters, but some filters, like the Gaussian filter, allow it:

The PDF of a 2D Gaussian can be written as the product of two 1D Gaussian PDFs, one in x and one in y. We call such a filter separable, and we also take advantage of the associative property: the order of the operations doesn't matter.
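A sketch that checks separability numerically, assuming zero padding and a 5-tap Gaussian with sigma = 1 (both arbitrary choices; the test image is made up). The m x m kernel is built as the outer product of the 1D kernel, which after normalization is the same as sampling the 2D Gaussian directly. Convolving with the m x 1 column and then the 1 x m row matches convolving once with the full m x m kernel, up to floating-point rounding:

```python
import math


def conv2d(image, kernel):
    # Zero-padded, same-size 2D convolution; kernel may be m x m, m x 1 or 1 x m (odd m).
    h, w = len(image), len(image[0])
    ry, rx = len(kernel) // 2, len(kernel[0]) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for i in range(-ry, ry + 1):
                for j in range(-rx, rx + 1):
                    if 0 <= y - i < h and 0 <= x - j < w:
                        out[y][x] += kernel[i + ry][j + rx] * image[y - i][x - j]
    return out


def gaussian_1d(sigma, radius):
    g = [math.exp(-x * x / (2 * sigma ** 2)) for x in range(-radius, radius + 1)]
    s = sum(g)
    return [v / s for v in g]


g = gaussian_1d(sigma=1.0, radius=2)           # m = 5 taps
vertical = [[v] for v in g]                    # m x 1 window
horizontal = [g]                               # 1 x m window
full_kernel = [[a * b for b in g] for a in g]  # m x m outer product = 2D Gaussian

image = [[(3 * y + 7 * x) % 10 for x in range(8)] for y in range(6)]
direct = conv2d(image, full_kernel)                      # about n^2 * m^2 multiplications
separable = conv2d(conv2d(image, vertical), horizontal)  # about 2 * n^2 * m
max_diff = max(abs(a - b) for ra, rb in zip(direct, separable) for a, b in zip(ra, rb))
print(max_diff < 1e-9)  # True
```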

For the vertical window (size m x 1), the convolution takes n^2 m multiplications; then, with the horizontal window (size 1 x m), we again need n^2 m multiplications. In total that is 2 n^2 m, so the complexity is O(n^2 m).
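To put numbers on this, take a hypothetical 1000 x 1000 image (n = 1000) and a 15 x 15 filter (m = 15), sizes chosen only for illustration:

$$
n^2 m^2 = 10^6 \cdot 225 = 2.25 \times 10^8,
\qquad
2 n^2 m = 2 \cdot 10^6 \cdot 15 = 3 \times 10^7,
\qquad
\frac{n^2 m^2}{2 n^2 m} = \frac{m}{2} = 7.5
$$

So the separable version saves a factor of m/2, and the saving grows with the filter size.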

Image gradients are another place where it pays to regard an image as a function.

This makes sense when we compare the x and y derivatives around the column after Alma. Going from left to right, the intensity changes a lot across the column, and the x-derivative shows that change around the column. But going up and down along the column, there is little change in the vertical direction, so the y-derivative is nearly empty there.

The filter [-1, 1] is just the difference in intensity between two neighboring pixels, which gives the gradient (the discrete derivative) of the image function.
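A sketch of the [-1, 1] filter in plain Python, applied as a sliding inner product without the flip, so each output is simply I(x+1, y) - I(x, y) (with the flip of true convolution, the sign would reverse and the output would sit one pixel over). The test image is a hypothetical bright vertical stripe, loosely mimicking the column discussed above:

```python
def dx(image):
    # Slide [-1, 1] horizontally as an inner product: I(x+1, y) - I(x, y)
    return [[row[x + 1] - row[x] for x in range(len(row) - 1)] for row in image]


def dy(image):
    # The same filter turned vertical: I(x, y+1) - I(x, y)
    return [[image[y + 1][x] - image[y][x] for x in range(len(image[0]))]
            for y in range(len(image) - 1)]


# A bright vertical stripe (like a column) on a dark background
stripe = [[0, 0, 9, 9, 0, 0] for _ in range(4)]
print(dx(stripe))  # every row: [0, 9, 0, -9, 0], strong response at the stripe's edges
print(dy(stripe))  # all zeros: nothing changes going up and down the stripe
```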

So the gradient is a way of measuring how the image changes.

So we use the Laplacian filter: it first blurs the image with a Gaussian filter and then takes the second derivative. This is one of the most common filters for finding edges.

Another type of Laplacian Filter:

For each pixel, if the sum of the second derivatives is greater than a threshold, we put a white pixel at the corresponding location in the output image.
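A sketch of this thresholding step, assuming the common 4-neighbor discrete Laplacian kernel (the figure for this filter is missing, so the exact kernel is an assumption) and skipping the Gaussian pre-blur for brevity. Border pixels are simply left black. The step-edge test image is made up:

```python
LAPLACIAN = [[0, 1, 0],
             [1, -4, 1],
             [0, 1, 0]]  # assumed 4-neighbor Laplacian: sum of d2/dx2 and d2/dy2


def laplacian_edges(image, threshold):
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):  # skip the border for simplicity
        for x in range(1, w - 1):
            # The kernel is symmetric, so convolution and correlation agree here.
            total = sum(LAPLACIAN[i + 1][j + 1] * image[y + i][x + j]
                        for i in (-1, 0, 1) for j in (-1, 0, 1))
            if total > threshold:
                out[y][x] = 255  # white pixel in the output image
    return out


# Hypothetical step edge: dark on the left, bright on the right
step = [[0, 0, 0, 10, 10, 10] for _ in range(5)]
edges = laplacian_edges(step, threshold=5)
print(edges[2])  # [0, 0, 255, 0, 0, 0]
```

On this step the response is +10 on the dark side of the edge and -10 on the bright side, so a positive threshold marks only the dark side; thresholding the absolute value instead would mark both.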
