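Background: this was my first time using YOLOv8, so I'm recording the pitfalls I ran into. This guide mainly follows the article "Training YOLOv8 on Your Own Dataset", and also records the other problems I hit along the way and how I solved them.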

Code download: ultralytics/ultralytics: NEW - YOLOv8 🚀 in PyTorch > ONNX > OpenVINO > CoreML > TFLite (github.com)

After unzipping, rename the folder. I renamed 'ultralytics-main' to 'yolov8'.


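I. Setting up the conda environment

1. Create a new environment

Press Win+R, type cmd to open a command prompt, then run:

conda create -n yolov8 python=3.8

2. Activate the environment: conda activate yolov8

3. Change into the project folder: cd yolov8

4. Install the package: pip install ultralytics -i https://pypi.tuna.tsinghua.edu.cn/simple

(Some posts also say to install from requirements.txt, but I couldn't find that file in the downloaded folder. It turns out the dependencies are now declared by the ultralytics package itself, so installing ultralytics is all that's needed. The -i option simply points pip at the Tsinghua University PyPI mirror.)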
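Before moving on, it can help to confirm that the packages import and that PyTorch can see the GPU. The snippet below is a minimal sketch that uses only the standard library plus an optional `torch` import; `check_env` is a hypothetical helper, not part of ultralytics.

```python
import importlib.util


def check_env(packages=("ultralytics", "torch")):
    """Report which packages are importable and, if torch is installed, whether it sees a CUDA GPU."""
    report = {name: importlib.util.find_spec(name) is not None for name in packages}
    if report.get("torch"):
        import torch  # imported only when it is actually installed
        report["cuda"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for name, ok in check_env().items():
        print(f"{name}: {'OK' if ok else 'missing'}")
```

If `cuda` comes back False on a machine with an NVIDIA GPU, the installed PyTorch build may be CPU-only; the install selector on pytorch.org gives the command for a CUDA-enabled build.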

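II. Preparing the dataset

2.1 Annotating the data

1. Prepare your own images.

2. Install labelImg (an image annotation tool) in the current environment:

pip install labelimg -i https://pypi.tuna.tsinghua.edu.cn/simple

I installed version 1.8.6.

3. Launch labelImg from cmd by typing labelimg: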


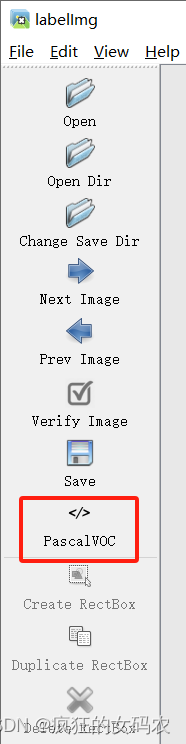

4. Annotate the images in the labelImg window. I saved them in VOC format, i.e. each annotation file has the .xml extension.
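For reference, a VOC annotation is an ordinary XML file. The snippet below parses a trimmed-down example (the file name and values are made up for illustration; labelImg writes a few more tags such as folder, path and truncated) with `xml.etree.ElementTree`, reading the same tags that the conversion script in section 2.3 relies on. `read_voc` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# A trimmed-down annotation in the PascalVOC layout (values are illustrative)
SAMPLE_XML = """<annotation>
    <filename>0001.jpg</filename>
    <size><width>640</width><height>480</height><depth>3</depth></size>
    <object>
        <name>dog</name>
        <difficult>0</difficult>
        <bndbox><xmin>64</xmin><ymin>48</ymin><xmax>192</xmax><ymax>240</ymax></bndbox>
    </object>
</annotation>"""


def read_voc(xml_text):
    """Return (width, height, [(class_name, xmin, ymin, xmax, ymax), ...]) from VOC XML text."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    width, height = int(size.find("width").text), int(size.find("height").text)
    boxes = []
    for obj in root.iter("object"):
        bb = obj.find("bndbox")
        coords = [int(float(bb.find(tag).text)) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((obj.find("name").text, *coords))
    return width, height, boxes


print(read_voc(SAMPLE_XML))  # (640, 480, [('dog', 64, 48, 192, 240)])
```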

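2.2 Splitting the dataset

5. Randomly split the dataset into train, test and val sets

Create a folder named mydata under the project folder yolov8. Inside mydata, create four folders: dataset, images, labels and xml. Put all the images into images and the annotated XML files into xml.

Then create a file named split_train_val.py in mydata to split the data into training, test and validation sets. The code is as follows: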

```python
# coding:utf-8
import os
import random
import argparse

parser = argparse.ArgumentParser()
# Folder containing the XML annotations; change it to match your data (VOC datasets usually keep them under Annotations)
parser.add_argument('--xml_path', default='xml', type=str, help='input xml label path')
# Output folder for the split lists (in the standard VOC layout this is ImageSets/Main)
parser.add_argument('--txt_path', default='dataset', type=str, help='output txt label path')
opt = parser.parse_args()

trainval_percent = 0.9  # fraction of all images that goes to train+val; the rest becomes test
train_percent = 0.7     # fraction of train+val that goes to train; the rest becomes val

xmlfilepath = opt.xml_path
txtsavepath = opt.txt_path
# Only consider .xml files so stray files in the folder are not picked up
total_xml = [f for f in os.listdir(xmlfilepath) if f.lower().endswith('.xml')]
os.makedirs(txtsavepath, exist_ok=True)

num = len(total_xml)
list_index = range(num)
tv = int(num * trainval_percent)
tr = int(tv * train_percent)
trainval = set(random.sample(list_index, tv))     # sets make the membership tests below O(1)
train = set(random.sample(sorted(trainval), tr))  # random.sample needs a sequence, not a set

with open(os.path.join(txtsavepath, 'trainval.txt'), 'w') as file_trainval, \
        open(os.path.join(txtsavepath, 'test.txt'), 'w') as file_test, \
        open(os.path.join(txtsavepath, 'train.txt'), 'w') as file_train, \
        open(os.path.join(txtsavepath, 'val.txt'), 'w') as file_val:
    for i in list_index:
        name = os.path.splitext(total_xml[i])[0] + '\n'  # file name without the .xml extension
        if i in trainval:
            file_trainval.write(name)
            if i in train:
                file_train.write(name)
            else:
                file_val.write(name)
        else:
            file_test.write(name)
```

Running this script writes the image names of each subset into dataset/train.txt, val.txt, test.txt and trainval.txt.
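The two ratios in split_train_val.py are nested: `train_percent` applies to the train+val portion, not to the whole dataset, so the effective split is roughly 63% train, 27% val and 10% test. The hypothetical helper below only mirrors the script's integer arithmetic to make that concrete.

```python
def split_counts(num, trainval_percent=0.9, train_percent=0.7):
    """Mirror the arithmetic of split_train_val.py and return (train, val, test) counts."""
    tv = int(num * trainval_percent)  # images in train + val
    tr = int(tv * train_percent)      # images in train
    return tr, tv - tr, num - tv


print(split_counts(1000))  # (630, 270, 100)
```

Because the script truncates with `int()` (and floating-point products can land just below a whole number), small datasets may end up an image off from these percentages.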

2.3 Converting the dataset format

6. Convert the VOC-format dataset to YOLO format

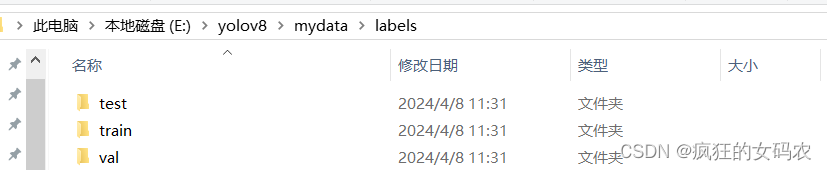

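① Create train, val and test subfolders under the labels folder (voc_label.py below also creates them if they are missing).

② Create a file named voc_label.py in the mydata folder with the following content, then run it: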

```python
# -*- coding: utf-8 -*-
import xml.etree.ElementTree as ET
import os

sets = ['train', 'val', 'test']
classes = ["dog", "cat", "rabbit", "people", "car"]  # change to your own classes
abs_path = os.getcwd()
print(abs_path)


def convert(size, box):
    """Convert a VOC box (xmin, xmax, ymin, ymax) in pixels to YOLO (x_center, y_center, w, h) normalized to 0-1."""
    dw = 1. / size[0]
    dh = 1. / size[1]
    # The "- 1" is inherited from the original Darknet voc_label.py (VOC pixel coordinates start at 1)
    x = (box[0] + box[1]) / 2.0 - 1
    y = (box[2] + box[3]) / 2.0 - 1
    w = box[1] - box[0]
    h = box[3] - box[2]
    return x * dw, y * dh, w * dw, h * dh


def convert_annotation(image_id, image_set):
    in_path = os.path.join('xml', '%s.xml' % image_id)
    out_path = os.path.join('labels', image_set, '%s.txt' % image_id)
    with open(in_path, encoding='UTF-8') as in_file, open(out_path, 'w') as out_file:
        root = ET.parse(in_file).getroot()
        size = root.find('size')
        w = int(size.find('width').text)
        h = int(size.find('height').text)
        for obj in root.iter('object'):
            difficult = obj.find('difficult')
            # Some tools omit <difficult>; treat a missing tag as 0
            difficult = int(difficult.text) if difficult is not None else 0
            cls = obj.find('name').text
            if cls not in classes or difficult == 1:
                continue
            cls_id = classes.index(cls)
            xmlbox = obj.find('bndbox')
            b1, b2, b3, b4 = (float(xmlbox.find(k).text) for k in ('xmin', 'xmax', 'ymin', 'ymax'))
            # Clip boxes that extend past the right/bottom image border
            b2 = min(b2, w)
            b4 = min(b4, h)
            bb = convert((w, h), (b1, b2, b3, b4))
            out_file.write(str(cls_id) + " " + " ".join(str(a) for a in bb) + '\n')


for image_set in sets:
    os.makedirs(os.path.join('labels', image_set), exist_ok=True)
    with open(os.path.join('dataset', '%s.txt' % image_set)) as f:
        image_ids = f.read().strip().split()
    with open(os.path.join('labels', '%s.txt' % image_set), 'w') as list_file:
        for image_id in image_ids:
            # os.path.join avoids the invalid '\i' escape in the original '\images\%s.jpg' string
            list_file.write(os.path.join(abs_path, 'images', '%s.jpg' % image_id) + '\n')
            convert_annotation(image_id, image_set)
```

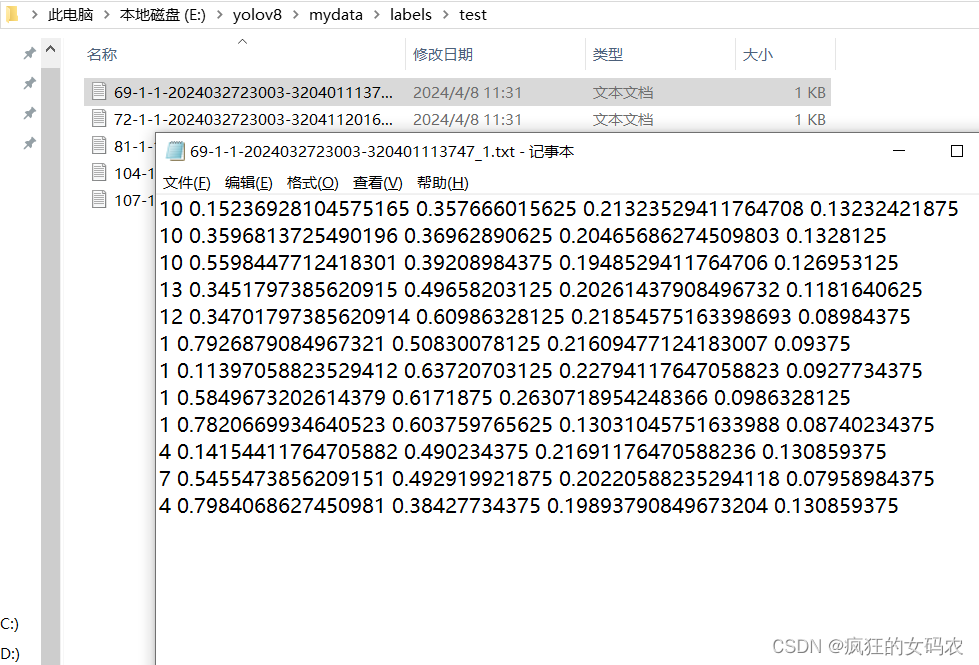

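Following these steps converts the VOC annotations to YOLO format and saves them under yolov8/mydata/labels in the train, test and val folders. As the figure shows, each line describes one bounding box: the first number is the class index, and the next four are the box's center x, center y, width and height, each normalized by the image width or height.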
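A worked example makes the normalization concrete. The sketch below converts one box in a hypothetical 640x480 image; the helper names are illustrative. Unlike voc_label.py, it omits the `- 1` offset, so its center values come out one pixel's worth larger than the script's.

```python
def voc_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax):
    """Convert a pixel box in corner format to YOLO format: normalized center x, center y, width, height."""
    x_center = (xmin + xmax) / 2 / img_w
    y_center = (ymin + ymax) / 2 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return x_center, y_center, width, height


def yolo_line(class_id, box):
    """Format one label line: the class index followed by the four normalized values."""
    return f"{class_id} " + " ".join(f"{v:.6f}" for v in box)


box = voc_to_yolo(640, 480, xmin=64, ymin=48, xmax=192, ymax=240)
print(yolo_line(0, box))  # 0 0.200000 0.300000 0.200000 0.400000
```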

Likewise, create train, test and val folders under images and put each image into the folder of the subset it was assigned to.
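Sorting the images by hand is error-prone, so here is a small sketch that copies every image listed in dataset/train.txt, val.txt and test.txt into the matching images/ subfolder. It assumes the layout from this post (run from mydata, all images stored as .jpg directly under images/); `copy_split_images` is a hypothetical name. Ultralytics locates each image's label by replacing the images directory in the path with labels, so images/train/0001.jpg is paired with labels/train/0001.txt; keeping the two trees parallel is what makes that work.

```python
import os
import shutil


def copy_split_images(root=".", splits=("train", "val", "test"), ext=".jpg"):
    """Copy images/<id><ext> into images/<split>/ for every id listed in dataset/<split>.txt."""
    counts = {}
    for split in splits:
        dst_dir = os.path.join(root, "images", split)
        os.makedirs(dst_dir, exist_ok=True)
        with open(os.path.join(root, "dataset", f"{split}.txt"), encoding="utf-8") as f:
            ids = [line.strip() for line in f if line.strip()]
        for image_id in ids:
            src = os.path.join(root, "images", image_id + ext)
            shutil.copy2(src, os.path.join(dst_dir, image_id + ext))  # copy2 also keeps timestamps
        counts[split] = len(ids)
    return counts
```

Calling `copy_split_images()` from inside mydata returns the number of images copied per split. Copying rather than moving keeps the originals in place at the cost of some disk space.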

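2.4 Creating the dataset config

The mydata folder now contains the images and labels folders. I also have a test_images folder holding a few images for testing, but that folder is optional.

Next, create a data_nc5.yaml file. You can name it anything, as long as the extension is .yaml. Its content is: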

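```yaml
# paths to the training and validation images
train: E:/yolov8/mydata/images/train
val: E:/yolov8/mydata/images/val

# number of classes
nc: 5

# class names (same order as the classes list in voc_label.py)
names: ["dog", "cat", "rabbit", "people", "car"]
```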

The dataset is now ready.
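Before training, it can save time to scan the label files for class ids that are not smaller than nc or for coordinates outside 0-1, which are easy to introduce after editing the `classes` list. `check_labels` is a hypothetical stand-alone helper; Ultralytics also validates labels when it builds its label cache and reports corrupt files, so treat this only as an earlier check.

```python
import os


def check_labels(label_dir, nc):
    """Scan YOLO .txt label files and return human-readable problem descriptions."""
    problems = []
    for fname in sorted(os.listdir(label_dir)):
        if not fname.endswith(".txt"):
            continue  # skip caches and other non-label files
        with open(os.path.join(label_dir, fname), encoding="utf-8") as f:
            for lineno, line in enumerate(f, start=1):
                parts = line.split()
                if not parts:
                    continue  # blank lines are harmless
                where = f"{fname}:{lineno}"
                if len(parts) != 5:
                    problems.append(f"{where}: expected 5 values, got {len(parts)}")
                    continue
                cls_id, coords = parts[0], parts[1:]
                if not cls_id.isdigit() or int(cls_id) >= nc:
                    problems.append(f"{where}: class id {cls_id} is outside 0..{nc - 1}")
                try:
                    values = [float(v) for v in coords]
                except ValueError:
                    problems.append(f"{where}: non-numeric coordinate")
                    continue
                if any(not 0.0 <= v <= 1.0 for v in values):
                    problems.append(f"{where}: coordinates are not normalized to 0-1")
    return problems
```

Point it at labels/train, labels/val and so on (for example `check_labels("labels/train", nc=5)` from inside mydata) rather than at labels/ itself, because voc_label.py also writes the image lists train.txt, val.txt and test.txt there and those would be flagged.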

III. Training

3.1 Create train.py

Create a train.py file in the yolov8 folder with the following content:

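```python
from ultralytics import YOLO

if __name__ == '__main__':  # required on Windows when the dataloader uses worker processes (workers > 0)
    # yolov8n.yaml builds a YOLOv8n model from scratch; 'yolov8n.pt' would start from pretrained weights instead
    model = YOLO('yolov8n.yaml')
    # Arguments passed here override default.yaml (e.g. epochs=300 instead of 100)
    results = model.train(data='./mydata/data_nc5.yaml', batch=8, epochs=300)
```

3.2 Modifying the configuration files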

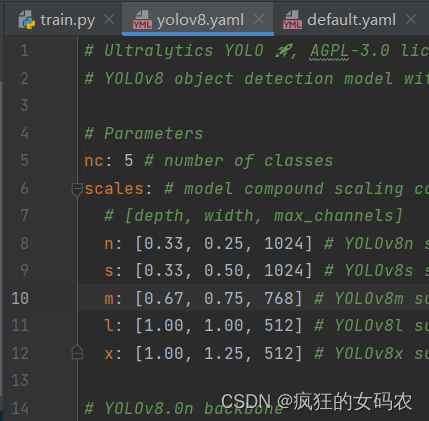

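First, in yolov8\ultralytics\cfg\models\v8\ there is a file named yolov8.yaml. Change nc: 80 in it to the number of classes in our dataset, nc: 5. (Ultralytics also overrides the model's nc with the value from the dataset yaml when it builds the model, so this edit is a safeguard rather than strictly required.)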

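In yolov8\ultralytics\cfg\ there is a default.yaml file; adjust its parameters as needed. For explanations of these parameters, see the article "YOLOv8 Parameters Explained".

I trained on a GPU under Windows and no longer remember exactly which parameters I changed, so here is the whole file. Compared with the stock file, the values that differ are likely batch: 8 (default 16), device: 0, workers: 0 (default 8), pretrained: False and amp: False (both default True).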

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# Default training settings and hyperparameters for medium-augmentation COCO training

task: detect # (str) YOLO task, i.e. detect, segment, classify, pose
mode: train # (str) YOLO mode, i.e. train, val, predict, export, track, benchmark

# Train settings -------------------------------------------------------------------------------------------------------
model: # (str, optional) path to model file, i.e. yolov8n.pt, yolov8n.yaml
data: # (str, optional) path to data file, i.e. coco128.yaml
epochs: 100 # (int) number of epochs to train for
time: # (float, optional) number of hours to train for, overrides epochs if supplied
patience: 100 # (int) epochs to wait for no observable improvement for early stopping of training
batch: 8 # (int) number of images per batch (-1 for AutoBatch)
imgsz: 640 # (int | list) input images size as int for train and val modes, or list[w,h] for predict and export modes
save: True # (bool) save train checkpoints and predict results
save_period: -1 # (int) Save checkpoint every x epochs (disabled if < 1)
cache: False # (bool) True/ram, disk or False. Use cache for data loading
device: 0 # (int | str | list, optional) device to run on, i.e. cuda device=0 or device=0,1,2,3 or device=cpu
workers: 0 # (int) number of worker threads for data loading (per RANK if DDP)
project: # (str, optional) project name
name: # (str, optional) experiment name, results saved to 'project/name' directory
exist_ok: False # (bool) whether to overwrite existing experiment
pretrained: False # (bool | str) whether to use a pretrained model (bool) or a model to load weights from (str)
optimizer: auto # (str) optimizer to use, choices=[SGD, Adam, Adamax, AdamW, NAdam, RAdam, RMSProp, auto]
verbose: True # (bool) whether to print verbose output
seed: 0 # (int) random seed for reproducibility
deterministic: True # (bool) whether to enable deterministic mode
single_cls: False # (bool) train multi-class data as single-class
rect: False # (bool) rectangular training if mode='train' or rectangular validation if mode='val'
cos_lr: False # (bool) use cosine learning rate scheduler
close_mosaic: 10 # (int) disable mosaic augmentation for final epochs (0 to disable)
resume: False # (bool) resume training from last checkpoint
amp: False # (bool) Automatic Mixed Precision (AMP) training, choices=[True, False], True runs AMP check
fraction: 1.0 # (float) dataset fraction to train on (default is 1.0, all images in train set)
profile: False # (bool) profile ONNX and TensorRT speeds during training for loggers
freeze: None # (int | list, optional) freeze first n layers, or freeze list of layer indices during training
multi_scale: False # (bool) Whether to use multiscale during training
# Segmentation
overlap_mask: True # (bool) masks should overlap during training (segment train only)
mask_ratio: 4 # (int) mask downsample ratio (segment train only)
# Classification
dropout: 0.0 # (float) use dropout regularization (classify train only)

# Val/Test settings ----------------------------------------------------------------------------------------------------
val: True # (bool) validate/test during training
split: val # (str) dataset split to use for validation, i.e. 'val', 'test' or 'train'
save_json: False # (bool) save results to JSON file
save_hybrid: False # (bool) save hybrid version of labels (labels + additional predictions)
conf: # (float, optional) object confidence threshold for detection (default 0.25 predict, 0.001 val)
iou: 0.7 # (float) intersection over union (IoU) threshold for NMS
max_det: 300 # (int) maximum number of detections per image
half: False # (bool) use half precision (FP16)
dnn: False # (bool) use OpenCV DNN for ONNX inference
plots: True # (bool) save plots and images during train/val

# Predict settings -----------------------------------------------------------------------------------------------------
source: # (str, optional) source directory for images or videos
vid_stride: 1 # (int) video frame-rate stride
stream_buffer: False # (bool) buffer all streaming frames (True) or return the most recent frame (False)
visualize: False # (bool) visualize model features
augment: False # (bool) apply image augmentation to prediction sources
agnostic_nms: False # (bool) class-agnostic NMS
classes: # (int | list[int], optional) filter results by class, i.e. classes=0, or classes=[0,2,3]
retina_masks: False # (bool) use high-resolution segmentation masks
embed: # (list[int], optional) return feature vectors/embeddings from given layers

# Visualize settings ---------------------------------------------------------------------------------------------------
show: False # (bool) show predicted images and videos if environment allows
save_frames: False # (bool) save predicted individual video frames
save_txt: False # (bool) save results as .txt file
save_conf: False # (bool) save results with confidence scores
save_crop: False # (bool) save cropped images with results
show_labels: True # (bool) show prediction labels, i.e. 'person'
show_conf: True # (bool) show prediction confidence, i.e. '0.99'
show_boxes: True # (bool) show prediction boxes
line_width: # (int, optional) line width of the bounding boxes. Scaled to image size if None.

# Export settings ------------------------------------------------------------------------------------------------------
format: torchscript # (str) format to export to, choices at https://docs.ultralytics.com/modes/export/#export-formats
keras: False # (bool) use Keras
optimize: False # (bool) TorchScript: optimize for mobile
int8: False # (bool) CoreML/TF INT8 quantization
dynamic: False # (bool) ONNX/TF/TensorRT: dynamic axes
simplify: False # (bool) ONNX: simplify model
opset: # (int, optional) ONNX: opset version
workspace: 4 # (int) TensorRT: workspace size (GB)
nms: False # (bool) CoreML: add NMS

# Hyperparameters ------------------------------------------------------------------------------------------------------
lr0: 0.01 # (float) initial learning rate (i.e. SGD=1E-2, Adam=1E-3)
lrf: 0.01 # (float) final learning rate (lr0 * lrf)
momentum: 0.937 # (float) SGD momentum/Adam beta1
weight_decay: 0.0005 # (float) optimizer weight decay 5e-4
warmup_epochs: 3.0 # (float) warmup epochs (fractions ok)
warmup_momentum: 0.8 # (float) warmup initial momentum
warmup_bias_lr: 0.1 # (float) warmup initial bias lr
box: 7.5 # (float) box loss gain
cls: 0.5 # (float) cls loss gain (scale with pixels)
dfl: 1.5 # (float) dfl loss gain
pose: 12.0 # (float) pose loss gain
kobj: 1.0 # (float) keypoint obj loss gain
label_smoothing: 0.0 # (float) label smoothing (fraction)
nbs: 64 # (int) nominal batch size
hsv_h: 0.015 # (float) image HSV-Hue augmentation (fraction)
hsv_s: 0.7 # (float) image HSV-Saturation augmentation (fraction)
hsv_v: 0.4 # (float) image HSV-Value augmentation (fraction)
degrees: 0.0 # (float) image rotation (+/- deg)
translate: 0.1 # (float) image translation (+/- fraction)
scale: 0.5 # (float) image scale (+/- gain)
shear: 0.0 # (float) image shear (+/- deg)
perspective: 0.0 # (float) image perspective (+/- fraction), range 0-0.001
flipud: 0.0 # (float) image flip up-down (probability)
fliplr: 0.5 # (float) image flip left-right (probability)
bgr: 0.0 # (float) image channel BGR (probability)
mosaic: 1.0 # (float) image mosaic (probability)
mixup: 0.0 # (float) image mixup (probability)
copy_paste: 0.0 # (float) segment copy-paste (probability)
auto_augment: randaugment # (str) auto augmentation policy for classification (randaugment, autoaugment, augmix)
erasing: 0.4 # (float) probability of random erasing during classification training (0-0.9), 0 means no erasing, must be less than 1.0.
crop_fraction: 1.0 # (float) image crop fraction for classification (0.1-1), 1.0 means no crop, must be greater than 0.

# Custom config.yaml ---------------------------------------------------------------------------------------------------
cfg: # (str, optional) for overriding defaults.yaml

# Tracker settings ------------------------------------------------------------------------------------------------------
tracker: botsort.yaml # (str) tracker type, choices=[botsort.yaml, bytetrack.yaml]
```

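3.3 Starting training

Activate the environment in the terminal: conda activate yolov8

Change into the yolov8 folder: cd yolov8

Start training: python train.py (if you get "No module named ****", just pip install the missing package)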

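3.4 Checking the training results

After training finishes, the results are saved under runs/detect/ (the first run goes to runs/detect/train, with the checkpoints in weights/best.pt and weights/last.pt), where you can inspect how well training went.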
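Besides the plots, each run folder contains a results.csv with one row per epoch. The sketch below picks the epoch with the highest mAP50-95; the column names `epoch` and `metrics/mAP50-95(B)` are what YOLOv8 detection runs are expected to use, but check the header of your own file. `best_epoch` is a hypothetical helper.

```python
import csv


def best_epoch(csv_path, metric="metrics/mAP50-95(B)"):
    """Return (epoch, value) for the row with the highest `metric` in a results.csv file."""
    with open(csv_path, newline="", encoding="utf-8") as f:
        rows = [row for row in csv.reader(f) if row]
    # Some ultralytics versions pad the column names and values with spaces, so strip them
    header = [name.strip() for name in rows[0]]
    metric_col, epoch_col = header.index(metric), header.index("epoch")
    best = max(rows[1:], key=lambda row: float(row[metric_col]))
    return int(float(best[epoch_col])), float(best[metric_col])
```

For example, `best_epoch("runs/detect/train/results.csv")`. Note that best.pt is selected by a fitness score that weights mAP50-95 at 0.9 and mAP50 at 0.1, so it is not guaranteed to come from exactly this epoch.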

IV. Testing

Create a file named predict.py in the yolov8 folder with the following content (for the other parameters, see "YOLOv8 Parameters Explained"):

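```python
from ultralytics import YOLO

if __name__ == '__main__':
    # Load the best checkpoint of the training run (later runs are saved as train2, train3, ...)
    model = YOLO('./runs/detect/train/weights/best.pt')
    # save=True writes annotated images, save_txt=True writes the boxes as YOLO-format .txt files,
    # conf=0.4 discards detections below 40% confidence
    results = model.predict(source="./mydata/images/val", save=True, save_txt=True, conf=0.4)
```

Then run it in the terminal: python predict.py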

When it finishes, the test results are saved in runs/detect/predict.
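With save_txt=True, each image also gets a .txt file under runs/detect/predict/labels in the same normalized YOLO format as the training labels, plus a trailing confidence column if save_conf=True is passed. To draw or crop those boxes with other tools they have to be scaled back to pixels; the hypothetical helper below does that for one line, assuming you know the image's width and height.

```python
def yolo_to_pixels(line, img_w, img_h):
    """Parse 'cls cx cy w h [conf]' into (cls, xmin, ymin, xmax, ymax[, conf]) in pixels."""
    parts = line.split()
    cls_id = int(parts[0])
    cx, cy, w, h = (float(v) for v in parts[1:5])
    box = (
        round((cx - w / 2) * img_w, 1),
        round((cy - h / 2) * img_h, 1),
        round((cx + w / 2) * img_w, 1),
        round((cy + h / 2) * img_h, 1),
    )
    conf = (float(parts[5]),) if len(parts) > 5 else ()  # present only when save_conf=True
    return (cls_id, *box, *conf)


print(yolo_to_pixels("0 0.2 0.3 0.2 0.4", 640, 480))  # (0, 64.0, 48.0, 192.0, 240.0)
```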

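This post walks through training a YOLOv8 model on a custom dataset: setting up the conda environment, preparing the dataset (annotation, splitting and format conversion), configuring the training parameters, running training, and testing the trained model.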
