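As is well known, DeepSeek's two main model-architecture optimizations are MLA and DeepSeekMoE. Today we combine principle and code: the code walkthrough and the hand-written implementation of both innovations are merged into one post, since they read best together.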

MLA:

Core principle of Multi-Head Latent Attention:

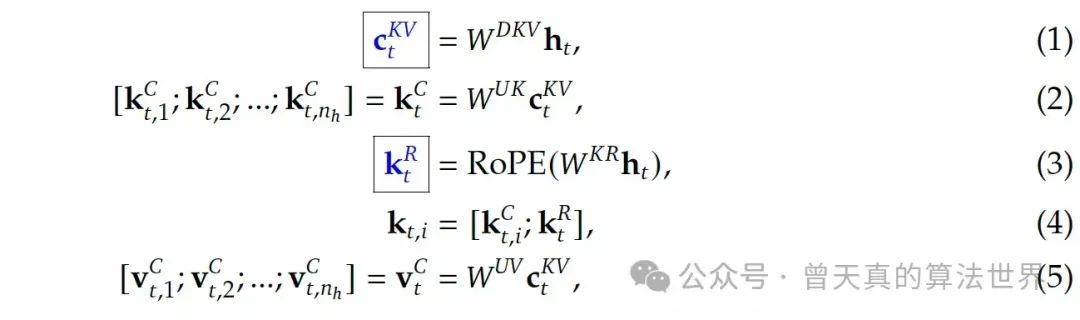

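The core idea is low-rank joint compression of the attention keys and values, which shrinks the cache they occupy during inference and so improves inference efficiency. The formulas below are from the original paper.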
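To make the cache saving concrete, here is a rough back-of-the-envelope sketch. It assumes the toy sizing used by the MLA class in this post (`kv_proj_dim = 2*d_model//3`, `qk_rope_dim = dh//2`); the function name `kv_cache_values_per_token` and the example sizes `d_model=1024`, `n_heads=16` are made up for illustration, and production DeepSeek models use different dimensions.

```python
def kv_cache_values_per_token(d_model: int, n_heads: int) -> dict:
    """Cached values per token per layer: standard MHA vs. the toy MLA sizing."""
    dh = d_model // n_heads            # per-head dimension
    kv_proj_dim = (2 * d_model) // 3   # width of the compressed KV latent (toy choice)
    qk_rope_dim = dh // 2              # width of the shared RoPE key (toy choice)
    mha = 2 * n_heads * dh             # full keys plus full values for every head
    mla = kv_proj_dim + qk_rope_dim    # one latent plus one shared RoPE key
    return {"mha": mha, "mla": mla, "ratio": mla / mha}


sizes = kv_cache_values_per_token(d_model=1024, n_heads=16)
print(sizes)
```

Under these assumptions the latent cache holds roughly a third of the values a plain multi-head cache would hold; the exact ratio depends entirely on the chosen projection widths.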

Key:

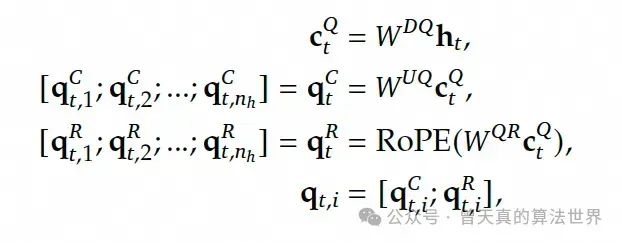

Query:

A similar operation is applied to the query:

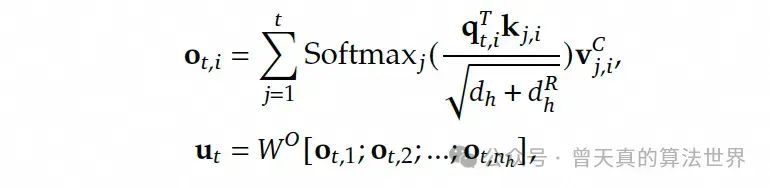

Attention:

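The final attention output u_t is obtained by taking the dot product of the query q_t with the keys k_t, normalizing the scores with softmax, and using the result to weight the values v_t.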
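The attention step for a single query can be sketched in plain Python as follows. This is a minimal illustration, not the post's PyTorch code: the helper name `attend` is hypothetical, and the `1/sqrt(d)` scaling is the usual scaled dot-product convention, assumed here rather than taken from the formulas in the images.

```python
import math


def attend(q, keys, values):
    """Single-query attention: softmax(q . k / sqrt(d)) used to weight the values."""
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)                                # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]            # softmax over the key positions
    dim_v = len(values[0])
    out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)]
    return out, weights


# Two identical keys receive equal weight, so the output is the mean of the values.
out, weights = attend([1.0, 0.0],
                      [[1.0, 0.0], [1.0, 0.0]],
                      [[0.0, 2.0], [4.0, 6.0]])
print(out, weights)
```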

Key code:

This walkthrough uses an MLA implementation written for DeepSeek-V2.

```python
import torch


class MLA(torch.nn.Module):
    def __init__(self, d_model, n_heads, max_len=1024, rope_theta=10000.0):
        super().__init__()
        self.d_model = d_model
        self.n_heads = n_heads
        self.dh = d_model // n_heads
        self.q_proj_dim = d_model // 2
        self.kv_proj_dim = (2*d_model) // 3

        self.qk_nope_dim = self.dh // 2
        self.qk_rope_dim = self.dh // 2

        ## Q projections
        # Lora
        self.W_dq = torch.nn.Parameter(0.01*torch.randn((d_model, self.q_proj_dim)))
        self.W_uq = torch.nn.Parameter(0.01*torch.randn((self.q_proj_dim, self.d_model)))
        self.q_layernorm = torch.nn.LayerNorm(self.q_proj_dim)

        ## KV projections
        # Lora
        self.W_dkv = torch.nn.Parameter(0.01*torch.randn((d_model, self.kv_proj_dim + self.qk_rope_dim)))
        self.W_ukv = torch.nn.Parameter(0.01*torch.randn((self.kv_proj_dim,
                                                          self.d_model + (self.n_heads * self.qk_nope_dim))))
        self.kv_layernorm = torch.nn.LayerNorm(self.kv_proj_dim)

        # output projection
        self.W_o = torch.nn.Parameter(0.01*torch.randn((d_model, d_model)))

        # RoPE
        self.max_seq_len = max_len
        self.rope_theta = rope_theta

        # https://github.com/lucidrains/rotary-embedding-torch/tree/main
        # visualize emb later to make sure it looks ok
        # we do self.dh here instead of self.qk_rope_dim because its better
        freqs = 1.0 / (rope_theta ** (torch.arange(0, self.dh, 2).float() / self.dh))
        emb = torch.outer(torch.arange(self.max_seq_len).float(), freqs)
        cos_cached = emb.cos()[None, None, :, :]
        sin_cached = emb.sin()[None, None, :, :]

        # https://pytorch.org/docs/stable/generated/torch.nn.Module.html#torch.nn.Module.register_buffer
        # This is like a parameter but its a constant so we can use register_buffer
        self.register_buffer("cos_cached", cos_cached)
        self.register_buffer("sin_cached", sin_cached)
```

The key path in MLA:

```python
if kv_cache is None:
    compressed_kv = x @ self.W_dkv
    KV_for_lora, K_for_rope = torch.split(compressed_kv,
                                          [self.kv_proj_dim, self.qk_rope_dim],
                                          dim=-1)
    KV_for_lora = self.kv_layernorm(KV_for_lora)
else:
    new_kv = x @ self.W_dkv
    compressed_kv = torch.cat([kv_cache, new_kv], dim=1)
    new_kv, new_K_for_rope = torch.split(new_kv,
                                         [self.kv_proj_dim, self.qk_rope_dim],
                                         dim=-1)
    old_kv, old_K_for_rope = torch.split(kv_cache,
                                         [self.kv_proj_dim, self.qk_rope_dim],
                                         dim=-1)
    new_kv = self.kv_layernorm(new_kv)
    old_kv = self.kv_layernorm(old_kv)
    KV_for_lora = torch.cat([old_kv, new_kv], dim=1)
    K_for_rope = torch.cat([old_K_for_rope, new_K_for_rope], dim=1)

KV = KV_for_lora @ self.W_ukv
KV = KV.view(B, -1, self.n_heads, self.dh+self.qk_nope_dim).transpose(1,2)
K, V = torch.split(KV, [self.qk_nope_dim, self.dh], dim=-1)
S_full = K.size(2)

# K Rope
K_for_rope = K_for_rope.view(B, -1, 1, self.qk_rope_dim).transpose(1,2)
cos_k = self.cos_cached[:, :, :S_full, :self.qk_rope_dim//2].repeat(1, 1, 1, 2)
sin_k = self.sin_cached[:, :, :S_full, :self.qk_rope_dim//2].repeat(1, 1, 1, 2)
K_for_rope = apply_rope_x(K_for_rope, cos_k, sin_k)

# apply position encoding to each head
K_for_rope = K_for_rope.repeat(1, self.n_heads, 1, 1)
```

The query path in MLA:

```python
B, S, D = x.size()

# Q Projections
compressed_q = x @ self.W_dq
compressed_q = self.q_layernorm(compressed_q)
Q = compressed_q @ self.W_uq
Q = Q.view(B, -1, self.n_heads, self.dh).transpose(1,2)
Q, Q_for_rope = torch.split(Q, [self.qk_nope_dim, self.qk_rope_dim], dim=-1)

# Q Decoupled RoPE
cos_q = self.cos_cached[:, :, past_length:past_length+S, :self.qk_rope_dim//2].repeat(1, 1, 1, 2)
sin_q = self.sin_cached[:, :, past_length:past_length+S, :self.qk_rope_dim//2].repeat(1, 1, 1, 2)
Q_for_rope = apply_rope_x(Q_for_rope, cos_q, sin_q)
```
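The snippets call `apply_rope_x`, which this post does not show. A common form, assumed here, is the "rotate-half" convention, which matches the way the cos/sin tables are repeated twice along the last dimension. The plain-Python sketch below applies that rotation to one vector at one position; the name `rope_rotate_half` and the `freq_dim` parameter are hypothetical, with `freq_dim` standing in for the fact that the class computes its frequencies from `self.dh`.

```python
import math


def rope_rotate_half(x, pos, theta=10000.0, freq_dim=None):
    """Rotate each pair (x[i], x[i + half]) by the angle pos * theta**(-2i / freq_dim)."""
    n = len(x)
    half = n // 2
    freq_dim = freq_dim or n           # the class above derives frequencies from dh
    out = [0.0] * n
    for i in range(half):
        angle = pos / (theta ** (2 * i / freq_dim))
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[i], x[i + half]
        out[i] = a * c - b * s         # x * cos + rotate_half(x) * sin, first half
        out[i + half] = b * c + a * s  # same expression, second half
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.0]
# Both scores use a position offset of 2, so they should agree.
score_a = dot(rope_rotate_half(q, 3), rope_rotate_half(k, 1))
score_b = dot(rope_rotate_half(q, 5), rope_rotate_half(k, 3))
print(score_a, score_b)
```

The point of the check at the end is that the query-key score depends only on the relative offset between the two positions, which is the property RoPE is designed to provide.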

Finally, the attention output:

```python
# split into multiple heads
q_heads = torch.cat([Q, Q_for_rope], dim=-1)
k_heads = torch.cat([K, K_for_rope], dim=-1)
v_heads = V # already reshaped before the split

# make attention mask
mask = torch.ones((S,S_full), device=x.device)
mask = torch.tril(mask, diagonal=past_length)
mask = mask[None, None, :, :]

sq_mask = mask == 1

# attention
x = torch.nn.functional.scaled_dot_product_attention(
    q_heads, k_heads, v_heads,
    attn_mask=sq_mask
)

x = x.transpose(1, 2).reshape(B, S, D)

# apply projection
x = x @ self.W_o.T

return x, compressed_kv
```
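The mask built with `torch.tril(mask, diagonal=past_length)` can be hard to picture once a KV cache is involved. The dependency-free sketch below reproduces the same rule, keeping entry (i, j) when `j <= i + past_length`; the helper name `causal_mask` is made up for illustration.

```python
def causal_mask(S, S_full, past_length):
    """True where new query i may attend to key j: all cached keys plus keys up to itself."""
    return [[j <= i + past_length for j in range(S_full)] for i in range(S)]


# Two new tokens arriving after two cached tokens (S_full = past_length + S = 4).
for row in causal_mask(S=2, S_full=4, past_length=2):
    print(row)
```

With an empty cache (`past_length=0`, `S_full == S`) this reduces to the usual lower-triangular causal mask.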

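Next we walk through the DeepSeekMoE module.

DeepSeekMoE:

In plain terms: the expert networks are divided into Routed and Shared experts. Every Shared Expert takes part in the final result, while the Routed Experts take part only if the Router selects them among the top-k "most relevant" experts.

The goal is therefore clear. We break the module down into its two core parts:

1) Router 2) Expert

Router:

The router controls the output weight of each expert network. We have added comments to the key parts of this code.

It outputs the weights of the top-k experts.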

```python
class Gate(nn.Module):
    """
    Gating mechanism for routing inputs in a mixture-of-experts (MoE) model.

    Attributes:
        dim (int): Dimensionality of input features.
        topk (int): Number of top experts activated for each input.
        n_groups (int): Number of groups for routing.
        topk_groups (int): Number of groups to route inputs to.
        score_func (str): Scoring function ('softmax' or 'sigmoid').
        route_scale (float): Scaling factor for routing weights.
        weight (torch.nn.Parameter): Learnable weights for the gate.
        bias (Optional[torch.nn.Parameter]): Optional bias term for the gate.
    """
    def __init__(self, args: ModelArgs):
        """
        Initializes the Gate module.

        Args:
            args (ModelArgs): Model arguments containing gating parameters.
        """
        super().__init__()
        self.dim = args.dim
        self.topk = args.n_activated_experts
        self.n_groups = args.n_expert_groups
        self.topk_groups = args.n_limited_groups
        self.score_func = args.score_func
        self.route_scale = args.route_scale
        self.weight = nn.Parameter(torch.empty(args.n_routed_experts, args.dim))
        self.bias = nn.Parameter(torch.empty(args.n_routed_experts)) if self.dim == 7168 else None

    def forward(self, x: torch.Tensor) -> Tuple[torch.Tensor, torch.Tensor]:
        """
        Forward pass for the gating mechanism.

        Args:
            x (torch.Tensor): Input tensor.

        Returns:
            Tuple[torch.Tensor, torch.Tensor]: Routing weights and selected expert indices.
        """
        # Compute the scores: a linear transform of the input with the gate weights.
        scores = linear(x, self.weight)

        # Apply softmax or sigmoid to the scores, depending on the scoring function.
        if self.score_func == "softmax":
            scores = scores.softmax(dim=-1, dtype=torch.float32)
        else:
            scores = scores.sigmoid()
        original_scores = scores

        # If a bias term exists, add it to the scores.
        if self.bias is not None:
            scores = scores + self.bias
        if self.n_groups > 1:
            scores = scores.view(x.size(0), self.n_groups, -1)
            if self.bias is None:
                group_scores = scores.amax(dim=-1)
            else:
                group_scores = scores.topk(2, dim=-1)[0].sum(dim=-1)
            # Find the indices of the highest-scoring groups.
            indices = group_scores.topk(self.topk_groups, dim=-1)[1]
            mask = torch.zeros_like(scores[..., 0]).scatter_(1, indices, True)
            scores = (scores * mask.unsqueeze(-1)).flatten(1)

        # Select the indices of the top-k experts for each input.
        indices = torch.topk(scores, self.topk, dim=-1)[1]

        # Gather the weights of the selected experts from the original scores.
        weights = original_scores.gather(1, indices)
        if self.score_func == "sigmoid":
            weights /= weights.sum(dim=-1, keepdim=True)

        # Apply the routing scale factor.
        weights *= self.route_scale
        return weights.type_as(x), indices
```
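The routing logic is easier to follow on a single token with a handful of experts. The sketch below is a simplified plain-Python rendering of the sigmoid, no-bias branch of the gate: score each expert, keep only the best groups (each group scored by its maximum), pick the top-k experts among them, then normalize and scale their weights. The function name `route` and the example logits are hypothetical.

```python
import math


def route(logits, topk, n_groups=1, topk_groups=1, route_scale=1.0):
    """Group-limited top-k routing for one token (sigmoid scores, no bias)."""
    scores = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    n = len(scores)
    candidates = list(range(n))
    if n_groups > 1:
        size = n // n_groups
        # Score each group by its best expert and keep the top groups only.
        group_best = [max(scores[g * size:(g + 1) * size]) for g in range(n_groups)]
        kept = sorted(range(n_groups), key=lambda g: group_best[g], reverse=True)[:topk_groups]
        candidates = [e for e in range(n) if e // size in kept]
    chosen = sorted(candidates, key=lambda e: scores[e], reverse=True)[:topk]
    total = sum(scores[e] for e in chosen)
    weights = [route_scale * scores[e] / total for e in chosen]  # normalize, then scale
    return chosen, weights


logits = [2.0, -1.0, 0.5, 0.0, 1.5, 1.0, -2.0, 0.2]
free_choice, _ = route(logits, topk=2)
limited_choice, limited_w = route(logits, topk=2, n_groups=4, topk_groups=1)
print(free_choice, limited_choice, limited_w)
```

With `topk_groups=1` both selected experts are forced into the single best group, even though an expert in another group scores higher than the second pick. That restriction is what limits how many groups (and, in a distributed setup, how many devices) one token touches.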

Expert network:

A plain, basic expert network with no extra optimizations.

```python
class Expert(nn.Module):
    """
    Expert layer for Mixture-of-Experts (MoE) models.

    Attributes:
        w1 (nn.Module): Linear layer for input-to-hidden transformation.
        w2 (nn.Module): Linear layer for hidden-to-output transformation.
        w3 (nn.Module): Additional linear layer for feature transformation.
    """
    def __init__(self, dim: int, inter_dim: int):
        """
        Initializes the Expert layer.

        Args:
            dim (int): Input and output dimensionality.
            inter_dim (int): Hidden layer dimensionality.
        """
        super().__init__()
        self.w1 = Linear(dim, inter_dim)
        self.w2 = Linear(inter_dim, dim)
        self.w3 = Linear(dim, inter_dim)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        """
        Forward pass for the Expert layer.

        Args:
            x (torch.Tensor): Input tensor.

        Returns:
            torch.Tensor: Output tensor after expert computation.
        """
        return self.w2(F.silu(self.w1(x)) * self.w3(x))
```
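The expert's forward pass, `w2(silu(w1(x)) * w3(x))`, is a gated feed-forward block of the kind often called SwiGLU. As a rough illustration, the plain-Python sketch below spells it out for one vector with bias-free weight matrices stored as lists of rows; the helper names and the tiny weights are made up.

```python
import math


def silu(z):
    """SiLU activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def expert_forward(x, w1, w2, w3):
    gate = [silu(h) for h in matvec(w1, x)]      # gating branch: silu(w1 x)
    up = matvec(w3, x)                           # linear branch: w3 x
    hidden = [g * u for g, u in zip(gate, up)]   # element-wise gating
    return matvec(w2, hidden)                    # project back to the model width


x = [1.0, -1.0]
w1 = [[1.0, 0.0], [0.0, 1.0]]
w3 = [[2.0, 0.0], [0.0, 2.0]]
w2 = [[1.0, 1.0], [1.0, -1.0]]
y = expert_forward(x, w1, w2, w3)
print(y)
```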

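MoE:

Finally, we return to the overall MoE architecture.

The Gate output from the previous step (the weights) is multiplied by the outputs of the selected Expert networks, and the shared experts' output is added to give the final result of the whole expert layer.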

```python
class MoE(nn.Module):
    """
    Mixture-of-Experts (MoE) module.

    Attributes:
        dim (int): Dimensionality of input features.
        n_routed_experts (int): Total number of experts in the model.
        n_local_experts (int): Number of experts handled locally in distributed systems.
        n_activated_experts (int): Number of experts activated for each input.
        gate (nn.Module): Gating mechanism to route inputs to experts.
        experts (nn.ModuleList): List of expert modules.
        shared_experts (nn.Module): Shared experts applied to all inputs.
    """
    def __init__(self, args: ModelArgs):
        """
        Initializes the MoE module.

        Args:
            args (ModelArgs): Model arguments containing MoE parameters.
        """
        super().__init__()
        self.dim = args.dim
        assert args.n_routed_experts % world_size == 0
        self.n_routed_experts = args.n_routed_experts
        self.n_local_experts = args.n_routed_experts // world_size
        self.n_activated_experts = args.n_activated_experts
        self.experts_start_idx = rank * self.n_local_experts
        self.experts_end_idx = self.experts_start_idx + self.n_local_experts
        self.gate = Gate(args)
        self.experts = nn.ModuleList([Expert(args.dim, args.moe_inter_dim) if self.experts_start_idx <= i < self.experts_end_idx else None
                                      for i in range(self.n_routed_experts)])
        self.shared_experts = MLP(args.dim, args.n_shared_experts * args.moe_inter_dim)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        """
        Forward pass for the MoE module.

        Args:
            x (torch.Tensor): Input tensor.

        Returns:
            torch.Tensor: Output tensor after expert routing and computation.
        """
        shape = x.size()
        x = x.view(-1, self.dim)
        weights, indices = self.gate(x)
        y = torch.zeros_like(x)
        counts = torch.bincount(indices.flatten(), minlength=self.n_routed_experts).tolist()
        for i in range(self.experts_start_idx, self.experts_end_idx):
            if counts[i] == 0:
                continue
            expert = self.experts[i]
            idx, top = torch.where(indices == i)
            y[idx] += expert(x[idx]) * weights[idx, top, None]
        z = self.shared_experts(x)
        if world_size > 1:
            dist.all_reduce(y)
        return (y + z).view(shape)
```
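Stripped of batching and distributed details, the combination step for one token is a weighted sum over the selected routed experts plus the shared expert's output. The sketch below shows only that arithmetic; `moe_forward` and the toy experts (expert k multiplies its input by k, the shared expert adds 1) are invented for illustration and are not the real networks.

```python
def moe_forward(x, chosen, weights, routed_experts, shared_expert):
    """Weighted sum of the chosen routed experts plus the always-on shared expert."""
    y = [0.0] * len(x)
    for e, w in zip(chosen, weights):
        out = routed_experts[e](x)
        y = [yi + w * oi for yi, oi in zip(y, out)]
    z = shared_expert(x)
    return [yi + zi for yi, zi in zip(y, z)]


# Toy experts: expert k scales its input by k; the shared expert adds 1 to every element.
experts = [lambda v, k=k: [k * vi for vi in v] for k in range(4)]
shared = lambda v: [vi + 1.0 for vi in v]

result = moe_forward([1.0, 2.0], chosen=[1, 3], weights=[0.25, 0.75],
                     routed_experts=experts, shared_expert=shared)
print(result)
```

The real module does the same thing per expert rather than per token (it gathers all tokens routed to expert `i` and processes them in one call), which is why it uses `torch.where(indices == i)`.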

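How do you learn large-model AI?

Because the new jobs are more productive than the jobs they replace, productivity across society as a whole actually rises.

For the individual, though, all one can say is:

“Those who master AI first will have a competitive edge over those who master it later.”

The same held true at the dawn of the computer, the internet, and the mobile internet.

In more than ten years at front-line internet companies I have mentored many junior colleagues and helped a lot of people learn and grow.

I came to realize that much of this experience and knowledge is worth sharing, and that our skills and experience can clear up many of the questions people run into while learning AI, so I keep organizing and sharing material despite a busy job. Because the channels for spreading this knowledge are limited, many people in the industry cannot get hold of sound material to learn from, so I am sharing the key large-model resources for free: an introductory mind map, selected books and handbooks, video tutorials, and recorded hands-on sessions.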

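Stage 1 (10 days): Basic applications

This stage gives you a cutting-edge view of large-model AI and an understanding deeper than that of 95% of people, so that you can offer informed, independent, and down-to-earth opinions in discussions. Others only chat with AI; you will be able to steer it and use code to connect large models to business needs.

- What can large-model AI do?

- How do large models acquire "intelligence"?

- The core principles of using AI well

- Business architecture of large-model applications

- Technical architecture of large-model applications

- Code example: feeding new knowledge into GPT-3.5

- The significance and core ideas of prompt engineering

- Typical structure of a prompt

- Instruction-tuning methodology

- Chain of thought and tree of thought

- Prompt attacks and defenses

- …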

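Stage 2 (30 days): Advanced applications

In this stage we move on to hands-on advanced practice: building a private knowledge base to extend what the AI can do, quickly developing a complete agent-based chatbot, mastering the most capable large-model development frameworks, and keeping up with the latest technical progress. Suitable for Python and JavaScript programmers.

- Why do RAG?

- Building a simple ChatPDF

- Basic concepts of retrieval

- What are vector representations (embeddings)?

- Vector databases and vector retrieval

- RAG based on vector retrieval

- Further topics in building a RAG system

- Introduction to hybrid retrieval and RAG-Fusion

- Deploying embedding models locally

- …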

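Stage 3 (30 days): Model training

Congratulations: if you have made it this far, you can basically find a job related to large-model AI and train a GPT yourself! Through fine-tuning you will train your own vertical-domain model, learn to train open-source multimodal models independently, and pick up more technical options.

By this point about two months have passed and you have become an "AI kid". Do you want to keep exploring?

- Why do RAG?

- What is a model?

- What is model training?

- Introduction to solvers and loss functions

- Mini experiment 2: hand-write a simple neural network and train it

- What training, pre-training, fine-tuning, and lightweight fine-tuning mean

- Introduction to the Transformer architecture

- Lightweight fine-tuning

- Building an experimental dataset

- …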

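Stage 4 (20 days): Closing the business loop

You will gain a working sense of the world's large models in terms of performance, throughput, and cost, be able to deploy large models in the cloud, locally, and in other environments, find a project or startup direction that suits you, and become an AI-equipped product manager.

- Hardware selection

- A tour of the world's large models

- Using domestic (Chinese) large-model services

- Setting up an OpenAI proxy

- Warm-up: deploying Stable Diffusion on Alibaba Cloud PAI

- Running large models on a local computer

- Private deployment of large models

- Deploying large models with vLLM

- Case study: privately deploying open-source large models on Alibaba Cloud

- Deploying an open-source LLM project

- Content safety

- Algorithm filing for internet information services

- …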

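Learning is a process, and wherever there is learning there are challenges. Hard work pays off: the harder you work, the better you become.

If you can finish every task within 15 days, you are a genius. But if you can complete 60-70% of the content, you are already starting to show the right traits of a large-model AI practitioner.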

