Lecture 9: Residual Network (ResNet)

Contents

1. Residual network basics

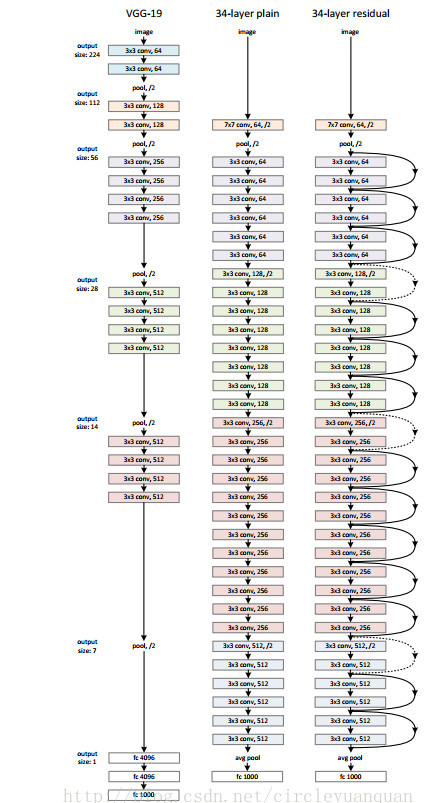

1.1 VGG19 and ResNet34 architecture diagrams

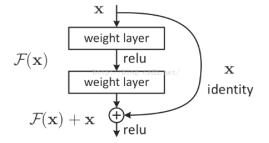

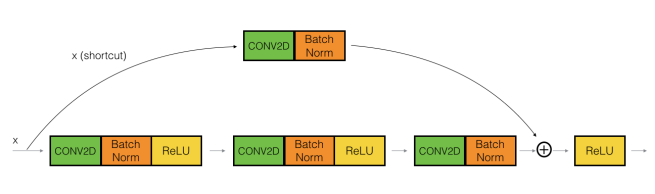

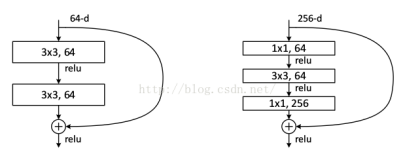

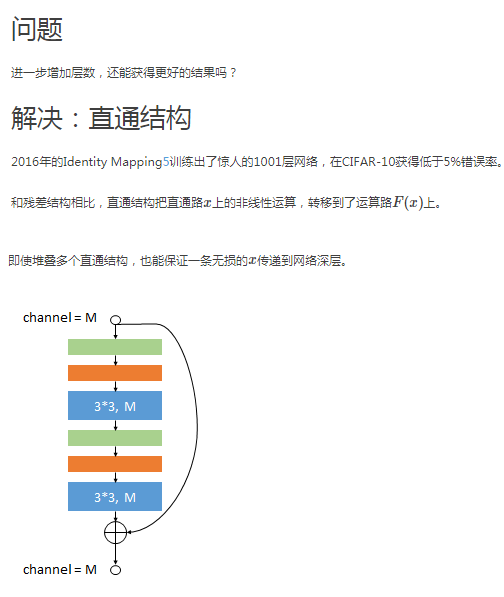

1.2 The ResNet residual block

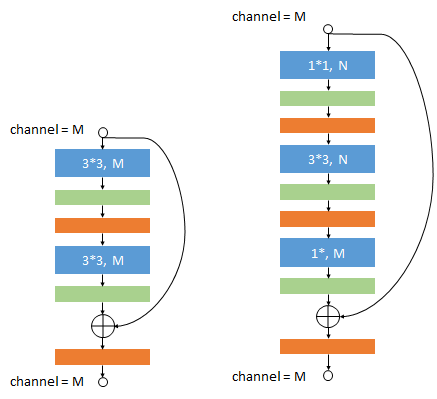

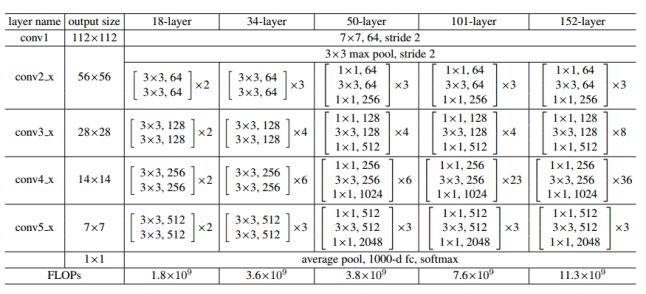

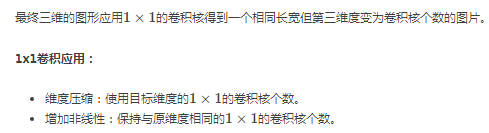

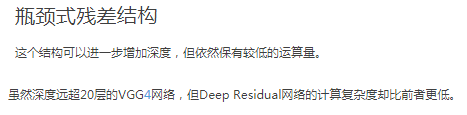

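To reduce computational cost, the residual block is optimized by replacing its two 3x3 convolutional layers with a 1x1 + 3x3 + 1x1 stack (the bottleneck design).

In the new structure, a first 1x1 convolution reduces the number of channels, so the middle 3x3 convolution works on a smaller input; a second 1x1 convolution then restores the original number of channels. This preserves accuracy while cutting the amount of computation.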
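
The saving can be checked with a quick weight count. The sketch below is only an illustration: the channel widths (256 in and out, 64 inside the bottleneck) are assumed values, and biases and BatchNorm parameters are ignored.

```python
def conv_params(kernel, c_in, c_out):
    """Weight count of a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out

# Assumed channel widths: 256 in/out, squeezed to 64 inside the bottleneck.
C, C_MID = 256, 64

# Original design: two 3x3 convolutions at full width.
basic = 2 * conv_params(3, C, C)

# Bottleneck design: 1x1 reduce, 3x3 at reduced width, 1x1 restore.
bottleneck = (conv_params(1, C, C_MID)
              + conv_params(3, C_MID, C_MID)
              + conv_params(1, C_MID, C))

print(basic, bottleneck)  # 1179648 69632
```

With these assumed widths the bottleneck stack needs roughly 17 times fewer weights than the two full-width 3x3 layers.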

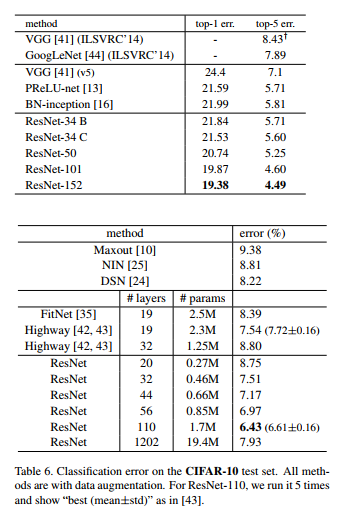

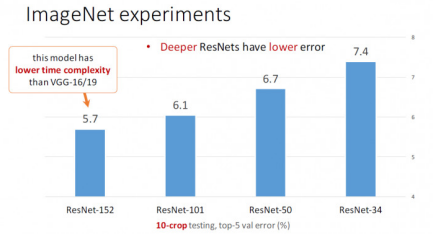

ResNet took first place in the ImageNet 2015 competition (ILSVRC 2015).

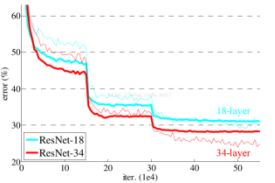

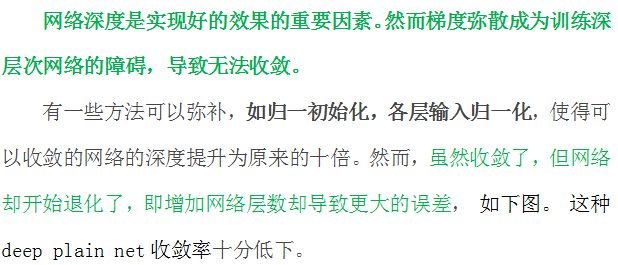

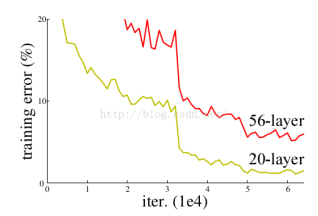

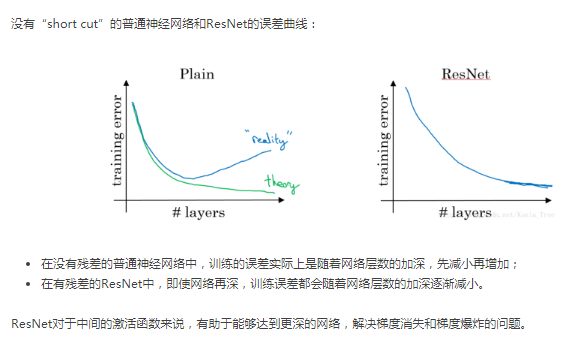

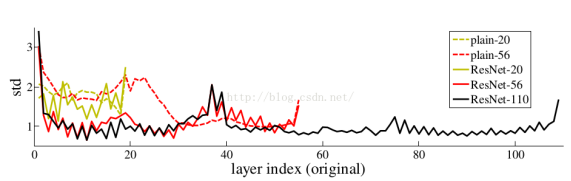

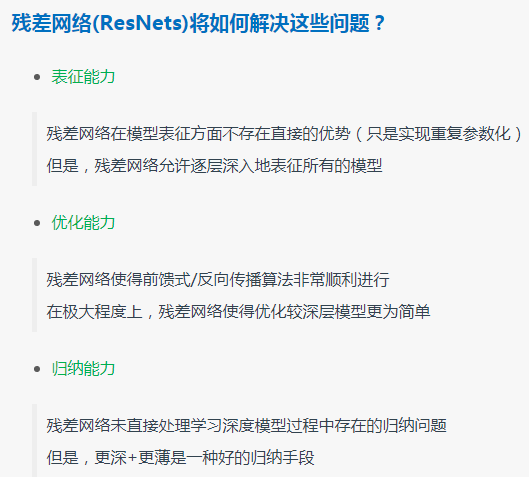

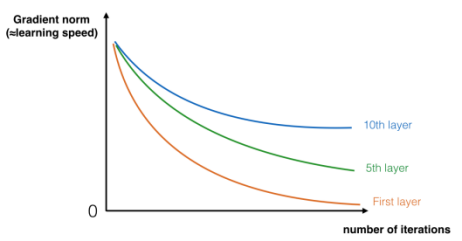

1.3 Vanishing gradients and network degradation

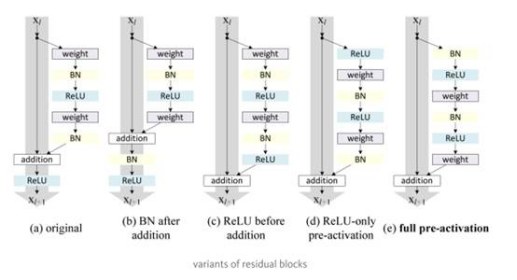

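1.4 Residual block variants

Since AlexNet, state-of-the-art CNN architectures have kept getting deeper. VGG-19 has 19 weight layers (16 of them convolutional) and GoogLeNet is 22 layers deep, whereas AlexNet has only 5 convolutional layers.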

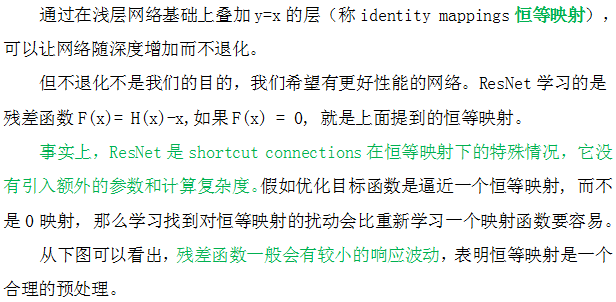

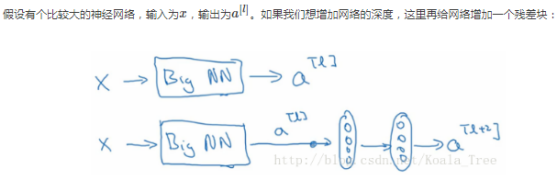

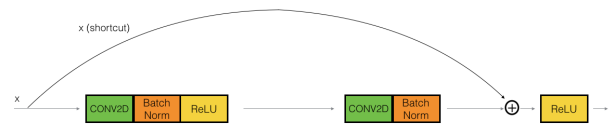

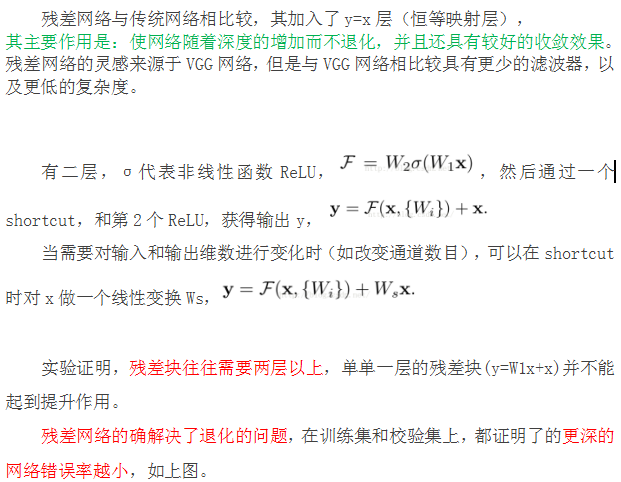

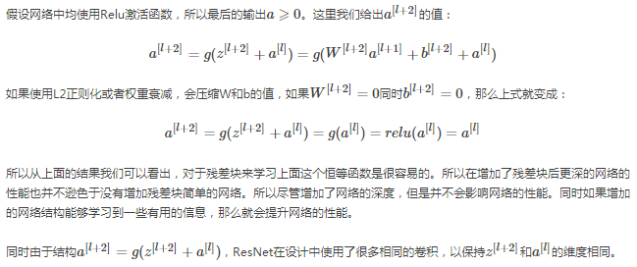

The basic idea of ResNet: introduce shortcut connections that skip one or more layers.
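
Written as a formula, the idea looks as follows. The notation is an assumption made here for illustration: $x$ is the block input, $\mathcal{F}$ the stack of skipped layers with weights $W_i$, $\mathcal{L}$ the loss, and the final ReLU of the block is left out for simplicity.

```latex
y = \mathcal{F}(x, \{W_i\}) + x,
\qquad
\frac{\partial \mathcal{L}}{\partial x}
  = \frac{\partial \mathcal{L}}{\partial y}
    \left( \frac{\partial \mathcal{F}}{\partial x} + I \right)
```

The identity term $I$ gives the gradient a direct path back to earlier layers even when $\partial \mathcal{F} / \partial x$ is small.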

1.5 ResNet model variants

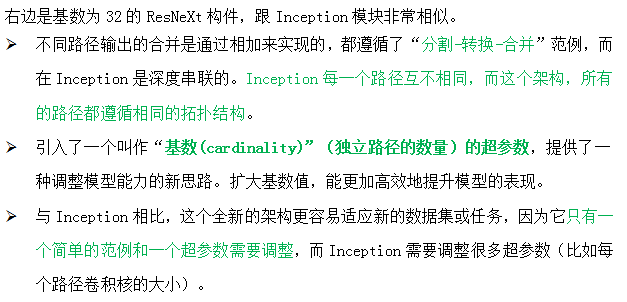

(1) ResNeXt

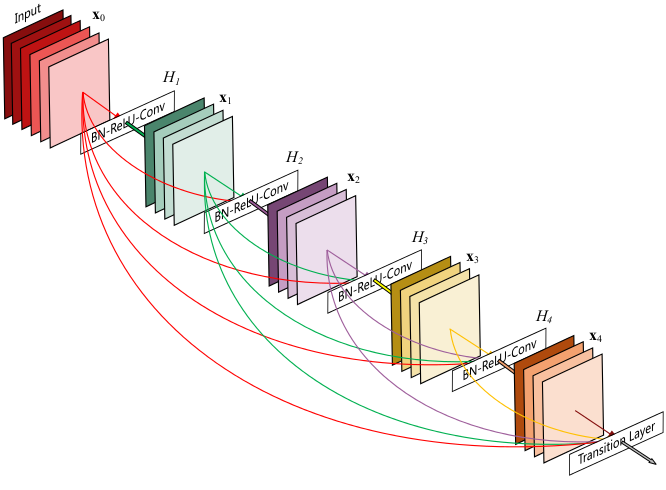

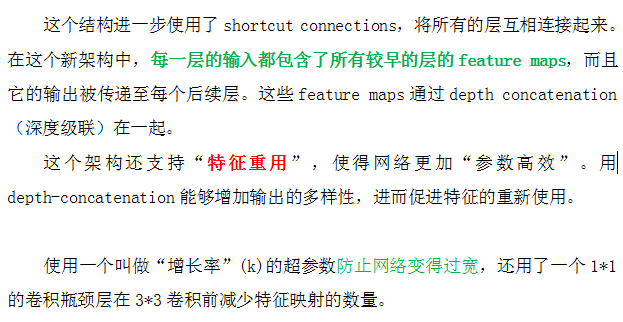

(2) DenseNet

1.6 Additional notes on residual networks

(1) Shortcut connection / skip connection

(2) Why residual networks perform well

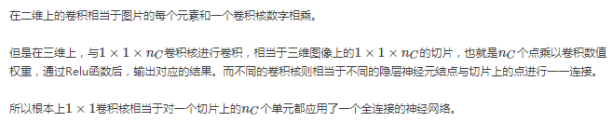

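1.7 1x1 convolution kernels

A 1x1 convolution is equivalent to applying the same fully connected layer to the n_c channel values at every spatial position (one slice through the channels).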
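
A minimal pure-Python sketch of this equivalence, using a toy feature map and made-up weights (both are assumptions for illustration; bias and activation are omitted):

```python
def dense(vector, weights):
    """Fully connected layer without bias: weights has shape [c_in][c_out]."""
    c_out = len(weights[0])
    return [sum(v * row[j] for v, row in zip(vector, weights))
            for j in range(c_out)]

def conv1x1(feature_map, weights):
    """Apply the same dense layer to the channel vector at every (h, w)."""
    return [[dense(pixel, weights) for pixel in row] for row in feature_map]

# Toy 2x2 feature map with n_c = 3 channels, mapped to 2 output channels.
feature_map = [[[1, 2, 3], [4, 5, 6]],
               [[7, 8, 9], [0, 1, 0]]]
weights = [[1, 0],
           [0, 1],
           [1, 1]]

out = conv1x1(feature_map, weights)
print(out)  # [[[4, 5], [10, 11]], [[16, 17], [0, 1]]]
```

The height and width are unchanged; only the channel dimension goes from 3 to 2, which is how 1x1 convolutions are used to shrink or restore channels.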

2. ResNet (Kaiming He's slides)

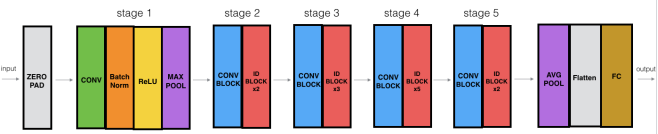

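3. ResNet-50 (Ng)

3.1 The problem with very deep neural networks

3.2 Building a residual network

A residual block with a shortcut connection can easily learn the identity function, so stacking additional ResNet blocks carries little risk of harming training-set performance.

- Residual block: main path + shortcut connection

- Two kinds of residual block: the identity block and the convolutional block, chosen according to whether the input and output dimensions match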
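
Why the identity function is easy to learn can be seen in a small sketch. It uses plain Python lists instead of tensors, and `zero_main_path` is a hypothetical stand-in for a main path whose weights have all been driven to zero:

```python
def relu(values):
    return [max(0.0, v) for v in values]

def residual_block(x, main_path):
    """Return relu(F(x) + x), where main_path plays the role of F."""
    fx = main_path(x)
    return relu([f + xi for f, xi in zip(fx, x)])

def zero_main_path(x):
    """Hypothetical main path whose weights have all been driven to zero."""
    return [0.0 for _ in x]

# x is assumed to come from a previous ReLU, so it is non-negative.
x = [0.0, 1.5, 3.0]
y = residual_block(x, zero_main_path)
print(y == x)  # True
```

A plain (non-residual) stack would have to learn weights that reproduce x exactly; the residual block only has to push its main path towards zero.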

(1) Identity block (the standard block)

Convolutional-layer filters:

Filter 1: shape=[1,1], strides=[1,1], padding='valid', name='2a'

Filter 2: shape=[f,f], strides=[1,1], padding='same', name='2b'

Filter 3: shape=[1,1], strides=[1,1], padding='valid', name='2c'

BatchNorm normalizes over the channels axis.
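
What normalizing over the channels axis means can be sketched in plain Python. This is a simplified illustration, assuming channels-last data shaped (m, H, W, C) and training-mode batch statistics; the learned scale and shift of a real BatchNorm layer are left out.

```python
def batchnorm_channels(batch, eps=1e-5):
    """Normalize a nested list shaped (m, H, W, C) per channel."""
    n_c = len(batch[0][0][0])
    # Gather every value that belongs to each channel across m, H and W.
    per_channel = [[px[c] for img in batch for row in img for px in row]
                   for c in range(n_c)]
    means = [sum(vals) / len(vals) for vals in per_channel]
    variances = [sum((v - mu) ** 2 for v in vals) / len(vals)
                 for vals, mu in zip(per_channel, means)]
    return [[[[(px[c] - means[c]) / (variances[c] + eps) ** 0.5
               for c in range(n_c)]
              for px in row] for row in img] for img in batch]

# Two 1x2 "images" with 2 channels; channel 1 has a much larger scale.
batch = [[[[1.0, 100.0], [2.0, 200.0]]],
         [[[3.0, 300.0], [4.0, 400.0]]]]
normed = batchnorm_channels(batch)
print(normed[0][0][0])
```

Each channel gets its own mean and variance, so after normalization the two channels land on nearly the same scale even though their raw values differ by a factor of 100.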

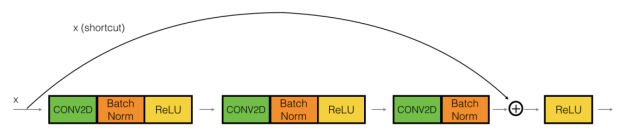

(2) Convolutional block

Used when the input and output dimensions do not match.

The convolutional layer on the shortcut path resizes X so that its dimensions match those of the main path at the final addition.

Filter 1: shape=[1,1], strides=[s,s], padding='valid', name='2a'

Filter 2: shape=[f,f], strides=[1,1], padding='same', name='2b'

Filter 3: shape=[1,1], strides=[1,1], padding='valid', name='2c'

Shortcut filter: shape=[1,1], strides=[s,s], padding='valid', name='1'

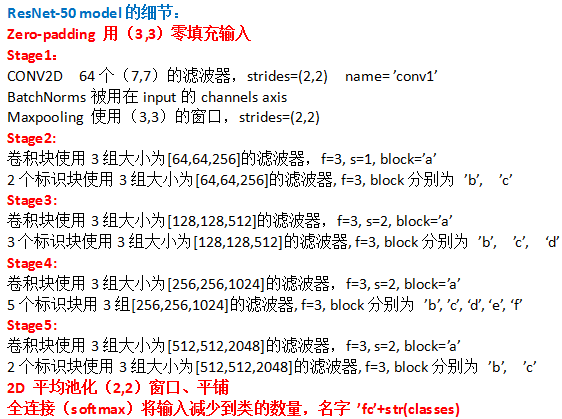

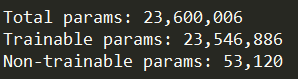

3.3 A first ResNet model (50 layers)

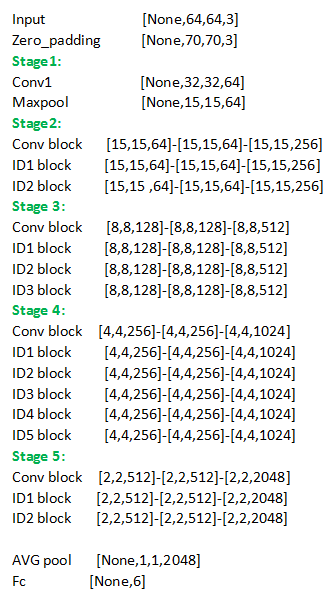

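Output shape of each ResNet-50 layer

Image size after a convolution or pooling layer: floor((n + 2p - f) / s) + 1, where n is the input size, p the padding, f the filter size and s the stride.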
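
As a worked example, the formula can be applied to the spatial size along the ResNet50 model from section 3.4 with its default 64x64 input. The helper `out_size` is a name introduced here for illustration; the kernel sizes and strides are the ones used in that code.

```python
def out_size(n, f, s=1, p=0):
    """Spatial size after a conv/pool layer: floor((n + 2p - f) / s) + 1."""
    return (n + 2 * p - f) // s + 1

n = 64                          # default input height/width
n = out_size(n, f=7, s=2, p=3)  # ZeroPadding2D((3,3)) + 7x7 conv, stride 2
n = out_size(n, f=3, s=2)       # 3x3 max pool, stride 2
sizes = [n]
for s in (1, 2, 2, 2):          # stride of the conv block opening stages 2-5
    n = out_size(n, f=1, s=s)   # 1x1 'valid' conv; identity blocks keep the size
    sizes.append(n)
n = out_size(n, f=2, s=2)       # 2x2 average pool (stride equals pool size)
print(sizes, n)                 # [15, 15, 8, 4, 2] 1
```

The final 2x2 feature map is exactly what the 2x2 average pool expects, which is why that pool size suits a 64x64 input.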

3.4 Code

    from keras.initializers import glorot_uniform
    from keras.layers import (Activation, Add, AveragePooling2D,
                              BatchNormalization, Conv2D, Dense, Flatten,
                              Input, MaxPooling2D, ZeroPadding2D)
    from keras.models import Model


    def identity_block(X, f, filters, stage, block):
        """Identity block: the shortcut is added to the main path unchanged.

        X -- input tensor, shape=(m, n_H_prev, n_W_prev, n_C_prev)
        f -- kernel size of the middle convolution on the main path
        filters -- [F1, F2, F3], number of filters in the three main-path convolutions
        stage -- stage number (1/2/3/4/5...), used to name the layers
        block -- block letter within the stage (a/b/c/d/e/f...), used to name the layers

        Returns X -- output tensor of the block, shape=(m, n_H, n_W, n_C)
        """
        # Base names for the layers of this block
        conv_name_base = 'res' + str(stage) + block + '_branch'
        bn_name_base = 'bn' + str(stage) + block + '_branch'

        # Number of filters per convolution; F3 must equal n_C_prev for the Add
        F1, F2, F3 = filters

        # Save the input for the shortcut
        X_shortcut = X

        # Main path, first layer: conv - bn - relu
        X = Conv2D(filters=F1, kernel_size=(1, 1), strides=(1, 1), padding='valid',
                   name=conv_name_base + '2a',
                   kernel_initializer=glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis=3, name=bn_name_base + '2a')(X)
        X = Activation('relu')(X)

        # Main path, second layer: conv - bn - relu
        X = Conv2D(filters=F2, kernel_size=(f, f), strides=(1, 1), padding='same',
                   name=conv_name_base + '2b',
                   kernel_initializer=glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis=3, name=bn_name_base + '2b')(X)
        X = Activation('relu')(X)

        # Main path, third layer: conv - bn (no relu before the addition)
        X = Conv2D(filters=F3, kernel_size=(1, 1), strides=(1, 1), padding='valid',
                   name=conv_name_base + '2c',
                   kernel_initializer=glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis=3, name=bn_name_base + '2c')(X)

        # Add the shortcut, then apply relu
        X = Add()([X, X_shortcut])
        X = Activation('relu')(X)
        return X


    def convolutional_block(X, f, filters, stage, block, s=2):
        """Convolutional block: a 1x1 convolution on the shortcut matches the shapes.

        s -- stride of the first main-path convolution and of the shortcut
             convolution; s=2 halves the height and width of the feature map.
        The other arguments are the same as in identity_block.
        """
        conv_name_base = 'res' + str(stage) + block + '_branch'
        bn_name_base = 'bn' + str(stage) + block + '_branch'
        F1, F2, F3 = filters
        X_shortcut = X

        # Main path, first layer (stride s)
        X = Conv2D(filters=F1, kernel_size=(1, 1), strides=(s, s), padding='valid',
                   name=conv_name_base + '2a',
                   kernel_initializer=glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis=3, name=bn_name_base + '2a')(X)
        X = Activation('relu')(X)

        # Main path, second layer
        X = Conv2D(filters=F2, kernel_size=(f, f), strides=(1, 1), padding='same',
                   name=conv_name_base + '2b',
                   kernel_initializer=glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis=3, name=bn_name_base + '2b')(X)
        X = Activation('relu')(X)

        # Main path, third layer
        X = Conv2D(filters=F3, kernel_size=(1, 1), strides=(1, 1), padding='valid',
                   name=conv_name_base + '2c',
                   kernel_initializer=glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis=3, name=bn_name_base + '2c')(X)

        # Shortcut path: 1x1 conv with stride s and F3 filters, then bn
        X_shortcut = Conv2D(filters=F3, kernel_size=(1, 1), strides=(s, s),
                            padding='valid', name=conv_name_base + '1',
                            kernel_initializer=glorot_uniform(seed=0))(X_shortcut)
        X_shortcut = BatchNormalization(axis=3, name=bn_name_base + '1')(X_shortcut)

        # Add the shortcut, then apply relu
        X = Add()([X, X_shortcut])
        X = Activation('relu')(X)
        return X

    def ResNet50(input_shape=(64, 64, 3), classes=6):
        """Build ResNet-50.

        input - zero padding
        stage 1: conv - BatchNorm - relu - maxpool
        stage 2: conv block - identity block * 2
        stage 3: conv block - identity block * 3
        stage 4: conv block - identity block * 5
        stage 5: conv block - identity block * 2
        avgpool - flatten - fully connected

        input_shape -- shape of the input images
        classes -- number of classes

        Returns: a Model() instance in Keras
        """
        # Define the input tensor from input_shape
        X_input = Input(input_shape)

        # Zero padding
        X = ZeroPadding2D((3, 3))(X_input)

        # Stage 1
        X = Conv2D(filters=64, kernel_size=(7, 7), strides=(2, 2), padding='valid',
                   name='conv1', kernel_initializer=glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis=3, name='bn_conv1')(X)
        X = Activation('relu')(X)  # listed in the docstring but missing from the original code
        X = MaxPooling2D((3, 3), strides=(2, 2))(X)

        # Stage 2
        X = convolutional_block(X, f=3, filters=[64, 64, 256], stage=2, block='a', s=1)
        for block in 'bc':
            X = identity_block(X, f=3, filters=[64, 64, 256], stage=2, block=block)

        # Stage 3
        X = convolutional_block(X, f=3, filters=[128, 128, 512], stage=3, block='a', s=2)
        for block in 'bcd':
            X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block=block)

        # Stage 4
        X = convolutional_block(X, f=3, filters=[256, 256, 1024], stage=4, block='a', s=2)
        for block in 'bcdef':
            X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block=block)

        # Stage 5
        X = convolutional_block(X, f=3, filters=[512, 512, 2048], stage=5, block='a', s=2)
        for block in 'bc':
            X = identity_block(X, f=3, filters=[512, 512, 2048], stage=5, block=block)

        # Average pooling and fully connected output layer
        X = AveragePooling2D((2, 2), name='avg_pool')(X)
        X = Flatten()(X)
        X = Dense(classes, activation='softmax', name='fc' + str(classes),
                  kernel_initializer=glorot_uniform(seed=0))(X)

        # Create the Keras Model instance
        model = Model(inputs=X_input, outputs=X, name='ResNet50')
        return model

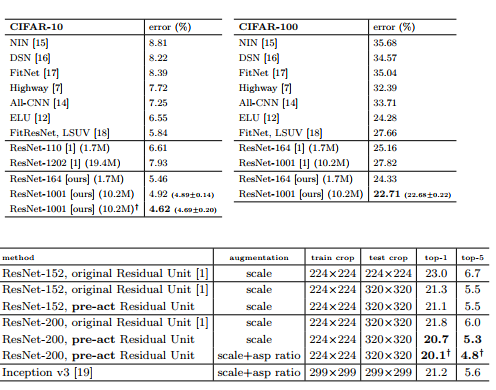

4. ResNet with 100-1000 layers

4.1 152 layers

4.2 1000 layers

4.3 Kaiming He's paper

4.4 Code

    def ResNet101(input_shape=(64, 64, 3), classes=6):
        """Build ResNet-101.

        input - zero padding
        stage 1: conv - BatchNorm - relu - maxpool
        stage 2: conv block - identity block * 2
        stage 3: conv block - identity block * 3
        stage 4: conv block - identity block * 22
        stage 5: conv block - identity block * 2
        avgpool - flatten - fully connected

        input_shape -- shape of the input images
        classes -- number of classes

        Returns: a Model() instance in Keras
        """
        # Define the input tensor from input_shape
        X_input = Input(input_shape)

        # Zero padding
        X = ZeroPadding2D((3, 3))(X_input)

        # Stage 1
        X = Conv2D(filters=64, kernel_size=(7, 7), strides=(2, 2), padding='valid',
                   name='conv1', kernel_initializer=glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis=3, name='bn_conv1')(X)
        X = Activation('relu')(X)  # listed in the docstring but missing from the original code
        X = MaxPooling2D((3, 3), strides=(2, 2))(X)

        # Note: the filter counts below are kept from the original code. They are
        # 1/16 of the standard ResNet-101 widths ([64, 64, 256] ... [512, 512, 2048]).

        # Stage 2
        X = convolutional_block(X, f=3, filters=[4, 4, 16], stage=2, block='a', s=1)
        for block in 'bc':
            X = identity_block(X, f=3, filters=[4, 4, 16], stage=2, block=block)

        # Stage 3
        X = convolutional_block(X, f=3, filters=[8, 8, 32], stage=3, block='a', s=2)
        for block in 'bcd':
            X = identity_block(X, f=3, filters=[8, 8, 32], stage=3, block=block)

        # Stage 4: 22 identity blocks, 'b' to 'w' (ResNet-50 has 5)
        X = convolutional_block(X, f=3, filters=[16, 16, 64], stage=4, block='a', s=2)
        for block in 'bcdefghijklmnopqrstuvw':
            X = identity_block(X, f=3, filters=[16, 16, 64], stage=4, block=block)

        # Stage 5
        X = convolutional_block(X, f=3, filters=[32, 32, 128], stage=5, block='a', s=2)
        for block in 'bc':
            X = identity_block(X, f=3, filters=[32, 32, 128], stage=5, block=block)

        # Average pooling and fully connected output layer
        X = AveragePooling2D((2, 2), name='avg_pool')(X)
        X = Flatten()(X)
        X = Dense(classes, activation='softmax', name='fc' + str(classes),
                  kernel_initializer=glorot_uniform(seed=0))(X)

        # Create the Keras Model instance
        model = Model(inputs=X_input, outputs=X, name='ResNet101')
        return model

    def ResNet152(input_shape=(64, 64, 3), classes=6):
        """Build ResNet-152.

        input - zero padding
        stage 1: conv - BatchNorm - relu - maxpool
        stage 2: conv block - identity block * 2
        stage 3: conv block - identity block * 7
        stage 4: conv block - identity block * 35
        stage 5: conv block - identity block * 2
        avgpool - flatten - fully connected

        input_shape -- shape of the input images
        classes -- number of classes

        Returns: a Model() instance in Keras
        """
        # Define the input tensor from input_shape
        X_input = Input(input_shape)

        # Zero padding
        X = ZeroPadding2D((3, 3))(X_input)

        # Stage 1
        X = Conv2D(filters=64, kernel_size=(7, 7), strides=(2, 2), padding='valid',
                   name='conv1', kernel_initializer=glorot_uniform(seed=0))(X)
        X = BatchNormalization(axis=3, name='bn_conv1')(X)
        X = Activation('relu')(X)  # listed in the docstring but missing from the original code
        X = MaxPooling2D((3, 3), strides=(2, 2))(X)

        # Stage 2
        X = convolutional_block(X, f=3, filters=[64, 64, 256], stage=2, block='a', s=1)
        for block in 'bc':
            X = identity_block(X, f=3, filters=[64, 64, 256], stage=2, block=block)

        # Stage 3: 7 identity blocks, 'b' to 'h' (ResNet-101 has 3)
        X = convolutional_block(X, f=3, filters=[128, 128, 512], stage=3, block='a', s=2)
        for block in 'bcdefgh':
            X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block=block)

        # Stage 4: 35 identity blocks, 'b' to 'z' then 'z1' to 'z10' (ResNet-101 has 22)
        X = convolutional_block(X, f=3, filters=[256, 256, 1024], stage=4, block='a', s=2)
        stage4_blocks = list('bcdefghijklmnopqrstuvwxyz') + ['z%d' % i for i in range(1, 11)]
        for block in stage4_blocks:
            X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block=block)

        # Stage 5
        X = convolutional_block(X, f=3, filters=[512, 512, 2048], stage=5, block='a', s=2)
        for block in 'bc':
            X = identity_block(X, f=3, filters=[512, 512, 2048], stage=5, block=block)

        # Average pooling and fully connected output layer
        X = AveragePooling2D((2, 2), name='avg_pool')(X)
        X = Flatten()(X)
        X = Dense(classes, activation='softmax', name='fc' + str(classes),
                  kernel_initializer=glorot_uniform(seed=0))(X)

        # Create the Keras Model instance
        model = Model(inputs=X_input, outputs=X, name='ResNet152')
        return model
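
The names ResNet-50, ResNet-101 and ResNet-152 follow from the block counts in the docstrings above. A small sketch of the arithmetic, with `resnet_depth` as a helper name introduced here: it counts the stem convolution, the three main-path convolutions of every block, and the final dense layer, and leaves out the shortcut convolutions, as is the usual convention.

```python
def resnet_depth(blocks_per_stage, convs_per_block=3):
    """Count weighted layers: stem conv + bottleneck convs + final dense layer."""
    return 1 + convs_per_block * sum(blocks_per_stage) + 1

# Blocks per stage (one convolutional block + the identity blocks), stages 2-5.
configs = {
    "ResNet50": (3, 4, 6, 3),
    "ResNet101": (3, 4, 23, 3),
    "ResNet152": (3, 8, 36, 3),
}
depths = {name: resnet_depth(blocks) for name, blocks in configs.items()}
print(depths)  # {'ResNet50': 50, 'ResNet101': 101, 'ResNet152': 152}
```

The three models share the same stem and head; they differ only in how many identity blocks stages 3 and 4 contain.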
