目录:

一、[VinBigData]-Train-EfficientNet-2-classes

转自:https://www.kaggle.com/philyyong/vinbigdata-train-efficientnet-2-classes/edit

1、添加环境,这就可以直接访问包而不用安装

package_paths = [

'../input/pytorch-image-models/pytorch-image-models-master', #'../input/efficientnet-pytorch-07/efficientnet_pytorch-0.7.0'

'../input/image-fmix/FMix-master'

]

import sys;

for pth in package_paths:

sys.path.append(pth)

from fmix import sample_mask, make_low_freq_image, binarise_mask

2、导入需要的模块

from glob import glob

from sklearn.model_selection import GroupKFold, StratifiedKFold

import cv2

from skimage import io

import torch

from torch import nn

import os

from datetime import datetime

import time

import random

import cv2

import torchvision

from torchvision import transforms

import pandas as pd

import numpy as np

from tqdm import tqdm

import matplotlib.pyplot as plt

from torch.utils.data import Dataset,DataLoader

from torch.utils.data.sampler import SequentialSampler, RandomSampler

from torch.cuda.amp import autocast, GradScaler

from torch.nn.modules.loss import _WeightedLoss

import torch.nn.functional as F

import timm

import sklearn

import warnings

import joblib

from sklearn.metrics import roc_auc_score, log_loss

from sklearn import metrics

import warnings

import cv2

import pydicom

#from efficientnet_pytorch import EfficientNet

from scipy.ndimage.interpolation import zoom

3、配置文件

CFG = {

'fold_num': 5,

'seed': 2021,

'model_arch': 'tf_efficientnet_b6_ns',

'img_size': 512,

'epochs': 1,

'train_bs': 8,

'valid_bs': 8,

'T_0': 10,

'lr': 1e-4,

'min_lr': 1e-6,

'weight_decay':1e-6,

'num_workers': 4,

'accum_iter': 2, # suppoprt to do batch accumulation for backprop with effectively larger batch size

'verbose_step': 1,

'device': 'cuda:0',

'path_to_train_data' : '../input/vinbigdata-512-image-dataset/vinbigdata/train/'

}

4、查看数据集形式

train_ori = pd.read_csv('../input/vinbigdata-512-image-dataset/vinbigdata/train.csv')

train_ori.head() #将df第一行看作表头,显示出前五行数据

img_names = list(train_ori['image_id'].unique())

train = pd.DataFrame({'image_id' : img_names})

#making the labels

for img in img_names:

sub_df = train_ori.loc[train_ori['image_id'] == img]

no_finding_classes = sub_df[sub_df['class_name'] == 'No finding']

if (no_finding_classes.shape[0] > (sub_df.shape[0]/ 2)): #this line can be modified if you want

train.loc[train['image_id'] == img, 'target'] = 1

else:

train.loc[train['image_id'] == img, 'target'] = 0

train.target.value_counts()

5、Helper Functions

def seed_everything(seed):

random.seed(seed)

os.environ['PYTHONHASHSEED'] = str(seed)

np.random.seed(seed)

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

torch.backends.cudnn.deterministic = True

torch.backends.cudnn.benchmark = True

def get_img(path):

im_bgr = cv2.imread(path)

im_rgb = im_bgr[:, :, ::-1]

#print(im_rgb)

return im_rgb

img = get_img('../input/vinbigdata-512-image-dataset/vinbigdata/train/0005e8e3701dfb1dd93d53e2ff537b6e.png')

plt.imshow(img)

plt.show()

6、Define Train\Validation Image Augmentations

from albumentations import (

HorizontalFlip, VerticalFlip, IAAPerspective, ShiftScaleRotate, CLAHE, RandomRotate90,

Transpose, ShiftScaleRotate, Blur, OpticalDistortion, GridDistortion, HueSaturationValue,

IAAAdditiveGaussianNoise, GaussNoise, MotionBlur, MedianBlur, IAAPiecewiseAffine, RandomResizedCrop,

IAASharpen, IAAEmboss, RandomBrightnessContrast, Flip, OneOf, Compose, Normalize, Cutout, CoarseDropout, ShiftScaleRotate, CenterCrop, Resize

)

from albumentations.pytorch import ToTensorV2

def get_train_transforms():

return Compose([

RandomResizedCrop(CFG['img_size'], CFG['img_size']),

Transpose(p=0.5),

HorizontalFlip(p=0.5),

#VerticalFlip(p=0.5),

ShiftScaleRotate(p=0.5),

HueSaturationValue(hue_shift_limit=0.2, sat_shift_limit=0.2, val_shift_limit=0.2, p=0.5),

RandomBrightnessContrast(brightness_limit=(-0.1,0.1), contrast_limit=(-0.1, 0.1), p=0.5),

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225], max_pixel_value=255.0, p=1.0),

#CoarseDropout(p=0.5),

#Cutout(p=0.5),

ToTensorV2(p=1.0),

], p=1.)

def get_valid_transforms():

return Compose([

CenterCrop(CFG['img_size'], CFG['img_size'], p=1.),

Resize(CFG['img_size'], CFG['img_size']),

Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225], max_pixel_value=255.0, p=1.0),

ToTensorV2(p=1.0),

], p=1.)

7、Dataset

def rand_bbox(size, lam):

W = size[0]

H = size[1]

cut_rat = np.sqrt(1. - lam)

cut_w = np.int(W * cut_rat)

cut_h = np.int(H * cut_rat)

# uniform

cx = np.random.randint(W)

cy = np.random.randint(H)

bbx1 = np.clip(cx - cut_w // 2, 0, W)

bby1 = np.clip(cy - cut_h // 2, 0, H)

bbx2 = np.clip(cx + cut_w // 2, 0, W)

bby2 = np.clip(cy + cut_h // 2, 0, H)

return bbx1, bby1, bbx2, bby2

class ChestXRayDataset(Dataset):

def __init__(self, df, data_root,

transforms=None,

output_label=True,

one_hot_label=False,

do_fmix=False,

fmix_params={

'alpha': 1.,

'decay_power': 3.,

'shape': (CFG['img_size'], CFG['img_size']),

'max_soft': True,

'reformulate': False

},

do_cutmix=False,

cutmix_params={

'alpha': 1,

}

):

super().__init__()

self.df = df.reset_index(drop=True).copy()

self.transforms = transforms

self.data_root = data_root

self.do_fmix = do_fmix

self.fmix_params = fmix_params

self.do_cutmix = do_cutmix

self.cutmix_params = cutmix_params

self.output_label = output_label

self.one_hot_label = one_hot_label

if output_label == True:

self.labels = self.df['target'].values

#print(self.labels)

if one_hot_label is True:

self.labels = np.eye(self.df['target'].max()+1)[self.labels]

#print(self.labels)

def __len__(self):

return self.df.shape[0]

def __getitem__(self, index: int):

# get labels

if self.output_label:

target = self.labels[index]

img = get_img("{}/{}.png".format(self.data_root, self.df.loc[index]['image_id']))

if self.transforms:

img = self.transforms(image=img)['image']

if self.do_fmix and np.random.uniform(0., 1., size=1)[0] > 0.5:

with torch.no_grad():

#lam, mask = sample_mask(**self.fmix_params)

lam = np.clip(np.random.beta(self.fmix_params['alpha'], self.fmix_params['alpha']),0.6,0.7)

# Make mask, get mean / std

mask = make_low_freq_image(self.fmix_params['decay_power'], self.fmix_params['shape'])

mask = binarise_mask(mask, lam, self.fmix_params['shape'], self.fmix_params['max_soft'])

fmix_ix = np.random.choice(self.df.index, size=1)[0]

fmix_img = get_img("{}/{}".format(self.data_root, self.df.iloc[fmix_ix]['image_id']))

if self.transforms:

fmix_img = self.transforms(image=fmix_img)['image']

mask_torch = torch.from_numpy(mask)

# mix image

img = mask_torch*img+(1.-mask_torch)*fmix_img

#print(mask.shape)

#assert self.output_label==True and self.one_hot_label==True

# mix target

rate = mask.sum()/CFG['img_size']/CFG['img_size']

target = rate*target + (1.-rate)*self.labels[fmix_ix]

#print(target, mask, img)

#assert False

if self.do_cutmix and np.random.uniform(0., 1., size=1)[0] > 0.5:

#print(img.sum(), img.shape)

with torch.no_grad():

cmix_ix = np.random.choice(self.df.index, size=1)[0]

cmix_img = get_img("{}/{}".format(self.data_root, self.df.iloc[cmix_ix]['image_id']))

if self.transforms:

cmix_img = self.transforms(image=cmix_img)['image']

lam = np.clip(np.random.beta(self.cutmix_params['alpha'], self.cutmix_params['alpha']),0.3,0.4)

bbx1, bby1, bbx2, bby2 = rand_bbox((CFG['img_size'], CFG['img_size']), lam)

img[:, bbx1:bbx2, bby1:bby2] = cmix_img[:, bbx1:bbx2, bby1:bby2]

rate = 1 - ((bbx2 - bbx1) * (bby2 - bby1) / (CFG['img_size'] * CFG['img_size']))

target = rate*target + (1.-rate)*self.labels[cmix_ix]

#print('-', img.sum())

#print(target)

#assert False

# do label smoothing

#print(type(img), type(target))

if self.output_label == True:

return img, target

else:

return img

8、Model

class ChestXrayImgClassifier(nn.Module):

def __init__(self, model_arch, n_class, pretrained=False):

super().__init__()

self.model = timm.create_model(model_arch, pretrained=pretrained)

n_features = self.model.classifier.in_features

self.model.classifier = nn.Linear(n_features, n_class)

'''

self.model.classifier = nn.Sequential(

nn.Dropout(0.3),

#nn.Linear(n_features, hidden_size,bias=True), nn.ELU(),

nn.Linear(n_features, n_class, bias=True)

)

'''

def forward(self, x):

x = self.model(x)

return x

9、Training APIs

def prepare_dataloader(df, trn_idx, val_idx, data_root='../input/cassava-leaf-disease-classification/train_images/'):

from catalyst.data.sampler import BalanceClassSampler

train_ = df.loc[trn_idx,:].reset_index(drop=True)

valid_ = df.loc[val_idx,:].reset_index(drop=True)

train_ds = ChestXRayDataset(train_, data_root, transforms=get_train_transforms(), output_label=True, one_hot_label=False, do_fmix=False, do_cutmix=False)

valid_ds = ChestXRayDataset(valid_, data_root, transforms=get_valid_transforms(), output_label=True)

train_loader = torch.utils.data.DataLoader(

train_ds,

batch_size=CFG['train_bs'],

pin_memory=False,

drop_last=False,

shuffle=True,

num_workers=CFG['num_workers'],

#sampler=BalanceClassSampler(labels=train_['label'].values, mode="downsampling")

)

val_loader = torch.utils.data.DataLoader(

valid_ds,

batch_size=CFG['valid_bs'],

num_workers=CFG['num_workers'],

shuffle=False,

pin_memory=False,

)

return train_loader, val_loader

def train_one_epoch(epoch, model, loss_fn, optimizer, train_loader, device, scheduler=None, schd_batch_update=False):

model.train()

t = time.time()

running_loss = None

pbar = tqdm(enumerate(train_loader), total=len(train_loader))

for step, (imgs, image_labels) in pbar:

imgs = imgs.to(device).float()

image_labels = image_labels.to(device).long()

#print(image_labels.shape, exam_label.shape)

with autocast():

image_preds = model(imgs) #output = model(input)

#print(image_preds.shape, exam_pred.shape)

loss = loss_fn(image_preds, image_labels)

scaler.scale(loss).backward()

if running_loss is None:

running_loss = loss.item()

else:

running_loss = running_loss * .99 + loss.item() * .01

if ((step + 1) % CFG['accum_iter'] == 0) or ((step + 1) == len(train_loader)):

# may unscale_ here if desired (e.g., to allow clipping unscaled gradients)

scaler.step(optimizer)

scaler.update()

optimizer.zero_grad()

if scheduler is not None and schd_batch_update:

scheduler.step()

if ((step + 1) % CFG['verbose_step'] == 0) or ((step + 1) == len(train_loader)):

description = f'epoch {epoch} loss: {running_loss:.4f}'

pbar.set_description(description)

if scheduler is not None and not schd_batch_update:

scheduler.step()

def valid_one_epoch(epoch, model, loss_fn, val_loader, device, best_val, fold, scheduler=None, schd_loss_update=False):

model.eval()

t = time.time()

loss_sum = 0

sample_num = 0

image_preds_all = []

image_targets_all = []

pbar = tqdm(enumerate(val_loader), total=len(val_loader))

for step, (imgs, image_labels) in pbar:

imgs = imgs.to(device).float()

image_labels = image_labels.to(device).long()

image_preds = model(imgs) #output = model(input)

#print(image_preds.shape, exam_pred.shape)

image_preds_all += [torch.argmax(image_preds, 1).detach().cpu().numpy()]

image_targets_all += [image_labels.detach().cpu().numpy()]

loss = loss_fn(image_preds, image_labels)

loss_sum += loss.item()*image_labels.shape[0]

sample_num += image_labels.shape[0]

if ((step + 1) % CFG['verbose_step'] == 0) or ((step + 1) == len(val_loader)):

description = f'epoch {epoch} loss: {loss_sum/sample_num:.4f}'

pbar.set_description(description)

image_preds_all = np.concatenate(image_preds_all)

image_targets_all = np.concatenate(image_targets_all)

valid_acc = (image_preds_all==image_targets_all).mean()

if (valid_acc > best_val):

best_val = valid_acc

torch.save(model.state_dict(), f'./best_model_fold{fold}.pt')

print('validation multi-class accuracy = {:.4f}'.format(valid_acc))

if scheduler is not None:

if schd_loss_update:

scheduler.step(loss_sum/sample_num)

else:

scheduler.step()

return best_val

# reference: https://www.kaggle.com/c/siim-isic-melanoma-classification/discussion/173733

class MyCrossEntropyLoss(_WeightedLoss):

def __init__(self, weight=None, reduction='mean'):

super().__init__(weight=weight, reduction=reduction)

self.weight = weight

self.reduction = reduction

def forward(self, inputs, targets):

lsm = F.log_softmax(inputs, -1)

if self.weight is not None:

lsm = lsm * self.weight.unsqueeze(0)

loss = -(targets * lsm).sum(-1)

if self.reduction == 'sum':

loss = loss.sum()

elif self.reduction == 'mean':

loss = loss.mean()

return loss

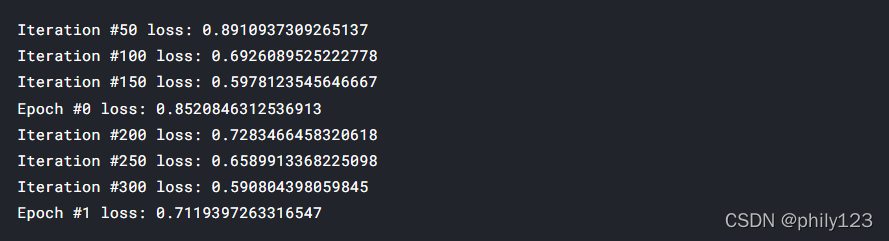

10 、Main Loop

if __name__ == '__main__':

# for training only, need nightly build pytorch

seed_everything(CFG['seed'])

#mskf = MultilabelStratifiedKFold(n_splits=5, shuffle=True, random_state=42)

folds = StratifiedKFold(n_splits=CFG['fold_num'], shuffle=True, random_state=CFG['seed']).split(np.arange(train.shape[0]), train.target.values)

for fold, (trn_idx, val_idx) in enumerate(folds):

# we'll train fold 0 first

if fold > 0:

break

print('Training with {} started'.format(fold))

print(len(trn_idx), len(val_idx))

train_loader, val_loader = prepare_dataloader(train, trn_idx, val_idx, data_root=CFG['path_to_train_data'])

device = torch.device(CFG['device'])

model = ChestXrayImgClassifier(CFG['model_arch'], train.target.nunique(), pretrained=True).to(device)

scaler = GradScaler()

optimizer = torch.optim.Adam(model.parameters(), lr=CFG['lr'], weight_decay=CFG['weight_decay'])

#scheduler = torch.optim.lr_scheduler.StepLR(optimizer, gamma=0.1, step_size=CFG['epochs']-1)

scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0=CFG['T_0'], T_mult=1, eta_min=CFG['min_lr'], last_epoch=-1)

#scheduler = torch.optim.lr_scheduler.OneCycleLR(optimizer=optimizer, pct_start=0.1, div_factor=25,

# max_lr=CFG['lr'], epochs=CFG['epochs'], steps_per_epoch=len(train_loader))

loss_tr = nn.CrossEntropyLoss().to(device) #MyCrossEntropyLoss().to(device)

loss_fn = nn.CrossEntropyLoss().to(device)

best_val = 0

for epoch in range(CFG['epochs']):

train_one_epoch(epoch, model, loss_tr, optimizer, train_loader, device, scheduler=scheduler, schd_batch_update=False)

with torch.no_grad():

best_val = valid_one_epoch(epoch, model, loss_fn, val_loader, device, best_val, fold, scheduler=None, schd_loss_update=False)

torch.save(model.state_dict(),'{}_fold_{}_{}'.format(CFG['model_arch'], fold, epoch))

#torch.save(model.cnn_model.state_dict(),'{}/cnn_model_fold_{}_{}'.format(CFG['model_path'], fold, CFG['tag']))

del model, optimizer, train_loader, val_loader, scaler, scheduler

torch.cuda.empty_cache()

二、Pytorch Starter - FasterRCNN Train

转自:https://www.kaggle.com/pestipeti/pytorch-starter-fasterrcnn-train

FasterRCNN from torchvision

Use Resnet50 backbone

Albumentation enabled (simple flip for now)

import pandas as pd

import numpy as np

import cv2

import os

import re

from PIL import Image

import albumentations as A

from albumentations.pytorch.transforms import ToTensorV2

import torch

import torchvision

from torchvision.models.detection.faster_rcnn import FastRCNNPredictor

from torchvision.models.detection import FasterRCNN

from torchvision.models.detection.rpn import AnchorGenerator

from torch.utils.data import DataLoader, Dataset

from torch.utils.data.sampler import SequentialSampler

from matplotlib import pyplot as plt

DIR_INPUT = '/kaggle/input/global-wheat-detection'

DIR_TRAIN = f'{DIR_INPUT}/train'

DIR_TEST = f'{DIR_INPUT}/test'

train_df = pd.read_csv(f'{DIR_INPUT}/train.csv')

train_df.shape

train_df['x'] = -1

train_df['y'] = -1

train_df['w'] = -1

train_df['h'] = -1

def expand_bbox(x):

r = np.array(re.findall("([0-9]+[.]?[0-9]*)", x))

if len(r) == 0:

r = [-1, -1, -1, -1]

return r

train_df[['x', 'y', 'w', 'h']] = np.stack(train_df['bbox'].apply(lambda x: expand_bbox(x)))

train_df.drop(columns=['bbox'], inplace=True)

train_df['x'] = train_df['x'].astype(np.float)

train_df['y'] = train_df['y'].astype(np.float)

train_df['w'] = train_df['w'].astype(np.float)

train_df['h'] = train_df['h'].astype(np.float)

image_ids = train_df['image_id'].unique()

valid_ids = image_ids[-665:]

train_ids = image_ids[:-665]

valid_df = train_df[train_df['image_id'].isin(valid_ids)]

train_df = train_df[train_df['image_id'].isin(train_ids)]

valid_df.shape, train_df.shape

class WheatDataset(Dataset):

def __init__(self, dataframe, image_dir, transforms=None):

super().__init__()

self.image_ids = dataframe['image_id'].unique()

self.df = dataframe

self.image_dir = image_dir

self.transforms = transforms

def __getitem__(self, index: int):

image_id = self.image_ids[index]

records = self.df[self.df['image_id'] == image_id]

image = cv2.imread(f'{self.image_dir}/{image_id}.jpg', cv2.IMREAD_COLOR)

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB).astype(np.float32)

image /= 255.0

boxes = records[['x', 'y', 'w', 'h']].values

boxes[:, 2] = boxes[:, 0] + boxes[:, 2]

boxes[:, 3] = boxes[:, 1] + boxes[:, 3]

area = (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0])

area = torch.as_tensor(area, dtype=torch.float32)

# there is only one class

labels = torch.ones((records.shape[0],), dtype=torch.int64)

# suppose all instances are not crowd

iscrowd = torch.zeros((records.shape[0],), dtype=torch.int64)

target = {}

target['boxes'] = boxes

target['labels'] = labels

# target['masks'] = None

target['image_id'] = torch.tensor([index])

target['area'] = area

target['iscrowd'] = iscrowd

if self.transforms:

sample = {

'image': image,

'bboxes': target['boxes'],

'labels': labels

}

sample = self.transforms(**sample)

image = sample['image']

target['boxes'] = torch.stack(tuple(map(torch.tensor, zip(*sample['bboxes'])))).permute(1, 0)

return image, target, image_id

def __len__(self) -> int:

return self.image_ids.shape[0]

# Albumentations

def get_train_transform():

return A.Compose([

A.Flip(0.5),

ToTensorV2(p=1.0)

], bbox_params={'format': 'pascal_voc', 'label_fields': ['labels']})

def get_valid_transform():

return A.Compose([

ToTensorV2(p=1.0)

], bbox_params={'format': 'pascal_voc', 'label_fields': ['labels']})

1、Create the model

# load a model; pre-trained on COCO

model = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True)

num_classes = 2 # 1 class (wheat) + background

# get number of input features for the classifier

in_features = model.roi_heads.box_predictor.cls_score.in_features

# replace the pre-trained head with a new one

model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)

class Averager:

def __init__(self):

self.current_total = 0.0

self.iterations = 0.0

def send(self, value):

self.current_total += value

self.iterations += 1

@property

def value(self):

if self.iterations == 0:

return 0

else:

return 1.0 * self.current_total / self.iterations

def reset(self):

self.current_total = 0.0

self.iterations = 0.0

def collate_fn(batch):

return tuple(zip(*batch))

train_dataset = WheatDataset(train_df, DIR_TRAIN, get_train_transform())

valid_dataset = WheatDataset(valid_df, DIR_TRAIN, get_valid_transform())

# split the dataset in train and test set

indices = torch.randperm(len(train_dataset)).tolist()

train_data_loader = DataLoader(

train_dataset,

batch_size=16,

shuffle=False,

num_workers=4,

collate_fn=collate_fn

)

valid_data_loader = DataLoader(

valid_dataset,

batch_size=8,

shuffle=False,

num_workers=4,

collate_fn=collate_fn

)

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

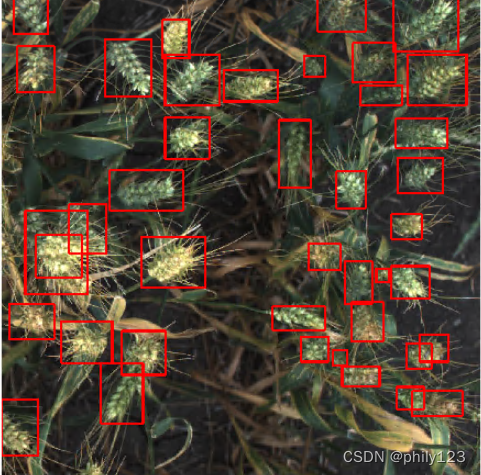

2、Sample

images, targets, image_ids = next(iter(train_data_loader))

images = list(image.to(device) for image in images)

targets = [{k: v.to(device) for k, v in t.items()} for t in targets]

boxes = targets[2]['boxes'].cpu().numpy().astype(np.int32)

sample = images[2].permute(1,2,0).cpu().numpy()

fig, ax = plt.subplots(1, 1, figsize=(16, 8))

for box in boxes:

cv2.rectangle(sample,

(box[0], box[1]),

(box[2], box[3]),

(220, 0, 0), 3)

ax.set_axis_off()

ax.imshow(sample)

3、Train

model.to(device)

params = [p for p in model.parameters() if p.requires_grad]

optimizer = torch.optim.SGD(params, lr=0.005, momentum=0.9, weight_decay=0.0005)

# lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=3, gamma=0.1)

lr_scheduler = None

num_epochs = 2

loss_hist = Averager()

itr = 1

for epoch in range(num_epochs):

loss_hist.reset()

for images, targets, image_ids in train_data_loader:

images = list(image.to(device) for image in images)

targets = [{k: v.to(device) for k, v in t.items()} for t in targets]

loss_dict = model(images, targets)

losses = sum(loss for loss in loss_dict.values())

loss_value = losses.item()

loss_hist.send(loss_value)

optimizer.zero_grad()

losses.backward()

optimizer.step()

if itr % 50 == 0:

print(f"Iteration #{itr} loss: {loss_value}")

itr += 1

# update the learning rate

if lr_scheduler is not None:

lr_scheduler.step()

print(f"Epoch #{epoch} loss: {loss_hist.value}")

images, targets, image_ids = next(iter(valid_data_loader))

images = list(img.to(device) for img in images)

targets = [{k: v.to(device) for k, v in t.items()} for t in targets]

boxes = targets[1]['boxes'].cpu().numpy().astype(np.int32)

sample = images[1].permute(1,2,0).cpu().numpy()

model.eval()

cpu_device = torch.device("cpu")

outputs = model(images)

outputs = [{k: v.to(cpu_device) for k, v in t.items()} for t in outputs]

fig, ax = plt.subplots(1, 1, figsize=(16, 8))

for box in boxes:

cv2.rectangle(sample,

(box[0], box[1]),

(box[2], box[3]),

(220, 0, 0), 3)

ax.set_axis_off()

ax.imshow(sample)

torch.save(model.state_dict(), 'fasterrcnn_resnet50_fpn.pth')

三、Barrier Reef YOLOv5 [Training]

转自:https://www.kaggle.com/ammarnassanalhajali/barrier-reef-yolov5-training

6217

6217

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?