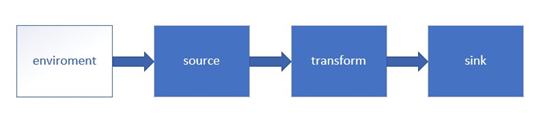

Flink 流处理API

Environment

getExecutionEnvironment

- 创建一个执行环境,表示当前执行程序的上下文。getExecutionEnvironment会根据查询运行的方式决定返回什么样的运行环境,是最常用的一种创建执行环境的方式。

ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

- 如果没有设置并行度,会以flink-conf.yaml中的配置为准,默认是1。

createLocalEnvironment

- 返回本地执行环境,需要在调用时指定默认的并行度。

LocalStreamEnvironment env = StreamExecutionEnvironment.createLocalEnvironment(1);

createRemoteEnvironment

- 返回集群执行环境,将Jar提交到远程服务器。需要在调用时指定JobManager的IP和端口号,并指定要在集群中运行的Jar包。

StreamExecutionEnvironment env = StreamExecutionEnvironment.createRemoteEnvironment( "jobmanage-hostname", 6123,

"YOURPATH//WordCount.jar");

Source

从集合读取数据

java代码:

package apitest.source;

import apitest.beans.SensorReading;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.Arrays;

public class SourceTest1_Collection {

public static void main(String[] args) throws Exception {

// 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 设置env并行度1,使得整个任务抢占同一个线程执行

env.setParallelism(1);

// Source: 从集合Collection中获取数据

DataStream<SensorReading> dataStream = env.fromCollection(

Arrays.asList(

new SensorReading("sensor_1", 1547718199L, 35.8),

new SensorReading("sensor_6", 1547718201L, 15.4),

new SensorReading("sensor_7", 1547718202L, 6.7),

new SensorReading("sensor_10", 1547718205L, 38.1)

)

);

DataStream<Integer> intStream = env.fromElements(1,2,3,4,5,6,7,8,9);

// 打印输出

dataStream.print("SENSOR");

intStream.print("INT");

// 执行

env.execute("JobName");

}

}

输出:

INT> 1

INT> 2

SENSOR> SensorReading{id='sensor_1', timestamp=1547718199, temperature=35.8}

INT> 3

SENSOR> SensorReading{id='sensor_6', timestamp=1547718201, temperature=15.4}

INT> 4

SENSOR> SensorReading{id='sensor_7', timestamp=1547718202, temperature=6.7}

INT> 5

SENSOR> SensorReading{id='sensor_10', timestamp=1547718205, temperature=38.1}

INT> 6

INT> 7

INT> 8

INT> 9

从文件读取数据

java代码如下:

package apitest.source;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class SourceTest2_File {

public static void main(String[] args) throws Exception {

// 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 使得任务抢占同一个线程

env.setParallelism(1);

// 从文件中获取数据输出

DataStream<String> dataStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt");

dataStream.print();

env.execute();

}

}

sensor.txt文件内容

sensor_1,1547718199,35.8

sensor_6,1547718201,15.4

sensor_7,1547718202,6.7

sensor_10,1547718205,38.1

sensor_1,1547718207,36.3

sensor_1,1547718209,32.8

sensor_1,1547718212,37.1

输出:

sensor_1,1547718199,35.8

sensor_6,1547718201,15.4

sensor_7,1547718202,6.7

sensor_10,1547718205,38.1

sensor_1,1547718207,36.3

sensor_1,1547718209,32.8

sensor_1,1547718212,37.1

从Kafka读取数据

-

pom依赖

<?xml version="1.0" encoding="UTF-8"?> <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"> <modelVersion>4.0.0</modelVersion> <groupId>org.example</groupId> <artifactId>Flink_Tutorial</artifactId> <version>1.0-SNAPSHOT</version> <properties> <maven.compiler.source>8</maven.compiler.source> <maven.compiler.target>8</maven.compiler.target> <flink.version>1.12.1</flink.version> <scala.binary.version>2.12</scala.binary.version> </properties> <dependencies> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-java</artifactId> <version>${flink.version}</version> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-streaming-scala_${scala.binary.version}</artifactId> <version>${flink.version}</version> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-clients_${scala.binary.version}</artifactId> <version>${flink.version}</version> </dependency> <!-- kafka --> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-connector-kafka_${scala.binary.version}</artifactId> <version>${flink.version}</version> </dependency> </dependencies> </project> -

启动zookeeper

$ bin/zookeeper-server-start.sh config/zookeeper.properties -

启动kafka服务

$ bin/kafka-server-start.sh config/server.properties -

启动kafka生产者

$ bin/kafka-console-producer.sh --broker-list localhost:9092 --topic sensor -

编写java代码

package apitest.source; import org.apache.flink.api.common.serialization.SimpleStringSchema; import org.apache.flink.streaming.api.datastream.DataStream; import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment; import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer; import java.util.Properties; public class SourceTest3_Kafka { public static void main(String[] args) throws Exception { // 创建执行环境 StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(); // 设置并行度1 env.setParallelism(1); Properties properties = new Properties(); properties.setProperty("bootstrap.servers", "localhost:9092"); // 下面这些次要参数 properties.setProperty("group.id", "consumer-group"); properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); properties.setProperty("auto.offset.reset", "latest"); // flink添加外部数据源 DataStream<String> dataStream = env.addSource(new FlinkKafkaConsumer<String>("sensor", new SimpleStringSchema(),properties)); // 打印输出 dataStream.print(); env.execute(); } } -

运行java代码,在Kafka生产者console中输入

$ bin/kafka-console-producer.sh --broker-list localhost:9092 --topic sensor >sensor_1,1547718199,35.8 >sensor_6,1547718201,15.4 > -

java输出

sensor_1,1547718199,35.8 sensor_6,1547718201,15.4

自定义Source

java代码:

package apitest.source;

import apitest.beans.SensorReading;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import java.util.HashMap;

import java.util.Random;

public class SourceTest4_UDF {

public static void main(String[] args) throws Exception {

// 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStream<SensorReading> dataStream = env.addSource(new MySensorSource());

dataStream.print();

env.execute();

}

// 实现自定义的SourceFunction

public static class MySensorSource implements SourceFunction<SensorReading> {

// 标示位,控制数据产生

private volatile boolean running = true;

@Override

public void run(SourceContext<SensorReading> ctx) throws Exception {

//定义一个随机数发生器

Random random = new Random();

// 设置10个传感器的初始温度

HashMap<String, Double> sensorTempMap = new HashMap<>();

for (int i = 0; i < 10; ++i) {

sensorTempMap.put("sensor_" + (i + 1), 60 + random.nextGaussian() * 20);

}

while (running) {

for (String sensorId : sensorTempMap.keySet()) {

// 在当前温度基础上随机波动

Double newTemp = sensorTempMap.get(sensorId) + random.nextGaussian();

sensorTempMap.put(sensorId, newTemp);

ctx.collect(new SensorReading(sensorId,System.currentTimeMillis(),newTemp));

}

// 控制输出频率

Thread.sleep(2000L);

}

}

@Override

public void cancel() {

this.running = false;

}

}

}

输出:

7> SensorReading{id='sensor_9', timestamp=1612091759321, temperature=83.80320976056609}

15> SensorReading{id='sensor_10', timestamp=1612091759321, temperature=68.77967856820972}

1> SensorReading{id='sensor_1', timestamp=1612091759321, temperature=45.75304941852771}

6> SensorReading{id='sensor_6', timestamp=1612091759321, temperature=71.80036477804133}

3> SensorReading{id='sensor_7', timestamp=1612091759321, temperature=55.262086521569564}

2> SensorReading{id='sensor_2', timestamp=1612091759321, temperature=64.0969570576537}

5> SensorReading{id='sensor_5', timestamp=1612091759321, temperature=51.09761352612651}

14> SensorReading{id='sensor_3', timestamp=1612091759313, temperature=32.49085393551031}

4> SensorReading{id='sensor_8', timestamp=1612091759321, temperature=64.83732456896752}

16> SensorReading{id='sensor_4', timestamp=1612091759321, temperature=88.88318538017865}

12> SensorReading{id='sensor_2', timestamp=1612091761325, temperature=65.21522804626638}

16> SensorReading{id='sensor_6', timestamp=1612091761325, temperature=70.49210870668041}

15> SensorReading{id='sensor_5', timestamp=1612091761325, temperature=50.32349231082738}

....

Transform

map、flatMap、filter通常被统一称为基本转换算子(简单转换算子)。

基本转换算子

map、flatMap、filter

java代码:

package apitest.transform;

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

public class TransformTest1_Base {

public static void main(String[] args) throws Exception {

// 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 使得任务抢占同一个线程

env.setParallelism(1);

// 从文件中获取数据输出

DataStream<String> dataStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt");

// 1. map, String => 字符串长度INT

DataStream<Integer> mapStream = dataStream.map(new MapFunction<String, Integer>() {

@Override

public Integer map(String value) throws Exception {

return value.length();

}

});

// 2. flatMap,按逗号分割字符串

DataStream<String> flatMapStream = dataStream.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String value, Collector<String> out) throws Exception {

String[] fields = value.split(",");

for(String field:fields){

out.collect(field);

}

}

});

// 3. filter,筛选"sensor_1"开头的数据

DataStream<String> filterStream = dataStream.filter(new FilterFunction<String>() {

@Override

public boolean filter(String value) throws Exception {

return value.startsWith("sensor_1");

}

});

// 打印输出

mapStream.print("map");

flatMapStream.print("flatMap");

filterStream.print("filter");

env.execute();

}

}

输出:

map> 24

flatMap> sensor_1

flatMap> 1547718199

flatMap> 35.8

filter> sensor_1,1547718199,35.8

map> 24

flatMap> sensor_6

flatMap> 1547718201

flatMap> 15.4

map> 23

flatMap> sensor_7

flatMap> 1547718202

flatMap> 6.7

map> 25

flatMap> sensor_10

flatMap> 1547718205

flatMap> 38.1

filter> sensor_10,1547718205,38.1

map> 24

flatMap> sensor_1

flatMap> 1547718207

flatMap> 36.3

filter> sensor_1,1547718207,36.3

map> 24

flatMap> sensor_1

flatMap> 1547718209

flatMap> 32.8

filter> sensor_1,1547718209,32.8

map> 24

flatMap> sensor_1

flatMap> 1547718212

flatMap> 37.1

filter> sensor_1,1547718212,37.1

聚合操作算子

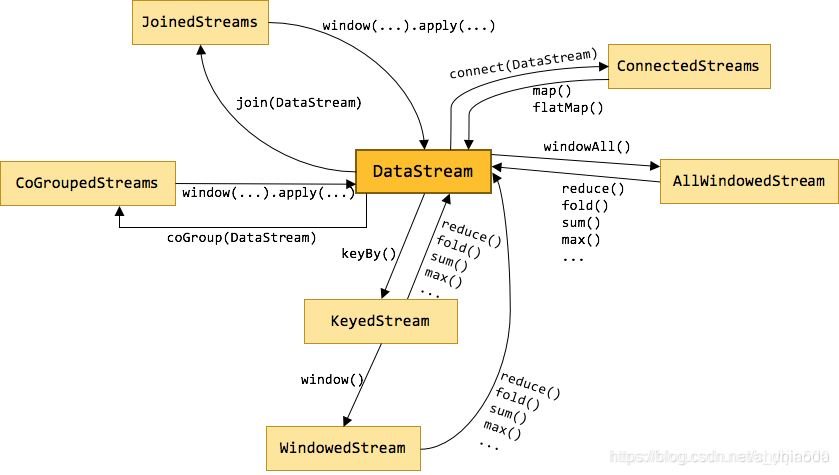

- DataStream里没有reduce和sum这类聚合操作的方法,因为Flink设计中,所有数据必须先分组才能做聚合操作。

- 先keyBy得到KeyedStream,然后调用其reduce、sum等聚合操作方法。(先分组后聚合)

常见的聚合操作算子主要有:

- keyBy

- 滚动聚合算子Rolling Aggregation

- reduce

keyBy

DataStream -> KeyedStream:逻辑地将一个流拆分成不相交的分区,每个分区包含具有相同key的元素,在内部以hash的形式实现的。

1、KeyBy会重新分区; 2、不同的key有可能分到一起,因为是通过hash原理实现的;

Rolling Aggregation

这些算子可以针对KeyedStream的每一个支流做聚合。

- sum()

- min()

- max()

- minBy()

- maxBy()

- 滚动聚合,max和maxBy区别在于,maxBy除了用于max比较的字段以外,其他字段也会更新成最新的,而max只有比较的字段更新,其他字段不变

测试maxBy的java代码一

package apitest.transform;

import apitest.beans.SensorReading;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class TransformTest2_RollingAggregation {

public static void main(String[] args) throws Exception {

// 创建 执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 执行环境并行度设置1

env.setParallelism(1);

DataStream<String> dataStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt");

// DataStream<SensorReading> sensorStream = dataStream.map(new MapFunction<String, SensorReading>() {

// @Override

// public SensorReading map(String value) throws Exception {

// String[] fields = value.split(",");

// return new SensorReading(fields[0],new Long(fields[1]),new Double(fields[2]));

// }

// });

// lambda表达式

DataStream<SensorReading> sensorStream = dataStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

// 先分组再聚合

// 分组

KeyedStream<SensorReading, Tuple> keyedStream = sensorStream.keyBy("id");

//KeyedStream<SensorReading, String> keyedStream = sensorStream.keyBy(SensorReading::getId);

// 滚动聚合,max和maxBy区别在于,maxBy除了用于max比较的字段以外,其他字段也会更新成最新的,而max只有比较的字段更新,其他字段不变

DataStream<SensorReading> resultStream = keyedStream.maxBy("temsperature");

resultStream.print("result");

env.execute();

}

}

其中sensor.txt文件内容如下

sensor_1,1547718199,35.8

sensor_6,1547718201,15.4

sensor_7,1547718202,6.7

sensor_10,1547718205,38.1

sensor_1,1547718207,36.3

sensor_1,1547718209,32.8

sensor_1,1547718212,37.1

输出如下:

由于是滚动更新,每次输出历史最大值,所以下面36.3才会出现两次

result> SensorReading{id='sensor_1', timestamp=1547718199, temperature=35.8}

result> SensorReading{id='sensor_6', timestamp=1547718201, temperature=15.4}

result> SensorReading{id='sensor_7', timestamp=1547718202, temperature=6.7}

result> SensorReading{id='sensor_10', timestamp=1547718205, temperature=38.1}

result> SensorReading{id='sensor_1', timestamp=1547718207, temperature=36.3}

result> SensorReading{id='sensor_1', timestamp=1547718207, temperature=36.3}

result> SensorReading{id='sensor_1', timestamp=1547718212, temperature=37.1}

reduce

Reduce适用于更加一般化的聚合操作场景。java中需要实现ReduceFunction函数式接口。

在前面Rolling Aggregation的前提下,对需求进行修改。获取同组历史温度最高的传感器信息,同时要求实时更新其时间戳信息。

java代码如下:

package apitest.transform;

import apitest.beans.SensorReading;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.kafka.common.metrics.stats.Max;

public class TransformTest3_Reduce {

public static void main(String[] args) throws Exception {

// 创建 执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 执行环境并行度设置1

env.setParallelism(1);

DataStream<String> dataStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt");

DataStream<SensorReading> sensorStream = dataStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

// 先分组再聚合

// 分组

KeyedStream<SensorReading, String> keyedStream = sensorStream.keyBy(SensorReading::getId);

// reduce,自定义规约函数,获取max温度的传感器信息以外,时间戳要求更新成最新的

DataStream<SensorReading> resultStream = keyedStream.reduce(

(curSensor,newSensor)->new SensorReading(curSensor.getId(),newSensor.getTimestamp(), Math.max(curSensor.getTemperature(), newSensor.getTemperature()))

);

//

keyedStream.reduce(new ReduceFunction<SensorReading>(){

@Override

public SensorReading reduce (SensorReading value1, SensorReading value2) throws Exception {

return new SensorReading(value1. getId(), value2. getTimestamp(), Math .max(value1. getTemperature(),

}

});

resultStream.print("result");

env.execute();

}

}

sensor.txt文件内容如下:

sensor_1,1547718199,35.8

sensor_6,1547718201,15.4

sensor_7,1547718202,6.7

sensor_10,1547718205,38.1

sensor_1,1547718207,36.3

sensor_1,1547718209,32.8

sensor_1,1547718212,37.1

输出如下:

和前面“Rolling Aggregation”小节不同的是,倒数第二条数据的时间戳用了当前比较时最新的时间戳。

result> SensorReading{id='sensor_1', timestamp=1547718199, temperature=35.8}

result> SensorReading{id='sensor_6', timestamp=1547718201, temperature=15.4}

result> SensorReading{id='sensor_7', timestamp=1547718202, temperature=6.7}

result> SensorReading{id='sensor_10', timestamp=1547718205, temperature=38.1}

result> SensorReading{id='sensor_1', timestamp=1547718207, temperature=36.3}

result> SensorReading{id='sensor_1', timestamp=1547718209, temperature=36.3}

result> SensorReading{id='sensor_1', timestamp=1547718212, temperature=37.1}

多流转换算子

多流转换算子一般包括:

- Split和Select (新版已经移除)

- Connect和CoMap

- Union

Connect

DataStream -> SplitStream:根据某些特征把DataStream拆分成SplitStream;

SplitStream虽然看起来像是两个Stream,但是其实它是一个特殊的Stream;

DataStream,DataStream -> ConnectedStreams: 连接两个保持他们类型的数据流,两个数据流被Connect 之后,只是被放在了一个流中,内部依然保持各自的数据和形式不发生任何变化,两个流相互独立。

DataStream,DataStream -> ConnectedStreams: 连接两个保持他们类型的数据流,两个数据流被Connect 之后,只是被放在了一个流中,内部依然保持各自的数据和形式不发生任何变化,两个流相互独立。

CoMap

ConnectedStreams -> DataStream: 作用于ConnectedStreams 上,功能与map和flatMap一样,对ConnectedStreams 中的每一个Stream分别进行map和flatMap操作;

ConnectedStreams -> DataStream: 作用于ConnectedStreams 上,功能与map和flatMap一样,对ConnectedStreams 中的每一个Stream分别进行map和flatMap操作;

虽然Flink1.12.1的DataStream有connect和map方法,但是教程基于前面的split和select编写,所以这里直接附上教程的代码:

package com.atguigu.apitest.transform;

import com.atguigu.apitest.beans.SensorReading;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.streaming.api.collector.selector.OutputSelector;

import org.apache.flink.streaming.api.datastream.ConnectedStreams;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.datastream.SplitStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.co.CoMapFunction;

import java.util.Collections;

public class TransformTest4_MultipleStreams {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// 从文件读取数据

DataStream<String> inputStream = env.readTextFile("D:\\Projects\\BigData\\FlinkTutorial\\src\\main\\resources\\sensor.txt");

// 转换成SensorReading

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

} );

// 1. 分流,按照温度值30度为界分为两条流

SplitStream<SensorReading> splitStream = dataStream.split(new OutputSelector<SensorReading>() {

@Override

public Iterable<String> select(SensorReading value) {

return (value.getTemperature() > 30) ? Collections.singletonList("high") : Collections.singletonList("low");

}

});

DataStream<SensorReading> highTempStream = splitStream.select("high");

DataStream<SensorReading> lowTempStream = splitStream.select("low");

DataStream<SensorReading> allTempStream = splitStream.select("high", "low");

// highTempStream.print("high");

// lowTempStream.print("low");

// allTempStream.print("all");

// 2. 合流 connect,将高温流转换成二元组类型,与低温流连接合并之后,输出状态信息

DataStream<Tuple2<String, Double>> warningStream = highTempStream.map(new MapFunction<SensorReading, Tuple2<String, Double>>() {

@Override

public Tuple2<String, Double> map(SensorReading value) throws Exception {

return new Tuple2<>(value.getId(), value.getTemperature());

}

});

ConnectedStreams<Tuple2<String, Double>, SensorReading> connectedStreams = warningStream.connect(lowTempStream);

DataStream<Object> resultStream = connectedStreams.map(new CoMapFunction<Tuple2<String, Double>, SensorReading, Object>() {

@Override

public Object map1(Tuple2<String, Double> value) throws Exception {

return new Tuple3<>(value.f0, value.f1, "high temp warning");

}

@Override

public Object map2(SensorReading value) throws Exception {

return new Tuple2<>(value.getId(), "normal");

}

});

resultStream.print();

}

}

输出如下:

(sensor_1,35.8,high temp warning)

(sensor_6,normal)

(sensor_10,38.1,high temp warning)

(sensor_7,normal)

(sensor_1,36.3,high temp warning)

(sensor_1,32.8,high temp warning)

(sensor_1,37.1,high temp warning)

Union

DataStream -> DataStream:对两个或者两个以上的DataStream进行Union操作,产生一个包含多有DataStream元素的新DataStream。

问题:和Connect的区别?

- Connect 的数据类型可以不同,Connect 只能合并两个流;

- Union可以合并多条流,Union的数据结构必须是一样的;

// 3. union联合多条流

// warningStream.union(lowTempStream); 这个不行,因为warningStream类型是DataStream<Tuple2<String, Double>>,而highTempStream是DataStream<SensorReading>

highTempStream.union(lowTempStream, allTempStream);

算子转换

在Storm中,我们常常用Bolt的层级关系来表示各个数据的流向关系,组成一个拓扑。

在Flink中,Transformation算子就是将一个或多个DataStream转换为新的DataStream,可以将多个转换组合成复杂的数据流拓扑。 如下图所示,DataStream会由不同的Transformation操作,转换、过滤、聚合成其他不同的流,从而完成我们的业务要求。

支持的数据类型

Flink流应用程序处理的是以数据对象表示的事件流。所以在Flink内部,我们需要能够处理这些对象。它们需要被序列化和反序列化,以便通过网络传送它们;或者从状态后端、检查点和保存点读取它们。为了有效地做到这一点,Flink需要明确知道应用程序所处理的数据类型。Flink使用类型信息的概念来表示数据类型,并为每个数据类型生成特定的序列化器、反序列化器和比较器。

Flink还具有一个类型提取系统,该系统分析函数的输入和返回类型,以自动获取类型信息,从而获得序列化器和反序列化器。但是,在某些情况下,例如lambda函数或泛型类型,需要显式地提供类型信息,才能使应用程序正常工作或提高其性能。

Flink支持Java和Scala中所有常见数据类型。使用最广泛的类型有以下几种。

基础数据类型

Flink支持所有的Java和Scala基础数据类型,Int, Double, Long, String, …

DataStream<Integer> numberStream = env.fromElements(1, 2, 3, 4);

numberStream.map(data -> data * 2);

Java和Scala元组(Tuples)

java不像Scala天生支持元组Tuple类型,java的元组类型由Flink的包提供,默认提供Tuple0~Tuple25

DataStream<Tuple2<String, Integer>> personStream = env.fromElements(

new Tuple2("Adam", 17),

new Tuple2("Sarah", 23)

);

personStream.filter(p -> p.f1 > 18);

Scala样例类(case classes)

case class Person(name:String,age:Int)

val numbers: DataStream[(String,Integer)] = env.fromElements(

Person("张三",12),

Person("李四",23)

)

Java简单对象(POJO)

java的POJO这里要求必须提供无参构造函数

- 成员变量要求都是public(或者private但是提供get、set方法)

public class Person{

public String name;

public int age;

public Person() {}

public Person( String name , int age) {

this.name = name;

this.age = age;

}

}

DataStream Pe rson > persons = env.fromElements(

new Person (" Alex", 42),

new Person (" Wendy",23)

);

其他(Arrays, Lists, Maps, Enums)

Flink对Java和Scala中的一些特殊目的的类型也都是支持的,比如Java的ArrayList,HashMap,Enum等等。

实现UDF函数——更细粒度的控制流

函数类(Function Classes)

Flink暴露了所有UDF函数的接口(实现方式为接口或者抽象类)。例如MapFunction, FilterFunction, ProcessFunction等等。

下面例子实现了FilterFunction接口:

DataStream<String> flinkTweets = tweets.filter(new FlinkFilter());

public static class FlinkFilter implements FilterFunction<String> {

@Override public boolean filter(String value) throws Exception {

return value.contains("flink");

}

}

还可以将函数实现成匿名类

DataStream<String> flinkTweets = tweets.filter(

new FilterFunction<String>() {

@Override public boolean filter(String value) throws Exception {

return value.contains("flink");

}

}

);

我们filter的字符串"flink"还可以当作参数传进去。

DataStream<String> tweets = env.readTextFile("INPUT_FILE ");

DataStream<String> flinkTweets = tweets.filter(new KeyWordFilter("flink"));

public static class KeyWordFilter implements FilterFunction<String> {

private String keyWord;

KeyWordFilter(String keyWord) {

this.keyWord = keyWord;

}

@Override public boolean filter(String value) throws Exception {

return value.contains(this.keyWord);

}

}

匿名函数(Lambda Functions)

DataStream<String> tweets = env.readTextFile("INPUT_FILE");

DataStream<String> flinkTweets = tweets.filter( tweet -> tweet.contains("flink") );

富函数(Rich Functions)

“富函数”是DataStream API提供的一个函数类的接口,所有Flink函数类都有其Rich版本。

它与常规函数的不同在于,可以获取运行环境的上下文,并拥有一些生命周期方法,所以可以实现更复杂的功能。

- RichMapFunction

- RichFlatMapFunction

- RichFilterFunction

- …

Rich Function有一个生命周期的概念。典型的生命周期方法有:

open()方法是rich function的初始化方法,当一个算子例如map或者filter被调用之前open()会被调用。close()方法是生命周期中的最后一个调用的方法,做一些清理工作。getRuntimeContext()方法提供了函数的RuntimeContext的一些信息,例如函数执行的并行度,任务的名字,以及state状态

public static class MyMapFunction extends RichMapFunction<SensorReading, Tuple2<Integer, String>> {

@Override public Tuple2<Integer, String> map(SensorReading value) throws Exception {

return new Tuple2<>(getRuntimeContext().getIndexOfThisSubtask(), value.getId());

}

@Override public void open(Configuration parameters) throws Exception {

System.out.println("my map open"); // 以下可以做一些初始化工作,例如建立一个和HDFS的连接

}

@Override public void close() throws Exception {

System.out.println("my map close"); // 以下做一些清理工作,例如断开和HDFS的连接

}

}

测试代码:

package apitest.transform;

import apitest.beans.SensorReading;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.RichMapFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class TransformTest5_RichFunction {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(4);

DataStream<String> inputStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt");

// 转换成SensorReading类型

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

DataStream<Tuple2<String, Integer>> resultStream = dataStream.map( new MyMapper() );

resultStream.print();

env.execute();

}

// 传统的Function不能获取上下文信息,只能处理当前数据,不能和其他数据交互

public static class MyMapper0 implements MapFunction<SensorReading, Tuple2<String, Integer>> {

@Override

public Tuple2<String, Integer> map(SensorReading value) throws Exception {

return new Tuple2<>(value.getId(), value.getId().length());

}

}

// 实现自定义富函数类(RichMapFunction是一个抽象类)

public static class MyMapper extends RichMapFunction<SensorReading, Tuple2<String, Integer>> {

@Override

public Tuple2<String, Integer> map(SensorReading value) throws Exception {

// RichFunction可以获取State状态

// getRuntimeContext().getState();

return new Tuple2<>(value.getId(), getRuntimeContext().getIndexOfThisSubtask());

}

@Override

public void open(Configuration parameters) throws Exception {

// 初始化工作,一般是定义状态,或者建立数据库连接

System.out.println("open");

}

@Override

public void close() throws Exception {

// 一般是关闭连接和清空状态的收尾操作

System.out.println("close");

}

}

}

输出如下:

由于设置了执行环境env的并行度为4,所以有4个slot执行自定义的RichFunction,输出4次open和close

open

open

open

open

4> (sensor_1,3)

4> (sensor_6,3)

close

2> (sensor_1,1)

2> (sensor_1,1)

close

3> (sensor_1,2)

close

1> (sensor_7,0)

1> (sensor_10,0)

close

数据重分区操作

重分区操作,在DataStream类中可以看到很多Partitioner字眼的类。

其中partitionCustom(...)方法用于自定义重分区。

java代码:

package apitest.transform;

import apitest.beans.SensorReading;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class TransformTest6_Partition {

public static void main(String[] args) throws Exception{

// 创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 设置并行度 = 4

env.setParallelism(4);

// 从文件读取数据

DataStream<String> inputStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt");

// 转换成SensorReading类型

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

// SingleOutputStreamOperator多并行度默认就rebalance,轮询方式分配

dataStream.print("input");

// 1. shuffle (并非批处理中的获取一批后才打乱,这里每次获取到直接打乱且分区)

DataStream<String> shuffleStream = inputStream.shuffle();

shuffleStream.print("shuffle");

// 2. keyBy (Hash,然后取模)

dataStream.keyBy(SensorReading::getId).print("keyBy");

// 3. global (直接发送给第一个分区,少数特殊情况才用)

dataStream.global().print("global");

env.execute();

}

}

输出:

input:3> SensorReading{id='sensor_1', timestamp=1547718199, temperature=35.8}

input:3> SensorReading{id='sensor_6', timestamp=1547718201, temperature=15.4}

input:1> SensorReading{id='sensor_1', timestamp=1547718207, temperature=36.3}

input:1> SensorReading{id='sensor_1', timestamp=1547718209, temperature=32.8}

shuffle:2> sensor_6,1547718201,15.4

shuffle:1> sensor_1,1547718199,35.8

input:4> SensorReading{id='sensor_7', timestamp=1547718202, temperature=6.7}

input:4> SensorReading{id='sensor_10', timestamp=1547718205, temperature=38.1}

shuffle:1> sensor_1,1547718207,36.3

shuffle:2> sensor_1,1547718209,32.8

global:1> SensorReading{id='sensor_1', timestamp=1547718199, temperature=35.8}

keyBy:3> SensorReading{id='sensor_1', timestamp=1547718199, temperature=35.8}

global:1> SensorReading{id='sensor_6', timestamp=1547718201, temperature=15.4}

keyBy:3> SensorReading{id='sensor_6', timestamp=1547718201, temperature=15.4}

keyBy:3> SensorReading{id='sensor_1', timestamp=1547718207, temperature=36.3}

keyBy:3> SensorReading{id='sensor_1', timestamp=1547718209, temperature=32.8}

global:1> SensorReading{id='sensor_1', timestamp=1547718207, temperature=36.3}

shuffle:1> sensor_7,1547718202,6.7

global:1> SensorReading{id='sensor_1', timestamp=1547718209, temperature=32.8}

shuffle:2> sensor_10,1547718205,38.1

input:2> SensorReading{id='sensor_1', timestamp=1547718212, temperature=37.1}

global:1> SensorReading{id='sensor_7', timestamp=1547718202, temperature=6.7}

keyBy:4> SensorReading{id='sensor_7', timestamp=1547718202, temperature=6.7}

keyBy:2> SensorReading{id='sensor_10', timestamp=1547718205, temperature=38.1}

global:1> SensorReading{id='sensor_10', timestamp=1547718205, temperature=38.1}

shuffle:1> sensor_1,1547718212,37.1

keyBy:3> SensorReading{id='sensor_1', timestamp=1547718212, temperature=37.1}

global:1> SensorReading{id='sensor_1', timestamp=1547718212, temperature=37.1}

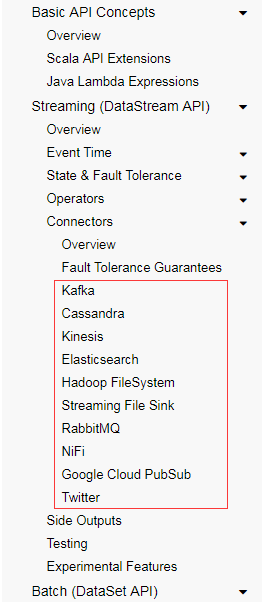

Sink

Flink没有类似于spark中foreach方法,让用户进行迭代的操作。虽有对外的输出操作都要利用Sink完成。最后通过类似如下方式完成整个任务最终输出操作。

stream.addSink(new MySink(xxxx))

官方提供了一部分的框架的sink。除此以外,需要用户自定义实现sink。

Kafka

-

pom依赖

<?xml version="1.0" encoding="UTF-8"?> <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"> <modelVersion>4.0.0</modelVersion> <groupId>org.example</groupId> <artifactId>Flink_Tutorial</artifactId> <version>1.0-SNAPSHOT</version> <properties> <maven.compiler.source>8</maven.compiler.source> <maven.compiler.target>8</maven.compiler.target> <flink.version>1.12.1</flink.version> <scala.binary.version>2.12</scala.binary.version> </properties> <dependencies> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-java</artifactId> <version>${flink.version}</version> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-streaming-scala_${scala.binary.version}</artifactId> <version>${flink.version}</version> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-clients_${scala.binary.version}</artifactId> <version>${flink.version}</version> </dependency> <!-- kafka --> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-connector-kafka_${scala.binary.version}</artifactId> <version>${flink.version}</version> </dependency> </dependencies> </project> -

编写java代码

package apitest.sink; import apitest.beans.SensorReading; import org.apache.flink.api.common.serialization.SimpleStringSchema; import org.apache.flink.streaming.api.datastream.DataStream; import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment; import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer; import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer; import java.util.Properties; public class SinkTest1_Kafka { public static void main(String[] args) throws Exception{ // 创建执行环境 StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(); // 并行度设置为1 env.setParallelism(1); Properties properties = new Properties(); properties.setProperty("bootstrap.servers", "localhost:9092"); properties.setProperty("group.id", "consumer-group"); properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); properties.setProperty("auto.offset.reset", "latest"); // 从Kafka中读取数据 DataStream<String> inputStream = env.addSource( new FlinkKafkaConsumer<String>("sensor", new SimpleStringSchema(), properties)); // 序列化从Kafka中读取的数据 DataStream<String> dataStream = inputStream.map(line -> { String[] fields = line.split(","); return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2])).toString(); }); // 将数据写入Kafka dataStream.addSink( new FlinkKafkaProducer<String>("localhost:9092", "sinktest", new SimpleStringSchema())); env.execute(); } } -

启动zookeeper

$ bin/zookeeper-server-start.sh config/zookeeper.properties -

启动kafka服务

$ bin/kafka-server-start.sh config/server.properties -

新建kafka生产者console

$ bin/kafka-console-producer.sh --broker-list localhost:9092 --topic sensor -

新建kafka消费者console

$ bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic sinktest -

运行Flink程序,在kafka生产者console输入数据,查看kafka消费者console的输出结果

输入(kafka生产者console)

>sensor_1,1547718199,35.8 >sensor_6,1547718201,15.4输出(kafka消费者console)

SensorReading{id='sensor_1', timestamp=1547718199, temperature=35.8} SensorReading{id='sensor_6', timestamp=1547718201, temperature=15.4}

这里Flink的作用相当于pipeline了。

Redis

查询Flink连接器,最简单的就是查询关键字

flink-connector-

这里将Redis当作sink的输出对象。

-

pom依赖

这个可谓相当老的依赖了,2017年的。

<!-- https://mvnrepository.com/artifact/org.apache.bahir/flink-connector-redis --> <dependency> <groupId>org.apache.bahir</groupId> <artifactId>flink-connector-redis_2.11</artifactId> <version>1.0</version> </dependency> -

编写java代码

package apitest.sink; import apitest.beans.SensorReading; import org.apache.flink.streaming.api.datastream.DataStream; import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment; import org.apache.flink.streaming.connectors.redis.RedisSink; import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig; import org.apache.flink.streaming.connectors.redis.common.mapper.RedisCommand; import org.apache.flink.streaming.connectors.redis.common.mapper.RedisCommandDescription; import org.apache.flink.streaming.connectors.redis.common.mapper.RedisMapper; public class SinkTest2_Redis { public static void main(String[] args) throws Exception { StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(); env.setParallelism(1); // 从文件读取数据 DataStream<String> inputStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt"); // 转换成SensorReading类型 DataStream<SensorReading> dataStream = inputStream.map(line -> { String[] fields = line.split(","); return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2])); }); // 定义jedis连接配置(我这里连接的是docker的redis) FlinkJedisPoolConfig config = new FlinkJedisPoolConfig.Builder() .setHost("localhost") .setPort(6379) .setPassword("123456") .setDatabase(0) .build(); dataStream.addSink(new RedisSink<>(config, new MyRedisMapper())); env.execute(); } // 自定义RedisMapper public static class MyRedisMapper implements RedisMapper<SensorReading> { // 定义保存数据到redis的命令,存成Hash表,hset sensor_temp id temperature @Override public RedisCommandDescription getCommandDescription() { return new RedisCommandDescription(RedisCommand.HSET, "sensor_temp"); } @Override public String getKeyFromData(SensorReading data) { return data.getId(); } @Override public String getValueFromData(SensorReading data) { return data.getTemperature().toString(); } } } -

启动redis服务(我这里是docker里的)

-

启动Flink程序

-

查看Redis里的数据

因为最新数据覆盖前面的,所以最后redis里呈现的是最新的数据。

localhost:0>hgetall sensor_temp 1) "sensor_1" 2) "37.1" 3) "sensor_6" 4) "15.4" 5) "sensor_7" 6) "6.7" 7) "sensor_10" 8) "38.1"

Elasticsearch

-

pom依赖

<!-- ElasticSearch7 --> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-connector-elasticsearch7_2.12</artifactId> <version>1.12.1</version> </dependency> -

编写java代码

package apitest.sink; import apitest.beans.SensorReading; import org.apache.flink.api.common.functions.RuntimeContext; import org.apache.flink.streaming.api.datastream.DataStream; import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment; import org.apache.flink.streaming.connectors.elasticsearch.ElasticsearchSinkFunction; import org.apache.flink.streaming.connectors.elasticsearch.RequestIndexer; import org.apache.flink.streaming.connectors.elasticsearch7.ElasticsearchSink; import org.apache.http.HttpHost; import org.elasticsearch.action.index.IndexRequest; import org.elasticsearch.client.Requests; import java.util.ArrayList; import java.util.HashMap; import java.util.List; public class SinkTest3_Es { public static void main(String[] args) throws Exception { StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(); env.setParallelism(1); // 从文件读取数据 DataStream<String> inputStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt"); // 转换成SensorReading类型 DataStream<SensorReading> dataStream = inputStream.map(line -> { String[] fields = line.split(","); return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2])); }); // 定义es的连接配置 List<HttpHost> httpHosts = new ArrayList<>(); httpHosts.add(new HttpHost("localhost", 9200)); dataStream.addSink( new ElasticsearchSink.Builder<SensorReading>(httpHosts, new MyEsSinkFunction()).build()); env.execute(); } // 实现自定义的ES写入操作 public static class MyEsSinkFunction implements ElasticsearchSinkFunction<SensorReading> { @Override public void process(SensorReading element, RuntimeContext ctx, RequestIndexer indexer) { // 定义写入的数据source HashMap<String, String> dataSource = new HashMap<>(); dataSource.put("id", element.getId()); dataSource.put("temp", element.getTemperature().toString()); dataSource.put("ts", element.getTimestamp().toString()); // 创建请求,作为向es发起的写入命令(ES7统一type就是_doc,不再允许指定type) IndexRequest indexRequest = Requests.indexRequest() .index("sensor") .source(dataSource); // 用index发送请求 indexer.add(indexRequest); } } } -

启动ElasticSearch(我这里是docker启动的

-

运行Flink程序,查看ElasticSearch是否新增数据

$ curl "localhost:9200/sensor/_search?pretty" { "took" : 1, "timed_out" : false, "_shards" : { "total" : 1, "successful" : 1, "skipped" : 0, "failed" : 0 }, "hits" : { "total" : { "value" : 7, "relation" : "eq" }, "max_score" : 1.0, "hits" : [ { "_index" : "sensor", "_type" : "_doc", "_id" : "jciyWXcBiXrGJa12kSQt", "_score" : 1.0, "_source" : { "temp" : "35.8", "id" : "sensor_1", "ts" : "1547718199" } }, { "_index" : "sensor", "_type" : "_doc", "_id" : "jsiyWXcBiXrGJa12kSQu", "_score" : 1.0, "_source" : { "temp" : "15.4", "id" : "sensor_6", "ts" : "1547718201" } }, { "_index" : "sensor", "_type" : "_doc", "_id" : "j8iyWXcBiXrGJa12kSQu", "_score" : 1.0, "_source" : { "temp" : "6.7", "id" : "sensor_7", "ts" : "1547718202" } }, { "_index" : "sensor", "_type" : "_doc", "_id" : "kMiyWXcBiXrGJa12kSQu", "_score" : 1.0, "_source" : { "temp" : "38.1", "id" : "sensor_10", "ts" : "1547718205" } }, { "_index" : "sensor", "_type" : "_doc", "_id" : "kciyWXcBiXrGJa12kSQu", "_score" : 1.0, "_source" : { "temp" : "36.3", "id" : "sensor_1", "ts" : "1547718207" } }, { "_index" : "sensor", "_type" : "_doc", "_id" : "ksiyWXcBiXrGJa12kSQu", "_score" : 1.0, "_source" : { "temp" : "32.8", "id" : "sensor_1", "ts" : "1547718209" } }, { "_index" : "sensor", "_type" : "_doc", "_id" : "k8iyWXcBiXrGJa12kSQu", "_score" : 1.0, "_source" : { "temp" : "37.1", "id" : "sensor_1", "ts" : "1547718212" } } ] } }

JDBC自定义sink

JDBC Connector <= 官方目前没有专门针对MySQL的,我们自己实现就好了

这里测试的是连接MySQL。

-

pom依赖(我本地docker里的mysql是8.0.19版本的)

<!-- https://mvnrepository.com/artifact/mysql/mysql-connector-java --> <dependency> <groupId>mysql</groupId> <artifactId>mysql-connector-java</artifactId> <version>8.0.19</version> </dependency> -

启动mysql服务(我本地是docker启动的)

-

新建数据库

CREATE DATABASE `flink_test` DEFAULT CHARACTER SET utf8 COLLATE utf8_general_ci; -

新建schema

CREATE TABLE `sensor_temp` ( `id` varchar(32) NOT NULL, `temp` double NOT NULL ) ENGINE=InnoDB DEFAULT CHARSET=utf8; -

编写java代码

package apitest.sink; import apitest.beans.SensorReading; import apitest.source.SourceTest4_UDF; import org.apache.flink.configuration.Configuration; import org.apache.flink.streaming.api.datastream.DataStream; import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment; import org.apache.flink.streaming.api.functions.sink.RichSinkFunction; import java.sql.Connection; import java.sql.DriverManager; import java.sql.PreparedStatement; public class SinkTest4_Jdbc { public static void main(String[] args) throws Exception { // 创建执行环境 StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(); // 设置并行度 = 1 env.setParallelism(1); // 从文件读取数据 // DataStream<String> inputStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt"); // // // 转换成SensorReading类型 // DataStream<SensorReading> dataStream = inputStream.map(line -> { // String[] fields = line.split(","); // return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2])); // }); // 使用之前编写的随机变动温度的SourceFunction来生成数据 DataStream<SensorReading> dataStream = env.addSource(new SourceTest4_UDF.MySensorSource()); dataStream.addSink(new MyJdbcSink()); env.execute(); } // 实现自定义的SinkFunction public static class MyJdbcSink extends RichSinkFunction<SensorReading> { // 声明连接和预编译语句 Connection connection = null; PreparedStatement insertStmt = null; PreparedStatement updateStmt = null; @Override public void open(Configuration parameters) throws Exception { connection = DriverManager.getConnection("jdbc:mysql://localhost:3306/flink_test?useUnicode=true&serverTimezone=Asia/Shanghai&characterEncoding=UTF-8&useSSL=false", "root", "example"); insertStmt = connection.prepareStatement("insert into sensor_temp (id, temp) values (?, ?)"); updateStmt = connection.prepareStatement("update sensor_temp set temp = ? where id = ?"); } // 每来一条数据,调用连接,执行sql @Override public void invoke(SensorReading value, Context context) throws Exception { // 直接执行更新语句,如果没有更新那么就插入 updateStmt.setDouble(1, value.getTemperature()); updateStmt.setString(2, value.getId()); updateStmt.execute(); if (updateStmt.getUpdateCount() == 0) { insertStmt.setString(1, value.getId()); insertStmt.setDouble(2, value.getTemperature()); insertStmt.execute(); } } @Override public void close() throws Exception { insertStmt.close(); updateStmt.close(); connection.close(); } } } -

运行Flink程序,查看MySQL数据(可以看到MySQL里的数据一直在变动)

mysql> SELECT * FROM sensor_temp; +-----------+--------------------+ | id | temp | +-----------+--------------------+ | sensor_3 | 20.489172407885917 | | sensor_10 | 73.01289164711463 | | sensor_4 | 43.402500895809744 | | sensor_1 | 6.894772325662007 | | sensor_2 | 101.79309911751122 | | sensor_7 | 63.070612021580324 | | sensor_8 | 63.82606628090501 | | sensor_5 | 57.67115738487047 | | sensor_6 | 50.84442627975055 | | sensor_9 | 52.58400793021675 | +-----------+--------------------+ 10 rows in set (0.00 sec) mysql> SELECT * FROM sensor_temp; +-----------+--------------------+ | id | temp | +-----------+--------------------+ | sensor_3 | 19.498209543035923 | | sensor_10 | 71.92981963197121 | | sensor_4 | 43.566017489470426 | | sensor_1 | 6.378208186786803 | | sensor_2 | 101.71010087830145 | | sensor_7 | 62.11402602179431 | | sensor_8 | 64.33196455020062 | | sensor_5 | 56.39071692662006 | | sensor_6 | 48.952784757264894 | | sensor_9 | 52.078086096436685 | +-----------+--------------------+ 10 rows in set (0.00 sec)

4409

4409

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?