2D + 3D DenseNet Code

Network Architecture

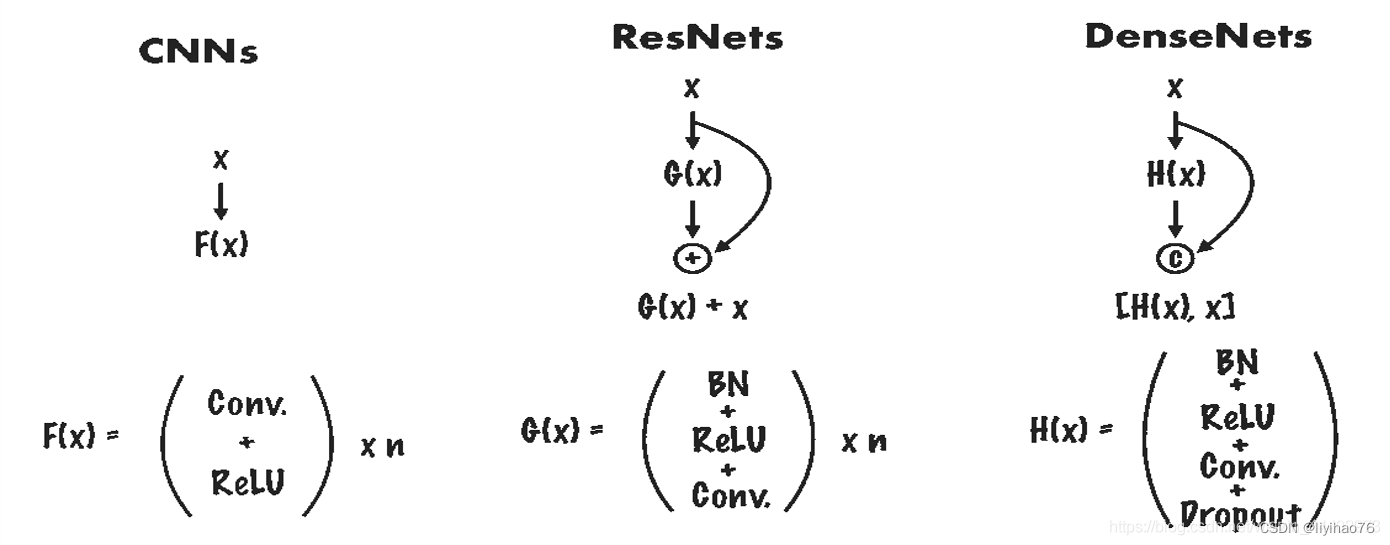

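This article covers only the code implementation of DenseNet and assumes a basic familiarity with the paper. Code references:

torchvision-densenet

monai-densenet

Analysis of the DenseNet code in torchvision

A close reading of the DenseNet code

3d-densenet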

DenseNet 2D

import re

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.utils.model_zoo as model_zoo

from collections import OrderedDict

from typing import Any, List, Optional, Tuple

class _DenseLayer(nn.Module):
    # 1. The dense layer follows the pre-activation ordering of ResNet v2: BN -> ReLU -> Conv.
    # 2. Unlike the ResNet bottleneck, only two convolutions are used (1x1, then 3x3);
    #    there is no third 1x1 conv to expand the channels back.
    #    Because the channel count inside a dense block grows with every dense layer,
    #    each layer is kept "narrow" (its output is fixed at growth_rate channels)
    #    and learns only a small number of new features.
    # 3. If the layer were as wide as a ResNet bottleneck (outputs of
    #    [256, 512, 1024, 2048] channels), the stacked tensor would become very large
    #    after only a few layers.
    def __init__(self, num_input_features: int, growth_rate: int, bn_size: int, drop_rate: float):
        # num_input_features: number of input channels.
        # growth_rate: number of new feature maps each layer adds (k in the paper),
        #     i.e. the output channels of the second convolution.
        # bn_size: multiplicative factor for the bottleneck width
        #     (the first convolution outputs bn_size * k feature maps).
        super().__init__()
        self.layers = nn.Sequential()
        # A dense layer is essentially two convolutions; pay attention to their channel counts.
        self.layers.add_module('norm1', nn.BatchNorm2d(num_input_features))
        self.layers.add_module('relu1', nn.ReLU(inplace=True))
        self.layers.add_module('conv1', nn.Conv2d(num_input_features, bn_size * growth_rate,
                                                  kernel_size=1, stride=1, bias=False))
        self.layers.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate))
        self.layers.add_module('relu2', nn.ReLU(inplace=True))
        # Note that the number of output channels is growth_rate.
        self.layers.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate,
                                                  kernel_size=3, stride=1, padding=1, bias=False))
        # Dropout probability applied to the new features at the end of each dense layer.
        self.drop_rate = drop_rate

    def forward(self, x):
        # The forward pass computes the new features and concatenates them with the layer's input.
        new_features = self.layers(x)
        if self.drop_rate > 0:
            # With dropout enabled, a fraction of the new activations is set to 0 during training;
            # the channel count is still growth_rate.
            new_features = F.dropout(new_features, p=self.drop_rate, training=self.training)
        # Finally, concatenate the new feature maps with the input along the channel dimension.
        # 1. Unlike ResNet, x does not need to be projected to a matching channel count:
        #    the 3x3 conv with stride=1 and padding=1 keeps H and W unchanged, and the tensors
        #    are stacked along the channel dimension rather than added.
        # 2. This step is also the source of the high memory use mentioned in the paper:
        #    within a block, the features produced by every layer have to be kept.
        return torch.cat([x, new_features], 1)  # concatenating on channels forms the dense connection
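The channel-wise concatenation at the end of `forward` can be checked in isolation. The following is a minimal sketch with toy sizes chosen only for illustration; a single 3x3 convolution stands in for the full BN -> ReLU -> Conv stack, which is an assumption made to keep the example short.

```python
import torch
import torch.nn as nn

# Toy sizes for illustration only (not the values used by DenseNet-121).
num_input_features, growth_rate = 16, 4

# Stand-in for the dense layer body: a 3x3 conv with stride 1 and padding 1
# keeps H and W unchanged and outputs `growth_rate` channels.
conv = nn.Conv2d(num_input_features, growth_rate,
                 kernel_size=3, stride=1, padding=1, bias=False)

x = torch.randn(2, num_input_features, 8, 8)
new_features = conv(x)
out = torch.cat([x, new_features], dim=1)  # stack along the channel dimension

print(new_features.shape)  # torch.Size([2, 4, 8, 8])
print(out.shape)           # torch.Size([2, 20, 8, 8])
```

The first 16 channels of `out` are the untouched input, which is why no projection of `x` is needed before combining.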

class _DenseBlock(nn.Sequential):
    def __init__(self, num_layers, num_input_features, bn_size, growth_rate, drop_rate):
        # num_layers: number of layers in the block.
        super().__init__()
        # The loop adds num_layers dense layers one by one. Each layer receives a different
        # num_input_features, because every dense layer increases the feature count by growth_rate.
        # Intuitively, each dense layer passes both its input and the features it has just learned
        # on to the next dense layer.
        for i in range(num_layers):
            # Within a dense block the width (channels) grows by growth_rate per layer,
            # so only num_input_features has to change.
            layer = _DenseLayer(num_input_features + i * growth_rate, growth_rate, bn_size, drop_rate)
            self.add_module('denselayer%d' % (i + 1), layer)
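The channel bookkeeping inside a dense block is simple arithmetic, so it can be sketched without PyTorch. The helper name `dense_block_channels` is hypothetical and used here only for illustration; the numbers correspond to the first block of the default configuration (6 layers, 64 input channels, growth rate 32).

```python
def dense_block_channels(num_layers, num_input_features, growth_rate):
    """Return (input channels seen by each layer, output channels of the block)."""
    layer_inputs = [num_input_features + i * growth_rate for i in range(num_layers)]
    block_output = num_input_features + num_layers * growth_rate
    return layer_inputs, block_output


layer_inputs, block_output = dense_block_channels(
    num_layers=6, num_input_features=64, growth_rate=32)
print(layer_inputs)  # [64, 96, 128, 160, 192, 224]
print(block_output)  # 256
```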

# Between dense blocks a transition layer is added. It makes the model more compact
# by reducing the number of feature maps passed on to the next block.
class _Transition(nn.Sequential):
    ''' Transition layer; it has two roles. '''
    def __init__(self, num_input_features, num_output_features):
        super().__init__()
        self.add_module('norm', nn.BatchNorm2d(num_input_features))
        self.add_module('relu', nn.ReLU(inplace=True))
        # Role 1: even though every dense layer uses a small growth_rate, the channel count
        # still keeps growing as layers are stacked. A transition layer after each dense block
        # therefore uses a 1x1 conv to bring the channels back down (typically to half the input).
        self.add_module('conv', nn.Conv2d(num_input_features, num_output_features,
                                          kernel_size=1, stride=1, bias=False))
        # Role 2: downsample the spatial resolution with average pooling.
        self.add_module('pool', nn.AvgPool2d(kernel_size=2, stride=2))
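The two roles of the transition layer translate into a simple shape rule. The sketch below assumes the halving choice `num_output_features = num_input_features // 2` and uses the standard pooling output-size formula with no padding; `transition_output_shape` is a hypothetical helper name.

```python
def transition_output_shape(channels, height, width):
    """Shape after a transition layer that halves channels and pools with kernel 2, stride 2."""
    out_channels = channels // 2       # 1x1 conv: channel reduction
    out_height = (height - 2) // 2 + 1  # AvgPool2d(kernel_size=2, stride=2)
    out_width = (width - 2) // 2 + 1
    return out_channels, out_height, out_width


print(transition_output_shape(256, 56, 56))  # (128, 28, 28)
```

With an odd spatial size the pooling rounds down, for example a 7x7 map becomes 3x3.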

class DenseNet(nn.Module):
    """
    Args:
        growth_rate (int) - how many filters to add each layer (`k` in paper)
        block_config (list of 4 ints) - how many layers in each pooling block
        num_init_features (int) - the number of filters to learn in the first convolution layer
        bn_size (int) - multiplicative factor for number of bottle neck layers
            (i.e. bn_size * k features in the bottleneck layer)
        drop_rate (float) - dropout rate after each dense layer
        num_classes (int) - number of classification classes
    """

    def __init__(
        self,
        growth_rate: int = 32,
        block_config: Tuple[int, int, int, int] = (6, 12, 24, 16),
        num_init_features: int = 64,
        bn_size: int = 4,
        drop_rate: float = 0,
        num_classes: int = 1000
    ):
        super().__init__()

        # First convolution (the stem). The image does not enter a dense block directly;
        # a large kernel with a large stride first reduces the spatial size.
        # Note the nn.Sequential / OrderedDict idiom, which gives every layer a name.
        # The layer order is conv -> bn -> relu -> pool; after the stem, height and width
        # are each 1/4 of the input, so much less data flows into the dense blocks.
        self.features = nn.Sequential(OrderedDict([
            ('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2, padding=3, bias=False)),
            ('norm0', nn.BatchNorm2d(num_init_features)),
            ('relu0', nn.ReLU(inplace=True)),
            ('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1)),
        ]))
        # BatchNorm2d is used here; whether it could be swapped for the more recent
        # GroupNorm is an open question.

        # Each denseblock: build the dense blocks.
        num_features = num_init_features
        for i, num_layers in enumerate(block_config):
            block = _DenseBlock(num_layers=num_layers, num_input_features=num_features,
                                bn_size=bn_size, growth_rate=growth_rate, drop_rate=drop_rate)
            self.features.add_module('denseblock%d' % (i + 1), block)
            num_features = num_features + num_layers * growth_rate
            # No transition layer follows the fourth (last) dense block.
            if i != len(block_config) - 1:
                # By default the transition layer halves the number of channels.
                trans = _Transition(num_input_features=num_features, num_output_features=num_features // 2)
                self.features.add_module('transition%d' % (i + 1), trans)
                num_features = num_features // 2

        # Final batch norm
        self.features.add_module("norm5", nn.BatchNorm2d(num_features))

        # Linear layer
        self.classifier = nn.Linear(num_features, num_classes)

        # Official init from torch repo.
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight)
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.constant_(m.bias, 0)

    def forward(self, x):
        features = self.features(x)
        out = F.relu(features, inplace=True)
        out = F.adaptive_avg_pool2d(out, (1, 1))
        out = torch.flatten(out, 1)
        out = self.classifier(out)
        return out
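The spatial resolution through the network follows from the standard output-size formula for convolution and pooling layers, `floor((size + 2 * padding - kernel_size) / stride) + 1`. The sketch below applies it to the stem and the three transition layers for a 224x224 input; `conv_out` and `spatial_trace` are hypothetical helper names, and the dense blocks are skipped because they keep the resolution unchanged.

```python
def conv_out(size, kernel_size, stride, padding):
    """Output size of a conv or pooling layer along one dimension (floor mode)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def spatial_trace(size, num_blocks=4):
    """Sizes after conv0, pool0, and each of the (num_blocks - 1) transition layers."""
    sizes = [size]
    size = conv_out(size, kernel_size=7, stride=2, padding=3)  # conv0
    sizes.append(size)
    size = conv_out(size, kernel_size=3, stride=2, padding=1)  # pool0
    sizes.append(size)
    for _ in range(num_blocks - 1):
        size = conv_out(size, kernel_size=2, stride=2, padding=0)  # transition pool
        sizes.append(size)
    return sizes


print(spatial_trace(224))  # [224, 112, 56, 28, 14, 7]
```

The stem alone takes 224 down to 56, which is the 1/4 reduction mentioned in the comments, and the last dense block operates on 7x7 maps before global average pooling.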

densenet121 = DenseNet(num_init_features=64, growth_rate=32, block_config=(6, 12, 24, 16))

densenet169 = DenseNet(num_init_features=64, growth_rate=32, block_config=(6, 12, 32, 32))

densenet201 = DenseNet(num_init_features=64, growth_rate=32, block_config=(6, 12, 48, 32))

densenet264 = DenseNet(num_init_features=64, growth_rate=32, block_config=(6, 12, 64, 48))
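The four variants differ only in `block_config`, so the width of the final feature vector (the input size of the classifier) can be derived with the same arithmetic the constructor uses. This is a plain-Python sketch; `final_num_features` is a hypothetical helper that mirrors the loop in `__init__`.

```python
def final_num_features(block_config, num_init_features=64, growth_rate=32):
    """Channel count after the last dense block, mirroring the constructor loop."""
    num_features = num_init_features
    for i, num_layers in enumerate(block_config):
        num_features += num_layers * growth_rate  # dense block adds k per layer
        if i != len(block_config) - 1:
            num_features //= 2                    # transition halves the channels
    return num_features


configs = {
    'densenet121': (6, 12, 24, 16),
    'densenet169': (6, 12, 32, 32),
    'densenet201': (6, 12, 48, 32),
    'densenet264': (6, 12, 64, 48),
}
for name, block_config in configs.items():
    print(name, final_num_features(block_config))
# densenet121 1024
# densenet169 1664
# densenet201 1920
# densenet264 2688
```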

Visualization

x=torch.randn(1,3,224,224)

X=densenet121(x)

print(X.shape) # torch.Size([1, 1000])

import netron

import torch.onnx

import onnx

modelData = 'demo.onnx'  # path where the exported model is saved

torch.onnx.export(densenet121, x, modelData)  # export the PyTorch model in ONNX format and save it

onnx.save(onnx.shape_inference.infer_shapes(onnx.load(modelData)), modelData)

netron.start(modelData)
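Netron gives a graphical view of the exported graph. As a text-only complement, output shapes can also be recorded with forward hooks. The sketch below is an illustration under assumptions: `trace_shapes` is a hypothetical helper, and it is demonstrated on a small toy `nn.Sequential` rather than on the full model.

```python
import torch
import torch.nn as nn


def trace_shapes(model: nn.Module, x: torch.Tensor):
    """Run one forward pass and record (name, output shape) for each direct child."""
    records = []
    hooks = []
    for name, child in model.named_children():
        # Bind `name` through a default argument so each hook keeps its own label.
        def hook(_module, _inputs, output, name=name):
            records.append((name, tuple(output.shape)))
        hooks.append(child.register_forward_hook(hook))
    try:
        with torch.no_grad():
            model(x)
    finally:
        for h in hooks:
            h.remove()  # always detach the hooks again
    return records


# Toy stem with the same kernel/stride/padding pattern as conv0 and pool0.
toy = nn.Sequential()
toy.add_module('conv0', nn.Conv2d(3, 8, kernel_size=7, stride=2, padding=3, bias=False))
toy.add_module('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

shapes = trace_shapes(toy, torch.randn(1, 3, 32, 32))
for name, shape in shapes:
    print(name, shape)
# conv0 (1, 8, 16, 16)
# pool0 (1, 8, 8, 8)
```

Only direct children are hooked, so for a model whose layers live in a `features` container, passing that container (for example `densenet121.features`) should list each stage separately.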

Rewritten Version

class DenseNet_2d(nn.Module):
    """
    Args:
        growth_rate (int) - how many filters to add each layer (`k` in paper)
        block_config (list of 4 ints) - how many layers in each pooling block
        num_init_features (int) - the number of filters to learn in the first convolution layer
        bn_size (int) - multiplicative factor for number of bottle neck layers
            (i.e. bn_size * k features in the bottleneck layer)
        drop_rate (float) - dropout rate after each dense layer
        num_classes (int) - number of classification classes
    """

    def __init__(
        self,
        growth_rate: int = 32,
        block_config: Tuple[int, int, int, int] = (6, 12, 24, 16),
        num_init_features: int = 64,
        bn_size: int = 4,
        drop_rate: float = 0,
        num_classes: int = 1000
    ):
        super().__init__()

        # First convolution (the stem). The image does not enter a dense block directly;
        # a large kernel with a large stride first reduces the spatial size.
        # Note the nn.Sequential / OrderedDict idiom, which gives every layer a name.
        # The layer order is conv -> bn -> relu -> pool; after the stem, height and width
        # are each 1/4 of the input, so much less data flows into the dense blocks.
        self.Conv1 = nn.Sequential(OrderedDict([
            ('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2, padding=3, bias=False)),
            ('norm0', nn.BatchNorm2d(num_init_features)),
            ('relu0', nn.ReLU(inplace=True)),
            ('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1)),
        ]))
        # BatchNorm2d is used here; whether it could be swapped for the more recent
        # GroupNorm is an open question.

        # Each denseblock: build the dense blocks explicitly, one stage at a time.
        num_features = num_init_features

        ##############################
        self.Dense_Block_1 = _DenseBlock(num_layers=block_config[0], num_input_features=num_features,
                                         bn_size=bn_size, growth_rate=growth_rate, drop_rate=drop_rate)
        num_features1_T = num_features + block_config[0] * growth_rate
        self.Transition_Layer_1 = _Transition(num_input_features=num_features1_T,
                                              num_output_features=num_features1_T // 2)
        num_features2 = num_features1_T // 2

        ################################
        self.Dense_Block_2 = _DenseBlock(num_layers=block_config[1], num_input_features=num_features2,
                                         bn_size=bn_size, growth_rate=growth_rate, drop_rate=drop_rate)
        num_features2_T = num_features2 + block_config[1] * growth_rate
        self.Transition_Layer_2 = _Transition(num_input_features=num_features2_T,
                                              num_output_features=num_features2_T // 2)
        num_features3 = num_features2_T // 2

        ################################
        self.Dense_Block_3 = _DenseBlock(num_layers=block_config[2], num_input_features=num_features3,
                                         bn_size=bn_size, growth_rate=growth_rate, drop_rate=drop_rate)
        num_features3_T = num_features3 + block_config[2] * growth_rate
        self.Transition_Layer_3 = _Transition(num_input_features=num_features3_T,
                                              num_output_features=num_features3_T // 2)
        num_features4 = num_features3_T // 2

        ################################
        # No transition layer follows the fourth dense block.
        self.Dense_Block_4 = _DenseBlock(num_layers=block_config[3], num_input_features=num_features4,
                                         bn_size=bn_size, growth_rate=growth_rate, drop_rate=drop_rate)
        num_features5 = num_features4 + block_config[3] * growth_rate

        # Final batch norm
        self.finale_bn = nn.BatchNorm2d(num_features5)

        # The explicit stages above replace the original loop, kept here for comparison:
        # for i, num_layers in enumerate(block_config):
        #     block = _DenseBlock(num_layers=num_layers, num_input_features=num_features,
        #                         bn_size=bn_size, growth_rate=growth_rate, drop_rate=drop_rate)
        #     self.features.add_module('denseblock%d' % (i + 1), block)
        #     num_features = num_features + num_layers * growth_rate
        #     # No transition layer follows the fourth dense block.
        #     if i != len(block_config) - 1:
        #         # By default the transition layer halves the number of channels.
        #         trans = _Transition(num_input_features=num_features, num_output_features=num_features // 2)
        #         self.features.add_module('transition%d' % (i + 1), trans)
        #         num_features = num_features // 2
        # # Final batch norm
        # self.features.add_module("norm5", nn.BatchNorm2d(num_features))

        # Linear layer
        self.classifier = nn.Linear(num_features5, num_classes)

        # Official init from torch repo.
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight)
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.constant_(m.bias, 0)

    def forward(self, x):
        # features = self.features(x)
        out = self.Conv1(x)
        out = self.Dense_Block_1(out)
        out = self.Transition_Layer_1(out)
        out = self.Dense_Block_2(out)
        out = self.Transition_Layer_2(out)
        out = self.Dense_Block_3(out)
        out = self.Transition_Layer_3(out)
        out = self.Dense_Block_4(out)
        out = self.finale_bn(out)
        out = F.relu(out, inplace=True)
        out = F.adaptive_avg_pool2d(out, (1, 1))
        out = torch.flatten(out, 1)
        out = self.classifier(out)
        return out
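The source does not say why the network is rewritten with explicit stages. One thing this layout makes easy (an assumption about the motivation, not a claim from the source) is returning intermediate feature maps from `forward`. The sketch below shows the idea on a hypothetical two-stage toy model, `ToyStages`, which is not the `DenseNet_2d` class above.

```python
import torch
import torch.nn as nn


class ToyStages(nn.Module):
    """Hypothetical two-stage model used only to illustrate explicit stages."""

    def __init__(self):
        super().__init__()
        self.stage1 = nn.Conv2d(3, 8, kernel_size=3, stride=2, padding=1)
        self.stage2 = nn.Conv2d(8, 16, kernel_size=3, stride=2, padding=1)

    def forward(self, x, return_intermediates=False):
        f1 = self.stage1(x)
        f2 = self.stage2(f1)
        if return_intermediates:
            # Each stage is a named attribute, so its output is directly available.
            return f2, {'stage1': f1, 'stage2': f2}
        return f2


model = ToyStages()
out, feats = model(torch.randn(1, 3, 32, 32), return_intermediates=True)
print({name: tuple(f.shape) for name, f in feats.items()})
# {'stage1': (1, 8, 16, 16), 'stage2': (1, 16, 8, 8)}
```

The same pattern could be applied to the named blocks and transition layers of an explicit DenseNet, for example to feed multi-scale features into a decoder.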

DenseNet 3D

class _DenseLayer_3d(nn.Sequential):
    def __init__(self, num_input_features, growth_rate, bn_size, drop_rate):
        super().__init__()
        self.add_module('norm1', nn.BatchNorm3d(num_input_features))
        self.add_module('relu1', nn.ReLU(inplace=True))
        self.add_module(
            'conv1',
            nn.Conv3d(num_input_features,
                      bn_size * growth_rate,
                      kernel_size=1,
                      stride=1,
                      bias=False))
        self.add_module('norm2', nn.BatchNorm3d(bn_size * growth_rate))
        self.add_module('relu2', nn.ReLU(inplace=True))
        self.add_module(
            'conv2',
            nn.Conv3d(bn_size * growth_rate,
                      growth_rate,
                      kernel_size=3,
                      stride=1,
                      padding=1,
                      bias=False))
        self.drop_rate = drop_rate

    def forward(self, x):
        new_features = super().forward(x)
        if self.drop_rate > 0:
            new_features = F.dropout(new_features,
                                     p=self.drop_rate,
                                     training=self.training)
        return torch.cat([x, new_features], 1)
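Moving from 2D to 3D changes only the layer types, but the 3x3x3 kernel has 27 weights per input/output channel pair instead of 9. The following plain-Python sketch compares the convolution weight counts of one dense layer in both settings; `dense_layer_conv_weights` is a hypothetical helper, and batch-norm parameters are left out for brevity.

```python
def dense_layer_conv_weights(num_input_features, growth_rate=32, bn_size=4, dims=2):
    """Number of convolution weights in one dense layer (bias-free convs, BN excluded)."""
    bottleneck = bn_size * growth_rate
    conv1 = num_input_features * bottleneck       # 1x1 (or 1x1x1) kernel
    conv2 = bottleneck * growth_rate * 3 ** dims  # 3x3 (or 3x3x3) kernel
    return conv1 + conv2


print(dense_layer_conv_weights(64, dims=2))  # 45056
print(dense_layer_conv_weights(64, dims=3))  # 118784
```

The bottleneck convolution costs the same in both cases; the growth comes entirely from the second convolution.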

class _DenseBlock_3d(nn.Sequential):
    def __init__(self, num_layers, num_input_features, bn_size, growth_rate, drop_rate):
        super().__init__()
        for i in range(num_layers):
            layer = _DenseLayer_3d(num_input_features + i * growth_rate,
                                   growth_rate, bn_size, drop_rate)
            self.add_module('denselayer{}'.format(i + 1), layer)

class _Transition_3d(nn.Sequential):
    def __init__(self, num_input_features, num_output_features):
        super().__init__()
        self.add_module('norm', nn.BatchNorm3d(num_input_features))
        self.add_module('relu', nn.ReLU(inplace=True))
        self.add_module(
            'conv',
            nn.Conv3d(num_input_features,
                      num_output_features,
                      kernel_size=1,
                      stride=1,
                      bias=False))
        self.add_module('pool', nn.AvgPool3d(kernel_size=2, stride=2))

class DenseNet_3d(nn.Module):
    """Densenet-BC model class

    Args:
        growth_rate (int) - how many filters to add each layer (k in paper)
        block_config (list of 4 ints) - how many layers in each pooling block
        num_init_features (int) - the number of filters to learn in the first convolution layer
        bn_size (int) - multiplicative factor for number of bottle neck layers
            (i.e. bn_size * k features in the bottleneck layer)
        drop_rate (float) - dropout rate after each dense layer
        num_classes (int) - number of classification classes
    """

    def __init__(self,
                 growth_rate=32,
                 block_config=(6, 12, 24, 16),
                 num_init_features=64,
                 bn_size=4,
                 drop_rate=0,
                 num_classes=1000):
        super().__init__()

        # First convolution (expects a single-channel volume as input)
        self.features = nn.Sequential(OrderedDict([
            ('conv1', nn.Conv3d(1, num_init_features,
                                kernel_size=(7, 7, 7),
                                stride=(2, 2, 2),
                                padding=(3, 3, 3),
                                bias=False)),
            ('norm1', nn.BatchNorm3d(num_init_features)),
            ('relu1', nn.ReLU(inplace=True)),
            ('pool1', nn.MaxPool3d(kernel_size=3, stride=2, padding=1))]))

        # Each denseblock
        num_features = num_init_features
        for i, num_layers in enumerate(block_config):
            block = _DenseBlock_3d(num_layers=num_layers,
                                   num_input_features=num_features,
                                   bn_size=bn_size,
                                   growth_rate=growth_rate,
                                   drop_rate=drop_rate)
            self.features.add_module('denseblock{}'.format(i + 1), block)
            num_features = num_features + num_layers * growth_rate
            if i != len(block_config) - 1:
                trans = _Transition_3d(num_input_features=num_features,
                                       num_output_features=num_features // 2)
                self.features.add_module('transition{}'.format(i + 1), trans)
                num_features = num_features // 2

        # Final batch norm
        self.features.add_module('norm5', nn.BatchNorm3d(num_features))

        # Linear layer
        self.classifier = nn.Linear(num_features, num_classes)

        # Weight initialization. The original code ran a second, redundant loop before this one
        # that called the deprecated nn.init.kaiming_normal; its result was overwritten here,
        # so only this loop is kept.
        for m in self.modules():
            if isinstance(m, nn.Conv3d):
                nn.init.kaiming_normal_(m.weight,
                                        mode='fan_out',
                                        nonlinearity='relu')
            elif isinstance(m, nn.BatchNorm3d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.constant_(m.bias, 0)

    def forward(self, x):
        features = self.features(x)
        out = F.relu(features, inplace=True)
        out = F.adaptive_avg_pool3d(out, output_size=(1, 1, 1))
        out = torch.flatten(out, 1)
        out = self.classifier(out)
        return out

densenet121_3d = DenseNet_3d(num_init_features=64, growth_rate=32, block_config=(6, 12, 24, 16))

densenet169_3d = DenseNet_3d(num_init_features=64, growth_rate=32, block_config=(6, 12, 32, 32))

densenet201_3d = DenseNet_3d(num_init_features=64, growth_rate=32, block_config=(6, 12, 48, 32))

densenet264_3d = DenseNet_3d(num_init_features=64, growth_rate=32, block_config=(6, 12, 64, 48))

x=torch.randn(1,1,224,224,224)

X=densenet121_3d(x)

print(X.shape) # torch.Size([1, 1000])
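A 224x224x224 input is expensive in 3D. As a rough illustration, the sketch below estimates the size of a single activation tensor, the output of the first convolution, for batch size 1 in float32. `stem_activation_mib` is a hypothetical helper, and real memory use is considerably higher because every later layer adds its own activations.

```python
def conv_out(size, kernel_size, stride, padding):
    """Output size of a conv layer along one dimension (floor mode)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def stem_activation_mib(size, channels=64, bytes_per_element=4):
    """Size in MiB of the first conv's output for a cubic input, batch size 1."""
    after_conv = conv_out(size, kernel_size=7, stride=2, padding=3)
    elements = channels * after_conv ** 3
    return elements * bytes_per_element / 2 ** 20


print(stem_activation_mib(224))  # 343.0
print(stem_activation_mib(64))   # 8.0
```

For a quick smoke test of the 3D model, a smaller volume such as `torch.randn(1, 1, 64, 64, 64)` should therefore be far cheaper while still passing through all four dense blocks.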

import netron

import torch.onnx

import onnx

modelData = 'demo.onnx'  # path where the exported model is saved

torch.onnx.export(densenet121_3d, x, modelData)  # export the PyTorch model in ONNX format and save it

onnx.save(onnx.shape_inference.infer_shapes(onnx.load(modelData)), modelData)

netron.start(modelData)
