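This tutorial walks step by step through building a self-improving RAG (Retrieval-Augmented Generation) pipeline with LangChain and the Zhipu Qingyan (智谱清言) API. The pipeline retrieves documents, generates an answer, grades the answer for relevance and accuracy, and rewrites the question or regenerates the answer when needed.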

Prerequisites

from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import Chroma

# Source pages to index
urls = [
    "https://lilianweng.github.io/posts/2023-06-23-agent/",
    "https://lilianweng.github.io/posts/2023-03-15-prompt-engineering/",
    "https://lilianweng.github.io/posts/2023-10-25-adv-attack-llm/",
]

# Load every page, then flatten the per-URL lists into one list of documents
docs = [WebBaseLoader(url).load() for url in urls]
docs_list = [item for sublist in docs for item in sublist]

# Split into chunks of roughly 250 tokens with no overlap
text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=250, chunk_overlap=0
)
doc_splits = text_splitter.split_documents(docs_list)

from langchain_community.embeddings import ZhipuAIEmbeddings

embed = ZhipuAIEmbeddings(
    model="Embedding-3",
    api_key="your api key",
)

# Create the vector store once, then add the chunks to it in batches
vectorstore = Chroma(
    collection_name="rag-chroma",
    embedding_function=embed,
    persist_directory="./chroma_db",
)

batch_size = 10
for i in range(0, len(doc_splits), batch_size):
    # Slicing past the end of a list is safe in Python, so no bounds check is needed
    vectorstore.add_documents(doc_splits[i:i + batch_size])

retriever = vectorstore.as_retriever()
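The batching step above can be tried in isolation. The following is a minimal sketch, not part of the original pipeline: `batched` is a hypothetical helper name, and the chunk strings stand in for real document splits.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batched(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive slices of at most batch_size items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        # A slice that runs past the end is simply shorter; it never raises.
        yield list(items[start:start + batch_size])


# Stand-in data: 23 fake chunks instead of real document splits.
chunks = [f"chunk-{i}" for i in range(23)]
batches = list(batched(chunks, 10))
print([len(b) for b in batches])
```

The last batch holds the three leftover items, which is why the loop needs no explicit `min(...)` guard.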

1. Initialization and configuration

First, import the required libraries and configure access to the Zhipu Qingyan API.

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

from pydantic import BaseModel, Field

Configure the model:

llm = ChatOpenAI(
    temperature=0,
    model="GLM-4-plus",
    openai_api_key="your api key",
    openai_api_base="https://open.bigmodel.cn/api/paas/v4/"
)

2. Define the data model

We define a data model for grading the relevance of retrieved documents.

class GradeDocuments(BaseModel):
    """Binary score for relevance check on retrieved documents."""

    binary_score: str = Field(description="Documents are relevant to the question, 'yes' or 'no'")

3. Create the grader

Use the data model to create an LLM grader that returns structured output.

structured_llm_grader = llm.with_structured_output(GradeDocuments)

4. Define the grading prompt

Create a prompt template for grading document relevance.

system = """You are a grader assessing relevance of a retrieved document to a user question. \n
It does not need to be a stringent test. The goal is to filter out erroneous retrievals. \n
If the document contains keyword(s) or semantic meaning related to the user question, grade it as relevant. \n
Give a binary score 'yes' or 'no' to indicate whether the document is relevant to the question."""

grade_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "Retrieved document: \n\n {document} \n\n User question: {question}"),
    ]
)

retrieval_grader = grade_prompt | structured_llm_grader

5. Retrieve and grade documents

Use the retriever to fetch documents, then grade one of them.

question = "agent memory"

docs = retriever.invoke(question)

doc_txt = docs[1].page_content

print(retrieval_grader.invoke({"question": question, "document": doc_txt}))

Output:

binary_score='yes'
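Later steps compare the grader's `binary_score` with the exact string "yes". A model may occasionally return variants such as "Yes" or "yes.", so a small normalizing helper can make that comparison more forgiving. This is a minimal sketch under that assumption; `is_yes` is a hypothetical name and is not used elsewhere in this tutorial.

```python
def is_yes(binary_score: str) -> bool:
    """Return True when a grader score means 'yes', ignoring case, quotes and trailing punctuation."""
    cleaned = binary_score.strip().strip("'\".!").lower()
    return cleaned == "yes"


print(is_yes("yes"), is_yes(" Yes. "), is_yes("no"))
```

Anything that is not a bare "yes" after cleaning is treated as a "no", which keeps the filter conservative.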

Inspect the retrieved documents:

docs

[Document(metadata={'description': 'Building agents with LLM (large language model) as its core controller is a cool concept. Several proof-of-concepts demos, such as AutoGPT, GPT-Engineer and BabyAGI, serve as inspiring examples. The potentiality of LLM extends beyond generating well-written copies, stories, essays and programs; it can be framed as a powerful general problem solver.\nAgent System Overview In a LLM-powered autonomous agent system, LLM functions as the agent’s brain, complemented by several key components:', 'language': 'en', 'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'title': "LLM Powered Autonomous Agents | Lil'Log"}, page_content="LLM Powered Autonomous Agents | Lil'Log\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nLil'Log\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nPosts\n\n\n\n\nArchive\n\n\n\n\nSearch\n\n\n\n\nTags\n\n\n\n\nFAQ\n\n\n\n\nemojisearch.app\n\n\n\n\n\n\n\n\n\n LLM Powered Autonomous Agents\n \nDate: June 23, 2023 | Estimated Reading Time: 31 min | Author: Lilian Weng\n\n\n \n\n\nTable of Contents\n\n\n\nAgent System Overview\n\nComponent One: Planning\n\nTask Decomposition\n\nSelf-Reflection\n\n\nComponent Two: Memory\n\nTypes of Memory\n\nMaximum Inner Product Search (MIPS)\n\n\nComponent Three: Tool Use\n\nCase Studies\n\nScientific Discovery Agent\n\nGenerative Agents Simulation\n\nProof-of-Concept Examples\n\n\nChallenges\n\nCitation\n\nReferences"),

Document(metadata={'description': 'Building agents with LLM (large language model) as its core controller is a cool concept. Several proof-of-concepts demos, such as AutoGPT, GPT-Engineer and BabyAGI, serve as inspiring examples. The potentiality of LLM extends beyond generating well-written copies, stories, essays and programs; it can be framed as a powerful general problem solver.\nAgent System Overview In a LLM-powered autonomous agent system, LLM functions as the agent’s brain, complemented by several key components:', 'language': 'en', 'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'title': "LLM Powered Autonomous Agents | Lil'Log"}, page_content="LLM Powered Autonomous Agents | Lil'Log\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nLil'Log\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nPosts\n\n\n\n\nArchive\n\n\n\n\nSearch\n\n\n\n\nTags\n\n\n\n\nFAQ\n\n\n\n\nemojisearch.app\n\n\n\n\n\n\n\n\n\n LLM Powered Autonomous Agents\n \nDate: June 23, 2023 | Estimated Reading Time: 31 min | Author: Lilian Weng\n\n\n \n\n\nTable of Contents\n\n\n\nAgent System Overview\n\nComponent One: Planning\n\nTask Decomposition\n\nSelf-Reflection\n\n\nComponent Two: Memory\n\nTypes of Memory\n\nMaximum Inner Product Search (MIPS)\n\n\nComponent Three: Tool Use\n\nCase Studies\n\nScientific Discovery Agent\n\nGenerative Agents Simulation\n\nProof-of-Concept Examples\n\n\nChallenges\n\nCitation\n\nReferences"),

Document(metadata={'description': 'Building agents with LLM (large language model) as its core controller is a cool concept. Several proof-of-concepts demos, such as AutoGPT, GPT-Engineer and BabyAGI, serve as inspiring examples. The potentiality of LLM extends beyond generating well-written copies, stories, essays and programs; it can be framed as a powerful general problem solver.\nAgent System Overview In a LLM-powered autonomous agent system, LLM functions as the agent’s brain, complemented by several key components:', 'language': 'en', 'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'title': "LLM Powered Autonomous Agents | Lil'Log"}, page_content='Weng, Lilian. (Jun 2023). “LLM-powered Autonomous Agents”. Lil’Log. https://lilianweng.github.io/posts/2023-06-23-agent/.'),

Document(metadata={'description': 'Building agents with LLM (large language model) as its core controller is a cool concept. Several proof-of-concepts demos, such as AutoGPT, GPT-Engineer and BabyAGI, serve as inspiring examples. The potentiality of LLM extends beyond generating well-written copies, stories, essays and programs; it can be framed as a powerful general problem solver.\nAgent System Overview In a LLM-powered autonomous agent system, LLM functions as the agent’s brain, complemented by several key components:', 'language': 'en', 'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'title': "LLM Powered Autonomous Agents | Lil'Log"}, page_content='Weng, Lilian. (Jun 2023). “LLM-powered Autonomous Agents”. Lil’Log. https://lilianweng.github.io/posts/2023-06-23-agent/.')]

Inspect the content of a specific document:

docs[1].page_content

"LLM Powered Autonomous Agents | Lil'Log\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nLil'Log\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\n\nPosts\n\n\n\n\nArchive\n\n\n\n\nSearch\n\n\n\n\nTags\n\n\n\n\nFAQ\n\n\n\n\nemojisearch.app\n\n\n\n\n\n\n\n\n\n LLM Powered Autonomous Agents\n \nDate: June 23, 2023 | Estimated Reading Time: 31 min | Author: Lilian Weng\n\n\n \n\n\nTable of Contents\n\n\n\nAgent System Overview\n\nComponent One: Planning\n\nTask Decomposition\n\nSelf-Reflection\n\n\nComponent Two: Memory\n\nTypes of Memory\n\nMaximum Inner Product Search (MIPS)\n\n\nComponent Three: Tool Use\n\nCase Studies\n\nScientific Discovery Agent\n\nGenerative Agents Simulation\n\nProof-of-Concept Examples\n\n\nChallenges\n\nCitation\n\nReferences"

6. Generate an answer

Pull a prompt template from the LangChain hub and build the generation chain.

from langchain import hub

from langchain_core.output_parsers import StrOutputParser

# Prompt
prompt = hub.pull("rlm/rag-prompt")

# Post-processing
def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)

# Chain
rag_chain = prompt | llm | StrOutputParser()

# Run
generation = rag_chain.invoke({"context": docs, "question": question})
print(generation)

d:\soft\anaconda\envs\langchain\Lib\site-packages\langsmith\client.py:354: LangSmithMissingAPIKeyWarning: API key must be provided when using hosted LangSmith API

warnings.warn(

In a LLM-powered autonomous agent system, memory is a crucial component. It involves different types of memory and utilizes techniques like Maximum Inner Product Search (MIPS) for efficient information retrieval. This memory component complements the LLM, which acts as the agent's brain, enhancing its problem-solving capabilities.
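The `format_docs` helper is defined in this step, but the chain is invoked with the raw document list. The sketch below shows what `format_docs` produces, in case you prefer to pass plain text as the context. `FakeDocument` is a hypothetical stand-in for a LangChain document that models only `page_content`; whether formatted context changes answer quality has not been tested here.

```python
from dataclasses import dataclass


@dataclass
class FakeDocument:
    """Hypothetical stand-in for a LangChain Document; only page_content is modelled."""
    page_content: str


def format_docs(docs):
    # Join the text of each document, separated by a blank line.
    return "\n\n".join(doc.page_content for doc in docs)


sample_docs = [
    FakeDocument("Types of Memory"),
    FakeDocument("Maximum Inner Product Search (MIPS)"),
]
context = format_docs(sample_docs)
print(context)
```

With real documents, the equivalent call would be `rag_chain.invoke({"context": format_docs(docs), "question": question})`.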

7. Grade the accuracy of the generated answer

Define a new data model for checking whether the generated answer is grounded in the retrieved facts.

class GradeHallucinations(BaseModel):
    """Binary score for hallucination present in generation answer."""

    binary_score: str = Field(description="Answer is grounded in the facts, 'yes' or 'no'")

Create the grader and define its prompt template.

structured_llm_grader = llm.with_structured_output(GradeHallucinations)

system = """You are a grader assessing whether an LLM generation is grounded in / supported by a set of retrieved facts. \n
Give a binary score 'yes' or 'no'. 'Yes' means that the answer is grounded in / supported by the set of facts."""

hallucination_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "Set of facts: \n\n {documents} \n\n LLM generation: {generation}"),
    ]
)

hallucination_grader = hallucination_prompt | structured_llm_grader
hallucination_grader.invoke({"documents": docs, "generation": generation})

Output:

GradeHallucinations(binary_score='yes')

8. Grade whether the answer addresses the question

Define a new data model for checking whether the answer addresses the question.

class GradeAnswer(BaseModel):
    """Binary score to assess answer addresses question."""

    binary_score: str = Field(description="Answer addresses the question, 'yes' or 'no'")

Create the grader and define its prompt template.

structured_llm_grader = llm.with_structured_output(GradeAnswer)

system = """You are a grader assessing whether an answer addresses / resolves a question \n
Give a binary score 'yes' or 'no'. 'Yes' means that the answer resolves the question."""

answer_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        ("human", "User question: \n\n {question} \n\n LLM generation: {generation}"),
    ]
)

answer_grader = answer_prompt | structured_llm_grader
answer_grader.invoke({"question": question, "generation": generation})

Output:

GradeAnswer(binary_score='yes')

9. Rewrite the question

Define a prompt template that rewrites the question so it is better suited to vector store retrieval.

system = """You are a question re-writer that converts an input question to a better version that is optimized \n
for vectorstore retrieval. Look at the input and try to reason about the underlying semantic intent / meaning."""

re_write_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system),
        (
            "human",
            "Here is the initial question: \n\n {question} \n Formulate an improved question.",
        ),
    ]
)

question_rewriter = re_write_prompt | llm | StrOutputParser()
question_rewriter.invoke({"question": question})

'To optimize the initial question "agent memory" for vectorstore retrieval, it\'s important to clarify the intent and context. The term "agent memory" could refer to various concepts, such as memory in AI agents, memory management in software agents, or even human agents\' memory in certain contexts. \n\nImproved Question: "What is the concept and functionality of memory in AI agents?"\n\nThis version is more specific and likely to retrieve relevant information from a vectorstore by clearly indicating the focus on AI agents and the aspects of memory related to them.'
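The rewriter output above wraps the improved question in explanatory prose, and the whole string would be used as the next retrieval query. One option is to pull out just the quoted question. The following is a rough sketch that assumes the model keeps the `Improved Question: "..."` pattern seen in this one sample; `extract_improved_question` is a hypothetical helper, and the sample text is abbreviated from the output above.

```python
import re


def extract_improved_question(rewriter_output: str) -> str:
    """Return the quoted text after 'Improved Question:'; fall back to the full output."""
    match = re.search(
        r'Improved Question:\s*"([^"]+)"', rewriter_output, flags=re.IGNORECASE
    )
    if match:
        return match.group(1).strip()
    return rewriter_output.strip()


# Abbreviated copy of the rewriter output shown above.
sample = (
    'To optimize the initial question "agent memory" for vectorstore retrieval, '
    "it's important to clarify the intent and context.\n\n"
    'Improved Question: "What is the concept and functionality of memory in AI agents?"\n\n'
    "This version is more specific."
)
print(extract_improved_question(sample))
```

Because the pattern is only a guess at the model's formatting, the fallback returns the untouched output when no match is found. Tightening the system prompt so the model returns only the question is an alternative.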

10. Define the graph state

Define a typed dictionary that represents the state of the graph.

from typing import List
from typing_extensions import TypedDict

class GraphState(TypedDict):
    """
    Represents the state of our graph.

    Attributes:
        question: question
        generation: LLM generation
        documents: list of documents
    """

    question: str
    generation: str
    documents: List[str]
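Each node in the following steps returns a dictionary with only some of these keys. As far as we understand LangGraph's default behaviour for a state without reducers, the returned keys overwrite the matching keys in the state and the rest are kept. The sketch below is a simplified model of that merge, not LangGraph's actual implementation; `apply_update` is a hypothetical name.

```python
from typing import Any, Dict


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new state in which the keys present in update replace the old values."""
    return {**state, **update}


state = {"question": "agent memory", "generation": "", "documents": []}
# A retrieval-like step updates only the documents.
state = apply_update(state, {"documents": ["doc A", "doc B"]})
# A generation-like step updates only the answer.
state = apply_update(state, {"generation": "Memory is a key component."})
print(state)
```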

11. Define the node functions

Define one function per node: retrieve, generate, grade documents, and transform the query.

def retrieve(state):
    """
    Retrieve documents

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): New key added to state, documents, that contains retrieved documents
    """
    print("---RETRIEVE---")
    question = state["question"]

    documents = retriever.invoke(question)
    return {"documents": documents, "question": question}

def generate(state):
    """
    Generate answer

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): New key added to state, generation, that contains LLM generation
    """
    print("---GENERATE---")
    question = state["question"]
    documents = state["documents"]

    generation = rag_chain.invoke({"context": documents, "question": question})
    return {"documents": documents, "question": question, "generation": generation}

def grade_documents(state):
    """
    Determines whether the retrieved documents are relevant to the question.

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): Updates documents key with only filtered relevant documents
    """
    print("---CHECK DOCUMENT RELEVANCE TO QUESTION---")
    question = state["question"]
    documents = state["documents"]

    # Keep only the documents the grader marks as relevant
    filtered_docs = []
    for d in documents:
        score = retrieval_grader.invoke({"question": question, "document": d.page_content})
        grade = score.binary_score
        if grade == "yes":
            print("---GRADE: DOCUMENT RELEVANT---")
            filtered_docs.append(d)
        else:
            print("---GRADE: DOCUMENT NOT RELEVANT---")
            continue
    return {"documents": filtered_docs, "question": question}

def transform_query(state):
    """
    Transform the query to produce a better question.

    Args:
        state (dict): The current graph state

    Returns:
        state (dict): Updates question key with a re-phrased question
    """
    print("---TRANSFORM QUERY---")
    question = state["question"]
    documents = state["documents"]

    better_question = question_rewriter.invoke({"question": question})
    return {"documents": documents, "question": better_question}

12. Define the edge functions

Define the decision functions that choose the next step.

def decide_to_generate(state):
    """
    Determines whether to generate an answer, or re-generate a question.

    Args:
        state (dict): The current graph state

    Returns:
        str: Binary decision for next node to call
    """
    print("---ASSESS GRADED DOCUMENTS---")
    filtered_documents = state["documents"]

    if not filtered_documents:
        # Every document was filtered out, so rewrite the query
        print("---DECISION: ALL DOCUMENTS ARE NOT RELEVANT TO QUESTION, TRANSFORM QUERY---")
        return "transform_query"
    else:
        # At least one relevant document remains, so generate an answer
        print("---DECISION: GENERATE---")
        return "generate"

def grade_generation_v_documents_and_question(state):
    """
    Determines whether the generation is grounded in the document and answers question.

    Args:
        state (dict): The current graph state

    Returns:
        str: Decision for next node to call
    """
    print("---CHECK HALLUCINATIONS---")
    question = state["question"]
    documents = state["documents"]
    generation = state["generation"]

    score = hallucination_grader.invoke({"documents": documents, "generation": generation})
    grade = score.binary_score

    # Check grounding first
    if grade == "yes":
        print("---DECISION: GENERATION IS GROUNDED IN DOCUMENTS---")
        # Then check whether the answer addresses the question
        print("---GRADE GENERATION vs QUESTION---")
        score = answer_grader.invoke({"question": question, "generation": generation})
        grade = score.binary_score
        if grade == "yes":
            print("---DECISION: GENERATION ADDRESSES QUESTION---")
            return "useful"
        else:
            print("---DECISION: GENERATION DOES NOT ADDRESS QUESTION---")
            return "not useful"
    else:
        print("---DECISION: GENERATION IS NOT GROUNDED IN DOCUMENTS, RE-TRY---")
        return "not supported"
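The two-stage decision above can be checked without calling any model. This is a minimal sketch in which the two graders are replaced by plain callables; `route_generation` is a hypothetical name, and the lambdas stand in for the LLM graders.

```python
from typing import Callable, List

calls: List[str] = []  # records which stub graders were invoked


def route_generation(
    is_grounded: Callable[[], bool],
    addresses_question: Callable[[], bool],
) -> str:
    """Mirror the two-stage check: grounding first, then usefulness."""
    if not is_grounded():
        return "not supported"
    return "useful" if addresses_question() else "not useful"


def stub(name: str, result: bool) -> Callable[[], bool]:
    """Build a stand-in grader that logs its name and returns a fixed result."""
    def grader() -> bool:
        calls.append(name)
        return result
    return grader


print(route_generation(stub("grounded", True), stub("answer", True)))
print(route_generation(stub("grounded", True), stub("answer", False)))
calls.clear()
print(route_generation(stub("grounded", False), stub("answer", True)))
print(calls)
```

In the last call only the grounding stub runs: when the answer is not grounded, the answer grader is skipped, which saves one model call per failed generation.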

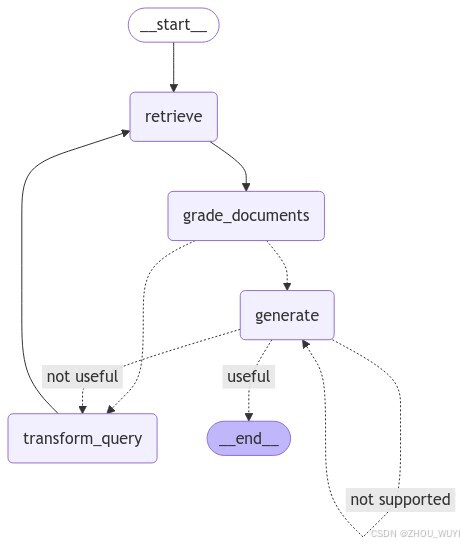

13. Build and compile the workflow

Use LangGraph to build and compile the workflow.

from langgraph.graph import END, StateGraph, START

workflow = StateGraph(GraphState)

# Define the nodes
workflow.add_node("retrieve", retrieve)
workflow.add_node("grade_documents", grade_documents)
workflow.add_node("generate", generate)
workflow.add_node("transform_query", transform_query)

# Build graph
workflow.add_edge(START, "retrieve")
workflow.add_edge("retrieve", "grade_documents")
workflow.add_conditional_edges(
    "grade_documents",
    decide_to_generate,
    {
        "transform_query": "transform_query",
        "generate": "generate",
    },
)
workflow.add_edge("transform_query", "retrieve")
workflow.add_conditional_edges(
    "generate",
    grade_generation_v_documents_and_question,
    {
        "not supported": "generate",
        "useful": END,
        "not useful": "transform_query",
    },
)

# Compile
app = workflow.compile()

14. Visualize the workflow

Try to render the workflow graph.

from IPython.display import Image, display

try:
    display(Image(app.get_graph(xray=True).draw_mermaid_png()))
except Exception:
    # Rendering needs extra dependencies and is optional
    pass

15. Run the workflow

Run the workflow and print the result of each step.

from pprint import pprint

# Run
inputs = {"question": "Explain how the different types of agent memory work?"}
for output in app.stream(inputs):
    for key, value in output.items():
        pprint(f"Node '{key}':")
    pprint("\n---\n")

# Final generation
pprint(value["generation"])

---RETRIEVE---

"Node 'retrieve':"

'\n---\n'

---CHECK DOCUMENT RELEVANCE TO QUESTION---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---ASSESS GRADED DOCUMENTS---

---DECISION: GENERATE---

"Node 'grade_documents':"

'\n---\n'

---GENERATE---

---CHECK HALLUCINATIONS---

---DECISION: GENERATION IS GROUNDED IN DOCUMENTS---

---GRADE GENERATION vs QUESTION---

---DECISION: GENERATION ADDRESSES QUESTION---

"Node 'generate':"

'\n---\n'

('The different types of agent memory in LLM-powered autonomous systems '

'include Sensory Memory, which retains sensory information briefly after '

'stimuli end, with subcategories like iconic (visual), echoic (auditory), and '

'haptic (touch) memory. These memory types enable agents to process and '

'recall sensory data, aiding in decision-making and problem-solving tasks. '

'The specific mechanisms and applications of these memories can vary based on '

"the agent's design and objectives.")
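The loop above reads `value["generation"]` from whichever node ran last. That works when the run ends at `generate`, but it would raise a `KeyError` if the last update carried no `generation` key. The following is a minimal sketch of a more defensive loop; `fake_stream` is a hypothetical stand-in for `app.stream(inputs)` that yields one `{node_name: update}` dictionary per step.

```python
def fake_stream():
    """Hypothetical stand-in for app.stream(inputs); the last step is not 'generate'."""
    yield {"retrieve": {"question": "q", "documents": ["d1"]}}
    yield {"grade_documents": {"question": "q", "documents": []}}


final_update = {}
visited = []
for output in fake_stream():
    for node_name, update in output.items():
        visited.append(node_name)
        final_update = update

# .get avoids a KeyError when the last node did not produce a generation.
answer = final_update.get("generation", "<no answer generated>")
print(visited, answer)
```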

Run another question:

inputs = {"question": "Explain how chain of thought prompting works?"}
for output in app.stream(inputs):
    for key, value in output.items():
        pprint(f"Node '{key}':")
    pprint("\n---\n")

# Final generation
pprint(value["generation"])

---RETRIEVE---

"Node 'retrieve':"

'\n---\n'

---CHECK DOCUMENT RELEVANCE TO QUESTION---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---GRADE: DOCUMENT RELEVANT---

---ASSESS GRADED DOCUMENTS---

---DECISION: GENERATE---

"Node 'grade_documents':"

'\n---\n'

---GENERATE---

---CHECK HALLUCINATIONS---

---DECISION: GENERATION IS GROUNDED IN DOCUMENTS---

---GRADE GENERATION vs QUESTION---

---DECISION: GENERATION ADDRESSES QUESTION---

"Node 'generate':"

'\n---\n'

('Chain of thought (CoT) prompting is a method in prompt engineering used to '

'guide language models (LLMs) by providing a step-by-step reasoning process '

'within the prompt. This technique helps the model to generate more coherent '

'and contextually appropriate responses by following the logical sequence '

'outlined in the prompt. The effectiveness of CoT prompting can vary among '

'different models, necessitating experimentation and heuristics to optimize '

'its use.')

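With the steps above, we have built a self-improving RAG pipeline that retrieves documents for a question, generates an answer, grades the answer for relevance and accuracy, and rewrites the question or regenerates the answer when necessary.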

Caveats:

A question that is completely unrelated to the indexed documents sends the workflow into an endless loop:

inputs = {"question": "中文字数"}
for output in app.stream(inputs):
    for key, value in output.items():
        pprint(f"Node '{key}':")
    pprint("\n---\n")

# Final generation
pprint(value["generation"])

---RETRIEVE---

"Node 'retrieve':"

'\n---\n'

---CHECK DOCUMENT RELEVANCE TO QUESTION---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---ASSESS GRADED DOCUMENTS---

---DECISION: ALL DOCUMENTS ARE NOT RELEVANT TO QUESTION, TRANSFORM QUERY---

"Node 'grade_documents':"

'\n---\n'

---TRANSFORM QUERY---

"Node 'transform_query':"

'\n---\n'

---RETRIEVE---

"Node 'retrieve':"

'\n---\n'

---CHECK DOCUMENT RELEVANCE TO QUESTION---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---ASSESS GRADED DOCUMENTS---

---DECISION: ALL DOCUMENTS ARE NOT RELEVANT TO QUESTION, TRANSFORM QUERY---

"Node 'grade_documents':"

'\n---\n'

---TRANSFORM QUERY---

---------------------------------------------------------------------------

KeyboardInterrupt

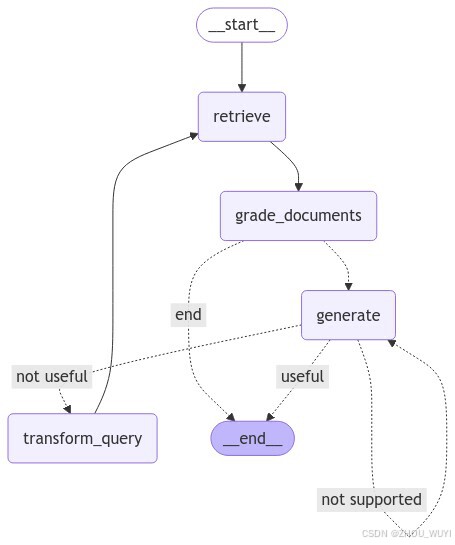

Fix: if the first retrieval returns nothing relevant from the vector store, end the run, as shown in the figure.

The code:

def decide_to_generate(state):
    """
    Determines whether to generate an answer, or end the run.

    Args:
        state (dict): The current graph state

    Returns:
        str: Binary decision for next node to call
    """
    print("---ASSESS GRADED DOCUMENTS---")
    filtered_documents = state["documents"]

    if not filtered_documents:
        # Every document was filtered out by the relevance check.
        # End the run instead of rewriting the query; the message text
        # is unchanged from the earlier version.
        print(
            "---DECISION: ALL DOCUMENTS ARE NOT RELEVANT TO QUESTION, TRANSFORM QUERY---"
        )
        return "end"
    else:
        # We have relevant documents, so generate answer
        print("---DECISION: GENERATE---")
        return "generate"

from langgraph.graph import END, StateGraph, START

workflow = StateGraph(GraphState)

# Define the nodes
workflow.add_node("retrieve", retrieve)  # retrieve
workflow.add_node("grade_documents", grade_documents)  # grade documents
workflow.add_node("generate", generate)  # generate
workflow.add_node("transform_query", transform_query)  # transform_query

# Build graph
workflow.add_edge(START, "retrieve")
workflow.add_edge("retrieve", "grade_documents")
workflow.add_conditional_edges(
    "grade_documents",
    decide_to_generate,
    {
        "end": END,
        "generate": "generate",
    },
)
workflow.add_edge("transform_query", "retrieve")
workflow.add_conditional_edges(
    "generate",
    grade_generation_v_documents_and_question,
    {
        "not supported": "generate",
        "useful": END,
        "not useful": "transform_query",
    },
)

# Compile
app = workflow.compile()

from IPython.display import Image, display

try:
    display(Image(app.get_graph(xray=True).draw_mermaid_png()))
except Exception:
    # This requires some extra dependencies and is optional
    pass

inputs = {"question": "中文字数"}
for output in app.stream(inputs):
    for key, value in output.items():
        # Node
        pprint(f"Node '{key}':")
        # Optional: print full state at each node
        # pprint.pprint(value["keys"], indent=2, width=80, depth=None)
    pprint("\n---\n")

---RETRIEVE---

"Node 'retrieve':"

'\n---\n'

---CHECK DOCUMENT RELEVANCE TO QUESTION---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---GRADE: DOCUMENT NOT RELEVANT---

---ASSESS GRADED DOCUMENTS---

---DECISION: ALL DOCUMENTS ARE NOT RELEVANT TO QUESTION, TRANSFORM QUERY---

"Node 'grade_documents':"

'\n---\n'
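Ending the run at the first failed retrieval removes the query rewrite for that branch entirely. A middle ground, sketched below, is to allow a bounded number of rewrites before giving up. This is an illustration rather than the approach used above: the `rewrites` state key, `MAX_REWRITES`, `decide_with_retry_cap` and `count_rewrite` are all hypothetical, and using them in the graph would mean adding `rewrites` to `GraphState`, returning the counter from `transform_query`, and mapping all three decisions in the conditional edge.

```python
MAX_REWRITES = 2  # assumed limit; tune for your use case


def decide_with_retry_cap(state: dict) -> str:
    """Route to 'generate', 'transform_query' or 'end' from the documents and rewrite count."""
    if state["documents"]:
        return "generate"
    if state.get("rewrites", 0) < MAX_REWRITES:
        return "transform_query"
    return "end"


def count_rewrite(state: dict) -> dict:
    """State update a transform_query node could return to record one more rewrite."""
    return {"rewrites": state.get("rewrites", 0) + 1}


# Simulate a question for which retrieval never finds relevant documents.
state = {"question": "中文字数", "documents": [], "rewrites": 0}
decisions = []
while True:
    decision = decide_with_retry_cap(state)
    decisions.append(decision)
    if decision != "transform_query":
        break
    state.update(count_rewrite(state))
print(decisions)
```

The simulated run rewrites the query twice and then ends. LangGraph also accepts a `recursion_limit` entry in the run config, which aborts a run after a fixed number of steps and can serve as a coarser safety net.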

Reference: https://langchain-ai.github.io/langgraph/tutorials/rag/langgraph_self_rag/#setup

If you have any questions, feel free to ask in the comments.
