一、单目标定

1.1 标定原理

对于单目的相机,存在投影关系:

P

u

v

=

1

s

K

⋅

[

R

c

w

∣

T

c

w

]

⋅

P

w

即

[

x

y

]

=

1

s

K

⋅

[

r

1

r

2

r

3

t

]

⋅

[

X

Y

Z

1

]

P_{uv}=\frac 1 {s} K\cdot[R_{cw}\space|\space T_{cw}]\cdot P_w\\~\\即\quad \begin{bmatrix} x\\y\end{bmatrix} = \frac 1 {s} K\cdot \begin{bmatrix}r_1\space \space r_2\space\space r_3 \space\space t\end{bmatrix}\cdot \begin{bmatrix} X\\Y\\Z\\1\end{bmatrix}

Puv=s1K⋅[Rcw ∣ Tcw]⋅Pw 即[xy]=s1K⋅[r1 r2 r3 t]⋅⎣⎢⎢⎡XYZ1⎦⎥⎥⎤

其中

P

u

v

P_{uv}

Puv是像素坐标,

R

c

w

R_{cw}

Rcw 和

T

c

w

T_{cw}

Tcw分别是从世界坐标到相机坐标的变换矩阵,

P

w

P_w

Pw是世界坐标系下的点,

K

K

K为相机内参。

标定主要就是求解相机的内参矩阵

K

K

K以及畸变矩阵(径向畸变

k

k

k以及切向畸变

p

p

p),消除畸变的公式:

[

x

p

y

p

]

=

(

1

+

k

1

r

2

+

k

2

r

4

+

k

3

)

[

x

d

y

d

]

+

[

2

p

1

x

d

y

d

+

p

2

(

r

2

+

2

x

d

2

)

p

1

(

r

2

+

2

y

d

2

)

+

2

p

2

x

d

y

d

]

\begin{bmatrix} x_p\\y_p\end{bmatrix}= (1+k_1r^2+k_2r^4+k_3)\begin{bmatrix} x_d\\y_d\end{bmatrix} +\begin{bmatrix} 2p_1x_dy_d+p_2(r^2+2x_d^2)\\p_1(r^2+2y_d^2)+2p_2x_dy_d\end{bmatrix}

[xpyp]=(1+k1r2+k2r4+k3)[xdyd]+[2p1xdyd+p2(r2+2xd2)p1(r2+2yd2)+2p2xdyd]

1.2 标定方法

一般来说,我们利用Matlab(这个比较简单,直接用就行)和OpenCV来对相机进行标定;

首先我们要保存世界坐标系下棋盘阵的3D点

P

w

P_w

Pw(objectPoints),以及检测到的点阵在图像中的像素坐标

P

u

v

P_{uv}

Puv(imagePoints)。

Tips:一般来说,我们会把 P w P_w Pw的Z坐标令为0,那么就可以变为

[

x

y

]

=

1

s

K

⋅

[

r

1

r

2

r

3

t

]

⋅

[

X

Y

0

1

]

=

1

s

K

⋅

[

r

1

r

2

t

]

⋅

[

X

Y

1

]

\begin{bmatrix} x\\y\end{bmatrix} = \frac 1 {s} K\cdot \begin{bmatrix}r_1\space \space r_2\space\space r_3 \space\space t\end{bmatrix}\cdot \begin{bmatrix} X\\Y\\0\\1\end{bmatrix}=\frac 1 {s} K\cdot \begin{bmatrix}r_1\space \space r_2\space\space t\end{bmatrix}\cdot \begin{bmatrix} X\\Y\\1\end{bmatrix}

[xy]=s1K⋅[r1 r2 r3 t]⋅⎣⎢⎢⎡XY01⎦⎥⎥⎤=s1K⋅[r1 r2 t]⋅⎣⎡XY1⎦⎤

就可以利用此关系来进行求解。

其次,利用函数:

findChessboardCorners( InputArray image,

Size patternSize,

OutputArray corners,

int flags = CALIB_CB_ADAPTIVE_THRESH + CALIB_CB_NORMALIZE_IMAGE );

初步找到imagePoints(corners),但是精度较差,这时候就需要另一个函数对位置进行精确的定位:

寻找亚像素角点:

cornerSubPix( InputArray image,

//findChessboardCorners输出的corners作为输入,之后又作为输出,(精确后的2D点坐标)

InputOutputArray corners,

Size winSize, //一般设置为cv::Size(5, 5)

Size zeroZone, //一般设置为空,即cv::Size(-1, -1)

TermCriteria criteria );

上述的 TermCriteria criteria 是专门用于设置迭代条件的,主要是迭代次数和迭代终止条件,例如:

TermCriteria termCriteria;

termCriteria = TermCriteria(TermCriteria::MAX_ITER +TermCriteria::EPS,

30, //maxCount

0.1); //epsilon

将得到的corners保存到 imagePoints 中。

最后利用函数自动求解 K , d i s t C o e f f s K,distCoeffs K,distCoeffs 以及相机外参 R , T R,T R,T :

double calibrateCamera( InputArrayOfArrays objectPoints,

InputArrayOfArrays imagePoints,

Size imageSize,

InputOutputArray cameraMatrix,

InputOutputArray distCoeffs,

OutputArrayOfArrays rvecs,

OutputArrayOfArrays tvecs,

int flags = 0,

TermCriteria criteria = TermCriteria(

TermCriteria::COUNT + TermCriteria::EPS,

30,

DBL_EPSILON) );

这里要注意的是distCoeffs:

来自官方的解释:

如果你输入的矩阵维度是4,那输出的参数就是 [ k 1 k 2 p 1 p 2 ] [k_1 \space k_2 \space p_1 \space p_2] [k1 k2 p1 p2];

如果你输入的矩阵维度是5,那输出的参数就是 [ k 1 k 2 p 1 p 2 k 3 ] [k_1 \space k_2 \space p_1 \space p_2 \space k_3] [k1 k2 p1 p2 k3];

如果你输入的矩阵维度是8,那输出的参数就是 [ k 1 k 2 p 1 p 2 k 3 k 4 k 5 k 6 ] [k_1 \space k_2 \space p_1 \space p_2\space k_3\space k_4 \space k_5\space k_6] [k1 k2 p1 p2 k3 k4 k5 k6];

1.3 去畸变

如果需要矫正畸变图像,这里有两种方法:

1.首先利用函数initUndistortRectifyMap计算矫正映射 map1 map2,这里需要输入newCameraMatrix,你也可以利用原相机矩阵cameraMatrix,这样得到的图像的相机矩阵还是cameraMatrix,只是相比原图像去除了畸变;输入R 矩阵在单目中一般可以设置为cv::noArray(),在双目中就需要输入相对应的矫正矩阵

R

1

R_1

R1或者

R

2

R_2

R2;

再利用函数remap进行图像映射,就完成了畸变矫正;

void initUndistortRectifyMap( InputArray cameraMatrix,

InputArray distCoeffs,

InputArray R,

InputArray newCameraMatrix,

Size size,

int m1type, //CV_16SC2 或者 CV_32FC1 或者 CV_32FC2

OutputArray map1, OutputArray map2);

void remap( InputArray src,

OutputArray dst,

InputArray map1, InputArray map2,

int interpolation, int borderMode = BORDER_CONSTANT,

const Scalar& borderValue = Scalar());

2.第二种方法就直接利用函数 undistort,输入cameraMatrix,distCoeffs,和 newCameraMatrix即可:

void undistort( InputArray src,

OutputArray dst,

InputArray cameraMatrix,

InputArray distCoeffs,

InputArray newCameraMatrix = noArray() );

实际上undistort函数在内部调用了 initUndistortRectifyMap remap函数,更方便一些。

1.4 代码

CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project(calibrationCamera)

set(CMAKE_BUILD_TYPE "Release")

set(CMAKE_INCLUDE_CURRENT_DIR ON)

find_package(OpenCV REQUIRED)

INCLUDE_DIRECTORIES(${OpenCV_INCLUDE_DIR})

add_executable(${PROJECT_NAME} "main.cpp")

target_link_libraries(${PROJECT_NAME} ${OpenCV_LIBS})

mian.cpp

#include <iostream>

#include <fstream>

#include <vector>

#include <string>

#include "opencv2/core.hpp"

#include "opencv2/highgui.hpp"

#include "opencv2/calib3d.hpp"

#include "opencv2/imgproc.hpp"

using namespace std;

using namespace cv;

int main(int argc,char**argv)

{

float squareSize = 1.0; //the length or height of the per broad, unit[mm]

if(argc<4)

{

cout<<"./calibrationCamera [imagepath] [width-1] [height-1] [squareSize = 1.0]\n";

cout<<"输入 [图像路径] [棋盘宽格数-1] [棋盘横格数-1] [每个格子的边长/mm{如果不知道格子长度可以不用输入,默认1.0}]\n";

return -1;

}

if(argc==5)

squareSize = atof(argv[4]);

vector<string> files;

glob(argv[1],files);

cv::Size boardSize(atoi(argv[2]),atoi(argv[3]));

//saver

std::vector<std::vector<cv::Point3f>> objectPoints;

std::vector<std::vector<cv::Point2f>> imagePoints;

Mat cameraMatrix; //Camera intrinsic Matrix

Mat distCoeffs; //five parameters of distCoeffs,(k1,k2,p1,p2[,k3[,k4,k5,k6]])

std::vector<cv::Mat> rvecs, tvecs;

cv::Size imageSize;

vector<Point2f> imageCorners;

vector<Point3f> objectCorners;

//get the Corners' position

for (int i = 0; i < boardSize.height; i++)

{

for (int j = 0; j < boardSize.width; j++)

{

objectCorners.push_back(cv::Point3f(i*squareSize, j*squareSize, 0.0f));

}

}

for(size_t i =0;i<files.size();i++)

{

Mat image = imread(files[i]);

Mat gray;

if(image.empty())

continue;

cvtColor(image,gray,COLOR_BGR2GRAY);

//get Chessboard Corners

bool found = cv::findChessboardCorners(gray, boardSize,imageCorners);

if(found)

{

TermCriteria termCriteria;

termCriteria = TermCriteria(TermCriteria::MAX_ITER +TermCriteria::EPS,

30, //maxCount

0.1); //epsilon

cornerSubPix(gray,

imageCorners,

cv::Size(5, 5), // winSize

cv::Size(-1, -1),

termCriteria); // epsilon

if(imageCorners.size() == boardSize.area())

{

imagePoints.push_back(imageCorners);

objectPoints.push_back(objectCorners);

}

cv::drawChessboardCorners(image, boardSize, imageCorners, found);

imshow("Corners on Chessboard", image);

waitKey(100);

}

imageSize = image.size();

}

cv::destroyAllWindows();

cout<<imageSize<<endl;

calibrateCamera(objectPoints, // 三维点

imagePoints, // 图像点

imageSize, // 图像尺寸

cameraMatrix, // 输出相机矩阵

distCoeffs, // 输出畸变矩阵

rvecs, tvecs // Rs、Ts(外参)

);

cout<<"K = \n"<<cameraMatrix<<endl;

cout<<"distCoeffs = \n"<<distCoeffs<<endl<<endl;

//clear the old .yaml file

string path = string(argv[1])+"/calib.yaml";

ofstream fs(path);

fs.clear();

fs << "# ------camera Intrinsic--------"<<endl;

fs << "Camera.fx: " << cameraMatrix.at<double>(0,0)<<endl;

fs << "Camera.fy: " << cameraMatrix.at<double>(1,1)<<endl;

fs << "Camera.cx: " << cameraMatrix.at<double>(0,2)<<endl;

fs << "Camera.cy: " << cameraMatrix.at<double>(1,2)<<endl<<endl;

fs << "# ------camera Distortion--------"<<endl;

fs << "Camera.k1: " << distCoeffs.at<double>(0,0)<<endl;

fs << "Camera.k2: " << distCoeffs.at<double>(0,1)<<endl;

fs << "Camera.p1: " << distCoeffs.at<double>(0,2)<<endl;

fs << "Camera.p2: " << distCoeffs.at<double>(0,3)<<endl;

fs << "Camera.k3: " << distCoeffs.at<double>(0,4)<<endl<<endl;

fs.close();

cv::FileStorage f(path,cv::FileStorage::APPEND);

f.writeComment(" \n------camera Intrinsic saved by yaml data--------",true);

f<<"camera Matrix K"<<cameraMatrix;

f.writeComment(" \n------camera Distortion saved by yaml data--------",true);

f<<"camera Distortion"<<distCoeffs;

f.release();

//Mat newCameraMatrix = getOptimalNewCameraMatrix(cameraMatrix,distCoeffs,imageSize,0);//you can use 0 to test the images

//cout<<"after undistort newCameraMatrix = \n"<<newCameraMatrix<<endl<<endl;

for(size_t i=0;i<files.size();i++)

{

Mat outputfile;

Mat src = imread(files[i]);

if(src.empty())

continue;

cv::undistort(src,outputfile,cameraMatrix,distCoeffs,cameraMatrix);

//imwrite("../image_/"+to_string(i)+".jpg",outputfile);

Mat srcAnddst(src.rows,src.cols + outputfile.cols,src.type());

Mat submat =srcAnddst.colRange(0,src.cols);

src.copyTo(submat);

submat = srcAnddst.colRange(src.cols,outputfile.cols*2);

outputfile.copyTo(submat);

imshow("sources and undistort",srcAnddst);

waitKey(300);

}

cv::destroyAllWindows();

std::string commad("gedit "+path);

system(commad.c_str());

cout<<"The last config yaml file is : "<<path<<endl;

return 0;

}

编译以后输入参数的方法:

./calibrationCamera [imagepath] [width-1] [height-1] [squareSize = 1.0]

二、双目标定

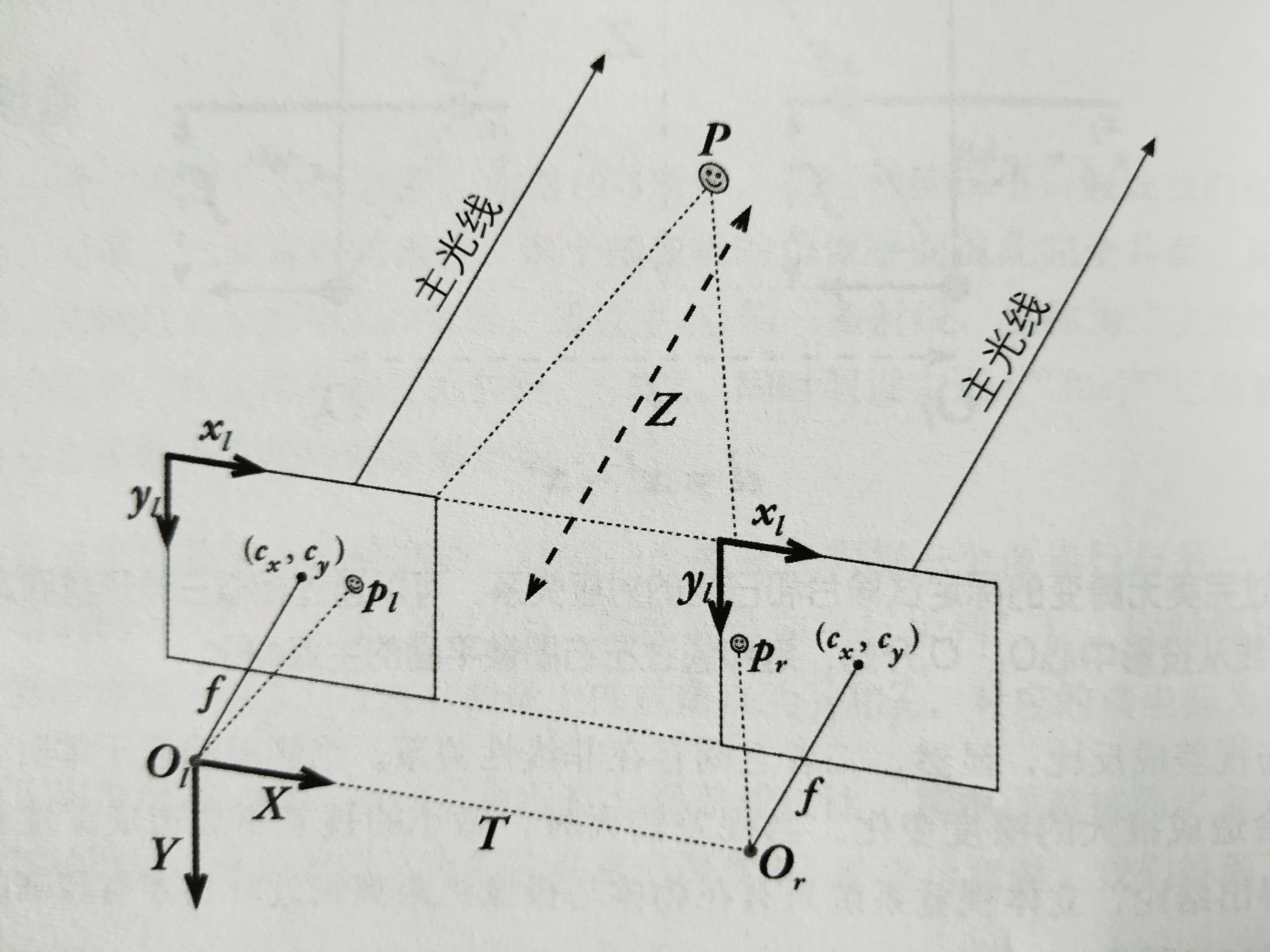

2.1 标定原理

对于左右相机分别存在以下投影关系:

P

u

v

l

=

1

s

l

K

l

⋅

[

R

l

w

∣

T

l

w

]

⋅

P

w

P

u

v

r

=

1

s

r

K

r

⋅

[

R

r

w

∣

T

r

w

]

⋅

P

w

P_{uvl}=\frac 1 {s_l} K_l\cdot[R_{lw}\space|\space T_{lw}]\cdot P_w\\~\\ P_{uvr}=\frac 1 {s_r} K_r\cdot[R_{rw}\space|\space T_{rw}]\cdot P_w

Puvl=sl1Kl⋅[Rlw ∣ Tlw]⋅Pw Puvr=sr1Kr⋅[Rrw ∣ Trw]⋅Pw

左右相机坐标系下的点

P

l

P_l

Pl和

P

r

P_r

Pr存在关系:

P

l

=

R

l

w

P

w

+

T

l

w

P

r

=

R

r

w

P

w

+

T

r

w

P_l = R_{lw}P_w+T_{lw}\\~\\ P_r = R_{rw}P_w+T_{rw}

Pl=RlwPw+Tlw Pr=RrwPw+Trw

如果分别标定左右相机求解出外参

P

l

w

T

l

w

P_{lw} T_{lw}

PlwTlw和

P

r

w

T

r

w

P_{rw}T_{rw}

PrwTrw,那我们可以求出从左相机到右相机的变换矩阵:

{

R

r

l

=

R

r

w

⋅

R

l

w

T

T

r

l

=

T

r

w

−

R

r

l

⋅

T

l

w

\left \{ \begin{aligned} R_{rl} &= R_{rw}\cdot R_{lw}^T\\~\\ T_{rl} &= T_{rw} - R_{rl}\cdot T_{lw} \end{aligned} \right.

⎩⎪⎨⎪⎧Rrl Trl=Rrw⋅RlwT=Trw−Rrl⋅Tlw

我们需要标定的就是

R

r

l

R_{rl}

Rrl 和

T

r

l

T_{rl}

Trl,当然如果需要对图片进行矫正,畸变矫正的参数也很重要。

下面来推导本质矩阵

E

E

E和基础矩阵

F

F

F:

对于相机来说

P

u

v

l

=

1

s

l

K

l

⋅

P

l

P

u

v

r

=

1

s

r

K

r

⋅

P

r

P_{uvl}=\frac 1 {s_l} K_l\cdot P_{l}\\~\\ P_{uvr}=\frac 1 {s_r} K_r\cdot P_{r}

Puvl=sl1Kl⋅Pl Puvr=sr1Kr⋅Pr

所以本质矩阵可以被描述为:

P

u

v

l

T

⋅

K

l

−

T

⋅

E

⋅

K

r

−

1

⋅

P

u

v

r

=

0

P_{uvl}^T\cdot K_l^{-T}\cdot E \cdot K_r^{-1}\cdot P_{uvr} = 0

PuvlT⋅Kl−T⋅E⋅Kr−1⋅Puvr=0

其中基础矩阵F:

F

=

K

l

−

T

⋅

E

⋅

K

r

−

1

F=K_l^{-T}\cdot E \cdot K_r^{-1}

F=Kl−T⋅E⋅Kr−1

从左相机到右相机的像素映射为:

P

u

v

l

T

⋅

F

⋅

P

u

v

r

=

0

P_{uvl}^T\cdot F\cdot P_{uvr} = 0

PuvlT⋅F⋅Puvr=0

其中需要标定得到的参数就可以得到:

K

l

,

K

r

,

d

i

s

t

C

o

e

f

f

s

L

,

d

i

s

t

C

o

e

f

f

s

R

,

R

T

E

,

F

K_l,\quad K_r,\quad distCoeffsL,\quad distCoeffsR,\quad R \quad T\quad E,\quad F

Kl,Kr,distCoeffsL,distCoeffsR,RTE,F

2.2 标定方法

有了单目标定的基础,双目标定就不再叙述单目标定的内容;

我们继续利用单目标定的方法可以标定得到

K

l

,

K

r

,

d

i

s

t

C

o

e

f

f

s

L

,

d

i

s

t

C

o

e

f

f

s

R

K_l,\quad K_r,\quad distCoeffsL,\quad distCoeffsR

Kl,Kr,distCoeffsL,distCoeffsR

另外我们还求出了世界坐标系下棋盘阵的3D点

P

w

P_w

Pw(objectPoints),左相机像素坐标

P

u

v

l

P_{uvl}

Puvl(imagePointsLeft)和左相机像素坐标

P

u

v

r

P_{uvr}

Puvr(imagePointsRight);

那么我们可以利用函数stereoCalibrate进行标定:

double stereoCalibrate( InputArrayOfArrays objectPoints,

InputArrayOfArrays imagePoints1, InputArrayOfArrays imagePoints2,

InputOutputArray cameraMatrix1, InputOutputArray distCoeffs1,

InputOutputArray cameraMatrix2, InputOutputArray distCoeffs2,

Size imageSize,

OutputArray R,OutputArray T, OutputArray E, OutputArray F,

int flags = CALIB_FIX_INTRINSIC,

TermCriteria criteria = TermCriteria(TermCriteria::COUNT+TermCriteria::EPS, 30, 1e-6) );

Tips:这里对flags标签进行解释:

1.如果flags设置为 CALIB_FIX_INTRINSIC ,那么就使用你输入的相机矩阵(固定),一般选择这个是单目标定好以后的相机矩阵;

2.如果flags设置为 CALIB_USE_INTRINSIC_GUESS ,那么你输入的矩阵就是被用作进一步优化的初始值,并通过函数 stereoCalibrate 返回值 设为改进值,简单说就是输入的这个相机矩阵被优化后输出;

3.如果flags设置为 CALIB_NINTRINSIC,那么内联函数 stereoCalibrate 从头开始计算两个相机的相机矩阵和畸变矩阵。所以,你也可以不用每个相机先标定一遍,直接使用 stereoCalibrate 完成一次性的标定(内参、外参、立体参数)。

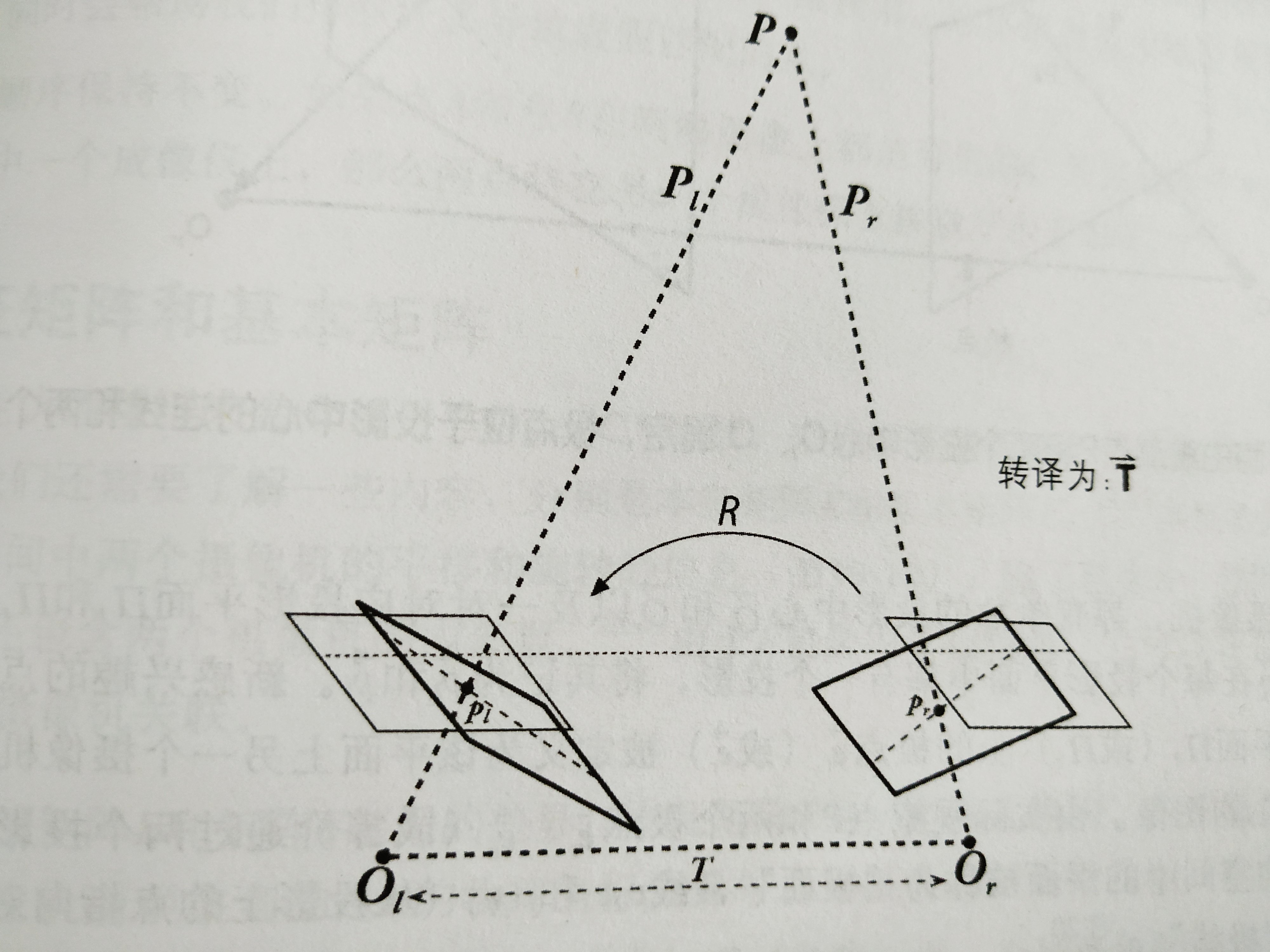

2.3 双目矫正

双目矫正是双目标定中非常重要的一步;

理想无畸变标定摄像机立体坐标系:两个平面行平行,摄像机坐标系是以左摄像机的投影中心为原点

所以双目矫正,就是把消除畸变后的两幅图像严格地行对应,使得两幅图像的对极线恰好在同一水平线上,这样一幅图像上任意一点与其在另一幅图像上的对应点就必然具有相同的行号,只需在该行进行一维搜索即可匹配到对应点。

双目矫正的示意图如下:

粗线描绘的是未矫正的像平面,细线描绘的是矫正后的像平面,使得两个像平面行对齐;我们 R 1 R_1 R1和 R 2 R_2 R2分别是左右相机未矫正像平面坐标系到矫正后像平面坐标系的变换矩阵 R r e c t , L e f t = ( R 1 ) R_{rect,Left} = (R_1) Rrect,Left=(R1)和 R r e c t , R i g h t ( = R 2 ) R_{rect,Right}(=R_2) Rrect,Right(=R2);

存在关系:

R

r

l

=

R

r

e

c

t

,

R

i

g

h

t

−

1

⋅

R

r

e

c

t

,

L

e

f

t

=

R

2

−

1

⋅

R

1

R_{rl} = R_{rect,Right}^{-1}\cdot R_{rect,Left} = R_{2}^{-1}\cdot R_{1}

Rrl=Rrect,Right−1⋅Rrect,Left=R2−1⋅R1

而

P

1

P_1

P1和

P

2

P_2

P2分别是世界坐标系下的点到左右矫正成像坐标系的投影矩阵:

[

x

L

y

L

w

L

]

=

w

L

⋅

[

x

r

e

c

t

L

y

r

e

c

t

L

1

]

=

P

1

⋅

[

X

w

Y

w

Z

w

1

]

[

x

R

y

R

w

R

]

=

w

R

⋅

[

x

r

e

c

t

R

y

r

e

c

t

R

1

]

=

P

2

⋅

[

X

w

Y

w

Z

w

1

]

\begin{bmatrix} x_{L} \\ y_{L} \\ w_L \end{bmatrix}=w_L\cdot\begin{bmatrix} x_{rectL} \\ y_{rectL} \\ 1 \end{bmatrix} = P_1\cdot \begin{bmatrix} X_w\\Y_w\\Z_w\\1\end{bmatrix} \\ \begin{bmatrix} x_{R} \\ y_{R} \\ w_R \end{bmatrix}=w_R\cdot\begin{bmatrix} x_{rectR} \\ y_{rectR} \\ 1 \end{bmatrix} = P_2\cdot \begin{bmatrix} X_w\\Y_w\\Z_w\\1\end{bmatrix}

⎣⎡xLyLwL⎦⎤=wL⋅⎣⎡xrectLyrectL1⎦⎤=P1⋅⎣⎢⎢⎡XwYwZw1⎦⎥⎥⎤⎣⎡xRyRwR⎦⎤=wR⋅⎣⎡xrectRyrectR1⎦⎤=P2⋅⎣⎢⎢⎡XwYwZw1⎦⎥⎥⎤

其中w是比例因子,我们只需要把计算的

x

x

x 和

y

y

y除以

w

R

w_R

wR就可以得到矫正成像平面下的像素坐标

(

x

r

e

c

t

,

y

r

e

c

t

)

;

(x_{rect},y_{rect});

(xrect,yrect);

我们可以直接利用得到R1,R2,P1,P2以及重投影矩阵Q(2维重新投影到3维):

Q

[

x

y

d

1

]

=

[

X

Y

Z

W

]

Q\begin{bmatrix}x\\y\\d\\1 \end{bmatrix}=\begin{bmatrix}X\\Y\\Z\\W \end{bmatrix}

Q⎣⎢⎢⎡xyd1⎦⎥⎥⎤=⎣⎢⎢⎡XYZW⎦⎥⎥⎤

总结概括的说:

矫正以后的像素坐标(矫正成像像素坐标系下)

{

P

u

v

l

′

=

1

s

K

l

⋅

R

r

e

c

t

,

L

e

f

t

⋅

[

R

l

w

∣

T

l

w

]

⋅

P

w

,

R

r

e

c

t

,

L

e

f

t

=

R

1

P

u

v

r

′

=

1

s

K

r

⋅

R

r

e

c

t

,

R

i

g

h

t

⋅

[

R

r

w

∣

T

r

w

]

⋅

P

w

,

R

r

e

c

t

,

R

i

g

h

t

=

R

2

\left \{ \begin{aligned} P_{uvl}'&=\frac 1 {s} K_l\cdot R_{rect,Left} \cdot [R_{lw}\space|\space T_{lw}]\cdot P_w,\quad R_{rect,Left}=R_1\\~\\ P_{uvr}'&=\frac 1 {s} K_r\cdot R_{rect,Right}\cdot [R_{rw}\space|\space T_{rw}]\cdot P_w,\quad R_{rect,Right}=R_2 \end{aligned} \right.

⎩⎪⎪⎪⎪⎨⎪⎪⎪⎪⎧Puvl′ Puvr′=s1Kl⋅Rrect,Left⋅[Rlw ∣ Tlw]⋅Pw,Rrect,Left=R1=s1Kr⋅Rrect,Right⋅[Rrw ∣ Trw]⋅Pw,Rrect,Right=R2

void stereoRectify( InputArray cameraMatrix1, InputArray distCoeffs1,

InputArray cameraMatrix2, InputArray distCoeffs2,

Size imageSize,

InputArray R, InputArray T,

OutputArray R1, OutputArray R2,

OutputArray P1, OutputArray P2,

OutputArray Q,

int flags = CALIB_ZERO_DISPARITY,

double alpha = -1, Size newImageSize = Size(),

CV_OUT Rect* validPixROI1 = 0, CV_OUT Rect* validPixROI2 = 0 );

2.4 矫正映射

有了立体矫正项,我们就可以利用函数initUndistortRectifyMap()计算映射map1,map2,然后利用remap()函数重新对图像进行映射

void initUndistortRectifyMap( InputArray cameraMatrix,

InputArray distCoeffs,

InputArray R,

InputArray newCameraMatrix,

Size size,

int m1type, //CV_16SC2 或者 CV_32FC1 或者 CV_32FC2

OutputArray map1, OutputArray map2);

void remap( InputArray src,

OutputArray dst,

InputArray map1, InputArray map2,

int interpolation, int borderMode = BORDER_CONSTANT,

const Scalar& borderValue = Scalar());

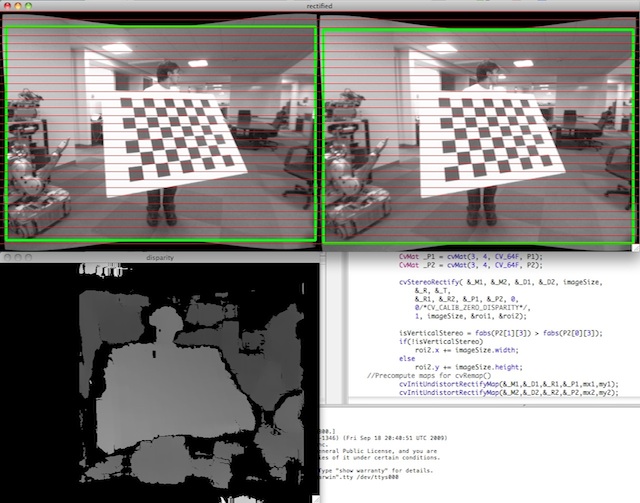

示例图:

矫正映射以后的行对齐;(图片来自OpenCV)

2.5 代码

CMakeLists:

cmake_minimum_required(VERSION 2.8)

project(StereoCalibration)

set(CMAKE_BUILD_TYPE "Release")

set(CMAKE_INCLUDE_CURRENT_DIR ON)

set(CMAKE_CXX_COMPILER "clang++")

find_package(OpenCV REQUIRED)

INCLUDE_DIRECTORIES(${OpenCV_INCLUDE_DIR})

add_executable(openCamera "openCamera.cpp")

target_link_libraries(openCamera ${OpenCV_LIBS})

add_executable(calibrate "calibrate.cpp")

target_link_libraries(calibrate ${OpenCV_LIBS})

openCamera.cpp:(用于双目图像的棋盘图像采集,按c键截图保存,q键结束)根据自己摄像头特点更改

#include <iostream>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

int main(int argc,char ** argv)

{

if(argc < 3)

{

cout<<"please use : openCamera [leftImagePath] [rightImagePath]\n";

return -1;

}

VideoCapture capture(0);

capture.set(cv::CAP_PROP_FRAME_WIDTH,1280);

capture.set(cv::CAP_PROP_FRAME_HEIGHT,480);

if(!capture.isOpened())

{

cout<<"can't open camera\n";

return -1;

}

string leftPath(argv[1]);

string rightPath(argv[2]);

int index = 0;

while(capture.isOpened())

{

Mat frame,frameRigth,frameLeft;

capture>>frame;

frameLeft = frame(cv::Rect(0,0,640,480));

frameRigth = frame(cv::Rect(640,0,640,480));

imshow("frameLeft",frameLeft);

imshow("frameRight",frameRigth);

int key = waitKey(33);

if(key == 'q')

break;

if(key == 'c')

{

index++;

imwrite(leftPath+"/"+to_string(index)+".jpg",frameLeft);

imwrite(rightPath+"/"+to_string(index)+".jpg",frameRigth);

}

}

return 0;

}

calibrate.cpp

#include <iostream>

#include <fstream>

#include <vector>

#include <string>

#include "opencv2/core.hpp"

#include "opencv2/highgui.hpp"

#include "opencv2/calib3d.hpp"

#include "opencv2/imgproc.hpp"

using namespace std;

using namespace cv;

void calibCamera(vector<string> &files, cv::Size boardSize, float squareSize,

string imagePath,

cv::Mat &cameraMatrix,

cv::Mat &distCoeffs,

std::vector<std::vector<cv::Point2f>> &imagePoints,

cv::Size &imageSize,

std::vector<std::vector<cv::Point3f>> &objectPoints,

std::vector<cv::Mat> &rvecs, std::vector<cv::Mat> &tvecs

);

//去畸变

Point2f undistortmypoints(Point2f xyd , Mat distCoeffs, Mat cameraMatrix);

int main(int argc,char**argv)

{

float squareSize = 1.0;

if(argc<5)

{

cout<<"./calibrationCamera [leftImagePath] [rightImagePath] [width-1] [height-1]\n";

cout<<"example: 如果你是10*6(宽10,高6)的棋盘,那么输入的就是 9 5\n";

return -1;

}

if(argc == 6)

{

squareSize = atof(argv[5]);

}

cv::Size boardSize(atoi(argv[3]),atoi(argv[4]));

cv::Size imageSize; //empty, get the data when running the calibCamera

vector<string> leftfiles,rightfiles;

string leftImagePath(argv[1]);

string rightImagePath(argv[2]);

glob(leftImagePath,leftfiles);

glob(rightImagePath,rightfiles);

//saver

std::vector<std::vector<cv::Point3f>> objectPoints;

std::vector<std::vector<cv::Point2f>> imagePointsleft;

std::vector<std::vector<cv::Point2f>> imagePointsRight;

//**********************************************************单目分别标定*********************************************

//----calibrate--

//left camera matrix

cv::Mat cameraMatrixLeft; //Camera intrinsic Matrix

cv::Mat distCoeffsLeft; //five parameters of distCoeffs,(k1,k2,p1,p2[,k3[,k4,k5,k6]])

std::vector<cv::Mat> rvecsLeft, tvecsLeft;

cout<<endl<<"-------------------Left Camera intrinsic Matrix--------------------\n";

calibCamera(leftfiles,boardSize,squareSize,leftImagePath,cameraMatrixLeft,distCoeffsLeft,imagePointsleft,imageSize,objectPoints,rvecsLeft,tvecsLeft);

objectPoints.clear();

//right camera matrix

cv::Mat cameraMatrixRight; //Camera intrinsic Matrix

cv::Mat distCoeffsRight; //five parameters of distCoeffs,(k1,k2,p1,p2[,k3[,k4,k5,k6]])

std::vector<cv::Mat> rvecsRight, tvecsRight;

cout<<endl<<"-------------------Right Camera intrinsic Matrix--------------------\n";

calibCamera(rightfiles,boardSize,squareSize,rightImagePath,cameraMatrixRight,distCoeffsRight,imagePointsRight,imageSize,objectPoints,rvecsRight,tvecsRight);

//************************************************对重投影进行测试********************************

//test the eqution

/*

s*x X X

s*y = K * [r1 r2 r3 t]* Y = K * [r1 r2 t]*Y

s 0 1

1

*/

//**** 验证外参Rve 和 tve的准确性 ****

// 默认选择 0 1,这里只选择一个点来进行测试,当然,你也可以多试几个

int k=0,j=1;//k要小于图片的数量,j要小于棋盘纸 高X宽

Mat Rrw,Rlw;

cv::Rodrigues(rvecsRight[k],Rrw);

cv::Rodrigues(rvecsLeft[k],Rlw);

Mat po = Mat::zeros(3,3,CV_64F);

po.at<double>(0,0) = Rrw.at<double>(0,0);

po.at<double>(1,0) = Rrw.at<double>(1,0);

po.at<double>(2,0) = Rrw.at<double>(2,0);

po.at<double>(0,1) = Rrw.at<double>(0,1);

po.at<double>(1,1) = Rrw.at<double>(1,1);

po.at<double>(2,1) = Rrw.at<double>(2,1);

po.at<double>(0,2) = tvecsLeft[k].at<double>(0,0);

po.at<double>(1,2) = tvecsLeft[k].at<double>(0,1);

po.at<double>(2,2) = tvecsLeft[k].at<double>(0,2);

Mat obj(3,1,CV_64F);

obj.at<double>(0,0) = objectPoints[k][j].x;

obj.at<double>(1,0) = objectPoints[k][j].y;

obj.at<double>(2,0) = 1;

Mat uv = cameraMatrixLeft*po*obj;

Point2f xyd = imagePointsleft[k][j];

//对该点进行畸变矫正

Point2f xyp = undistortmypoints(xyd,distCoeffsLeft,cameraMatrixLeft);

cout<<"Test the outer parameters(请查看下面两个数据的差距,理论上是一样的)"<<endl;

cout<<(uv/uv.at<double>(2,0)).t()<<endl; // [x y] = [x/w y/w]

cout<<xyp<<endl;

/*

* 这里需要说明一点,如果上面两个值输入的差距越小,说明重投影的误差小,那么由公式 Rrl = Rrw*Rlw.inv()计算

* 得到的,即从第一个相机到第二个相机的变换矩阵R的准确率越高;

*/

//****************************************************************双目标定*****************************************************

cout<<endl<<"********************stereo calibrate running******************\n";

Mat R, T, E, F;

double rms = stereoCalibrate(objectPoints,

imagePointsleft, imagePointsRight,

cameraMatrixLeft, distCoeffsLeft,

cameraMatrixRight, distCoeffsRight,

imageSize, R, T, E, F,

CALIB_FIX_INTRINSIC,// CALIB_USE_INTRINSIC_GUESS CALIB_FIX_INTRINSIC CALIB_NINTRINSIC

TermCriteria(TermCriteria::COUNT+TermCriteria::EPS, 100, 1e-5) );

cout << "done with RMS error=" << rms << endl;

/*

Pl = Rlw * Pw+Tlw;

Pr = Rrw * Pw+Trw;

--Pr = Rrl * Pl + Trl

so =====>

Rrl = Rrw * Rlw^T

Trl = Trw - Rrl * Tlw

*/

//**** 验证上述公式是否成立 ****

//--多试试几组值,如果和最后得到的R差距非常大,很可能标定失败;

cout<<endl<<"Test the R(=Rrl)(与输出的R进行比较):"<<endl;

cout<<endl<<Rrw*Rlw.inv()<<endl;

//如果上面Rrw*Rlw.inv() 输出结果 与R输出差距较大,可以去除下列注释测试

//如果最后标定输出的remap()图像效果很差,也可以去除下列注释看看测试结果

//R = Rrw*Rlw.inv(); //Rrl = Rrw * Rlw^T

//T = tvecsRight[k] - R * tvecsLeft[k]; //Trl = Trw - Rrl * Tlw

Mat R1, R2, P1, P2, Q;

Rect validRoi[2];

//************************************************双目矫正*******************************************

stereoRectify(cameraMatrixLeft, distCoeffsLeft,

cameraMatrixRight, distCoeffsRight,

imageSize, R, T, R1, R2, P1, P2, Q,

CALIB_ZERO_DISPARITY, -1, imageSize, &validRoi[0], &validRoi[1]);

cout<<"R is :\n"<<R<<endl;

cout<<"T is :\n"<<T<<endl;

cout<<"R1 is :\n"<<R1<<endl;

cout<<"R2 is :\n"<<R2<<endl;

cout<<"P1 is :\n"<<P1<<endl;

cout<<"P1 is :\n"<<P2<<endl;

cout<<"Q is :\n"<<Q<<endl;

//****************************************双目畸变矫正*************************

//remap

Mat rmap[2][2];

initUndistortRectifyMap(cameraMatrixLeft, distCoeffsLeft, R1, P1, imageSize, CV_16SC2, rmap[0][0], rmap[0][1]);

initUndistortRectifyMap(cameraMatrixRight, distCoeffsRight, R2, P2, imageSize, CV_16SC2, rmap[1][0], rmap[1][1]);

for(size_t i=0;i<leftfiles.size();i++)

{

Mat il = imread(leftfiles[i],0),ilremap;

Mat ir = imread(rightfiles[i],0),irremap;

if(il.empty() || ir.empty())

continue;

remap(il, ilremap, rmap[0][0], rmap[0][1], INTER_LINEAR);

remap(ir, irremap, rmap[1][0], rmap[1][1], INTER_LINEAR);

Mat srcAnddst(ilremap.rows,ilremap.cols + irremap.cols,ilremap.type());

Mat submat =srcAnddst.colRange(0,ilremap.cols);

ilremap.copyTo(submat);

submat = srcAnddst.colRange(ilremap.cols,ilremap.cols + irremap.cols);

irremap.copyTo(submat);

cvtColor(srcAnddst,srcAnddst,COLOR_GRAY2BGR);

//draw rectified image

for (int i = 0; i < srcAnddst.rows;i+=16)

line(srcAnddst, Point(0, i), Point(srcAnddst.cols, i), Scalar(0, 255, 0), 1, 8);

imshow("remap",srcAnddst);

//imshow("ir",irremap);

waitKey(500);

}

//save the config parameters

//clear the old .yaml file

String path = "./calib.yaml";

ofstream fs(path);

fs.clear();

fs << "# ------left camera Intrinsic--------"<<endl;

fs << "Left.Camera.fx: " << cameraMatrixLeft.at<double>(0,0)<<endl;

fs << "Left.Camera.fy: " << cameraMatrixLeft.at<double>(1,1)<<endl;

fs << "Left.Camera.cx: " << cameraMatrixLeft.at<double>(0,2)<<endl;

fs << "Left.Camera.cy: " << cameraMatrixLeft.at<double>(1,2)<<endl<<endl;

fs << "# ------left camera Distortion--------"<<endl;

fs << "Left.Camera.k1: " << distCoeffsLeft.at<double>(0,0)<<endl;

fs << "Left.Camera.k2: " << distCoeffsLeft.at<double>(0,1)<<endl;

fs << "Left.Camera.p1: " << distCoeffsLeft.at<double>(0,2)<<endl;

fs << "Left.Camera.p2: " << distCoeffsLeft.at<double>(0,3)<<endl;

fs << "Left.Camera.k3: " << distCoeffsLeft.at<double>(0,4)<<endl<<endl;

fs <<endl<<endl;

fs << "# ------right camera Intrinsic--------"<<endl;

fs << "Right.Camera.fx: " << cameraMatrixRight.at<double>(0,0)<<endl;

fs << "Right.Camera.fy: " << cameraMatrixRight.at<double>(1,1)<<endl;

fs << "Right.Camera.cx: " << cameraMatrixRight.at<double>(0,2)<<endl;

fs << "Right.Camera.cy: " << cameraMatrixRight.at<double>(1,2)<<endl<<endl;

fs << "# ------right camera Distortion--------"<<endl;

fs << "Right.Camera.k1: " << distCoeffsRight.at<double>(0,0)<<endl;

fs << "Right.Camera.k2: " << distCoeffsRight.at<double>(0,1)<<endl;

fs << "Right.Camera.p1: " << distCoeffsRight.at<double>(0,2)<<endl;

fs << "Right.Camera.p2: " << distCoeffsRight.at<double>(0,3)<<endl;

fs << "Right.Camera.k3: " << distCoeffsRight.at<double>(0,4)<<endl<<endl;

fs.close();

//R, T, R1, R2, P1, P2, Q,

cv::FileStorage f(path,cv::FileStorage::APPEND);

f.writeComment(" \n------camera parameters saved by yaml data--------",true);

f<<"R"<<R;

f<<"T"<<T;

f<<"R1"<<R1;

f<<"R2"<<R2;

f<<"P1"<<P1;

f<<"P2"<<P2;

f<<"Q"<<Q;

f.release();

//查看保存的数据

system("cat ./calib.yaml");

return 0;

}

void calibCamera(vector<string> &files, cv::Size boardSize,

float squareSize,

string imagePath,

cv::Mat &cameraMatrix,

cv::Mat &distCoeffs,

std::vector<std::vector<cv::Point2f>> &imagePoints,

cv::Size &imageSize,

std::vector<std::vector<cv::Point3f>> &objectPoints,

std::vector<cv::Mat> &rvecs, std::vector<cv::Mat> &tvecs)

{

//data objectCorners

vector<Point3f> objectCorners;

vector<Point2f> imageCorners;

//get the Corners' position

for (int i = 0; i < boardSize.height; i++)

{

for (int j = 0; j < boardSize.width; j++)

{

objectCorners.push_back(cv::Point3f(squareSize*i, squareSize*j, 0.0f));

}

}

for(size_t i =0;i<files.size();i++)

{

Mat image = imread(files[i]);

Mat gray;

if(image.empty())

continue;

cvtColor(image,gray,COLOR_BGR2GRAY);

//get Chessboard Corners

bool found = cv::findChessboardCorners(gray, boardSize,imageCorners);

if(found)

{

TermCriteria termCriteria;

termCriteria = TermCriteria(TermCriteria::MAX_ITER +TermCriteria::EPS,

50, //maxCount

0.1); //epsilon

cornerSubPix(gray,

imageCorners,

cv::Size(5, 5), // winSize

cv::Size(-1, -1),

termCriteria); // epsilon

imagePoints.push_back(imageCorners);

objectPoints.push_back(objectCorners);

cv::drawChessboardCorners(image, boardSize, imageCorners, found);

imshow("Corners on Chessboard", image);

waitKey(33);

}

imageSize = image.size();

}

cv::destroyAllWindows();

cout<<"图像尺寸:"<<imageSize<<endl;

double rms = calibrateCamera(objectPoints, // 三维点

imagePoints, // 图像点

imageSize, // 图像尺寸

cameraMatrix, // 输出相机矩阵

distCoeffs, // 输出畸变矩阵

rvecs, tvecs // Rs、Ts(外参)

);

cout << " RMS error=" << rms << endl;

cout<<"K = \n"<<cameraMatrix<<endl;

cout<<"distCoeffs = \n"<<distCoeffs<<endl;

}

//去畸变

Point2f undistortmypoints(Point2f xyd , Mat distCoeffs, Mat cameraMatrix)

{

double x = (xyd.x-cameraMatrix.at<double>(0,2))/cameraMatrix.at<double>(0,0);

double y = (xyd.y-cameraMatrix.at<double>(1,2))/cameraMatrix.at<double>(1,1);

double r = sqrt(x*x+y*y);

double x_distorted = (1+distCoeffs.at<double>(0,0)*r*r+distCoeffs.at<double>(0,1)*r*r*r*r+distCoeffs.at<double>(0,4)*r*r*r*r*r*r)*x

+2*distCoeffs.at<double>(0,2)*x*y+distCoeffs.at<double>(0,3)*(r*r+2*x*x);

double y_distorted = (1+distCoeffs.at<double>(0,0)*r*r+distCoeffs.at<double>(0,1)*r*r*r*r+distCoeffs.at<double>(0,4)*r*r*r*r*r*r)*y

+2*distCoeffs.at<double>(0,3)*x*y+distCoeffs.at<double>(0,2)*(r*r+2*y*y);

double xp = cameraMatrix.at<double>(0,0)*x_distorted+cameraMatrix.at<double>(0,2);

double yp = cameraMatrix.at<double>(1,1)*y_distorted+cameraMatrix.at<double>(1,2);

return Point2f(xp,yp);

}

为了进一步的学习双目的原理、测试,我的双目标定的代码冗长,大家也可以读一读,理解原理。

程序运行的方法:(输入 左相机棋盘图路径 右棋盘图路径 棋盘宽-1 棋盘高-1 格子边长(/mm))

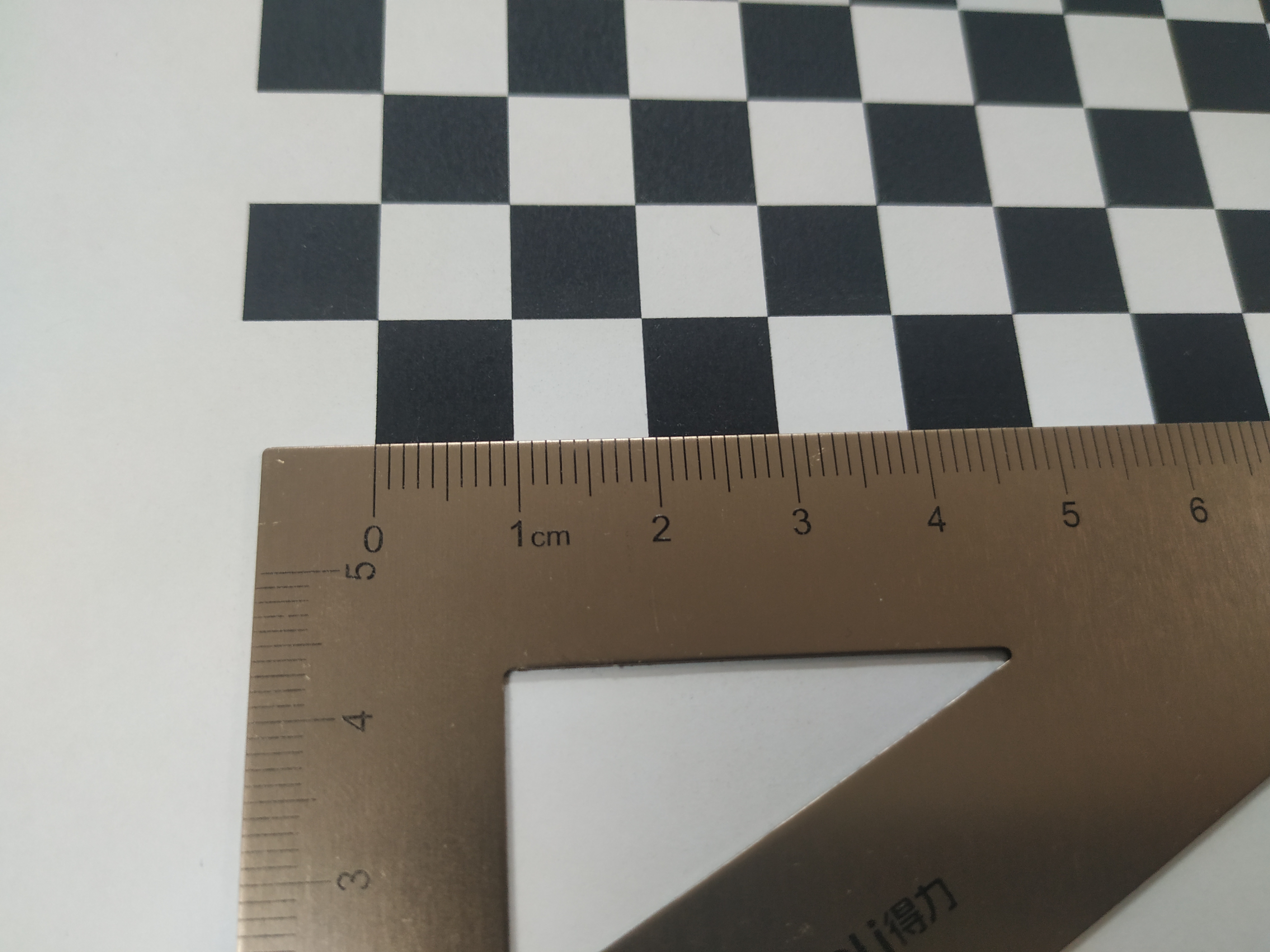

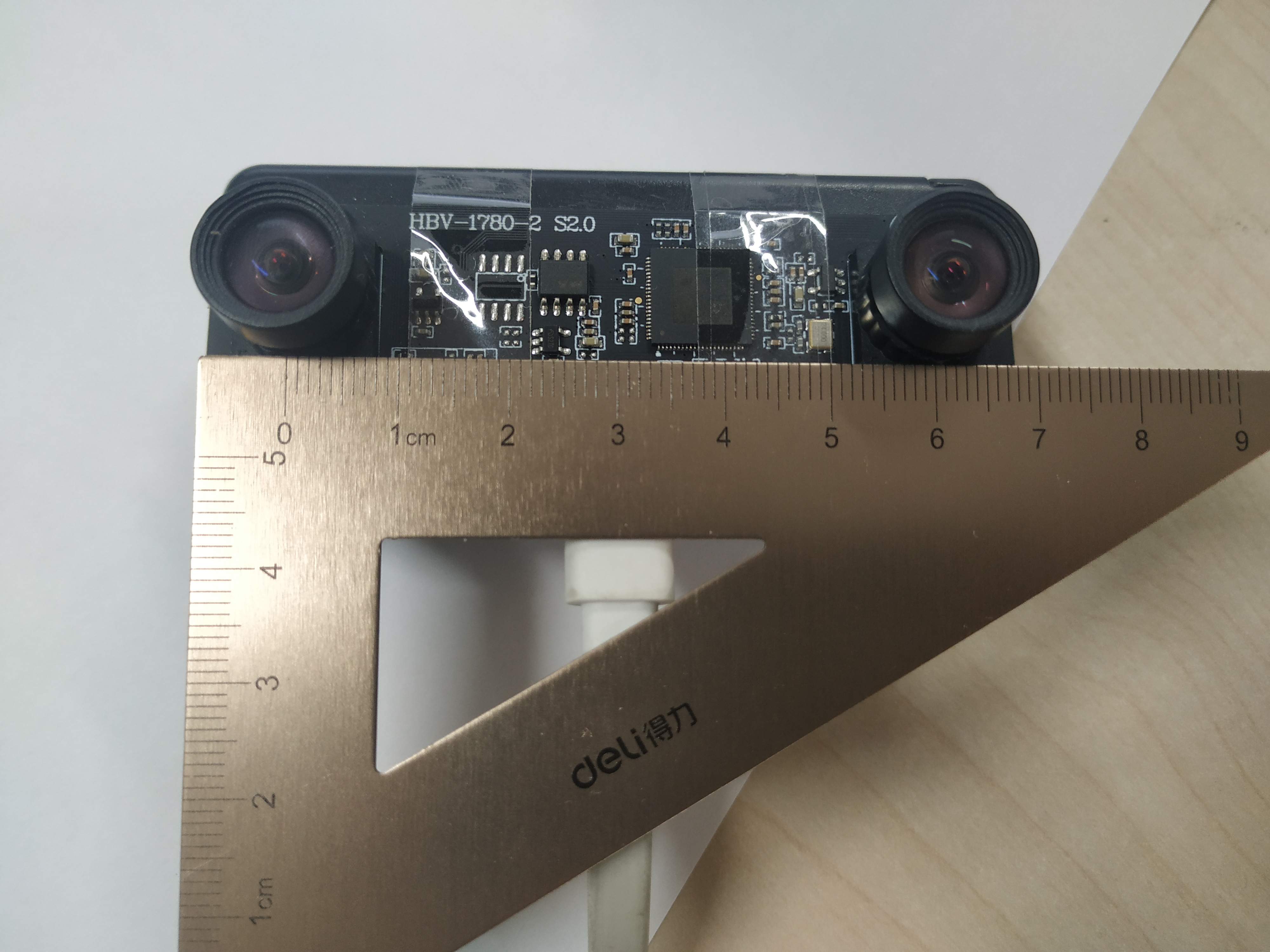

格子的边长最好精确一些,因为影响我们最后得到T矩阵的精度,我这边设置的是9.5mm;如图:

./calibrationCamera [leftImagePath] [rightImagePath] [width-1] [height-1] [squareSize]

Tips:

如果在运行的过程中发现图像输出remap严重变形,那么可能就是标定失败,这时候可以去掉注释:

//R = Rrw*Rlw.inv();

//T = tvecsRight[k] - R * tvecsLeft[k];

利用双目的数学原理对R和T进行赋值,前提是你要保证:

cout<<(uv/uv.at<double>(2,0)).t()<<endl; // [x y] = [x/w y/w]

cout<<xyp<<endl;

输出的差距非常小(说明重投影误差小),才能对双目标定的R和T进行覆盖,一般来说是没有问题的。

结果展示

标定失败的展示:

发现严重变形了,查看了不同的单目标定的外参,发现重投影的误差都非常大,这可能就是标定失败的原因;

发现严重变形了,查看了不同的单目标定的外参,发现重投影的误差都非常大,这可能就是标定失败的原因;

另外,其实在这些外参中也有重投影误差较小的,例如随机取的,重投影及输出为:

Test the outer parameters(请查看下面两个数据的差距,理论上是一样的)

[366.5508470998388, 93.46714778184665, 1]

[366.729, 93.3543]

说明该点对应的外参还是不错的,我们把注释去掉,利用这个比较好的点来计算R 和 T:

R = Rrw*Rlw.inv();

T = tvecsRight[k] - R * tvecsLeft[k];

运行输出:

输出的R 和 T:

R is :

[0.9999085307830105, -0.0002800623385213808, 0.01352226432398404;

0.0001808305448574385, 0.9999730523439697, 0.007339065757845414;

-0.013523955326575, -0.007335949220821691, 0.9998816362356868]

T is :

[-59.24373204951949;

-0.8797720998883989;

-1.360892781247458]

数据意思就是两个摄像头基本没有发生旋转(因为R趋近于单位矩阵),摄像头相距 59mm;

效果还是不错的。

一般来说,利用OpenCV的双目标定就已经足够了,另外棋盘图像的采集一定要在光源好的地方拍摄,保证棋盘纸平整,否则就会出现像我这样标定失败的案例。

参考文献

1.opencv-4.3.0/samples/cpp/stereo_calib.cpp

2.学习OpenCV3(中文版)—Adrian Kaehler, Gary Bradski

915

915

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?