获取源码

github中搜索detr,搜索到的第一个facebook的就是,目前有12k star,然后把项目git clone到本地

搭建项目虚拟环境

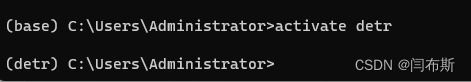

打开anaconda prompt输入以下代码创建好自己的虚拟环境

conda create -n detr python=3.8创建好之后激活虚拟环境

在detr环境下安装pytorch

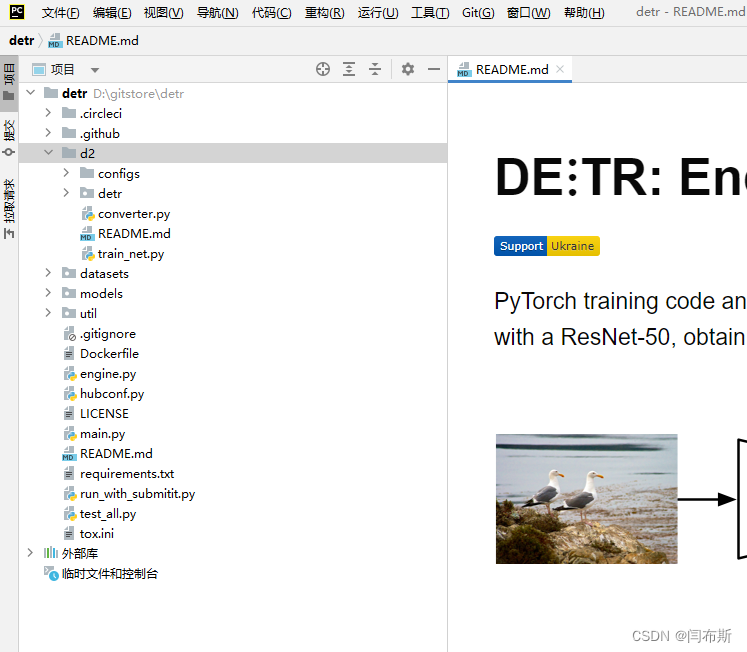

conda install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch -c nvidia之后找到刚刚从github中克隆的detr项目,右键pycharm打开项目

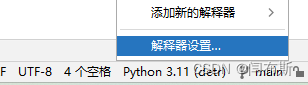

在pycharm右下角配置解释器为刚刚创建的detr虚拟环境,点击添加本地解释器

选择conda环境,按照如下进行选择

选择好刚刚配置的detr环境之后就点击requirements.txt文件安装需要的库

其中pycocotools和panopticapi可能会安装失败,这个时候需要自己去手动安装

准备数据训练模型

安装好所有要求的库之后现在就可以开始准备数据训练模型,训练使用的数据为coco格式,其中文件结构必须如下:

path/to/coco/

annotations/ # annotation json files

train2017/ # train images

val2017/ # val images以下代码可以把xml格式的标签转换成json标签:

# coding:utf-8

# pip install lxml

import os

import glob

import json

import shutil

import numpy as np

import xml.etree.ElementTree as ET

path2 = r"E:\dataset\voc2017"

START_BOUNDING_BOX_ID = 1

def get(root, name):

return root.findall(name)

def get_and_check(root, name, length):

vars = root.findall(name)

if len(vars) == 0:

raise NotImplementedError('Can not find %s in %s.' % (name, root.tag))

if length > 0 and len(vars) != length:

raise NotImplementedError('The size of %s is supposed to be %d, but is %d.' % (name, length, len(vars)))

if length == 1:

vars = vars[0]

return vars

def convert(xml_list, json_file):

json_dict = {"images": [], "type": "instances", "annotations": [], "categories": []}

categories = pre_define_categories.copy()

bnd_id = START_BOUNDING_BOX_ID

all_categories = {}

for index, line in enumerate(xml_list):

# print("Processing %s"%(line))

xml_f = line

tree = ET.parse(xml_f)

root = tree.getroot()

filename = os.path.basename(xml_f)[:-4] + ".jpg"

image_id = 20190000001 + index

size = get_and_check(root, 'size', 1)

width = int(get_and_check(size, 'width', 1).text)

height = int(get_and_check(size, 'height', 1).text)

image = {'file_name': filename, 'height': height, 'width': width, 'id': image_id}

json_dict['images'].append(image)

## Cruuently we do not support segmentation

# segmented = get_and_check(root, 'segmented', 1).text

# assert segmented == '0'

for obj in get(root, 'object'):

category = get_and_check(obj, 'name', 1).text

if category in all_categories:

all_categories[category] += 1

else:

all_categories[category] = 1

if category not in categories:

if only_care_pre_define_categories:

continue

new_id = len(categories) + 1

print(

"[warning] category '{}' not in 'pre_define_categories'({}), create new id: {} automatically".format(

category, pre_define_categories, new_id))

categories[category] = new_id

category_id = categories[category]

bndbox = get_and_check(obj, 'bndbox', 1)

xmin = int(float(get_and_check(bndbox, 'xmin', 1).text))

ymin = int(float(get_and_check(bndbox, 'ymin', 1).text))

xmax = int(float(get_and_check(bndbox, 'xmax', 1).text))

ymax = int(float(get_and_check(bndbox, 'ymax', 1).text))

#assert (xmax >= xmin), "xmax <= xmin, {}".format(line)

#assert (ymax >= ymin), "ymax <= ymin, {}".format(line)

o_width = abs(xmax - xmin)

o_height = abs(ymax - ymin)

ann = {'area': o_width * o_height, 'iscrowd': 0, 'image_id':

image_id, 'bbox': [xmin, ymin, o_width, o_height],

'category_id': category_id, 'id': bnd_id, 'ignore': 0,

'segmentation': []}

json_dict['annotations'].append(ann)

bnd_id = bnd_id + 1

for cate, cid in categories.items():

cat = {'supercategory': 'none', 'id': cid, 'name': cate}

json_dict['categories'].append(cat)

json_fp = open(json_file, 'w')

json_str = json.dumps(json_dict)

json_fp.write(json_str)

json_fp.close()

print("------------create {} done--------------".format(json_file))

print("find {} categories: {} -->>> your pre_define_categories {}: {}".format(len(all_categories),

all_categories.keys(),

len(pre_define_categories),

pre_define_categories.keys()))

print("category: id --> {}".format(categories))

print(categories.keys())

print(categories.values())

if __name__ == '__main__':

classes = ['person']

pre_define_categories = {}

for i, cls in enumerate(classes):

pre_define_categories[cls] = i + 1

# pre_define_categories = {'a1': 1, 'a3': 2, 'a6': 3, 'a9': 4, "a10": 5}

only_care_pre_define_categories = True

# only_care_pre_define_categories = False

train_ratio = 0.9

save_json_train = r'E:\dataset\instances_train2017.json'

save_json_val = r'E:\dataset\instances_val2017.json'

xml_dir = r"E:\dataset\labels"

xml_list = glob.glob(xml_dir + "/*.xml")

xml_list = np.sort(xml_list)

np.random.seed(100)

np.random.shuffle(xml_list)

train_num = int(len(xml_list) * train_ratio)

xml_list_train = xml_list[:train_num]

xml_list_val = xml_list[train_num:]

convert(xml_list_train, save_json_train)

convert(xml_list_val, save_json_val)

if os.path.exists(path2 + "/annotations"):

shutil.rmtree(path2 + "/annotations")

os.makedirs(path2 + "/annotations")

if os.path.exists(path2 + "/images/train2017"):

shutil.rmtree(path2 + "/images/train2017")

os.makedirs(path2 + "/images/train2017")

if os.path.exists(path2 + "/images/val2017"):

shutil.rmtree(path2 + "/images/val2017")

os.makedirs(path2 + "/images/val2017")

f1 = open("train.txt", "w")

for xml in xml_list_train:

img = xml[:-4] + ".jpg"

f1.write(os.path.basename(xml)[:-4] + "\n")

shutil.copyfile(img, path2 + "/images/train2017/" + os.path.basename(img))

f2 = open("test.txt", "w")

for xml in xml_list_val:

img = xml[:-4] + ".jpg"

f2.write(os.path.basename(xml)[:-4] + "\n")

shutil.copyfile(img, path2 + "/images/val2017/" + os.path.basename(img))

f1.close()

f2.close()

print("-------------------------------")

print("train number:", len(xml_list_train))

print("val number:", len(xml_list_val))

这段代码要求输入的labels文件中jpg图片和xml文件全部包含并且一一对应,然后将labels文件中的文件按照指定的比例划分为train和val文件夹并且将xml转换为json文件。

代码运行完成后生成如下文件夹:

训练数据准备完成。

下载预训练权重文件

之后需要在源码页面下载预训练权重文件

下载完backbone为R50的文件后,需要运行代码改变权重文件,把它里面的训练类别数量转变成自己需要的类别数量:

import torch

pretrained_weights = torch.load('detr-r50-e632da11.pth')

num_class = 2 # 这里是你的物体数+1,因为背景也算一个

pretrained_weights["model"]["class_embed.weight"].resize_(num_class + 1, 256)

pretrained_weights["model"]["class_embed.bias"].resize_(num_class + 1)

torch.save(pretrained_weights, "detr-r50_%d.pth" % num_class)例如你要训练的目标只有一个'person'则这里的num_class为2,运行之后生成detr-r50_2.pth文件,这就是之后训练的时候要用到的预训练权重文件。

接下来需要修改detr.py文件中的大概310行左右的代码内容,把其中的数字都改成num_class的值:

num_classes = 2 if args.dataset_file != 'coco' else 2

if args.dataset_file == "coco_panoptic":

# for panoptic, we just add a num_classes that is large enough to hold

# max_obj_id + 1, but the exact value doesn't really matter

num_classes = 2生成自己的模型

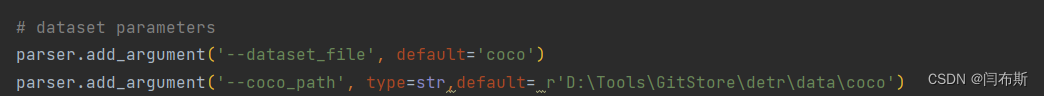

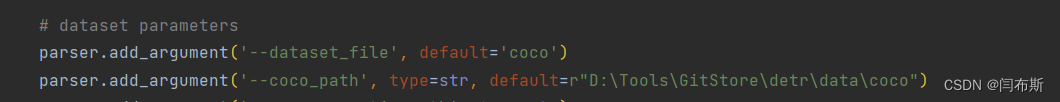

最后可以通过修改main.py中的路径或者直接在终端执行命令来训练生成自己的模型

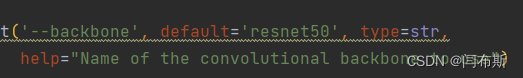

方法一:修改main.py文件

backbone修改为对应下载的(有50和101两种)

数据集修改为自己的路径:

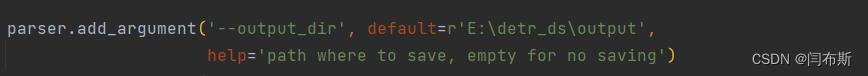

调整为自己的结果输出路径:

切换成自己刚刚运行生成的特定类别数的预训练权重文件:

![]()

如果报错内存不足之类的则减少batch_size大小(最小为1):

![]()

所有的参数都可以通过ctrl+f快捷查找,全部修改完毕之后就可以正常运行main.py函数

方法二:直接Terminal运行

前面在Terminal中激活了detr环境,所以也可以直接Terminal运行:

python main.py --dataset_file "coco" --coco_path D:\Tools\GitStore\detr\data\coco --epochs 20 --lr=1e-4 --batch_size=2 --num_workers=4 --output_dir="output" --resume="detr-r50_2.pth"

##coco_path为自己的coco路径

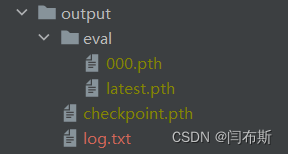

运行完成后结果保存在output文件夹中:

其中checkpoint.pth就是训练好的模型

训练好的模型test

接下来就可以用训练好的模型进行test。

predict.py :

import argparse

import random

import time

from pathlib import Path

import numpy as np

import torch

from models import build_model

from PIL import Image

import os

import torchvision

from torchvision.ops.boxes import batched_nms

import cv2

# -------------------------------------------------------------------------设置参数

def get_args_parser():

parser = argparse.ArgumentParser('Set transformer detector', add_help=False)

parser.add_argument('--lr', default=1e-4, type=float)

parser.add_argument('--lr_backbone', default=1e-5, type=float)

parser.add_argument('--batch_size', default=1, type=int)

parser.add_argument('--weight_decay', default=1e-4, type=float)

parser.add_argument('--epochs', default=300, type=int)

parser.add_argument('--lr_drop', default=200, type=int)

parser.add_argument('--clip_max_norm', default=0.1, type=float,

help='gradient clipping max norm')

# Model parameters

parser.add_argument('--frozen_weights', type=str, default=None,

help="Path to the pretrained model. If set, only the mask head will be trained")

# * Backbone

parser.add_argument('--backbone', default='resnet50', type=str,

help="Name of the convolutional backbone to use")

parser.add_argument('--dilation', action='store_true',

help="If true, we replace stride with dilation in the last convolutional block (DC5)")

parser.add_argument('--position_embedding', default='sine', type=str, choices=('sine', 'learned'),

help="Type of positional embedding to use on top of the image features")

# * Transformer

parser.add_argument('--enc_layers', default=6, type=int,

help="Number of encoding layers in the transformer")

parser.add_argument('--dec_layers', default=6, type=int,

help="Number of decoding layers in the transformer")

parser.add_argument('--dim_feedforward', default=2048, type=int,

help="Intermediate size of the feedforward layers in the transformer blocks")

parser.add_argument('--hidden_dim', default=256, type=int,

help="Size of the embeddings (dimension of the transformer)")

parser.add_argument('--dropout', default=0.1, type=float,

help="Dropout applied in the transformer")

parser.add_argument('--nheads', default=8, type=int,

help="Number of attention heads inside the transformer's attentions")

parser.add_argument('--num_queries', default=100, type=int,

help="Number of query slots")

parser.add_argument('--pre_norm', action='store_true')

# * Segmentation

parser.add_argument('--masks', action='store_true',

help="Train segmentation head if the flag is provided")

# Loss

parser.add_argument('--no_aux_loss', dest='aux_loss', default='False',

help="Disables auxiliary decoding losses (loss at each layer)")

# * Matcher

parser.add_argument('--set_cost_class', default=1, type=float,

help="Class coefficient in the matching cost")

parser.add_argument('--set_cost_bbox', default=5, type=float,

help="L1 box coefficient in the matching cost")

parser.add_argument('--set_cost_giou', default=2, type=float,

help="giou box coefficient in the matching cost")

# * Loss coefficients

parser.add_argument('--mask_loss_coef', default=1, type=float)

parser.add_argument('--dice_loss_coef', default=1, type=float)

parser.add_argument('--bbox_loss_coef', default=5, type=float)

parser.add_argument('--giou_loss_coef', default=2, type=float)

parser.add_argument('--eos_coef', default=0.1, type=float,

help="Relative classification weight of the no-object class")

# dataset parameters

parser.add_argument('--dataset_file', default='coco')

parser.add_argument('--coco_path', type=str, default=r"D:\Tools\GitStore\detr\data\coco")

parser.add_argument('--coco_panoptic_path', type=str)

parser.add_argument('--remove_difficult', action='store_true')

parser.add_argument('--output_dir', default=r'E:\detr_ds\output',

help='path where to save, empty for no saving')

parser.add_argument('--device', default='cuda',

help='device to use for training / testing')

parser.add_argument('--seed', default=42, type=int)

parser.add_argument('--resume', default=r'D:\Tools\GitStore\detr\output\checkpoint.pth',

help='resume from checkpoint')

parser.add_argument('--start_epoch', default=0, type=int, metavar='N',

help='start epoch')

parser.add_argument('--eval', default="True")

parser.add_argument('--num_workers', default=2, type=int)

# distributed training parameters

parser.add_argument('--world_size', default=1, type=int,

help='number of distributed processes')

parser.add_argument('--dist_url', default='env://', help='url used to set up distributed training')

return parser

def box_cxcywh_to_xyxy(x):

# 将DETR的检测框坐标(x_center,y_cengter,w,h)转化成coco数据集的检测框坐标(x0,y0,x1,y1)

x_c, y_c, w, h = x.unbind(1)

b = [(x_c - 0.5 * w), (y_c - 0.5 * h),

(x_c + 0.5 * w), (y_c + 0.5 * h)]

return torch.stack(b, dim=1)

def rescale_bboxes(out_bbox, size):

# 把比例坐标乘以图像的宽和高,变成真实坐标

img_w, img_h = size

b = box_cxcywh_to_xyxy(out_bbox)

b = b * torch.tensor([img_w, img_h, img_w, img_h], dtype=torch.float32)

return b

def filter_boxes(scores, boxes, confidence=0.7, apply_nms=True, iou=0.5):

# 筛选出真正的置信度高的框

keep = scores.max(-1).values > confidence

scores, boxes = scores[keep], boxes[keep]

if apply_nms:

top_scores, labels = scores.max(-1)

keep = batched_nms(boxes, top_scores, labels, iou)

scores, boxes = scores[keep], boxes[keep]

return scores, boxes

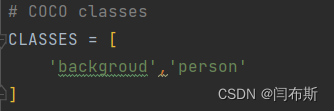

# COCO classes

CLASSES = [

'backgroud','person'

]

def plot_one_box(x, img, color=None, label=None, line_thickness=1):

# 把检测框画到图片上

tl = line_thickness or round(0.002 * (img.shape[0] + img.shape[1]) / 2) + 1 # line/font thickness

color = color or [random.randint(0, 255) for _ in range(3)]

c1, c2 = (int(x[0]), int(x[1])), (int(x[2]), int(x[3]))

cv2.rectangle(img, c1, c2, color, thickness=tl, lineType=cv2.LINE_AA)

if label:

tf = max(tl - 1, 1) # font thickness

t_size = cv2.getTextSize(label, 0, fontScale=tl / 3, thickness=tf)[0]

c2 = c1[0] + t_size[0], c1[1] - t_size[1] - 3

cv2.rectangle(img, c1, c2, color, -1, cv2.LINE_AA) # filled

cv2.putText(img, label, (c1[0], c1[1] - 2), 0, tl / 3, [225, 255, 255], thickness=tf, lineType=cv2.LINE_AA)

def main(args):

print(args)

device = torch.device(args.device)

# ------------------------------------导入网络

# 下面的criterion是算损失函数要用的,推理用不到,postprocessors是解码用的,这里也没有用,用的是自己的。

model, criterion, postprocessors = build_model(args)

# ------------------------------------加载权重

checkpoint = torch.load(args.resume, map_location='cuda')

model.load_state_dict(checkpoint['model'])

# ------------------------------------把权重加载到gpu或cpu上

model.to(device)

# ------------------------------------打印出网络的参数大小

n_parameters = sum(p.numel() for p in model.parameters() if p.requires_grad)

print("parameters:", n_parameters)

# ------------------------------------设置好存储输出结果的文件夹

output_dir = Path(args.output_dir)

# -----------------------------------读取数据集,进行推理

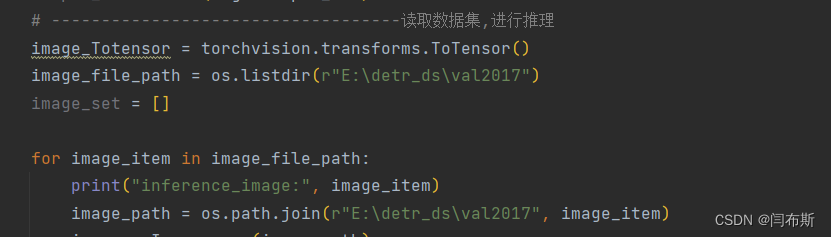

image_Totensor = torchvision.transforms.ToTensor()

image_file_path = os.listdir(r"E:\detr_ds\val2017")

image_set = []

for image_item in image_file_path:

print("inference_image:", image_item)

image_path = os.path.join(r"E:\detr_ds\val2017", image_item)

image = Image.open(image_path)

image_tensor = image_Totensor(image)

image_tensor = torch.reshape(image_tensor,

[-1, image_tensor.shape[0], image_tensor.shape[1], image_tensor.shape[2]])

image_tensor = image_tensor.to(device)

time1 = time.time()

inference_result = model(image_tensor)

time2 = time.time()

print("inference_time:", time2 - time1)

probas = inference_result['pred_logits'].softmax(-1)[0, :, :-1].cpu()

bboxes_scaled = rescale_bboxes(inference_result['pred_boxes'][0,].cpu(),

(image_tensor.shape[3], image_tensor.shape[2]))

scores, boxes = filter_boxes(probas, bboxes_scaled)

scores = scores.data.numpy()

boxes = boxes.data.numpy()

for i in range(boxes.shape[0]):

class_id = scores[i].argmax()

label = CLASSES[class_id]

confidence = scores[i].max()

text = f"{label} {confidence:.3f}"

image = np.array(image)

plot_one_box(boxes[i], image, label=text)

#cv2.imshow("images", image)

#cv2.waitKey(1)

image = Image.fromarray(np.uint8(image))

image.save(os.path.join(args.output_dir, image_item))

if __name__ == '__main__':

parser = argparse.ArgumentParser('DETR training and evaluation script', parents=[get_args_parser()])

args = parser.parse_args()

if args.output_dir:

Path(args.output_dir).mkdir(parents=True, exist_ok=True)

main(args)

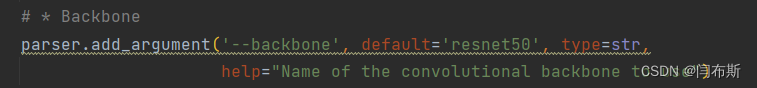

文件中的backbone和main函数中的统一:

修改为自己的coco数据集路径:

指定测试结果输出的路径:

指定刚刚生成的训练模型的地址:

![]()

classes改为自己的

下图的两个路径都改为自己的test图片文件夹路径

之后就可以正常测试生成结果效果如图:

上文中所有的python代码都需要自己创建py文件放入detr项目内,detr源码本身不包含。

本文参考:Windows配置DETR算法模型,实现目标检测-CSDN博客,【DETR】训练自己的数据集-实践笔记_detr训练量_暮已深的博客-CSDN博客

至此结束。

1803

1803

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?