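Analyze the MNIST dataset: build a fully connected neural network (`nn.Linear` layers) with 3 hidden layers in PyTorch, and compare different regularization methods on the data.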

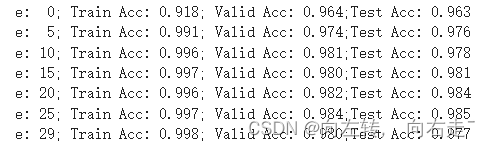

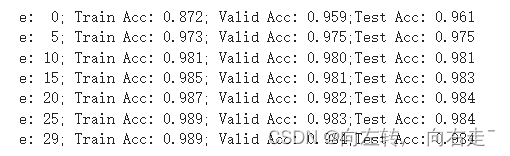

1. Without regularization.

"""

mnist数据

训练具有3个隐藏层的神经网络

隐藏层使用Relu函数作为激活函数

批量随机梯度下降法

使用pytorch

"""

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

import torch

import torch.nn as nn

import torch.optim as optim

from torch.utils.data import TensorDataset, DataLoader

#导入MNIST数据集

def load_mnist_images(filename):

with open(filename, 'rb') as f:

data = np.frombuffer(f.read(), np.uint8, offset=16)

data = data.reshape(-1, 28, 28) # 图像大小为28x28

return data

def load_mnist_labels(filename):

with open(filename, 'rb') as f:

labels = np.frombuffer(f.read(), np.uint8, offset=8)

return labels

# 加载训练和测试图像

train_images = load_mnist_images('train-images.idx3-ubyte')

train_labels = load_mnist_labels('train-labels.idx1-ubyte')

# 加载训练和测试标签

test_images = load_mnist_images('t10k-images.idx3-ubyte')

test_labels = load_mnist_labels('t10k-labels.idx1-ubyte')

#输入数据除以255

np.random.seed(1)

train_images, test_images = train_images/255, test_images/255

#把训练数据(60000*28*28)分成训练数据(50000*28*28)和验证数据(10000*28*28)

index = np.arange(len(train_images))

np.random.shuffle(index)

valid_images, valid_labels = train_images[index[-10000:]], train_labels[index[-10000:]] # 验证

train_images, train_labels = train_images[index[:50000]], train_labels[index[:50000]] # 训练

train_data = TensorDataset(torch.from_numpy(train_images).float(), torch.from_numpy(train_labels))

train_loader = DataLoader(train_data, batch_size = 128, shuffle=True)
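The loaders above skip the IDX header with hard-coded offsets (16 bytes for images, 8 for labels). A minimal stdlib sketch of where those numbers come from, assuming the IDX layout described on the MNIST database page (a 4-byte magic number whose last byte is the number of dimensions, followed by one big-endian 32-bit size per dimension). `parse_idx_header` is a hypothetical helper, not part of the original code:

```python
import struct

def parse_idx_header(buf: bytes):
    """Parse an IDX header; return (dtype_code, dims, header_size).

    header_size is the byte offset at which the raw data begins.
    """
    # Magic number: two zero bytes, a data-type code (0x08 = unsigned byte),
    # and the number of dimensions.
    zero1, zero2, dtype_code, ndim = struct.unpack(">BBBB", buf[:4])
    if zero1 != 0 or zero2 != 0:
        raise ValueError("not an IDX file")
    # One big-endian unsigned 32-bit size per dimension.
    dims = struct.unpack(">" + "I" * ndim, buf[4:4 + 4 * ndim])
    return dtype_code, dims, 4 + 4 * ndim

# Synthetic header for 60000 images of 28x28 unsigned bytes (3 dimensions).
header = struct.pack(">BBBBIII", 0, 0, 0x08, 3, 60000, 28, 28)
print(parse_idx_header(header))  # (8, (60000, 28, 28), 16)
```

A label file has a single dimension, so its header is 4 + 4 = 8 bytes, matching `offset=8` in `load_mnist_labels`.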

```python
# Define the neural network model with PyTorch
class NeuralNetwork(nn.Module):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.layer1 = nn.Linear(784, 1024)
        self.layer2 = nn.Linear(1024, 512)
        self.layer3 = nn.Linear(512, 256)
        self.layer4 = nn.Linear(256, 10)
        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.flatten(x)            # flatten each 28x28 image into a 784-vector
        x = self.relu(self.layer1(x))  # hidden layer 1
        x = self.relu(self.layer2(x))  # hidden layer 2
        x = self.relu(self.layer3(x))  # hidden layer 3
        x = self.layer4(x)             # output layer: raw scores (logits) for the 10 classes
        return x

# Create an instance of NeuralNetwork
net = NeuralNetwork()
```
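As a rough size check, each `nn.Linear(a, b)` holds an a x b weight matrix plus b biases, while `nn.Flatten` and `nn.ReLU` hold no parameters. A small pure-Python sketch (the helper `count_linear_params` is hypothetical) works out the count for the 784-1024-512-256-10 network; in PyTorch the same number should come from `sum(p.numel() for p in net.parameters())`.

```python
def count_linear_params(sizes):
    """Count weights + biases of a stack of fully connected layers.

    sizes lists the layer widths, e.g. [784, 1024, 512, 256, 10];
    a layer from width a to width b has a * b weights and b biases.
    """
    return sum(a * b + b for a, b in zip(sizes, sizes[1:]))

print(count_linear_params([784, 1024, 512, 256, 10]))  # 1462538
```

The first hidden layer alone contributes 803,840 of these roughly 1.46 million parameters.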

```python
# Define the loss function and the optimizer
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=1e-3)
epochs = 30
train_acc_record = []
valid_acc_record = []
test_acc_record = []

for e in range(epochs):
    total_correct = 0  # number of correctly classified training samples in this epoch
    for x, y in train_loader:    # iterate over the mini-batches
        pred = net(x)            # forward pass: compute predictions
        loss = loss_fn(pred, y)  # compute the loss
        total_correct += (pred.argmax(dim=1) == y).sum().item()
        optimizer.zero_grad()    # reset the parameter gradients to zero
        loss.backward()          # backpropagation: compute the parameter gradients
        optimizer.step()         # update the parameters

    # Training accuracy is accumulated while the weights are still changing within the epoch
    train_acc = total_correct / len(train_data)
    train_acc_record.append(train_acc)

    with torch.no_grad():  # no gradients are needed for evaluation
        valid_pred = net(torch.from_numpy(valid_images).float()).numpy()  # validation predictions
        valid_acc = np.mean(np.argmax(valid_pred, axis=1) == valid_labels)
        valid_acc_record.append(valid_acc)

        test_pred = net(torch.from_numpy(test_images).float()).numpy()  # test predictions
        test_acc = np.mean(np.argmax(test_pred, axis=1) == test_labels)
        test_acc_record.append(test_acc)

    if e % 5 == 0 or e == epochs - 1:
        print("e: %2d; Train Acc: %0.3f; Valid Acc: %0.3f; Test Acc: %0.3f"
              % (e, train_acc, valid_acc, test_acc))
```

```python
plt.figure(figsize=(12, 8), dpi=120)
# Plot the accuracy curves with markers
plt.plot(train_acc_record, label='Train Accuracy', marker="o")
plt.plot(test_acc_record, label='Test Accuracy', marker="o")
plt.plot(valid_acc_record, label='Valid Accuracy', marker="o")
# Axis labels and title
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.title('Training, test and validation accuracy')
# Legend with a larger font
plt.legend(fontsize='large')
plt.show()
```

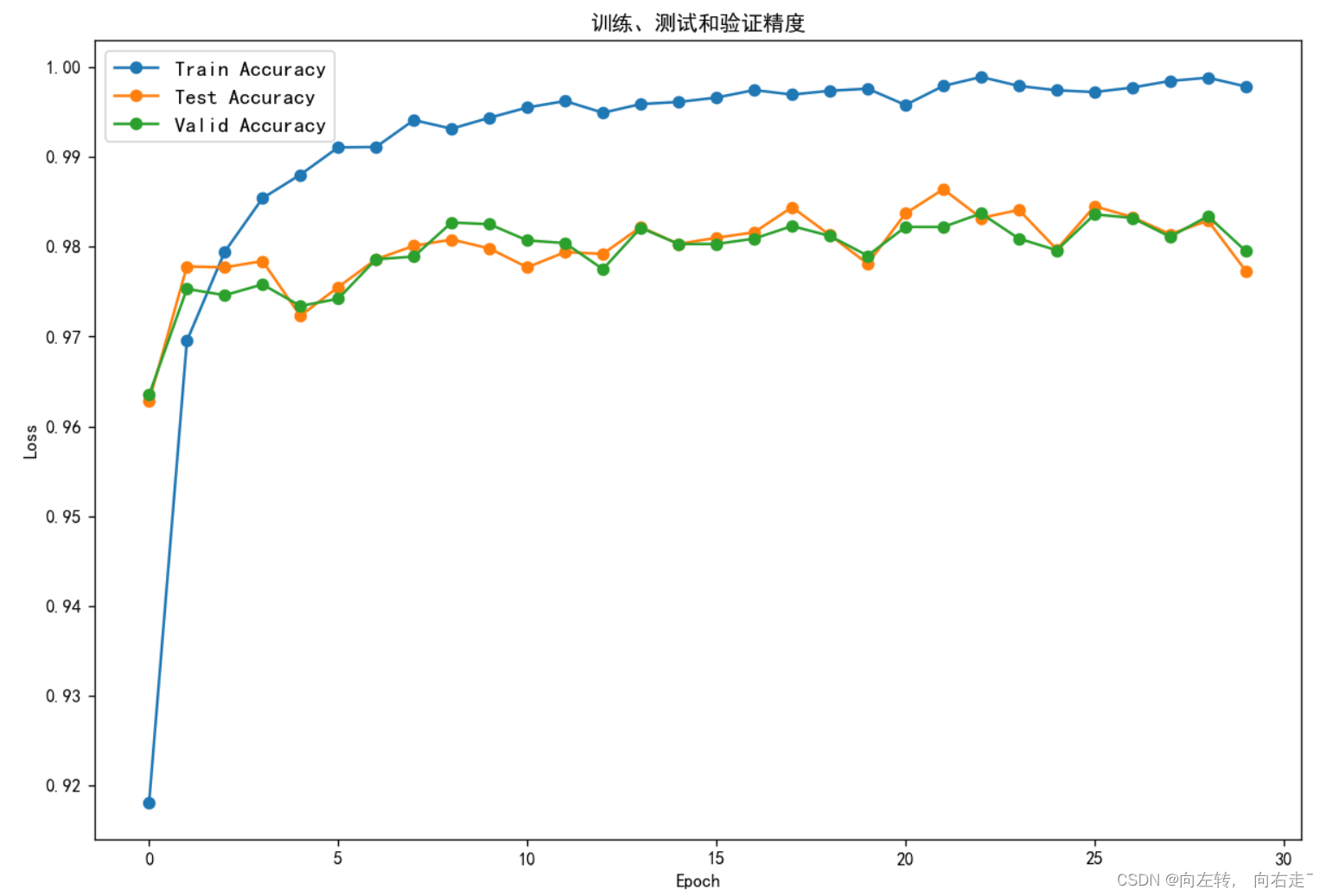

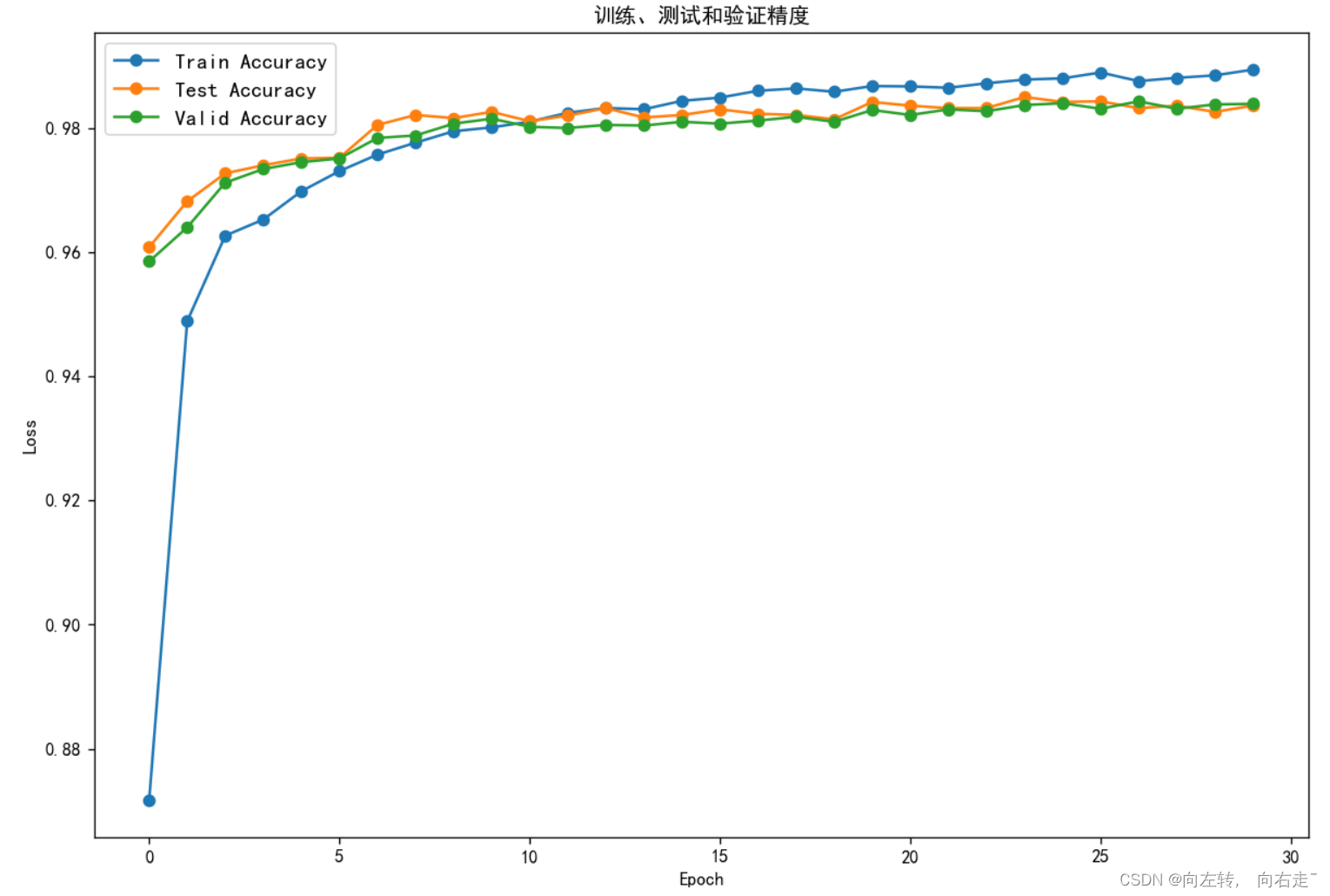

2. With an L2 penalty.

To add an L2 penalty, set the optimizer's `weight_decay` parameter to 0.0001 (`1e-4`). The code and results are shown below.
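For reference: per the `torch.optim.Adam` documentation, a nonzero `weight_decay` λ is added to each parameter's gradient as λθ before the Adam update. That term is exactly the gradient of an L2 penalty added to the loss, so with λ = 10⁻⁴ training effectively minimizes the objective below (θ stands for every parameter passed to the optimizer, which here includes the biases as well as the weights):

$$
\tilde{L}(\theta) = L(\theta) + \frac{\lambda}{2}\lVert \theta \rVert_2^2,
\qquad
\nabla_\theta \tilde{L}(\theta) = \nabla_\theta L(\theta) + \lambda\,\theta,
\qquad
\lambda = 10^{-4}
$$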

"""

增加L2惩罚

mnist数据

训练具有3个隐藏层的神经网络

隐藏层使用Relu函数作为激活函数

批量随机梯度下降法

使用pytorch

"""

net = NeuralNetwork()

loss_fn = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(net.parameters(), lr=1e-3, weight_decay=1e-4) # weight_decay设置

epochs = 30

train_acc_l2_record = []

valid_acc_l2_record = []

test_acc_l2_record = []

for e in range(epochs):

total_correct = 0

for batch, (x, y) in enumerate(train_loader):

pred = net(x)

loss = loss_fn(pred, y)

total_correct += torch.sum(torch.argmax(pred, axis=1) == y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

train_acc = total_correct.numpy()/len(train_data)

train_acc_l2_record.append(train_acc)

valid_pred = net(torch.from_numpy(valid_images).float()).detach().numpy()

valid_acc = np.mean(np.argmax(valid_pred, axis=1) == valid_labels)

valid_acc_l2_record.append(valid_acc)

test_pred = net(torch.from_numpy(test_images).float()).detach().numpy() # 计算测试数据预

test_acc = np.mean(np.argmax(test_pred, axis=1) == test_labels)

test_acc_l2_record.append(test_acc)

if (e % 5 ==0 or e == epochs - 1):

print("e: %2d; Train Acc: %0.3f; Valid Acc: %0.3f;Test Acc: %0.3f" % (e, train_acc, valid_acc,test_acc) )

```python
plt.figure(figsize=(12, 8), dpi=120)
# Plot the accuracy curves with markers
plt.plot(np.arange(epochs), train_acc_l2_record, label='Train Accuracy', marker="o")
plt.plot(np.arange(epochs), test_acc_l2_record, label='Test Accuracy', marker="o")
plt.plot(np.arange(epochs), valid_acc_l2_record, label='Valid Accuracy', marker="o")
# Axis labels and title
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.title('Training, test and validation accuracy (L2 penalty)')
# Legend with a larger font
plt.legend(fontsize='large')
plt.show()
```

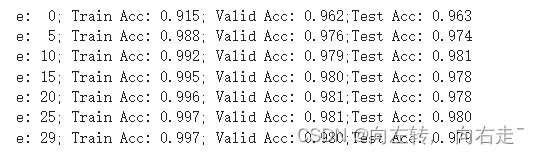

3. With Dropout.

Use Dropout with `drop_rate=0.5`. The code and results are shown below.

"""

使用Dropout,参数drop_rate=0.5

mnist数据

训练具有3个隐藏层的神经网络

隐藏层使用Relu函数作为激活函数

批量随机梯度下降法

使用pytorch

"""

class NeuralNetwork2(nn.Module):

def __init__(self, drop_rate=0.5):

super(NeuralNetwork2, self).__init__()

self.drop_rate = drop_rate

self.flatten = nn.Flatten()

layer1 = []

layer1.append(nn.Linear(784, 1024))

layer1.append(nn.Dropout(self.drop_rate))

layer1.append(nn.ReLU(inplace=True))

layer2 = []

layer2.append(nn.Linear(1024, 512))

layer2.append(nn.Dropout(self.drop_rate))

layer2.append(nn.ReLU(inplace=True))

layer3 = []

layer3.append(nn.Linear(512, 256))

layer3.append(nn.Dropout(self.drop_rate))

layer3.append(nn.ReLU(inplace=True))

self.l1 = nn.Sequential(*layer1)

self.l2 = nn.Sequential(*layer2)

self.l3 = nn.Sequential(*layer3)

self.l4 = nn.Linear(256, 10)

def forward(self, x):

x = self.flatten(x) # 维度从28*28变为784

x = self.l1(x) # 计算隐藏层1

x = self.l2(x) # 计算隐藏层2

x = self.l3(x) # 计算输出层

x = self.l4(x)

return x
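`nn.Dropout` implements inverted dropout, and the random masking only happens in training mode, which is why the training loop switches between `net.train()` and `net.eval()`. A pure-Python sketch of the rule (`dropout` is a hypothetical helper, not PyTorch's implementation):

```python
import random

def dropout(xs, p=0.5, training=True, rng=None):
    """Inverted dropout on a list of activations.

    Training mode: each element is zeroed with probability p and the
    survivors are scaled by 1 / (1 - p), so each output's expected value
    equals its input. Evaluation mode: the input is returned unchanged.
    """
    if not training or p == 0.0:
        return list(xs)
    if p >= 1.0:
        return [0.0 for _ in xs]
    rng = rng if rng is not None else random.Random()
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else x * scale for x in xs]

h = [1.0, 2.0, 3.0, 4.0]
print(dropout(h, p=0.5, rng=random.Random(0)))  # each entry is either 0.0 or doubled
print(dropout(h, p=0.5, training=False))        # [1.0, 2.0, 3.0, 4.0]
```

In `NeuralNetwork2` each `nn.Dropout` sits between `nn.Linear` and `nn.ReLU`. Because the mask and the 1/(1-p) scale are non-negative, applying dropout before or after ReLU gives the same output for a given mask.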

```python
# Train the model
net = NeuralNetwork2()
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=1e-3)
epochs = 30
train_acc_drop_record = []
valid_acc_drop_record = []
test_acc_drop_record = []

for e in range(epochs):
    total_correct = 0
    net.train()  # training mode: dropout is active
    for x, y in train_loader:
        pred = net(x)            # forward pass: compute predictions
        loss = loss_fn(pred, y)  # compute the loss
        total_correct += (pred.argmax(dim=1) == y).sum().item()
        optimizer.zero_grad()    # reset the parameter gradients to zero
        loss.backward()          # backpropagation: compute the parameter gradients
        optimizer.step()         # update the parameters

    # Note: this training accuracy is measured with dropout active
    train_acc = total_correct / len(train_data)
    train_acc_drop_record.append(train_acc)

    net.eval()  # evaluation mode: dropout is disabled
    with torch.no_grad():
        valid_pred = net(torch.from_numpy(valid_images).float()).numpy()  # validation predictions
        valid_acc = np.mean(np.argmax(valid_pred, axis=1) == valid_labels)
        valid_acc_drop_record.append(valid_acc)

        test_pred = net(torch.from_numpy(test_images).float()).numpy()  # test predictions
        test_acc = np.mean(np.argmax(test_pred, axis=1) == test_labels)
        test_acc_drop_record.append(test_acc)

    if e % 5 == 0 or e == epochs - 1:
        print("e: %2d; Train Acc: %0.3f; Valid Acc: %0.3f; Test Acc: %0.3f"
              % (e, train_acc, valid_acc, test_acc))
```

```python
plt.figure(figsize=(12, 8), dpi=120)
# Plot the accuracy curves with markers
plt.plot(np.arange(epochs), train_acc_drop_record, label='Train Accuracy', marker="o")
plt.plot(np.arange(epochs), test_acc_drop_record, label='Test Accuracy', marker="o")
plt.plot(np.arange(epochs), valid_acc_drop_record, label='Valid Accuracy', marker="o")
# Axis labels and title
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.title('Training, test and validation accuracy (Dropout)')
# Legend with a larger font
plt.legend(fontsize='large')
plt.show()
```

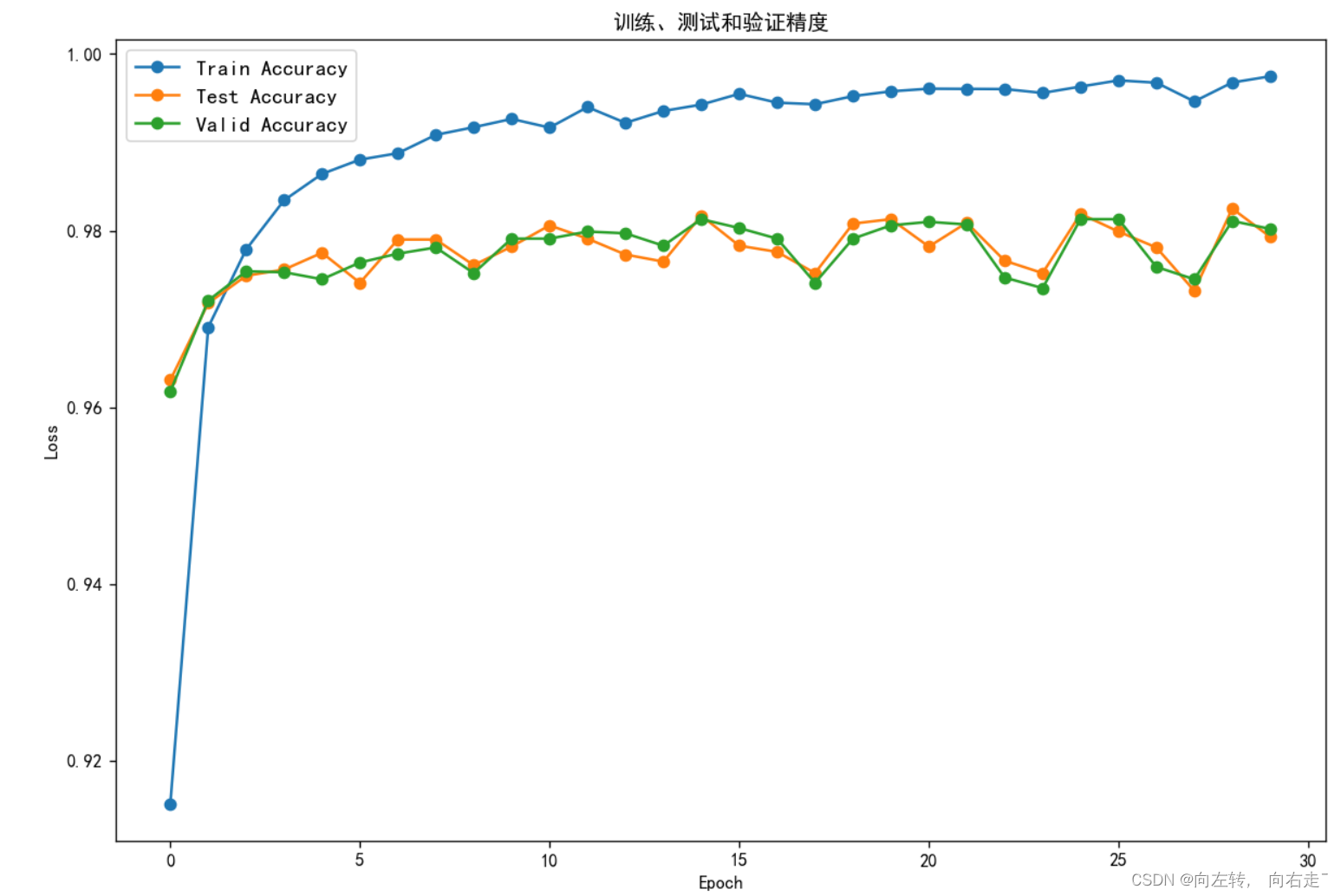

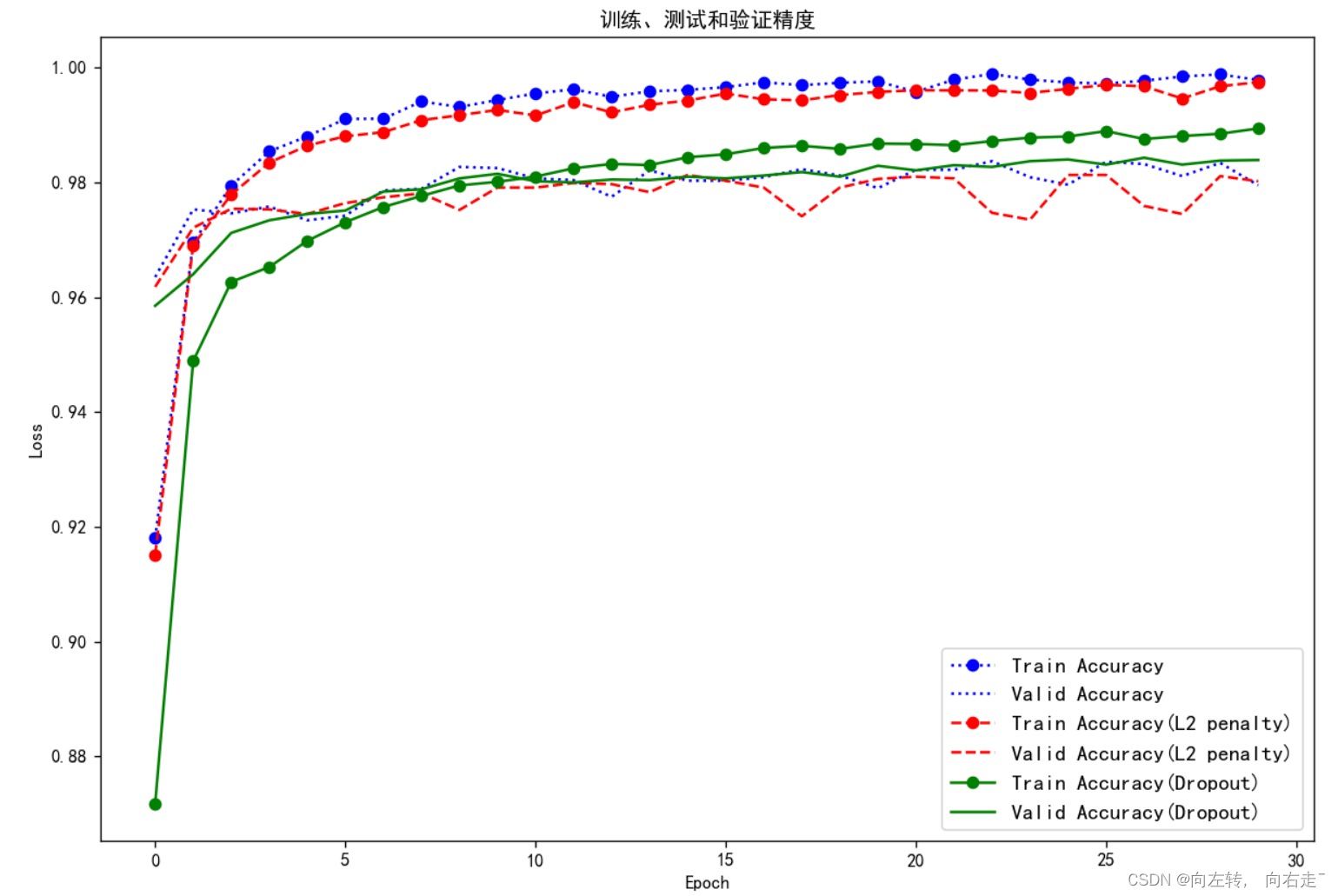

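Finally, the training and validation accuracies from all three settings (no regularization, L2 penalty, and Dropout) are plotted together with the following code.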

```python
plt.figure(figsize=(12, 8), dpi=120)
# Dotted blue: no regularization; dashed red: L2 penalty; solid green: Dropout.
# Training curves have markers, validation curves do not.
plt.plot(np.arange(epochs), train_acc_record, label='Train Accuracy', marker="o", linestyle=":", color="blue")
plt.plot(np.arange(epochs), valid_acc_record, label='Valid Accuracy', linestyle=":", color="blue")
plt.plot(np.arange(epochs), train_acc_l2_record, label='Train Accuracy (L2 penalty)', marker="o", linestyle="--", color="red")
plt.plot(np.arange(epochs), valid_acc_l2_record, label='Valid Accuracy (L2 penalty)', linestyle="--", color="red")
plt.plot(np.arange(epochs), train_acc_drop_record, label='Train Accuracy (Dropout)', marker="o", linestyle="-", color="green")
plt.plot(np.arange(epochs), valid_acc_drop_record, label='Valid Accuracy (Dropout)', linestyle="-", color="green")
# Axis labels and title
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.title('Training and validation accuracy')
# Legend with a larger font
plt.legend(fontsize='large')
plt.show()
```
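The validation split exists so that settings can be compared without touching the test set. One hedged way to turn the curves into numbers is to take, for each method, the epoch with the best validation accuracy and read the test accuracy only at that epoch. `select_by_validation` is a hypothetical helper, and the numbers in the example are made up, not results from this post:

```python
def select_by_validation(valid_acc, test_acc):
    """Return (epoch, validation accuracy, test accuracy) at the epoch with
    the highest validation accuracy; ties go to the earliest epoch.
    The test list is only read at the chosen epoch."""
    best = max(range(len(valid_acc)), key=lambda e: valid_acc[e])
    return best, valid_acc[best], test_acc[best]

# Made-up accuracies for illustration only.
print(select_by_validation([0.90, 0.95, 0.94], [0.89, 0.94, 0.95]))  # (1, 0.95, 0.94)
```

With the lists recorded above, this would be called as, for example, `select_by_validation(valid_acc_drop_record, test_acc_drop_record)`.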
